Running head: Assumptions in Multiple Regression

Importance of Assumptions in Multiple Regression
Shawna Sjoquist
EDPS 607
University of Calgary

Introduction

The use of assumptions in multiple regression allows researchers to analyze data sets and draw reliable conclusions from the analysis. Assumptions can substantially influence the results that are reported and, as such, the conclusions that are drawn from a multiple regression analysis: the validity of the assumptions directly determines the validity of the conclusions drawn from the data. A review of the literature indicates that the number of assumptions considered varies and is partly a matter of statistical opinion (Berry, 1993). While some statisticians describe a particular condition as a requirement of multiple regression analysis, others may report that the same condition is an assumption of the analysis (Berry, 1993). For example, in order to draw conclusions from a multiple regression data set, the researcher may make assumptions regarding linearity, independence, homoscedasticity and normality. In the discussion that follows, the reader will gain a statistical awareness of what is meant by the terms multiple regression and assumptions, be presented with four assumptions germane to multiple regression, and become familiar with methods of checking whether the identified assumptions are satisfied.

Multiple Regression

In order to adequately discuss the importance of assumptions in multiple regression and the manner in which a researcher would test them, several definitions need to be established. First, the researcher requires a clear understanding of the definition of multiple regression. Multiple regression describes the process of predicting a dependent variable from a set of predictor variables (Stevens, 2009). In other words, the researcher is attempting to predict the value of a dependent variable from a set of predictors that may each, in some way, influence it. For example, a researcher may be interested in predicting college SAT scores by considering standardized math test scores, standardized English test scores, general attitude toward education and overall study habits. This example highlights an important feature of multiple regression: it allows for a more realistic analysis that reflects the natural human condition (Multiple Regression, 2006). More specifically, multiple regression recognizes that the dependent variable is rarely influenced by a single isolated factor. In the previous example, college SAT scores are likely the result of several contributing factors, such as general attitude toward education, overall study habits and standardized test scores, rather than of any one factor alone. In mathematical terms, multiple regression attempts to describe the relationship between a Y variable and several predictor X variables (Stevens, 2009). Essentially, we are attempting to use the independent variables we identified, the Xs, to predict what the dependent variable, Y, will be (Multiple Regression, 2006). In order to analyze this relationship, several important assumptions are made.

Assumptions

Second, the researcher requires a clear understanding of the definition of statistical assumptions. As we have discussed, in multiple regression we use the independent X variables to predict the dependent Y variable and use these variables to calculate a regression equation (Multiple Regression, 2006). In order to perform the regression calculation, several conditions are assumed to hold. These conditions constitute the multiple regression assumptions.
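The regression equation mentioned above has the general form Y = b0 + b1X1 + b2X2 + ... + bkXk + e. As a minimal sketch (not the procedure used in this paper), the Python code below estimates the b coefficients by ordinary least squares with NumPy. The four predictors and the "true" coefficients are simulated, hypothetical stand-ins for the SAT example, not real data.

```python
import numpy as np

def fit_ols(X, y):
    """Least-squares estimates of b0, b1, ..., bk in y = b0 + b1*x1 + ... + bk*xk + e."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float).reshape(len(y), -1)   # one column per predictor
    design = np.column_stack([np.ones(len(y)), X])        # leading column of 1s for the intercept b0
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coefs

# Hypothetical simulated data: the columns stand in for math score, English score,
# attitude toward education and study habits (all standardized).
rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 4))
true_b = np.array([500.0, 40.0, 35.0, 10.0, 15.0])       # invented intercept and slopes
y = true_b[0] + X @ true_b[1:] + rng.normal(scale=20.0, size=n)

b = fit_ols(X, y)
print(np.round(b, 1))   # the estimates should land close to true_b
```

Each estimated slope is the predicted change in Y for a one-unit change in that X while the other predictors are held constant.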
Technically speaking, multiple regression assumptions are the assumptions made about how the values of the dependent Y variable are produced from the values of the independent X variables (Aiken & West, 1991). When the researcher is able to verify that the assumptions have been satisfied, they can analyze the data and produce estimates that are reliable, or unbiased, and efficient (Aiken & West, 1991). Unbiased estimates are, on average, equal to the true values rather than being systematically higher or lower than them. Efficient estimates are those with a standard error that is as small as possible: the researcher wants the estimates to be as close to the true value as possible (Osborne & Waters, 2002), so that each estimate falls within a narrow window of values around the true value. Although we want to ensure that the results we obtain are unbiased and efficient, it must also be acknowledged that regression analysis is relatively robust (Osborne & Waters, 2002). This means that the analysis can produce results that are reasonably unbiased and efficient even if one or more of the assumptions are mildly violated (Berry, 1993). However, regression analysis is not robust enough to protect against large violations of one or more of the assumptions (Osborne & Waters, 2002), and a large violation is likely to produce faulty results (Berry, 1993). In other words, when the assumptions have not been met, the researcher cannot fully trust the results of the regression analysis (Osborne & Waters, 2002). Moreover, those results may be susceptible to Type I error, Type II error, and/or over- or underestimation of significance (Osborne & Waters, 2002). Overall, "knowledge and understanding of the situations when violations of assumptions lead to serious biases, and when they are of little consequence, are essential to meaningful data analysis" (Pedhazur, 1997, p. 33).
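Unbiasedness and standard error can be illustrated with a small simulation, sketched below under assumed conditions: a single predictor, normally distributed errors and an invented true slope of 2.0. When these conditions hold, the average of the slope estimates across many samples should sit close to the true slope, and their spread, which is what the standard error measures, should shrink as the sample grows. The simulation shows spread only; it does not by itself prove that least squares is the most efficient estimator.

```python
import numpy as np

def simulate_slopes(true_slope=2.0, n=50, reps=2000, noise_sd=1.0, seed=1):
    """Re-estimate the slope of y = 1 + true_slope*x + e in many simulated samples."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)                        # predictor values held fixed across samples
    design = np.column_stack([np.ones(n), x])
    slopes = np.empty(reps)
    for r in range(reps):
        y = 1.0 + true_slope * x + rng.normal(scale=noise_sd, size=n)
        slopes[r] = np.linalg.lstsq(design, y, rcond=None)[0][1]
    return slopes

small, large = simulate_slopes(n=50), simulate_slopes(n=200)
# Unbiased: the average estimate sits near the true slope of 2.0.
# Standard error: the spread of the estimates shrinks as the sample grows.
print(f"n=50:  mean={small.mean():.3f}, SE={small.std(ddof=1):.3f}")
print(f"n=200: mean={large.mean():.3f}, SE={large.std(ddof=1):.3f}")
```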
Although regression analysis is relatively robust, several assumptions of multiple regression are not robust to violation (Osborne & Waters, 2002). These assumptions will be the focus of the following discussion.

Assumption of Linearity

In its simplest form, multiple regression requires a linear relationship between the independent and dependent variables (Osborne & Waters, 2002). To understand the assumption of linearity, it is important to understand what may happen when the relationship is non-linear. Osborne and Waters (2002) note that if a non-linear relationship exists between the independent and dependent variables, the results of the multiple regression analysis are likely to underestimate the true relationship between the variables. This underestimation increases the risk of a Type II error for the predictor in question and, in multiple regression, the risk of a Type I error for other predictors that share variance with it (Osborne & Waters, 2002). If we consider our previous example, in which the researcher seeks to predict college SAT scores from standardized math test scores, standardized English test scores, general attitude toward education and overall study habits, a Type I error would mean that the researcher reports that overall study habits, for example, significantly predict college SAT scores when in fact they do not.

There are several ways a researcher can check for violations of this assumption. To gain information regarding linearity, the researcher may examine a plot of standardized (or studentized) residuals against predicted values produced by a statistical software program (Osborne & Waters, 2002; Stevens, 2009). If the assumption of linearity is not violated, the researcher can expect to see the residuals scattered randomly around the horizontal line, as seen in the figure below left (Stevens, 2009). Generally speaking, any systematic pattern or clustering of the residuals suggests that the assumption of linearity has been violated (Stevens, 2009). For example, the figure below right depicts a pattern and clusters that would suggest a violation of the assumption of linearity has occurred.
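A residuals-versus-predicted plot is usually inspected by eye. The sketch below computes the quantities such a plot displays, assuming a single simulated predictor, and adds a rough numeric stand-in for a "systematic pattern": the correlation between the residuals and the squared, centered predicted values. This helper (curvature_signal) is hypothetical, not a standard named test. Least-squares residuals are uncorrelated with the predicted values by construction, so a clearly non-zero correlation with their squares hints at a curved pattern.

```python
import numpy as np

def residuals_vs_fitted(X, y):
    """Return predicted values and standardized residuals (residual / root mean squared error)."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float).reshape(len(y), -1)
    design = np.column_stack([np.ones(len(y)), X])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ coefs
    resid = y - fitted
    df_resid = len(y) - design.shape[1]
    return fitted, resid / np.sqrt(resid @ resid / df_resid)

def curvature_signal(fitted, std_resid):
    """Hypothetical helper: correlation of residuals with squared centered predictions (near 0 if no curve)."""
    centered = fitted - fitted.mean()
    return np.corrcoef(centered ** 2, std_resid)[0, 1]

# Simulated data: one truly linear relationship, one with a quadratic term
rng = np.random.default_rng(2)
x = rng.uniform(-3, 3, size=300)
y_linear = 2 + 1.5 * x + rng.normal(size=300)
y_curved = 2 + 1.5 * x + 1.0 * x ** 2 + rng.normal(size=300)

for label, y in [("linear", y_linear), ("curved", y_curved)]:
    fitted, z = residuals_vs_fitted(x, y)
    print(label, round(curvature_signal(fitted, z), 2))
# To draw the plot itself with matplotlib: plt.scatter(fitted, z); plt.axhline(0)
```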

Statistical output can also assist with checking the assumption of linearity. For example, the researcher can use the lack-of-fit test, as seen in the figure below, to decide whether to reject the null hypothesis that a linear regression model is appropriate. In the figure, the F statistic is F = 1.49 with p = .039. Under this test, a non-significant result (p > .05) means that the null hypothesis is not rejected and the assumption of linearity is supported, whereas a p value below .05, such as the one reported, would indicate a significant lack of fit.
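The lack-of-fit test splits the residual sum of squares into pure error (scatter of Y among cases that share the same X value) and lack of fit (distance between those group means and the fitted line), then compares them with F = MS(lack of fit) / MS(pure error). The sketch below assumes a single predictor with repeated X values, which this form of the test requires; the data are hypothetical and built so the results can be checked by hand. Software output may use a more general version for several predictors.

```python
import numpy as np
from scipy import stats

def lack_of_fit_test(x, y):
    """Lack-of-fit F test for a straight-line model; x must contain repeated values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    design = np.column_stack([np.ones(n), x])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    sse = np.sum((y - design @ coefs) ** 2)            # residual SS of the linear model
    levels = np.unique(x)
    c = len(levels)                                     # number of distinct x values
    # Pure-error SS: scatter of y around its own mean within each distinct x value
    sspe = sum(np.sum((y[x == v] - y[x == v].mean()) ** 2) for v in levels)
    sslf = max(sse - sspe, 0.0)                         # lack-of-fit SS (clipped for rounding)
    df_lf, df_pe = c - 2, n - c                         # 2 parameters: intercept and slope
    F = (sslf / df_lf) / (sspe / df_pe)
    return F, stats.f.sf(F, df_lf, df_pe)

# Hypothetical data: six x values, three replicates each
x = np.repeat(np.arange(1, 7), 3)
noise = np.tile([-0.5, 0.0, 0.5], 6)
F_lin, p_lin = lack_of_fit_test(x, 1 + 2 * x + noise)   # group means lie on a line
F_quad, p_quad = lack_of_fit_test(x, x ** 2 + noise)    # group means follow a curve
print(F_lin, p_lin)    # F near 0, p near 1: linearity not rejected
print(F_quad, p_quad)  # F = 112, tiny p: significant lack of fit
```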

Assumption of Independence of Errors

Because multiple regression is concerned with predicting a dependent variable from a set of predictors (Stevens, 2009), the assumption of independence of errors is also important to the successful prediction of results. This assumption is related to measurement and is of particular note when the researcher is attempting to reproduce the relationships present in the population from which the research sample is drawn (Osborne & Waters, 2002). More specifically, the effect sizes of other variables in a multiple regression analysis can be overestimated if a covariate is not reliably measured, because the full effect of the covariate would not be removed (Osborne & Waters, 2002). Any unintended influences captured by the measurements constitute error and will influence the results of multiple regression if they are not accounted for in an appropriate manner (Christensen & Bedrick, 1997). Multiple regression assumes that the error term is not correlated with any of the independent variables (Christensen & Bedrick, 1997). It also assumes that the errors, or residuals, are independent of one another (Stevens, 2009). Violations of this assumption can reflect omitted variables, reverse causation and/or general measurement error (Christensen & Bedrick, 1997). More specifically, omitting a relevant variable that is correlated with other variables included in the regression will result in a violation of this assumption. Likewise, a violation is likely if the dependent variable has a causal effect on any of the independent variables and/or if the independent variables are measured with error (Christensen & Bedrick, 1997).

There are several ways a researcher can check for violations of this assumption. Generally speaking, the researcher wants to ensure that the size of one residual does not influence the size of another (Jeng & Martin, 2006). Statistical output can assist the researcher in identifying violations of the assumption of independence of errors. For example, the researcher can use the Durbin-Watson statistic, presented in the figure below, to evaluate whether adjacent residuals are correlated with one another (Jeng & Martin, 2006). The Durbin-Watson statistic ranges from 0 to 4.
In order for the assumption to be satisfied, the Durbin-Watson statistic needs to be approximately 2, though the literature suggests that a value between 1.50 and 2.50 is acceptable (Jeng & Martin, 2006). For example, the Durbin-Watson statistic presented in the figure is considered acceptable, and thus the assumption of independence of errors would be satisfied in this case.
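The Durbin-Watson statistic is d = sum of (e_t - e_(t-1))^2 divided by the sum of e_t^2, computed on the residuals in the order the observations were collected. A minimal sketch follows; the 1.50 to 2.50 rule of thumb is the one cited above, and the simulated residual series are hypothetical. If statsmodels is available, statsmodels.stats.stattools.durbin_watson computes the same quantity.

```python
import numpy as np

def durbin_watson(residuals):
    """Durbin-Watson d = sum((e_t - e_{t-1})^2) / sum(e_t^2) for residuals in observation order."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

def dw_acceptable(d, low=1.5, high=2.5):
    """Rule of thumb described above: values between 1.50 and 2.50 are treated as acceptable."""
    return low <= d <= high

rng = np.random.default_rng(4)
independent = rng.normal(size=5000)                 # no autocorrelation: d should be near 2
trending = np.cumsum(rng.normal(size=5000)) * 0.1   # strongly positively autocorrelated series
trending -= trending.mean()
for label, e in [("independent", independent), ("autocorrelated", trending)]:
    d = durbin_watson(e)
    print(label, round(d, 2), dw_acceptable(d))
```

Values well below 2 point to positive autocorrelation (neighbouring residuals alike); values well above 2 point to negative autocorrelation (neighbours alternating in sign).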

Assumption of Homoscedasticity

In order to understand the assumption of homoscedasticity, the researcher must comprehend its definition. Homoscedasticity means that the variance of errors is the same across all levels of the independent variable (Osborne & Waters, 2002). In other words, the dependent variable would show the same amount of variability at each value of the independent variable (Aiken & West, 1991). Essentially, the researcher wants to see roughly the same amount of variance across all of the values examined in the analysis. If all of the random variables in a sequence, or vector, have the same variance, the researcher would say that there is homogeneity of variance and that the sequence is homoscedastic (Stevens, 2009). Violation of the assumption of homoscedasticity can distort findings, weaken the analysis and increase the possibility of Type I error (Osborne & Waters, 2002). A researcher can test for violations of the assumption of homoscedasticity in several ways. A visual review of the plot of standardized residuals against predicted values, the same plot used to check the previously discussed assumption of linearity, can help detect violations of homoscedasticity. If the assumption of homoscedasticity is met, the plot, as depicted in the figure below, will show residuals that are evenly spread about the horizontal line (Osborne & Waters, 2002).


Should the researcher find that the residuals are not evenly spread, they could conclude that the data are more likely heteroscedastic than homoscedastic. Some statistical methods are also useful for checking the assumption of homoscedasticity, or homogeneity of error variance. For instance, the researcher may use the Breusch-Pagan test, available in many statistical software packages, to check for violations of this assumption (Osborne & Waters, 2002). The figure below displays the Breusch-Pagan test as provided in the statistical output.

Using this output, the researcher decides whether to reject the null hypothesis that the variance of the residuals is the same for all values of the independent variable. For example, in the figure provided, the probability of the Breusch-Pagan statistic is p = .614, which is non-significant, so the null hypothesis is not rejected and the assumption of homogeneity of error variance would be satisfied. These results can be used to strengthen and support the conclusions about homoscedasticity drawn from the scatter plot of residuals discussed previously.
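One common form of the Breusch-Pagan test regresses the squared residuals on the predictors and compares LM = n x R^2 from that auxiliary regression with a chi-square distribution whose degrees of freedom equal the number of predictors. The sketch below implements this studentized (Koenker) variant with NumPy and SciPy; whether a particular software package reports this variant or the original Breusch-Pagan form is an assumption to check against its documentation. The data are simulated: one set has constant spread, the other has spread that grows with X.

```python
import numpy as np
from scipy import stats

def breusch_pagan(X, y):
    """Studentized (Koenker) Breusch-Pagan test: LM = n * R^2 of squared residuals regressed on X."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float).reshape(len(y), -1)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    e2 = (y - design @ coefs) ** 2                          # squared OLS residuals
    aux, *_ = np.linalg.lstsq(design, e2, rcond=None)       # auxiliary regression on the predictors
    r2 = 1 - np.sum((e2 - design @ aux) ** 2) / np.sum((e2 - e2.mean()) ** 2)
    lm = n * r2
    return lm, stats.chi2.sf(lm, df=k)                      # H0: constant error variance

rng = np.random.default_rng(5)
x = rng.uniform(1, 10, size=500)
y_equal = 1 + 2 * x + rng.normal(scale=2.0, size=500)       # same spread at every x
y_fanning = 1 + 2 * x + rng.normal(size=500) * x            # spread grows with x
print(breusch_pagan(x, y_equal))
print(breusch_pagan(x, y_fanning))
```

As in the text, a non-significant p value leaves the null hypothesis of equal error variance standing, while a small p value points to heteroscedasticity.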

Assumption of Normality

The fourth assumption that will be discussed is the assumption of normality. Multiple regression assumes that the variables used in the analysis are normally distributed (Hindes, 2012); strictly speaking, the assumption concerns the distribution of the errors, which the residuals estimate. Variables that are normally distributed form a relatively consistent bell-shaped curve, as will be discussed further in the figures to follow (Stevens, 2009). The assumption of normality is influenced by the size of the sample used (Stevens, 2009). More specifically, if the researcher uses a sufficiently large sample, the assumption of normality is more likely to be met (Pfaffenberger & Dielman, 1991). The Central Limit Theorem states that "the sum of independent observations having any distribution whatsoever approaches a normal distribution as the number of observations increases" (Stevens, 2009, p. 221). Further, Bock (1975) suggests that even distributions that depart from normality, due to outliers or kurtosis for example, will approximate normality when 50 or more observations are summed. Variables that do not follow a normal distribution are typically highly skewed or kurtotic and/or contain considerable outliers (Osborne & Waters, 2002). Given a general understanding of the assumption of normality, the researcher also needs to know how to check for violations of it. The assumption of normality can be assessed through visual inspection of data plots, skew, kurtosis and/or P-P plots (Osborne & Waters, 2002; Stevens, 2009). The researcher may also gain information regarding normality by considering the presence of outliers, which can be identified by inspecting a histogram or stem-and-leaf plot derived from the frequency distribution (Osborne & Waters, 2002). The figure below represents a histogram that follows a relatively normal pattern of distribution with the exception of the extreme outlier shown in red.
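The Central Limit Theorem statement quoted above can be illustrated with a short simulation. The sketch below assumes an exponential distribution as a stand-in for a strongly skewed variable; for a sum of n independent exponential draws the theoretical skewness is 2/sqrt(n), so it shrinks toward the value of 0 expected for a normal curve as n grows. It illustrates the trend only and is not a test of Bock's figure of 50 observations.

```python
import numpy as np
from scipy import stats

def skew_of_sums(n_obs, reps=20000, seed=3):
    """Skewness of sums of n_obs independent draws from a right-skewed (exponential) distribution."""
    rng = np.random.default_rng(seed)
    sums = rng.exponential(scale=1.0, size=(reps, n_obs)).sum(axis=1)
    return stats.skew(sums)

for n_obs in (1, 5, 50):
    # Theory: the skewness of a sum of n exponentials is 2 / sqrt(n), shrinking toward 0 (normal)
    print(n_obs, round(skew_of_sums(n_obs), 2), round(2 / np.sqrt(n_obs), 2))
```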


Because of the value circled in red, this distribution would be considered skewed. Visual inspection of the extreme values table provided in statistical output can also help the researcher gain information regarding normality. The extreme values table, presented in the figure below, lists the highest and lowest scores in the data set and can indicate the existence of outliers that might produce skew or kurtosis. Extreme values, such as those highlighted in the figure below left, have the potential to distort the data and create a skewed distribution. The information provided by the extreme values table is also supported by the box plot: by reviewing the box plot, as depicted in the figure below right, the researcher can see the same extreme values or outliers described by the extreme values table.
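An extreme values table and a box plot's outlier flags can both be approximated in a few lines. The sketch below lists the k lowest and highest scores and flags box-plot outliers using Tukey's common 1.5 x IQR fence rule; the scores are hypothetical, with 140 planted as an outlier. Statistical packages may compute quartiles (hinges) slightly differently, so their fences can differ a little from the default of np.percentile.

```python
import numpy as np

def extreme_values(x, k=5):
    """Return the k lowest and the k highest scores (highest first), like an extreme values table."""
    s = np.sort(np.asarray(x, dtype=float))
    return s[:k], s[-k:][::-1]

def boxplot_outliers(x, whisker=1.5):
    """Flag values beyond Q1 - whisker*IQR or Q3 + whisker*IQR (Tukey's box-plot rule)."""
    x = np.asarray(x, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    lo, hi = q1 - whisker * iqr, q3 + whisker * iqr
    return x[(x < lo) | (x > hi)]

scores = np.array([52, 55, 58, 60, 61, 63, 64, 66, 68, 70, 71, 73, 140])  # hypothetical scores
low, high = extreme_values(scores, k=3)
print(low, high)                   # [52. 55. 58.] [140.  73.  71.]
print(boxplot_outliers(scores))    # [140.]
```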


The researcher can also gain information about skew and kurtosis from the descriptives table of the statistical output. In a perfectly normal distribution, both skew and kurtosis will be 0 (Stevens, 2009); for kurtosis, this refers to excess kurtosis, which is what most statistical software reports. The farther the reported values are from 0, the further the distribution departs from normality (Stevens, 2009).
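The skew and kurtosis values in a descriptives table can be reproduced with SciPy, as sketched below. Setting fisher=True makes scipy.stats.kurtosis report excess kurtosis, so a normal distribution gives about 0 for both statistics; bias=False requests the bias-corrected sample formulas, which we assume, but have not verified for every package, match those reported by common statistical software. The simulated samples are hypothetical.

```python
import numpy as np
from scipy import stats

def shape_summary(x):
    """Return (skewness, excess kurtosis); both are about 0 for normally distributed data."""
    x = np.asarray(x, dtype=float)
    skew = stats.skew(x, bias=False)                      # bias-corrected sample skewness
    kurt = stats.kurtosis(x, fisher=True, bias=False)     # excess kurtosis: normal gives about 0
    return skew, kurt

rng = np.random.default_rng(6)
print(shape_summary(rng.normal(size=10000)))        # both close to 0
print(shape_summary(rng.exponential(size=10000)))   # skew near 2, excess kurtosis near 6
```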

Further, the researcher may wish to run the Kolmogorov-Smirnov and Shapiro-Wilk tests, which are also designed to provide information regarding normality (Pfaffenberger & Dielman, 1991). By reviewing the tests of normality table provided in the resulting output, the researcher can obtain information indicating the presence or absence of normality. Both tests take as their null hypothesis that the data are normally distributed. For instance, non-significant results (p of .05 or greater) indicate that the data do not depart significantly from a normal distribution, while significant results (p less than .05) indicate that the data are not normally distributed.
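Both tests can be run with SciPy; a minimal sketch follows. For each, the null hypothesis is that the data are normal, so p < .05 is the significant result that signals non-normality. One caveat, given here as general background rather than something from the sources cited above: a plain Kolmogorov-Smirnov test with the mean and standard deviation estimated from the same data is conservative, and some packages report a Lilliefors-corrected version instead (statsmodels offers statsmodels.stats.diagnostic.lilliefors). The samples are simulated.

```python
import numpy as np
from scipy import stats

def normality_tests(x, alpha=0.05):
    """Shapiro-Wilk and Kolmogorov-Smirnov tests; H0 for both is that the data are normal."""
    x = np.asarray(x, dtype=float)
    sw = stats.shapiro(x)
    z = (x - x.mean()) / x.std(ddof=1)          # standardize with the sample mean and SD
    ks = stats.kstest(z, "norm")                # conservative when mean/SD are estimated
    return {
        "shapiro_p": sw.pvalue,
        "ks_p": ks.pvalue,
        # p < alpha is significant evidence against normality; p >= alpha is not
        "normality_rejected": sw.pvalue < alpha,
    }

rng = np.random.default_rng(7)
print(normality_tests(rng.normal(size=300)))
print(normality_tests(rng.exponential(size=300)))
```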


Additionally, the researcher may review the P-P plot, also provided by the statistical output. The P-P plot shows how closely the observed cumulative probabilities of the residuals agree with those expected under a normal distribution (Stevens, 2009). The two distributions are considered equal if the plotted points follow the diagonal comparison line. The figure below depicts a normal P-P plot in which the standardized residuals follow the general path of the comparison line; this is the type of P-P plot a researcher would hope to see in a regression analysis.
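The points of a normal P-P plot can be computed directly, as sketched below: the observed cumulative probability of each sorted standardized residual is paired with the cumulative probability a normal distribution would assign to it. The plotting position i/(n + 1) is one of several conventions (packages may use others, such as Blom's formula), and max_departure is a hypothetical helper that summarizes how far the points stray from the diagonal. The residuals are simulated.

```python
import numpy as np
from scipy import stats

def pp_points(residuals):
    """Coordinates for a normal P-P plot: observed vs expected cumulative probabilities."""
    e = np.sort(np.asarray(residuals, dtype=float))
    n = len(e)
    observed = np.arange(1, n + 1) / (n + 1)    # empirical cumulative probabilities, i/(n+1)
    z = (e - e.mean()) / e.std(ddof=1)         # standardized residuals
    expected = stats.norm.cdf(z)                # cumulative probability under a normal curve
    return observed, expected

def max_departure(observed, expected):
    """Hypothetical helper: largest vertical distance from the diagonal comparison line."""
    return float(np.max(np.abs(observed - expected)))

rng = np.random.default_rng(8)
for label, e in [("normal", rng.normal(size=2000)), ("skewed", rng.exponential(size=2000))]:
    obs, exp_ = pp_points(e)
    print(label, round(max_departure(obs, exp_), 3))
# To draw the plot with matplotlib: plt.plot(obs, exp_, "o"); plt.plot([0, 1], [0, 1])
```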

Conclusion

The use of assumptions in multiple regression allows researchers to analyze data sets and draw reliable conclusions from the analysis. The discussion has shown that the researcher's ability to check the relevant assumptions has significant benefits for the research results. Although the literature reveals that the number of assumptions considered varies and is partly a matter of statistical opinion (Berry, 1993), much of the literature agrees that the assumptions identified in this discussion are important to multiple regression. For example, it is generally agreed that multiple regression requires a linear relationship between the independent and dependent variables and that the data, in particular the errors, are normally distributed. If the variance of errors is not the same across all levels of the independent variable and/or the errors are correlated with any of the independent variables, the results and subsequent conclusions of multiple regression analysis will be significantly affected.

When the researcher is able to verify that the relevant assumptions have been satisfied, they can produce estimates that may be considered reliable, unbiased and efficient.

References

Aiken, L. S., & West, S. G. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage.

Berry, W. D. (1993). Understanding regression assumptions. Newbury Park, CA: Sage.

Christensen, R., & Bedrick, J. (1997). Testing the independence assumption in linear models. Journal of the American Statistical Association, 92(439), 1006-1016. http://www.jstor.org/stable/2965565

Hindes, Y. (2012, May). Multiple regression continued. Presented in EDPS 607 Research in Applied Psychology: Multivariate Analysis lecture, University of Calgary, Calgary, Alberta.

Jeng, J. Y., & Martin, A. (2006). Residuals in multiple regression analysis. Journal of Pharmaceutical Sciences, 74(10), 1053-1057. doi:10.1002/jps.2600741006

Multiple regression. (2006). In Encyclopedia of special education: A reference for the education of the handicapped and other exceptional children and adults. Retrieved from http://www.credoreference.com.ezproxy.lib.ucalgary.ca/entry/wileyse/multiple_regression

Osborne, J., & Waters, E. (2002). Four assumptions of multiple regression that researchers should always test. Practical Assessment, Research & Evaluation, 8(2). Retrieved June 16, 2012, from http://PAREonline.net/getvn.asp?v=8&n=2

Pedhazur, E. J. (1997). Multiple regression in behavioral research (3rd ed.). Orlando, FL: Harcourt Brace.

Pfaffenberger, R. C., & Dielman, T. E. (1991). Testing normality of regression disturbances: A Monte Carlo study of the Filliben test. Computational Statistics & Data Analysis, 11(3). Retrieved from http://www.sciencedirect.com/science/article/pii/016794739190085G

Stevens, J. P. (2009). Applied multivariate statistics for the social sciences (5th ed.). New York, NY: Routledge.


- Evidence-Based Guideline Summary: Evaluation, Diagnosis, and Management of Facioscapulohumeral Muscular DystrophyДокумент10 страницEvidence-Based Guideline Summary: Evaluation, Diagnosis, and Management of Facioscapulohumeral Muscular DystrophyFitria ChandraОценок пока нет

- Unseen, Unheard, UnsaidДокумент322 страницыUnseen, Unheard, Unsaidamitseth123Оценок пока нет

- Essay - The Federal Emergency Relief Administration (FERA) 1933Документ7 страницEssay - The Federal Emergency Relief Administration (FERA) 1933SteveManningОценок пока нет

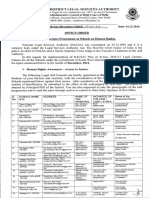

- Legal Awareness Programmes at Schools On Human RightsДокумент2 страницыLegal Awareness Programmes at Schools On Human RightsSW-DLSAОценок пока нет

- Public VersionДокумент170 страницPublic Versionvalber8Оценок пока нет

- DCS External Bursary Application Form 2022Документ3 страницыDCS External Bursary Application Form 2022Tandolwethu MaliОценок пока нет

- Written Output For Title DefenseДокумент6 страницWritten Output For Title DefensebryanОценок пока нет

- Tally Sheet (Check Sheet) : TemplateДокумент7 страницTally Sheet (Check Sheet) : TemplateHomero NavarroОценок пока нет

- At.2508 Understanding The Entitys Internal ControlДокумент40 страницAt.2508 Understanding The Entitys Internal Controlawesome bloggersОценок пока нет

- Anchoring Effect in Making DecisionДокумент11 страницAnchoring Effect in Making DecisionSembilan Puluh DuaОценок пока нет

- De ZG631 Course HandoutДокумент7 страницDe ZG631 Course HandoutmzaidpervezОценок пока нет

- Writing Qualitative Purpose Statements and Research QuestionsДокумент12 страницWriting Qualitative Purpose Statements and Research QuestionsBabie Angela Dubria RoxasОценок пока нет

- Impact of Corporate Ethical Values On Employees' Behaviour: Mediating Role of Organizational CommitmentДокумент19 страницImpact of Corporate Ethical Values On Employees' Behaviour: Mediating Role of Organizational CommitmentRudine Pak MulОценок пока нет

- SocratesДокумент119 страницSocratesLeezl Campoamor OlegarioОценок пока нет

- CourseWork ID GreenwichДокумент2 страницыCourseWork ID GreenwichQuinny TrầnОценок пока нет

- FORM SSC.1 School Sports Club Registration Form v1 SMAДокумент3 страницыFORM SSC.1 School Sports Club Registration Form v1 SMABaluyut Edgar Jr. MОценок пока нет

- Morning Sleepiness Among College Students: Surprising Reasons For Class-Time PreferenceДокумент4 страницыMorning Sleepiness Among College Students: Surprising Reasons For Class-Time PreferenceElijah NyakundiОценок пока нет

- ReviewДокумент182 страницыReviewRea Aguilar San PabloОценок пока нет

- Lecture-2.1.6Документ13 страницLecture-2.1.6gdgdОценок пока нет

- How Social Class Impacts EducationДокумент5 страницHow Social Class Impacts Educationnor restinaОценок пока нет

- CEG3185 Syllabus Winter2019Документ2 страницыCEG3185 Syllabus Winter2019MinervaОценок пока нет

- Lehmann HumanisticBasisSecond 1987Документ9 страницLehmann HumanisticBasisSecond 1987Rotsy MitiaОценок пока нет

- ISKCON Desire Tree - Voice Newsletter 06 Sept-07Документ5 страницISKCON Desire Tree - Voice Newsletter 06 Sept-07ISKCON desire treeОценок пока нет

- Proposal For MentorshipДокумент6 страницProposal For Mentorshipnaneesa_1Оценок пока нет

- JK Saini ResumeДокумент3 страницыJK Saini ResumePrateek KansalОценок пока нет

- Episode 1: The School As A Learning Resource CenterДокумент6 страницEpisode 1: The School As A Learning Resource CenterJonel BarrugaОценок пока нет