
Article

YouTube as a Platform for Publishing Clinical Skills Training Videos

David Topps, MD, Joyce Helmer, EdD, and Rachel Ellaway, PhD

Abstract

The means to share educational materials have grown considerably over the years, especially with the multitude of Internet channels available to educators. This article describes an innovative use of YouTube as a publishing platform for clinical educational materials. The authors posted online a series of short videos for teaching clinical procedures, anticipating that they would be widely used. The project Web site attracted little traffic, alternatives were considered, and YouTube was selected for exploration as a publication channel. YouTube's analytics tools were used to assess uptake, and viewer comments were reviewed for specific feedback in support of evaluating and improving the materials posted. Uptake increased substantially, with 1.75 million views logged in the first 33 months. Viewer feedback, although limited, proved useful. In addition to improving uptake, this approach also relinquishes control over how materials are presented and how the analytics are generated. Open and anonymous access also limits relationships with end users. In summary, YouTube was found to provide many advantages over self-publication, particularly in terms of technical simplification, increased audience, discoverability, and analytics. In contrast to the transitory interest seen in most YouTube content, the channel has seen sustained popularity. YouTube's broadcast model diffused aspects of the relationship between educators and their learners, thereby limiting its use for more focused activities, such as continuing medical education.

Developing learners' competence in performing clinical procedures continues to be a challenge for medical education, with many learners reporting insufficient opportunities to practice simple skills. Many practice opportunities are lost because learners are expected to observe a procedure repeatedly before trying it.1 Brief instructional videos have been shown to improve learning of clinical procedures.2,3 However, the ability of instructional videos to support learning depends on learners' access to such resources, particularly at the bedside.4 Creating and publishing online videos of clinical procedures is a growing part of mainstream academic publishing.5 The means to publish educational videos have expanded in recent years, especially with the many Internet channels now available to educators.6 However, not every channel may be appropriate for educational purposes.4 Some channels seem to be relatively respectable in academic circles, such as MedEdPORTAL7 and the Multimedia Educational Resource for Learning and Online Teaching (MERLOT),8 whereas other channels, including YouTube, have been linked to unprofessional behaviors9 and educational superficiality.10 YouTube's defenders have also described it as informative, accurate, and useful, particularly in combination with other learning materials.11 Despite the availability of these channels, there has been little investigation of the advantages of using particular publishing systems for medical education. This article describes our process for selecting a platform for online educational videos. We discuss our experience with this platform and offer recommendations for identifying effective ways to disseminate instructional materials online.

Dr. Topps is professor, Department of Family Medicine, University of Calgary, Calgary, Alberta, Canada. Dr. Helmer is assistant professor of clinical sciences, Northern Ontario School of Medicine, Sudbury, Ontario, Canada. Dr. Ellaway is assistant dean of curriculum and planning, Northern Ontario School of Medicine, Sudbury, Ontario, Canada. Correspondence should be addressed to Dr. Topps, Sheldon Chumir Centre, 8th Floor, 1213 4 St. SW, Calgary; telephone: (855) 210-7525; e-mail: topps@ucalgary.ca. Acad Med. 2013;88:192-197. First published online December 23, 2012. doi: 10.1097/ACM.0b013e31827c5352

The PocketSnips Project

In 2005, a group of health care professionals in Northern Ontario initiated the PocketSnips Project to create a series of clinical skills videos that were to be published online as reusable learning objects.12 These were targeted at medical and nursing students, residents, and clinicians in practice. A multidisciplinary project team identified appropriate topics and developed source materials for making the videos.1 We used actors wherever possible and used patients only where a particular condition (such as a dislocated shoulder) needed to be seen. The videos were mostly shot in close-up and were presented with an overdubbed audio narrative to anonymize the participants as much as possible. All participants featured in the videos gave informed consent for the materials to be published as open-access online resources. The Laurentian University IRB approved the production of this video series. The production process closely followed medicolegal regulations, in particular the rules of Ontario's Freedom of Information and Protection of Privacy Act.13 The videos focused on the kinetic elements of each procedure so as to keep video clip lengths short. We published the videos on a dedicated PocketSnips project Web site14 under a Creative Commons license15 that allowed third parties to freely use and modify the resources. The PocketSnips Web site allowed users to provide feedback on any of the videos posted there. After six months, there had been fewer than 200 views of the videos and very little feedback had been provided. As a result, we felt that we had not managed to engage with the broad national and international populations of health professional practitioners and trainees that we had anticipated. We also encountered technical challenges in video distribution (particularly insufficient bandwidth and slow playback), even at this low usage level, and decided to look for alternative ways to disseminate the material.

Academic Medicine, Vol. 88, No. 2 / February 2013

Selecting a Publishing Platform

In the end, the perceived advantages to the PocketSnips Project that YouTube offered outweighed the disadvantages, and we selected this as our alternative publishing platform. We closely monitored uptake of the videos on this platform through regular review of user comments and viewer counts over the first few months for evaluation and quality assurance purposes.

Using YouTube

July 2008 to a project-specific YouTube channel called CliniSnips.19 We named the channel by mutual agreement with other groups creating similar video material to create a neutral venue to be shared by all contributors. The 15 PocketSnips videos we uploaded to YouTube varied between 1:29 and 5:03 minutes in length (mean 2:41 minutes). The titles were Central Line, Circulation Test, Digital Block, Foley Catheter, Intravenous Line, Local Anesthetic, Nasogastric Tube, Shave Biopsy, Shoulder Dislocation, Simple Suturing, Sterile Gloves, Ulnar Gutter Cast, Vandenbos Procedure, Venipuncture, and Volar Slab Cast. Using simple analytics reports Thirty-three months after we posted the clips on YouTube, we compiled the data for the 10 most-viewed videos for analysis (see Table 2). Other videos subsequently added to the CliniSnips channel were omitted from detailed analysis. The data came from two sources: analytics data from YouTube (numbers of views, etc.) and the user-generated comments for each video. YouTube provided analytics data via a standard set of Web-based reports based on Googles standard analytics suite of tools (note that YouTubes analytics tool set is now called Insight). We filtered the analytics reports on the basis of date ranges and report functions (e.g., Demographics). Some of the calculated reports that YouTube provides are basic and unambiguous, such as the total number of views per video, individual view duration, or viewers location; some

Disappointed with the PocketSnips sites failure to realize our goals for distributing the videos, we began evaluating alternative publishing channels in early 2008. A subgroup of PocketSnips project members considered four candidate systems: MedEdPORTAL,7 Health Education Assets Library,16 MERLOT,8 and YouTube. At the time, other video distribution channels such as iTunesU and YouTubeEDU were not available. Despite having certain academic advantages, such as peer-review processes and a specific instructional focus, the education-specific platforms met fewer of our requirements than YouTube did (see Table 1). Some project members expressed concerns about using YouTube, in particular the lack of a disclaimer mechanism to limit the authors liability for the content presented (a feature which was provided on the PocketSnips project Web site). We mitigated this fact by adding descriptions to each YouTube video recommending that viewers seek further information from the PocketSnips Web site. There were also concerns as to whether an essentially entertainmentoriented channel was suitable for clinical training materials. Often, projects that involve the public distribution of educational materials raise concerns from faculty, such as reluctance to share materials and desire to obtain academic credit for publishing learning materials.17 Such concerns were less important to those involved in the PocketSnips project than selecting a platform that could engage a larger audience and record users feedback on the videos posted there. Additionally, we considered whether similar material was already available on the platform, the effort required to publish materials on the platform, the platforms usability, and the user-tracking information the platform provided.

Loading a video clip onto YouTube required minimal technical skill and was much simpler than doing so on the original local video-streaming server. YouTube automatically compresses the source material into a streaming format for easy access. This also solved our problem of choosing which video format to use because, prior to this, mobile devices did not share a common video format or player. Once we uploaded the YouTube videos, we annotated them with metadata tags and descriptive links that pointed back to the original videos on the PocketSnips project Web site.14 YouTube provides basic feedback tools, including the ability for viewers to add comments and vote on whether they liked a video, and recommender systems (similar to Amazons Customers also bought feature). Comments and voting could be disabled for each video at the authors discretion. Google Analytics were added to YouTube shortly after we created the channel,18 extending the available information about how the videos were being used. We uploaded the PocketSnips videos (with Creative Commons licenses) in

Table 1

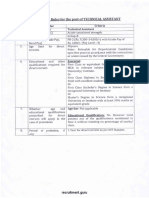

Selection Criteria and Ratings of Four Platforms Considered for Dissemination of PocketSnips Clinical Skills Educational Videos, 2008 Platform Criteria Size of user base Similar material already in the system Time to publish Usability/discoverability User feedback Video streaming services MedEdPORTAL HEAL* Small (health care Small (health care education only) education only) Yes Yes Long (peer-reviewed) Medium Some No Short Medium Some No No MERLOT* YouTube Medium Large (education only) (public) No Yes Long (peer-reviewed) Medium Some No No Instant Easy Extensive Yes Yes

Content conversion services No

*HEAL indicates Health Education Assets Library; MERLOT, Multimedia Educational Resource for Learning and Online Teaching.

Academic Medicine, Vol. 88, No. 2 / February 2013

193

Article

are more complex and less well defined (such as Attention, Engagement, and Discovery). We collated viewer comments and analyzed them for common themes using an open coding process. The underlying analytics data are now directly accessible via the YouTube application programming interface (API),20 but we have not had the programming resources to support this approach. Thus, our investigation of the user data was based on the standard analytics reports provided through YouTube. However, Google and YouTube (as its subsidiary) do not publish the algorithms on which their analytics reports are based, so we cannot comment on the underlying methodology used to generate them. We extracted the analytics data from YouTube in April 2011. There had been more than 1.74 million views on the channel during 33 months, with the most popular video, Intravenous Line, garnering 456,244 views. The mean number of views per video was 163,316. The analytics reports provided a detailed breakdown of viewer behaviors, including the exact amount of time viewers spent watching each video. The total aggregate viewing time for the top 10 videos was more than 74,000 hours. The CliniSnips channel attracted over 2,400 views

per day during the 18 months prior to preparing this article. It is particularly encouraging to see on subsequent monitoring that the usage rate has dropped very little, suggesting that the channel has reasonable sustainability. This contrasts strongly with general viewing patterns for YouTube videos, which show a marked decline over about six weeks.21 Despite their simplified approach, the Google Analytics reports we retrieved contained a level of detail that provided more useful information than hit counts alone, such as whether a video was viewed on a mobile device. The proportion of mobile viewers, initially small at around 3%, rose steadily to 9%. The Demographics report revealed that viewers were 56% male with a modal age of 45. Because of the nature of the videos, we anticipated that they would be most useful for trainees or less experienced clinicians, so the modal age was higher than expected. The Attention score reflected the time viewers spent on watching each video and is described by YouTube as an indicator of your videos ability to hold viewers attention compared to videos of similar length.22 The CliniSnips videos scored highly, with an average of 4.330.40, compared with the general mean of 3.3 (out of 5).
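The authors note that the data behind these reports became available through the YouTube Analytics API but that they did not use it. The following minimal Python sketch shows one way a "top videos by views" summary might be reproduced programmatically. It assumes the current YouTube Analytics API v2 `reports.query` method and its documented parameters (`ids`, `startDate`, `endDate`, `metrics`, `dimensions`, `sort`, `maxResults`). The sketch only builds the request parameters and reshapes a returned result table. Sending the request, for example through google-api-python-client with OAuth credentials, is assumed and not shown. The helper names are hypothetical.

```python
from __future__ import annotations

from typing import Any


def build_top_videos_query(start_date: str, end_date: str, max_results: int = 10) -> dict[str, Any]:
    """Hypothetical helper: parameters for an assumed YouTube Analytics API v2
    reports.query call that ranks the authorized channel's videos by views."""
    return {
        "ids": "channel==MINE",        # channel owned by the authorized user
        "startDate": start_date,       # YYYY-MM-DD
        "endDate": end_date,           # YYYY-MM-DD
        "metrics": "views,estimatedMinutesWatched,averageViewDuration",
        "dimensions": "video",         # one row per video ID
        "sort": "-views",              # leading '-' sorts in descending order
        "maxResults": max_results,
    }


def rows_to_records(response: dict[str, Any]) -> list[dict[str, Any]]:
    """Hypothetical helper: convert a result table (columnHeaders + rows)
    into one dict per row, keyed by column name."""
    names = [header["name"] for header in response.get("columnHeaders", [])]
    return [dict(zip(names, row)) for row in response.get("rows", [])]


def mean_views(records: list[dict[str, Any]]) -> float:
    """Unweighted mean of the 'views' column across the returned videos."""
    if not records:
        raise ValueError("the result table contained no rows")
    return sum(record["views"] for record in records) / len(records)


if __name__ == "__main__":
    # Made-up result table in the assumed response shape; IDs and counts are illustrative only.
    sample = {
        "columnHeaders": [
            {"name": "video", "columnType": "DIMENSION", "dataType": "STRING"},
            {"name": "views", "columnType": "METRIC", "dataType": "INTEGER"},
        ],
        "rows": [["videoA", 300], ["videoB", 100]],
    }
    print(build_top_videos_query("2008-07-01", "2011-04-30"))
    print(mean_views(rows_to_records(sample)))  # 200.0
```

Because the sketch keeps query building separate from response parsing, the parsing step can be checked offline against a saved response. Unlike the proprietary Attention or Engagement scores, raw counts such as views can be aggregated in whatever way the analyst chooses.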

The Viewing Habits report identified the segments of the videos that generated the most interest or were most often reviewed or replayed. Most viewers watched to the end of the clips, with some subtle peaks in the curve where details in the procedural techniques were reviewed several times. We used this type of analysis to refine certain clips to better match users' attention span. For example, we cut less important segments and annotated segments with high viewing density to further explain the events being portrayed. A video's Community Engagement report was based on the quantity of discussion on the video and the number of links that users made from it to other sites. Google provides no details on how this score is generated, and this lack of transparency made it less useful than other metrics. The Discovery report reflected how viewers reached a particular video, including whether they arrived from another YouTube video or Web site. Again, the algorithms used are a Google trade secret, but this report did allow us to explore why activity had increased for a particular video. For example, an increase in viewing rates for the Intravenous Line video around January 2011 coincided with the video being featured on the Medifix video referral site at that time.23 Google Analytics18 also tracked a small but nonsignificant increase in traffic to the original PocketSnips Web site after the videos were posted on YouTube.

Social data analysis

YouTube provides viewers with various ways of giving feedback, including approval ratings and text comments. These could be enabled, disabled, or hidden on a per-video basis. Comments and ratings were enabled for CliniSnips content (see Table 2).

We compiled the 761 comments from the 10 most-viewed videos and entered them into Atlas.ti (Atlas.ti Scientific Software Development GmbH, Berlin, Germany) for thematic analysis using grounded theory techniques.24 We grouped similar concepts into two main categories to create a common axial coding model. The first was responses, including applause, complaint, critique, discussion, emotional, and flippant responses (examples of each are given in Table 3). The second was educational activities constructed around the CliniSnips videos. This second category had three subordinate codes:

(1) health-care-provider-to-patient activities, in which, for example, a discussion might start with a lay question that generated responses that appeared to come from health care providers;

(2) health-care-provider-to-health-care-provider activities, exemplified by discussions between health care professionals about the merits of alternative methods for a particular procedure; and

(3) patient-to-patient education activities, such as comments from people anxious about an upcoming similar procedure or from people who had already had the procedure, often reassuring the anxious.

Although these comments seemed credible and illustrated some interesting social processes, there was no practical way to confirm that they were made by genuine patients or health care professionals.

Table 2

Google Analytics-Driven User Data for the Top 10 PocketSnips Clinical Skills Educational Videos on YouTube as of June 18, 2011

| Video title | Duration (seconds) | No. of views | Attention score | No. of comments* | Ratio of comments to views* |
|---|---|---|---|---|---|
| Central line insertion | 258 | 187,856 | 4.1 | 41 | 1:4,581 |
| Intravenous line insertion | 146 | 456,244 | 4.8 | 212 | 1:2,152 |
| Digital block | 89 | 20,696 | 4.9 | 4 | 1:5,174 |
| Foley catheter | 113 | 407,379 | 1.4 | 27 | N/A† |
| Local anesthetic | 103 | 23,598 | 4.0 | 9 | 1:2,622 |
| Nasogastric tube | 176 | 242,425 | 4.8 | 114 | 1:2,126 |
| Suturing | 303 | 53,960 | 3.9 | 40 | 1:1,349 |
| Venipuncture | 142 | 196,001 | 4.8 | 151 | 1:1,298 |
| Vandenbos procedure for ingrown toenails | 137 | 19,494 | 4.1 | 150 | 1:129 |
| Shave and punch skin biopsies | 147 | 25,513 | 4.0 | 13 | 1:1,962 |

*The mean number of comments per video was 76, and the mean ratio of comments to views was 1:2,377.
†Comments were suspended for this video, so we did not calculate a ratio of comments to views.
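The summary figures reported for Table 2 can be recomputed from the per-video values. The following short Python sketch uses the views and comments transcribed from Table 2. It shows that the footnote's 1:2,377 is an unweighted mean of the nine per-video ratios, excluding the Foley catheter video whose comments were suspended. Dividing total views by total comments over the same nine videos gives a pooled ratio of about 1:1,670 instead. The two summaries weight the videos differently, so they need not agree. The function and variable names are illustrative only.

```python
from __future__ import annotations

# Per-video values transcribed from Table 2 (top 10 videos, as of June 18, 2011).
# Each entry: (views, comments, comments_suspended)
TABLE_2 = {
    "Central line insertion": (187_856, 41, False),
    "Intravenous line insertion": (456_244, 212, False),
    "Digital block": (20_696, 4, False),
    "Foley catheter": (407_379, 27, True),
    "Local anesthetic": (23_598, 9, False),
    "Nasogastric tube": (242_425, 114, False),
    "Suturing": (53_960, 40, False),
    "Venipuncture": (196_001, 151, False),
    "Vandenbos procedure for ingrown toenails": (19_494, 150, False),
    "Shave and punch skin biopsies": (25_513, 13, False),
}


def summarize(table: dict[str, tuple[int, int, bool]]) -> dict[str, float]:
    """Recompute the Table 2 summary statistics."""
    views = [v for v, _, _ in table.values()]
    comments = [c for _, c, _ in table.values()]
    # Only videos whose comments were not suspended get a views-per-comment ratio.
    enabled = [(v, c) for v, c, suspended in table.values() if not suspended]
    per_video = [v / c for v, c in enabled]
    return {
        "mean_views": sum(views) / len(views),           # all 10 videos
        "mean_comments": sum(comments) / len(comments),  # all 10 videos
        # Unweighted mean of per-video ratios: each video counts equally.
        "mean_of_ratios": sum(per_video) / len(per_video),
        # Pooled ratio over the same videos: total views / total comments.
        "pooled_ratio": sum(v for v, _ in enabled) / sum(c for _, c in enabled),
    }


if __name__ == "__main__":
    stats = summarize(TABLE_2)
    print(int(stats["mean_views"]))        # 163316
    print(round(stats["mean_comments"]))   # 76
    print(round(stats["mean_of_ratios"]))  # 2377, i.e., about 1 comment per 2,377 views
    print(round(stats["pooled_ratio"]))    # 1670
```

The comment counts also sum to the 761 comments analyzed. The mean of 163,316 views applies only to the top 10 videos, not to the whole channel.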

Was It Successful?

Our team considered the adoption of YouTube a success because of the huge increase in uptake and the value added by the analytics and comments. The increased exposure of our material also generated additional funding and new opportunities to collaborate with other teams. Many of the team's original concerns about YouTube, in particular that it was too frivolous for the subject matter, proved unfounded. The modal viewing age was higher than expected for our target audience, although, because the available data are self-declared, the real numbers remain unknown. The number of comments that appeared to come from patients may partly explain the higher modal age. YouTube applied age restrictions to some videos. This appears to be a somewhat arbitrary process, and YouTube does not notify authors when it occurs. Further analysis did not suggest that the age restrictions had much effect on the quality of the comments received.

The main drawbacks of using YouTube were its generic page format, an inability to track and identify specific users, and a lack of information on how analytics were generated. We considered simplified data on very large numbers of viewers to be much more useful than more detailed data on the very small number of viewers of our original PocketSnips site. Viewers were anonymous, and apart from any feedback they left, we had little indication of whether they represented the target population of health professionals. It was therefore difficult to assess specific educational outcomes in the original target population. Learning analytics is a growing aspect of using educational technologies,25,26 and the YouTube analytics tools provided many insights into how the videos were used. However, Google does not disclose the analytics algorithms, so the results must be interpreted with significant caution. It is now possible to gain more direct access to YouTube analytics data through the API. This approach has been useful for other groups,21,27 but it was beyond our programming expertise.

In creating a shared CliniSnips channel, we hoped that other video providers would use the channel to share their clinical educational materials. This hope was not realized, however. CliniSnips has so far been used only for PocketSnips material, and other groups run their own channels. The lack of a single portal has been offset by the greatly increased discoverability that YouTube provides through its metadata and search tools, along with the cross-referencing multiplier effect of its automatic recommender system. Examining the referral sources through the YouTube Discovery report has shown this to be a major contributor to the uptake of our videos. Although user feedback was useful, fewer than 1 in 2,300 viewers added comments. This low density of contributors is comparable to other reports.27 The level of discussion in the comments was variable and included much trivial content, although it was interspersed with more focused comments that raised a number of useful suggestions. For instance, one discussion thread explored variations on local aseptic techniques. User feedback sometimes merited a change to the material, as with the Nasogastric Tube Insertion video. In these cases, revising the sound track was relatively easy because we had avoided "talking head" shots when developing the videos. Ultimately, we made eight changes based on the feedback provided during the lifetime of the project. New features in YouTube, added since our detailed analysis period, have enabled more powerful annotation techniques, with cross-linking to other YouTube videos and channels. We are exploring new approaches that allow us to embed links to specific YouTube video segments from our PocketSnips Web site, allowing for more detailed analysis of users' navigation behaviors.

The authority of the material was not raised as an issue in the comments from viewers, which seems consistent with the findings of other authors.28,29 An internal review against quality assurance frameworks for health information, such as DISCERN30 and HONcode,31 raised no issues. However, these frameworks were not well aligned to our needs and mostly focus on information for patients. Our principal focus was on providing information to health care professionals, who less often refer to formal portals and tend to use foraging behaviors to find suitable information.29 We did not pursue third-party continuing medical education (CME) accreditation of CliniSnips, and we acknowledge that this decision had drawbacks. For instance, at least some of the 74,000 viewer hours logged were likely relevant to CME, but such activity was not eligible for CME credit. Future work will explore whether CME credits and YouTube publishing can be meaningfully combined. Our use of YouTube was not an exclusive option, and we continue to maintain the PocketSnips project Web site in addition to the CliniSnips YouTube channel. Although we did not originally select MedEdPORTAL and MERLOT, we are considering publishing the PocketSnips videos on these platforms in addition to YouTube. Publishing the materials through a peer-reviewed model may facilitate the use of PocketSnips videos as registered CME activities.

Table 3

Characteristics of User Comments Derived From a Thematic Textual Analysis of Comments on the Top 10 PocketSnips Clinical Skills Educational Videos on YouTube, 2011

| Category of comment | Percentage of comments in category | Example |
|---|---|---|
| Applause | 7.9% | "Intense stuff. Can't wait to do my first central line." |
| Complaint | 0.4% | "too bad the student hasn't had an English class." |
| Critique | 9.1% | "the thumb should occlude the hub as soon as the syringe is removed." |
| Discussion | 52.7% | "in U.S., can nurses suture or put in central lines?" |
| Emotional responses | 12.9% | "I almost fainted." |
| Flippant responses | 17% | "LOL so far." |
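The percentages in Table 3 are shares of the coded comments in each response category. The authors performed the coding itself in Atlas.ti. The following Python sketch only illustrates the final tallying step. It assumes that each comment carries exactly one category code, which the article does not state, and the sample codes are invented for illustration.

```python
from __future__ import annotations

from collections import Counter

# Response categories from Table 3.
CATEGORIES = (
    "Applause",
    "Complaint",
    "Critique",
    "Discussion",
    "Emotional responses",
    "Flippant responses",
)


def category_percentages(codes: list[str]) -> dict[str, float]:
    """Share of coded comments in each category, rounded to one decimal place.

    Assumes one category code per comment; any label outside CATEGORIES is
    treated as a coding error.
    """
    counts = Counter(codes)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected categories: {sorted(unknown)}")
    total = len(codes)
    if total == 0:
        raise ValueError("no coded comments")
    # Counter returns 0 for categories that never occur.
    return {category: round(100 * counts[category] / total, 1) for category in CATEGORIES}


if __name__ == "__main__":
    # Hypothetical coding of ten comments.
    sample = ["Discussion"] * 6 + ["Applause"] * 2 + ["Flippant responses"] * 2
    print(category_percentages(sample))
```

Each percentage is rounded independently, so in general such a column can sum to slightly more or less than 100%. The values in Table 3 happen to sum to exactly 100%.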

Recommendations

Our experiences allow us to make several recommendations for others considering different platforms for the distribution of educational materials. First, different channels suit different audiences. Developers of educational materials should have clear goals and align their choice of platforms accordingly. Second, using one channel does not necessarily exclude using others (licensing permitting) to reach different audiences. Third, online technology should be considered transitory. Developers of materials should be prepared to move their collections as publishing options change. Finally, analytics tools provide valuable information on patterns of user access and behaviors. Analytics can provide a clearer picture of users' actions than users' own opinions and comments can.

Conclusions

Our experience offers an innovative approach to disseminating open-access clinical skills training videos. YouTube solved a number of our technical challenges in video publication. It secured a very large audience for the project. By providing rich data on how the material was used, it also allowed the project team members to continue to refine the materials to meet the needs of users. Although the density of social interaction was low on YouTube, the absolute quantity was much greater than on our initial project Web site. The levels of engagement we observed on YouTube, in terms of the numbers of views and the discussions, indicate that at least some viewers were using the materials in the ways we had anticipated. The modest increase in traffic on the PocketSnips Web site also suggests that some users were willing to take the extra step of exploring the additional materials attached to the videos. However, viewers could not be identified as representing a particular professional or educational group. We have not assessed the broader influence of our videos being seen by members of the public, but the effect of short videos on learning is generating much interest. The Khan Academy,32 for example, has had success providing open-access instructional videos at different educational levels on a very wide range of topics.

Our selection of YouTube over more respected publishing channels raised many interesting issues. It moved the project significantly beyond the read-only culture of higher education33 by engaging social networking features, such as user comments and ratings of videos. But, by selecting a platform that did not offer peer review, CME credits, or other medical education infrastructure, we were unable to account for the project's specific medical educational outcomes. Our use of YouTube evolved over time, and the general acceptance of cloud-based services has increased both within our team and in information services generally. In addition, the way the resources are framed has also changed. For instance, reusability of educational materials used to be the focus in the debate over reusable learning objects.12,34 Now, the focus has shifted to access, for instance, in the development of open educational resources33,35 and in exploring intellectual property rights.

Initiatives such as OpenCourseWare36 and the development of open educational resources, as well as wider awareness of the social responsibility of medical schools, suggest that the experiences reported here apply beyond the specific technologies we used. Finally, crowdsourcing (i.e., using large numbers of relatively anonymous reviewers rather than a few experts) to review educational materials is a technique that needs further exploration.37,38 In summary, platforms such as YouTube can expose vast audiences to educational resources and then engage viewers in enhancing them through social media and learning analytics tools, and this power brings both advantages and disadvantages. We must continue to study the effectiveness and outcomes of using different publishing platforms for medical educational purposes, both to support the needs of the many stakeholders involved and to support ongoing innovation in using the Internet to advance medical education as a whole.

Acknowledgments: The authors would like to acknowledge the PocketSnips Project team: Andrea Smith, Brian Hart, Sherry Drysdale, Dr. Christine Kupsh, Dr. Laura Piccinin, Dr. Lorraine Carter, Richard Witham, Christina McMillan-Boyles, and Joel Seguin.

Funding/Support: The development of the PocketSnips videos was funded by the Northern Ontario Heritage Fund (www.mndmf.gov.on.ca/nohfc/), the Inukshuk Fund (www.inukshuk.ca), and an unrestricted educational grant from Pfizer Canada (www.pfizer.ca). None of the funders had any influence on content or process.

Other disclosures: The PocketSnips videos are provided free of charge, and the authors receive no income or other material benefits from their development or dissemination. The authors do not endorse the use of YouTube or any other media platform.

Ethical approval: IRB approval was received from Laurentian University for PocketSnips content creation but was not required for the analysis of publicly available data.

References

1 Topps D, Helmer J, Carter L, Ellaway R. PocketSnips: Health education, technology, and teamwork. J Distance Educ. 2009;23:147-156.
2 Lee JC, Boyd R, Stuart P. Randomized controlled trial of an instructional DVD for clinical skills teaching. Emerg Med Australas. 2007;19:241-245.
3 Gertler S, Ahrens K, Klausner JD. Increased knowledge of safe and appropriate penicillin injection after viewing brief instructional video titled how to inject Bicillin LA. Sex Transm Dis. 2009;36:147-148.
4 Dinscore A, Andres A. Surgical videos online: A survey of prominent sources and future trends. Med Ref Serv Q. 2010;29:10-27.
5 McMahon GT, Ingelfinger JR, Campion EW. Videos in clinical medicine: A new journal feature. N Engl J Med. 2006;354:1635.
6 Ruiz JG, Mintzer MJ, Leipzig RM. The impact of e-learning in medical education. Acad Med. 2006;81:207-212.


7 Association of American Medical Colleges. MedEdPORTAL. https://www.mededportal.org/. Accessed October 29, 2012.
8 California State University. MERLOT: Multimedia Educational Resource for Learning and Online Teaching. http://www.merlot.org/merlot/index.htm. Accessed October 29, 2012.
9 Farnan JM, Paro JA, Higa JT, Reddy ST, Humphrey HJ, Arora VM. Commentary: The relationship status of digital media and professionalism: It's complicated. Acad Med. 2009;84:1479-1481.
10 McGee JB, Begg M. What medical educators need to know about Web 2.0. Med Teach. 2008;30:164-169.
11 Wood A, Struthers K, Herrington S. Modern pathology teaching and the Internet. Med Teach. 2009;31:187.
12 Wiley DA. The Instructional Use of Learning Objects. Bloomington, Ind: Agency for Instructional Technology, Association for Educational Communications and Technology; 2002.
13 Freedom of Information and Protection of Privacy Act. RSO, Chapter F.31. (1990). http://www.e-laws.gov.on.ca/html/statutes/english/elaws_statutes_90f31_e.htm. Accessed November 2, 2012.
14 PocketSnips. Project overview. http://pocketsnips.org. Accessed October 30, 2012.
15 Creative Commons. About the licenses: Attribution-noncommercial-sharealike [in Portuguese]. http://creativecommons.org/licenses/. Accessed October 30, 2012.
16 Health Education Assets Library. http://www.healcentral.org/. Accessed October 30, 2012.
17 Uijtdehaage SH, Contini J, Candler CS, Dennis SE. Sharing digital teaching resources: Breaking down barriers by addressing the concerns of faculty members. Acad Med. 2003;78:286-294.
18 Google Analytics. http://www.google.com/analytics/. Accessed October 30, 2012.
19 CliniSnips [YouTube channel]. http://www.youtube.com/user/clinisnips. Accessed October 30, 2012.
20 Google Developers. YouTube Analytics API. https://developers.google.com/youtube/analytics/. Accessed October 30, 2012.
21 Gill P, Arlitt M, Li Z, Mahanti A. YouTube traffic characterization: A view from the edge. In: Proceedings of the 7th ACM SIGCOMM Conference on Internet Measurement. New York, NY: Association for Computing Machinery; 2007:15-28.
22 YouTube Help. YouTube insight: 7. Hot spots. http://support.google.com/youtube/bin/static.py?hl=en&page=guide.cs&guide=1254429&topic=1266628. Accessed October 30, 2012.
23 Medifix. www.medifix.org. Accessed April 23, 2010 [no longer available].
24 Strauss A, Corbin J. Grounded theory methodology. In: Denzin NK, Lincoln YS, eds. Strategies of Qualitative Inquiry. London, UK: Sage Publications; 1998:273-285.
25 Ali L, Hatala M, Gasevic D, Jovanovic J. A qualitative evaluation of evolution of a learning analytics tool. Comput Educ. 2012;58:470-489.
26 van Barneveld A, Arnold KE, Campbell JP. Analytics in Higher Education: Establishing a Common Language. http://www.educause.edu/ir/library/pdf/ELI3026.pdf. Accessed October 30, 2012.
27 Cheng X, Dale C, Liu J. Statistics and social network of YouTube videos. In: Proceedings of the 16th International Workshop on Quality of Service. Twente, The Netherlands: 2008:229-238.
28 Järvelin K. Explaining user performance in information retrieval: Challenges to IR evaluation. Adv Inf Retr Theory. 2009;5766:289-296.
29 Dwairy M, Dowell AC, Stahl JC. The application of foraging theory to the information searching behaviour of general practitioners. BMC Fam Pract. 2011;12:90.
30 DISCERN: Quality Criteria for Consumer Health Information. http://www.discern.org.uk/. Accessed October 30, 2012.
31 Health on the Net Foundation. HONcode. http://www.hon.ch/. Accessed October 30, 2012.
32 Khan Academy. http://www.khanacademy.org/. Accessed October 30, 2012.
33 Wiley D. 2005-2012: The OpenCourseWars. In: Iiyoshi T, Kumar MSV, eds. Opening Up Education: The Collective Advancement of Education Through Open Technology, Open Content, and Open Knowledge. Cambridge, Mass: Massachusetts Institute of Technology; 2008:245-259.
34 Littlejohn A. Reusing Online Resources: A Sustainable Approach to E-learning. London, UK: Routledge; 2003.
35 Atkins DE, Brown JS, Hammond AL. A Review of the Open Educational Resources (OER) Movement: Achievements, Challenges, and New Opportunities. http://www.hewlett.org/uploads/files/ReviewoftheOERMovement.pdf. Accessed October 30, 2012.
36 OpenCourseWare Consortium Web site. http://ocwconsortium.org/. Accessed October 30, 2012.
37 Surowiecki J. The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies, and Nations. New York, NY: Anchor Books; 2005.
38 Keen A. The Cult of the Amateur. Boston, Mass: Nicholas Brealey Publishing; 2007.

