Академический Документы

Профессиональный Документы

Культура Документы

Generalized Linear Models: Simon Jackman Stanford University

Загружено:

ababacarbaИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Generalized Linear Models: Simon Jackman Stanford University

Загружено:

ababacarbaАвторское право:

Доступные форматы

Generalized Linear Models

Simon Jackman

Stanford University

Generalized linear models (GLMs) are a large class of statistical models for relating re-

sponses tolinear combinations of predictor variables, includingmany commonly encountered

types of dependent variables and error structures as special cases. In addition to regression

models for continuous dependent variables, models for rates and proportions, binary, ordinal

and multinomial variables and counts can be handled as GLMs. The GLM approach is attrac-

tive because it (1) provides a general theoretical framework for many commonly encountered

statistical models; (2) simplifies the implementation of these different models in statistical

software, since essentially the same algorithm can be used for estimation, inference and

assessing model adequacy for all GLMs.

The canonical treatment of GLMs is McCullagh and Nelder (1989), and this review closely

follows their notation and approach. Begin by considering the familiar linear regression

model, y

i

= x

i

b + e

i

, where i = 1, . . . , n, y

i

is a dependent variable, x

i

is a vector of k

independent variables or predictors, b is a k-by-1 vector of unknown parameters and the

e

i

are zero-mean stochastic disturbances. Typically, the e

i

are assumed to be independent

across observations with constant variance r

2

, and distributed normal. That is, the normal

linear regression model is characterized by the following features:

1. stochastic component: the y

i

are usually assumed to have independent normal

distributions with E(y

i

) = l

i

, with constant variance r

2

, or y

i

iid

N(l

i

, r

2

)

2. systematic component: the covariates x

i

combine linearly with the coefficients to form

the linear predictor g

i

= x

i

b.

3. link between the random and systematic components: the linear predictor x

i

b = g

i

is

a function of the mean parameter l

i

via a link function, g(l

i

). Note that for the normal

linear model, g is an identity.

GLMs follow fromtwo extensions of this setup: (1) stochastic components following distribu-

tions other than the normal; (2) link functions other than the identity.

Stochastic Assumptions. GLMs may be used to model variables following distributions in

the exponential family: i.e.,

f (y; h, w) = exp

_

yh - b(h)

a(w)

+ c(y; w)

_

, or

log f (y; h, w) =

y h - b(h)

a(w)

+ c(y; w) (1)

where w is a dispersion parameter. For instance, the normal distribution

f (y; h, w) =

1

2pr

2

exp

_

-(y - l)

2

2r

2

_

, with log density

log f (y; h, w) =

y l -

l

2

2

r

2

-

1

2

_

y

2

r

2

+ log(2pr

2

)

_

results with h = l, w = r

2

, a(w) = w, b(h) = h

2

/2 and c(y; w) = -

1

2

(y

2

/r

2

+ log(2pr

2

)). Many

other commonly used distributions are in the exponential family. In addition to the examples

listed in Table 1, several other distributions are in the exponential family, including the beta,

multinomial, Dirichlet, and Pareto. Distributions that are not in the exponential family but are

used for statistical modeling include the students t and uniform distributions.

Link Functions. In theory, link functions g

i

= g(l

i

) can be any monotonic, differentiable

function. In practice, only a small set of link functions are actually utilized. In particular, links

are chosen such that the inverse link, l

i

= g

-1

(g

i

) is easily computed, and so that g

-1

maps

from x

i

b = g

i

R into the set of admissible values for l

i

. Table 1 lists the canonical links: for

instance, a log link is usually used for the Poisson model, since while g

i

= x

i

b R, because

y

i

is a count, we have l

i

0, 1, . . .. For binomial data, the link function maps from 0 < p < 1

to g

i

R, and three links are commonly used: (1) logit: g

i

= log (p

i

/(1 - p

i

)); (2) probit:

g

i

= w

-1

(l

i

), where w() is the normal cumulative distribution function; (3) complementary

log-log: g

i

= log (-log(1 - l

i

)). Note that binary data are handled in the GLM framework as

special cases of binomial data.

Estimation and Inference for b. Equation 1 provides an expression for the log density of

the dependent variable for a GLM; summing over these log-densities provides an expression

for the log-likelihood,

l(b, w; y, x) =

n

i=1

_

y

i

h

i

- b(h

i

)

a(w)

+ c(y

i

; w)

_

. (2)

Maximum likelihood estimates of b are generally not available in closed form, but can be

obtained via an algorithm known as iteratively weighted least squares (IWLS). IWLS is one

of the key practical ways in which GLMs are in fact general, providing MLEs for a wide

class of commonly used models. IWLS underlies the implementation of GLMs in software

2

N

o

r

m

a

l

P

o

i

s

s

o

n

B

i

n

o

m

i

a

l

G

a

m

m

a

N

o

t

a

t

i

o

n

N

(

l

,

r

2

)

P

(

l

)

B

(

n

,

p

)

/

n

G

(

l

,

m

)

l

o

g

-

d

e

n

s

i

t

y

1

r

2

_

y

l

-

12

l

2

-

12

y

2

_

-

12

l

o

g

(

2

p

r

2

)

y

l

o

g

l

-

l

-

l

o

g

(

y

!

)

n

_

y

l

o

g

(

p

1

-

p

)

+

l

o

g

(

1

-

p

)

+

l

o

g

_

n

n

y

_

m

_

-

yl

-

l

o

g

l

_

+

m

l

o

g

y

+

m

l

o

g

m

-

l

o

g

C

(

m

)

R

a

n

g

e

o

f

y

(

-

)

0

,

1

,

.

.

.

,

z

/

n

,

z

{

0

,

1

,

.

.

.

,

n

}

(

0

,

)

D

i

s

p

e

r

s

i

o

n

p

a

r

a

m

e

t

e

r

,

w

r

2

1

n

-

1

m

-

1

b

(

h

)

h

2

/

2

e

x

p

(

h

)

l

o

g

(

1

+

e

h

)

-

l

o

g

(

-

h

)

c

(

y

;

w

)

-

12

_

y

2

w

+

l

o

g

(

2

p

w

)

_

-

l

o

g

y

!

l

o

g

_

n

n

y

_

m

l

o

g

(

m

y

)

-

l

o

g

y

-

l

o

g

C

(

m

)

l

(

h

)

=

E

(

y

;

h

)

h

e

x

p

(

h

)

e

h

/

(

1

+

e

h

)

-

1

/

h

C

a

n

o

n

i

c

a

l

l

i

n

k

,

h

(

l

)

i

d

e

n

t

i

t

y

(

h

=

l

)

l

o

g

(

l

)

l

o

g

_

p

1

-

p

_

l

-

1

V

a

r

i

a

n

c

e

f

u

n

c

t

i

o

n

,

V

(

l

)

1

l

l

(

1

-

l

)

l

2

D

e

v

i

a

n

c

e

f

u

n

c

t

i

o

n

,

D

M

(

y

i

-

l

i

)

2

2

_

y

i

l

o

g

_

y

i

l

i

_

-

y

i

+

l

i

_

2

_

y

i

l

o

g

_

y

i

l

i

_

+

(

n

i

-

y

i

)

l

o

g

_

n

i

-

y

i

n

i

-

l

i

_

_

2

_

-

l

o

g

_

y

i

l

i

_

+

y

i

-

l

i

l

i

_

T

a

b

l

e

1

:

C

o

m

m

o

n

D

i

s

t

r

i

b

u

t

i

o

n

s

i

n

t

h

e

E

x

p

o

n

e

n

t

i

a

l

F

a

m

i

l

y

u

s

e

d

w

i

t

h

G

L

M

s

.

3

packages such as S-Plus and R; that is, rather than coding up problem-specific routines for

optimizing likelihood functions with respect to unknown parameters, the GLMframework and

IWLS provides a common framework for implementing models in statistical software. Briefly,

iteration t of IWLS consists of:

1. form the working responses z

(t)

i

= g

(t)

i

+ (y

i

- l

(t)

i

)

_

dg

dl

_

(t)

i

, where g

(t)

i

= x

i

b

(t-1)

and

l

(t)

i

= g

-1

(g

(t)

i

). For the standard models presented in Table 1, the derivative of the link

dg/dl is easy to compute.

2. form working weights W

(t)

i

=

_

_

dg

dl

_

2

i

V

(t)

i

_

-1

where V

(t)

i

= V(l

(t)

i

) is referred to as the

variance function; McCullagh and Nelder (1989, 29) show that var(y

i

) = b

(h)a(w), with

the first term the variance function, and the second term the dispersion parameter.

Table 1 lists the variance functions for commonly used GLMs.

3. run the weighted regression of the z

(t)

i

on the covariates x

i

with weights W

(t)

i

; designate

the coefficients from this weighted regression as

b

(t)

and proceed to the next iteration.

This algorithmcanberepeateduntil convergencein

bor thelog-likelihood, andtheasymptotic

variance of

b is given by V(

b) =

w(X

WX)

-1

where W = diag(W

1

, . . . , W

n

) computed at the final

iteration. Asymptotically-valid standard errors for the coefficients are obtained by taking the

square root of the leading diagonal of V(

b). Note also that the IWLS algorithm does not

involve the dispersion parameter

w, which is usually either fixed a priori by the model (e.g., for

binomial or Poisson models) or (as in the Gaussian case) estimated after computing residuals

with

b. Further details on the algorithmappear in McCullagh and Nelder (1989, 41-43); Nelder

and Wedderburn (1972) introduced the term generalized linear model when discussing

how the IWLS method could be used to obtains MLEs for exponential-family models.

Analysis of Deviance. In the GLM framework, it is customary to use a quantity known as

deviance to formally assess model adequacy and to compare models. Deviance statistics

are identical to those obtained using likelihood ratio test statistics. For a data set with n

observations, assume the dispersion parameter is known or fixed at w = 1, and consider two

extreme models: (1) a one parameter null model, setting E(y

i

) = l, i; (2) a n parameter

saturated model, denoted S, fitting a parameter for each data point, setting E(y

i

) = l

i

= y

i

.

For a normal regression, model (1) is an intercept-only, and model (2) results by fitting a

dummy variable for every observation. Any interesting statistical model M lies somewhere

in between, with p < n parameters, but the two extreme cases provide benchmarks for

assessing the performance of model M. In particular, if

h

M

i

= h

M

( l

i

) are the predictions of

4

model M, and

h

S

i

= h

S

(y

i

) = y

i

are the predictions of model S, then the deviance of model M is

D

M

= 2

n

i=1

__

y

i

h

S

(y

i

) - b(

h

S

i

)

_

-

_

y

i

h

M

i

- b(

h

M

i

)

_

. (3)

and when the dispersion w is estimated or known not to be 1, the scaled deviance is

D

*

M

= D

M

/w. Several features of deviance should be noted:

1. Deviance is minimized when M = S; alternatively, the log-likelihood of S, l

S

= l(y; y) is

(by definition) the highest log-likelihood attainable with data y.

2. The scaled deviance of model M is the likelihood ratio test statistic for the test of null

hypothesis that the n - p parameter restrictions in M relative to S are true, i.e.,

D

*

M

= 2[l(y; y) - l(

h

S

; y)].

3. Asymptotically, D

*

M

v

2

n-p

, facilitating hypothesis tests.

4. If model M

0

M (M

0

nests in M) with q < p parameters, then if w is known, the

difference in scaled deviances also provides a likelihood ratio statistic, for the null

hypothesis that the p - q restrictions in M

0

relative to M are true, i.e.,

D

M

0

- D

M

w

v

2

p-q

.

Again, for models other than the normal (with known dispersion), the v

2

distribution is

an asymptotically-valid approximation to the exact distribution of the test statistic.

Expressions for deviance for a number of commonly-used models appear in Table 1; note that

for the normal model, the deviance is a familiar quantity, the sum of the squared residuals.

Predicted Values, Residuals and Diagnostics. Predicted values and residuals are critical

to diagnosing lack of model fit in ordinary regression models, and this is also the case for

the broader class of GLMs. Residuals for the normal regression model are simply y

i

- l

i

, but

for the broader set of models with link functions other than the identity, predicted values

and residuals are more ambiguous. It is helpful to make a distinction between prediction

on the scale of the linear predictors g

i

= x

i

b and prediction on the scale of the observed

response y

i

, for which E(y

i

) = l

i

= g

-1

(g

i

). In turn, it is possible to define response residuals

r

i

= y

i

- g

-1

(x

i

b). However, unlike the residuals from linear regression models, the response

residuals for GLMs are not guaranteed to have the useful properties of ordinary regression

5

residuals (e.g., summing to zero within a given data set, and having mean zero and normal

distributions across repeated sampling).

A number of other types of residuals for GLMs have been proposed in the literature

McCullagh and Nelder (1989, 2.4), including deviance residuals, defined as r

D

i

= sign(y

i

-

l

i

)

d

i

, where d

i

is the observation-specific contributions to the deviance statistic D

M

defined

above. Deviance residuals have a number of useful properties: (a) they increase in magnitude

with y

i

- l

i

; (b)

(r

D

i

)

2

= D

M

; (c) have close to a normal distribution with mean zero and

standard deviation one, irrespective of the type of GLM employed.

Outlier detection and other measures of influence are important data analytic tools,

pioneered in the context of linear regression, but have been extended to GLMs; see Lindsey

(1997, 227) and Hastie and Pregibon (1992, 6.3.4) for examples and references.

Extensions: GLMs have been extended and elaborated in numerous directions:

Non-constant variance. The discussion so far has focused on GLMs where var(y

i

)

is constant (e.g., normal and binomial). But GLMs can be used for models such as

the Poisson, where the E(y

i

) = var(y

i

), extensions to such as the negative binomial

McCullagh and Nelder (1989, 6.2.3), and variants such as the gamma GLM for non-

negative continuous variables where var(y

i

) increases with the square of the mean of y

i

McCullagh and Nelder (1989, ch8).

Modeling dispersion. In the usual setup, covariates x

i

are used to model the conditional

mean of y

i

, l

i

= g

-1

(x

i

b), with var(y

i

) = wV(l

i

). When substantive interest focuses on

also modeling the variance of y

i

, the GLM approach can be extended by letting the

dispersion parameter w vary across observations conditional on covariates, typically

via a gamma GLM. Any joint modeling of mean effects and dispersion effects is fraught

with difficulty McCullagh and Nelder (1989, 10.3): the mean and dispersions models

necessarily interact with one another, such that misspecification of one can be captured

by the other, with the risk of misleading conclusions about where particular covariates

have their impact on y

i

(in the mean or variance).

Generalizing the link function. Theory is usually silent as to the mapping fromthe linear

predictors g

i

= x

i

b to E(y

i

) = l

i

. For many GLMs it is straightforward to specify a family

of links indexed by one or more parameters to be estimated (McCullagh and Nelder,

1989, 11.3). For instance, for a binomial GLM, rather than assume either a logit or

probit inverse-link, one could use the CDF of t-distribution with unknown degrees of

freedom m, with logit corresponding to m 8 and probit when m > 30 (e.g., Albert and

Chib, 1993).

6

Other references. While McCullagh and Nelder (1989) is the standard reference for

GLMs, other treatments include Fahrmeir and Tutz (1994) and Lindsey (1997). Many specific

treatments of the analysis of categorical variables make extensive use of the GLM approach,

such as Collet (1991). Hastie and Pregibon (1992) and Venables and Ripley (2002, Ch7)

discuss the implementation of GLMs in S-Plus and R.

References

Albert, James H. andSiddhartha Chib. 1993. BayesianAnalysis of Binary andPolychotomous

Response Data. Journal of the American Statistical Association 88:669--79.

Collet, D. 1991. Modelling Binary Data. London: Chapman and Hall.

Fahrmeir, F. and G. Tutz. 1994. Multivariate Statistical Modelling Based on Generalized Linear

Models. New York: Springer-Verlag.

Hastie, Trevor J. and Daryl Pregibon. 1992. Generalized Linear Models. In Statistical Models

in S, ed. John M. Chambers and Trevor J. Hastie. Pacific Grove, California: Wadsworth and

Brooks/Cole Chapter 6.

Lindsey, James K. 1997. Applying Generalized Linear Models. New York: Springer.

McCullagh, P. and J. A. Nelder. 1989. Generalized Linear Models. Second ed. London:

Chapman and Hall.

Nelder, J.A. and R.W.M. Wedderburn. 1972. Generalized linear models. Journal of the Royal

Statistical Society, Series A 135:370--84.

Venables, William N. and Brian D. Ripley. 2002. Modern Applied Statistics with S-PLUS.

Fourth ed. New York: Springer-Verlag.

7

Вам также может понравиться

- Cramer Raoh and Out 08Документ13 страницCramer Raoh and Out 08Waranda AnutaraampaiОценок пока нет

- Van Der Weele 2012Документ60 страницVan Der Weele 2012simplygadesОценок пока нет

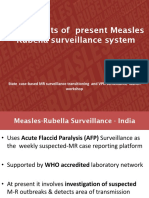

- 07a Ongoing MR Outbreak Surveillance SystemДокумент23 страницы07a Ongoing MR Outbreak Surveillance SystemDevendra Singh TomarОценок пока нет

- Abarbanel .-. The Analysis of Observed Chaotic Data in Physical SystemsДокумент62 страницыAbarbanel .-. The Analysis of Observed Chaotic Data in Physical SystemsluizomarОценок пока нет

- Binomial Logistic Regression Using SPSSДокумент11 страницBinomial Logistic Regression Using SPSSKhurram ZahidОценок пока нет

- SPSS ANNOTATED OUTPUT Discriminant Analysis 1Документ14 страницSPSS ANNOTATED OUTPUT Discriminant Analysis 1Aditya MehraОценок пока нет

- What Statistical Analysis Should I UseДокумент3 страницыWhat Statistical Analysis Should I UseeswarlalbkОценок пока нет

- Handleiding Spss Multinomial Logit RegressionДокумент35 страницHandleiding Spss Multinomial Logit RegressionbartvansantenОценок пока нет

- 08 Split PlotsДокумент25 страниц08 Split PlotsfrawatОценок пока нет

- Research Methods & Reporting: STARD 2015: An Updated List of Essential Items For Reporting Diagnostic Accuracy StudiesДокумент9 страницResearch Methods & Reporting: STARD 2015: An Updated List of Essential Items For Reporting Diagnostic Accuracy StudiesJorge Villoslada Terrones100% (1)

- The Logistic Regression Analysis in Spss - Statistics Solutions PDFДокумент2 страницыThe Logistic Regression Analysis in Spss - Statistics Solutions PDFSajjad HossainОценок пока нет

- Time Series Analysis and Its Applications: With R Examples: Second EditionДокумент18 страницTime Series Analysis and Its Applications: With R Examples: Second EditionPaula VilaОценок пока нет

- Quantile Regression (Final) PDFДокумент22 страницыQuantile Regression (Final) PDFbooianca100% (1)

- SPSS2 Workshop Handout 20200917Документ17 страницSPSS2 Workshop Handout 20200917kannan_r02Оценок пока нет

- Non Linear RegressionДокумент20 страницNon Linear RegressionPrasad TpОценок пока нет

- Quantile RegressionДокумент11 страницQuantile RegressionOvidiu RotariuОценок пока нет

- GSEMModellingusingStata PDFДокумент97 страницGSEMModellingusingStata PDFmarikum74Оценок пока нет

- Runs TestДокумент5 страницRuns TestdilpalsОценок пока нет

- Ordinal and Multinomial ModelsДокумент58 страницOrdinal and Multinomial ModelsSuhel Ahmad100% (1)

- 9.data AnalysisДокумент25 страниц9.data AnalysisEncik SyafiqОценок пока нет

- Logistic Regression TutorialДокумент25 страницLogistic Regression TutorialDaphneОценок пока нет

- Stata Graph Library For Network AnalysisДокумент41 страницаStata Graph Library For Network AnalysisAlexander SainesОценок пока нет

- How To Interpret and Report The Results From Multi Variable AnalysisДокумент6 страницHow To Interpret and Report The Results From Multi Variable AnalysisSofia Batalha0% (1)

- Logit Model For Binary DataДокумент50 страницLogit Model For Binary Datasoumitra2377Оценок пока нет

- Sampling Distribution and Simulation in RДокумент10 страницSampling Distribution and Simulation in RPremier PublishersОценок пока нет

- Microsoft Excel Essential Training: Raju Miyan Lecturer Khwopa College of EngineeringДокумент25 страницMicrosoft Excel Essential Training: Raju Miyan Lecturer Khwopa College of EngineeringDipjan ThapaОценок пока нет

- Introduction To RДокумент20 страницIntroduction To Rseptian_bbyОценок пока нет

- CatpcaДокумент19 страницCatpcaRodito AcolОценок пока нет

- Beta DistributionДокумент8 страницBeta DistributionSYED BAQAR HUSSAIN SHAHОценок пока нет

- Fernando, Logit Tobit Probit March 2011Документ19 страницFernando, Logit Tobit Probit March 2011Trieu Giang BuiОценок пока нет

- Arellano - Quantile Methods PDFДокумент10 страницArellano - Quantile Methods PDFInvestОценок пока нет

- 03 A Logistic Regression 2018Документ13 страниц03 A Logistic Regression 2018Reham TarekОценок пока нет

- Useful Stata CommandsДокумент48 страницUseful Stata CommandsumerfaridОценок пока нет

- I10064664-E1 - Statistics Study Guide PDFДокумент81 страницаI10064664-E1 - Statistics Study Guide PDFGift SimauОценок пока нет

- (Bruderl) Applied Regression Analysis Using StataДокумент73 страницы(Bruderl) Applied Regression Analysis Using StataSanjiv DesaiОценок пока нет

- Exploratory Data AnalysisДокумент38 страницExploratory Data Analysishss601Оценок пока нет

- Categorical Data AnalysisДокумент44 страницыCategorical Data Analysisamonra10Оценок пока нет

- Multivariate Analysis IBSДокумент20 страницMultivariate Analysis IBSrahul_arora_20Оценок пока нет

- T-Tests For DummiesДокумент10 страницT-Tests For DummiesMeganAislingОценок пока нет

- Session2-Simple Linear Model PDFДокумент56 страницSession2-Simple Linear Model PDFindrakuОценок пока нет

- Exploratory Data AnalysisДокумент106 страницExploratory Data AnalysisAbhi Giri100% (1)

- Data Science Course ContentДокумент8 страницData Science Course ContentQshore online trainingОценок пока нет

- 5 Regression AnalysisДокумент43 страницы5 Regression AnalysisAC BalioОценок пока нет

- Beta Distribution: First Kind, Whereasbeta Distribution of The Second Kind Is An Alternative Name For TheДокумент4 страницыBeta Distribution: First Kind, Whereasbeta Distribution of The Second Kind Is An Alternative Name For TheMichael BalzaryОценок пока нет

- Random ForestДокумент83 страницыRandom ForestBharath Reddy MannemОценок пока нет

- Survival Analysis in RДокумент16 страницSurvival Analysis in Rmipimipi03Оценок пока нет

- Prediction of Diabetes Using Logistics Regression Algorithms With FlaskДокумент10 страницPrediction of Diabetes Using Logistics Regression Algorithms With FlaskIJRASETPublicationsОценок пока нет

- Fundamental of Stat by S C GUPTAДокумент13 страницFundamental of Stat by S C GUPTAPushpa Banerjee14% (7)

- Chapter - 14 Advanced Regression ModelsДокумент49 страницChapter - 14 Advanced Regression ModelsmgahabibОценок пока нет

- Regression AnalysisДокумент58 страницRegression AnalysisHarshika DhimanОценок пока нет

- History of ProbabilityДокумент17 страницHistory of ProbabilityonlypingОценок пока нет

- 5682 - 4433 - Factor & Cluster AnalysisДокумент22 страницы5682 - 4433 - Factor & Cluster AnalysisSubrat NandaОценок пока нет

- Factor Analysis Xid-2898537 1 BSCdOjdTGSДокумент64 страницыFactor Analysis Xid-2898537 1 BSCdOjdTGSsandeep pradhanОценок пока нет

- Introduction To Neutrosophic StatisticsДокумент125 страницIntroduction To Neutrosophic StatisticsFlorentin SmarandacheОценок пока нет

- Analysis Analysis: Multivariat E Multivariat EДокумент12 страницAnalysis Analysis: Multivariat E Multivariat Epopat vishalОценок пока нет

- Lec Set 1 Data AnalysisДокумент55 страницLec Set 1 Data AnalysisSmarika KulshresthaОценок пока нет

- Graphical Representation of Multivariate DataОт EverandGraphical Representation of Multivariate DataPeter C. C. WangОценок пока нет

- Multivariate Analysis—III: Proceedings of the Third International Symposium on Multivariate Analysis Held at Wright State University, Dayton, Ohio, June 19-24, 1972От EverandMultivariate Analysis—III: Proceedings of the Third International Symposium on Multivariate Analysis Held at Wright State University, Dayton, Ohio, June 19-24, 1972Оценок пока нет

- Feasibility Study On The Seaweed Kappaphycus Alvarezii Cultivation Site in Indari Waters ofДокумент9 страницFeasibility Study On The Seaweed Kappaphycus Alvarezii Cultivation Site in Indari Waters ofUsman MadubunОценок пока нет

- Developing Agility and Quickness (Etc.) (Z-Library) - 66Документ2 страницыDeveloping Agility and Quickness (Etc.) (Z-Library) - 66guypetro6Оценок пока нет

- Chapter 5 TEstДокумент18 страницChapter 5 TEstJeanneau StadegaardОценок пока нет

- BCSL 058 Previous Year Question Papers by IgnouassignmentguruДокумент45 страницBCSL 058 Previous Year Question Papers by IgnouassignmentguruSHIKHA JAINОценок пока нет

- Sample Dewa Inspection CommentsДокумент2 страницыSample Dewa Inspection Commentsrmtaqui100% (1)

- Legend Of Symbols: Chú Thích Các Ký HiệuДокумент9 страницLegend Of Symbols: Chú Thích Các Ký HiệuKiet TruongОценок пока нет

- Moisture and Total Solids AnalysisДокумент44 страницыMoisture and Total Solids AnalysisNicholas BoampongОценок пока нет

- Mid Lesson 1 Ethics & Moral PhiloДокумент13 страницMid Lesson 1 Ethics & Moral PhiloKate EvangelistaОценок пока нет

- Classical Feedback Control With MATLAB - Boris J. Lurie and Paul J. EnrightДокумент477 страницClassical Feedback Control With MATLAB - Boris J. Lurie and Paul J. Enrightffranquiz100% (2)

- Unemployment in IndiaДокумент9 страницUnemployment in IndiaKhushiОценок пока нет

- Rumah Cerdas Bahasa Inggris Belajar Bahasa Inggris Dari Nol 4 Minggu Langsung BisaДокумент3 страницыRumah Cerdas Bahasa Inggris Belajar Bahasa Inggris Dari Nol 4 Minggu Langsung BisaArditya CitraОценок пока нет

- Effect of Vino Gano Ginger and Herbal Liquor On The Heamatological Parameters of The Wistar RatsДокумент5 страницEffect of Vino Gano Ginger and Herbal Liquor On The Heamatological Parameters of The Wistar RatsInternational Journal of Innovative Science and Research TechnologyОценок пока нет

- PositioningДокумент2 страницыPositioningKishan AndureОценок пока нет

- Famous Bombers of The Second World War - 1st SeriesДокумент142 страницыFamous Bombers of The Second World War - 1st Seriesgunfighter29100% (1)

- An Evaluation of The Strength of Slender Pillars G. S. Esterhuizen, NIOSH, Pittsburgh, PAДокумент7 страницAn Evaluation of The Strength of Slender Pillars G. S. Esterhuizen, NIOSH, Pittsburgh, PAvttrlcОценок пока нет

- Radiation Formula SheetДокумент5 страницRadiation Formula SheetJakeJosephОценок пока нет

- Barium SulphateДокумент11 страницBarium SulphateGovindanayagi PattabiramanОценок пока нет

- Outerstellar Self-Impose RulesДокумент1 страницаOuterstellar Self-Impose RulesIffu The war GodОценок пока нет

- 1943 Dentures Consent FormДокумент2 страницы1943 Dentures Consent FormJitender ReddyОценок пока нет

- Campa Cola - WikipediaДокумент10 страницCampa Cola - WikipediaPradeep KumarОценок пока нет

- 1704 Broschuere Metal-Coating en EinzelseitenДокумент8 страниц1704 Broschuere Metal-Coating en EinzelseiteninterponОценок пока нет

- Water Sensitive Urban Design GuidelineДокумент42 страницыWater Sensitive Urban Design GuidelineTri Wahyuningsih100% (1)

- DLP Physical Science Week1Документ2 страницыDLP Physical Science Week1gizellen galvezОценок пока нет

- Notice - Appeal Process List of Appeal Panel (Final 12.1.24)Документ13 страницNotice - Appeal Process List of Appeal Panel (Final 12.1.24)FyBerri InkОценок пока нет

- Worksheet - Labeling Waves: NameДокумент2 страницыWorksheet - Labeling Waves: NameNubar MammadovaОценок пока нет

- Hyundai Forklift Catalog PTASДокумент15 страницHyundai Forklift Catalog PTASjack comboОценок пока нет

- Brainedema 160314142234Документ39 страницBrainedema 160314142234Lulu LuwiiОценок пока нет

- DLL - Mapeh 6 - Q2 - W8Документ6 страницDLL - Mapeh 6 - Q2 - W8Joe Marie FloresОценок пока нет

- Charles Haanel - The Master Key System Cd2 Id1919810777 Size878Документ214 страницCharles Haanel - The Master Key System Cd2 Id1919810777 Size878Hmt Nmsl100% (2)

- Holowicki Ind5Документ8 страницHolowicki Ind5api-558593025Оценок пока нет