Coding Theory Tutorial 1 Homework

Coding Theory (EEL710): Tutorial 1

Note: The homework must be submitted on 12.08.13 at 11 AM in the classroom. Late submissions will not be accepted.

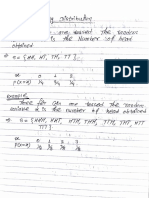

1) Consider tossing a coin. Let X be the random variable denoting the number of tosses required until the first head appears. Find

i) the entropy H(X) for a fair coin. (3)

ii) the entropy H(X) for a biased coin whose probability of a head is p. (4)
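As a numerical cross-check for Problem 1, the sketch below sums the entropy series directly, assuming independent tosses so that P(X = k) = (1 - p)^(k-1) p. The function names `first_head_entropy` and `binary_entropy` are illustrative and not part of the tutorial. It compares the truncated sum with the closed form H_b(p)/p, the standard expression for the entropy of a geometric distribution, where H_b is the binary entropy function.

```python
from math import log2


def binary_entropy(p: float) -> float:
    """Binary entropy H_b(p) in bits, for 0 < p < 1."""
    return -p * log2(p) - (1.0 - p) * log2(1.0 - p)


def first_head_entropy(p: float, max_tosses: int = 10_000) -> float:
    """Entropy in bits of X, the toss on which the first head appears.

    Assumes independent tosses, so P(X = k) = (1 - p)**(k - 1) * p.
    The infinite series is truncated once the terms underflow to zero.
    """
    total = 0.0
    for k in range(1, max_tosses + 1):
        prob = (1.0 - p) ** (k - 1) * p
        if prob == 0.0:  # remaining terms are numerically zero
            break
        total -= prob * log2(prob)
    return total


if __name__ == "__main__":
    for p in (0.5, 0.3, 0.9):
        numeric = first_head_entropy(p)
        closed_form = binary_entropy(p) / p
        print(f"p={p}: series={numeric:.6f} bits, H_b(p)/p={closed_form:.6f} bits")
```

The series and the closed form should agree to many decimal places; a mismatch in a hand derivation usually points to a dropped factor of 1/p.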

2) Let X be a random variable with probability mass function

$$
p(x) = \begin{cases} 1/4, & x = 0, 1 \\ 1/2, & x = 2. \end{cases}
$$

Y and Z are two random variables generated as follows. When X = 0, Y = Z = 0; when X = 1, Y = 1 and Z = 0; when X = 2, Z = 1 while Y is chosen at random from {0, 1} with equal probability. Find the values of the following quantities: H(X), H(Y), H(Z), H(Y|X), H(X, Y), H(X|Y), H(X, Z), H(X|Z), H(Y, Z), H(Z|Y). (20)
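Hand calculations for Problem 2 can be checked by enumerating the joint distribution of (X, Y, Z). The sketch below is one way to do that, assuming the joint pmf implied by the statement: X = 2 has probability 1/2, split equally between Y = 0 and Y = 1. The helper names `marginal`, `entropy`, `H` and `H_cond` are illustrative. Conditional entropies use the chain rule H(A|B) = H(A, B) - H(B).

```python
from collections import defaultdict
from math import log2

# Joint pmf over (x, y, z), read off the problem statement (an assumption of
# this sketch): X=0 -> (0,0,0); X=1 -> (1,1,0); X=2 -> Z=1 and Y uniform.
JOINT = {
    (0, 0, 0): 0.25,
    (1, 1, 0): 0.25,
    (2, 0, 1): 0.25,
    (2, 1, 1): 0.25,
}
X, Y, Z = 0, 1, 2  # positions of each variable inside an outcome tuple


def marginal(pmf, keep):
    """Marginal pmf over the coordinates listed in `keep`."""
    out = defaultdict(float)
    for outcome, prob in pmf.items():
        out[tuple(outcome[i] for i in keep)] += prob
    return dict(out)


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def H(*keep):
    """Joint entropy of the selected variables, e.g. H(X, Y)."""
    return entropy(marginal(JOINT, keep))


def H_cond(target, given):
    """Conditional entropy H(target | given) via the chain rule."""
    return H(*target, *given) - H(*given)


if __name__ == "__main__":
    print("H(X)   =", H(X))
    print("H(Y)   =", H(Y))
    print("H(Z)   =", H(Z))
    print("H(Y|X) =", H_cond((Y,), (X,)))
    print("H(X,Y) =", H(X, Y))
    print("H(X|Y) =", H_cond((X,), (Y,)))
    print("H(X,Z) =", H(X, Z))
    print("H(X|Z) =", H_cond((X,), (Z,)))
    print("H(Y,Z) =", H(Y, Z))
    print("H(Z|Y) =", H_cond((Z,), (Y,)))
```

Storing the distribution as a dictionary keyed by outcome tuples keeps the marginalisation generic, so the same helpers work for any subset of the three variables.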

3) Let X, Y, and Z be jointly distributed random variables. Prove the following inequalities and find the conditions for equality.

i) $H(X, Y \mid Z) \ge H(X \mid Z)$. (2)

ii) $I(X, Y; Z) \ge I(X; Z)$. (2)

iii) $H(X, Y, Z) - H(X, Y) \le H(X, Z) - H(X)$. (2)

iv) $I(X; Z \mid Y) \ge I(Z; Y \mid X) - I(Z; Y) + I(X; Z)$. (2)
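Before attempting the proofs in Problem 3, it can help to test the inequalities numerically on random joint distributions. The sketch below does this under an assumed reading of the four statements: i) H(X,Y|Z) >= H(X|Z), ii) I(X,Y;Z) >= I(X;Z), iii) H(X,Y,Z) - H(X,Y) <= H(X,Z) - H(X), iv) I(X;Z|Y) >= I(Z;Y|X) - I(Z;Y) + I(X;Z). The helper names are illustrative, and a numerical check is only a sanity test, not a proof.

```python
import random
from itertools import product
from math import log2


def random_joint_pmf(sizes, rng):
    """Random joint pmf over a product alphabet with the given sizes."""
    outcomes = list(product(*(range(n) for n in sizes)))
    weights = [rng.random() for _ in outcomes]
    total = sum(weights)
    return {o: w / total for o, w in zip(outcomes, weights)}


def H(pmf, keep):
    """Joint entropy in bits of the coordinates listed in `keep`."""
    marg = {}
    for outcome, prob in pmf.items():
        key = tuple(outcome[i] for i in keep)
        marg[key] = marg.get(key, 0.0) + prob
    return -sum(p * log2(p) for p in marg.values() if p > 0)


def check_inequalities(pmf, tol=1e-9):
    """Return four booleans, one per inequality i) to iv) as read above."""
    X, Y, Z = 0, 1, 2
    hx, hy, hz = H(pmf, [X]), H(pmf, [Y]), H(pmf, [Z])
    hxy, hxz, hyz = H(pmf, [X, Y]), H(pmf, [X, Z]), H(pmf, [Y, Z])
    hxyz = H(pmf, [X, Y, Z])

    i_xy_z = hxy + hz - hxyz               # I(X,Y;Z)
    i_x_z = hx + hz - hxz                  # I(X;Z)
    i_xz_given_y = hxy + hyz - hy - hxyz   # I(X;Z|Y)
    i_zy_given_x = hxz + hxy - hx - hxyz   # I(Z;Y|X)
    i_z_y = hz + hy - hyz                  # I(Z;Y)

    return [
        (hxyz - hz) >= (hxz - hz) - tol,                     # i)
        i_xy_z >= i_x_z - tol,                               # ii)
        (hxyz - hxy) <= (hxz - hx) + tol,                    # iii)
        i_xz_given_y >= i_zy_given_x - i_z_y + i_x_z - tol,  # iv)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    results = [check_inequalities(random_joint_pmf((2, 3, 2), rng))
               for _ in range(1000)]
    print("all inequalities held:", all(all(r) for r in results))
```

Printing the two sides of each inequality for a handful of distributions, rather than only the boolean, gives a hint about which ones can hold with equality.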

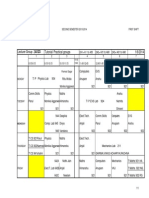

4) A discrete memoryless source (DMS) outputs the eight symbols {x1, x2, x3, x4, x5, x6, x7, x8} with respective probabilities {0.35, 0.26, 0.19, 0.07, 0.04, 0.04, 0.03, 0.02}. Determine the Huffman code by taking

i) two symbols at a time. (4)

ii) three symbols at a time. (4)
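A hand-built Huffman tree for Problem 4 can be compared against a short program. The sketch below assumes one reading of "two/three symbols at a time": merging the two (binary code) or three (ternary code) least probable nodes at each step. If the intended meaning is coding blocks of source symbols, the same function could instead be applied to the product distribution. The function name `huffman_code` and the zero-probability padding nodes are illustrative choices, not part of the tutorial.

```python
import heapq
from itertools import count


def huffman_code(probs, arity=2):
    """Build a Huffman code with `arity` code digits ('0', '1', ...).

    `probs` maps each symbol to its probability. Zero-probability dummy
    nodes are added so that every merge combines exactly `arity` nodes.
    """
    tiebreak = count()  # unique counter so heap entries never compare dicts
    heap = [(p, next(tiebreak), {sym: ""}) for sym, p in probs.items()]
    while (len(heap) - 1) % (arity - 1) != 0:
        heap.append((0.0, next(tiebreak), {}))
    heapq.heapify(heap)

    while len(heap) > 1:
        merged, total = {}, 0.0
        for digit in range(arity):
            p, _, codes = heapq.heappop(heap)
            total += p
            # Prepend this branch's digit to every codeword in the subtree.
            for sym, suffix in codes.items():
                merged[sym] = str(digit) + suffix
        heapq.heappush(heap, (total, next(tiebreak), merged))
    return heap[0][2]


def average_length(probs, code):
    """Expected codeword length in code digits per source symbol."""
    return sum(probs[s] * len(code[s]) for s in probs)


PROBS = dict(zip(
    ["x1", "x2", "x3", "x4", "x5", "x6", "x7", "x8"],
    [0.35, 0.26, 0.19, 0.07, 0.04, 0.04, 0.03, 0.02],
))

if __name__ == "__main__":
    for arity in (2, 3):
        code = huffman_code(PROBS, arity)
        print(f"arity {arity}: {code}")
        print(f"  average length = {average_length(PROBS, code):.2f} digits/symbol")
```

The specific codewords depend on how ties are broken and which branch receives which digit, so a hand solution may differ while still being optimal; the average length is the quantity to compare.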

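5) The figure below shows a non-symmetric binary channel. Prove that in this case

$$
I(X;Y) = \Omega[q + (1 - p - q)\alpha] - \alpha\,\Omega(p) - (1 - \alpha)\,\Omega(q),
$$

where the function $\Omega(p) = p \log_2 \frac{1}{p} + (1 - p) \log_2 \frac{1}{1 - p}$. (7)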
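The identity in Problem 5 can be sanity-checked numerically before proving it. Because the channel figure is not reproduced here, the sketch below assumes a particular labelling: P(X = 0) = alpha, P(Y = 1 | X = 0) = p and P(Y = 0 | X = 1) = q, with the target identity read as I(X;Y) = Omega[q + (1 - p - q) alpha] - alpha Omega(p) - (1 - alpha) Omega(q), where Omega is the binary entropy function. These names and the labelling are assumptions; if the figure uses a different convention, the roles of p, q and alpha need to be swapped accordingly.

```python
from math import log2


def omega(t: float) -> float:
    """Binary entropy function in bits; omega(0) = omega(1) = 0."""
    if t <= 0.0 or t >= 1.0:
        return 0.0
    return t * log2(1.0 / t) + (1.0 - t) * log2(1.0 / (1.0 - t))


def mutual_information_direct(alpha: float, p: float, q: float) -> float:
    """I(X;Y) computed from the joint pmf under the assumed labelling."""
    joint = [
        [alpha * (1.0 - p), alpha * p],                  # X = 0 -> Y = 0, 1
        [(1.0 - alpha) * q, (1.0 - alpha) * (1.0 - q)],  # X = 1 -> Y = 0, 1
    ]
    px = [sum(row) for row in joint]
    py = [joint[0][y] + joint[1][y] for y in range(2)]
    total = 0.0
    for x in range(2):
        for y in range(2):
            if joint[x][y] > 0.0:
                total += joint[x][y] * log2(joint[x][y] / (px[x] * py[y]))
    return total


def mutual_information_formula(alpha: float, p: float, q: float) -> float:
    """Right-hand side of the identity, as read in the lead-in above."""
    return (omega(q + (1.0 - p - q) * alpha)
            - alpha * omega(p)
            - (1.0 - alpha) * omega(q))


if __name__ == "__main__":
    for alpha, p, q in [(0.3, 0.1, 0.2), (0.5, 0.25, 0.4), (0.8, 0.05, 0.0)]:
        direct = mutual_information_direct(alpha, p, q)
        formula = mutual_information_formula(alpha, p, q)
        print(f"alpha={alpha}, p={p}, q={q}: direct={direct:.6f}, formula={formula:.6f}")
```

The first term is H(Y), since under this labelling P(Y = 0) = alpha (1 - p) + (1 - alpha) q = q + (1 - p - q) alpha, and the remaining two terms together are H(Y|X), which is the decomposition the proof can follow.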
