Linear Regression Analysis of Smoking and Lung Capacity

Uploaded by: Prateek Sharma


12/11/2012

Linear Regression Analysis
Correlation
Simple Linear Regression
The Multiple Linear Regression Model
Least Squares Estimates
R² and Adjusted R²
Overall Validity of the Model (F test)
Testing for Individual Regressor (t test)
Problem of Multicollinearity

Gaurav Garg (IIM Lucknow)

Smoking and Lung Capacity

Suppose, for example, we want to investigate the relationship between cigarette smoking and lung capacity.

We might ask a group of people about their smoking habits and measure their lung capacities.

Cigarettes (X)   Lung Capacity (Y)
0                45
5                42
10               33
15               31
20               29

Scatter Plot of the Data

We can see that as smoking goes up, lung capacity tends to go down.

The two variables change their values in opposite directions.

[Figure: scatter plot of lung capacity (Y) against cigarettes smoked (X)]

Height and Weight

Consider the following data on the heights and weights of 5 women swimmers:

Height (inches): 62 64 65 66 68
Weight (pounds): 102 108 115 128 132

We can observe that weight also increases with height.

[Figure: scatter plot of weight against height]

Sometimes two variables are related to each other.

The values of both variables are paired.

A change in the value of one affects the value of the other.

Usually these two variables are two attributes of each member of the population.

For example:

Height                     Weight
Advertising Expenditure    Sales Volume
Unemployment               Crime Rate
Rainfall                   Food Production
Expenditure                Savings

We have already studied one measure of the relationship between two variables: covariance.

The covariance between two random variables X and Y is given by

$$\sigma_{XY} = \operatorname{Cov}(X, Y) = E(XY) - E(X)\,E(Y)$$

For paired observations $(x_i, y_i)$, $i = 1, \dots, n$, on variables X and Y,

$$\sigma_{XY} = \operatorname{Cov}(X, Y) = \frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})$$
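As a quick check of the sample formula, the sketch below computes Cov(X, Y) for the smoking and lung capacity data. It is a minimal illustration; the function name `covariance` is our own, and it follows the slide in dividing by n (Python's `statistics.covariance`, available from Python 3.10, divides by n - 1 instead).

```python
def covariance(x, y):
    """Covariance of paired observations, dividing by n as in the slide."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Average product of the deviations from the two means
    return sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / n


cigarettes = [0, 5, 10, 15, 20]       # X
lung_capacity = [45, 42, 33, 31, 29]  # Y

print(covariance(cigarettes, lung_capacity))  # -43.0
```

The negative value matches the scatter plot: the two variables move in opposite directions.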

Properties of Covariance:

Cov(X + a, Y + b) = Cov(X, Y) [not affected by a change in location]

Cov(aX, bY) = ab Cov(X, Y) [affected by a change in scale]

Covariance can take any value from -∞ to +∞.

Cov(X, Y) > 0 means X and Y change in the same direction.

Cov(X, Y) < 0 means X and Y change in opposite directions.

If X and Y are independent, Cov(X, Y) = 0 [the converse need not be true].

It is not unit free.

So it is not a good measure of the relationship between two variables.

A better measure is the correlation coefficient.

It is unit free and takes values in [-1, +1].

Correlation

Karl Pearson's correlation coefficient is given by

$$r_{XY} = \operatorname{Corr}(X, Y) = \frac{\operatorname{Cov}(X, Y)}{\sqrt{\operatorname{Var}(X)\operatorname{Var}(Y)}}$$

When the joint distribution of X and Y is known:

$$\operatorname{Cov}(X, Y) = E(XY) - E(X)E(Y), \quad \operatorname{Var}(X) = E(X^2) - [E(X)]^2, \quad \operatorname{Var}(Y) = E(Y^2) - [E(Y)]^2$$

When observations on X and Y are available:

$$\operatorname{Cov}(X, Y) = \frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y}), \quad \operatorname{Var}(X) = \frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2, \quad \operatorname{Var}(Y) = \frac{1}{n}\sum_{i=1}^{n}(y_i - \bar{y})^2$$
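Because the 1/n factors in Cov(X, Y), Var(X) and Var(Y) cancel, r can be computed directly from sums of squared deviations. The following sketch (the function name `pearson_r` is our own) applies that to the height and weight data of the 5 swimmers; it is an illustration, not part of the original slides.

```python
import math


def pearson_r(x, y):
    """Pearson's r = SSXY / sqrt(SSX * SSY); the 1/n factors cancel."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    ssxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    ssx = sum((xi - x_bar) ** 2 for xi in x)
    ssy = sum((yi - y_bar) ** 2 for yi in y)
    return ssxy / math.sqrt(ssx * ssy)


# Heights (inches) and weights (pounds) of the 5 women swimmers
height = [62, 64, 65, 66, 68]
weight = [102, 108, 115, 128, 132]
print(round(pearson_r(height, weight), 4))
```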

Properties of Correlation Coefficient

Corr(aX + b, cY + d) = Corr(X, Y) when ac > 0 (if ac < 0, only the sign changes).

It is unit free.

It measures the strength of the relationship on a scale of -1 to +1.

So, it can be used to compare the relationships of various pairs of variables.

Values close to 0 indicate little or no correlation.

Values close to +1 indicate very strong positive correlation.

Values close to -1 indicate very strong negative correlation.
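The location and scale properties of covariance and correlation can be checked numerically. This sketch reuses the smoking data; the helper names `cov` and `corr` are our own, and `cov` uses the 1/n divisor from the slides.

```python
import math


def cov(x, y):
    """Covariance with the 1/n divisor."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    return sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / n


def corr(x, y):
    """Pearson correlation: Cov(X, Y) / sqrt(Var(X) Var(Y))."""
    return cov(x, y) / math.sqrt(cov(x, x) * cov(y, y))


x = [0, 5, 10, 15, 20]
y = [45, 42, 33, 31, 29]

# Change of location: Cov(X + 3, Y - 7) equals Cov(X, Y)
print(cov([a + 3 for a in x], [b - 7 for b in y]), cov(x, y))
# Change of scale: Cov(2X, 3Y) equals 2 * 3 * Cov(X, Y)
print(cov([2 * a for a in x], [3 * b for b in y]), 6 * cov(x, y))
# a = 2, c = 3 (ac > 0): correlation unchanged
print(corr([2 * a + 1 for a in x], [3 * b + 5 for b in y]), corr(x, y))
# a = -2, c = 1 (ac < 0): same magnitude, opposite sign
print(corr([-2 * a for a in x], y))
```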

Scatter Diagram

[Figure: scatter diagrams of Y against X showing positively correlated, negatively correlated, weakly correlated, strongly correlated and uncorrelated data]

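The correlation coefficient measures the strength of a linear relationship.

r = 0 does not necessarily imply that there is no relationship between the variables.

A relationship may be present, but it is not a linear one.

[Figure: two scatter plots of y against x showing clear nonlinear relationships]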
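A small constructed example (not from the slides) makes the point concrete: below, y is completely determined by x through y = x², yet r is exactly 0 because the relationship is symmetric rather than linear.

```python
import math


def pearson_r(x, y):
    """Pearson's r computed from sums of squared deviations."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    ssx = sum((a - x_bar) ** 2 for a in x)
    ssy = sum((b - y_bar) ** 2 for b in y)
    return ssxy / math.sqrt(ssx * ssy)


x = [-2, -1, 0, 1, 2]
y = [a ** 2 for a in x]  # an exact, but quadratic, dependence on x

print(pearson_r(x, y))  # 0.0
```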

x      y     x - x̄    y - ȳ   (x - x̄)²   (y - ȳ)²   (x - x̄)(y - ȳ)
1.25   125   -0.90     45     0.8100     2025       -40.50
1.75   105   -0.40     25     0.1600      625       -10.00
2.25    65    0.10    -15     0.0100      225        -1.50
2.00    85   -0.15      5     0.0225       25        -0.75
2.50    75    0.35     -5     0.1225       25        -1.75
2.25    80    0.10      0     0.0100        0         0.00
2.70    50    0.55    -30     0.3025      900       -16.50
2.50    55    0.35    -25     0.1225      625        -8.75
Sum   17.20   640     0.00      0     1.5600     4450       -79.75
                                      (SSX)      (SSY)      (SSXY)

$$r = \frac{\operatorname{Cov}(X, Y)}{\sqrt{\operatorname{Var}(X)\operatorname{Var}(Y)}} = \frac{SSXY}{\sqrt{SSX \cdot SSY}} = \frac{-79.75}{\sqrt{1.56 \times 4450}} = -0.957$$

Alternative Formulas for Sum of Squares

x      y     x²       y²      x·y
1.25   125   1.5625   15625   156.25
1.75   105   3.0625   11025   183.75
2.25    65   5.0625    4225   146.25
2.00    85   4.0000    7225   170.00
2.50    75   6.2500    5625   187.50
2.25    80   5.0625    6400   180.00
2.70    50   7.2900    2500   135.00
2.50    55   6.2500    3025   137.50
Sum   17.20  640   38.5400   55650  1296.25

$$SSX = \sum x^2 - \frac{(\sum x)^2}{n}, \qquad SSY = \sum y^2 - \frac{(\sum y)^2}{n}, \qquad SSXY = \sum xy - \frac{(\sum x)(\sum y)}{n}$$

SSX = 1.56
SSY = 4450
SSXY = -79.75

$$r = \frac{\operatorname{Cov}(X, Y)}{\sqrt{\operatorname{Var}(X)\operatorname{Var}(Y)}} = \frac{SSXY}{\sqrt{SSX \cdot SSY}} = \frac{-79.75}{\sqrt{1.56 \times 4450}} = -0.957$$
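The raw-sum formulas and the deviation formulas give the same sums of squares. The sketch below computes both for the x, y data of this example; the function names are our own.

```python
def sums_of_squares_shortcut(x, y):
    """SSX, SSY, SSXY from raw sums, e.g. SSX = sum(x^2) - (sum x)^2 / n."""
    n = len(x)
    sx, sy = sum(x), sum(y)
    ssx = sum(v * v for v in x) - sx ** 2 / n
    ssy = sum(v * v for v in y) - sy ** 2 / n
    ssxy = sum(u * v for u, v in zip(x, y)) - sx * sy / n
    return ssx, ssy, ssxy


def sums_of_squares_deviation(x, y):
    """The same quantities from deviations about the means."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssx = sum((v - x_bar) ** 2 for v in x)
    ssy = sum((v - y_bar) ** 2 for v in y)
    ssxy = sum((u - x_bar) * (v - y_bar) for u, v in zip(x, y))
    return ssx, ssy, ssxy


x = [1.25, 1.75, 2.25, 2.00, 2.50, 2.25, 2.70, 2.50]
y = [125, 105, 65, 85, 75, 80, 50, 55]
print([round(v, 2) for v in sums_of_squares_shortcut(x, y)])
print([round(v, 2) for v in sums_of_squares_deviation(x, y)])
```

The raw-sum form is convenient by hand. In floating point it subtracts two large, nearly equal numbers when the values are large relative to their spread, so the deviation form is usually the safer choice in software.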

Smoking and Lung Capacity Example

Cigarettes (X)   X²    XY     Y²     Lung Capacity (Y)
0                0     0      2025   45
5                25    210    1764   42
10               100   330    1089   33
15               225   465    961    31
20               400   580    841    29
Sum: 50          750   1585   6680   180

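$$r = \frac{n\sum xy - (\sum x)(\sum y)}{\sqrt{\left[n\sum x^2 - (\sum x)^2\right]\left[n\sum y^2 - (\sum y)^2\right]}} = \frac{(5)(1585) - (50)(180)}{\sqrt{\left[(5)(750) - 50^2\right]\left[(5)(6680) - 180^2\right]}}$$

$$= \frac{7925 - 9000}{\sqrt{(3750 - 2500)(33400 - 32400)}} = \frac{-1075}{\sqrt{1250 \times 1000}} = -0.9615$$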
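The same raw-sum calculation can be written as a short function. This is a sketch (the name `r_from_raw_sums` is our own) that returns the numerator and denominator as well, so each intermediate step of the hand calculation can be compared.

```python
import math


def r_from_raw_sums(x, y):
    """r = [n*Sxy - Sx*Sy] / sqrt([n*Sxx - Sx^2][n*Syy - Sy^2])."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xx = sum(a * a for a in x)
    sum_yy = sum(b * b for b in y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt((n * sum_xx - sum_x ** 2) * (n * sum_yy - sum_y ** 2))
    return numerator, denominator, numerator / denominator


num, den, r = r_from_raw_sums([0, 5, 10, 15, 20], [45, 42, 33, 31, 29])
print(num, round(r, 4))  # -1075 -0.9615
```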

Regression Analysis

Having determined the correlation between X and Y, we wish to determine a mathematical relationship between them.

Dependent variable: the variable you wish to explain.

Independent variables: the variables used to explain the dependent variable.

Regression analysis is used to:

Predict the value of the dependent variable based on the value of the independent variable(s).

Explain the impact of changes in an independent variable on the dependent variable.

Types of Relationships

[Figure: scatter plots of Y against X illustrating linear relationships and curvilinear relationships]

Types of Relationships

[Figure: scatter plots of Y against X illustrating strong relationships and weak relationships]

Types of Relationships

[Figure: scatter plots of Y against X illustrating no relationship]

Simple Linear Regression Analysis

The simplest mathematical relationship is

Y = a + bX + error (linear)

Changes in Y are related to the changes in X.

What are the most suitable values of a (intercept) and b (slope)?

[Figure: the line y = a + b·x, with intercept a (the value of y at x = 0) and slope b (the change in y for a one-unit increase in x)]

Method of Least Squares

[Figure: data points (xᵢ, yᵢ) scattered about the line a + bX; the error for point i is the vertical distance between yᵢ and a + bxᵢ]

The best fitted line would be the one for which all the ERRORS are minimum.

We want to fit a line for which all the errors are minimum.

We want to obtain values of a and b in Y = a + bX + error for which all the errors are minimum.

To minimize all the errors together, we minimize the sum of squares of errors (SSE):

$$SSE = \sum_{i=1}^{n}(Y_i - a - bX_i)^2$$

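To get the values of a and b which minimize SSE, we proceed as follows:

$$\frac{\partial SSE}{\partial a} = 0 \;\Rightarrow\; -2\sum_{i=1}^{n}(Y_i - a - bX_i) = 0 \;\Rightarrow\; \sum_{i=1}^{n}Y_i = na + b\sum_{i=1}^{n}X_i \qquad (1)$$

$$\frac{\partial SSE}{\partial b} = 0 \;\Rightarrow\; -2\sum_{i=1}^{n}X_i(Y_i - a - bX_i) = 0 \;\Rightarrow\; \sum_{i=1}^{n}X_iY_i = a\sum_{i=1}^{n}X_i + b\sum_{i=1}^{n}X_i^2 \qquad (2)$$

Eq. (1) and (2) are called normal equations.

Solve the normal equations to get a and b.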
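Normal equations (1) and (2) form a 2×2 linear system in a and b. One way to solve it is Cramer's rule, sketched below on the smoking data (the function name is our own).

```python
def solve_normal_equations(x, y):
    """Solve the normal equations for a and b by Cramer's rule.

    (1)  sum(Y)   = n * a      + b * sum(X)
    (2)  sum(X*Y) = a * sum(X) + b * sum(X**2)
    """
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xx = sum(v * v for v in x)
    sum_xy = sum(u * v for u, v in zip(x, y))
    det = n * sum_xx - sum_x ** 2  # determinant of the 2x2 coefficient matrix
    if det == 0:
        raise ValueError("all x values are equal; the slope is not identifiable")
    a = (sum_y * sum_xx - sum_x * sum_xy) / det
    b = (n * sum_xy - sum_x * sum_y) / det
    return a, b


x = [0, 5, 10, 15, 20]   # cigarettes
y = [45, 42, 33, 31, 29]  # lung capacity
a, b = solve_normal_equations(x, y)
print(a, b)  # 44.6 -0.86
```

Both equations can be checked by substitution: 5(44.6) + (-0.86)(50) = 180 = ΣY, and 50(44.6) + (-0.86)(750) = 1585 = ΣXY.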

Solving the above normal equations, we get

$$b = \frac{n\sum_{i=1}^{n}X_iY_i - \left(\sum_{i=1}^{n}X_i\right)\left(\sum_{i=1}^{n}Y_i\right)}{n\sum_{i=1}^{n}X_i^2 - \left(\sum_{i=1}^{n}X_i\right)^2} = \frac{\sum_{i=1}^{n}(X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n}(X_i - \bar{X})^2} = \frac{SSXY}{SSX}$$

$$a = \bar{Y} - b\bar{X}$$

The values of a and b obtained using the least squares method are called the least squares estimates (LSE) of a and b.

Thus, the LSE of a and b are given by

$$b = \frac{SSXY}{SSX}, \qquad a = \bar{Y} - b\bar{X}$$

Also, the correlation coefficient between X and Y is

$$r_{XY} = \frac{\operatorname{Cov}(X, Y)}{\sqrt{\operatorname{Var}(X)\operatorname{Var}(Y)}} = \frac{SSXY}{\sqrt{SSX \cdot SSY}} = \frac{SSXY}{SSX}\sqrt{\frac{SSX}{SSY}} = b\sqrt{\frac{SSX}{SSY}}$$
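The LSE formulas translate directly into code. This sketch (function name our own) fits the line to the x, y data of the correlation example, recovers r through r = b·√(SSX/SSY), and predicts Y at X = 2.15.

```python
import math


def least_squares_line(x, y):
    """Return (a, b, r) with b = SSXY/SSX, a = Ybar - b*Xbar, r = b*sqrt(SSX/SSY)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssx = sum((u - x_bar) ** 2 for u in x)
    ssy = sum((v - y_bar) ** 2 for v in y)
    ssxy = sum((u - x_bar) * (v - y_bar) for u, v in zip(x, y))
    b = ssxy / ssx
    a = y_bar - b * x_bar
    r = b * math.sqrt(ssx / ssy)
    return a, b, r


x = [1.25, 1.75, 2.25, 2.00, 2.50, 2.25, 2.70, 2.50]
y = [125, 105, 65, 85, 75, 80, 50, 55]
a, b, r = least_squares_line(x, y)
print(round(a, 2), round(b, 2), round(r, 3))
print(round(a + b * 2.15, 3))  # prediction at X = 2.15
```

Because a = Ȳ - bX̄, the fitted line always passes through (X̄, Ȳ), which is why the prediction at X = 2.15 = X̄ is Ȳ = 80.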

Using the same x, y data as in the correlation example (SSX = 1.560, SSY = 4450, SSXY = -79.75), X̄ = 2.15 and Ȳ = 80.

$$r = \frac{SSXY}{\sqrt{SSX \cdot SSY}} = -0.957, \qquad b = \frac{SSXY}{SSX} = -51.12, \qquad a = \bar{Y} - b\bar{X} = 189.91$$

Fitted line is Ŷ = 189.91 - 51.12X.

[Figure: scatter plot of the data with the fitted line]

189.91 is the estimated mean value of Y when the value of X is zero.

-51.12 is the change in the average value of Y as a result of a one-unit increase in X.

We can predict the value of Y for a given value of X.

For example, at X = 2.15, the predicted value of Y is 189.91 - 51.12 × 2.15 = 80.002.

Residuals: eᵢ = Yᵢ - Ŷᵢ

The residual is the unexplained part of Y.

The smaller the residuals, the better the utility of the regression.

The sum of the residuals is always zero when the model contains an intercept; the least squares procedure ensures that.

Residuals play an important role in investigating the adequacy of the fitted model.

We obtain the coefficient of determination (R²) using the residuals.

R² is used to examine the adequacy of the fitted linear model for the given data.

Coefficient of Determination

[Figure: for one observation, the deviations Y - Ȳ, Ŷ - Ȳ and Y - Ŷ shown relative to the fitted line and the mean Ȳ]

Total Sum of Squares: $SST = \sum_{i=1}^{n}(Y_i - \bar{Y})^2$

Regression Sum of Squares: $SSR = \sum_{i=1}^{n}(\hat{Y}_i - \bar{Y})^2$

Error Sum of Squares: $SSE = \sum_{i=1}^{n}(Y_i - \hat{Y}_i)^2$

Also, SST = SSR + SSE.

The fraction of SST explained by regression is given by R²:

$$R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}$$

Clearly, 0 ≤ R² ≤ 1.

When SSR is close to SST, R² will be close to 1. This means that regression explains most of the variability in Y (the fit is good).

When SSE is close to SST, R² will be close to 0. This means that regression does not explain much of the variability in Y (the fit is not good).

R² is the square of the correlation coefficient between X and Y (proof omitted).
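The decomposition SST = SSR + SSE and the identity R² = r² can be verified numerically. This sketch fits the line to the x, y data of the earlier example, then computes the residuals and the three sums of squares. Variable names are our own.

```python
import math

x = [1.25, 1.75, 2.25, 2.00, 2.50, 2.25, 2.70, 2.50]
y = [125, 105, 65, 85, 75, 80, 50, 55]
n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n

# Least squares fit
ssx = sum((u - x_bar) ** 2 for u in x)
ssxy = sum((u - x_bar) * (v - y_bar) for u, v in zip(x, y))
b = ssxy / ssx
a = y_bar - b * x_bar

fitted = [a + b * u for u in x]
residuals = [v - f for v, f in zip(y, fitted)]

sst = sum((v - y_bar) ** 2 for v in y)       # total
ssr = sum((f - y_bar) ** 2 for f in fitted)  # explained by regression
sse = sum(e * e for e in residuals)          # unexplained

r_squared = ssr / sst
r = ssxy / math.sqrt(ssx * sst)

print(round(sst, 2), round(ssr, 2), round(sse, 2))
print(round(r_squared, 4), round(r ** 2, 4))
```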

[Figure: scatter plots illustrating perfect, weak and no linear relationships]

r = 1 or r = -1, R² = 1: Perfect linear relationship; 100% of the variation in Y is explained by X.

0 < R² < 1: Weak linear relationships; some but not all of the variation in Y is explained by X.

R² = 0: No linear relationship; none of the variation in Y is explained by X.

Coefficient of Determination: R² = 1 - SSE/SST = 1 - 372.99/4450 ≈ 0.916

Correlation Coefficient: r = -0.957

Coefficient of Determination = (Correlation Coefficient)² = (-0.957)² ≈ 0.916

X      Y     Ŷ       Y - Ȳ   Y - Ŷ   Ŷ - Ȳ    (Y - Ȳ)²   (Y - Ŷ)²   (Ŷ - Ȳ)²
1.25   125   126.0    45     -1.0    46.0     2025         1.00     2116.00
1.75   105   100.5    25      4.5    20.5      625        20.25      420.25
2.25    65    74.9   -15     -9.9    -5.1      225        98.01       26.01
2.00    85    87.7     5     -2.7     7.7       25         7.29       59.29
2.50    75    62.1    -5     12.9   -17.9       25       166.41      320.41
2.25    80    74.9     0      5.1    -5.1        0        26.01       26.01
2.70    50    51.9   -30     -1.9   -28.1      900         3.61      789.61
2.50    55    62.1   -25     -7.1   -17.9      625        50.41      320.41
Sum   17.20   640                               4450       372.99     4077.99
                                                (SST)      (SSE)      (SSR)

(Ŷ is rounded to one decimal place, so SSR + SSE differs slightly from SST.)

Example:

Watching television also reduces the amount of physical exercise, causing weight gain.

A sample of fifteen 10-year-old children was taken.

The number of pounds each child was overweight was recorded (a negative number indicates the child is underweight).

Additionally, the number of hours of television viewing per week was also recorded. These data are listed here.

TV          42 34 25 35 37 38 31 33 19 29 38 28 29 36 18
Overweight  18  6  0 -1 13 14  7  7 -9  8  8  5  3 14 -7

Calculate the sample regression line and describe what the coefficients tell you about the relationship between the two variables.

Ŷ = -24.709 + 0.967X and R² = 0.768

[Figure: observed Y and predicted Y for each of the 15 children]
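The slide reports the fitted line without the arithmetic. The sketch below fits it with b = SSXY/SSX and a = Ȳ - bX̄. One assumption to flag: it uses -1, -9 and -7 for the three underweight children (the 4th, 9th and 15th children). With these signs, it reproduces the reported intercept, slope and R².

```python
def fit_simple_regression(x, y):
    """Return (intercept, slope, R^2) for the least squares line of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssx = sum((u - x_bar) ** 2 for u in x)
    ssy = sum((v - y_bar) ** 2 for v in y)
    ssxy = sum((u - x_bar) * (v - y_bar) for u, v in zip(x, y))
    slope = ssxy / ssx
    intercept = y_bar - slope * x_bar
    r_squared = ssxy ** 2 / (ssx * ssy)  # equals SSR / SST in simple regression
    return intercept, slope, r_squared


tv_hours = [42, 34, 25, 35, 37, 38, 31, 33, 19, 29, 38, 28, 29, 36, 18]
# Assumed signs: the 4th, 9th and 15th children are underweight
overweight = [18, 6, 0, -1, 13, 14, 7, 7, -9, 8, 8, 5, 3, 14, -7]

intercept, slope, r_squared = fit_simple_regression(tv_hours, overweight)
print(round(intercept, 3), round(slope, 3), round(r_squared, 3))
```

Read this way, each additional weekly hour of television is associated with about 0.967 more pounds overweight on average, and about 77% of the variation in overweight is explained by TV viewing.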

Standard Error

Consider a data set.

Not all the observations can be exactly equal to the arithmetic mean (AM).

The variability of the observations around the AM is measured by the standard deviation.

Similarly, in regression, not all Y values can be the same as the predicted Y values.

The variability of the Y values around the prediction line is measured by the STANDARD ERROR OF THE ESTIMATE.

It is given by

$$S_{YX} = \sqrt{\frac{SSE}{n - 2}} = \sqrt{\frac{\sum_{i=1}^{n}(Y_i - \hat{Y}_i)^2}{n - 2}}$$
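A minimal sketch of the standard error of the estimate, applied to the x, y example fitted earlier. The helper name is our own. Its `k` parameter is the number of regressors, so the default k = 1 gives the n - 2 divisor shown above.

```python
import math


def standard_error_of_estimate(y, fitted, k=1):
    """S_YX = sqrt(SSE / (n - k - 1)); k = 1 gives the n - 2 divisor."""
    n = len(y)
    sse = sum((obs - fit) ** 2 for obs, fit in zip(y, fitted))
    return math.sqrt(sse / (n - k - 1))


x = [1.25, 1.75, 2.25, 2.00, 2.50, 2.25, 2.70, 2.50]
y = [125, 105, 65, 85, 75, 80, 50, 55]
n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n
b = sum((u - x_bar) * (v - y_bar) for u, v in zip(x, y)) / sum((u - x_bar) ** 2 for u in x)
a = y_bar - b * x_bar
fitted = [a + b * u for u in x]

s_yx = standard_error_of_estimate(y, fitted)
print(round(s_yx, 3))
```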

Assumptions

The relationship between X and Y is linear.

Error values are statistically independent.

All the errors have a common variance (homoscedasticity): Var(eᵢ) = σ², where eᵢ = Yᵢ - Ŷᵢ and E(eᵢ) = 0.

No distributional assumption about the errors is required for the least squares method.

Linearity

[Figure: scatter plots of Y against X with the corresponding plots of residuals against X, for a relationship that is not linear and for one that is linear]

Independence

[Figure: plots of residuals against X for errors that are not independent and for errors that are independent]

Equal Variance

[Figure: scatter plots of Y against X with the corresponding plots of residuals against X, for unequal variance (heteroscedastic) and for equal variance (homoscedastic)]

TV Watching and Weight Gain Example

[Figure: scatter plot of X and Y; scatter plot of X and residuals]

The Multiple Linear Regression Model

In simple linear regression analysis, we fit a linear relation between one independent variable (X) and one dependent variable (Y).

We assume that Y is regressed on only one regressor variable X.

In some situations, the variable Y is regressed on more than one regressor variable (X₁, X₂, X₃, ...).

For example:

Cost → Labor cost, Electricity cost, Raw material cost

Salary → Education, Experience

Sales → Cost, Advertising Expenditure

Example:

A distributor of frozen dessert pies wants to evaluate the factors which influence demand.

Dependent variable:
Y: Pie sales (units per week)

Independent variables:
X₁: Price (in $)
X₂: Advertising expenditure ($100s)

Data are collected for 15 weeks.

Week   Pie Sales   Price ($)   Advertising ($100s)
1      350         5.50        3.3
2      460         7.50        3.3
3      350         8.00        3.0
4      430         8.00        4.5
5      350         6.80        3.0
6      380         7.50        4.0
7      430         4.50        3.0
8      470         6.40        3.7
9      450         7.00        3.5
10     490         5.00        4.0
11     340         7.20        3.5
12     300         7.90        3.2
13     440         5.90        4.0
14     450         5.00        3.5
15     300         7.00        2.7

Using the given data, we wish to fit a linear function of the form:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \varepsilon_i, \qquad i = 1, 2, \dots, 15,$$

where
Y: Pie sales (units per week)
X₁: Price (in $)
X₂: Advertising expenditure ($100s)

Fitting means we want to get the values of the regression coefficients, denoted by β's.

The original values of the β's are not known.

We estimate them using the given data.

The Multiple Linear Regression Model

Examine the linear relationship between one dependent variable (Y) and two or more independent variables (X₁, X₂, ..., Xₖ).

Multiple Linear Regression Model with k Independent Variables:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \dots + \beta_k X_{ki} + \varepsilon_i, \qquad i = 1, 2, \dots, n$$

Here β₀ is the intercept, β₁, ..., βₖ are the slopes and εᵢ is the random error.

Multiple Linear Regression Equation

The intercept and slopes are estimated using observed data.

Multiple linear regression equation with k independent variables:

$$\hat{Y}_i = b_0 + b_1 X_{1i} + b_2 X_{2i} + \dots + b_k X_{ki}, \qquad i = 1, 2, \dots, n$$

Here Ŷᵢ is the estimated value, b₀ is the estimate of the intercept and b₁, ..., bₖ are the estimates of the slopes.

Multiple Regression Equation

Example with two independent variables:

$$\hat{Y} = b_0 + b_1 X_1 + b_2 X_2$$

[Figure: fitted regression plane for Y over the X₁, X₂ plane]

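Estimating Regression Coefficients

The multiple linear regression model:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \dots + \beta_k X_{ki} + \varepsilon_i, \qquad i = 1, 2, \dots, n$$

In matrix notation:

$$\begin{pmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{pmatrix} = \begin{pmatrix} 1 & X_{11} & X_{21} & \cdots & X_{k1} \\ 1 & X_{12} & X_{22} & \cdots & X_{k2} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & X_{1n} & X_{2n} & \cdots & X_{kn} \end{pmatrix} \begin{pmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{pmatrix} + \begin{pmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_n \end{pmatrix}$$

or

$$Y = X\beta + \varepsilon$$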

Assumptions

The number of observations (n) is greater than the number of regressors (k), i.e., n > k.

Random errors are independent.

Random errors have the same variance (homoscedasticity): Var(εᵢ) = σ².

In the long run, the mean effect of random errors is zero: E(εᵢ) = 0.

No assumption on the distribution of random errors is required for the least squares method.

In order to find the estimate of β, we minimize

$$S(\beta) = \sum_{i=1}^{n}\varepsilon_i^2 = \varepsilon'\varepsilon = (Y - X\beta)'(Y - X\beta) = Y'Y - 2\beta'X'Y + \beta'X'X\beta$$

We differentiate S(β) with respect to β and equate it to zero, i.e.,

$$\frac{\partial S}{\partial \beta} = -2X'Y + 2X'X\beta = 0$$

This gives

$$b = (X'X)^{-1}X'Y$$

b is called the least squares estimator of β.
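The matrix formula can be applied to the pie data without any external library. The sketch below builds X with a leading column of 1s and forms X'X and X'Y. It then solves (X'X)b = X'Y by Gaussian elimination with partial pivoting. This is mathematically the same as b = (X'X)⁻¹X'Y, but solving the system is generally preferred in practice to forming the inverse. All helper names are our own.

```python
def transpose(m):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    b_cols = list(zip(*b))
    return [[sum(p * q for p, q in zip(row, col)) for col in b_cols] for row in a]


def solve(a, rhs):
    """Solve a x = rhs by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [v] for row, v in zip(a, rhs)]  # augmented matrix
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[pivot] = m[pivot], m[c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for j in range(c, n + 1):
                m[r][j] -= factor * m[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][j] * x[j] for j in range(r + 1, n))) / m[r][r]
    return x


def least_squares(regressor_rows, y):
    """Return b solving (X'X) b = X'Y."""
    X = [[1.0] + list(row) for row in regressor_rows]  # column of 1s for b0
    Xt = transpose(X)
    xtx = matmul(Xt, X)
    xty = [sum(p * q for p, q in zip(col, y)) for col in Xt]
    return solve(xtx, xty)


sales = [350, 460, 350, 430, 350, 380, 430, 470, 450, 490, 340, 300, 440, 450, 300]
price = [5.50, 7.50, 8.00, 8.00, 6.80, 7.50, 4.50, 6.40, 7.00, 5.00, 7.20, 7.90, 5.90, 5.00, 7.00]
advertising = [3.3, 3.3, 3.0, 4.5, 3.0, 4.0, 3.0, 3.7, 3.5, 4.0, 3.5, 3.2, 4.0, 3.5, 2.7]

b = least_squares(list(zip(price, advertising)), sales)
print([round(v, 2) for v in b])
```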

Example: Consider the pie example.

We want to fit the model

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \varepsilon_i$$

The variables are
Y: Pie sales (units per week)
X₁: Price (in $)
X₂: Advertising expenditure ($100s)

Using the matrix formula, the least squares estimates (LSE) of the β's are obtained as below:

LSE of intercept β₀:   Intercept (b₀) = 306.53
LSE of slope β₁:       Price (b₁) = -24.98
LSE of slope β₂:       Advertising (b₂) = 74.13

Pie Sales = 306.53 - 24.98 Price + 74.13 Adv. Expend.

$$\widehat{\text{Sales}} = 306.53 - 24.98X_1 + 74.13X_2$$

b₁ = -24.98: sales will decrease, on average, by 24.98 pies per week for each $1 increase in selling price, while advertising expenses are kept fixed.

b₂ = 74.13: sales will increase, on average, by 74.13 pies per week for each $100 increase in advertising, while the selling price is kept fixed.

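Prediction:

Predict sales for a week in which the selling price is $5.50 and the advertising expenditure is $350:

$$\widehat{\text{Sales}} = 306.53 - 24.98X_1 + 74.13X_2 = 306.53 - 24.98(5.50) + 74.13(3.5) \approx 428.62$$

Predicted sales is 428.62 pies (computed with the unrounded estimates 306.52619, -24.97509 and 74.13096; the rounded coefficients give 428.60).

Note that advertising is in $100s, so X₂ = 3.5.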

Y     X₁    X₂    Predicted Y   Residuals
350   5.5   3.3   413.77        -63.80
460   7.5   3.3   363.81         96.15
350   8.0   3.0   329.08         20.88
430   8.0   4.5   440.28        -10.31
350   6.8   3.0   359.06         -9.09
380   7.5   4.0   415.70        -35.74
430   4.5   3.0   416.51         13.47
470   6.4   3.7   420.94         49.03
450   7.0   3.5   391.13         58.84
490   5.0   4.0   478.15         11.83
340   7.2   3.5   386.13        -46.16
300   7.9   3.2   346.40        -46.44
440   5.9   4.0   455.67        -15.70
450   5.0   3.5   441.09          8.89
300   7.0   2.7   331.82        -31.85

$$\hat{Y} = 306.52619 - 24.97509X_1 + 74.13096X_2$$

[Figure: observed Y and predicted Y for each of the 15 weeks]

Coefficient of Determination

The coefficient of determination (R²) is obtained using the same formula as in simple linear regression.

$$R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}$$

R² is the proportion of variation in Y explained by regression.

Total Sum of Squares: $SST = \sum_{i=1}^{n}(Y_i - \bar{Y})^2$

Regression Sum of Squares: $SSR = \sum_{i=1}^{n}(\hat{Y}_i - \bar{Y})^2$

Error Sum of Squares: $SSE = \sum_{i=1}^{n}(Y_i - \hat{Y}_i)^2$

Also, SST = SSR + SSE.

Since SST = SSR + SSE and all three quantities are nonnegative,

0 ≤ SSR ≤ SST.

So 0 ≤ SSR/SST ≤ 1, or 0 ≤ R² ≤ 1.

When R² is close to 0, the linear fit is not good, and the X variables do not contribute to explaining the variability in Y.

When R² is close to 1, the linear fit is good.

In the previously discussed example, R² = 0.5215.

If we consider Y and X₁ only, R² = 0.1965.

If we consider Y and X₂ only, R² = 0.3095.

Adjusted R²

If one more regressor is added to the model, the value of R² will not decrease; it usually increases.

This happens regardless of the contribution of the newly added regressor.

So, an adjusted value of R² is defined, called adjusted R²:

$$R^2_{Adj} = 1 - \frac{SSE/(n - k - 1)}{SST/(n - 1)}$$

Adjusted R² increases only if the additional variable contributes to explaining the variation in Y.

For our example, adjusted R² = 0.4417.
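For the pie example, R² and adjusted R² follow directly from the ANOVA sums of squares (SSE = 27033.31, SST = 56493.33, n = 15, k = 2). The sketch below computes both and also checks the equivalent form 1 - (1 - R²)(n - 1)/(n - k - 1). Function names are our own.

```python
def r_squared(sse, sst):
    """R^2 = 1 - SSE/SST."""
    return 1 - sse / sst


def adjusted_r_squared(sse, sst, n, k):
    """Adjusted R^2 = 1 - [SSE/(n-k-1)] / [SST/(n-1)]."""
    return 1 - (sse / (n - k - 1)) / (sst / (n - 1))


sse, sst, n, k = 27033.31, 56493.33, 15, 2
r2 = r_squared(sse, sst)
adj = adjusted_r_squared(sse, sst, n, k)
adj_from_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)  # same quantity via R^2

print(round(r2, 4), round(adj, 4), round(adj_from_r2, 4))
```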

F Test for Overall Significance

We check if there is a linear relationship between all the regressors (X₁, X₂, ..., Xₖ) and the response (Y).

Use the F test statistic.

To test:
H₀: β₁ = β₂ = ... = βₖ = 0 (no regressor is significant)
H₁: at least one βᵢ ≠ 0 (at least one regressor affects Y)

The technique of analysis of variance is used.

Assumptions:
n > k, Var(εᵢ) = σ², E(εᵢ) = 0.
The εᵢ's are independent. This implies that Corr(εᵢ, εⱼ) = 0 for i ≠ j.
The εᵢ's have a normal distribution: εᵢ ~ N(0, σ²). [NEW ASSUMPTION]

The Total Sum of Squares (SST) is partitioned into the Sum of Squares due to Regression (SSR) and the Sum of Squares due to Residuals (SSE):

$$SST = \sum_{i=1}^{n}(Y_i - \bar{Y})^2, \qquad SSE = \sum_{i=1}^{n}e_i^2 = \sum_{i=1}^{n}(Y_i - \hat{Y}_i)^2, \qquad SSR = SST - SSE$$

where the eᵢ's are called the residuals.

Analysis of Variance Table

Source              df          SS    MS                      F_c
Regression          k           SSR   MSR = SSR/k             MSR/MSE
Residual or Error   n - k - 1   SSE   MSE = SSE/(n - k - 1)
Total               n - 1       SST

Test Statistic: F_c = MSR/MSE ~ F(k, n - k - 1)

For the previous example, we wish to test H₀: β₁ = β₂ = 0 against H₁: at least one βᵢ ≠ 0.

ANOVA Table

Source              df   SS         MS         F_c      F(2,12)(0.05)
Regression          2    29460.03   14730.01   6.5386   3.89
Residual or Error   12   27033.31   2252.78
Total               14   56493.33

Since F_c = 6.5386 > 3.89, H₀ is rejected at the 5% level of significance.
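The ANOVA arithmetic for the pie example can be reproduced in a few lines. The sketch below also checks that the same statistic can be written as F_c = (n - k - 1)R² / [k(1 - R²)]. The critical value 3.89 is taken from the table rather than computed.

```python
def f_statistic(ssr, sse, n, k):
    """Return (MSR, MSE, F_c) with MSR = SSR/k and MSE = SSE/(n - k - 1)."""
    msr = ssr / k
    mse = sse / (n - k - 1)
    return msr, mse, msr / mse


ssr, sse, n, k = 29460.03, 27033.31, 15, 2
msr, mse, f_c = f_statistic(ssr, sse, n, k)

# The same statistic expressed through R^2 = SSR / SST
r2 = ssr / (ssr + sse)
f_from_r2 = (n - k - 1) * r2 / (k * (1 - r2))

F_CRITICAL = 3.89  # F(2, 12) upper 5% point, as quoted in the ANOVA table
print(round(f_c, 4), f_c > F_CRITICAL)
```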

Individual Variables Tests of Hypothesis

We test if there is a linear relationship between a particular regressor Xⱼ and Y.

Hypotheses:
H₀: βⱼ = 0 (no linear relationship)
H₁: βⱼ ≠ 0 (a linear relationship exists between Xⱼ and Y)

We use a two-tailed t test.

If H₀: βⱼ = 0 is accepted, this indicates that the variable Xⱼ can be deleted from the model.

Test Statistic:

$$T_c = \frac{b_j}{\sqrt{\hat{\sigma}^2 C_{jj}}} \sim \text{Student's } t \text{ with } (n - k - 1) \text{ degrees of freedom}$$

where bⱼ is the least squares estimate of βⱼ, Cⱼⱼ is the (j, j)th element of the matrix (X'X)⁻¹, and σ̂² = MSE (MSE is obtained from the ANOVA table).

In our example, σ̂² = 2252.7755 and

$$(X'X)^{-1} = \begin{pmatrix} 5.7946 & -0.3312 & -1.0165 \\ -0.3312 & 0.0521 & -0.0038 \\ -1.0165 & -0.0038 & 0.2993 \end{pmatrix}$$

To test H₀: β₁ = 0 against H₁: β₁ ≠ 0: T_c = -2.3057

To test H₀: β₂ = 0 against H₁: β₂ ≠ 0: T_c = 2.8548

Two-tailed critical values of t at 12 d.f. are
3.0545 for 1% level of significance
2.6810 for 2% level of significance
2.1788 for 5% level of significance

Hence both regressors are significant at the 5% level; X₂ is also significant at the 2% level, and neither is significant at the 1% level.
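The two t statistics can be recomputed from the quantities on this slide. The sketch uses the unrounded estimates b₁ = -24.97509 and b₂ = 74.13096 from the fitted equation, together with the four-decimal diagonal entries of (X'X)⁻¹. Because those entries are rounded, the results agree with the slide to within about 0.001.

```python
import math

mse = 2252.7755                          # sigma-hat^2 from the ANOVA table
estimates = {1: -24.97509, 2: 74.13096}  # b_1 (price), b_2 (advertising)
c_jj = {1: 0.0521, 2: 0.2993}            # diagonal of (X'X)^(-1), as printed
T_CRIT_5PCT = 2.1788                     # two-tailed, 12 d.f.

t_stats = {}
for j, b_j in estimates.items():
    t_stats[j] = b_j / math.sqrt(mse * c_jj[j])  # T_c = b_j / sqrt(sigma^2 C_jj)
    print(j, round(t_stats[j], 4), abs(t_stats[j]) > T_CRIT_5PCT)
```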

Standard Error

As in simple linear regression, the variability of the Y values around the fitted regression is measured by the STANDARD ERROR OF THE ESTIMATE.

With k regressors, it is given by

$$S_{YX} = \sqrt{\frac{SSE}{n - k - 1}} = \sqrt{\frac{\sum_{i=1}^{n}(Y_i - \hat{Y}_i)^2}{n - k - 1}}$$

Assumption of Linearity

[Figure: residual plots for a relationship that is not linear and for one that is linear]

Assumption of Equal Variance

We assume that Var(εᵢ) = σ².

The variance is constant for all observations.

This assumption is examined by looking at the plot of the predicted values Ŷᵢ and the residuals eᵢ = Yᵢ - Ŷᵢ.

Residual Analysis for Equal Variance

[Figure: plots of residuals against predicted Y for unequal variance and for equal variance]

Assumption of Uncorrelated Residuals

The Durbin-Watson statistic is a test statistic used to detect the presence of autocorrelation.

It is given by

$$d = \frac{\sum_{i=2}^{n}(e_i - e_{i-1})^2}{\sum_{i=1}^{n}e_i^2}$$

The value of d always lies between 0 and 4.

d = 2 indicates no autocorrelation.

Small values of d (d < 2) indicate that successive error terms are positively correlated.

If d > 2, successive error terms are negatively correlated.

Values of d greater than 3 or less than 1 are alarming.
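A minimal sketch of the Durbin-Watson statistic, applied to the pie model residuals in week order. The signs follow eᵢ = Yᵢ - Ŷᵢ; for example, week 1 has 350 - 413.8 < 0. The statistic is only meaningful when the observations have a natural order, as the weeks do here. The function name is our own.

```python
def durbin_watson(residuals):
    """d = sum_{i=2..n} (e_i - e_{i-1})^2 / sum_{i=1..n} e_i^2."""
    if not residuals:
        raise ValueError("need at least one residual")
    num = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


# Residuals of the pie sales model, in week order
pie_residuals = [-63.80, 96.15, 20.88, -10.31, -9.09, -35.74, 13.47,
                 49.03, 58.84, 11.83, -46.16, -46.44, -15.70, 8.89, -31.85]
d = durbin_watson(pie_residuals)
print(round(d, 2))
```

Two toy checks show the extremes. Constant residuals give d = 0, which indicates strong positive autocorrelation. Residuals that alternate in sign, such as [1, -1, 1, -1], give d = 3, which indicates negative autocorrelation.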

Residual Analysis for Independence (Uncorrelated Errors)

[Figure: residual plots for errors that are not independent and for errors that are independent]

Assumption of Normality

When we use the F test or t test, we assume that ε₁, ε₂, ..., εₙ are normally distributed.

This assumption can be examined by a histogram of the residuals.

[Figure: histograms of residuals that are not normal and that are normal]

Normality can also be examined using a Q-Q plot or a normal probability plot.

[Figure: normal probability plots of residuals that are not normal and that are normal]

Standardized Regression Coefficient

In a multiple linear regression, we may want to know which regressor contributes more.

We obtain standardized estimates of the regression coefficients.

For that, first we standardize the observations, using

$$\bar{Y} = \frac{1}{n}\sum_{i=1}^{n}Y_i, \qquad s_Y = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(Y_i - \bar{Y})^2}$$

$$\bar{X}_1 = \frac{1}{n}\sum_{i=1}^{n}X_{1i}, \qquad s_{X_1} = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(X_{1i} - \bar{X}_1)^2}$$

$$\bar{X}_2 = \frac{1}{n}\sum_{i=1}^{n}X_{2i}, \qquad s_{X_2} = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(X_{2i} - \bar{X}_2)^2}$$

Standardize all Y, X₁ and X₂ values as follows:

$$\text{Standardized } Y_i = \frac{Y_i - \bar{Y}}{s_Y}, \qquad \text{Standardized } X_{1i} = \frac{X_{1i} - \bar{X}_1}{s_{X_1}}, \qquad \text{Standardized } X_{2i} = \frac{X_{2i} - \bar{X}_2}{s_{X_2}}$$

Fit the regression on the standardized data and obtain the least squares estimates of the regression coefficients.

These coefficients are dimensionless (unit free) and can be compared.

Look for the regression coefficient having the highest magnitude.

The corresponding regressor contributes the most.

$$\hat{Y} = 0 - 0.461X_1 + 0.570X_2$$

Since |-0.461| < 0.570, X₂ contributes the most.

Standardized Data

Week   Pie Sales   Price ($)   Advertising ($100s)
1      -0.78       -0.95       -0.37
2       0.96        0.76       -0.37
3      -0.78        1.18       -0.98
4       0.48        1.18        2.09
5      -0.78        0.16       -0.98
6      -0.30        0.76        1.06
7       0.48       -1.80       -0.98
8       1.11       -0.18        0.45
9       0.80        0.33        0.04
10      1.43       -1.38        1.06
11     -0.93        0.50        0.04
12     -1.56        1.10       -0.57
13      0.64       -0.61        1.06
14      0.80       -1.38        0.04
15     -1.56        0.33       -1.60
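For least squares with an intercept, the slope obtained on standardized data equals bⱼ·s_Xⱼ/s_Y, so the standardized coefficients can be checked without refitting. The sketch below uses that identity with the unstandardized estimates b₁ = -24.97509 and b₂ = 74.13096 from the pie model. It also uses Python's `statistics.stdev` (n - 1 divisor). The helper `standardize` is our own.

```python
import statistics

sales = [350, 460, 350, 430, 350, 380, 430, 470, 450, 490, 340, 300, 440, 450, 300]
price = [5.50, 7.50, 8.00, 8.00, 6.80, 7.50, 4.50, 6.40, 7.00, 5.00, 7.20, 7.90, 5.90, 5.00, 7.00]
advertising = [3.3, 3.3, 3.0, 4.5, 3.0, 4.0, 3.0, 3.7, 3.5, 4.0, 3.5, 3.2, 4.0, 3.5, 2.7]


def standardize(values):
    """(value - mean) / s, where s is the sample standard deviation."""
    mean = statistics.mean(values)
    s = statistics.stdev(values)
    return [(v - mean) / s for v in values]


b1, b2 = -24.97509, 74.13096  # unstandardized slopes of the pie model
s_y = statistics.stdev(sales)
beta1 = b1 * statistics.stdev(price) / s_y
beta2 = b2 * statistics.stdev(advertising) / s_y

print(round(beta1, 3), round(beta2, 3))
print([round(z, 2) for z in standardize(sales)][:3])  # first rows of the table
```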

Note that:

Adjusted R² can be negative.

Adjusted R² is always less than or equal to R².

Inclusion of the intercept term is not necessary. It depends on the problem; the analyst may decide on this.

$$R^2_{Adj} = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}, \qquad F_c = \frac{(n - k - 1)R^2}{k(1 - R^2)}$$

Example: The following data were collected on sales, the number of advertisements published and the advertising expenditure for 12 weeks. Fit a regression model to predict sales.

Sales (0,000 Rs)   Ads (Nos.)   Adv Ex (000 Rs)
43.6               12           13.9
38.0               11           12.0
30.1                9            9.3
35.3                7            9.7
46.4               12           12.3
34.2                8           11.4
30.2                6            9.3
40.7               13           14.3
38.5                8           10.2
22.6                6            8.4
37.6                8           11.2
35.2               10           11.1

ANOVA(b)
Model          Sum of Squares   df   Mean Square   F       Sig.
1 Regression   309.986           2   154.993       9.741   .006(a)
  Residual     143.201           9    15.911
  Total        453.187          11
a. Predictors: (Constant), Ex_Adv, No_Adv
b. Dependent Variable: Sales

Coefficients(a)
                Unstandardized Coefficients   Standardized Coefficients
Model           B       Std. Error            Beta        t       Sig.
1 (Constant)    6.584   8.542                             .771    .461
  No_Adv         .625   1.120                 .234        .558    .591
  Ex_Adv        2.139   1.470                 .611       1.455    .180
a. Dependent Variable: Sales

ANOVA: p-value < 0.05, so H₀ is rejected; not all β's are zero.

Coefficients: all p-values > 0.05, so none of H₀: β₀ = 0, H₀: β₁ = 0, H₀: β₂ = 0 is rejected.

CONTRADICTION

Multicollinearity

We assume that regressors are independent variables.

When we regress Y on regressors X₁, X₂, ..., Xₖ, we assume that all regressors X₁, X₂, ..., Xₖ are statistically independent of each other.

All the regressors affect the values of Y.

One regressor does not affect the values of another regressor.

Sometimes, in practice, this assumption is not met.

We then face the problem of multicollinearity.

The correlated variables contribute redundant information to the model.

Including two highly correlated independent variables can adversely affect the regression results.

It can lead to unstable coefficients.

Some Indications of Strong Multicollinearity:

Coefficient signs may not match prior expectations.

The value of a previous coefficient changes greatly when a new variable is added to the model.

A previously significant variable becomes insignificant when a new independent variable is added.

F says at least one variable is significant, but none of the t's indicates a useful variable.

A large standard error, while the corresponding regressor is still significant.

MSE is very high and/or R² is very small.

EXAMPLES IN WHICH THIS MIGHT HAPPEN:

Miles per gallon vs. horsepower and engine size

Income vs. age and experience

Sales vs. no. of advertisements and advertising expenditure

Variance Inflationary Factor:

VIFⱼ is used to measure the multicollinearity generated by variable Xⱼ.

It is given by

$$VIF_j = \frac{1}{1 - R_j^2}$$

where R²ⱼ is the coefficient of determination of a regression model that uses Xⱼ as the dependent variable and all other X variables as the independent variables.
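With only two regressors, regressing one on the other gives R²ⱼ equal to the squared correlation between them, so the VIF follows from r alone. The sketch below applies this to the Ads and Adv Ex columns of the sales example. The helper name `pearson_r` is our own, and the shortcut does not carry over directly to three or more regressors.

```python
import math


def pearson_r(x, y):
    """Pearson's r from sums of squared deviations."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    syy = sum((b - y_bar) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


ads = [12, 11, 9, 7, 12, 8, 6, 13, 8, 6, 8, 10]                                # No_Adv
adv_ex = [13.9, 12.0, 9.3, 9.7, 12.3, 11.4, 9.3, 14.3, 10.2, 8.4, 11.2, 11.1]  # Ex_Adv

r2_j = pearson_r(ads, adv_ex) ** 2  # R_j^2 when the only other regressor is used
vif = 1 / (1 - r2_j)
tolerance = 1 / vif

print(round(vif, 3), round(tolerance, 3))
```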

If VIFⱼ > 5, Xⱼ is highly correlated with the other independent variables.

Mathematically, the problem of multicollinearity occurs when the columns of matrix X have near linear dependence.

The LSE b cannot be obtained when the matrix X'X is singular.

The matrix X'X becomes singular when the columns of matrix X have exact linear dependence, or equivalently when any eigenvalue of X'X is zero.

Thus, a near-zero eigenvalue is also an indication of multicollinearity.

The methods of dealing with multicollinearity:
Collecting additional data
Variable elimination

Coefficients(a)
                Unstandardized Coefficients   Standardized Coefficients                   Collinearity Statistics
Model           B       Std. Error            Beta        t       Sig.       Tolerance   VIF
1 (Constant)    6.584   8.542                             .771    .461
  No_Adv         .625   1.120                 .234        .558    .591       .199        5.022
  Ex_Adv        2.139   1.470                 .611       1.455    .180       .199        5.022
a. Dependent Variable: Sales

Both VIFs are greater than 5 (Tolerance = 1/VIF).

Collinearity Diagnostics(a)
                                                      Variance Proportions
Model   Dimension   Eigenvalue   Condition Index      (Constant)   No_Adv   Ex_Adv
1       1           2.966         1.000               .00          .00      .00
        2            .030         9.882               .33          .17      .00
        3            .003        30.417               .67          .83     1.00
a. Dependent Variable: Sales

Dimension 3 has a negligible eigenvalue (.003) and a large condition index (30.417).

We may use the method of variable elimination.

In practice, if Corr(X₁, X₂) is more than 0.7 or less than -0.7, we eliminate one of them.

Techniques:
Stepwise (based on ANOVA)
Forward Inclusion (based on Correlation)
Backward Elimination (based on Correlation)

Stepwise Regression

Y = β₀ + β₁X₁ + β₂X₂ + β₃X₃ + β₄X₄ + β₅X₅ + ε

Step 1: Run 5 simple linear regressions:
Y = β₀ + β₁X₁
Y = β₀ + β₂X₂
Y = β₀ + β₃X₃
Y = β₀ + β₄X₄   <==== has lowest p-value (ANOVA) < 0.05
Y = β₀ + β₅X₅

Step 2: Run 4 two-variable linear regressions:
Y = β₀ + β₄X₄ + β₁X₁
Y = β₀ + β₄X₄ + β₂X₂
Y = β₀ + β₄X₄ + β₃X₃   <= has lowest p-value (ANOVA) < 0.05
Y = β₀ + β₄X₄ + β₅X₅

Step 3: Run 3 three-variable linear regressions:
Y = β₀ + β₃X₃ + β₄X₄ + β₁X₁
Y = β₀ + β₃X₃ + β₄X₄ + β₂X₂
Y = β₀ + β₃X₃ + β₄X₄ + β₅X₅

Suppose none of these models has a p-value < 0.05.

STOP.

The best model is the one with X₃ and X₄ only.

Example: The following data were collected on sales, the number of advertisements published and the advertising expenditure for 12 months. Fit a regression model to predict sales.

Sales (0,000 Rs)   Ads (Nos.)   Adv Ex (000 Rs)
43.6               12           13.9
38.0               11           12.0
30.1                9            9.3
35.3                7            9.7
46.4               12           12.3
34.2                8           11.4
30.2                6            9.3
40.7               13           14.3
38.5                8           10.2
22.6                6            8.4
37.6                8           11.2
35.2               10           11.1

Summary Output 1: Sales vs. No_Adv

Model Summary
Model   R         R Square   Adjusted R Square   Std. Error of the Estimate
1       .781(a)   .610       .571                4.20570
a. Predictors: (Constant), No_Adv

ANOVA(b)
Model          Sum of Squares   df   Mean Square   F        Sig.
1 Regression   276.308           1   276.308       15.621   .003(a)
  Residual     176.879          10    17.688
  Total        453.187          11
a. Predictors: (Constant), No_Adv
b. Dependent Variable: Sales

Coefficients(a)
                Unstandardized Coefficients   Standardized Coefficients
Model           B        Std. Error           Beta        t       Sig.
1 (Constant)    16.937   4.982                            3.400   .007
  No_Adv         2.083    .527                .781        3.952   .003
a. Dependent Variable: Sales

Summary Output 2: Sales vs. Ex_Adv

Model Summary
Model   R          R Square   Adjusted R Square   Std. Error of the Estimate
1       .820 (a)   .673       .640                3.84900
a. Predictors: (Constant), Ex_Adv

ANOVA (b)
Model          Sum of Squares   df   Mean Square   F        Sig.
1 Regression   305.039           1   305.039       20.590   .001 (a)
  Residual     148.148          10    14.815
  Total        453.187          11
a. Predictors: (Constant), Ex_Adv
b. Dependent Variable: Sales

Coefficients (a)
Model          Unstandardized B   Std. Error   Standardized Beta   t       Sig.
1 (Constant)   4.173              7.109                            .587    .570
  Ex_Adv       2.872               .633        .820                4.538   .001
a. Dependent Variable: Sales


Summary Output 3: Sales vs. No_Adv & Ex_Adv

Model Summary
Model   R          R Square   Adjusted R Square   Std. Error of the Estimate
1       .827 (a)   .684       .614                3.98888
a. Predictors: (Constant), Ex_Adv, No_Adv

ANOVA (b)
Model          Sum of Squares   df   Mean Square   F       Sig.
1 Regression   309.986           2   154.993       9.741   .006 (a)
  Residual     143.201           9    15.911
  Total        453.187          11
a. Predictors: (Constant), Ex_Adv, No_Adv
b. Dependent Variable: Sales

Coefficients (a)
Model          Unstandardized B   Std. Error   Standardized Beta   t       Sig.
1 (Constant)   6.584              8.542                            .771    .461
  No_Adv        .625              1.120        .234                .558    .591
  Ex_Adv       2.139              1.470        .611                1.455   .180
a. Dependent Variable: Sales
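In Summary Output 3 the overall F test is significant (Sig. .006), yet neither regressor is individually significant (Sig. .591 and .180), and the standard error of the No_Adv coefficient grows from .527 to 1.120 once Ex_Adv enters. This pattern is typical of multicollinearity between the two regressors. A minimal sketch, assuming NumPy, computes the two diagnostics named in the Summary: the variance inflation factor $VIF_j = 1/(1 - R_j^2)$, where $R_j^2$ is the $R^2$ from regressing regressor $j$ on the other regressors, and the eigenvalues of the regressors' correlation matrix. The helper name `vif` is hypothetical.

```python
import numpy as np


def vif(columns):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the other columns."""
    Z = np.column_stack([np.asarray(c, dtype=float) for c in columns])
    n, p = Z.shape
    out = []
    for j in range(p):
        y = Z[:, j]
        X = np.column_stack([np.ones(n), np.delete(Z, j, axis=1)])   # intercept + others
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        resid = y - X @ coef
        r2_j = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        out.append(1.0 / (1.0 - r2_j))
    return out


no_adv = [12, 11, 9, 7, 12, 8, 6, 13, 8, 6, 8, 10]
ex_adv = [13.9, 12, 9.3, 9.7, 12.3, 11.4, 9.3, 14.3, 10.2, 8.4, 11.2, 11.1]

print("VIF:", np.round(vif([no_adv, ex_adv]), 2))
corr = np.corrcoef(no_adv, ex_adv)                 # 2 x 2 correlation matrix
print("r =", round(corr[0, 1], 3), "eigenvalues:", np.round(np.linalg.eigvalsh(corr), 3))
```

With only two regressors both VIFs equal $1/(1 - r^2)$, where $r$ is their correlation. Here $r$ is about 0.89, which gives a VIF of about 5, and the eigenvalues of the correlation matrix are $1 \pm r$, so the smaller one is close to zero.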


Qualitative Independent Variables

Johnson Filtration, Inc., provides maintenance service for water filtration systems throughout southern Florida.

To estimate the service time and the service cost, the managers want to predict the repair time necessary for each maintenance request.

Repair time is believed to be related to two factors:
- Number of months since the last maintenance service
- Type of repair problem (mechanical or electrical)


Data for a sample of 10 service calls are given:

Service Call   Months Since Last Service   Type of Repair   Repair Time in Hours
 1             2                           electrical       2.9
 2             6                           mechanical       3.0
 3             8                           electrical       4.8
 4             3                           mechanical       1.8
 5             2                           electrical       2.9
 6             7                           electrical       4.9
 7             9                           mechanical       4.2
 8             8                           mechanical       4.8
 9             4                           electrical       4.4
10             6                           electrical       4.5

Let Y denote the repair time and X1 denote the number of months since the last maintenance service. The regression model that uses X1 only to regress Y is

$Y = \beta_0 + \beta_1 X_1 + \varepsilon$


Using the least squares method, we fitted the model as

$\hat{Y} = 2.1473 + 0.3041 X_1$, with $R^2 = 0.534$.

At the 5% level of significance, we reject
- $H_0: \beta_0 = 0$ (using the t test)
- $H_0: \beta_1 = 0$ (using the t and F tests)

X1 alone explains 53.4% of the variability in repair time.

To introduce the type of repair into the model, we define a dummy variable given as

$X_2 = \begin{cases} 0, & \text{if the type of repair is mechanical} \\ 1, & \text{if the type of repair is electrical} \end{cases}$

The regression model that uses X1 and X2 to regress Y is

$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \varepsilon$

Is the new model improved?
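One way to explore this question is to fit both models to the 10 service calls and compare $R^2$ and adjusted $R^2$. A minimal sketch, assuming NumPy; `fit` is a hypothetical helper, and X2 is coded as in the dummy-variable definition (1 = electrical, 0 = mechanical).

```python
import numpy as np

# Johnson Filtration data: months since last service (X1), repair type, repair time in hours (Y)
months = [2, 6, 8, 3, 2, 7, 9, 8, 4, 6]
repair_type = ["electrical", "mechanical", "electrical", "mechanical", "electrical",
               "electrical", "mechanical", "mechanical", "electrical", "electrical"]
hours = np.array([2.9, 3.0, 4.8, 1.8, 2.9, 4.9, 4.2, 4.8, 4.4, 4.5])

# Dummy variable: X2 = 1 if the repair is electrical, 0 if it is mechanical
x2 = [1.0 if t == "electrical" else 0.0 for t in repair_type]


def fit(y, *regressors):
    """OLS with an intercept; returns (b, R^2, adjusted R^2)."""
    n, k = len(y), len(regressors)
    X = np.column_stack([np.ones(n)] + [np.asarray(r, dtype=float) for r in regressors])
    b = np.linalg.solve(X.T @ X, X.T @ y)          # b = (X'X)^{-1} X'y
    sse = float(np.sum((y - X @ b) ** 2))
    sst = float(np.sum((y - y.mean()) ** 2))
    r2 = 1 - sse / sst
    adj_r2 = 1 - (sse / (n - k - 1)) / (sst / (n - 1))
    return b, r2, adj_r2


b1, r2_1, adj_1 = fit(hours, months)           # Y on X1 only
b2, r2_2, adj_2 = fit(hours, months, x2)       # Y on X1 and the dummy X2
print("X1 only: b =", np.round(b1, 4), "R2 =", round(r2_1, 3), "adj R2 =", round(adj_1, 3))
print("X1 + X2: b =", np.round(b2, 4), "R2 =", round(r2_2, 3), "adj R2 =", round(adj_2, 3))
```

The first line reproduces $\hat{Y} = 2.1473 + 0.3041 X_1$ with $R^2 \approx 0.534$. For the second model our own calculation (not taken from the slides) gives roughly $\hat{Y} = 0.9305 + 0.3876 X_1 + 1.2627 X_2$, with $R^2 \approx 0.859$, and adjusted $R^2$ rises from about 0.476 to about 0.819, which suggests that adding the repair type improves the fit. The coefficient of X2 estimates the extra repair time of an electrical repair compared with a mechanical one with the same X1.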


Summary

- Multiple linear regression model: $Y = X\beta + \varepsilon$
- The least squares estimate of $\beta$ is given by $b = (X'X)^{-1}X'Y$
- $R^2$ and adjusted $R^2$
- Using ANOVA (F test), we examine whether all the slope coefficients $\beta_1, \dots, \beta_k$ are zero or not.
- A t test is conducted for each regressor separately; it examines whether the $\beta$ corresponding to that regressor is zero or not.
- Problem of multicollinearity: VIF, eigenvalues
- Dummy variables
- Examining the assumptions: common variance, independence, normality
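For reference, the quantities listed above can be written out for a model with n observations and k regressors plus an intercept. These are the standard textbook formulas, given here as a worked summary rather than copied from the slides; $R_j^2$ denotes the $R^2$ from regressing $X_j$ on the other regressors, and the last line checks the formulas against Summary Output 3 (n = 12, k = 2).

```latex
\begin{aligned}
b &= (X'X)^{-1}X'Y, \qquad SST = SSR + SSE,\\
R^2 &= \frac{SSR}{SST}, \qquad
\bar{R}^2 = 1 - \frac{SSE/(n-k-1)}{SST/(n-1)},\\
F &= \frac{SSR/k}{SSE/(n-k-1)} \sim F_{k,\,n-k-1}
   \quad \text{under } H_0:\ \beta_1 = \dots = \beta_k = 0,\\
t_j &= \frac{b_j}{\sqrt{MSE\,[(X'X)^{-1}]_{jj}}} \sim t_{n-k-1}
   \quad \text{under } H_0:\ \beta_j = 0,\\
VIF_j &= \frac{1}{1 - R_j^2},\\[4pt]
\text{Output 3:}\quad
F &= \frac{309.986/2}{143.201/9} = \frac{154.993}{15.911} \approx 9.741,
\qquad
\bar{R}^2 = 1 - \frac{15.911}{453.187/11} \approx 0.614.
\end{aligned}
```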

Gaurav Garg (IIMLucknow)

Вам также может понравиться

- Comparative Statics and Derivatives ExplainedДокумент20 страницComparative Statics and Derivatives Explainedawa_caemОценок пока нет

- Mathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsОт EverandMathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsОценок пока нет

- Statistics Final Exam Review PDFДокумент27 страницStatistics Final Exam Review PDFEugine VerzonОценок пока нет

- Question - Bank - Biostatistics (2017!02!03 02-43-30 UTC)Документ53 страницыQuestion - Bank - Biostatistics (2017!02!03 02-43-30 UTC)Waqas Qureshi100% (4)

- Transformation of Axes (Geometry) Mathematics Question BankОт EverandTransformation of Axes (Geometry) Mathematics Question BankРейтинг: 3 из 5 звезд3/5 (1)

- AE 321 - Solution of Homework #5: (5×5 25 POINTS)Документ9 страницAE 321 - Solution of Homework #5: (5×5 25 POINTS)Arthur DingОценок пока нет

- AncovaДокумент21 страницаAncovaAgus IndrawanОценок пока нет

- Reyem Affiar Case StudyДокумент13 страницReyem Affiar Case Studymarobi12Оценок пока нет

- Linear Regression Analysis ExplainedДокумент96 страницLinear Regression Analysis ExplainedPushp ToshniwalОценок пока нет

- Linear Regression Analysis: Predicting Lung Capacity from Cigarette SmokingДокумент16 страницLinear Regression Analysis: Predicting Lung Capacity from Cigarette SmokingsumitОценок пока нет

- Correlation and Regression: Smoking and Lung CapacityДокумент7 страницCorrelation and Regression: Smoking and Lung CapacitymonuagarОценок пока нет

- Unit 2.3Документ12 страницUnit 2.3Laxman Naidu NОценок пока нет

- Linear Regression Analysis: Gaurav Garg (IIM Lucknow)Документ96 страницLinear Regression Analysis: Gaurav Garg (IIM Lucknow)Sakshi JainОценок пока нет

- Quiz 2bДокумент4 страницыQuiz 2bAhmed HyderОценок пока нет

- Discrete Random Variables and Probability DistributionsДокумент36 страницDiscrete Random Variables and Probability DistributionskashishnagpalОценок пока нет

- Simple Linear Regression and Correlation - Class ExampleДокумент18 страницSimple Linear Regression and Correlation - Class ExampleNokubonga RadebeОценок пока нет

- Rolle's Theorem:: Differential Calculus - One VariableДокумент4 страницыRolle's Theorem:: Differential Calculus - One VariableRohit RaoОценок пока нет

- Chap 1 Preliminary Concepts: Nkim@ufl - EduДокумент20 страницChap 1 Preliminary Concepts: Nkim@ufl - Edudozio100% (1)

- Correlation and Regression AnalysisДокумент23 страницыCorrelation and Regression AnalysisMichael EdwardsОценок пока нет

- Corr Reg UpdatedДокумент34 страницыCorr Reg UpdatedMugilan KumaresanОценок пока нет

- Special Matrices and Gauss-SiedelДокумент21 страницаSpecial Matrices and Gauss-Siedelfastidious_5Оценок пока нет

- Regression Basics: Predicting A DV With A Single IVДокумент20 страницRegression Basics: Predicting A DV With A Single IVAmandeep_Saluj_9509Оценок пока нет

- RegressionДокумент16 страницRegressionchinusccОценок пока нет

- Multiple Regression Analysis, The Problem of EstimationДокумент53 страницыMultiple Regression Analysis, The Problem of Estimationwhoosh2008Оценок пока нет

- Discrete Mathematics I: SolutionДокумент4 страницыDiscrete Mathematics I: Solutionryuu.ducatОценок пока нет

- 3 - Variational Principles - Annotated Notes Sept 29 and Oct 4Документ15 страниц3 - Variational Principles - Annotated Notes Sept 29 and Oct 4Luay AlmniniОценок пока нет

- 03 - Mich - Solutions To Problem Set 1 - Ao319Документ13 страниц03 - Mich - Solutions To Problem Set 1 - Ao319albertwing1010Оценок пока нет

- Domodar N. Gujarati: Chapter # 8: Multiple Regression AnalysisДокумент41 страницаDomodar N. Gujarati: Chapter # 8: Multiple Regression AnalysisaraОценок пока нет

- Session 18Документ15 страницSession 18Dipak Kumar PatelОценок пока нет

- Linear Regression Analysis in RДокумент14 страницLinear Regression Analysis in Raftab20Оценок пока нет

- 2D TRACKING KALMAN FILTER TUTORIALДокумент8 страниц2D TRACKING KALMAN FILTER TUTORIALpankaj_dliОценок пока нет

- Chapter # 6: Multiple Regression Analysis: The Problem of EstimationДокумент43 страницыChapter # 6: Multiple Regression Analysis: The Problem of EstimationRas DawitОценок пока нет

- B.A. (Hons) Business Economics DegreeДокумент10 страницB.A. (Hons) Business Economics DegreeMadhav ।Оценок пока нет

- Nonlinear Programming UnconstrainedДокумент182 страницыNonlinear Programming UnconstrainedkeerthanavijayaОценок пока нет

- DeretДокумент88 страницDeretMuhammad WildanОценок пока нет

- Tutorial Rotors - SolutionДокумент25 страницTutorial Rotors - SolutionMuntoiaОценок пока нет

- Engineering Apps: Session 4-Introduction To DAДокумент37 страницEngineering Apps: Session 4-Introduction To DAMohamed Hesham GadallahОценок пока нет

- Three Example Lagrange Multiplier Problems PDFДокумент4 страницыThree Example Lagrange Multiplier Problems PDFcarolinaОценок пока нет

- Reference Answers For Assignment4Документ4 страницыReference Answers For Assignment4creation portalОценок пока нет

- Mit6 041f10 l11Документ3 страницыMit6 041f10 l11api-246008426Оценок пока нет

- Chapter 2 Answer James Stewart 5th EditionДокумент86 страницChapter 2 Answer James Stewart 5th Edition654501Оценок пока нет

- ME-223 Assignment 7 Solutions: SolutionДокумент10 страницME-223 Assignment 7 Solutions: SolutionShivangОценок пока нет

- TM Xime PGDM QT IДокумент76 страницTM Xime PGDM QT IRiya SinghОценок пока нет

- PHD Course Lectures: OptimizationДокумент25 страницPHD Course Lectures: OptimizationGafeer FableОценок пока нет

- Problem Set 2 - AnswersДокумент5 страницProblem Set 2 - Answersdxd032Оценок пока нет

- ENGR 6951: Automatic Control Engineering Supplementary Notes On Stability AnalysisДокумент5 страницENGR 6951: Automatic Control Engineering Supplementary Notes On Stability AnalysisTharakaKaushalyaОценок пока нет

- Bài Tập Ước Lượng C12346Документ55 страницBài Tập Ước Lượng C12346Nguyễn Viết DươngОценок пока нет

- Circuit Analysis Summary: CS231 Boolean Algebra 1Документ14 страницCircuit Analysis Summary: CS231 Boolean Algebra 1huyvt01Оценок пока нет

- A matrisinin özvektörleri ve benzerlik dönüşümüДокумент3 страницыA matrisinin özvektörleri ve benzerlik dönüşümüEsmael AdemОценок пока нет

- Week 7Документ7 страницWeek 7Hasnain DanishОценок пока нет

- Chapter 2Документ30 страницChapter 2MengHan LeeОценок пока нет

- STAT - Lec.3 - Correlation and RegressionДокумент8 страницSTAT - Lec.3 - Correlation and RegressionSalma HazemОценок пока нет

- TensorsДокумент46 страницTensorsfoufou200350% (2)

- Principle of Least SquareДокумент6 страницPrinciple of Least SquareAiman imamОценок пока нет

- Finals (STS)Документ120 страницFinals (STS)Johnleo AtienzaОценок пока нет

- Probability Theory and Mathematical Statistics: Homework 2, Vitaliy PozdnyakovДокумент13 страницProbability Theory and Mathematical Statistics: Homework 2, Vitaliy PozdnyakovGarakhan TalibovОценок пока нет

- Correlation and Regression NotesДокумент14 страницCorrelation and Regression NotesSwapnil OzaОценок пока нет

- Chapter 4: Probability Distributions: Discrete Random Variables Continuous Random VariablesДокумент64 страницыChapter 4: Probability Distributions: Discrete Random Variables Continuous Random VariablesDaОценок пока нет

- Herimite Shape Function For BeamДокумент29 страницHerimite Shape Function For BeamPisey KeoОценок пока нет

- Motilal Nehru National Institute of Technology Civil Engineering Department Least Square Regression Curve FittingДокумент44 страницыMotilal Nehru National Institute of Technology Civil Engineering Department Least Square Regression Curve Fittingvarunsingh214761Оценок пока нет

- 15.1 Dynamic OptimizationДокумент32 страницы15.1 Dynamic OptimizationDaniel Lee Eisenberg JacobsОценок пока нет

- Updated Journal List 2015Документ5 страницUpdated Journal List 2015Prateek SharmaОценок пока нет

- Annexure BДокумент1 страницаAnnexure BDEEPAK2502Оценок пока нет

- SSRN Id1458478Документ49 страницSSRN Id1458478Prateek SharmaОценок пока нет

- Water Privatization Reduces Child Mortality by 8Документ39 страницWater Privatization Reduces Child Mortality by 8Prateek SharmaОценок пока нет

- Journal of Banking & Finance: Yufeng Han, Ting Hu, Jian YangДокумент21 страницаJournal of Banking & Finance: Yufeng Han, Ting Hu, Jian YangPrateek SharmaОценок пока нет

- CFT Outline Avk 2013Документ6 страницCFT Outline Avk 2013Prateek SharmaОценок пока нет

- Cash BudgetДокумент11 страницCash BudgetPrateek SharmaОценок пока нет

- WMC Abstract Poster - 9.12Документ27 страницWMC Abstract Poster - 9.12Prateek SharmaОценок пока нет

- Acme ExcelДокумент3 страницыAcme ExcelPrateek SharmaОценок пока нет

- Alhadi 2016Документ21 страницаAlhadi 2016Prateek SharmaОценок пока нет

- AssignmentДокумент1 страницаAssignmentPrateek SharmaОценок пока нет

- Cash DIscountsДокумент4 страницыCash DIscountsPrateek SharmaОценок пока нет

- List of FiguresДокумент2 страницыList of FiguresPrateek SharmaОценок пока нет

- Tables - All LatestДокумент16 страницTables - All LatestPrateek SharmaОценок пока нет

- Companies Act 2013 Key Highlights and Analysis in MalaysiaДокумент52 страницыCompanies Act 2013 Key Highlights and Analysis in MalaysiatruthosisОценок пока нет

- Realized Garch: A Joint Model For Returns and Realized Measures of VolatilityДокумент30 страницRealized Garch: A Joint Model For Returns and Realized Measures of VolatilitymshchetkОценок пока нет

- Labour Quality in Indian Manufacturing A State Level AnalysisДокумент50 страницLabour Quality in Indian Manufacturing A State Level AnalysisPrateek SharmaОценок пока нет

- Difference Between Private and Public Lts CompaniesДокумент8 страницDifference Between Private and Public Lts CompaniesPrateek SharmaОценок пока нет

- Filet NiftДокумент513 страницFilet NiftPrateek SharmaОценок пока нет

- Gene RealizedДокумент43 страницыGene RealizedPrateek SharmaОценок пока нет

- Ebes Lisbon Abstract Paper4Документ2 страницыEbes Lisbon Abstract Paper4Prateek SharmaОценок пока нет

- Cut Off IES 2014Документ1 страницаCut Off IES 2014ShanKar PadmanaBhanОценок пока нет

- Employ TrenzДокумент10 страницEmploy Trenzabhi1211pОценок пока нет

- BMand GBMdocДокумент7 страницBMand GBMdocPrateek SharmaОценок пока нет

- Measure IndiaДокумент9 страницMeasure IndiaPrateek SharmaОценок пока нет

- Globalisation and Child Labour Evidence From IndiaДокумент17 страницGlobalisation and Child Labour Evidence From IndiaPrateek SharmaОценок пока нет

- Final India TPR Report 3Документ14 страницFinal India TPR Report 3adityatnnlsОценок пока нет

- Itr 62 Form 15 GДокумент2 страницыItr 62 Form 15 GAccounting & TaxationОценок пока нет

- PGP-I Term I Mid Term Exam Invigilation Duty Chart July 28-31, 2014Документ1 страницаPGP-I Term I Mid Term Exam Invigilation Duty Chart July 28-31, 2014Prateek SharmaОценок пока нет

- StatisticsДокумент8 страницStatisticsMarinel Mae ChicaОценок пока нет

- Chap10 MasДокумент58 страницChap10 MasPhan Huynh Minh Tri (K15 HCM)Оценок пока нет

- ISLR Chap 7 Shaheryar-MutahiraДокумент15 страницISLR Chap 7 Shaheryar-MutahiraShaheryar ZahurОценок пока нет

- Statistik SoalДокумент3 страницыStatistik SoaljoslinmtggmailcomОценок пока нет

- Excel For Statistical Data AnalysisДокумент42 страницыExcel For Statistical Data Analysispbp2956100% (1)

- 2 Forecast AccuracyДокумент26 страниц2 Forecast Accuracynatalie clyde matesОценок пока нет

- 2.2 Hyphothesis Testing (Continuous)Документ35 страниц2.2 Hyphothesis Testing (Continuous)NS-Clean by SSCОценок пока нет

- Jigsaw Academy-Foundation Course Topic DetailsДокумент10 страницJigsaw Academy-Foundation Course Topic DetailsAtul SinghОценок пока нет

- Simple Linear Regression: Y XI. XI XДокумент25 страницSimple Linear Regression: Y XI. XI XyibungoОценок пока нет

- Negative Binomial Control Limits For Count Data With Extra-Poisson VariationДокумент6 страницNegative Binomial Control Limits For Count Data With Extra-Poisson VariationKraken UrОценок пока нет

- Analysis of Service, Price and Quality Products To Customer SatisfactionДокумент6 страницAnalysis of Service, Price and Quality Products To Customer SatisfactionInternational Journal of Innovative Science and Research TechnologyОценок пока нет

- Toledo GasДокумент5 страницToledo GasRongor10Оценок пока нет

- QT Past Exam Question Papers. Topic by TopicДокумент25 страницQT Past Exam Question Papers. Topic by TopicalbertОценок пока нет

- Probablity and Statistics - Education Related DistributionsДокумент10 страницProbablity and Statistics - Education Related DistributionsShakthiguru RadhaKrishnanОценок пока нет

- Chi Squared TestДокумент11 страницChi Squared TestJoyce OrtizОценок пока нет

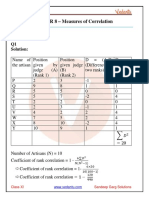

- Sandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationДокумент5 страницSandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationRiddhiman JainОценок пока нет

- Introduction To SamplingДокумент10 страницIntroduction To Samplingadiba ridahОценок пока нет

- Ch04 Sect01Документ47 страницCh04 Sect01yuenhueiОценок пока нет

- Statistics For Business and EconomicsДокумент13 страницStatistics For Business and Economicsakhil kvОценок пока нет

- Cohen 1992Документ5 страницCohen 1992Adriana Turdean VesaОценок пока нет

- Stat For Fin CH 5Документ9 страницStat For Fin CH 5Wonde Biru100% (1)

- Cohen's Conventions For Small, Medium, and Large Effects: Difference Between Two MeansДокумент2 страницыCohen's Conventions For Small, Medium, and Large Effects: Difference Between Two MeansJonathan Delos SantosОценок пока нет

- Anova in Excel - Easy Excel TutorialДокумент4 страницыAnova in Excel - Easy Excel TutorialJamalodeen MohammadОценок пока нет

- Key Ingredients for Inferential StatisticsДокумент65 страницKey Ingredients for Inferential StatisticsJhunar John TauyОценок пока нет

- Logistic Regression AssignmentДокумент20 страницLogistic Regression AssignmentKiran FerozОценок пока нет

- Ijsrmjournal, Study Habits and Their Effects With The Academic Performance of Bachelor of Science in Radiologic Technology StudentsДокумент17 страницIjsrmjournal, Study Habits and Their Effects With The Academic Performance of Bachelor of Science in Radiologic Technology StudentsVivek SinghОценок пока нет