Volume 3, No. 11, November 2012
Journal of Global Research in Computer Science
RESEARCH PAPER
Available Online at www.jgrcs.info

PERFORMANCE EVALUATION OF MULTIVIEWPOINT-BASED SIMILARITY MEASURE FOR DATA CLUSTERING

K.A.V.L.Prasanna*1, Mr. Vasantha Kumar*2

*1 Post Graduate Student, Avanthi Institute of Engineering and Technology, Visakhapatnam, chpraasanna48@yahoo.com
*2 HOD, Dept of C.S.E, Avanthi Institute of Engineering and Technology, Visakhapatnam

Abstract: All clustering methods have to assume some cluster relationship among the data objects to which they are applied. Similarity between a pair of objects can be defined either explicitly or implicitly. In this paper we introduce a novel multiviewpoint-based similarity measure and two related clustering methods. The main difference between our concept and a traditional dissimilarity/similarity measure is that the latter uses only a single viewpoint as its base, whereas Clustering with Multiviewpoint-Based Similarity Measure uses many different viewpoints, which are objects assumed not to be in the same cluster as the two objects being measured. By using multiple viewpoints, a more informative assessment of similarity can be achieved. Theoretical analysis and an empirical study are carried out to support this claim. Based on this new measure, two criterion functions are proposed for document clustering. We compare them with several well-known clustering algorithms that use other popular similarity measures on different collections of documents in order to verify the improvement offered by our scheme.

Index terms: Data mining, text mining, similarity measure, multi-viewpoint similarity measure, clustering methods.

INTRODUCTION
Clustering is the process of grouping a set of physical or abstract objects into classes of similar objects, and it is one of the most interesting concepts in data mining. A cluster is a collection of data objects that are similar to one another. The purpose of clustering is to find fundamental structures in data and organize them into meaningful subgroups for further analysis. Many clustering algorithms are published every year, proposed for different research fields and developed using various techniques and approaches. Nevertheless, according to a recent study, k-means remains one of the top data mining algorithms, and for many practitioners it is the favorite algorithm in their fields. It does have a few basic drawbacks when clusters are of differing sizes, densities, and non-globular shapes. Despite these drawbacks, its simplicity, understandability, and scalability are the main reasons for its popularity. An algorithm with adequate performance and usability in most application scenarios can be preferable to one with better performance in some cases but limited usage due to high complexity. While offering reasonable results, k-means is fast and easy to combine with other methods in larger systems.

A common approach to the clustering problem is to treat it as an optimization process. An optimal partition is found by optimizing a particular function of similarity (or distance) among data. There is an implicit assumption that the true intrinsic structure of the data can be correctly described by the similarity formula defined and embedded in the clustering criterion function. Hence, the effectiveness of clustering algorithms under this approach depends on the appropriateness of the similarity measure to the data at hand. For instance, the original k-means has a sum-of-squared-error objective function that uses Euclidean distance. In a very sparse and high-dimensional domain like text documents, spherical k-means, which uses cosine similarity (CS) instead of Euclidean distance as the measure, is deemed to be more suitable.

Banerjee et al. showed that Euclidean distance is one particular form of a class of distance measures called Bregman divergences. They proposed the Bregman hard clustering algorithm, in which any of the Bregman divergences can be applied. Kullback-Leibler divergence is a special case of Bregman divergence that was said to give good clustering results on document data sets, and it is a good example of a nonsymmetric measure. Also on the topic of capturing dissimilarity in data, Pekalska et al. found that the discriminative power of some distance measures could increase when their non-Euclidean and nonmetric attributes were increased. They concluded that non-Euclidean and nonmetric measures could be informative for statistical learning of data. Pelillo even argued that the symmetry and non-negativity assumptions of similarity measures are actually a limitation of current state-of-the-art clustering approaches. At the same time, clustering still requires more robust dissimilarity or similarity measures; recent works such as [8] illustrate this need.

The work in this paper is motivated by investigations from the above and similar research findings. It appears to us that the nature of the similarity measure plays a very important role in the success or failure of a clustering method. Our first objective is to derive a novel method for measuring similarity between data objects in sparse and high-dimensional domains, particularly text documents. From the proposed similarity measure, we then formulate new clustering criterion functions and introduce their respective clustering algorithms, which are fast and scalable like k-means, but are also capable of providing high-quality and consistent performance.

JGRCS 2010, All Rights Reserved


K.A.V.L.Prasanna et al, Journal of Global Research in Computer Science, 3 (11), November 2012, 21-26

BACKGROUND WORK
Document clustering is one of the text mining techniques and has been around since the inception of the text mining domain. It is a process of grouping objects into categories or groups in such a way that intra-cluster object similarity and inter-cluster dissimilarity are maximized. Here an object means a document, and a term refers to a word in the document. Each document considered for clustering is represented as an m-dimensional vector d, where m represents the total number of terms present in the given document. Document vectors are the result of a weighting scheme such as TF-IDF (Term Frequency-Inverse Document Frequency).

Many approaches have been proposed for document clustering, including information-theoretic co-clustering, non-negative matrix factorization, and probabilistic model-based methods. However, these approaches do not use a specific measure for finding document similarity. In this paper we consider methods that specifically use a certain measure. From the literature, one of the popular measures is Euclidean distance:

$\mathrm{Dist}(d_i, d_j) = \lVert d_i - d_j \rVert$ (1)

K-means is one of the most popular clustering algorithms and is in the list of the top 10 data mining algorithms. Due to its simplicity and ease of use it is still widely used in the mining domain. The k-means algorithm uses the Euclidean distance measure; its main purpose is to minimize the Euclidean distance between the objects in each cluster and the centroid of that cluster. With $C_r$ denoting the centroid of cluster $S_r$, the objective is:

$\min \sum_{r=1}^{k} \sum_{d_i \in S_r} \lVert d_i - C_r \rVert^2$ (2)

In the text mining domain, cosine similarity is also a widely used measure for finding document similarity, especially for high-dimensional and sparse document clustering. It is also used in a variant of k-means known as spherical k-means, which maximizes the cosine similarity between each cluster's centroid and the documents in the cluster. The difference between the k-means that uses Euclidean distance and the k-means that uses cosine similarity is that the former focuses on vector magnitudes while the latter focuses on vector directions.

Another popular approach is graph partitioning, in which the document corpus is considered as a graph. The min-max cut algorithm makes use of this approach and focuses on minimizing the criterion function (3). Other graph partitioning methods, including Normalized Cut and Average Weight, have been used successfully for document clustering. They used pairwise and cosine similarity scores for document clustering. An analysis of criterion functions for document clustering has also been made. The CLUTO software package implements another method of document clustering based on graph partitioning: it first builds a nearest-neighbor graph and then makes clusters. In this approach, for given non-unit document vectors $u_i$ and $u_j$, the extended Jaccard coefficient is:

$\mathrm{Sim}_{eJacc}(u_i, u_j) = \frac{u_i^t u_j}{\lVert u_i \rVert^2 + \lVert u_j \rVert^2 - u_i^t u_j}$ (4)
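The three measures discussed in this section can be compared numerically. The following is a minimal sketch in plain Python, not part of the original paper; the vectors `d1`, `d2`, and `d3` are hypothetical term-weight vectors chosen only for illustration, and the extended Jaccard coefficient is written in its standard form.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def euclidean_distance(u, v):
    """Euclidean distance: sensitive to vector magnitude."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_similarity(u, v):
    """Cosine similarity: depends only on vector direction."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def extended_jaccard(u, v):
    """Extended Jaccard coefficient: uses both direction and magnitude."""
    uv = dot(u, v)
    return uv / (dot(u, u) + dot(v, v) - uv)


# Hypothetical vectors: d2 has the same direction as d1 but twice the length;
# d3 points in a different direction.
d1 = [1.0, 2.0, 0.0]
d2 = [2.0, 4.0, 0.0]
d3 = [0.0, 1.0, 3.0]

for name, (u, v) in {"d1,d2": (d1, d2), "d1,d3": (d1, d3)}.items():
    print(name,
          round(euclidean_distance(u, v), 4),
          round(cosine_similarity(u, v), 4),
          round(extended_jaccard(u, v), 4))
```

For `d1` and `d2` the cosine similarity is 1 because only the direction is compared, while the Euclidean distance and the extended Jaccard coefficient both register the difference in length.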

Unlike cosine similarity and Euclidean distance, the Jaccard coefficient takes both direction and magnitude into account. When the documents in clusters are represented as unit vectors, the approach is very similar to cosine similarity. Measures such as cosine, Euclidean, Jaccard, and Pearson correlation have been compared, with the conclusion that Euclidean and Jaccard are best for web document clustering. In [1] and another study, research has been done on categorical data; both selected related attributes for a given subject and calculated the distance between two values. Document similarities can also be found using concept-based and phrase-based approaches. In [1] a tree-similarity measure is used conceptually, while another work proposed a phrase-based approach. Both used the Hierarchical Agglomerative Clustering algorithm to perform clustering. The drawback of these approaches is their very high computational complexity. For XML documents, measures have also been proposed to assess structural similarity [5]. However, they are different from normal text document clustering.

MULTI-VIEWPOINT BASED SIMILARITY
Our main approach to finding similarity between documents or objects while performing clustering is multi-viewpoint-based similarity. It makes use of more than one point of reference, as opposed to existing algorithms used for clustering text


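documents. As per our approach, the similarity between two documents $d_i$ and $d_j$ in the same cluster $S_r$ is calculated as:

$\mathrm{Sim}(d_i, d_j)\big|_{d_i, d_j \in S_r} = \frac{1}{n - n_r} \sum_{d_h \in S \setminus S_r} \mathrm{Sim}(d_i - d_h, d_j - d_h)$ (5)

where $n$ is the total number of documents and $n_r$ is the number of documents in cluster $S_r$.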
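Equation (5) can be evaluated directly on a small example. The sketch below is illustrative only and makes two assumptions: Sim of the two difference vectors is taken to be their dot product (the cosine of the angle seen from the viewpoint times the two Euclidean lengths), and all document vectors have unit length. Under the unit-length assumption the sum expands into plain dot products, which the second function uses. The vectors and function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def mvs_direct(di, dj, viewpoints):
    """Average of (di - dh) . (dj - dh) over all viewpoints dh outside the cluster."""
    total = 0.0
    for dh in viewpoints:
        diff_i = [a - h for a, h in zip(di, dh)]
        diff_j = [b - h for b, h in zip(dj, dh)]
        total += dot(diff_i, diff_j)
    return total / len(viewpoints)


def mvs_expanded(di, dj, viewpoints):
    """Same value after expanding the dot product; valid only for unit-length dh."""
    n_out = len(viewpoints)
    composite = [sum(column) for column in zip(*viewpoints)]  # sum of all viewpoints
    return (dot(di, dj)
            - dot(di, composite) / n_out
            - dot(dj, composite) / n_out
            + 1.0)


# Hypothetical data: two documents in one cluster, two viewpoints outside it.
di = normalize([1.0, 1.0, 0.0])
dj = normalize([1.0, 0.5, 0.0])
viewpoints = [normalize([0.0, 0.2, 1.0]), normalize([0.1, 0.0, 1.0])]

print(mvs_direct(di, dj, viewpoints), mvs_expanded(di, dj, viewpoints))
```

The two functions agree up to floating-point error, which is why the expanded form is attractive when many viewpoints are involved: the composite vector is computed once and reused.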

The approach can be described as follows. Consider two points $d_i$ and $d_j$ in cluster $S_r$. The similarity between those two points is viewed from a point $d_h$ that is outside the cluster. This similarity is equal to the cosine of the angle between the two points as seen from $d_h$, multiplied by the Euclidean distances from $d_h$ to the two points. The assumption on which this definition is based is that $d_h$ is not in the same cluster as $d_i$ and $d_j$. When the distances are smaller, the chances are higher that $d_h$ is in fact in the same cluster. Though multiple viewpoints are useful in increasing the accuracy of the similarity measure, some of them may give a negative result. However, this drawback can be ignored provided that plenty of documents are to be clustered.

A series of algorithms is proposed to achieve MVS (Multi-Viewpoint Similarity). The following is a procedure for building the MVS similarity matrix.

a. procedure BUILDMVSMATRIX(A)
b.   for $r \leftarrow 1 : c$ do
c.     $D_{S \setminus S_r} \leftarrow \sum_{d_i \notin S_r} d_i$
d.     $n_{S \setminus S_r} \leftarrow |S \setminus S_r|$
e.   end for
f.   for $i \leftarrow 1 : n$ do
g.     $r \leftarrow$ class of $d_i$
h.     for $j \leftarrow 1 : n$ do
i.       if $d_j \in S_r$ then
j.         $a_{ij} \leftarrow d_i^t d_j - d_i^t \frac{D_{S \setminus S_r}}{n_{S \setminus S_r}} - d_j^t \frac{D_{S \setminus S_r}}{n_{S \setminus S_r}} + 1$
k.       else
l.         $a_{ij} \leftarrow d_i^t d_j - d_i^t \frac{D_{S \setminus S_r} - d_j}{n_{S \setminus S_r} - 1} - d_j^t \frac{D_{S \setminus S_r} - d_j}{n_{S \setminus S_r} - 1} + 1$
m.       end if
n.     end for
o.   end for
p.   return $A = \{a_{ij}\}_{n \times n}$
q. end procedure

Algorithm 3: Procedure for building MVS similarity matrix

From the condition, it is understood that when $d_i$ is considered closer to $d_l$, $d_l$ can still be considered closer to $d_i$ as per MVS. For validation purposes the following algorithm is used.

Require: $0 < percentage \le 1$
a. procedure GETVALIDITY(validity, A, percentage)
b.   for $r \leftarrow 1 : c$ do
c.     $q_r \leftarrow \lfloor percentage \times n_r \rfloor$
d.     if $q_r = 0$ then (percentage too small)
e.       $q_r \leftarrow 1$
f.     end if
g.   end for
h.   for $i \leftarrow 1 : n$ do
i.     $\{a_{iv[1]}, \ldots, a_{iv[n]}\} \leftarrow$ Sort $\{a_{i1}, \ldots, a_{in}\}$
j.     s.t. $a_{iv[1]} \ge a_{iv[2]} \ge \ldots \ge a_{iv[n]}$, $\{v[1], \ldots, v[n]\} \leftarrow$ permute $\{1, \ldots, n\}$
k.     $r \leftarrow$ class of $d_i$
l.     $validity(d_i) \leftarrow \frac{|\{d_{v[1]}, \ldots, d_{v[q_r]}\} \cap S_r|}{q_r}$
m.   end for
n.   $validity \leftarrow \frac{\sum_{i=1}^{n} validity(d_i)}{n}$
o.   return validity
p. end procedure
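The two procedures, building the MVS matrix and computing the validity score, can be sketched in plain Python as follows. This is an illustrative reading of the pseudocode rather than the authors' implementation: it assumes unit-length document vectors, assumes every cluster has at least two documents outside it, and uses hypothetical names (`build_mvs_matrix`, `get_validity`) and toy data.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def build_mvs_matrix(docs, labels):
    """docs: unit-length vectors; labels[i]: cluster of docs[i]. Returns the n x n matrix A."""
    n = len(docs)
    outside_sum, outside_n = {}, {}
    for r in set(labels):
        outside = [d for d, lab in zip(docs, labels) if lab != r]
        if len(outside) < 2:
            raise ValueError("each cluster needs at least two documents outside it")
        outside_sum[r] = [sum(col) for col in zip(*outside)]  # composite vector
        outside_n[r] = len(outside)
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        r = labels[i]
        for j in range(n):
            D, m = outside_sum[r], outside_n[r]
            if labels[j] != r:
                # d_j lies outside S_r, so it is removed from the set of viewpoints.
                D = [x - y for x, y in zip(D, docs[j])]
                m -= 1
            A[i][j] = (dot(docs[i], docs[j])
                       - dot(docs[i], D) / m
                       - dot(docs[j], D) / m
                       + 1.0)
    return A


def get_validity(A, labels, percentage):
    """Average fraction of each row's top-q_r entries that share the row's class."""
    if not 0 < percentage <= 1:
        raise ValueError("percentage must be in (0, 1]")
    n = len(A)
    total = 0.0
    for i in range(n):
        r = labels[i]
        q = max(1, math.floor(percentage * labels.count(r)))
        ranked = sorted(range(n), key=lambda j: A[i][j], reverse=True)
        total += sum(1 for j in ranked[:q] if labels[j] == r) / q
    return total / n


# Toy data: two well-separated groups of unit vectors (hypothetical).
docs = [normalize(v) for v in
        ([1.0, 0.1], [1.0, 0.2], [1.0, 0.0], [0.1, 1.0], [0.2, 1.0], [0.0, 1.0])]
labels = [0, 0, 0, 1, 1, 1]
A = build_mvs_matrix(docs, labels)
score = get_validity(A, labels, 1.0)
print(score)
```

On this toy data every document's most similar entries belong to its own class, so the validity score reaches its maximum value.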


Algorithm 4: Procedure for getting validity score

The final validity is calculated by averaging over all the rows of A, as given in line 14 (step n). The higher the validity score, the more suitable the similarity measure is for clustering.

SYSTEM DESIGN
The system design for finding similarity between documents or objects is as follows:
a. Initialize the weight parameters.
b. Use the EM algorithm to estimate their means and covariances.
c. Group the data into classes by the value of the probability density for each class, and calculate the weight of each class.
d. Repeat the first step until the cluster number reaches the desired number or the largest OLR is smaller than the predefined threshold value. Go to step 3 and output the result.
A distinctive element in this algorithm is the use of the overlap rate (OLR) to measure similarity between clusters.

Figure 4.1 Design Layout

Implementation is the stage of the project in which the theoretical design is turned into a working system. It can therefore be considered the most critical stage in achieving a successful new system and in giving the user confidence that the new system will work and be effective. The implementation stage involves careful planning, investigation of the existing system and its constraints on implementation, design of methods to achieve changeover, and evaluation of changeover methods.

HTML Parser:
a. Parsing is the first step performed when a document enters the process state.
b. Parsing is defined as the separation or identification of meta tags in an HTML document.
c. Here, the raw HTML file is read and parsed through all the nodes in the tree structure.

Cumulative Document:
a. The cumulative document is the sum of all the documents, containing meta tags from all the documents.
b. We find the references (to other pages) in the input base document, read those documents, then find the references in them, and so on.
c. Thus the meta tags are identified in all the documents, starting from the base document.
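The HTML Parser and Cumulative Document steps above can be sketched with Python's standard `html.parser` module. This is only an illustration under stated assumptions: the class name `MetaTagParser` and the sample page are hypothetical, and fetching the referenced pages is left out, so the sketch only collects the meta tags and the outgoing references of a single document.

```python
from html.parser import HTMLParser


class MetaTagParser(HTMLParser):
    """Collects <meta name=... content=...> pairs and <a href=...> references."""

    def __init__(self):
        super().__init__()
        self.meta = {}    # meta tag name -> content
        self.links = []   # references to other pages, in document order

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and "name" in attributes and attributes.get("content") is not None:
            self.meta[attributes["name"].lower()] = attributes["content"]
        elif tag == "a" and attributes.get("href"):
            self.links.append(attributes["href"])


# Hypothetical base document.
SAMPLE_HTML = """<html><head>
<meta name="keywords" content="clustering, similarity">
<meta name="description" content="A page about document clustering">
</head><body><a href="page2.html">next</a></body></html>"""

parser = MetaTagParser()
parser.feed(SAMPLE_HTML)
print(parser.meta)
print(parser.links)
```

A cumulative document could then be built by repeating the same parse on each collected reference and merging the `meta` dictionaries, starting from the base document.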


Document Similarity: The similarity between two documents is found by the cosine-similarity measure.
a. The weights in the cosine similarity are found from the TF-IDF measure between the phrases (meta tags) of the two documents.
b. This is done by computing the term weights involved.
c. TF = C / T
d. IDF = D / DF
e. D: total number of documents
f. DF: number of times each word is found in the entire corpus
g. C: number of times a word appears in each document
h. T: total number of words in the document
i. TFIDF = TF * IDF

Clustering:
a. Clustering is a division of data into groups of similar objects.
b. Representing the data by fewer clusters necessarily loses certain fine details, but achieves simplification.
c. Similar documents are grouped together in a cluster if their cosine distance (one minus cosine similarity) is less than a specified threshold [9].

Proposed architecture: see Figure 4.2.
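The term weighting and thresholding described in the Document Similarity and Clustering lists can be sketched as follows. The sketch follows TF = C / T and IDF = D / DF as listed, with two assumptions that are not in the text: DF is counted as the number of documents containing the term, and the threshold is applied to cosine distance (one minus cosine similarity). The documents and the threshold value are hypothetical.

```python
import math
from collections import Counter


def tf_idf_vectors(documents):
    """documents: list of token lists. Returns (vocabulary, list of TF*IDF vectors)."""
    vocabulary = sorted({term for doc in documents for term in doc})
    n_docs = len(documents)                                    # D
    doc_freq = Counter(term for doc in documents for term in set(doc))  # DF (assumed: documents containing the term)
    vectors = []
    for doc in documents:
        counts = Counter(doc)                                  # C for each term
        total = len(doc)                                       # T
        vectors.append([(counts[t] / total) * (n_docs / doc_freq[t]) for t in vocabulary])
    return vocabulary, vectors


def cosine_similarity(u, v):
    numerator = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return numerator / norm if norm else 0.0


# Hypothetical tokenized documents (for example, extracted meta-tag phrases).
docs = [
    "document clustering similarity measure".split(),
    "clustering similarity measure for text".split(),
    "sql select statement tutorial".split(),
]
vocab, vecs = tf_idf_vectors(docs)

threshold = 0.7  # hypothetical cosine-distance threshold
similar_pairs = [
    (i, j)
    for i in range(len(vecs))
    for j in range(i + 1, len(vecs))
    if 1.0 - cosine_similarity(vecs[i], vecs[j]) < threshold
]
print(similar_pairs)
```

Only the first two documents share terms, so they form the single pair that falls under the threshold; the third document has cosine similarity zero with both.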

Figure 4.2 Architecture

Input design: The input design is the link between the information system and the user. It comprises developing specifications and procedures for data preparation, that is, the steps necessary to put transaction data into a usable form for processing. This can be achieved by instructing the computer to read data from a written or printed document, or by having people key the data directly into the system. The design of input focuses on controlling the amount of input required, controlling errors, avoiding delay, avoiding extra steps, and keeping the process simple. The input is designed so that it provides security and ease of use while retaining privacy. Input design considered the following things:
a. What data should be given as input?
b. How should the data be arranged or coded?
c. The dialog to guide the operating personnel in providing input.
d. Methods for preparing input validations and steps to follow when errors occur.

Objectives:
a. Input design is the process of converting a user-oriented description of the input into a computer-based system. This design is important to avoid errors in the data input process and to show the management the correct direction for getting correct information from the computerized system.
b. It is achieved by creating user-friendly screens for data entry to handle large volumes of data. The goal of designing input is to make data entry easier and free from errors. The data entry screen is designed in such a way that all data manipulations can be performed. It also provides record viewing facilities.
c. When data is entered, it is checked for validity. Data can be entered with the help of screens. Appropriate messages are provided as and when needed so that the user is not left in a maze at any instant. Thus the objective of input design is to create an input layout that is easy to follow.

Output design: A quality output is one that meets the requirements of the end user and presents the information clearly. In any system, the results of processing are communicated to the users and to other systems through outputs. In output design it is determined how the information is to be displayed for immediate need, and also as hard copy output. It is the most important and direct source of information to the user. Efficient and intelligent output design improves the system's relationship with the user and helps decision-making.
a. Designing computer output should proceed in an organized, well thought out manner; the right output must be developed while ensuring that each output element is designed so that people will find the system easy and effective to use. When analysts design computer output, they should identify the specific output that is needed to meet the requirements.
b. Select methods for presenting information.
c. Create documents, reports, or other formats that contain information produced by the system.


The output form of an information system should accomplish one or more of the following objectives:
a. Convey information about past activities, current status, or projections of the future.
b. Signal important events, opportunities, problems, or warnings.
c. Trigger an action.
d. Confirm an action.

IMPLEMENTATION
A use case is a set of scenarios describing an interaction between a user and a system. A use case diagram displays


the relationship among actors and use cases. The two main components of a use case diagram are use cases and actors. An actor represents a user or another system that will interact with the system being modeled. A use case is an external view of the system that represents some action the user might perform in order to complete a task.

Figure 5.1: Use case diagram (use cases: select path, process, histogram, clusters, similarity, result)

Class diagrams are widely used to describe the types of objects in a system and their relationships. Class diagrams model class structure and contents using design elements such as classes, packages, and objects. Class diagrams describe three different perspectives when designing a system: conceptual, specification, and implementation. These perspectives become evident as the diagram is created and help solidify the design. Class diagrams are arguably the most used UML diagram type and are the main building block of any object-oriented solution.

Figure 5.2: Class diagram (classes and operations: select file +file(), Process +process(), Histogram +histogram(), Clusters +cluster(), Similarity +similarity(), result)

A class diagram shows the classes in a system, the attributes and operations of each class, and the relationships between classes. In most modeling tools a class has three parts: the name at the top, attributes in the middle, and operations or methods at the bottom. In large systems with many classes, related classes are grouped together to create class diagrams. Different relationships between classes are shown by different types of arrows.

Sequence diagrams in UML show how objects interact with each other and the order in which those interactions occur. It is important to note that they show the interactions for a particular scenario. The processes are represented vertically and interactions are shown as arrows.

Figure 5.3: Sequence diagram (lifelines: select file, process, histogram, clusters, similarity, result; messages: 1: select file(), 2: process the file(), 3: divide histograms(), 4: divide clusters(), 5: no of similarities(), 6: result())

RESULTS


CONCLUSION
In this paper we propose a multiviewpoint-based similarity measure, named MVS. Both theoretical analysis and empirical examples show that MVS is potentially more suitable for text documents than the popular cosine similarity. Two criterion functions, IR and IV, and the corresponding clustering algorithms, MVSC-IR and MVSC-IV, have been introduced. Compared with other state-of-the-art clustering methods that use different types of similarity measure, on a large number of document data sets and under various evaluation metrics, the proposed algorithms MVSC-IR and MVSC-IV show that they can provide significantly improved clustering performance. The main aspect of our paper is to introduce the basic concept of a similarity measure from multiple viewpoints. This paper also concentrates on partitional clustering of documents. Further, the proposed criterion functions could also be applied to hierarchical clustering algorithms. Finally, we have shown the application of MVS and its clustering algorithms to text data.

REFERENCES
[1]. D.T. Nguyen, L. Chen, and C.K. Chan, Clustering with Multiviewpoint-Based Similarity Measure, IEEE Trans. Knowledge and Data Eng., vol. 24, no. 6, June 2012.
[2]. I. Guyon, U.V. Luxburg, and R.C. Williamson, Clustering: Science or Art?, Proc. NIPS Workshop Clustering Theory, 2009.
[3]. I. Dhillon and D. Modha, Concept Decompositions for Large Sparse Text Data Using Clustering, Machine Learning, vol. 42, nos. 1/2, pp. 143-175, Jan. 2001.
[4]. S. Zhong, Efficient Online Spherical K-means Clustering, Proc. IEEE Int'l Joint Conf. Neural Networks (IJCNN), pp. 3180-3185, 2005.
[5]. A. Banerjee, S. Merugu, I. Dhillon, and J. Ghosh, Clustering with Bregman Divergences, J. Machine Learning Research, vol. 6, pp. 1705-1749, Oct. 2005.
[6]. E. Pekalska, A. Harol, R.P.W. Duin, B. Spillmann, and H. Bunke, Non-Euclidean or Non-Metric Measures Can Be Informative, Structural, Syntactic, and Statistical Pattern Recognition, vol. 4109, pp. 871-880, 2006.
[7]. M. Pelillo, What Is a Cluster? Perspectives from Game Theory, Proc. NIPS Workshop Clustering Theory, 2009.
[8]. D. Lee and J. Lee, Dynamic Dissimilarity Measure for Support Based Clustering, IEEE Trans. Knowledge and Data Eng., vol. 22, no. 6, pp. 900-905, June 2010.
[9]. A. Banerjee, I. Dhillon, J. Ghosh, and S. Sra, Clustering on the Unit Hypersphere Using Von Mises-Fisher Distributions, J. Machine Learning Research, vol. 6, pp. 1345-1382, Sept. 2005.
[10]. W. Xu, X. Liu, and Y. Gong, Document Clustering Based on Non-Negative Matrix Factorization, Proc. 26th Ann. Int'l ACM SIGIR Conf. Research and Development in Information Retrieval, pp. 267-273, 2003.
[11]. I.S. Dhillon, S. Mallela, and D.S. Modha, Information-Theoretic Co-Clustering, Proc. Ninth ACM SIGKDD Int'l Conf. Knowledge Discovery and Data Mining (KDD), pp. 89-98, 2003.
[12]. http://iosrjournals.org/iosr-jce/papers/Vol4-issue6/F0463742.pdf

JGRCS 2010, All Rights Reserved

26

Вам также может понравиться

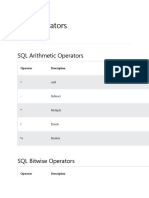

- SQL18Документ7 страницSQL18jeffa123Оценок пока нет

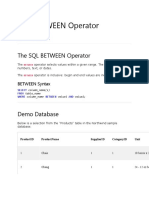

- The SQL BETWEEN OperatorДокумент8 страницThe SQL BETWEEN Operatorjeffa123Оценок пока нет

- Int Ques LokeshДокумент2 страницыInt Ques Lokeshjeffa123Оценок пока нет

- SQL18Документ7 страницSQL18jeffa123Оценок пока нет

- The SQL BETWEEN OperatorДокумент8 страницThe SQL BETWEEN Operatorjeffa123Оценок пока нет

- The SQL IN OperatorДокумент15 страницThe SQL IN Operatorjeffa123Оценок пока нет

- The SQL IN OperatorДокумент15 страницThe SQL IN Operatorjeffa123Оценок пока нет

- The SQL MIN and MAX FunctionsДокумент4 страницыThe SQL MIN and MAX Functionsjeffa123Оценок пока нет

- The SQL LIKE OperatorДокумент16 страницThe SQL LIKE Operatorjeffa123Оценок пока нет

- SQL WildcardsДокумент18 страницSQL Wildcardsjeffa123Оценок пока нет

- The SQL LIKE OperatorДокумент16 страницThe SQL LIKE Operatorjeffa123Оценок пока нет

- The SQL COUNT, AVG and SUM FunctionsДокумент7 страницThe SQL COUNT, AVG and SUM Functionsjeffa123Оценок пока нет

- SQL WildcardsДокумент18 страницSQL Wildcardsjeffa123Оценок пока нет

- SQL Top, Limit, Fetch First or ROWNUM ClauseДокумент7 страницSQL Top, Limit, Fetch First or ROWNUM Clausejeffa123Оценок пока нет

- The SQL DELETE StatementДокумент6 страницThe SQL DELETE Statementjeffa123Оценок пока нет

- The SQL AND, OR and NOT OperatorsДокумент15 страницThe SQL AND, OR and NOT Operatorsjeffa123Оценок пока нет

- SQL NULL Values: What Is A NULL Value?Документ6 страницSQL NULL Values: What Is A NULL Value?jeffa123Оценок пока нет

- SQL5Документ2 страницыSQL5jeffa123Оценок пока нет

- The SQL UPDATE StatementДокумент5 страницThe SQL UPDATE Statementjeffa123Оценок пока нет

- The SQL ORDER BY KeywordДокумент4 страницыThe SQL ORDER BY Keywordjeffa123Оценок пока нет

- The SQL INSERT INTO StatementДокумент5 страницThe SQL INSERT INTO Statementjeffa123Оценок пока нет

- SQL5Документ2 страницыSQL5jeffa123Оценок пока нет

- Learn SQL Tutorial - JavatpointДокумент7 страницLearn SQL Tutorial - Javatpointjeffa123Оценок пока нет

- The SQL AND, OR and NOT OperatorsДокумент15 страницThe SQL AND, OR and NOT Operatorsjeffa123Оценок пока нет

- The SQL Select Distinct StatementДокумент3 страницыThe SQL Select Distinct Statementjeffa123Оценок пока нет

- The SQL WHERE ClauseДокумент4 страницыThe SQL WHERE Clausejeffa123Оценок пока нет

- ETL Vs Database Testing - Tutorialspoint3Документ2 страницыETL Vs Database Testing - Tutorialspoint3jeffa123100% (1)

- ETL Testing  - Challenges - TutorialspointДокумент1 страницаETL Testing  - Challenges - Tutorialspointjeffa123Оценок пока нет

- ETL Â - Tester's Roles - TutorialspointДокумент2 страницыETL Â - Tester's Roles - Tutorialspointjeffa123Оценок пока нет

- ETL Testing Tutorial - Tutorialspoint1Документ1 страницаETL Testing Tutorial - Tutorialspoint1jeffa123Оценок пока нет

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (120)

- List - Assignment 1.1Документ2 страницыList - Assignment 1.1Pham Nguyen Thien Chuong K16 DNОценок пока нет

- Handout-1 Foundations of SetsДокумент3 страницыHandout-1 Foundations of Setsapi-253679034Оценок пока нет

- Solution - Real Analysis - Folland - Ch1Документ14 страницSolution - Real Analysis - Folland - Ch1胡爻垚Оценок пока нет

- Digital SAT Tests MATH Explanation (Mr. Amr Mustafa)Документ196 страницDigital SAT Tests MATH Explanation (Mr. Amr Mustafa)mr.samirjon.07Оценок пока нет

- Daily Practice Problems 2 PDFДокумент2 страницыDaily Practice Problems 2 PDFFUN 360Оценок пока нет

- ChassisДокумент1 страницаChassisariboroОценок пока нет

- Lecture 3 Bilinear TFДокумент32 страницыLecture 3 Bilinear TFNathan KingoriОценок пока нет

- D - AlgorithmДокумент17 страницD - AlgorithmpkashyОценок пока нет

- Math F241 All Test PapersДокумент5 страницMath F241 All Test PapersAbhinavОценок пока нет

- Simplex Method Additional ActivityДокумент3 страницыSimplex Method Additional ActivityIra Grace De CastroОценок пока нет

- Curious Numerical Coincidence To The Pioneer AnomalyДокумент3 страницыCurious Numerical Coincidence To The Pioneer AnomalylivanelОценок пока нет

- CASTEP StartupДокумент38 страницCASTEP StartupMuraleetharan BoopathiОценок пока нет

- Tybsc - Physics Applied Component Computer Science 1Документ12 страницTybsc - Physics Applied Component Computer Science 1shivОценок пока нет

- Datagrid View Cell EventsДокумент2 страницыDatagrid View Cell Eventsmurthy_oct24Оценок пока нет

- Problems Analysis and EOS 2011-1Документ2 страницыProblems Analysis and EOS 2011-1Jenny CórdobaОценок пока нет

- CS50 NotesДокумент2 страницыCS50 NotesFelix WongОценок пока нет

- 1-Data UnderstandingДокумент21 страница1-Data UnderstandingMahander OadОценок пока нет

- Skills in Mathematics Integral Calculus For JEE Main and Advanced 2022Документ319 страницSkills in Mathematics Integral Calculus For JEE Main and Advanced 2022Harshil Nagwani100% (3)

- Manual For Instructors: TO Linear Algebra Fifth EditionДокумент7 страницManual For Instructors: TO Linear Algebra Fifth EditioncemreОценок пока нет

- R Companion To Political Analysis 2nd Edition Pollock III Test BankДокумент10 страницR Companion To Political Analysis 2nd Edition Pollock III Test Bankwilliamvanrqg100% (30)

- Iitk Cse ResumeДокумент3 страницыIitk Cse ResumeAnimesh GhoshОценок пока нет

- Gpa8217 PDFДокумент151 страницаGpa8217 PDFdavid garduñoОценок пока нет

- Peter Maandag 3047121 Solving 3-Sat PDFДокумент37 страницPeter Maandag 3047121 Solving 3-Sat PDFVibhav JoshiОценок пока нет

- Vibration Analysis LevelДокумент230 страницVibration Analysis LevelAhmed shawkyОценок пока нет

- Tutorial 1Документ3 страницыTutorial 1Manvendra TomarОценок пока нет

- Numerical Methods in EconomicsДокумент349 страницNumerical Methods in EconomicsJuanfer Subirana Osuna0% (1)

- 32 2H Nov 2021 QPДокумент28 страниц32 2H Nov 2021 QPXoticMemes okОценок пока нет

- C4 Vectors - Vector Lines PDFДокумент33 страницыC4 Vectors - Vector Lines PDFMohsin NaveedОценок пока нет

- Chapter 8Документ13 страницChapter 8Allen AllenОценок пока нет

- Phy C332 167Документ2 страницыPhy C332 167Yuvraaj KumarОценок пока нет