Академический Документы

Профессиональный Документы

Культура Документы

DHS2 11revised

Загружено:

Aditya RaiИсходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

DHS2 11revised

Загружено:

Aditya RaiАвторское право:

Доступные форматы

Chapter 2

Bayesian Decision Theory

2.11

*Bayesian Belief Networks

The methods we have described up to now are fairly general all that we assumed, at base, was that we could parameterize the probability distributions by a vector . If we had prior information about , this too could be used. Sometimes our knowledge about a distribution is not directly expressed by a parameter vector, but instead about the statistical dependencies (or independencies) or the causal relationships among the component variables. (Recall that for some multidimensional distribution p(x), if for two features we have p(xi , xj ) = p(xi )p(xj ), we say those variables are statistically independent, as illustrated in Fig. 2.23.)

CHAPTER 2. BAYESIAN DECISION THEORY

Figure 2.23: A three-dimensional distribution which obeys p(x1 , x3 ) = p(x1 )p(x3); thus here x1 and x3 are statistically independent but the other feature pairs are not. There are many such cases where we know or can safely assume which variables are or are not causally related, even if it may be more dicult to specify the precise probabilistic relationships among those variables. Suppose, for instance, we are describing the state of an automobile: temperature of the engine, pressure of the brake uid, pressure of the air in the tires, voltages in the wires, and so on. Our basic knowledge of cars includes the fact that the oil pressure in the engine and the air pressure in a tire are not causally related while the engine temperature and oil temperature are causally related. Furthermore, we may know several variables that might inuence another: The coolant temperature is aected by the engine temperature, the speed of the radiator fan (which blows air over the coolant-lled radiator), and so on. We shall now exploit this structural information when reasoning about the system and its variables. We represent these causal dependencies graphically by means of Bayesian belief nets, also called causal networks, or simply belief nets. While these nets can represent continuous multidimensional distributions over their variables, they have enjoyed greatest application and success for discrete variables. For this reason, and because the calculations are simpler, we shall concentrate on the discrete case. node Each node (or unit) represents one of the system components, and here it takes on discrete values. We label nodes A, B, ... and their variables by the corresponding lowercase letter. Thus, while there are a discrete number of possible values of node A for instance two, a1 and a2 there may be continuous-valued probabilities on these discrete states. For example, if node A represents the automobile ignition switch

2.11. *BAYESIAN BELIEF NETWORKS

a1 = on, a2 = o we might have P (a1 ) = 0.739, P (a2 ) = 0.261, or indeed any other probabilities. Each link in the net is directional and joins two nodes; the link represents the causal inuence of one node upon another. Thus in the net in Fig. 2.24 A directly inuences D. While B also inuences D, such inuence is indirect, through C. In considering a single node in a net, it is useful to distinguish the set of nodes immediately before that node called its parents and the set of those immediately after it called its children. Thus in Fig. 2.24 the parents of D are A and C while the child of D is E. parent child

Figure 2.24: A belief network consists of nodes (labeled with uppercase bold letters) and their associated discrete states (in lowercase). Thus node A has states {a1, a2 , ...}, which collectively are denoted simply a; node B has states {b1, b2 , ...}, denoted b, and so forth. The links between nodes represent direct causal inuence. For example the link from A to D represents the direct inuence of A upon D. In this network, the variables at B may inuence those at D, but only indirectly through their eect on C. Simple probabilities are denoted P (a) and P (b), and conditional probabilities P (c|b), P (d|a, c) and P (e|d). Suppose we have a belief net, complete with causal dependencies indicated by the topology of the links. Through a direct application of Bayes rule, we can determine the probability of any conguration of variables in the joint distribution. To proceed, though, we also need the conditional probability tables, which give the probability of any variable at a node for each conditioning event that is, for the values of the variables in the parent nodes. Each row in a conditional probability table sums to 1, as its entries describe all possible cases for the variable. If a node has no parents, then the table just contains the prior probabilities of the variables. (There are sophisticated algorithms for learning the entries in such a table based on a data set of variable values. We shall not address such learning here as our main concern is how conditional probability table

CHAPTER 2. BAYESIAN DECISION THEORY

to represent and reason about this probabilistic information.) Since the network and conditional probability tables contain all the information of the problem domain, we can use them to calculate any entry in the joint probability distribution, as illustrated in Example 4.

Example 4: Belief Network for sh Consider again the problem of classifying sh, but now we want to incorporate more information than the measurements of the lightness and width. Imagine that a human expert has constructed the simple belief network in the gure, where node A represents the time of year and can have four values: a1 = winter, a2 = spring, a3 = summer and a4 = autumn. Node B represents the locale where the sh was caught: b1 = north Atlantic and b2 = south Atlantic. Node X, which represents the sh, has just two possible values: x1 = salmon and x2 = sea bass. A and B are the parents of the X. Similarly, our expert tells us that the children nodes of X represent lightness, C, with c1 = dark, c2 = medium and c3 = light, as well as thickness, D, with d1 = thick and d2 = thin. Thus the season and the locale determine directly what kind of sh is likely to be caught; the season and locale also determine the shs lightness and thickness, but only indirectly through their eect on X.

A simple belief net for the sh example. The season and the locale (and many other stochastic variables) determine directly the probability of the two dierent types of sh caught. The type of sh directly aects the lightness and thickness measured. The conditional probability tables quantifying these relationships are shown in pink. Imagine that shing boats go out throughout the year; then the probability distribu-

2.11. *BAYESIAN BELIEF NETWORKS

tion on the variables at A is uniform. Imagine, too, that boats generally spend more time in the north than the south Atlantic areas, specically the probabilities that any sh came from those areas are 0.6 and 0.4, respectively. The other conditional probabilities are similarly given in the tables. Now we can determine the value of any entry in the joint probability, for instance the probability that the sh was caught in the summer in the north Atlantic and is a sea bass that is dark and thin:

P (a3 , b1 , x2 , c3 , d2 )

= P (a3 )P (b1 )P (x2 |a3 , b1 )P (c3 |x2 )P (d2 |x2 ) = 0.25 0.6 0.4 0.5 0.4 = 0.012.

Note how the topology of the net is captured by the probabilities in the expression. Specically, since X is the only node to have two parents, only the P (x2 |, ) term has two conditioning variables; the other conditional probabilities have just one each. The product of these probabilities corresponds to the assumption of statistical independence.

We now illustrate more fully how to exploit the causal structure in a Bayes belief net when determining the probability of its variables. Suppose we wish to determine the probability distribution over the variables d1 , d2 , ... at D in the left network of Fig. 2.25 using the conditional probability tables and the network topology.

Figure 2.25: Two simple belief networks. The one on the left is a simple linear chain, the one on the right a simple loop. The conditional probability tables are indicated, for instance, as P (h|f , g).

CHAPTER 2. BAYESIAN DECISION THEORY

We evaluate this by summing the full joint distribution, P (a, b, c, d), over all the variables other than d:

P (d)

=

a,b,c

P (a, b, c, d) P (a)P (b|a)P (c|b)P (d|c)

a,b,c

(96)

= =

c

P (d|c)

b

P (c|b)

a

P (b|a)P (a) .

P (b) P (c)

P (d)

In Eq. 96 the summation variables can be split simply, and the intermediate terms have simple interpretations, as indicated. If we wanted the probability of a particular value of D, for instance d2 , we would compute

P (d2 ) =

a,b,c

P (a, b, c, d2 ),

(97)

and proceed as above. In either case, the conditional probabilities are simple because of the simple linear topology of the network. Now consider computing the probabilities of the variables at H in the network with the loop on the right of Fig. 2.25. Here we nd

P (h) =

e,f ,g

P (e, f , g, h) P (e)P (f |e)P (g|e)P (h|f , g) P (h|f , g).

f ,g

(98)

=

e,f ,g

=

e

P (e)P (f |e)P (g|e)

Note particularly that the expansion of the full sum diers somewhat from that in Eq. 96 because of the P (h|f , g) term, which itself arises from the loop topology of the network. Bayes belief nets are most useful in the case where are given the values of some evidence of the variables the evidence and we seek to determine some particular conguration of other variables. Thus in our sh example we might seek to determine the

2.11. *BAYESIAN BELIEF NETWORKS

probability that a sh came from the north Atlantic, given that it is springtime, and that the sh is a light salmon. (Notice that even here we may not be given the values of some variables such as the width of the sh.) In that case, the probability we seek is P (b1 |a2 , x1 , c1 ). In practice, we determine the values of several query variables (denoted collectively X) given the evidence of all other variables (denoted e) by

P (X|e) =

P (x, e) = P (X, e), P (e)

(99)

where is a constant of proportionality. As an example, suppose we are given the Bayes belief net and conditional probability tables in Example 4. Suppose we know that a sh is light (c1 ) and caught in the south Atlantic (b2 ), but we do not know what time of year the sh was caught nor it thickness. How shall we classify the sh for minimum expected classication error? Of course we must compute the probability it is a salmon, and also the probability it is sea bass. We focus rst on the relative probability the sh is a salmon given this evidence:

P (x1 |c1, b2 )

P (x1, c1 , b2 ) P (c1 , b2 ) P (x1 , a, b2 , c1 , d)

a,d

(100)

= =

a,d

P (a)P (b2 )P (x1 |a, b2 )P (c1 |x1 )P (d|x1 )

= P (b2 )P (c1 |x1 )

a

P (a)P (x1 |a, b2 )

P (d|x1 )

d

= P (b2 )P (c1 |x1 ) [P (a1 )P (x1|a1, b2 ) + P (a2 )P (x1 |a2 , b2 ) + P (a3 )P (x1 |a3 , b2 ) + P (a4 )P (x1 |a4 , b2 )] [P (d1 |x1 ) + P (d2|x1 )]

=1

= (0.4)(0.6) [(0.25)(0.7) + (0.25)(0.8) + (0.25)(0.1) + (0.25)(0.3)] 1.0 = 0.114. Note that in this case,

10

CHAPTER 2. BAYESIAN DECISION THEORY

P (d|x1 ) = 1,

d

(101)

that is, if we do not measure information corresponding to node D, the conditional probability table at D does not aect our results. A computation similar to that in Eq. 100 shows P (x2|c1 , b2 ) = 0.066. We normalize these probabilities (and hence eliminate ) and nd P (x1 |c1, b2 ) = 0.63 and P (x2 |c1 , b2 ) = 0.27. Thus given this evidence, we should classify this sh as a salmon. When the dependency relationships among the features used by a classier are unknown, we generally proceed by taking the simplest assumption, namely, that the features are conditionally independent given the category, that is,

P (x|a, b) = P (x|a)P (x|b). naive Bayes rule

(102)

In practice, this so-called naive Bayes rule or idiot Bayes rule often works quite well in practice, despite its manifest simplicity. Other approaches are to assume some functional form of conditional probability tables. Belief nets have found increasing use in complicated problems such as medical diagnosis. Here the uppermost nodes (ones without their own parents) represent a fundamental biological agent such as the presence of a virus or bacteria. Intermediate nodes then describe diseases, such as u or emphysema, and the lowermost nodes describe the symptoms, such as high temperature or coughing. A physician enters measured values into the net and nds the most likely disease or cause.

Вам также может понравиться

- 2 Graphical Models in A Nutshell: Daphne Koller, Nir Friedman, Lise Getoor and Ben TaskarДокумент43 страницы2 Graphical Models in A Nutshell: Daphne Koller, Nir Friedman, Lise Getoor and Ben TaskarFahad Ahmad KhanОценок пока нет

- AI Bayes TheoremДокумент10 страницAI Bayes TheoremKollipara KanakavardhiniОценок пока нет

- Bayesian Belief Network, Exact Inference, Approx Inference, Causal NetworkДокумент15 страницBayesian Belief Network, Exact Inference, Approx Inference, Causal Networksaipriy0904Оценок пока нет

- Example: Anscombe's Quartet Revisited: CC-BY-SA-3.0 GFDLДокумент10 страницExample: Anscombe's Quartet Revisited: CC-BY-SA-3.0 GFDLq1w2e3r4t5y6u7i8oОценок пока нет

- An Extended Relational Database Model For Uncertain and Imprecise InformationДокумент10 страницAn Extended Relational Database Model For Uncertain and Imprecise InformationdequanzhouОценок пока нет

- 4.3 Bayesian Belief Network in Artificial IntelligenceДокумент7 страниц4.3 Bayesian Belief Network in Artificial IntelligenceSian YemenОценок пока нет

- AIML-Unit 5 NotesДокумент45 страницAIML-Unit 5 NotesHarshithaОценок пока нет

- Bayesian Networks: Machine Learning, Lecture (Jaakkola)Документ8 страницBayesian Networks: Machine Learning, Lecture (Jaakkola)Frost AОценок пока нет

- Bayesian Belief Network in Artificial IntelligenceДокумент10 страницBayesian Belief Network in Artificial IntelligenceALYSSA JOYCE ROMEROОценок пока нет

- Unit 2 Probabilistic ReasoningДокумент21 страницаUnit 2 Probabilistic ReasoningnarmathaapcseОценок пока нет

- Lec. 21 - Zoubin G. Learning Dynamic Bayesian NetworksДокумент31 страницаLec. 21 - Zoubin G. Learning Dynamic Bayesian NetworksAlejandro VasquezОценок пока нет

- Lecture 4Документ13 страницLecture 4ZUHAL TUGRULОценок пока нет

- Restrepo 2007 ApproximatingДокумент6 страницRestrepo 2007 ApproximatingpastafarianboyОценок пока нет

- PGM 2014 Homework1Документ8 страницPGM 2014 Homework1Lokender TiwariОценок пока нет

- Theory and Decision 54: 261-285, 2003Документ25 страницTheory and Decision 54: 261-285, 2003omieosutaОценок пока нет

- Forward Backward ChainingДокумент5 страницForward Backward ChainingAnshika TyagiОценок пока нет

- Getting To Know Your Data: 2.1 ExercisesДокумент8 страницGetting To Know Your Data: 2.1 Exercisesbilkeralle100% (1)

- Random Variables: Corr (X, Y) Cov (X, Y) / Cov (X, Y) Is The Covariance (X, Y)Документ15 страницRandom Variables: Corr (X, Y) Cov (X, Y) / Cov (X, Y) Is The Covariance (X, Y)viktahjmОценок пока нет

- Bayesian Uncertainty QuantificationДокумент23 страницыBayesian Uncertainty QuantificationKowshik ThopalliОценок пока нет

- Chap GapДокумент29 страницChap GapAlyssa VuОценок пока нет

- Basic Network MetricsДокумент30 страницBasic Network MetricsRahulprabhakaran VannostranОценок пока нет

- Frequency Synchronisation in OFDM: - A Bayesian AnalysisДокумент5 страницFrequency Synchronisation in OFDM: - A Bayesian AnalysisAyu KartikaОценок пока нет

- Lesson1 - Simple Linier RegressionДокумент40 страницLesson1 - Simple Linier Regressioniin_sajaОценок пока нет

- Putting Differentials Back Into CalculusДокумент14 страницPutting Differentials Back Into Calculus8310032914Оценок пока нет

- Chapitre 2 PDFДокумент17 страницChapitre 2 PDFMouhaОценок пока нет

- BNP PDFДокумент108 страницBNP PDFVinh TranОценок пока нет

- Numpy / Scipy Recipes For Data Science: K-Medoids ClusteringДокумент6 страницNumpy / Scipy Recipes For Data Science: K-Medoids ClusteringJanhavi NistaneОценок пока нет

- 1 s2.0 S0166218X21002705 MainДокумент8 страниц1 s2.0 S0166218X21002705 MainRahmaОценок пока нет

- Unit 6Документ126 страницUnit 6skraoОценок пока нет

- 13 - Data Mining Within A Regression FrameworkДокумент27 страниц13 - Data Mining Within A Regression FrameworkEnzo GarabatosОценок пока нет

- Institute of Engineering and Technology, Lucknow: Special Lab (Kcs-751)Документ37 страницInstitute of Engineering and Technology, Lucknow: Special Lab (Kcs-751)Hîмanî JayasОценок пока нет

- Institute of Engineering and Technology, Lucknow: Special Lab (Kcs-751)Документ37 страницInstitute of Engineering and Technology, Lucknow: Special Lab (Kcs-751)Hîмanî JayasОценок пока нет

- On The Stochastic Increasingness of Future Claims in The Biihlmann Linear Credibility PremiumДокумент13 страницOn The Stochastic Increasingness of Future Claims in The Biihlmann Linear Credibility PremiumAliya 02Оценок пока нет

- MATLAB Lecture 3. Polynomials and Curve Fitting PDFДокумент3 страницыMATLAB Lecture 3. Polynomials and Curve Fitting PDFTan HuynhОценок пока нет

- Unit Regression Analysis: ObjectivesДокумент18 страницUnit Regression Analysis: ObjectivesSelvanceОценок пока нет

- 10-601 Machine Learning (Fall 2010) Principal Component AnalysisДокумент8 страниц10-601 Machine Learning (Fall 2010) Principal Component AnalysisJorge_Alberto__1799Оценок пока нет

- Bounds and Lattice-Based Transmission Strategies For The Phase-Faded Dirty-Paper ChannelДокумент8 страницBounds and Lattice-Based Transmission Strategies For The Phase-Faded Dirty-Paper Channelnomore891Оценок пока нет

- Reasoning With Uncertainty - Probabilistic Reasoning: Version 2 CSE IIT, KharagpurДокумент10 страницReasoning With Uncertainty - Probabilistic Reasoning: Version 2 CSE IIT, KharagpurVanu ShaОценок пока нет

- Polynomial Curve Fitting in MatlabДокумент3 страницыPolynomial Curve Fitting in MatlabKhan Arshid IqbalОценок пока нет

- Bayesian Networks Methods For Traffic Flow Prediction: Enrique CastilloДокумент24 страницыBayesian Networks Methods For Traffic Flow Prediction: Enrique CastilloVedant GoyalОценок пока нет

- PCA For Non Precise Data-Carlo N. LauroДокумент12 страницPCA For Non Precise Data-Carlo N. LauroAnonymous BvUL7MRPОценок пока нет

- PPTs - Set Theory & Probability (L-1 To L-6)Документ49 страницPPTs - Set Theory & Probability (L-1 To L-6)Daksh SharmaОценок пока нет

- Lecture 2 28 August, 2015: 2.1 An Example of Data CompressionДокумент7 страницLecture 2 28 August, 2015: 2.1 An Example of Data CompressionmoeinsarvaghadОценок пока нет

- PCA Tutorial: Instructor: Forbes BurkowskiДокумент12 страницPCA Tutorial: Instructor: Forbes Burkowskisitaram_1Оценок пока нет

- EconДокумент38 страницEconbasant singhОценок пока нет

- Random Generation of Bayesian Networks: Lecture Notes in Computer Science November 2002Документ11 страницRandom Generation of Bayesian Networks: Lecture Notes in Computer Science November 2002Prabhav PatilОценок пока нет

- An Intuitive Approach To The Effective Dielectric Constant of Binary CompositesДокумент13 страницAn Intuitive Approach To The Effective Dielectric Constant of Binary CompositesSami SozuerОценок пока нет

- Unit 2Документ5 страницUnit 2Saral ManeОценок пока нет

- Learning Optimal Augmented Bayes Networks: Vikas Hamine Paul HelmanДокумент4 страницыLearning Optimal Augmented Bayes Networks: Vikas Hamine Paul HelmanAnonymous RrGVQjОценок пока нет

- Material Summary AssignmentДокумент7 страницMaterial Summary AssignmentAwindya CandrasmurtiОценок пока нет

- Bayesian Network RepresentationДокумент6 страницBayesian Network RepresentationWaterloo Ferreira da SilvaОценок пока нет

- Multicollinearity Among The Regressors Included in The Regression ModelДокумент13 страницMulticollinearity Among The Regressors Included in The Regression ModelNavyashree B MОценок пока нет

- S. Catterall Et Al - Baby Universes in 4d Dynamical TriangulationДокумент9 страницS. Catterall Et Al - Baby Universes in 4d Dynamical TriangulationGijke3Оценок пока нет

- 2 Curve FittingДокумент4 страницы2 Curve FittingJack WallaceОценок пока нет

- Notes On Transport PhenomenaДокумент184 страницыNotes On Transport PhenomenarajuvadlakondaОценок пока нет

- Bayesian Updating Explained - 1547532565Документ7 страницBayesian Updating Explained - 1547532565Sherif HassanienОценок пока нет

- Predicting Time Series of Complex Systems: David Rojas Lukas Kroc Marko Thaler, VaДокумент14 страницPredicting Time Series of Complex Systems: David Rojas Lukas Kroc Marko Thaler, VaAbdo SawayaОценок пока нет

- Canonical Correlation PDFДокумент16 страницCanonical Correlation PDFAlexis SinghОценок пока нет

- Gate Cse 2005Документ22 страницыGate Cse 2005Aditya RaiОценок пока нет

- Linear Discriminant Functions Lesson 26: Characterization of The Decision BoundaryДокумент7 страницLinear Discriminant Functions Lesson 26: Characterization of The Decision BoundaryAditya RaiОценок пока нет

- Probabilistic Neural Network (PNN)Документ9 страницProbabilistic Neural Network (PNN)Aditya RaiОценок пока нет

- Representation of Patterns and Classes Lesson 3Документ8 страницRepresentation of Patterns and Classes Lesson 3Aditya RaiОценок пока нет

- A2ECS505GTДокумент1 страницаA2ECS505GTAditya RaiОценок пока нет

- Ad It YaДокумент5 страницAd It YaAditya RaiОценок пока нет

- G1CДокумент12 страницG1CKhriz Ann C ÜОценок пока нет

- ZF-FreedomLine TransmissionДокумент21 страницаZF-FreedomLine TransmissionHerbert M. Zayco100% (1)

- 2015 Nos-Dcp National Oil Spill Disaster Contingency PlanДокумент62 страницы2015 Nos-Dcp National Oil Spill Disaster Contingency PlanVaishnavi Jayakumar100% (1)

- Passenger Lift Alert - Health and Safety AuthorityДокумент4 страницыPassenger Lift Alert - Health and Safety AuthorityReginald MaswanganyiОценок пока нет

- Fully Automatic Coffee Machine - Slimissimo - IB - SCOTT UK - 2019Документ20 страницFully Automatic Coffee Machine - Slimissimo - IB - SCOTT UK - 2019lazareviciОценок пока нет

- Immigrant Italian Stone CarversДокумент56 страницImmigrant Italian Stone Carversglis7100% (2)

- Drilling & GroutingДокумент18 страницDrilling & GroutingSantosh Laxman PatilОценок пока нет

- The Broadband ForumДокумент21 страницаThe Broadband ForumAnouar AleyaОценок пока нет

- MTH100Документ3 страницыMTH100Syed Abdul Mussaver ShahОценок пока нет

- GROSS Mystery of UFOs A PreludeДокумент309 страницGROSS Mystery of UFOs A PreludeTommaso MonteleoneОценок пока нет

- Scanner and Xcal Comperative Analysis v2Документ22 страницыScanner and Xcal Comperative Analysis v2Ziya2009Оценок пока нет

- Cathodic Protection Catalog - New 8Документ1 страницаCathodic Protection Catalog - New 8dhineshОценок пока нет

- NF en Iso 5167-6-2019Документ22 страницыNF en Iso 5167-6-2019Rem FgtОценок пока нет

- Proefschrift T. Steenstra - tcm24-268767Документ181 страницаProefschrift T. Steenstra - tcm24-268767SLAMET PAMBUDIОценок пока нет

- Clevo W940tu Service ManualДокумент93 страницыClevo W940tu Service ManualBruno PaezОценок пока нет

- IMO Ship Waste Delivery Receipt Mepc - Circ - 645Документ1 страницаIMO Ship Waste Delivery Receipt Mepc - Circ - 645wisnukerОценок пока нет

- Bulacan Agricultural State College: Lesson Plan in Science 4 Life Cycle of Humans, Animals and PlantsДокумент6 страницBulacan Agricultural State College: Lesson Plan in Science 4 Life Cycle of Humans, Animals and PlantsHarmonica PellazarОценок пока нет

- NAT-REVIEWER-IN-PHYSICAL EDUCATIONДокумент4 страницыNAT-REVIEWER-IN-PHYSICAL EDUCATIONMira Rochenie CuranОценок пока нет

- Cobalamin in Companion AnimalsДокумент8 страницCobalamin in Companion AnimalsFlávia UchôaОценок пока нет

- Reviews: The Global Epidemiology of HypertensionДокумент15 страницReviews: The Global Epidemiology of Hypertensionrifa iОценок пока нет

- Week 1 - NATURE AND SCOPE OF ETHICSДокумент12 страницWeek 1 - NATURE AND SCOPE OF ETHICSRegielyn CapitaniaОценок пока нет

- Kindergarten Math Problem of The Day December ActivityДокумент5 страницKindergarten Math Problem of The Day December ActivityiammikemillsОценок пока нет

- PPT DIARHEA IN CHILDRENДокумент31 страницаPPT DIARHEA IN CHILDRENRifka AnisaОценок пока нет

- Structural Analysis and Design of Pressure Hulls - The State of The Art and Future TrendsДокумент118 страницStructural Analysis and Design of Pressure Hulls - The State of The Art and Future TrendsRISHABH JAMBHULKARОценок пока нет

- Shree New Price List 2016-17Документ13 страницShree New Price List 2016-17ontimeОценок пока нет

- Course Registration SlipДокумент2 страницыCourse Registration SlipMics EntertainmentОценок пока нет

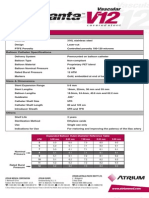

- Advanta V12 Data SheetДокумент2 страницыAdvanta V12 Data SheetJuliana MiyagiОценок пока нет

- Liver: Anatomy & FunctionsДокумент18 страницLiver: Anatomy & FunctionsDR NARENDRAОценок пока нет

- Curriculum Vitae - RadikaДокумент3 страницыCurriculum Vitae - RadikaradikahendryОценок пока нет

- Surface TensionДокумент13 страницSurface TensionElizebeth GОценок пока нет

- ChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindОт EverandChatGPT Money Machine 2024 - The Ultimate Chatbot Cheat Sheet to Go From Clueless Noob to Prompt Prodigy Fast! Complete AI Beginner’s Course to Catch the GPT Gold Rush Before It Leaves You BehindОценок пока нет

- Generative AI: The Insights You Need from Harvard Business ReviewОт EverandGenerative AI: The Insights You Need from Harvard Business ReviewРейтинг: 4.5 из 5 звезд4.5/5 (2)

- Scary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldОт EverandScary Smart: The Future of Artificial Intelligence and How You Can Save Our WorldРейтинг: 4.5 из 5 звезд4.5/5 (55)

- ChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveОт EverandChatGPT Side Hustles 2024 - Unlock the Digital Goldmine and Get AI Working for You Fast with More Than 85 Side Hustle Ideas to Boost Passive Income, Create New Cash Flow, and Get Ahead of the CurveОценок пока нет

- The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldОт EverandThe Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our WorldРейтинг: 4.5 из 5 звезд4.5/5 (107)

- 100M Offers Made Easy: Create Your Own Irresistible Offers by Turning ChatGPT into Alex HormoziОт Everand100M Offers Made Easy: Create Your Own Irresistible Offers by Turning ChatGPT into Alex HormoziОценок пока нет

- Four Battlegrounds: Power in the Age of Artificial IntelligenceОт EverandFour Battlegrounds: Power in the Age of Artificial IntelligenceРейтинг: 5 из 5 звезд5/5 (5)

- ChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessОт EverandChatGPT Millionaire 2024 - Bot-Driven Side Hustles, Prompt Engineering Shortcut Secrets, and Automated Income Streams that Print Money While You Sleep. The Ultimate Beginner’s Guide for AI BusinessОценок пока нет

- Artificial Intelligence & Generative AI for Beginners: The Complete GuideОт EverandArtificial Intelligence & Generative AI for Beginners: The Complete GuideРейтинг: 5 из 5 звезд5/5 (1)

- The AI Advantage: How to Put the Artificial Intelligence Revolution to WorkОт EverandThe AI Advantage: How to Put the Artificial Intelligence Revolution to WorkРейтинг: 4 из 5 звезд4/5 (7)

- Who's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesОт EverandWho's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesРейтинг: 4.5 из 5 звезд4.5/5 (13)

- Artificial Intelligence: The Insights You Need from Harvard Business ReviewОт EverandArtificial Intelligence: The Insights You Need from Harvard Business ReviewРейтинг: 4.5 из 5 звезд4.5/5 (104)

- Your AI Survival Guide: Scraped Knees, Bruised Elbows, and Lessons Learned from Real-World AI DeploymentsОт EverandYour AI Survival Guide: Scraped Knees, Bruised Elbows, and Lessons Learned from Real-World AI DeploymentsОценок пока нет

- Working with AI: Real Stories of Human-Machine Collaboration (Management on the Cutting Edge)От EverandWorking with AI: Real Stories of Human-Machine Collaboration (Management on the Cutting Edge)Рейтинг: 5 из 5 звезд5/5 (5)

- HBR's 10 Must Reads on AI, Analytics, and the New Machine AgeОт EverandHBR's 10 Must Reads on AI, Analytics, and the New Machine AgeРейтинг: 4.5 из 5 звезд4.5/5 (69)

- Artificial Intelligence: A Guide for Thinking HumansОт EverandArtificial Intelligence: A Guide for Thinking HumansРейтинг: 4.5 из 5 звезд4.5/5 (30)

- T-Minus AI: Humanity's Countdown to Artificial Intelligence and the New Pursuit of Global PowerОт EverandT-Minus AI: Humanity's Countdown to Artificial Intelligence and the New Pursuit of Global PowerРейтинг: 4 из 5 звезд4/5 (4)

- Architects of Intelligence: The truth about AI from the people building itОт EverandArchitects of Intelligence: The truth about AI from the people building itРейтинг: 4.5 из 5 звезд4.5/5 (21)

- AI and Machine Learning for Coders: A Programmer's Guide to Artificial IntelligenceОт EverandAI and Machine Learning for Coders: A Programmer's Guide to Artificial IntelligenceРейтинг: 4 из 5 звезд4/5 (2)

- Demystifying Prompt Engineering: AI Prompts at Your Fingertips (A Step-By-Step Guide)От EverandDemystifying Prompt Engineering: AI Prompts at Your Fingertips (A Step-By-Step Guide)Рейтинг: 4 из 5 звезд4/5 (1)

- 2084: Artificial Intelligence and the Future of HumanityОт Everand2084: Artificial Intelligence and the Future of HumanityРейтинг: 4 из 5 звезд4/5 (82)

- Artificial Intelligence: The Complete Beginner’s Guide to the Future of A.I.От EverandArtificial Intelligence: The Complete Beginner’s Guide to the Future of A.I.Рейтинг: 4 из 5 звезд4/5 (15)

- Python Machine Learning - Third Edition: Machine Learning and Deep Learning with Python, scikit-learn, and TensorFlow 2, 3rd EditionОт EverandPython Machine Learning - Third Edition: Machine Learning and Deep Learning with Python, scikit-learn, and TensorFlow 2, 3rd EditionРейтинг: 5 из 5 звезд5/5 (2)