Li, Chang - Computational Methods For American Put Options

COMPUTATIONAL METHODS FOR AMERICAN PUT OPTIONS

Chang Li 759728

September 25, 2005

Abstract

In this paper, we study various numerical methods for pricing American vanilla put options, including the popular projected successive overrelaxation (PSOR) algorithm, the parametric principal pivoting (PPP) algorithm, large-scale solution of a linear programming formulation, the explicit finite-difference method and the well-known tree method. We test all five approaches empirically, modeling their timing and accuracy (error) behavior as functions of two discretization parameters, Nt and Ns. We then draw, for each method, the optimal curve capturing the optimal relationship between the accuracy (error) level and cputime, and use these curves to compare the algorithms.

Key Words: American options, parabolic PDEs, linear complementarity problem, least elements, linear programming, large-scale method, projected successive overrelaxation, parametric principal pivoting

1 Introduction

The aim of this paper is to investigate a number of numerical methods for pricing American (vanilla) put options. Five different algorithms are of particular interest: the Projected Successive OverRelaxation algorithm, the Parametric Principal Pivoting algorithm, Linear Programming, the Explicit method and the Tree method. For each of them we approximate the timing and error models (as functions of both the number of time steps and the number of space steps), and thereby describe the relationship between the accuracy (error) level and the minimum cputime.

In Section 2, we first give a brief summary of well-known results for the American vanilla put option, and then set up the equivalence between various formulations of the American option problem, including the linear complementarity problem, the variational inequality, the least element problem and the abstract linear program. These equivalence properties are the theoretical cornerstones of our empirical experiments.

In Section 3, we consider finite-difference approximations to the various equivalent formulations of the American put problem from Section 2, as well as the binomial tree approximation. Standard algorithms are written in matrix language.

In Section 4, we test numerically the timing and error behavior of each algorithm and, based on these observations, specify and estimate empirical timing and error functions with respect to two discretization parameters: the numbers of time steps and space steps. This allows us to go one step further and obtain, for each algorithm, the optimal curve describing the relationship between the accuracy (error) level and cputime.

2 The American Put Option

2.1 Pricing in theory

Let us consider the pricing of an American stock option in the standard Black-Scholes setting, namely, we postulate that the stock price and the bond price evolve according to the following stochastic differential equations (SDEs):

$$dS_t = \mu S_t\,dt + \sigma S_t\,dW_t, \qquad dB_t = r B_t\,dt,$$

where, for the sake of simplicity, we assume that $\sigma$, $r$ and $\mu$ are positive constants denoting the volatility parameter, the risk-less interest rate and the drift parameter respectively, and $W_t$ is a standard Wiener process, whose increment $dW_t$ has mean zero and variance $dt$. After the change to the equivalent martingale measure (by Girsanov's theorem), the stock price process in the risk-neutral world can be written as

$$dS_t = r S_t\,dt + \sigma S_t\,d\widetilde{W}_t,$$

where $\widetilde{W}_t$ is a Wiener process under this measure. Let $\psi(S(t)) = (K - S(t))^+$ denote the payoff function of a standard (vanilla) American put option on a stock with expiry time $T$ and strike price $K$; that is, the payoff of an American put exercised at any stopping time $t \in [0, T]$ is given by this payoff function. The first main objective of this paper is to characterize, in a manner suitable for numerical solution, the value of the option $V(x, t) : \mathbb{R}^+ \times [0, T] \to \mathbb{R}$ as a function of the stock price $x > 0$ and time $t \in [0, T]$.

If we first consider the case in which the security is European, then its value $V(x, t)$ is simply the solution of the linear parabolic PDE derived by Black and Scholes [1], i.e.

$$\mathcal{L}_{BS} V + \frac{\partial V}{\partial t} = 0 \quad \text{for } (x, t) \in \mathbb{R}^+ \times [0, T],$$

with terminal condition $V(\cdot, T) = \psi$, where the differential operator is defined as

$$\mathcal{L}_{BS} := \frac{1}{2}\sigma^2 x^2 \frac{\partial^2}{\partial x^2} + r x \frac{\partial}{\partial x} - r.$$

However, pricing an American put is more difficult than just solving a PDE. The value function can be treated as the solution of a classical optimal stopping problem, namely to choose the stopping time that maximizes the conditional expectation of the discounted payoff, and this optimal stopping time $\tau^*(t)$ (a random variable) can be shown to be given by

$$\tau^*(t) = \inf\{s \in [t, T] : V(S(s), s) = \psi(S(s))\},$$

that is, the first time the option value falls to that of the payoff from immediate exercise. Hence, the domain of the value function may be partitioned into an implicitly defined continuation region $\mathcal{C}$ and a stopping region $\mathcal{S}$ given by

$$\mathcal{C} = \{(x, t) \in \mathbb{R}^+ \times [0, T] : V(x, t) > \psi(x)\},$$
$$\mathcal{S} = \{(x, t) \in \mathbb{R}^+ \times [0, T] : V(x, t) = \psi(x)\}.$$

Clearly this is a partition, because $V(x, t) \ge \psi(x)$ everywhere.

On the whole domain $\mathbb{R}^+ \times [0, T]$ we have $\mathcal{L}_{BS} V + \partial V/\partial t \le 0$ (the Black-Scholes inequality), since, in order to preclude arbitrage opportunities, the drift of the (undiscounted) price process cannot be greater than the risk-free rate. Hence, as long as the current state $(S(t), t)$ is in $\mathcal{C}$, it is optimal to continue, and the value of the American option equals the value of a European contract that pays $\psi(S(t))$ at the exercise boundary between the continuation and stopping regions. This is known as the free boundary condition, in contrast to the terminal condition of the European case; one more condition is usually necessary to determine the exercise boundary, typically the smooth pasting condition, i.e. $\partial V/\partial x = -1$ on the boundary.

Figure 2.1 Sketch of American put value function (axes: $x$, $T - t$ and $V(x, t)$; projected regions $\mathcal{S}_p$ and $\mathcal{C}_p$ marked)

However, as soon as the price process crosses the exercise boundary into the stopping region, meaning that it is optimal to exercise the option immediately, the value of the option is equal to the payoff function $\psi(x)$, and the Black-Scholes inequality holds strictly.

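To summarize, the value of an American put option $V(x, t)$ satisfies the following conditions for all $(x, t) \in \mathbb{R}^+ \times [0, T]$: either

$$\mathcal{L}_{BS} V + \frac{\partial V}{\partial t} = 0 \quad \text{and} \quad V > \psi \quad \text{in } \mathcal{C},$$

or

$$\mathcal{L}_{BS} V + \frac{\partial V}{\partial t} < 0 \quad \text{and} \quad V = \psi \quad \text{in } \mathcal{S}.$$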

If we formally reverse the direction of time by introducing the new unknown function $v(t) = V(T - t)$, which gives a more standard setting, the above conditions become: either

$$\frac{\partial v}{\partial t} = \mathcal{L}_{BS} v \quad \text{and} \quad v > \psi \quad \text{in } \mathcal{C},$$

or

$$\frac{\partial v}{\partial t} > \mathcal{L}_{BS} v \quad \text{and} \quad v = \psi \quad \text{in } \mathcal{S},$$

and both conditions are captured by the single property

$$\Big(\frac{\partial v}{\partial t} - \mathcal{L}_{BS} v\Big) \wedge (v - \psi) = 0,$$

where the notation $\wedge$ denotes the pointwise minimum of the two functions.

Figure 2.1 presents a theoretical sketch of the American put value function. The projections of the continuation and stopping regions onto the value surface are marked as $\mathcal{C}_p$ and $\mathcal{S}_p$ respectively.

2.2 Formulations of the problem

There are various standard mathematical formulations of the American put problem described above; in fact, we have already seen the free boundary problem in the previous subsection. In this subsection, I briefly introduce the remaining formulations, namely the linear order complementarity problem (LOCP), the variational inequality (VI), the least element problem (LE) and finally the abstract linear program (LP). The (LOCP) and its corresponding (LP) formulation outlined below will eventually allow us to compute a numerical approximation to the value function of the American put.

2.2.1 (OCP) and (VI) formulations

Let us first express the pricing of the American put option in a form that encapsulates these complementarity properties, namely the following linear order complementarity problem (LOCP):

Theorem 2.1 The American put value function is the unique solution to the linear order complementarity problem

$$\text{(OCP)} \quad \begin{cases} v(\cdot, 0) = \psi, \\ v \ge \psi, \\ \partial v/\partial t \ge \mathcal{L}_{BS}\, v, \\ (\partial v/\partial t - \mathcal{L}_{BS}\, v) \wedge (v - \psi) = 0 \quad \text{a.e. in } \mathbb{R}^+ \times [0, T]. \end{cases}$$

This (LOCP) formulation can be regarded as the most straightforward one for the pricing problem of an American put option. However, in order to verify the theorem, we still need to introduce another equivalent formulation, namely the variational inequality (VI)$^{\mathrm{i}}$. The equivalence between these two formulations has been extensively studied and proved (for more details, readers may refer to [2]). Hence, the requirement that the differential operator $\mathcal{L}_{BS}$ be coercive$^{\mathrm{ii}}$, which (by the Lions-Stampacchia theorem) guarantees uniqueness of the solution to the corresponding (VI), also applies to the original (LOCP). For the American-style problem it can be shown that $\mathcal{L}_{BS}$ is indeed coercive (see [8]), which completes the verification of the uniqueness of the solution to the (LOCP).

$^{\mathrm{i}}$ The (VI) formulation itself is not among our main interests here, so I omit it and focus on the key results, i.e. the equivalence between (VI) and (LOCP) and the condition for uniqueness of the solution to (VI).

2.2.2 (LE) and (LP) formulations

The main results are that the original linear order complementarity problem for the American put is also equivalent to a least element problem, and hence to an abstract linear program, and that, under the condition that $\mathcal{L}_{BS}$ is a coercive, type Z, temporally homogeneous elliptic differential operator, these three equivalent problems have a unique solution $V$. Since it has been proved that for the Black-Scholes model $\mathcal{L}_{BS}$ is indeed coercive and of type Z (see [8]), this general result establishes the equivalence between the various formulations and furthermore suggests a simple way to solve the equivalent problems numerically: by a suitable discretization, the infinite-dimensional abstract linear program (LP) reduces to an ordinary linear program with solution in $\mathbb{R}^n$. I elaborate on this in the following section.

3 Discretization Schemes

In this section, we consider several numerical solutions of the American vanilla put problem, obtained from (LOCP), from (LP) and from the widely used binomial tree. For the numerical treatment of (LOCP) and (LP), we discretize space and time by standard finite-difference approximations, reducing them to a linear complementarity problem and an ordinary linear program respectively, which can then be solved by well-known algorithms.

3.1 Tree method

3.1.1 Risk neutral valuation

A realistic binomial tree model assumes that stock price movements are composed of a large number of small binomial movements. Each of these small movements corresponds to a very small time interval of length $\Delta t$, and we assume that in each time interval the stock price moves from its initial value $S_0$ to one of two new values, $S_0 u$ and $S_0 d$. In general, the up-movement parameter $u$ should be greater than 1 and the down-movement parameter $d$ less than 1. Here, however, we impose an extra condition on $u$ and $d$, first proposed by Cox, Ross and Rubinstein [6], namely $u = 1/d$. This model is illustrated in Figure 3.1.

$^{\mathrm{ii}}$ An operator $T$ is coercive on a Hilbert space $H$ iff there exists $\alpha \in \mathbb{R}^+$ such that $\langle v, Tv \rangle \ge \alpha \|v\|^2$ for all $v \in H$.

Figure 3.1 Stock price movements in time $\Delta t$ ($S_0$ moves up to $S_0 u$ with probability $p$, or down to $S_0 d$ with probability $1 - p$)

We apply the risk-neutral valuation principle. In the risk-neutral world, the expected return from all traded securities is the risk-free interest rate, and future cash flows can be valued by discounting their expected values at the risk-free interest rate. Mathematically, it follows that

$$S e^{r\Delta t} = p S u + (1 - p) S d,$$

or

$$e^{r\Delta t} = p u + (1 - p) d, \qquad (3.1)$$

where $r$ is the risk-free interest rate and $p$ denotes the corresponding risk-neutral probability.

In order to determine $u$, $d$ and $p$, we still need one more relation between the input parameter $\sigma$,$^{\mathrm{iii}}$ and $u$, $d$ and $p$. The stochastic process assumed for the stock price implies that the variance of the proportional change in the stock price over a small time interval of length $\Delta t$ is $\sigma^2 \Delta t$. Since the variance of a random variable $Q$ is $E(Q^2) - E^2(Q)$, it follows that

$$p u^2 + (1 - p) d^2 - [p u + (1 - p) d]^2 = \sigma^2 \Delta t. \qquad (3.2)$$

Substituting for $p$ from equation (3.1), this reduces to

$$e^{r\Delta t}(u + d) - u d - e^{2r\Delta t} = \sigma^2 \Delta t. \qquad (3.3)$$

$^{\mathrm{iii}}$ $\sigma$ represents the volatility of the stock, which I included uniformly as an input parameter in all the MatLab programs.

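Recall the extra condition imposed on the relation between $u$ and $d$,

$$u = \frac{1}{d}; \qquad (3.4)$$

then equations (3.1), (3.3) and (3.4) imply$^{\mathrm{iv}}$

$$p = \frac{e^{r\Delta t} - d}{u - d}, \qquad u = e^{\sigma\sqrt{\Delta t}}, \qquad d = e^{-\sigma\sqrt{\Delta t}}. \qquad (3.5)$$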
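As a quick numerical check of (3.1)-(3.5), the following Python sketch (Python is used only for illustration; the paper's own programs are MatLab) computes $u$, $d$ and $p$ for $r = 0.05$, $\sigma = 0.2$ and an illustrative step $\Delta t = 1/100$. It confirms that (3.1) holds exactly by construction of $p$, while the variance condition (3.2) holds only up to terms of higher order than $\Delta t$. The function name `crr_parameters` is hypothetical.

```python
import math

def crr_parameters(r, sigma, dt):
    """Up/down factors and risk-neutral probability of eq. (3.5)."""
    u = math.exp(sigma * math.sqrt(dt))
    d = 1.0 / u                                   # condition (3.4): u = 1/d
    p = (math.exp(r * dt) - d) / (u - d)          # solves (3.1) for p
    return u, d, p

r, sigma, dt = 0.05, 0.2, 1.0 / 100               # illustrative values
u, d, p = crr_parameters(r, sigma, dt)

# (3.1): zero up to floating-point rounding
growth_gap = math.exp(r * dt) - (p * u + (1 - p) * d)
# (3.2): left-hand side minus sigma^2 dt, nonzero only at higher order in dt
variance = p * u**2 + (1 - p) * d**2 - (p * u + (1 - p) * d) ** 2
variance_gap = variance - sigma**2 * dt
print(u, d, p, growth_gap, variance_gap)
```

The small nonzero `variance_gap` is exactly the approximation noted for (3.5).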

3.1.2 Pricing backward through the stock tree

We first investigate the generation of a complete tree of stock prices. At time zero, the stock price $S_0$ is known. At time $\Delta t$, according to the binomial mechanism described above, there are two possible stock prices, namely $S_0 u$ and $S_0 d$. Each of these nodes is then treated as a new initial point, so at time $2\Delta t$ three possible stock prices have evolved: $S_0 u^2$, $S_0$ and $S_0 d^2$; and so on. In general, at time $i\Delta t$, $i + 1$ stock prices are considered, given by

$$S_0 u^j d^{i-j}, \qquad j = 0, 1, \ldots, i. \qquad (3.6)$$

Note that the tree recombines, in the sense that an up movement followed by a down movement leads to the same stock price as a down movement followed by an up movement.

The pricing procedure works back through the tree from the end to the beginning, checking at each node whether early exercise is preferable to holding the option for a further time period $\Delta t$. Since we price in a risk-neutral world, this procedure takes, as the value of the American put at the current time and stock price, the greater of the discounted expected value from the later nodes (computed by the risk-neutral valuation principle) and the payoff from immediate exercise. The option values at the final nodes are the same as for the European option, namely $(K - S(T))^+$. Eventually, by working back through all the nodes, the option value at time zero is obtained.

To summarize the approach algebraically: the value of the American put at its expiration date is

$$f_{N,j} = \max(K - S_0 u^j d^{N-j},\; 0), \qquad j = 0, 1, \ldots, N, \qquad (3.7)$$

where $(i, j)$ designates the $j$th node at time $i\Delta t$. The value of the option at node $(i, j)$ can therefore be formulated as

$$f_{i,j} = \max\big(K - S_0 u^j d^{i-j},\; e^{-r\Delta t}[p f_{i+1,j+1} + (1 - p) f_{i+1,j}]\big) \qquad (3.8)$$

for $0 \le i \le N - 1$, $0 \le j \le i$.

$^{\mathrm{iv}}$ The expressions for $u$ and $d$ are only close approximations to the solution of the system (3.1), (3.3) and (3.4), in which terms of higher order than $\Delta t$ are ignored.
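The backward induction (3.6)-(3.8) can be sketched in a few lines of Python. This is an illustrative re-implementation under stated assumptions, not the paper's MatLab code; the function name `american_put_binomial` and the choice $N = 500$ are hypothetical. With the parameters of Section 4.2 and $S_0 = K = 100$, the result should lie slightly above the corresponding European Black-Scholes put value (about 5.57), the difference being the early-exercise premium.

```python
import math

def american_put_binomial(S0, K, r, sigma, T, N):
    """Backward induction through a CRR tree, eqs. (3.5)-(3.8)."""
    dt = T / N
    u = math.exp(sigma * math.sqrt(dt))
    d = 1.0 / u
    p = (math.exp(r * dt) - d) / (u - d)
    disc = math.exp(-r * dt)
    # Terminal payoffs (3.7): node j has price S0 * u**j * d**(N - j)
    f = [max(K - S0 * u**j * d**(N - j), 0.0) for j in range(N + 1)]
    # Work back from time step N-1 to 0, applying (3.8) at every node
    for i in range(N - 1, -1, -1):
        f = [max(K - S0 * u**j * d**(i - j),                     # exercise now
                 disc * (p * f[j + 1] + (1 - p) * f[j]))        # continue
             for j in range(i + 1)]
    return f[0]

price = american_put_binomial(100.0, 100.0, 0.05, 0.2, 1.0, 500)
print(price)
```

Each backward step shortens the list by one node, mirroring the recombining tree; deep in the money (e.g. $S_0 = 50$) the function returns the immediate-exercise value $K - S_0$.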

3.2 Finite difference approximation

Before we apply the finite-difference discretization scheme to (LOCP) and (LP), it is advantageous to adopt the usual log-transformation of the stock price $S$, i.e. we define a new function by $u(t, \xi) = v(t, \exp(\xi))$. Under this transformation, the Black-Scholes PDE for the American put reduces to $\partial u/\partial t = \mathcal{L} u$, where $\mathcal{L}$ is the constant-coefficient elliptic operator

$$\mathcal{L} = \frac{1}{2}\sigma^2 \frac{\partial^2}{\partial \xi^2} + \Big(r - \frac{1}{2}\sigma^2\Big)\frac{\partial}{\partial \xi} - r, \qquad (3.9)$$

which, in contrast to the original $\mathcal{L}_{BS}$, has no state-dependent coefficients, and $u$ now refers to the option value as a function of $\xi$. Correspondingly, the payoff function becomes $\psi(\xi) = (K - e^{\xi})^+$, and the continuation and stopping regions $\mathcal{C}$ and $\mathcal{S}$ are now defined with respect to the new variable $\xi$.

3.2.1 Implicit schemes

Discretization scheme

As a first approximation, let us restrict the domain of the value function $\mathbb{R} \times [0, T]$ to a finite region $[L, U] \times [0, T]$ for any $L < \log K < U$; this is called the localized version of the value function. To avoid unnecessary inaccuracy arising from the approximation at the boundary points, and hence its bad influence on the interior points, we set the value function on the boundaries to $u(\cdot, L) = \psi(L)$ and $u(\cdot, U) = \psi(U)$. It can be shown that, as $L \to -\infty$ and $U \to \infty$, the solution to the localized (LOCP) and (LP), i.e. the localized function, tends uniformly to the exact solution of (LOCP) and (LP), which is the same American put value function on the whole domain. This result was first demonstrated by Jaillet et al. (1990) for the equivalent localized variational inequality.

As usual, we discretize the localized (LOCP) by approximating the value function by a piecewise constant function, constant on rectangular intervals around the points of a regular mesh on the domain $[L, U] \times [0, T]$. To keep the notation simple, we introduce some shorthand: first write $u^m_i$ for the value of the function at the mesh point $(i, m)$, defined by

$$u^m_i = u(m\Delta t,\; L + i\Delta\xi), \qquad (3.10)$$

where $m \in \{0, 1, \ldots, M\} =: \mathcal{M}$ and $i \in \{0, 1, \ldots, I\} =: \mathcal{I}$; then write $\psi_i = \psi(L + i\Delta\xi)$ for the terminal payoff at each space point. Corresponding to the initialization made previously, the boundary values are $u^m_0 = \psi_0$ and $u^m_I = \psi_I$, and since $m$ is a backward time index, $u^0_i = \psi_i$.

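Now we are ready to translate the partial derivatives appearing in $\mathcal{L}$ into their discrete analogues using finite-difference approximations. We estimate the partial derivatives of the value function at a point indexed by $(i, m)$ in the interior of the domain $\mathcal{I} \times \mathcal{M}$ by

$$\frac{\partial u}{\partial \xi} \approx \theta\,\frac{u^m_{i+1} - u^m_{i-1}}{2\Delta\xi} + (1 - \theta)\,\frac{u^{m-1}_{i+1} - u^{m-1}_{i-1}}{2\Delta\xi},$$
$$\frac{\partial^2 u}{\partial \xi^2} \approx \theta\,\frac{u^m_{i+1} - 2u^m_i + u^m_{i-1}}{(\Delta\xi)^2} + (1 - \theta)\,\frac{u^{m-1}_{i+1} - 2u^{m-1}_i + u^{m-1}_{i-1}}{(\Delta\xi)^2}, \qquad (3.11)$$
$$\frac{\partial u}{\partial t} \approx \frac{u^m_i - u^{m-1}_i}{\Delta t},$$

for $\theta \in [0, 1]$. The cases $\theta = 0$, $\theta = 1/2$ and $\theta = 1$ correspond to the explicit, Crank-Nicolson and implicit$^{\mathrm{v}}$ discretization schemes respectively, all of which are second-order accurate in $\Delta\xi$ and first-order accurate in $\Delta t$, except for $\theta = 1/2$, which gives second-order accuracy in $\Delta t$.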

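Substituting these discrete forms for their counterparts in (LOCP) gives the discrete order complementarity problem (DOCP):

$$\begin{cases} u^m_i \ge \psi_i, \quad u^0_i = \psi_i, \quad u^m_I = 0, \quad u^m_0 = \psi_0, \\ a u^m_{i-1} + b u^m_i + c u^m_{i+1} + d u^{m-1}_{i-1} + e u^{m-1}_i + f u^{m-1}_{i+1} \ge 0, \\ \big(a u^m_{i-1} + b u^m_i + c u^m_{i+1} + d u^{m-1}_{i-1} + e u^{m-1}_i + f u^{m-1}_{i+1}\big) \wedge \big(u^m_i - \psi_i\big) = 0, \\ \qquad i \in \mathcal{I} \setminus \{0, I\}, \quad m \in \mathcal{M} \setminus \{0\}, \end{cases} \qquad (3.12)$$

where

$$\begin{aligned} a &:= -\theta\Big(\frac{\sigma^2\Delta t}{2(\Delta\xi)^2} - \frac{(r - \sigma^2/2)\Delta t}{2\Delta\xi}\Big), & d &:= -(1 - \theta)\Big(\frac{\sigma^2\Delta t}{2(\Delta\xi)^2} - \frac{(r - \sigma^2/2)\Delta t}{2\Delta\xi}\Big), \\ b &:= 1 + r\Delta t + \theta\,\frac{\sigma^2\Delta t}{(\Delta\xi)^2}, & e &:= (1 - \theta)\,\frac{\sigma^2\Delta t}{(\Delta\xi)^2} - 1, \\ c &:= -\theta\Big(\frac{\sigma^2\Delta t}{2(\Delta\xi)^2} + \frac{(r - \sigma^2/2)\Delta t}{2\Delta\xi}\Big), & f &:= -(1 - \theta)\Big(\frac{\sigma^2\Delta t}{2(\Delta\xi)^2} + \frac{(r - \sigma^2/2)\Delta t}{2\Delta\xi}\Big). \end{aligned} \qquad (3.13)$$

$^{\mathrm{v}}$ We distinguish between the fully implicit method, which has $\theta = 1$, and general implicit methods, which have $\theta > 0$.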

For the next step, let us write the element-wise complementarity condition (3.12) in matrix form by collapsing the space and time indices into vectors. Define

$$u^m := \begin{pmatrix} u^m_1 \\ \vdots \\ u^m_{I-1} \end{pmatrix}, \qquad \psi := \begin{pmatrix} \psi_1 \\ \vdots \\ \psi_{I-1} \end{pmatrix}, \qquad \beta := \begin{pmatrix} -(a + d)\psi_0 \\ 0 \\ \vdots \\ 0 \end{pmatrix}. \qquad (3.14)$$

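Then, substituting $u^m_0 = \psi_0$ and $u^m_I = 0$ into equation (3.12), the complementarity condition becomes

$$\begin{cases} A u^m + B u^{m-1} \ge \beta, \\ u^m \ge \psi, \\ (u^m - \psi) \wedge (A u^m + B u^{m-1} - \beta) = 0, \end{cases} \qquad (3.15)$$

with the boundary values $u^m_I = 0$, $u^m_0 = \psi_0$ and $u^0 = \psi$, where $A$ and $B$ are the $(I-1)$-square tridiagonal matrices given by

$$A = \begin{pmatrix} b & c & & & \\ a & b & c & & \\ & \ddots & \ddots & \ddots & \\ & & a & b & c \\ & & & a & b \end{pmatrix}, \qquad B = \begin{pmatrix} e & f & & & \\ d & e & f & & \\ & \ddots & \ddots & \ddots & \\ & & d & e & f \\ & & & d & e \end{pmatrix}. \qquad (3.16)$$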

Since these boundary conditions have been substituted into (3.15) and hence do not appear in its solution, they have to be specified separately.
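For concreteness, here is a small Python sketch that assembles the coefficients (3.13), the tridiagonal matrices $A$ and $B$ of (3.16) and the boundary vector of (3.14), taken here as $(-(a + d)\psi_0, 0, \ldots, 0)^\top$ so that the step-$m$ inequality reads $Au^m + Bu^{m-1} \ge \beta$. Dense lists are used only for readability, and the helper names and the illustrative grid ($I = 50$, $M = 100$, $\theta = 1$) are assumptions. A useful sanity check: because $u \equiv 1$ gives $\mathcal{L}u = -r$, the six coefficients must sum to $r\Delta t$ for every $\theta$.

```python
import math

def theta_coefficients(r, sigma, dt, dxi, theta):
    """Coefficients a, b, c, d, e, f of the theta-scheme, eq. (3.13)."""
    diff = sigma**2 * dt / (2 * dxi**2)           # half of sigma^2 dt / dxi^2
    conv = (r - sigma**2 / 2) * dt / (2 * dxi)    # central first-derivative term
    a = -theta * (diff - conv)
    b = 1 + r * dt + 2 * theta * diff
    c = -theta * (diff + conv)
    d = -(1 - theta) * (diff - conv)
    e = 2 * (1 - theta) * diff - 1
    f = -(1 - theta) * (diff + conv)
    return a, b, c, d, e, f

def tridiagonal(n, lower, diag, upper):
    """Dense n x n matrix with constant sub-, main and super-diagonals, eq. (3.16)."""
    M = [[0.0] * n for _ in range(n)]
    for i in range(n):
        M[i][i] = diag
        if i > 0:
            M[i][i - 1] = lower
        if i < n - 1:
            M[i][i + 1] = upper
    return M

# Illustrative grid: Section 4.2 parameters, I = 50 space steps, M = 100 time steps
K, r, sigma, T = 100.0, 0.05, 0.2, 1.0
L, U, I, M_steps, theta = math.log(50), math.log(300), 50, 100, 1.0
dxi, dt = (U - L) / I, T / M_steps
a, b, c, d, e, f = theta_coefficients(r, sigma, dt, dxi, theta)
A = tridiagonal(I - 1, a, b, c)
B = tridiagonal(I - 1, d, e, f)
psi0 = max(K - math.exp(L), 0.0)
beta = [-(a + d) * psi0] + [0.0] * (I - 2)       # boundary vector of (3.14)
```

Setting `theta=0.0` gives $a = c = 0$ and $b = 1 + r\Delta t$, i.e. the diagonal matrix used by the explicit method.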

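The complementarity problem in equation (3.15) is usually referred to as a discrete (LOCP): since $u^{m-1}$ is known at each time step, all the solutions $u^m$, $m = 1, \ldots, M$, can be obtained by iterating through the time indices. Correspondingly, the global linear complementarity problem requires stacking all the solution vectors $u^m$, $m = 1, \ldots, M$, into one even bigger vector. If we denote

$$\mathbf{u} = \begin{pmatrix} u^1 \\ \vdots \\ u^M \end{pmatrix}, \qquad (3.17)$$

we can express the global discrete (LOCP) as

$$\begin{cases} C\mathbf{u} \ge \boldsymbol{\gamma}, \\ \mathbf{u} \ge \boldsymbol{\Psi}, \\ (\mathbf{u} - \boldsymbol{\Psi}) \wedge (C\mathbf{u} - \boldsymbol{\gamma}) = 0, \end{cases} \qquad (3.18)$$

where $\boldsymbol{\gamma}$, $C$ and $\boldsymbol{\Psi}$ are given by

$$\boldsymbol{\gamma} = \begin{pmatrix} \beta - B\psi \\ \beta \\ \vdots \\ \beta \end{pmatrix}, \qquad C = \begin{pmatrix} A & & & \\ B & A & & \\ & \ddots & \ddots & \\ & & B & A \end{pmatrix}, \qquad \boldsymbol{\Psi} = \begin{pmatrix} \psi \\ \vdots \\ \psi \end{pmatrix}. \qquad (3.19)$$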

However, before we write down the well-posed equivalent linear programs for both the step-wise and the global cases, we still have to verify that the equivalence conditions, namely the type Z property and coercivity of the differential operator, indeed hold in the matrix sense. Considering the discrete complementarity problem (3.15), since $u^{m-1}$ is known at step $m$, the discretized operator $\mathcal{L}$ is represented in finite dimensions by the matrix $A$, so we require that $A$ be of type Z and coercive. It is simple to show that a matrix is of type Z iff it has nonpositive off-diagonal coefficients, which is the classic definition of a Z-matrix. Here $A$ is of type Z iff $a \le 0$ and $c \le 0$, which (for $\theta > 0$) imposes

$$\Big|r - \frac{\sigma^2}{2}\Big| \le \frac{\sigma^2}{\Delta\xi}. \qquad (3.20)$$
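Because $\Delta\xi = (U - L)/I$, condition (3.20) amounts to a lower bound on the number of space steps $I$. The Python sketch below (hypothetical helper names) checks the condition and computes the smallest admissible $I$ for the parameters of Section 4.2 ($r = 0.05$, $\sigma = 0.2$ on $[\log 50, \log 300]$); it gives $I = 2$, illustrating how small the critical value is in practice.

```python
import math

def is_type_z(r, sigma, dxi):
    """Condition (3.20): a <= 0 and c <= 0 iff |r - sigma^2/2| <= sigma^2/dxi."""
    return abs(r - sigma**2 / 2) <= sigma**2 / dxi

def minimal_space_steps(r, sigma, L, U):
    """Smallest I >= 1 such that dxi = (U - L) / I satisfies (3.20)."""
    return max(1, math.ceil(abs(r - sigma**2 / 2) * (U - L) / sigma**2))

L, U = math.log(50), math.log(300)
I_min = minimal_space_steps(0.05, 0.2, L, U)
print(I_min)
```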

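In fact, as long as we take $I$ large enough, this condition holds for all parameter values, and for realistic parameter values the critical value of $I$ is very small. It is also simple to show that under this condition $A$ is coercive [7]. We now give the corresponding linear programs in both the global and the step-wise senses. For any fixed $c_1 > 0$ in $\mathbb{R}^{(I-1)M}$ and $c_2 > 0$ in $\mathbb{R}^{I-1}$ [4],

$$\text{(OLP)} \quad \begin{cases} \min\; c_1^{\top}\mathbf{u} \\ \text{s.t. } C\mathbf{u} \ge \boldsymbol{\gamma}, \; \mathbf{u} \ge \boldsymbol{\Psi}, \end{cases} \qquad \begin{cases} \min\; c_2^{\top} u^m \\ \text{s.t. } A u^m \ge \beta - B u^{m-1}, \; u^m \ge \psi, \end{cases} \qquad (3.21)$$

with the boundary values $u^m_I = 0$, $u^m_0 = \psi_0$ and $u^0 = \psi$.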

Jaillet et al. have shown that as $M, I \to \infty$ (and, in case $\theta < 1$, such that the mesh ratio $\lambda := \Delta t/(\Delta\xi)^2 \to 0$), the solution of the equivalent discretized localized variational inequality converges to the solution of the localized variational inequality, which, as already mentioned, itself converges uniformly, as $L \to -\infty$ and $U \to \infty$, to the American put value function on the whole domain. Owing to the (conditional) equivalence of these formulations, the same convergence properties also hold for (DOCP) and (OLP).

Although unconditional convergence and stability for the case $1/2 \le \theta \le 1$ has not yet been proven for the inequality, it is well known for the case of equations; i.e. it is routinely assumed that no condition on the time step is needed to guarantee stability of the (fully) implicit scheme. For $0 \le \theta < 1/2$, the scheme converges if and only if

$$0 < \lambda \le \frac{1}{2(1 - 2\theta)}. \qquad (3.22)$$
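Condition (3.22), as printed (with the mesh ratio $\lambda = \Delta t/(\Delta\xi)^2$ and no volatility factor), can be encoded as a small predicate. Treating $\theta \ge 1/2$ as unconditionally stable follows the routine assumption stated above rather than a proven result for the inequality; the function name is hypothetical.

```python
def theta_scheme_stable(lam, theta):
    """Stability/convergence condition (3.22) for the theta-scheme, lam = dt / dxi**2."""
    if not 0.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [0, 1]")
    if theta >= 0.5:
        return True   # routinely assumed unconditionally stable (not proven for the LCP)
    return 0.0 < lam <= 1.0 / (2.0 * (1.0 - 2.0 * theta))

# Explicit scheme (theta = 0): the mesh ratio must not exceed 1/2
print(theta_scheme_stable(0.4, 0.0), theta_scheme_stable(0.6, 0.0))
```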

Solving the discrete problem

There are two main approaches to solving the discrete order complementarity problem (3.15) at each time step: the iterative Projected Successive OverRelaxation (PSOR) algorithm [9] and the direct Parametric Principal Pivoting (PPP) algorithm [3]. Starting from an initial guess vector $x^0$, the (PSOR) method updates the current vector at each iteration until a prespecified tolerance condition is met. It can be shown that $x^k \to x$ as $k \to \infty$, where $x$ is the solution of the problem at that time step, and that convergence can be accelerated by a proper choice of the constant $\omega$, called the relaxation parameter, within the interval $(1, 2)$ (the best choice depends on the coefficient matrix at that time step). Since $\Delta t$ is assumed small, the solution of one subproblem can be expected to differ from that of the preceding subproblem in only a few basic variables; hence, if we hot-start the (PSOR) solver from the previous time step's solution, this warm-started variant should outperform a cold start.
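The (PSOR) iteration for one time step of (3.15) can be sketched as follows, with $q = \beta - Bu^{m-1}$ and the tridiagonal $A$ of (3.16) stored as its three constant diagonals. This is a minimal Python sketch with a hypothetical function name, not the paper's MatLab code; it uses $\omega = 1.5$, a tolerance of $10^{-8}$ on $\|x^{k+1} - x^k\|$ and a warm start, as described in Section 4.1. Each component is first given its Gauss-Seidel value, then over-relaxed by $\omega$, then projected onto $x_i \ge \psi_i$.

```python
def psor_tridiagonal(a, b, c, q, psi, x0, omega=1.5, tol=1e-8, max_iter=10_000):
    """Projected SOR for: A x >= q, x >= psi, (x - psi) . (A x - q) = 0,
    where A has constant sub-diagonal a, diagonal b and super-diagonal c."""
    x = list(x0)                      # warm start, e.g. the previous time step's solution
    n = len(x)
    for _ in range(max_iter):
        change = 0.0
        for i in range(n):
            s = q[i]
            if i > 0:
                s -= a * x[i - 1]     # neighbour already updated in this sweep
            if i < n - 1:
                s -= c * x[i + 1]     # neighbour from the previous sweep
            gauss_seidel = s / b
            new = max(psi[i], x[i] + omega * (gauss_seidel - x[i]))  # relax, then project
            change += (new - x[i]) ** 2
            x[i] = new
        if change ** 0.5 < tol:       # stopping rule ||x^{k+1} - x^k|| < tol
            break
    return x

# Small example: A = tridiag(-1, 4, -1) with the obstacle active at both ends
x = psor_tridiagonal(-1.0, 4.0, -1.0, q=[1.0, 1.0, 1.0], psi=[0.5, 0.0, 0.5], x0=[0.5, 0.0, 0.5])
print(x)
```

In a full pricer this routine would be called once per time step, passing the previous step's solution as `x0`.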

In contrast, the backbone of the (PPP) algorithm [3] is the parametric (LOCP)$(q + \lambda d, M)$ with a specially chosen vector $d > 0$ (called an n-step vector). The parameter $\lambda$ is initially set at a sufficiently large positive value so that $x = 0$ is a solution of the (LOCP)$(q + \lambda d, M)$. The goal is to decrease $\lambda$ until it reaches zero, at which point a solution of the original (LOCP)$(q, M)$ is obtained. The decrease of $\lambda$ is accomplished by parametric principal pivoting, and it can be shown that the (PPP) method with a predetermined n-step vector $d$ computes a solution of the (LOCP)$(q, M)$ in at most $n$ iterations.

For solving the equivalent discrete version of the linear program, we concentrate on the large-scale algorithm based on LIPSOL (Linear Interior Point Solver), a variant of Mehrotra's predictor-corrector algorithm, which is a primal-dual interior-point method.

3.2.2 Explicit method

The finite-difference discretization scheme formed earlier is quite general. In fact, since $\theta$ can be chosen as any value in the closed interval $[0, 1]$, the algorithms for solving the discrete problem described above are theoretically applicable to the implicit scheme ($\theta = 1$), the Crank-Nicolson scheme ($\theta = 1/2$) and the explicit scheme ($\theta = 0$). For the explicit method, however, each time step's problem can be written in a very simple way: the coefficient matrix $A$ defined by equation (3.16) reduces to the $(I-1)$-square diagonal matrix $\mathrm{diag}(1 + r\Delta t)$, so that the $m$th subproblems of the discrete (LOCP) and (OLP), given by (3.15) and (3.21), both reduce to

$$u^m = \max\Big\{\psi,\; \frac{1}{1 + r\Delta t}\big(\beta - B u^{m-1}\big)\Big\}, \qquad (3.23)$$

where the maximum is taken componentwise.

This is clearly a very rapid calculation for each time step, since the only significant computation is a single matrix-vector multiplication. However, the inherent stability constraint of the explicit scheme, given by equation (3.22) with $\theta = 0$, implies that the number of time steps must always be of the order of the square of the number of space steps in order to maintain stability, i.e.

$$\Delta t \propto (\Delta x)^2. \qquad (3.24)$$

Therefore, if, for instance, the space step is halved in order to improve the accuracy of the approximation in the $x$-direction, then the number of time steps must be quadrupled, so that the computation time is multiplied by four, in addition to the effect of working with larger matrices. This big disadvantage can make the explicit scheme computationally very demanding.
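The explicit update (3.23) can be sketched directly on the log-price grid. The Python sketch below (hypothetical function names, not the paper's MatLab program) applies one componentwise maximum per time step, keeps the boundary values $u_0 = \psi_0$ and $u_I = \psi_I$ fixed, and then interpolates linearly in $\xi$ to read off the value at $S_0 = 100$. It assumes the Section 4.2 parameters with Ns = 200 and Nt = 1000, which is above the explicit-method bound $Nt_{\min} \approx 498$ given by (4.3); the result should come out close to the commonly quoted benchmark of about 6.09 for this contract.

```python
import math

def explicit_american_put(K, r, sigma, T, L, U, Ns, Nt):
    """Explicit (theta = 0) scheme in xi = log S; each step applies eq. (3.23)."""
    dxi, dt = (U - L) / Ns, T / Nt
    xi = [L + i * dxi for i in range(Ns + 1)]
    psi = [max(K - math.exp(x), 0.0) for x in xi]
    diff = sigma**2 * dt / (2 * dxi**2)
    conv = (r - sigma**2 / 2) * dt / (2 * dxi)
    d, e, f = -(diff - conv), 2 * diff - 1, -(diff + conv)   # (3.13) with theta = 0
    u = psi[:]                                   # u^0 = psi
    for _ in range(Nt):
        new = u[:]                               # keeps u_0 = psi_0 and u_I = psi_I
        for i in range(1, Ns):
            cont = -(d * u[i - 1] + e * u[i] + f * u[i + 1]) / (1 + r * dt)
            new[i] = max(psi[i], cont)           # componentwise max of (3.23)
        u = new
    return xi, u, psi

def interpolate(xs, ys, x):
    """Linear interpolation on a uniform grid xs."""
    i = min(int((x - xs[0]) / (xs[1] - xs[0])), len(xs) - 2)
    w = (x - xs[i]) / (xs[i + 1] - xs[i])
    return (1 - w) * ys[i] + w * ys[i + 1]

xi, u, psi = explicit_american_put(100.0, 0.05, 0.2, 1.0, math.log(50), math.log(300), Ns=200, Nt=1000)
price = interpolate(xi, u, math.log(100.0))
print(price)
```

Halving the space step in this sketch would force roughly four times as many time steps, which is exactly the cost argument made above.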

4 Numerical Tests

In this section, I report computational results from empirical tests of various algorithms based on the finite-difference method (including both the explicit and the (fully) implicit schemes) and on the tree method. Five algorithms, with their corresponding MatLab codes, are of particular interest, namely the tree, explicit, (PSOR), (PPP) and (OLP) algorithms for the American put. All the program codes employed here can be found in the Appendix. Our main purpose is to reveal the connection between timing and accuracy for each algorithm, and furthermore to determine the optimal relationship between the number of time steps and the number of space steps.

This section is organized as follows. The first subsection presents the computational details underlying all the empirical experiments. The second subsection characterizes, for each algorithm, both the cputime and the max |error| as functions of two technical parameters, Ns and Nt, denoting the number of space steps and time steps respectively, and furthermore finds the optimal relation between Ns and Nt; in other words, given a certain cputime, say cputime = c, it determines which Ns and Nt should be adopted in order to minimize the approximation error, and likewise for a given accuracy level. The third subsection compares the algorithms based on the results of the second subsection. The section ends with a plot of the solution surface based on the exact solution, which is also used to determine the error of each algorithm.

4.1 Computational details

All results in the sequel are computed in double precision on an Intel Pentium 4-M 2.2 GHz computer with 256 megabytes (MB) of RAM, running Microsoft Windows XP. All the program codes were written and executed in MatLab (Release 14). In the (PSOR) algorithm, the relaxation parameter $\omega$ is set to 1.5 (the optimal value of $\omega$ is not known analytically, but has been found empirically to be close to 1.5 for a range of problems), the convergence tolerance is $\varepsilon = 10^{-8}$, i.e. the tolerance condition is $\|x^{k+1} - x^k\| < \varepsilon$, and the starting vector is chosen to be the previous time step's solution vector.

For the (PPP) algorithm, the n-step vector is determined by [5]

$$p = \Big(\frac{A + \hat{A}}{2}\Big) d \qquad (4.1)$$

for any vector $d$ such that $\hat{A} d > 0$, where $\hat{A}$ denotes the comparison matrix of $A$, defined by

$$\hat{A}_{ij} := \begin{cases} |A_{ij}| & \text{if } i = j, \\ -|A_{ij}| & \text{if } i \ne j. \end{cases} \qquad (4.2)$$

With this $p$, the (PPP) algorithm computes the unique solution of the (LOCP) in at most $n$ pivots.
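Formulas (4.1) and (4.2) are easy to evaluate directly. The Python sketch below (hypothetical names, dense lists for clarity) builds the comparison matrix and the vector $p = \frac{1}{2}(A + \hat{A})d$ in the form given in (4.1). For a Z-matrix with positive diagonal, such as the $A$ of (3.16) when $a \le 0$ and $c \le 0$, $\hat{A} = A$, so the formula reduces to $p = Ad$.

```python
def comparison_matrix(A):
    """Comparison matrix (4.2): |A_ii| on the diagonal, -|A_ij| off it."""
    n = len(A)
    return [[abs(A[i][j]) if i == j else -abs(A[i][j]) for j in range(n)] for i in range(n)]

def matvec(M, x):
    """Dense matrix-vector product."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def n_step_vector(A, d):
    """p = ((A + comparison(A)) / 2) d as in (4.1); requires comparison(A) d > 0."""
    Ac = comparison_matrix(A)
    if not all(v > 0 for v in matvec(Ac, d)):
        raise ValueError("need comparison(A) d > 0")
    n = len(A)
    H = [[(A[i][j] + Ac[i][j]) / 2 for j in range(n)] for i in range(n)]
    return matvec(H, d)

A = [[4.0, -1.0, 0.0], [-1.0, 4.0, -1.0], [0.0, -1.0, 4.0]]   # Z-matrix: p = A d
print(n_step_vector(A, [1.0, 1.0, 1.0]))
```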

For the explicit method, a stable discretization must be used. The stability constraint (3.24) implies that the number of time steps must satisfy $Nt \ge Nt_{\min}$, where

$$Nt_{\min} := \frac{\sigma^2 T (Ns)^2}{(U - L)^2}. \qquad (4.3)$$

4.2 The determination of optimal curve

Experiments are set up as follows. Unless otherwise stated, all problems are solved on the truncated log-stock interval $[\log(50), \log(300)]$ with maturity $T = 1$ (1 year), strike price $K = 100$, riskless interest rate $r = 0.05$ (per annum) and volatility $\sigma = 0.2$ (per annum). The exact solution (i.e. the most reliable reference solution) is calculated by the (PPP) algorithm under the (fully) implicit scheme ($\theta = 1$) with the numbers of time steps and space steps set to 100 and 400 respectively. For each pair of time steps and space steps, 5 cputime samples (measured in seconds) were taken, and the resulting sample mean was assigned to that pair.

4.2.1 Timings of numerical algorithms

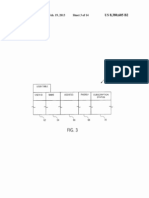

Table 4.1 first gives the times for the vanilla put for three algorithms, namely (PSOR), (PPP) and (OLP), and the corresponding plots of each method's time as a function of the number of time steps Nt are given in Figure 4.1.

Table 4.1 Times (secs) for Varying Time Steps (space steps Ns = 50)

Time steps Nt     30     40     50     60     70     80     90    100    120    150
PSOR            0.96   1.23   1.52   1.82   2.11   2.41   2.74   3.09   3.74   4.79
PPP             2.55   3.14   3.91   4.69   5.51   6.32   6.99   7.80   9.45  12.50
OLP             2.89   3.36   4.14   4.91   5.67   6.53   7.35   7.83   9.31  11.49

Figure 4.1 PSOR, PPP and OLP times for varying time steps (panel title: PSOR, PPP and OLP times (Ns=50); x-axis: time steps; y-axis: times (secs))

Clearly, all three algorithms show a linear dependence on the number of time steps, so each time step takes approximately the same amount of time. The reason is straightforward: since the number of space steps is kept constant and $\Delta t$ is small, the size (determined by the size of the constraint matrix $A$) and the difficulty of each subproblem are very close or even identical, hence the time spent solving the whole problem can be expected to be proportional to the number of time steps. However, the slopes of the three lines differ: comparatively, the (PSOR) line is the flattest, indicating that it is the least time-demanding of the three. Nevertheless, the (PSOR) time fails to show any noticeably sub-linear dependence on the number of time steps as it increases, which suggests that the hot-start design does not work as well as expected earlier (at least for Ns = 50): the previous time step's solution, used as the starting point for the iteration, is apparently not much closer to the current time step's solution, even for smaller $\Delta t$.

Empirically, the times of the three algorithms as functions of the number of time steps Nt can be estimated by

$$
\begin{aligned}
\text{Time}_{\text{PSOR}} &= -0.077695 + 0.031868\,N_t,\\
\text{Time}_{\text{PPP}} &= -0.18182 + 0.081862\,N_t,\\
\text{Time}_{\text{OLP}} &= 0.57579 + 0.073072\,N_t,
\end{aligned}
\qquad N_s = 50. \tag{4.4}
$$
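The linear models in (4.4) are ordinary least-squares fits of time against Nt. The minimal Python sketch below re-runs that fit on the rounded sample means of Table 4.1; the paper's own experiments were run in MATLAB. Because the table entries are rounded to two decimals, the recovered coefficients should agree with (4.4) only to about three significant figures. The helper name `ols_line` is illustrative, not taken from the paper.

```python
# Ordinary least-squares fit time = intercept + slope * Nt on Table 4.1 (Ns = 50).
NT = [30, 40, 50, 60, 70, 80, 90, 100, 120, 150]
TIMES = {
    "PSOR": [0.96, 1.23, 1.52, 1.82, 2.11, 2.41, 2.74, 3.09, 3.74, 4.79],
    "PPP": [2.55, 3.14, 3.91, 4.69, 5.51, 6.32, 6.99, 7.80, 9.45, 12.50],
    "OLP": [2.89, 3.36, 4.14, 4.91, 5.67, 6.53, 7.35, 7.83, 9.31, 11.49],
}


def ols_line(x, y):
    """Return (intercept, slope) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


fits = {name: ols_line(NT, t) for name, t in TIMES.items()}
for name, (c, s) in fits.items():
    print(f"Time_{name} = {c:+.4f} + {s:.6f} Nt")
```

The PSOR and PPP intercepts come out negative (about −0.078 and −0.18), while the OLP intercept is positive (about 0.58).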

For the explicit algorithm, much less effort is involved per time step in solving each subproblem. As mentioned previously, the only significant calculation in the explicit method is a matrix multiplication. It is therefore reasonable to use a much larger number of time steps. Stability requires this as well: the explicit scheme is only conditionally stable, so the time step must be small relative to the space step. Some results are collected in Table 4.2 and shown in Figure 4.2.

Table 4.2 Times (secs) for Explicit (space steps Ns = 200)

Time steps Nt   Time     Time steps Nt   Time
600             0.0742   1300            0.1502
700             0.08     1400            0.1582
800             0.0962   1500            0.1722
900             0.1042   1600            0.1802
1000            0.1122   1800            0.2024
1100            0.1282   2000            0.2262
1200            0.1382   2500            0.2804

Figure 4.2 Explicit times [plot "Explicit times (Ns=200)": times (secs) against time steps]

Similarly, Table 4.2 and Figure 4.2 both show a linear dependence of the explicit method's time on the number of time steps. Based on these 14 observations, the empirical function for the Explicit time can be estimated by standard ordinary least squares (OLS):

$$
\text{Time}_{\text{Exp}} = 0.006148 + 0.00010962\,N_t, \qquad N_s = 200. \tag{4.5}
$$

In contrast, the samples taken for the tree method clearly show a power-function dependence on the number of time steps rather than a linear one. The reason may lie in the inverted structure of the tree scheme. Instead of solving Nt subproblems backwards along the time axis, the tree method first generates a stock tree for each single space point. It then prices the option at that space point by discounting backwards through the stock tree. If the tree is recombining, a tree with Nt steps has (Nt + 1)(Nt + 2)/2 nodes, so the work per space point grows roughly like Nt². Consequently, when we vary the number of time steps while keeping the number of space steps constant, the size and hence the cost of the computation for each space grid point change considerably. Conversely, if we vary the number of space steps instead, we can probably expect a linear dependence of time on the number of space steps. To linearize the function, I log-transformed both the number of time steps and the time of each sample before running the regression. Table 4.3 and Figure 4.3 give the outcomes for the tree method, and the resulting empirical function can be expressed as

$$
\text{Time}_{\text{Tree}} = e^{-10.621}\,N_t^{2.0911}, \qquad N_s = 50. \tag{4.6}
$$

Table 4.3 Times (secs) for Tree method (space steps Ns = 50)

Nt      30     40     50     60     70     80     90     100    120    150
Times   0.04   0.05   0.08   0.11   0.16   0.21   0.28   0.35   0.55   1.16

Figure 4.3 Times of Tree method [plot of the times in Table 4.3 against the number of time steps, Ns = 50]
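The log-transformation described above turns the power law Time = e^c Nt^b into a straight line in (ln Nt, ln Time). The minimal Python sketch below runs that regression on the rounded times of Table 4.3. The smallest times carry only one or two significant digits, so the result should land close to, but not exactly on, the coefficients in (4.6). `fit_power_law` is a hypothetical helper name.

```python
import math

# Tree-method times from Table 4.3 (Ns = 50).
NT = [30, 40, 50, 60, 70, 80, 90, 100, 120, 150]
TREE_TIMES = [0.04, 0.05, 0.08, 0.11, 0.16, 0.21, 0.28, 0.35, 0.55, 1.16]


def fit_power_law(x, y):
    """Fit y = exp(ln_a) * x**b by least squares on (ln x, ln y); return (ln_a, b)."""
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    b = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum((u - mx) ** 2 for u in lx)
    return my - b * mx, b


ln_a, b = fit_power_law(NT, TREE_TIMES)
print(f"Time_Tree = exp({ln_a:.3f}) * Nt^{b:.4f}")
```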


After modeling the dependence of time on the number of time steps for each algorithm, our next aim is to determine the dependence of time on the number of space steps for a fixed Nt. All the estimation procedures are similar. Let us first check our earlier conjecture that the tree method's time depends linearly on Ns. The results are summarized in Table 4.4 and Figure 4.4.

Table 4.4 Times (secs) for Tree method (time steps Nt = 50)

Ns      30     40     50     60     70     80     90     100    120    150
Times   0.05   0.06   0.08   0.09   0.11   0.12   0.14   0.15   0.18   0.23

Figure 4.4 Times of Tree method [plot "Tree times (Nt=50)": times (secs) against space steps]

As the plot shows, the 10 sample points lie very close to the regression line, which supports our earlier conjecture. The estimated function is

$$
\text{Time}_{\text{Tree}} = 0.0044 + 0.0015\,N_s, \qquad N_t = 50. \tag{4.7}
$$

Finally, I give the mathematical expressions and plots of the time functions for the remaining 4 algorithms.

The first panel of Figure 4.5 compares the timing behavior of the first three algorithms. OLP has the flattest curve, indicating the least time required per space step, so OLP is favored for larger Ns. For smaller Ns, PSOR is faster, and at the smallest values of Ns PPP is faster still. The explicit method requires much less time than any of the first three algorithms to reach a solution, even with Nt kept at 1000. Because of this, constant operating overhead in MATLAB, such as fetching data and plotting graphs, now becomes far more significant and can no longer be ignored; for PSOR, PPP and OLP it is negligible. A revised timing function for the explicit method with an unspecified constant term, namely $\text{Time} = c_0 + a\,N_s^{\,b}$, is therefore preferable.

Figure 4.5 Times of PSOR, PPP and OLP and Times of Explicit [panels: "PSOR, PPP and OLP times (Nt=50)", times (secs) against space steps; "Selecting of c0", R-square value against c0; "Explicit times (Nt=1000)", times (secs) against space steps]

This constant coefficient is estimated by the maximum R² principle; the lower-left panel of Figure 4.5 shows R² for varying values of c0. A constant c0 = 0.023 gives the maximum R² of 0.9862, and the corresponding fitted timing curve is shown in the adjacent panel. All the empirical functions are listed below:

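$$
\begin{aligned}
\text{Time}_{\text{PSOR}} &= e^{-4.6089}\,N_s^{1.2955}, && N_t = 50,\\
\text{Time}_{\text{PPP}} &= e^{-6.4487}\,N_s^{2.0060}, && N_t = 50,\\
\text{Time}_{\text{OLP}} &= e^{-0.2439}\,N_s^{0.4361}, && N_t = 50,\\
\text{Time}_{\text{Exp}} &= 0.0230 + e^{-20.2646}\,N_s^{3.4272}, && N_t = 1000.
\end{aligned}
$$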
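The maximum-R² choice of the constant c0 can be sketched as a grid search. For each candidate c0, regress ln(Time − c0) on ln Ns and keep the c0 with the largest R². The Python sketch below assumes that R² is computed on this log-linearized regression; the text does not say so explicitly. The raw (Ns, time) samples behind Figure 4.5 are not tabulated, so the sketch uses synthetic times generated from the fitted Explicit model. It therefore only checks that the procedure recovers a known c0, and it does not reproduce the reported R² of 0.9862. Function names are illustrative.

```python
import math


def ols_with_r2(x, y):
    """Least-squares line y = a + b*x; returns (a, b, R^2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx
    ss_res = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return a, b, 1.0 - ss_res / ss_tot


def select_c0(ns, times, grid):
    """Pick c0 maximising R^2 of ln(time - c0) = ln_a + b ln(Ns); return (c0, ln_a, b, r2)."""
    log_ns = [math.log(v) for v in ns]
    best = None
    for c0 in grid:
        if c0 >= min(times):  # ln(time - c0) would be undefined
            continue
        ln_a, b, r2 = ols_with_r2(log_ns, [math.log(t - c0) for t in times])
        if best is None or r2 > best[3]:
            best = (c0, ln_a, b, r2)
    return best


# Synthetic check: times generated from 0.023 + exp(-20.2646) * Ns^3.4272.
ns = [50, 100, 150, 200, 250]
times = [0.023 + math.exp(-20.2646) * n ** 3.4272 for n in ns]
grid = [round(i * 0.0005, 4) for i in range(51)]  # 0.0000, 0.0005, ..., 0.0250
c0, ln_a, b, r2 = select_c0(ns, times, grid)
print(c0, round(b, 4), round(r2, 6))
```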

Now we are ready to characterize the full timing function of the two discretization variables, cputime = f(Nt, Ns). From the empirical functions obtained along the Nt-axis and the Ns-axis, we conjecture the following forms:

- $(\alpha + \beta N_t)\,N_s^{\gamma}$ for PSOR, PPP and OLP;
- the revised form $\alpha + \beta N_t N_s^{\gamma}$ for the explicit method;
- $(\alpha + \beta N_s)\,N_t^{\gamma}$ for the tree method, with the roles of Nt and Ns exchanged.

Except for the explicit method, specifying these three parameters requires solving an overdetermined system of 4 equations in only 3 unknowns for each algorithm. The explicit method gives 5 equations in 3 unknowns. I therefore approximate the parameters again by OLS. After some tedious calculations, we have

$$
\begin{aligned}
\text{PSOR:}\quad \text{cputime} &= (-0.00048891 + 0.00020903\,N_t)\,N_s^{1.2955},\\
\text{PPP:}\quad \text{cputime} &= (-0.00007102 + 0.00003307\,N_t)\,N_s^{2.0060},\\
\text{OLP:}\quad \text{cputime} &= (0.1046 + 0.0136\,N_t)\,N_s^{0.4361},\\
\text{Tree:}\quad \text{cputime} &= (0.000001233 + 0.000000463\,N_s)\,N_t^{2.0911}.
\end{aligned}
\tag{4.8}
$$

For the explicit method, the resulting system shows a considerable discrepancy in the intercept: the Nt data imply α = 0.006148, whereas the Ns data imply α = 0.023. A trade-off therefore has to be made between the intercepts determined by the Ns data and by the Nt data. Instead of simply averaging the two values, I also computed the R² values for different intercepts on the Nt data, just as I did for the Ns data. If the R² for the Nt data is less sensitive to the choice of the constant than the R² for the Ns data, the final estimate of α should be closer to 0.023 than to 0.006148. Formally, I maximize the sum of the two R² values, and the following plot displays the findings:

Figure 4.6 The determination of two estimated intercepts [plot: sum of R-square values against c0]

Clearly, the R² of the Ns data completely dominates that of the Nt data, since the curve of the summed R² has almost the same shape as the R² curve of the Ns data. Hence the final estimated intercept is 0.023, and we have

$$
\text{Explicit:}\quad \text{cputime} = 0.023 + 1.5011\times 10^{-12}\,N_t\,N_s^{3.4272}. \tag{4.9}
$$
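The fitted full timing models (4.8) and (4.9) can be evaluated directly. The Python sketch below does so at Nt = Ns = 50 and compares the result with the measured means in Tables 4.1 and 4.3. It takes the PSOR and PPP intercepts in (4.8) as negative, since only then does α·50^γ match the negative intercepts of the linear Nt-fits to Table 4.1 (about −0.078 and −0.18). Treat this sign choice as an assumption. The dictionary name `TIMING_MODELS` is illustrative.

```python
# Fitted full timing models (4.8) and (4.9); cputime in seconds.
TIMING_MODELS = {
    "PSOR": lambda nt, ns: (-0.00048891 + 0.00020903 * nt) * ns ** 1.2955,
    "PPP": lambda nt, ns: (-0.00007102 + 0.00003307 * nt) * ns ** 2.0060,
    "OLP": lambda nt, ns: (0.1046 + 0.0136 * nt) * ns ** 0.4361,
    "Tree": lambda nt, ns: (0.000001233 + 0.000000463 * ns) * nt ** 2.0911,
    "Explicit": lambda nt, ns: 0.023 + 1.5011e-12 * nt * ns ** 3.4272,
}

# Measured means at Nt = Ns = 50 (Table 4.1; Table 4.3 for the tree method).
MEASURED = {"PSOR": 1.52, "PPP": 3.91, "OLP": 4.14, "Tree": 0.08}
for name, measured in MEASURED.items():
    predicted = TIMING_MODELS[name](50, 50)
    print(f"{name:5s} predicted {predicted:.3f} s, measured {measured:.2f} s")
```

The three finite-difference models land within a few percent of the measured values at this point.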


4.2.2 Accuracy analysis of numerical algorithms

In this subsection, we build the connection between accuracy and the two discretization parameters Ns and Nt for each algorithm. Accuracy here is defined as the maximum absolute error compared with our most reliable solution. Since the maximum absolute error is a global measure over the whole grid, we may expect a clean convergence behavior for some algorithms. Before starting the standard estimation procedure, it is useful to get a first impression of the error behavior from the following comparison. The settings are Nt = 60, Ns = 60 for PSOR, PPP, OLP and the Tree method, and Nt = 1500, Ns = 200 for the Explicit method. The error is defined as exact solution − computed solution.

Figure 4.7 Error comparison of various algorithms [five panels of error against stock price: "Error of Explicit" (scale ×10^-3), "Error of Implicit-PSOR", "Error of Implicit-Pivoting", "Error of Implicit-LP", "Error of Tree-method"]

As the figure shows, the errors of PSOR, PPP and OLP are roughly similar. All have their maximum (positive) errors at or around the strike price K = 100, and much smaller but still observable (negative) errors somewhere in the middle of (100, 200). For PSOR and OLP in particular, the error profiles are identical at the plotted accuracy. By contrast, the Explicit and Tree methods seem to fall into the same category, except that the tree method behaves much more irregularly, fluctuating heavily around the strike price.

We may also examine the corresponding relative errors:

Figure 4.8 Relative error comparison of various algorithms [five panels of relative error against stock price: "Relative Error of Explicit", "Relative Error of Implicit-PSOR", "Relative Error of Implicit-Pivoting", "Relative Error of Implicit-LP", "Relative Error of Tree-method"]

For all algorithms, the maximum errors occur in the neighborhood of the strike price. They are small there relative to the option value, so none of them has a significant influence on the final solutions. On the other hand, the relative errors at larger stock prices are magnified, since the exact solution is very close to zero at some points in that region. We can also see clearly that for PSOR, PPP and OLP the errors at larger stock prices always remain negative, whereas for the latter two methods (Explicit and Tree) they are positive.
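The magnification of relative errors where the exact value is near zero can be seen in a toy computation. The Python sketch below uses made-up numbers, not data from the paper. It computes the maximum absolute error with error = exact − computed, and leaves the relative error undefined (NaN) where the reference value is essentially zero. The cut-off `zero_tol` and the function name are assumptions for illustration.

```python
def error_profiles(exact, computed, zero_tol=1e-12):
    """Return (max |error|, relative errors) with error = exact - computed.

    Relative errors are NaN where |exact| <= zero_tol, since dividing by an
    (almost) zero reference value only magnifies noise.
    """
    errors = [e - c for e, c in zip(exact, computed)]
    max_abs = max(abs(err) for err in errors)
    rel = [err / e if abs(e) > zero_tol else float("nan") for err, e in zip(errors, exact)]
    return max_abs, rel


# Toy values (not from the paper): similar absolute errors are tiny in relative
# terms near the money but large where the put is almost worthless.
exact = [10.0, 1.0, 0.01]
computed = [9.99, 0.99, 0.005]
max_abs, rel = error_profiles(exact, computed)
print(max_abs, rel)
```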

Let us first try to express max |error| as a function of Nt for PSOR, PPP and OLP. Figure 4.9 and Table 4.5 give some idea:

Figure 4.9 Errors of PSOR, PPP and OLP [plot "PSOR, PPP and OLP Errors": maximum absolute error against time steps]

Table 4.5 Errors for PSOR, PPP, and OLP (PSOR & OLP: Ns = 80; PPP: Ns = 100)

Time steps Nt   30      40      50      60      70      80      90      100     120     150
PSOR            0.040   0.030   0.024   0.020   0.017   0.014   0.013   0.012   0.012   0.012
PPP             0.033   0.023   0.017   0.014   0.011   0.011   0.009   0.010   0.009   0.008
OLP             0.040   0.030   0.024   0.020   0.017   0.014   0.013   0.012   0.012   0.012


The maximum absolute errors of the first three algorithms also decrease like a power function. We therefore model the error behavior as $\max|\text{error}| = c + a\,N_t^{-b}$, $b > 0$. To estimate the three parameters, we again use OLS and choose the constant c that maximizes the R² value. Note also that the maximum absolute errors of PSOR and OLP are identical to 3 decimal places. This leads to very close empirical functions for the two cases, which is why only two distinct curves are visible in Figure 4.9. The empirical functions are:

$$
\begin{aligned}
\text{PSOR:}\quad \max|\text{error}| &= 0.0102 + e^{3.5195}\,N_t^{-2.0395}, && N_s = 80,\\
\text{PPP:}\quad \max|\text{error}| &= 0.0064 + e^{2.4381}\,N_t^{-1.7883}, && N_s = 100,\\
\text{OLP:}\quad \max|\text{error}| &= 0.0102 + e^{3.5212}\,N_t^{-2.0400}, && N_s = 80.
\end{aligned}
\tag{4.10}
$$

Next, we capture the error dependence for the explicit method. The experiment is set up along the time-step axis at 20 equally spaced values in the range [600, 2500], with Ns kept at 200. Table 4.6 and Figure 4.10 summarize the results:

Table 4.6 Errors for Explicit (space steps Ns = 200)

Time steps Nt   Error      Time steps Nt   Error      Time steps Nt   Error
600             0.015232   1300            0.01397    2000            0.013602
700             0.014891   1400            0.013895   2100            0.01357
800             0.01464    1500            0.01383    2200            0.01354
900             0.014444   1600            0.013773   2300            0.013513
1000            0.01429    1700            0.013723   2400            0.013489
1100            0.014161   1800            0.013677   2500            0.013466
1200            0.014057   1900            0.013637   2600

Assuming again that the error function for the explicit method follows a power form, the parameter estimation simply repeats the previous procedure. The resulting R² is 0.9999, implying a nearly perfect agreement between the fitted curve and the samples. The empirical error function for the explicit method is:

$$
\text{Explicit method:}\quad \max|\text{error}| = 0.0129 + e^{0.2584}\,N_t^{-0.9894}, \qquad N_s = 200. \tag{4.11}
$$

Figure 4.10 Errors of Explicit (Ns=200) [plot of the errors in Table 4.6 against time steps]

For the tree method, however, the maximum absolute errors obtained for various numbers of time steps clearly oscillate depending on whether the number of time steps is odd or even. This is a well-known phenomenon, also seen in the pricing of European options. The following graph shows it:

Figure 4.11 Oscillatory behavior of errors for Tree method [left: "Error of Tree (Ns=50)", maximum absolute error against time steps from 0 to 100; right: "Error of Tree", the same errors for time steps 50 to 100]

The left-hand plot shows the errors at 20 equally spaced numbers of time steps over the whole range [5, 100]. The right-hand plot magnifies only the errors in the flatter region from 50 to 100. There are always some slight oscillations depending on whether the number of time steps is odd or even: in the right-hand plot, errors at odd numbers of time steps lie slightly below those at even numbers. The overall picture still suggests that the error function roughly follows a power form. Based on this observation, we model the error function for the tree method as follows. No intercept c is included for the tree method; the reason should become clear later.

$$
\text{Tree method:}\quad \max|\text{error}| = e^{-0.3117}\,N_t^{-0.7472}, \qquad N_s = 50. \tag{4.12}
$$


To approximate the full error function in the two variables Ns and Nt, we still need to model the dependence along the space direction. The modeling procedure remains the same. As before, let us first consider PSOR, PPP and OLP.

Table 4.7 Errors for PSOR, PPP, and OLP (time steps Nt = 50)

Space steps Ns   30      40      50      60      70      80      90      100     120     150
PSOR             0.054   0.031   0.029   0.026   0.027   0.024   0.019   0.016   0.015   0.016
PPP              0.061   0.034   0.052   0.036   0.029   0.025   0.034   0.017   0.016   0.017
OLP              0.054   0.031   0.029   0.026   0.027   0.024   0.019   0.016   0.015   0.016

Figure 4.12 Errors of PSOR, PPP, and OLP (Nt=50) [plot: maximum absolute error against space steps]

As with the dependence on the number of time steps, the maximum absolute errors of PSOR, PPP and OLP appear to decrease roughly like a negative power of the number of space steps. This again suggests power-form error functions for these three cases. In particular, the errors of PSOR and OLP are identical to 3 decimal places, which predicts closely linked empirical functions for the two. Assuming that they all take the form $\max|\text{error}| = c + a\,N_s^{-b}$, $b > 0$, we obtain empirically:

$$
\begin{aligned}
\text{PSOR:}\quad \max|\text{error}| &= 0.0069 + e^{0.2437}\,N_s^{-1.0198}, && N_t = 50,\\
\text{PPP:}\quad \max|\text{error}| &= 0.0004 + e^{0.0091}\,N_s^{-0.8347}, && N_t = 50,\\
\text{OLP:}\quad \max|\text{error}| &= 0.0069 + e^{0.2447}\,N_s^{-1.0201}, && N_t = 50.
\end{aligned}
\tag{4.13}
$$


For the explicit method, the experiment is designed along the space-step axis at 50 equally spaced values in the range [5, 250], with Nt kept at 2000. Figure 4.13 plots the results:

Figure 4.13 Errors of Explicit (Nt=2000) [left: "Explicit error (Nt=2000)", maximum absolute error against space steps from 0 to 250; right: "Error of Explicit (Nt=2000)", magnified view for space steps 50 to 250]

As the figure shows, almost all the errors at larger numbers of space steps are quite close to zero. In fact, if we magnify these approximately constant errors as we did in the tree case, we observe a slight upward trend, as shown in the right-hand plot. However, from a wider view this small trend can be regarded as negligible, so we can still approximate the error behavior of the explicit method with the standard power form. The maximum R² principle gives the intercept c = 0, and the empirical function for the explicit method is:

$$
\text{Explicit method:}\quad \max|\text{error}| = e^{0.3159}\,N_s^{-0.9639}, \qquad N_t = 2000. \tag{4.14}
$$

The experiments on the tree method show no systematic relationship between the maximum absolute error and the number of space steps, as the following plots show:

Figure 4.14 Error for Tree: Indifference of space steps [four panels "Error of Tree": maximum absolute error against space steps; from left to right Nt = 30, 50, 80, 85]

However, this result is not as surprising as it may first seem. Recall the pricing mechanism of the tree method. As mentioned before, it prices every single space point (that is, every initial stock price) by discounting backwards through the stock tree generated in the earlier phase. Different numbers of space steps therefore only produce different sets of initial grid points to be priced. What we finally obtain is still the same solution, merely appearing as a new vector whose elements correspond to the new set of initial grid points. The four plots above, with Nt = 30, 50, 80, 85, show quite distinct error patterns, but the variations are visible only on a microscopic scale. Noise at that accuracy level can arise from many sources, such as interpolation and rounding (half-adjusting) procedures. For simplicity, we therefore assume that all results are approximately constant, i.e. independent of the number of space steps.
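To illustrate why the tree error does not depend on Ns, the Python sketch below prices each initial stock price with its own backward induction. It uses a standard Cox-Ross-Rubinstein (CRR) binomial tree with early exercise. This is an assumption: the paper's tree implementation may differ in its parametrization and in the interpolation step. The defaults K = 100, r = 0.05, σ = 0.2 and T = 1 are the experiment settings of Section 4.2. `crr_american_put` is a hypothetical name.

```python
import math


def crr_american_put(s0, k=100.0, r=0.05, sigma=0.2, t=1.0, n=300):
    """American put value at spot s0 from an n-step CRR binomial tree."""
    dt = t / n
    up = math.exp(sigma * math.sqrt(dt))
    down = 1.0 / up
    disc = math.exp(-r * dt)
    p = (math.exp(r * dt) - down) / (up - down)  # risk-neutral up probability
    # Payoffs at maturity; node j has j up-moves and n - j down-moves.
    values = [max(k - s0 * up ** j * down ** (n - j), 0.0) for j in range(n + 1)]
    # Backward induction: continuation value vs. immediate exercise at each node.
    for step in range(n - 1, -1, -1):
        for j in range(step + 1):
            cont = disc * (p * values[j + 1] + (1.0 - p) * values[j])
            exercise = k - s0 * up ** j * down ** (step - j)
            values[j] = max(cont, exercise)
    return values[0]


# Each "space point" is priced independently; a different set of spots (a
# different Ns) changes which points are sampled, not the values themselves.
spots = [50.0, 100.0, 300.0]
prices = [crr_american_put(s) for s in spots]
print([round(v, 4) for v in prices])
```

At S = 50 the put lies deep in the exercise region and is worth K − S, while at S = 300 it is practically worthless.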

Now we are ready to merge the two error functions of each algorithm (except the tree method) into a single function of both variables. One function runs along the time-step direction and the other along the space-step direction. For this purpose, we conjecture that all the error functions take the form $\max|\text{error}| = c_1 N_t^{-\alpha_1} + c_2 N_s^{-\alpha_2}$. At a fixed Ns, the second term is a constant and plays the role of the intercept c in (4.10). Likewise, at a fixed Nt the first term is the intercept in (4.13). Specifying all 4 parameters therefore requires solving 2 overdetermined systems, which become linear in (ln c_i, α_i) after taking logarithms. For instance, for PSOR we are confronted with

$$
\begin{cases}
c_1 = e^{3.5195},\\
\alpha_1 = 2.0395,\\
c_1\,(50)^{-\alpha_1} = 0.0069,
\end{cases}
\qquad
\begin{cases}
c_2 = e^{0.2437},\\
\alpha_2 = 1.0198,\\
c_2\,(80)^{-\alpha_2} = 0.0102.
\end{cases}
\tag{4.15}
$$

Hence the final estimates of all 4 parameters are obtained by the OLS (projection) technique. All the empirical error functions for the various algorithms are summarized as follows:

$$
\begin{aligned}
\text{PSOR:}\quad \max|\text{error}| &= e^{3.4896}\,N_t^{-2.1564} + e^{0.2267}\,N_s^{-1.0943},\\
\text{PPP:}\quad \max|\text{error}| &= e^{2.2493}\,N_t^{-2.5267} + e^{-0.0433}\,N_s^{-1.0761},\\
\text{OLP:}\quad \max|\text{error}| &= e^{3.4913}\,N_t^{-2.1569} + e^{0.2277}\,N_s^{-1.0945},\\
\text{Explicit:}\quad \max|\text{error}| &= e^{0.2458}\,N_t^{-0.9894} + e^{0.3306}\,N_s^{-0.8863},\\
\text{Tree:}\quad \max|\text{error}| &= e^{-0.3117}\,N_t^{-0.7472}.
\end{aligned}
\tag{4.16}
$$
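Taking logarithms makes each system in (4.15) linear in (ln c_i, α_i), with three equations and two unknowns. The minimal Python sketch below computes the least-squares (projection) solution via the normal equations. It assumes unit weights on all three equations, since the text does not state a weighting. For PSOR it gives ln c1 ≈ 3.4896, α1 ≈ 2.1564, ln c2 ≈ 0.2267 and α2 ≈ 1.0942. These agree with the PSOR line of (4.16) to about three decimals. `project_error_term` is a hypothetical name.

```python
import math


def project_error_term(ln_c, alpha, n_fixed, value_at_fixed):
    """Least-squares (ln c_i, alpha_i) for one 3x2 system of the form (4.15).

    The three equations, linear in (ln c_i, alpha_i), are:
        ln c_i                          = ln_c
                   alpha_i              = alpha
        ln c_i - alpha_i * ln(n_fixed)  = ln(value_at_fixed)
    """
    L = math.log(n_fixed)
    rows = [(1.0, 0.0, ln_c), (0.0, 1.0, alpha), (1.0, -L, math.log(value_at_fixed))]
    # Normal equations (A^T A) x = A^T b for the two unknowns.
    s11 = sum(r[0] * r[0] for r in rows)
    s12 = sum(r[0] * r[1] for r in rows)
    s22 = sum(r[1] * r[1] for r in rows)
    t1 = sum(r[0] * r[2] for r in rows)
    t2 = sum(r[1] * r[2] for r in rows)
    det = s11 * s22 - s12 * s12
    return (s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det


# PSOR: the Nt-term uses c1 (50)^(-alpha1) = 0.0069; the Ns-term uses c2 (80)^(-alpha2) = 0.0102.
print(project_error_term(3.5195, 2.0395, 50, 0.0069))  # (ln c1, alpha1)
print(project_error_term(0.2437, 1.0198, 80, 0.0102))  # (ln c2, alpha2)
```

Feeding in the PPP Ns-direction fit of (4.13) (ln c = 0.0091, α = 0.8347, intercept 0.0064 at Ns = 100) gives ln c2 ≈ −0.0433 and α2 ≈ 1.0761.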

4.2.3 Optimizations and Comparisons

Having estimated both the timing function and the error function for all algorithms, our next step is to determine the minimum time needed to reach a given error level u. This expresses the minimum cputime as a function of u. The models constructed for PSOR, PPP and OLP are of the same type, so their optimization problems share this similarity as well. More specifically, we are confronted with

$$
\min_{N_t,\,N_s}\ (a + bN_t)\,N_s^{\gamma}
\quad\text{s.t.}\quad c_1 N_t^{-\alpha_1} + c_2 N_s^{-\alpha_2} = u,\qquad \alpha_i > 0,\ i = 1, 2. \tag{4.17}
$$

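Such an equality-constrained optimization problem can easily be reduced to an unconstrained one by introducing the Lagrangian

$$
\mathcal{L}(N_t, N_s, \lambda) = (a + bN_t)\,N_s^{\gamma} - \lambda\left(c_1 N_t^{-\alpha_1} + c_2 N_s^{-\alpha_2} - u\right).
$$

Eliminating λ from the first-order conditions (FOC) gives $c_2 N_s^{-\alpha_2} = \gamma\alpha_1 c_1 (a + bN_t)\,N_t^{-\alpha_1-1}/(b\alpha_2)$. Substituting the constraint shows that the optimal $N_t^*$ and $N_s^*$ satisfy the system

$$
\begin{cases}
(\alpha_2 c_1 + \gamma\alpha_1 c_1)\,(N_t^*)^{-\alpha_1} + \dfrac{a\gamma\alpha_1 c_1}{b}\,(N_t^*)^{-\alpha_1-1} - \alpha_2 u = 0,\\[2mm]
N_s^* = \left(\dfrac{u - c_1 (N_t^*)^{-\alpha_1}}{c_2}\right)^{-1/\alpha_2}.
\end{cases}
\tag{4.18}
$$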

The left-hand side of the first equation resembles a polynomial but has non-integer powers, so its root is hard to derive analytically. Instead, I approximate it numerically with Newton's method. Let f denote that left-hand side. The iteration is $N_t^{(k+1)} = N_t^{(k)} - f(N_t^{(k)})/f'(N_t^{(k)})$, repeated until $|f(N_t^{(k)})|$ falls below the zero tolerance, which is set to $10^{-8}$ in practice. By substituting both $N_t^*$ and $N_s^*$ (as functions of u) back into the criterion, we arrive at the optimal curves of PSOR, PPP and OLP (the y-axis shows the log-cputime rather than the cputime itself).

Figure 4.15 Optimal Curves for PSOR, PPP and OLP [three panels "Optimal Curve and Log-Cputime/Error Combinations" for PSOR, PPP and OLP: log-cputime against maximum absolute error]


All three curves run from northwest to southeast. This is consistent with the straightforward experience that the rougher the required precision, the less computational time is needed. Note also that for all three algorithms, some randomly sampled combinations of error level and log-cputime lie just above the optimal curves. For the PPP algorithm the samples deviate a little further from the curve, which probably indicates that we may be too optimistic about the minimum cputime over a certain range of error levels. This evidence in turn gives some confidence in the validity of our models.
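The Newton iteration for (4.18) can be sketched in Python as follows. The sketch assumes the PSOR coefficients of (4.8) and (4.16), with the intercept a of (4.8) taken as negative. It starts from Nt = 5, which for these coefficients lies to the left of the root in a region where f is positive, decreasing and convex, so the iterates increase monotonically towards the root. Other coefficient sets or starting points may need a safeguard such as bisection. `optimal_pair` is a hypothetical name.

```python
import math


def optimal_pair(u, a, b, gamma, c1, alpha1, c2, alpha2, nt0=5.0, tol=1e-8, max_iter=100):
    """Solve the first equation of (4.18) for Nt* by Newton's method, then get Ns*."""
    big_a = c1 * (alpha2 + gamma * alpha1)  # coefficient of Nt^(-alpha1)
    big_b = a * gamma * alpha1 * c1 / b  # coefficient of Nt^(-alpha1 - 1)

    def f(n):
        return big_a * n ** (-alpha1) + big_b * n ** (-alpha1 - 1) - alpha2 * u

    def f_prime(n):
        return -alpha1 * big_a * n ** (-alpha1 - 1) - (alpha1 + 1) * big_b * n ** (-alpha1 - 2)

    nt = nt0
    for _ in range(max_iter):
        value = f(nt)
        if abs(value) < tol:
            break
        nt -= value / f_prime(nt)
        if nt <= 0:
            raise ValueError("Newton iterate left Nt > 0; try another nt0")
    else:
        raise RuntimeError("Newton's method did not converge")
    # Second equation of (4.18): Ns* from the error constraint.
    ns = ((u - c1 * nt ** (-alpha1)) / c2) ** (-1.0 / alpha2)
    return nt, ns


# PSOR coefficients from (4.8) and (4.16); target error level u = 0.02.
PSOR = dict(a=-0.00048891, b=0.00020903, gamma=1.2955,
            c1=math.exp(3.4896), alpha1=2.1564, c2=math.exp(0.2267), alpha2=1.0943)
nt_star, ns_star = optimal_pair(0.02, **PSOR)
print(round(nt_star, 2), round(ns_star, 2))
```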

Another result we can derive from the optimization problem is the optimal pairs of time steps and space steps, defined in the sense of minimum cputime. The following table maps each number of time steps to its corresponding number of space steps:

Table 4.8 Optimal Pairs of Time steps and Space steps

Time steps Nt          30    50    60    70    80    90     100    120    150
Space steps Ns  PSOR   19    50    71    96    124   156    192    274    424
                PPP    89    286   436   622   849   1116   1426   2180   3670
                OLP    39    114   167   229   301   384    475    688    1080
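Eliminating the Lagrange multiplier from the two first-order conditions gives c2 (Ns*)^(−α2) = γα1c1(a + bNt*)(Nt*)^(−α1−1)/(bα2). Each Nt* therefore determines its Ns* without reference to u. The Python sketch below evaluates this relation. It assumes negative PSOR and PPP intercepts in (4.8) and the coefficient e^(−0.0433) in the PPP error term of (4.16). Under these signs it reproduces the entries of Table 4.8 to within rounding, for example 19, 50, 71 and 96 for PSOR at Nt = 30, 50, 60 and 70. The names are illustrative.

```python
import math

# (a, b, gamma) from (4.8) and (c1, alpha1, c2, alpha2) from (4.16).
PARAMS = {
    "PSOR": (-0.00048891, 0.00020903, 1.2955, math.exp(3.4896), 2.1564, math.exp(0.2267), 1.0943),
    "PPP": (-0.00007102, 0.00003307, 2.0060, math.exp(2.2493), 2.5267, math.exp(-0.0433), 1.0761),
    "OLP": (0.1046, 0.0136, 0.4361, math.exp(3.4913), 2.1569, math.exp(0.2277), 1.0945),
}


def optimal_ns(nt, a, b, gamma, c1, alpha1, c2, alpha2):
    """Ns* paired with a given Nt* through the FOC (multiplier eliminated)."""
    share = gamma * alpha1 * c1 * (a + b * nt) * nt ** (-alpha1 - 1) / (b * alpha2)
    return (share / c2) ** (-1.0 / alpha2)


for name, p in PARAMS.items():
    print(name, [round(optimal_ns(nt, *p)) for nt in (30, 50, 60, 70, 80, 90, 100, 120, 150)])
```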

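For the Explicit method, a similar optimization procedure can be adopted, except that an analytical solution is now derivable. The constant term of the Explicit timing model does not affect the minimizer. With a = 0 in (4.18), the first equation can be solved directly. The optimal $N_t^*$ and $N_s^*$ for a given error tolerance u are

$$
\begin{cases}
N_t^* = \left(\dfrac{\alpha_2 u}{\gamma\alpha_1 c_1 + \alpha_2 c_1}\right)^{-1/\alpha_1},\\[2mm]
N_s^* = \left(\dfrac{u - c_1 (N_t^*)^{-\alpha_1}}{c_2}\right)^{-1/\alpha_2}.
\end{cases}
\tag{4.19}
$$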

Again, by substituting both $N_t^*$ and $N_s^*$ (as functions of u) back into the criterion, we arrive at the optimal curve of the Explicit method (the y-axis shows the log-cputime rather than the cputime itself).

The most apparent feature of the optimal curve for the Explicit method is that the log-cputime levels off as the error level becomes large. This coincides with the intercept term in the timing model for the Explicit method, which we previously interpreted as a non-negligible constant operating time in MATLAB. To get some idea of the model's validity, I also sampled some error/log-cputime combinations in the plot. Six of the 9 sample points lie above the curve and the remaining 3 below it. This indicates that we might be too conservative about the minimum cputime for a certain range of error levels.

Figure 4.16 Optimal Curve for Explicit method [plot "Optimal Curve and Log-Cputime/Error Combinations (Explicit)": log-cputime against maximum absolute error]

The following table gives the optimal pairs for the Explicit method:

Table 4.9 Optimal Pairs for Explicit method

Time steps Nt    1000   1200   1400   1500   1800   2000   2200   2500
Space steps Ns   541    663    787    850    1042   1172   1304   1504
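For the Explicit model, the first-order conditions reduce to c2 (Ns*)^(−α2) = γα1c1(Nt*)^(−α1)/α2. Together with the error constraint, this yields the closed form (4.19). The Python sketch below evaluates both, assuming the Explicit coefficients of (4.9) and (4.16). The FOC relation gives Ns* of about 541 at Nt* = 1000 and about 1172 at Nt* = 2000, in line with Table 4.9. The function names are illustrative.

```python
import math

# Explicit-method error coefficients from (4.16) and timing exponent from (4.9).
C1, ALPHA1 = math.exp(0.2458), 0.9894
C2, ALPHA2 = math.exp(0.3306), 0.8863
GAMMA = 3.4272


def explicit_optimal(u):
    """Closed-form (Nt*, Ns*) of (4.19) for error tolerance u."""
    nt = (ALPHA2 * u / (GAMMA * ALPHA1 * C1 + ALPHA2 * C1)) ** (-1.0 / ALPHA1)
    ns = ((u - C1 * nt ** (-ALPHA1)) / C2) ** (-1.0 / ALPHA2)
    return nt, ns


def explicit_ns_for_nt(nt):
    """Ns* paired with a given Nt* via the FOC c2 Ns^-a2 = gamma a1 c1 Nt^-a1 / a2."""
    return (GAMMA * ALPHA1 * C1 * nt ** (-ALPHA1) / (ALPHA2 * C2)) ** (-1.0 / ALPHA2)


print([round(explicit_ns_for_nt(nt)) for nt in (1000, 1200, 1400, 1500, 1800, 2000, 2200, 2500)])
nt_u, ns_u = explicit_optimal(0.01)
print(round(nt_u, 1), round(ns_u, 1))
```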

To compare the algorithms, we can also gather all the optimal curves in one plot:

Figure 4.17 Optimal Curves for Various Algorithms [plot "Optimal Curves of Various Algorithms": minimum cputime against maximum absolute error for PSOR, PPP, OLP and Explicit]

Assuming that all the estimated curves are approximately valid, the Explicit method is our best choice over a long range of accuracy (error) levels, from approximately 0.005 to 0.1, since its optimal curve lies lowest among the 4 algorithms there. However, the time required by the Explicit method increases very fast once the error level goes below 0.01. It is therefore no longer optimal when, for instance, we require the maximum absolute error of the solution to be no larger than 0.003. Note also that the optimal curves for PSOR, PPP and OLP almost intersect at the same point, around (0.01, 10), and their ranking is completely reversed on either side of this point. PSOR always shows a mediocre behavior. For larger error levels the PPP algorithm is superior, whereas for smaller errors OLP is the fastest.

The Tree method, however, needs special (simpler) treatment, since its error function does not depend on the number of space steps. One can therefore always minimize the criterion (timing function) by reducing the number of space steps to 1, that is, by pricing the option only at one specific stock price. The so-called optimal curve we finally arrive at is simply the time needed to do that. Equivalently, we can plot the dependence of computational time on the accuracy (error) level for a fixed number of space steps, say 50. The following graph gives some idea:

Figure 4.18 Connection between log-cputime and error for Tree method [plot: log-cputime against maximum absolute error]
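Since the tree error depends only on Nt, the curve for a fixed Ns follows by inverting the error model (4.12) and substituting into the tree timing model of (4.8). The log-cputime is then linear in log u with slope −γ/α ≈ −2.80. The Python sketch below assumes the tree error constant is e^(−0.3117) (a negative exponent) and uses Ns = 50 as in the text. The names are illustrative.

```python
import math

# Tree-method models: error (4.12)/(4.16) and timing (4.8).
C_ERR, ALPHA = math.exp(-0.3117), 0.7472
A_T, B_T, GAMMA_T = 0.000001233, 0.000000463, 2.0911


def tree_nt_for_error(u):
    """Number of time steps at which the fitted tree error equals u."""
    return (u / C_ERR) ** (-1.0 / ALPHA)


def tree_cputime(u, ns=50):
    """Fitted cputime for error level u with a fixed number of space steps."""
    return (A_T + B_T * ns) * tree_nt_for_error(u) ** GAMMA_T


for u in (0.01, 0.02, 0.05, 0.1):
    print(u, round(tree_nt_for_error(u), 1), round(math.log(tree_cputime(u)), 3))
```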

4.2.4 The American option solution surface

Finally, I plot the solution surface of the American vanilla put option solved in this section. Figure 4.19 shows the American vanilla put value as a function of the true stock price and the time to maturity (Ns = 50, Nt = 50), and we can recognize in it all the theoretical features of Figure 2.1.

Figure 4.19 Solution surface with true stock price axis [plot "American vanilla put": option value against stock price and time to maturity]

5 Conclusion

In this paper, we discussed and tested five computational methods for the American vanilla put option: the Projected Successive OverRelaxation algorithm, the Parametric Principal Pivoting algorithm, Linear Programming, the Explicit method and the Tree method. The Tree method is the most straightforward and simplest method. It is developed mainly for illustration, pricing the option at one single stock grid point (the optimization problem for the Tree method also reflects this). Hence the Tree method proposed here is not efficient for pricing a whole solution vector. The remaining 4 algorithms are all built on the finite difference discretization framework and share the same problem format (or an equivalent one), namely the LOCP (or the equivalent OLP). They can further be categorized into the implicit scheme (θ = 1) and the explicit scheme (θ = 0). A comparison of the optimal curves drawn from the empirical functions implies that for rougher precision requirements, the Explicit method is our best choice because it needs the least time. However, when the maximum absolute error in a solution vector must not exceed 0.005, we should instead consider the PPP algorithm our optimal choice.

So far, all the conclusions rest on the assumption that the timing and error functions estimated for each case are approximately valid. In fact, we used very few sample points to model the timing behavior or the error behavior of each algorithm, so estimation error is inevitable. Even if all the empirical functions were perfectly correct, they would be reliable only within a certain range of cputime and accuracy (error) levels. In other words, their explanatory power is limited to our region of interest.

Also note that the PSOR algorithm used here is specifically designed for this problem, whereas the large-scale and PPP solvers used are general-purpose algorithms. We may therefore expect more recent (or specially developed) large-scale and PPP codes to perform even better.

Appendix

function [z,nu]=Projected_SOR(q0,M,omega,eps,z0)
%LCP solver applying the PSOR algorithm; q0 and M are the characteristic
%parameters describing the LCP, omega is the relaxation parameter, and eps
%denotes the tolerance level.
z(:,1)=10*ones(length(M),1);
z(:,2)=z0; %so that the first pass of the loop is executed,
%i.e. norm(z(:,2)-z(:,1))>=eps for sure
y(:,1)=zeros(length(M),1); %initializations
nu=2; %step-counter
while norm(z(:,nu)-z(:,nu-1))>=eps
%first component: Gauss-Seidel value y, then relaxed step projected onto z>=0
y(1,nu+1)=1/M(1,1)*(-q0(1,:)-M(1,[2:1:length(M)])*z([2:1:length(M)],nu));
z(1,nu+1)=max(0,z(1,nu)+omega*(y(1,nu+1)-z(1,nu)));
%interior components use the already updated entries 1..i-1 of this sweep
for i=2:length(M)-1
y(i,nu+1)=1/M(i,i)*(-q0(i,:)-M(i,[1:1:i-1])*z([1:1:i-1],nu+1) ...
-M(i,[i+1:1:length(M)])*z([i+1:1:length(M)],nu));
z(i,nu+1)=max(0,z(i,nu)+omega*(y(i,nu+1)-z(i,nu)));
end

y(length(M),nu+1)=1/M(length(M),length(M))*(-q0(length(M),:) ...