
CFA Level 1 Review - Quantitative Methods

Uploaded by: Aamirx64


CFA Level One Quantitative Methods:

Session 2 Basic Statistics and Probability

Frequency Distributions:

Appropriate number of classes: the smallest k such that $2^k \ge N$ (N = total number of observations)
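The 2^k rule can be sketched as a loop that stops at the first power of two covering the data. The function name `num_classes` is purely illustrative.

```python
def num_classes(n_obs: int) -> int:
    """Smallest k such that 2**k >= n_obs (the 2^k rule for frequency tables)."""
    if n_obs < 1:
        raise ValueError("need at least one observation")
    k = 0
    while 2 ** k < n_obs:
        k += 1
    return k


# 50 observations: 2**5 = 32 is too few, 2**6 = 64 is enough
print(num_classes(50))  # 6
```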

Sample Mean: $\bar{x} = \frac{\sum x}{N}$

Weighted Mean: $\bar{x}_w = \frac{\sum (wx)}{\sum w}$

Median: the midpoint of the data (half of the data points lie above it and half below); important when the mean is distorted by outliers

Mode: most frequently occurring value

Positive Skewness: Mode < Median < Mean

Negative Skewness: Mean < Median < Mode
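A minimal sketch of the mean, weighted mean, median and mode using Python's standard `statistics` module. The data and weights are made up, and `weighted_mean` is a hypothetical helper. The single outlier (20) produces the positive-skew ordering Mode < Median < Mean.

```python
from statistics import mean, median, mode


def weighted_mean(values, weights):
    """Weighted mean: sum(w * x) / sum(w)."""
    return sum(w * x for w, x in zip(weights, values)) / sum(weights)


# Illustrative, right-skewed data: one large outlier pulls the mean up
data = [2, 3, 3, 4, 5, 6, 20]
print(mode(data), median(data), round(mean(data), 2))  # 3 4 6.14
print(weighted_mean([10, 20, 30], [1, 1, 2]))  # 22.5
```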

Arithmetic Mean (using classes): $\bar{x} = \frac{\sum fx}{N}$

(where f = frequency of observations in a class and x = class midpoint)

Median (for grouped data, locate the class in which the median lies and interpolate with):

$$\text{Median} = L + \frac{\frac{n}{2} - CF}{f} \times i$$

(where L = lower limit of the class containing the median; CF = cumulative frequency preceding the median class; f = frequency of observations in the median class; i = class interval of the median class)
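The interpolation formula can be sketched as follows. It assumes classes are given as (lower limit, upper limit, frequency) tuples in ascending order, so the class interval i is upper minus lower. `grouped_median` and the sample table are hypothetical.

```python
def grouped_median(classes):
    """classes: list of (lower_limit, upper_limit, frequency), ascending."""
    n = sum(f for _, _, f in classes)
    if n == 0:
        raise ValueError("empty distribution")
    cum = 0  # CF: cumulative frequency preceding the current class
    for lower, upper, f in classes:
        if cum + f >= n / 2:
            # The median lies in this class: L + ((n/2 - CF) / f) * i
            return lower + (n / 2 - cum) / f * (upper - lower)
        cum += f
    raise ValueError("median class not found")


print(grouped_median([(0, 10, 5), (10, 20, 8), (20, 30, 7)]))  # 16.25
```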

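Variance and Standard Deviation:

Sample Standard Deviation

$$s = \sqrt{\frac{\sum (x - \bar{x})^2}{n - 1}} = \sqrt{\frac{\sum x^2 - \frac{(\sum x)^2}{n}}{n - 1}}$$

For the population standard deviation, use $\mu$ instead of $\bar{x}$ and use N instead of n - 1

(Variance is the square of the standard deviation)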

For a frequency distribution:

$$s = \sqrt{\frac{\sum fx^2 - \frac{(\sum fx)^2}{n}}{n - 1}}$$

(f is the frequency of each class; x is the class midpoint)
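A sketch comparing the definitional and computational forms of the sample standard deviation, plus the frequency-distribution form. The function names and data are illustrative. If each distinct value is fed to the grouped version as its own "midpoint" with its count, it should reproduce the ungrouped answer.

```python
import math


def sample_std(xs):
    """Sample standard deviation with n - 1 in the denominator."""
    n = len(xs)
    x_bar = sum(xs) / n
    return math.sqrt(sum((x - x_bar) ** 2 for x in xs) / (n - 1))


def sample_std_shortcut(xs):
    """Computational form: sqrt((sum x^2 - (sum x)^2 / n) / (n - 1))."""
    n = len(xs)
    return math.sqrt((sum(x * x for x in xs) - sum(xs) ** 2 / n) / (n - 1))


def grouped_std(midpoints, freqs):
    """Frequency-distribution form: f = class frequency, x = class midpoint."""
    n = sum(freqs)
    sum_fx = sum(f * x for f, x in zip(freqs, midpoints))
    sum_fx2 = sum(f * x * x for f, x in zip(freqs, midpoints))
    return math.sqrt((sum_fx2 - sum_fx ** 2 / n) / (n - 1))


data = [2, 4, 4, 4, 5, 5, 7, 9]
print(round(sample_std(data), 4), round(sample_std_shortcut(data), 4))  # 2.1381 2.1381
```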

For a normal (bell-shaped) distribution, approximately:

68% of observations are within 1 S.D. of the mean

95% of observations are within 2 S.D. of the mean

99.7% of observations are within 3 S.D. of the mean

Coefficient of Variation enables comparison of two or more S.D.s where the units differ:

$$CV = \frac{\text{StdDev}}{\bar{x}} \times 100$$

Skewness can be quantified using:

$$S_k = \frac{3(\text{Mean} - \text{Median})}{\text{StdDev}}$$
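Both the coefficient of variation and the skewness measure reduce to one-liners. This sketch uses hypothetical inputs.

```python
def coefficient_of_variation(std_dev, mean):
    """CV in percent: (std dev / mean) * 100."""
    return std_dev / mean * 100


def pearson_skewness(mean, median, std_dev):
    """S_k = 3 * (mean - median) / std dev; positive means right (positive) skew."""
    return 3 * (mean - median) / std_dev


print(coefficient_of_variation(5.0, 20.0))  # 25.0
print(pearson_skewness(mean=12.0, median=10.0, std_dev=4.0))  # 1.5
```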

Basic Probability

For mutually exclusive events: P(A or B) = P(A) + P(B)

For non-mutually exclusive events: P(A or B) = P(A) + P(B) - P(A and B)

For independent events: P(A and B) = P(A) x P(B)

For conditional events: P(A and B) = P(A) x P(B given that A occurs) = P(A) x P(B|A)

Bayes' theorem calculates the posterior probability, which is a revision of a probability based on new information, i.e. the probability that A1 occurs once we know B has occurred:

$$P(A_1|B) = \frac{P(A_1) \times P(B|A_1)}{P(A_1) \times P(B|A_1) + P(A_2) \times P(B|A_2)}$$
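A sketch of Bayes' theorem generalised to any number of mutually exclusive, exhaustive events A1, A2, and so on. The priors and likelihoods are invented for illustration: think of two suppliers with different defect rates, with B = "item is defective".

```python
def bayes_posterior(priors, likelihoods):
    """Posterior P(A_k | B) for mutually exclusive, exhaustive events A_k."""
    joint = [p * l for p, l in zip(priors, likelihoods)]  # P(A_k) * P(B | A_k)
    total = sum(joint)  # P(B), the denominator
    return [j / total for j in joint]


post = bayes_posterior(priors=[0.6, 0.4], likelihoods=[0.05, 0.10])
print([round(p, 4) for p in post])  # [0.4286, 0.5714]
```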

Mean of a Probability Distribution

$$\mu = \sum [x P(x)]$$

(where x is the value of a discrete random variable and P(x) the probability of that value occurring)

Standard Deviation of a Probability Distribution

$$\sigma = \sqrt{\sum (x - \mu)^2 P(x)}$$

(Variance = $\sigma^2$)
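The mean and standard deviation of a discrete probability distribution, sketched with a fair six-sided die as an assumed example.

```python
import math


def dist_mean(outcomes):
    """mu = sum(x * P(x)) for a list of (x, P(x)) pairs."""
    return sum(x * p for x, p in outcomes)


def dist_std(outcomes):
    """sigma = sqrt(sum((x - mu)^2 * P(x)))."""
    mu = dist_mean(outcomes)
    return math.sqrt(sum((x - mu) ** 2 * p for x, p in outcomes))


# Fair six-sided die: mu = 3.5, variance = 35/12
die = [(x, 1 / 6) for x in range(1, 7)]
print(round(dist_mean(die), 4), round(dist_std(die) ** 2, 4))  # 3.5 2.9167
```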

Binomial Probability

2 mutually exclusive outcomes for each trial

The random variable is the number of successes in a fixed number of trials

The probability of success is constant from one trial to another

Trials are independent of one another

Binomial probability of x successes in n trials:

$$P(x) = \frac{n!}{x!(n - x)!} p^x (1 - p)^{n - x}$$

Good for small values of n

Binomial tables can also be used; for a given value of n they are tabulated by:

- p (probability of success)

- x (number of observed successes)

Probabilities can be summed to give a cumulative probability

For a binomial distribution:

Mean: $\mu = np$

Std. Dev: $\sigma = \sqrt{np(1 - p)}$
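A sketch of the binomial formula using `math.comb` (Python 3.8+) for n!/(x!(n-x)!). Summing the individual probabilities gives the cumulative probability that a binomial table would show. The values n = 10 and p = 0.3 are illustrative.

```python
import math


def binomial_pmf(x, n, p):
    """P(x successes in n trials) = n! / (x!(n-x)!) * p^x * (1-p)^(n-x)."""
    return math.comb(n, x) * p ** x * (1 - p) ** (n - x)


def binomial_cdf(x, n, p):
    """Cumulative probability of at most x successes."""
    return sum(binomial_pmf(k, n, p) for k in range(x + 1))


n, p = 10, 0.3
print(round(binomial_pmf(3, n, p), 4))  # 0.2668
print(round(binomial_cdf(3, n, p), 4))  # 0.6496
print(round(n * p, 4), round(math.sqrt(n * p * (1 - p)), 4))  # 3.0 1.4491
```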

Poisson Probability Distribution

Used when p < 0.05 and n > 100 (it is the limiting form of the binomial distribution):

$$P(x) = \frac{\mu^x e^{-\mu}}{x!}$$

For a Poisson distribution, mean = variance: $\mu = \sigma^2 = np$, so the std dev is $\sigma = \sqrt{np}$

Poisson distribution tables are available and give the probability of x (number of successes) for a given $\mu$ (mean number of successes)
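A sketch of the Poisson formula, using μ = np for a hypothetical case with n = 200 and p = 0.01, which meets the p < 0.05, n > 100 rule of thumb. The exact binomial probability is printed alongside to show how close the approximation is.

```python
import math


def poisson_pmf(x, mu):
    """P(x) = mu^x * e^(-mu) / x!"""
    return mu ** x * math.exp(-mu) / math.factorial(x)


n, p = 200, 0.01
mu = n * p  # mean number of successes
print(round(poisson_pmf(0, mu), 4), round(poisson_pmf(2, mu), 4))  # 0.1353 0.2707
# Exact binomial probability of 2 successes, for comparison
print(round(math.comb(n, 2) * p ** 2 * (1 - p) ** (n - 2), 4))
```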

Normal Probability Distribution

Standard Normal Distribution

Mean = median = mode

Mean = 0

Standard deviation $\sigma$ = 1

Used as a comparison for other normal distributions

Z values

Used to standardize observations from normal distributions. The Z value describes how far an observation is from the population mean in terms of standard deviations:

$$Z = \frac{\text{Observation} - \text{Mean}}{\text{Std Dev}} = \frac{x - \mu}{\sigma}$$

The area under the curve in a normal distribution between two values is the probability of an observation

falling between the two values.
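Instead of a printed Z table, the area under the standard normal curve can be computed from the error function, since Φ(z) = 0.5(1 + erf(z/√2)). This sketch assumes a hypothetical population with μ = 100 and σ = 15.

```python
import math


def z_score(x, mu, sigma):
    """Number of standard deviations between x and the mean."""
    return (x - mu) / sigma


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def prob_between(x1, x2, mu, sigma):
    """Area under the normal curve between x1 and x2."""
    return normal_cdf(z_score(x2, mu, sigma)) - normal_cdf(z_score(x1, mu, sigma))


# Area between the mean and 1.96 standard deviations
print(round(normal_cdf(1.96) - 0.5, 4))  # 0.475
# Hypothetical population: P(85 < x < 115) with mu = 100, sigma = 15
print(round(prob_between(85, 115, 100, 15), 4))  # 0.6827
```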

A table of Z values is used to find the area under the normal curve between the mean and a particular value. The areas are given as decimals, so multiply by 100 to get the percentage probability. These tables are used for:

Finding the probability that an observation will fall between the mean and a given value (a one-tailed test of Z)

Finding the probability that an observation will fall in a range around the mean, i.e. a confidence interval (a two-tailed test of Z)

Confidence intervals are two-tailed tests of Z at a given number of standard deviations. They describe the probability of an observation falling within that number of standard deviations either side of the mean:

34% of observations fall between the mean and 1σ, so 68% fall within ±1σ

45% of observations fall between the mean and 1.65σ, so 90% fall within ±1.65σ

47.5% of observations fall between the mean and 1.96σ, so 95% fall within ±1.96σ

49.5% of observations fall between the mean and 2.58σ, so 99% fall within ±2.58σ

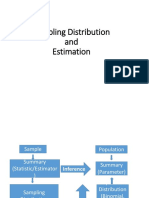

Standard Errors

Sampling error = $\bar{x} - \mu$ (the same idea applies to the variance and standard deviation: sampling error is the difference between the sample statistic and the population statistic)

A distribution of sample means from a large sample size is approximately normal, with a mean of $\mu$ and a variance of $\sigma^2 / n$ (the central limit theorem)

Point estimates are single sample values used to estimate population parameters (e.g. the sample mean $\bar{x}$)

Interval estimates are calculated from the point estimates. They describe the range around the point estimate in which the population parameter is likely to fall for a given level of confidence, using Z values:

95% of the time, the population mean is within ±1.96 $\sigma_{\bar{x}}$ of the sample mean $\bar{x}$

99% of the time, the population mean is within ±2.58 $\sigma_{\bar{x}}$ of the sample mean $\bar{x}$

The standard error of the sample mean (the standard deviation of the distribution of sample means) is:

$$\sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}}$$

If $\sigma$ is not known, use the sample standard deviation s: $s_{\bar{x}} = \frac{s}{\sqrt{n}}$

Calculation of the confidence intervals uses the Z values, e.g. the 95% confidence interval:

$$\bar{x} \pm 1.96 \frac{s}{\sqrt{n}}$$

For other intervals, replace 1.96 with the Z value from the Z table for the given confidence level
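A sketch of the 95% confidence interval for the mean using the sample standard deviation. The sample figures are hypothetical, and passing z = 2.58 gives the 99% interval instead.

```python
import math


def mean_confidence_interval(x_bar, s, n, z=1.96):
    """x_bar +/- z * s / sqrt(n); z = 1.96 for 95%, 2.58 for 99%."""
    se = s / math.sqrt(n)  # standard error of the sample mean
    return x_bar - z * se, x_bar + z * se


# Hypothetical sample: mean 50, s = 10, n = 100, so the standard error is 1
low, high = mean_confidence_interval(50, 10, 100)
print(round(low, 2), round(high, 2))  # 48.04 51.96
```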

Hypothesis Testing

Step 1: Write the null hypothesis and the alternative hypothesis

H0 will always contain an equality

H1 never contains an equality

For a one-tailed test, H0 is greater than or equal to (or less than or equal to) a given test value; H1 is then less than (or greater than) the test value

For a two-tailed test, H0 is equal to a test value and H1 is not equal to that value

Step 2: Select the level of significance

The significance level is the probability of rejecting the null hypothesis when it is true (it is related to confidence intervals):

5% significance level: 95% confidence level, critical Z = ±1.96 (for a two-tailed test)

1% significance level: 99% confidence level, critical Z = ±2.58 (for a two-tailed test)

The risk of rejecting a correct H0 is the α risk (Type I error); the risk of accepting a wrong H0 is the β risk (Type II error):

If H0 is true: accepting H0 is correct; rejecting H0 is an α (Type I) error

If H0 is false: accepting H0 is a β (Type II) error; rejecting H0 is correct

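Step 3: Calculate the test statistic

Use:

$$Z = \frac{\bar{x} - \mu}{\sigma_{\bar{x}}}, \quad \sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}}$$

Where:

Z = test statistic

$\bar{x}$ = sample mean

$\mu$ = population mean (under H0)

$\sigma_{\bar{x}}$ = standard error of the sample mean (if $\sigma$ is unavailable, use the sample std. dev.: $s_{\bar{x}} = \frac{s}{\sqrt{n}}$)

For single observations, use the plain Z value: $Z = \frac{x - \mu}{\sigma}$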

Step 4: Establish the decision rule

The decision rule states when to reject the null hypothesis: how many standard errors (Z) the sample mean $\bar{x}$ must be from the H0 value in order for H0 to be rejected. It is driven by the significance level.

Critical Z values (significance level: one-tailed; two-tailed):

10%: 1.28; 1.65

5%: 1.645; 1.96

1%: 2.33; 2.58

If the absolute value of the computed Z is less than the critical Z value, the null hypothesis is not rejected (accepted); otherwise H0 is rejected

Step 5: Make the decision based on the data

Additionally, P-values are considered. The P-value is the probability of observing a sample result at least as extreme as the current one, assuming that the null hypothesis is true.

If the P-value is less than the significance level, H0 is rejected. For a two-tailed test:

P-value = 2 x (0.5 - the area under the curve from the centre of the distribution to Z*)

* taken from the standard Z tables
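Steps 3 to 5 for a two-tailed Z test can be sketched as below. The sample figures are hypothetical, and the p-value uses an error-function normal CDF in place of the Z table. Comparing the p-value with α gives the same decision as comparing |Z| with the critical value (1.96 at 5%).

```python
import math


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def z_test_two_tailed(x_bar, mu0, sigma, n, alpha=0.05):
    """Return (Z, two-tailed p-value, reject H0?)."""
    z = (x_bar - mu0) / (sigma / math.sqrt(n))  # Step 3: test statistic
    p_value = 2 * (1 - normal_cdf(abs(z)))      # = 2 * (0.5 - area from 0 to |Z|)
    reject = p_value < alpha                    # Steps 4-5: decision
    return z, p_value, reject


# Hypothetical: H0: mu = 100; sample mean 103, sigma = 10, n = 64
z, p, reject = z_test_two_tailed(103, 100, 10, 64)
print(round(z, 2), round(p, 4), reject)  # 2.4 0.0164 True
```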

Correlation Coefficient:

$$r = \frac{n \sum xy - (\sum x)(\sum y)}{\sqrt{[n \sum x^2 - (\sum x)^2][n \sum y^2 - (\sum y)^2]}}$$
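A sketch of the computational formula for r, with r squared giving the coefficient of determination. The five data points are invented.

```python
import math


def correlation(xs, ys):
    """Pearson r via the computational (sums) formula."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    syy = sum(y * y for y in ys)
    return (n * sxy - sx * sy) / math.sqrt((n * sxx - sx ** 2) * (n * syy - sy ** 2))


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
r = correlation(xs, ys)
print(round(r, 4), round(r ** 2, 4))  # 0.7746 0.6
```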

Coefficient of determination = $R^2 = r^2 = \frac{\text{variability in Y explained by variability in X}}{\text{total variability in Y}}$

e.g. $R^2$ = 0.80 between a stock and the index means that 80% of the stock's movement is explained by movement in the index (systematic risk) and 20% is specific to the stock (unsystematic risk)

Total risk = Systematic Risk + Unsystematic Risk

t-statistics and hypothesis testing

t-statistics are used in place of Z-statistics for small (n < 30) samples; they are used in hypothesis testing in the same way as the Z-statistic:

$$t = \frac{r\sqrt{n - 2}}{\sqrt{1 - r^2}}$$

(r = correlation coefficient; $r^2$ = coefficient of determination; n - 2 = degrees of freedom)

Use t-statistics to determine whether the correlation of x and y is significantly different from zero, i.e. the null hypothesis is that x and y are uncorrelated:

H0: r = 0

H1: r ≠ 0

Once the t-value is computed, look up the critical t-value in a table for the given number of degrees of freedom and significance level; if the absolute value of the computed t-value is greater than the critical t-value, reject the null hypothesis.
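The t-test on a correlation coefficient, sketched with hypothetical inputs. The critical value 2.060 (two-tailed, 5%, 25 degrees of freedom) is taken from a standard t table rather than computed.

```python
import math


def correlation_t_stat(r, n):
    """t = r * sqrt(n - 2) / sqrt(1 - r^2), with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Hypothetical: r = 0.5 from n = 27 observations, so 25 degrees of freedom
t = correlation_t_stat(0.5, 27)
print(round(t, 3))  # 2.887
print(abs(t) > 2.060)  # True: reject H0 (r = 0)
```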

Linear Regression Analysis

The relationship between x and y can be shown on a regression line, the best-fit straight line through a set of data points. The regression line is found by the least squares principle and is based on the equation for a straight line:

$$y' = a + bx$$

where:

$$b = \frac{n \sum xy - (\sum x)(\sum y)}{n \sum x^2 - (\sum x)^2} \quad \text{and} \quad a = \frac{\sum y}{n} - b \frac{\sum x}{n}$$
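The least-squares slope and intercept can be computed directly from the sums in these formulas. This sketch uses a small invented data set.

```python
def fit_line(xs, ys):
    """Least-squares slope b and intercept a for y' = a + b x."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    b = (n * sxy - sx * sy) / (n * sxx - sx ** 2)
    a = sy / n - b * sx / n
    return a, b


a, b = fit_line([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(a, 4), round(b, 4))  # 2.2 0.6
```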

Non-linear series (e.g. a curvilinear series) can be plotted as a straight line by taking logs (NB a curvilinear series is an example of compounding and is exponential, $Y = a b^X$):

$$\log(Y) = \log(a) + X \log(b)$$

Once the linear relationship has been established, the standard error of estimate can be calculated using the sum of squared errors (SSE) method:

$$s_{y \cdot x} = \sqrt{\frac{\sum (y_i - y')^2}{n - 2}}$$

where y' is the value of y for each value of x from the regression line and $y_i$ is the actual value of y for each x

Could also use:

$$s_{y \cdot x} = \sqrt{\frac{\sum y^2 - a \sum y - b \sum xy}{n - 2}}$$

In order to determine how good the regression is (i.e. how closely variation in y is correlated with variation in x), we use the coefficient of determination $R^2$:

$$R^2 = \frac{\sum (y - \bar{y})^2 - \sum (y_i - y')^2}{\sum (y - \bar{y})^2}$$

where $\sum (y_i - y')^2$ = SSE and $\sum (y - \bar{y})^2$ = the total sum of squares of y

As SSE tends to 0, $R^2$ tends to 1, telling us that the observations of y for a given x are falling closer and closer to the regression line.

Also, since $|r| = \sqrt{R^2}$, when $R^2 = 1$, $|r| = 1$; conversely, as r tends to 0, the standard error of estimate tends to $\sigma_y$ (the standard deviation of y)
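A sketch of the standard error of estimate and R² on a small invented data set. SSE is computed from the fitted values y', and the total sum of squares from the deviations of y about its mean.

```python
import math


def regression_stats(xs, ys):
    """Return (standard error of estimate, R^2) for a least-squares line."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    b = (n * sxy - sx * sy) / (n * sxx - sx ** 2)
    a = sy / n - b * sx / n
    fitted = [a + b * x for x in xs]                       # y' on the line
    sse = sum((y - yp) ** 2 for y, yp in zip(ys, fitted))  # sum (y_i - y')^2
    y_bar = sy / n
    sst = sum((y - y_bar) ** 2 for y in ys)                # sum (y - y_bar)^2
    see = math.sqrt(sse / (n - 2))                         # standard error of estimate
    r_squared = (sst - sse) / sst
    return see, r_squared


see, r2 = regression_stats([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(see, 4), round(r2, 4))  # 0.8944 0.6
```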

The following assumptions underpin linear regression:

Normality: for each observation of x the distribution of dependent y variables is normal

Linearity: the mean of each dependent observation y lies on the regression line

Homoskedasticity: the variability of y does not change with x

Statistical Independence: the dependent observations of y are unrelated to one another

Confidence intervals are used in regression analysis to determine the range in which the dependent variable y lies, on average, for a given value of the independent variable x, i.e. an interval that applies to the whole group of observations with that value of x:

Confidence Interval:

$$y' \pm t_{\text{prob},\,n-2} \cdot s_{y \cdot x} \sqrt{\frac{1}{n} + \frac{(x - \bar{x})^2}{\sum x^2 - \frac{(\sum x)^2}{n}}}$$

The prediction interval is very similar but applies only to a specific (individual) value of the dependent variable and not to the entire group:

Prediction Interval:

$$y' \pm t_{\text{prob},\,n-2} \cdot s_{y \cdot x} \sqrt{1 + \frac{1}{n} + \frac{(x - \bar{x})^2}{\sum x^2 - \frac{(\sum x)^2}{n}}}$$

The prediction interval is wider than the confidence interval, since we are less confident predicting a specific value of y from x than predicting the value for a group

As SSE increases, the confidence and prediction intervals widen, i.e. the error factor (unsystematic risk) increases and confidence in using the regression model to predict y falls

Steps for using the confidence and prediction intervals:

First compute the intercept and the slope for the data set (from the equations for the constants a and b)

Compute the standard error of estimate from:

$$s_{y \cdot x} = \sqrt{\frac{\sum y^2 - a \sum y - b \sum xy}{n - 2}}$$

Then clarify which confidence or prediction interval we are trying to compute, i.e. for which value of x an interval is needed

Find the critical t-statistic for the given significance level and degrees of freedom (n - 2)

Compute the confidence and prediction intervals using the equations above (NB for a large sample size the square-root adjustment is seldom required, so as an approximation use:

Confidence interval ≈ prediction interval ≈ $y' \pm t_{\text{prob},\,n-2} \cdot s_{y \cdot x}$)
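These steps can be sketched end to end. The critical t value (3.182 for a 95% two-tailed interval with n - 2 = 3 degrees of freedom) is looked up from a t table and passed in, and `regression_intervals` and the data are hypothetical. At x0 equal to the mean of x, the prediction interval is visibly wider than the confidence interval.

```python
import math


def regression_intervals(xs, ys, x0, t_crit):
    """Confidence and prediction intervals for y at x0 (t_crit from a t table, n - 2 df)."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    syy = sum(y * y for y in ys)
    # Step 1: slope and intercept
    b = (n * sxy - sx * sy) / (n * sxx - sx ** 2)
    a = sy / n - b * sx / n
    # Step 2: standard error of estimate (shortcut form)
    see = math.sqrt((syy - a * sy - b * sxy) / (n - 2))
    # Steps 3-5: point estimate and the two interval widths
    y_hat = a + b * x0
    core = 1 / n + (x0 - sx / n) ** 2 / (sxx - sx ** 2 / n)
    ci = t_crit * see * math.sqrt(core)
    pi = t_crit * see * math.sqrt(1 + core)
    return (y_hat - ci, y_hat + ci), (y_hat - pi, y_hat + pi)


ci, pi = regression_intervals([1, 2, 3, 4, 5], [2, 4, 5, 4, 5], x0=3, t_crit=3.182)
print([round(v, 3) for v in ci], [round(v, 3) for v in pi])  # [2.727, 5.273] [0.882, 7.118]
```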

Time Series

A data set which is a function of time e.g. a stock price series

Key components:

Secular trend: smooth, long-term trend embedded in a time series

Cyclical trend: intermediate-term variation (> 1 year), e.g. the business cycle

Seasonal variation: shorter-term variation (< 1 year); patterns that may repeat over time

Irregular variation: episodic variation that is definable but unpredictable

The linear trend equation is the same as the basic regression equation but t replaces x:

$$y = a + bt$$

where:

$$b = \frac{n \sum ty - (\sum t)(\sum y)}{n \sum t^2 - (\sum t)^2} \quad \text{and} \quad a = \frac{\sum y}{n} - b \frac{\sum t}{n}$$

Moving averages smooth out the variability of a data series by calculating and plotting a series of simple averages. In order to apply a moving average, the data series must have a linear trend (T) and a rhythmic cycle (C); the moving average smooths out the cyclical and irregular components of a time series.
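A simple moving-average sketch. The series is invented so that a 3-period cycle sits on top of a linear trend; averaging over the cycle length leaves only the trend.

```python
def moving_average(series, window):
    """Simple moving averages over a sliding window of `window` periods."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [sum(series[i:i + window]) / window for i in range(len(series) - window + 1)]


# Hypothetical series: a 3-period rhythmic cycle on a trend of +1 per period
series = [10, 14, 9, 13, 17, 12, 16, 20, 15]
print(moving_average(series, 3))  # [11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]
```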

PVs, FVs, Annuities and Holding Periods

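Future Value:

$$FV = PV \left(1 + \frac{i}{y}\right)^n$$

Present Value:

$$PV = \frac{FV}{\left(1 + \frac{i}{y}\right)^n}$$

(i/y = interest rate per period; n = number of periods)

Rule of 72: $\frac{72}{\text{percentage } i}$ ≈ time taken for money to double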
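A sketch of the single-sum FV and PV formulas and the Rule of 72, with the rate expressed per period. The exact doubling time ln 2 / ln(1 + i) is included for comparison. The figures are illustrative.

```python
import math


def future_value(pv, rate, periods):
    """FV = PV * (1 + rate)^periods, with rate per period."""
    return pv * (1 + rate) ** periods


def present_value(fv, rate, periods):
    """PV = FV / (1 + rate)^periods."""
    return fv / (1 + rate) ** periods


def rule_of_72(rate_percent):
    """Approximate number of periods for money to double."""
    return 72 / rate_percent


def exact_doubling_time(rate):
    """Exact periods to double: ln 2 / ln(1 + rate)."""
    return math.log(2) / math.log(1 + rate)


print(round(future_value(1000, 0.08, 10), 2))      # 2158.92
print(round(present_value(2158.92, 0.08, 10), 2))  # 1000.0
print(rule_of_72(8), round(exact_doubling_time(0.08), 2))  # 9.0 9.01
```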

Future Value of an Ordinary Annuity:

$$FV = PMT \left[\frac{\left(1 + \frac{i}{y}\right)^n - 1}{\frac{i}{y}}\right]$$

Present Value of an Ordinary Annuity:

$$PV = PMT \left[\frac{1 - \frac{1}{\left(1 + \frac{i}{y}\right)^n}}{\frac{i}{y}}\right]$$

Assumes annual payments received at the end of each time period

NB for an annuity due, payments fall at the start of each period, so to calculate the PV either:

Set the HP12C into BEGIN mode

or

Treat it as an ordinary annuity that is one period shorter than the annuity due and simply add back the very first payment, which is received immediately at its full (undiscounted) value
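The annuity formulas can be sketched as follows, with `rate` the interest rate per period. `pv_annuity_due` follows the second method (one period shorter, plus the first payment undiscounted). Inputs are illustrative.

```python
def fv_ordinary_annuity(pmt, rate, periods):
    """FV = PMT * [((1 + r)^n - 1) / r], payments at the end of each period."""
    return pmt * ((1 + rate) ** periods - 1) / rate


def pv_ordinary_annuity(pmt, rate, periods):
    """PV = PMT * [(1 - 1 / (1 + r)^n) / r]."""
    return pmt * (1 - 1 / (1 + rate) ** periods) / rate


def pv_annuity_due(pmt, rate, periods):
    """Ordinary annuity one period shorter, plus the first (undiscounted) payment."""
    return pmt + pv_ordinary_annuity(pmt, rate, periods - 1)


print(round(fv_ordinary_annuity(100, 0.10, 3), 2))  # 331.0
print(round(pv_ordinary_annuity(100, 0.10, 3), 2))  # 248.69
print(round(pv_annuity_due(100, 0.10, 3), 2))       # 273.55
```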

Present Value of a Perpetuity:

$$PV = \frac{PMT}{\frac{i}{y}}$$

(for consol bonds and preferred stock)

Non-annual compounding and amortisation:

To get the Effective Annual Rate: $EAR = \left(1 + \frac{i}{n}\right)^n - 1$ (i = stated annual rate; n = compounding periods per year, so i/n is the rate per period)

To get back: $i = n\left[(1 + EAR)^{1/n} - 1\right]$

The limiting condition is continuous compounding:

$$FV = PV \cdot e^{\frac{i}{y} \cdot n}$$
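A sketch of the EAR conversion in both directions and of the continuous-compounding limit, where the EAR becomes e^i - 1. The 12% stated rate compounded monthly is an illustrative input.

```python
import math


def ear(stated_rate, periods_per_year):
    """EAR = (1 + i/n)^n - 1."""
    return (1 + stated_rate / periods_per_year) ** periods_per_year - 1


def stated_from_ear(ear_value, periods_per_year):
    """i = n * [(1 + EAR)^(1/n) - 1]."""
    return periods_per_year * ((1 + ear_value) ** (1 / periods_per_year) - 1)


def continuous_ear(stated_rate):
    """Limiting case as compounding frequency grows without bound: e^i - 1."""
    return math.exp(stated_rate) - 1


e12 = ear(0.12, 12)
print(round(e12, 6))                       # 0.126825
print(round(stated_from_ear(e12, 12), 6))  # 0.12
print(round(continuous_ear(0.12), 6))      # 0.127497
```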

For amortising loans:

Interest Paid = Starting Balance x Interest Rate

Principal Paid = Payment - Interest Paid

New Balance = Previous Starting Balance - Principal Paid
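The three amortisation lines can be iterated period by period. This sketch assumes a level payment computed from the present value of an ordinary annuity formula, and the loan terms are hypothetical.

```python
def amortisation_schedule(balance, rate, payment, periods):
    """Return (period, interest, principal, new balance) rows, rounded to cents."""
    rows = []
    for period in range(1, periods + 1):
        interest = balance * rate        # Interest Paid = Starting Balance x Rate
        principal = payment - interest   # Principal Paid = Payment - Interest Paid
        balance = balance - principal    # New Balance = Starting Balance - Principal Paid
        rows.append((period, round(interest, 2), round(principal, 2), round(balance, 2)))
    return rows


# Hypothetical loan: 1,000 at 10% per period, repaid in 3 level payments
loan, rate, n = 1000.0, 0.10, 3
# Level payment from the PV of an ordinary annuity, solved for PMT
payment = loan * rate / (1 - (1 + rate) ** -n)
for row in amortisation_schedule(loan, rate, payment, n):
    print(row)
```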

Holding Period Return:

$$HPR = \frac{\text{Ending Value of Investment}}{\text{Beginning Value of Investment}}$$

Holding Period Yield = HPY = HPR - 1

Annualised HPR = $HPR^{1/n}$ (n = number of years held)

Annualised HPY = $HPR^{1/n} - 1$

If the investment pays dividends, these must also be included:

$$HPR_{div} = \frac{\text{Dividend} + \text{Ending Value}}{\text{Beginning Value}}$$

The simple average yield of a number of investments over time is given by the arithmetic mean:

$$AM = \frac{\sum HPY}{n}$$

Alternatively, the geometric mean return can be calculated for a series of investments to incorporate any variance:

$$GM = \left[\prod HPR\right]^{1/n} - 1$$

Attributes of Arithmetic and Geometric Mean:

Geometric mean is the best measure of historical returns as it represents the compound annual rate of return

AM ≥ GM; any variance between HPRs will reduce the GM, and in the absence of variance AM = GM. Approximately (with both means expressed as 1 + return):

$$GM^2 \approx AM^2 - \text{Variance}$$

AM can give nonsensical results: e.g. USD 1 invested that doubled to USD 2 and then fell back to USD 1 over subsequent periods would have HPYs of +100% and -50%, giving an AM(HPY) of 25%; GM returns the true HPY of 0%, since we began with USD 1 and finished with USD 1
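A sketch of HPR, annualised HPY, and the arithmetic and geometric mean yields, reproducing the doubling-then-halving example. `math.prod` requires Python 3.8+, and the helper names are illustrative.

```python
import math


def hpr(begin_value, end_value, dividend=0.0):
    """Holding period return: (dividend + ending value) / beginning value."""
    return (dividend + end_value) / begin_value


def annualised_hpy(hpr_value, years):
    """HPR^(1/n) - 1, with n the number of years held."""
    return hpr_value ** (1 / years) - 1


def arithmetic_mean_hpy(hprs):
    """Simple average of the HPYs (HPY = HPR - 1)."""
    return sum(h - 1 for h in hprs) / len(hprs)


def geometric_mean_hpy(hprs):
    """(product of HPRs)^(1/n) - 1: the compound rate per period."""
    return math.prod(hprs) ** (1 / len(hprs)) - 1


# USD 1 doubles to USD 2, then falls back to USD 1
hprs = [hpr(1, 2), hpr(2, 1)]     # [2.0, 0.5]
print(arithmetic_mean_hpy(hprs))  # 0.25
print(geometric_mean_hpy(hprs))   # 0.0
# Hypothetical: 100 grows to 121 over 2 years, i.e. 10% a year
print(round(annualised_hpy(hpr(100, 121), 2), 4))  # 0.1
```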

Average Portfolio Returns:

$$HPY_{portfolio} = \sum (W_{investment} \times HPY_{investment})$$

Expected Return: $ER = \sum (\text{probability of a possible return}) \times (\text{value of return})$

Variance of Returns: $\text{Variance}_{returns} = \sum (\text{probability of a possible return}) \times (\text{value of return} - ER)^2$

Std. Dev. of Returns: $\sigma_{returns} = \sqrt{\text{Variance}_{returns}}$

Coefficient of Variation (of returns):

$$CV = \frac{\sigma_{returns}}{ER}$$

CV gives the relative risk per unit of return, which can be used to determine which of two stocks is more risky
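A sketch of expected return, standard deviation and coefficient of variation over a set of scenarios. The probabilities and returns are hypothetical.

```python
import math


def expected_return(scenarios):
    """ER = sum(probability * return) over (probability, return) pairs."""
    return sum(p * r for p, r in scenarios)


def return_std(scenarios):
    """Square root of sum(probability * (return - ER)^2)."""
    er = expected_return(scenarios)
    return math.sqrt(sum(p * (r - er) ** 2 for p, r in scenarios))


# Hypothetical scenarios (probabilities sum to 1)
scenarios = [(0.25, 0.20), (0.50, 0.10), (0.25, -0.10)]
er = expected_return(scenarios)
sd = return_std(scenarios)
print(round(er, 4), round(sd, 4), round(sd / er, 4))  # 0.075 0.109 1.453
```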

Historical Risk:

$$\sigma = \sqrt{\frac{\sum (HPY - \overline{HPY})^2}{n}}$$

Expected Return (Stocks and Stock Indices):

$$ER = \frac{\text{Expected Div.} + (\text{End Price} - \text{Beginning Price})}{\text{Beginning Price}}$$
