Probability and Stats Notes

Uploaded by: Michael Garcia

Moment Generating Function and the Central Limit Theorem

Aria Nosratinia Probability and Statistics 7-1

Motivation

Moment generating function:

Systematic calculation of all moments

Recovery of pdf from the moments

Central Limit Theorem:

Gaussianity of appropriate sums of random variables

Characterization of noise

Important approximations

Moments of Sums

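If $W = X_1 + \cdots + X_n$, then

$$E[W] = E[X_1] + \cdots + E[X_n]$$

$$\mathrm{Var}(W) = \sum_{i=1}^{n} \mathrm{Var}(X_i) + 2 \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \mathrm{Cov}(X_i, X_j)$$

When the $X_i$ are uncorrelated,

$$\mathrm{Var}(W) = \mathrm{Var}(X_1) + \cdots + \mathrm{Var}(X_n)$$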
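The variance formula can be checked numerically. The sketch below uses a small made-up data set (the numbers are illustrative assumptions, not from the notes) and compares the variance of the sum computed directly against the sum of variances plus twice the pairwise covariances with $i < j$.

```python
from itertools import combinations

def mean(values):
    return sum(values) / len(values)

def cov(a, b):
    """Population covariance of two equal-length samples."""
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)

# Hypothetical, correlated sample columns (illustrative data only).
xs = [
    [1.0, 2.0, 4.0, 7.0, 3.0],
    [2.0, 1.0, 5.0, 6.0, 2.0],
    [0.0, 3.0, 1.0, 4.0, 8.0],
]

# W = X1 + X2 + X3, formed sample by sample.
w = [sum(col) for col in zip(*xs)]

var_direct = cov(w, w)
var_formula = sum(cov(x, x) for x in xs) + 2 * sum(
    cov(xs[i], xs[j]) for i, j in combinations(range(len(xs)), 2)
)
print(var_direct, var_formula)
```

The two printed values should agree up to floating-point rounding, because the identity holds for sample moments as well as for true moments.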

Sum of Two RV

Take two random variables $X$ and $Y$; we wish to find the pdf of the sum. Define:

$$W = X + Y, \qquad V = Y$$

Then use the Jacobian method to find $f_{WV}(w, v)$. It is easy to see that $|J| = 1$, therefore:

$$f_{WV}(w, v) = f_{XY}(x, y) = f_{XY}(w - y, y)$$

Integrate to find the marginal:

$$f_W(w) = \int_{-\infty}^{\infty} f_{XY}(w - y, y)\, dy$$

This is an integration of $f_{XY}$ along a diagonal line.

Example

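Random variables $X, Y$ are distributed as

$$f_{XY}(x, y) = \begin{cases} 2 & 0 \le x, y \le 1,\ x + y \le 1 \\ 0 & \text{else} \end{cases}$$

Find the pdf of the sum.

Using the previous result:

$$f_W(w) = \int_{-\infty}^{\infty} f_{XY}(w - y, y)\, dy = \int_0^w 2\, dy = 2w, \qquad 0 \le w \le 1$$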
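As a sanity check on the result $f_W(w) = 2w$, the diagonal-line integral can be approximated numerically. This is a minimal sketch using a midpoint rule. The function names and the step count are illustrative choices, not part of the notes.

```python
def f_xy(x, y):
    """Joint pdf from the example: 2 on the triangle x, y >= 0, x + y <= 1."""
    return 2.0 if (x >= 0 and y >= 0 and x + y <= 1) else 0.0

def f_w(w, steps=10_000):
    """Midpoint-rule approximation of the integral of f_xy(w - y, y) over y in [0, 1]."""
    dy = 1.0 / steps
    return sum(f_xy(w - (k + 0.5) * dy, (k + 0.5) * dy) for k in range(steps)) * dy

for w in (0.25, 0.5, 0.75):
    print(w, f_w(w), 2 * w)
```

For each $w$ inside $(0, 1)$ the numerical value should sit close to $2w$.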

Sum of Independent RV

If $X, Y$ are independent and $W = X + Y$, then:

$$f_W(w) = \int_{-\infty}^{\infty} f_{XY}(w - y, y)\, dy = \int_{-\infty}^{\infty} f_X(w - y) f_Y(y)\, dy = f_X * f_Y$$

The pdf of a sum of independent r.v.s is given by the convolution of the individual pdfs.

In the discrete case:

$$P_W(w) = \sum_{k=-\infty}^{\infty} P_X(k)\, P_Y(w - k)$$

NOTE: Naturally the pmf values may be zero for some of these $k$.
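The discrete convolution is easy to sketch in code. The example below, using two fair dice as an assumed illustration, stores each pmf as a dictionary and only visits the values where the pmf is nonzero, which is the practical meaning of the note above.

```python
from fractions import Fraction

def convolve_pmf(p_x, p_y):
    """pmf of W = X + Y for independent X, Y given as {value: probability} dicts."""
    p_w = {}
    for kx, px in p_x.items():
        for ky, py in p_y.items():
            p_w[kx + ky] = p_w.get(kx + ky, 0) + px * py
    return p_w

die = {face: Fraction(1, 6) for face in range(1, 7)}
two_dice = convolve_pmf(die, die)
print(two_dice[7])  # probability that two fair dice sum to 7
```

Using `Fraction` keeps the arithmetic exact, so the resulting pmf sums to exactly 1.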

Example

Random variable X is Gaussian (0, 1) and random variable Y is

Gaussian with mean 2 and variance 1. Find the pdf of X +Y .
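One way to work this example, assuming $X$ and $Y$ are independent (the statement does not say so explicitly, but the example sits under the independent-sum heading): convolving two Gaussian pdfs gives another Gaussian whose mean and variance are the sums of the individual means and variances.

```latex
W = X + Y \sim \mathcal{N}(0 + 2,\ 1 + 1) = \mathcal{N}(2,\ 2),
\qquad
f_W(w) = \frac{1}{\sqrt{4\pi}} \exp\!\left(-\frac{(w - 2)^2}{4}\right)
```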

Example

Random variables $X, Y$ are distributed according to:

$$f_{XY}(x, y) = \begin{cases} 2 e^{-x} e^{-2y} & x, y > 0 \\ 0 & \text{else} \end{cases}$$

Find the pdf of $W = X + Y$.
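A possible worked solution, using the diagonal-line integral from the "Sum of Two RV" slide. The integrand is nonzero only when both $w - y > 0$ and $y > 0$, so the limits become $0$ to $w$:

```latex
\begin{aligned}
f_W(w) &= \int_0^{w} 2 e^{-(w - y)} e^{-2y}\, dy
        = 2 e^{-w} \int_0^{w} e^{-y}\, dy \\
       &= 2 e^{-w}\left(1 - e^{-w}\right), \qquad w > 0
\end{aligned}
```

As a check, this integrates to $2\left(1 - \tfrac{1}{2}\right) = 1$ over $w > 0$.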

Moment Generating Function

Definition: For random variable $X$, the moment generating function is:

$$\phi_X(s) = E\left[e^{sX}\right]$$

For continuous r.v., this is (almost) the Laplace transform of the pdf:

$$\phi_X(s) = \int_{-\infty}^{\infty} e^{sx} f_X(x)\, dx$$

For discrete distributions:

$$\phi_X(s) = \sum_{i} e^{s x_i} P_X(x_i)$$

Example

Find the MGF of the Bernoulli distribution:

$$P_X(x) = \begin{cases} p & x = 1 \\ 1 - p & x = 0 \end{cases}$$

$$\phi_X(s) = (1 - p) e^{0} + p e^{s} = 1 - p + p e^{s}$$

Example

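Find the MGF of the exponential r.v.

$$f_X(x) = \begin{cases} \lambda e^{-\lambda x} & x \ge 0 \\ 0 & \text{else} \end{cases}$$

$$\phi_X(s) = \int_0^{\infty} e^{xs} \lambda e^{-\lambda x}\, dx = \lambda \int_0^{\infty} e^{x(s - \lambda)}\, dx = \frac{\lambda}{s - \lambda} \left[ e^{x(s - \lambda)} \right]_0^{\infty} = \frac{\lambda}{\lambda - s}$$

The integral converges only for $s < \lambda$.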

Moments via MGF

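$$\frac{d\phi_X(s)}{ds} = \frac{d}{ds} \int_{-\infty}^{\infty} e^{sx} f_X(x)\, dx = \int_{-\infty}^{\infty} x e^{sx} f_X(x)\, dx$$

Set $s = 0$ and we get:

$$\left. \frac{d\phi_X(s)}{ds} \right|_{s=0} = E[X]$$

If we take $n$ derivatives, we get $n$ powers of $x$, therefore:

Theorem: A random variable $X$ with MGF $\phi_X(s)$ has moments

$$E[X^n] = \left. \frac{d^n \phi_X(s)}{ds^n} \right|_{s=0}$$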
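The theorem can be illustrated numerically with the Bernoulli MGF $1 - p + p e^{s}$ from the earlier example. The sketch below estimates the first two derivatives at $s = 0$ by central finite differences. The step size `h` and the value `p = 0.3` are arbitrary illustrative choices.

```python
import math

def mgf_bernoulli(s, p):
    """MGF of a Bernoulli(p) variable: 1 - p + p * e^s."""
    return 1.0 - p + p * math.exp(s)

def derivative_at_zero(mgf, order, h=1e-3):
    """Central finite-difference estimate of the first or second derivative at s = 0."""
    if order == 1:
        return (mgf(h) - mgf(-h)) / (2.0 * h)
    if order == 2:
        return (mgf(h) - 2.0 * mgf(0.0) + mgf(-h)) / (h * h)
    raise ValueError("only orders 1 and 2 are sketched here")

p = 0.3
phi = lambda s: mgf_bernoulli(s, p)
first_moment = derivative_at_zero(phi, 1)    # estimates E[X] = p
second_moment = derivative_at_zero(phi, 2)   # estimates E[X^2] = p
variance = second_moment - first_moment ** 2  # estimates p * (1 - p)
print(first_moment, second_moment, variance)
```

Both moments should come out close to $p$, and the variance close to $p(1 - p)$, as expected for a Bernoulli variable.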

Why Moments from MGF?

Each moment requires an integral.

If we need multiple moments, we can take one integral to get MGF,

and then calculate all moments with derivatives (easier than integrals).

Example: Find the first four moments of an exponential random variable with parameter $\lambda$.
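A sketch of how this example can be worked, writing the MGF as $\phi_X(s)$ (a notational assumption) and starting from the exponential MGF $\lambda / (\lambda - s)$ found earlier. Each derivative raises the power of the denominator by one:

```latex
\begin{aligned}
\phi_X(s) &= \frac{\lambda}{\lambda - s},
\qquad
\frac{d^n \phi_X(s)}{ds^n} = \frac{n!\,\lambda}{(\lambda - s)^{n+1}},
\qquad
E[X^n] = \left.\frac{d^n \phi_X(s)}{ds^n}\right|_{s=0} = \frac{n!}{\lambda^n} \\
E[X] &= \frac{1}{\lambda},
\qquad
E[X^2] = \frac{2}{\lambda^2},
\qquad
E[X^3] = \frac{6}{\lambda^3},
\qquad
E[X^4] = \frac{24}{\lambda^4}
\end{aligned}
```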

MGF of Independent Sums

Theorem: If $X, Y$ are independent,

$$\phi_{X+Y}(s) = \phi_X(s)\, \phi_Y(s)$$

Proof:

$$\phi_{X+Y}(s) = E\left[e^{s(X+Y)}\right] = E\left[e^{sX} e^{sY}\right] = E\left[e^{sX}\right] E\left[e^{sY}\right] = \phi_X(s)\, \phi_Y(s)$$

Application of MGF Properties

Using the MGF properties it is easy to show that:

The sum of n i.i.d. Bernoulli-p variables is a binomial (n, p)

The sum of n i.i.d. geometric-p variables is a Pascal (n, p)

The sum of n independent Poisson-$\lambda_i$ random variables is a Poisson with parameter $\sum_i \lambda_i$

The sum of n independent Gaussians is a Gaussian (this is also true for dependent Gaussians, provided they are jointly Gaussian).

The sum of n i.i.d. exponential variables is Erlang.
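The first claim in the list can be spot-checked numerically: raising the Bernoulli MGF $1 - p + p e^{s}$ to the $n$-th power should match the MGF computed directly from the binomial $(n, p)$ pmf. The values of `n`, `p` and the test points `s` below are arbitrary illustrative choices.

```python
from math import comb, exp, isclose

def mgf_bernoulli(s, p):
    return 1 - p + p * exp(s)

def mgf_binomial_from_pmf(s, n, p):
    """MGF computed directly from the binomial(n, p) pmf: sum of e^{sk} P(K = k)."""
    return sum(exp(s * k) * comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1))

n, p = 10, 0.3
checks = [
    isclose(mgf_bernoulli(s, p) ** n, mgf_binomial_from_pmf(s, n, p), rel_tol=1e-9)
    for s in (-1.0, 0.0, 0.5, 1.0)
]
print(all(checks))
```

Agreement at a handful of points is not a proof, but it illustrates why the product rule for MGFs makes these results easy to show.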

Characteristic Function (optional)

The characteristic function of variable $X$ is defined as:

$$\Phi_X(\omega) = \int_{-\infty}^{\infty} e^{j\omega x} f_X(x)\, dx$$

The characteristic function is related to the Fourier transform of the pdf, just like the MGF is related to the Laplace transform.

$$E[X^n] = \left. \frac{1}{j^n} \frac{d^n}{d\omega^n} \Phi_X(\omega) \right|_{\omega = 0}$$

No two distinct pdfs can share the same characteristic function.

All pdfs have characteristic functions, but not all pdfs have an MGF.

Therefore the characteristic function is a powerful tool.

Sum of I.I.D. Variables

We wish to investigate sums of a large group of i.i.d. variables

whose distribution we may not know. Is that possible?

Consider the i.i.d. sum $W = X_1 + \cdots + X_n$ as $n \to \infty$.

$\mu_W = n \mu_X$ and $\mathrm{Var}(W) = n\, \mathrm{Var}(X)$. Both go to infinity. Oops!

To make the analysis more meaningful, we subtract the means, and divide by $\sqrt{n}$ to avoid exploding the variance.

Central Limit Theorem

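Theorem: Consider an i.i.d. sequence $X_1, X_2, \ldots$ with mean $\mu_X$ and variance $\sigma_X^2$. Then the random variables

$$Z_n = \frac{\sum_{i=1}^{n} X_i - n \mu_X}{\sqrt{n \sigma_X^2}}$$

have the property:

$$\lim_{n \to \infty} F_{Z_n}(z) = \Phi(z)$$

where $\Phi$ is the CDF of the standard Gaussian.

This means, if $W_n = X_1 + \cdots + X_n$, for large $n$:

$$F_{W_n}(w) \approx \Phi\left( \frac{w - n \mu_X}{\sqrt{n \sigma_X^2}} \right)$$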
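A small simulation can make the theorem concrete. The sketch below assumes uniform $(0, 1)$ summands, a fixed random seed, and an arbitrary trial count (none of these come from the notes). It compares the empirical CDF of the standardized sum at one point against the standard Gaussian CDF.

```python
import math
import random

def std_normal_cdf(z):
    """Standard Gaussian CDF, written via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def empirical_cdf_of_z(n, z, trials=20_000, seed=0):
    """Fraction of simulated standardized sums Z_n that fall at or below z."""
    rng = random.Random(seed)
    mu, var = 0.5, 1.0 / 12.0  # mean and variance of uniform(0, 1)
    hits = 0
    for _ in range(trials):
        w = sum(rng.random() for _ in range(n))
        z_n = (w - n * mu) / math.sqrt(n * var)
        if z_n <= z:
            hits += 1
    return hits / trials

estimate = empirical_cdf_of_z(n=30, z=1.0)
print(estimate, std_normal_cdf(1.0))
```

The two printed numbers should be close. The remaining gap comes from both the finite $n$ and the sampling noise of the simulation.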

Practical Application of CLT

Problem: Find probabilities involving a sum of i.i.d. variables $W = X_1 + \cdots + X_n$.

Solution: Find $\mu_X$ and $\sigma_X^2$. Then use the CLT.

Interesting Note: CLT also applies to discrete variables.

Even though sum of discrete variables is always discrete, the CDF

approaches a Gaussian.

This is enough to make approximations involving probabilities.

Example 1

Consider $X_i$ to be uniformly distributed over $[-1, 1]$. What is the probability that $W = X_1 + \cdots + X_{16}$ takes values in the interval $[-1, 1]$?

$\mu_X = 0$, $\mathrm{Var}(X) = \frac{1}{3}$

$$P(-1 < W \le 1) = F_W(1) - F_W(-1) \approx \Phi\left(\frac{1 - 0}{4/\sqrt{3}}\right) - \Phi\left(\frac{-1 - 0}{4/\sqrt{3}}\right) = 2\Phi\left(\frac{\sqrt{3}}{4}\right) - 1 \approx 2\Phi(0.43) - 1 = 0.3328$$
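The same calculation can be sketched in code, with $\Phi$ evaluated through `math.erf` instead of a table. The helper names are illustrative. Evaluating $\Phi$ at the unrounded argument $\sqrt{3}/4$ gives roughly 0.335, slightly above the 0.3328 obtained from a table lookup at 0.43.

```python
import math

def std_normal_cdf(z):
    """Standard Gaussian CDF, written via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def clt_interval_probability(a, b, n, mean, variance):
    """CLT approximation of P(a < W <= b) for W = X_1 + ... + X_n (i.i.d.)."""
    std_w = math.sqrt(n * variance)
    return std_normal_cdf((b - n * mean) / std_w) - std_normal_cdf((a - n * mean) / std_w)

# Uniform on [-1, 1]: mean 0, variance 1/3; sixteen summands.
p = clt_interval_probability(-1.0, 1.0, n=16, mean=0.0, variance=1.0 / 3.0)
print(round(p, 4))
```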

Example 2

We flip a fair coin a thousand times. What are the chances that we will have more than 510 heads?

Denote by $X$ the flip of a coin and the number of heads by

$$A = X_1 + X_2 + \cdots + X_{1000}$$

We know $\mu_X = 0.5$ and $\mathrm{Var}(X) = 0.25$.

$$P(A > 510) = 1 - F_A(510) \approx 1 - \Phi\left(\frac{510 - 1000 \times 0.5}{0.5 \sqrt{1000}}\right) = 1 - \Phi(0.63) = 0.2643$$

Question: What would be the probability of exactly 510 heads? Does this lead to problems?
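For a fair coin the exact tail probability can also be computed with integer arithmetic, which gives a feel for the size of the CLT error. This is a minimal sketch with illustrative variable names. The exact value comes out somewhat below the CLT estimate, which hints at the discreteness issue raised by the question above.

```python
from math import comb, erf, sqrt

def std_normal_cdf(z):
    """Standard Gaussian CDF, written via the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

n, p, threshold = 1000, 0.5, 510
mean, std = n * p, sqrt(n * p * (1 - p))

# CLT estimate of P(A > 510), with no correction for discreteness.
clt_estimate = 1.0 - std_normal_cdf((threshold - mean) / std)

# Exact tail for a fair coin: count outcomes with more than 510 heads.
exact = sum(comb(n, k) for k in range(threshold + 1, n + 1)) / 2**n
print(clt_estimate, exact)
```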

Example 3

DeMoivre-Laplace Formula: For a binomial $(n, p)$ variable $K$,

$$P(k_1 \le K \le k_2) \approx \Phi\left(\frac{k_2 - np + 0.5}{\sqrt{np(1 - p)}}\right) - \Phi\left(\frac{k_1 - np - 0.5}{\sqrt{np(1 - p)}}\right)$$

IDEA: on whichever side is included, we use a margin of 0.5 on the probability. This avoids problems with approximating discrete variables.

Example: Calculate $P(K = 8)$ for a binomial $(20, 0.4)$.

Using the previous formula, the answer is zero!

Using the DeMoivre-Laplace formula,

$$P(8 \le K \le 8) \approx P(7.5 \le K \le 8.5) = \Phi\left(\frac{0.5}{\sqrt{4.8}}\right) - \Phi\left(\frac{-0.5}{\sqrt{4.8}}\right) \approx 0.1803$$

The exact answer using the binomial formula is 0.1797.
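The comparison in this example is straightforward to reproduce. The sketch below implements the 0.5-margin approximation next to the exact binomial pmf. Function names are illustrative, and small differences in the last digit relative to the quoted 0.1803 can arise from table rounding.

```python
from math import comb, erf, sqrt

def std_normal_cdf(z):
    """Standard Gaussian CDF, written via the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def de_moivre_laplace(k1, k2, n, p):
    """Approximate P(k1 <= K <= k2) for K ~ binomial(n, p) with a 0.5 margin on each side."""
    mean, std = n * p, sqrt(n * p * (1 - p))
    return std_normal_cdf((k2 - mean + 0.5) / std) - std_normal_cdf((k1 - mean - 0.5) / std)

def binomial_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p)**(n - k)

approx = de_moivre_laplace(8, 8, 20, 0.4)
exact = binomial_pmf(8, 20, 0.4)
print(round(approx, 4), round(exact, 4))
```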

Laplace and De Moivre

Abraham de Moivre (1667-1754): De Moivre formula $(\cos x + i \sin x)^n = \cos nx + i \sin nx$; Gaussian probabilities

Pierre-Simon Laplace (1749-1827): Laplace transform, scalar potentials, Laplace equation (PDE), celestial mechanics

De Moivre's formula predates the Euler formula $e^{ix} = \cos x + i \sin x$.

Laplace almost predicted the existence of black holes!

Advanced Topics

Random Sums of Independent Variables

We draw a random integer $N$ according to some distribution, then form:

$$W = X_1 + \cdots + X_N$$

using i.i.d. variables $X_i$ that are independent of $N$.

The MGF of the sum is:

$$\phi_W(s) = \phi_N\left(\ln \phi_X(s)\right)$$

For proof, see your textbook.

This MGF can be used to calculate probabilities involving random sums.
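A short illustration of the formula, under assumptions chosen here for convenience: $N$ is Poisson with parameter $\alpha$, the $X_i$ are Bernoulli-$p$, and $N$ is independent of the $X_i$. The symbols $\alpha$ and $\phi$ are notational assumptions.

```latex
\begin{aligned}
\phi_N(s) &= e^{\alpha (e^{s} - 1)}, \qquad \phi_X(s) = 1 - p + p e^{s} \\
\phi_W(s) &= \phi_N\!\left(\ln \phi_X(s)\right)
           = e^{\alpha\left(\phi_X(s) - 1\right)}
           = e^{\alpha p (e^{s} - 1)}
\end{aligned}
```

The result is the MGF of a Poisson variable with parameter $\alpha p$, so in this case the random sum is again Poisson.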

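Chernoff Bound

This is used to bound the tail of a probability distribution.

Theorem: For a random variable $X$,

$$P(X \ge c) \le \min_{s \ge 0} e^{-sc} \phi_X(s)$$

IDEA: the probability is hard to calculate, but the bound is easier.

Proof: Let $u(\cdot)$ be the unit step function. For every $s \ge 0$ we have $u(x - c) \le e^{s(x - c)}$, so

$$P(X \ge c) = \int_c^{\infty} f_X(x)\, dx = \int_{-\infty}^{\infty} u(x - c) f_X(x)\, dx \le \int_{-\infty}^{\infty} e^{s(x - c)} f_X(x)\, dx = e^{-sc} \int_{-\infty}^{\infty} e^{sx} f_X(x)\, dx = e^{-sc} \phi_X(s)$$

Since this holds for every $s \ge 0$, it holds in particular for the $s$ that minimizes the right-hand side.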
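To see the bound in action, the sketch below applies it to a standard Gaussian, whose MGF is $e^{s^2/2}$, and compares it with the exact tail. The minimization is done by a simple grid search. The grid range and resolution are arbitrary illustrative choices, and the Gaussian is an assumed example not taken from the notes.

```python
import math

def gaussian_mgf(s):
    """MGF of a standard Gaussian, exp(s^2 / 2)."""
    return math.exp(0.5 * s * s)

def chernoff_bound(c, mgf, s_max=10.0, steps=10_000):
    """Minimize exp(-s c) * mgf(s) over a grid of s in [0, s_max]."""
    grid = (s_max * k / steps for k in range(steps + 1))
    return min(math.exp(-s * c) * mgf(s) for s in grid)

def gaussian_tail(c):
    """Exact P(X >= c) for a standard Gaussian."""
    return 0.5 * math.erfc(c / math.sqrt(2.0))

c = 2.0
bound = chernoff_bound(c, gaussian_mgf)
exact = gaussian_tail(c)
print(bound, exact)
```

For the Gaussian the minimizing $s$ is $s = c$, giving the bound $e^{-c^2/2}$. The bound is loose compared with the exact tail, but it needs only the MGF rather than an integral of the pdf.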

Aria Nosratinia Probability and Statistics 7-26

Вам также может понравиться

- A-level Maths Revision: Cheeky Revision ShortcutsОт EverandA-level Maths Revision: Cheeky Revision ShortcutsРейтинг: 3.5 из 5 звезд3.5/5 (8)

- Multivariate Distributions: Why Random Vectors?Документ14 страницMultivariate Distributions: Why Random Vectors?Michael GarciaОценок пока нет

- Radically Elementary Probability Theory. (AM-117), Volume 117От EverandRadically Elementary Probability Theory. (AM-117), Volume 117Рейтинг: 4 из 5 звезд4/5 (2)

- Why Bivariate Distributions?Документ26 страницWhy Bivariate Distributions?Michael GarciaОценок пока нет

- B671-672 Supplemental Notes 2 Hypergeometric, Binomial, Poisson and Multinomial Random Variables and Borel SetsДокумент13 страницB671-672 Supplemental Notes 2 Hypergeometric, Binomial, Poisson and Multinomial Random Variables and Borel SetsDesmond SeahОценок пока нет

- 1 Regression Analysis and Least Squares EstimatorsДокумент7 страниц1 Regression Analysis and Least Squares EstimatorsFreddie YuanОценок пока нет

- 1 Regression Analysis and Least Squares EstimatorsДокумент8 страниц1 Regression Analysis and Least Squares EstimatorsJenningsJingjingXuОценок пока нет

- Class6 Prep AДокумент7 страницClass6 Prep AMariaTintashОценок пока нет

- MIE1605 1b ProbabilityReview PDFДокумент96 страницMIE1605 1b ProbabilityReview PDFJessica TangОценок пока нет

- Multiple Linear RegressionДокумент18 страницMultiple Linear RegressionJohn DoeОценок пока нет

- MIT6 262S11 Lec02Документ11 страницMIT6 262S11 Lec02Mahmud HasanОценок пока нет

- Estimation Theory PresentationДокумент66 страницEstimation Theory PresentationBengi Mutlu Dülek100% (1)

- 5 Continuous Random VariablesДокумент11 страниц5 Continuous Random VariablesAaron LeãoОценок пока нет

- Expectation: Moments of A DistributionДокумент39 страницExpectation: Moments of A DistributionDaniel Lee Eisenberg JacobsОценок пока нет

- Ebook Course in Probability 1St Edition Weiss Solutions Manual Full Chapter PDFДокумент63 страницыEbook Course in Probability 1St Edition Weiss Solutions Manual Full Chapter PDFAnthonyWilsonecna100% (6)

- Polynomial InterpolationДокумент10 страницPolynomial InterpolationJuwandaОценок пока нет

- Multivariate Probability: 1 Discrete Joint DistributionsДокумент10 страницMultivariate Probability: 1 Discrete Joint DistributionshamkarimОценок пока нет

- Random VariablesДокумент44 страницыRandom VariablesOtAkU 101Оценок пока нет

- Lecture Notes Week 1Документ10 страницLecture Notes Week 1tarik BenseddikОценок пока нет

- 7.0 More MLEДокумент27 страниц7.0 More MLERakshay PawarОценок пока нет

- Financial Engineering & Risk Management: Review of Basic ProbabilityДокумент46 страницFinancial Engineering & Risk Management: Review of Basic Probabilityshanky1124Оценок пока нет

- 5 The Stochastic Approximation Algorithm: 5.1 Stochastic Processes - Some Basic ConceptsДокумент14 страниц5 The Stochastic Approximation Algorithm: 5.1 Stochastic Processes - Some Basic ConceptsAaa MmmОценок пока нет

- Sums of A Random VariablesДокумент21 страницаSums of A Random VariablesWaseem AbbasОценок пока нет

- נוסחאות ואי שיוויוניםДокумент12 страницנוסחאות ואי שיוויוניםShahar MizrahiОценок пока нет

- 9.0 Lesson PlanДокумент16 страниц9.0 Lesson PlanSähilDhånkhårОценок пока нет

- Actsc 432 Review Part 1Документ7 страницActsc 432 Review Part 1osiccorОценок пока нет

- Lecture 6. Order Statistics: 6.1 The Multinomial FormulaДокумент19 страницLecture 6. Order Statistics: 6.1 The Multinomial FormulaLya Ayu PramestiОценок пока нет

- Review of Basic Probability: 1.1 Random Variables and DistributionsДокумент8 страницReview of Basic Probability: 1.1 Random Variables and DistributionsJung Yoon SongОценок пока нет

- Frequentist Estimation: 4.1 Likelihood FunctionДокумент6 страницFrequentist Estimation: 4.1 Likelihood FunctionJung Yoon SongОценок пока нет

- Foss Lecture1Документ32 страницыFoss Lecture1Jarsen21Оценок пока нет

- The Binary Entropy Function: ECE 7680 Lecture 2 - Definitions and Basic FactsДокумент8 страницThe Binary Entropy Function: ECE 7680 Lecture 2 - Definitions and Basic Factsvahap_samanli4102Оценок пока нет

- Large Sample Results For The Linear Model: Walter Sosa-EscuderoДокумент32 страницыLarge Sample Results For The Linear Model: Walter Sosa-EscuderoRoger Asencios NuñezОценок пока нет

- Probability For Ai2Документ8 страницProbability For Ai2alphamale173Оценок пока нет

- Lec 12Документ6 страницLec 12spitzersglareОценок пока нет

- Unit 2 Ma 202Документ13 страницUnit 2 Ma 202shubham raj laxmiОценок пока нет

- The Uniform DistributnДокумент7 страницThe Uniform DistributnsajeerОценок пока нет

- Statistics 512 Notes I D. SmallДокумент8 страницStatistics 512 Notes I D. SmallSandeep SinghОценок пока нет

- On the difference π (x) − li (x) : Christine LeeДокумент41 страницаOn the difference π (x) − li (x) : Christine LeeKhokon GayenОценок пока нет

- Sms Essay 2Документ6 страницSms Essay 2akhile293Оценок пока нет

- Instructor: DR - Saleem AL Ashhab Al Ba'At University Mathmatical Class Second Year Master DgreeДокумент13 страницInstructor: DR - Saleem AL Ashhab Al Ba'At University Mathmatical Class Second Year Master DgreeNazmi O. Abu JoudahОценок пока нет

- Topic 6: Convergence and Limit Theorems: ES150 - Harvard SEAS 1Документ6 страницTopic 6: Convergence and Limit Theorems: ES150 - Harvard SEAS 1RadhikanairОценок пока нет

- Fixed Points of Multifunctions On Regular Cone Metric SpacesДокумент7 страницFixed Points of Multifunctions On Regular Cone Metric SpaceschikakeeyОценок пока нет

- Topic 3: The Expectation and Other Moments of A Random VariableДокумент20 страницTopic 3: The Expectation and Other Moments of A Random Variablez_k_j_vОценок пока нет

- S1) Basic Probability ReviewДокумент71 страницаS1) Basic Probability ReviewMAYANK HARSANIОценок пока нет

- Industrial Mathematics Institute: Research ReportДокумент25 страницIndustrial Mathematics Institute: Research ReportpostscriptОценок пока нет

- Class 4Документ9 страницClass 4Smruti RanjanОценок пока нет

- Theoretical Grounds of Factor Analysis PDFДокумент76 страницTheoretical Grounds of Factor Analysis PDFRosHan AwanОценок пока нет

- Probability Theory Nate EldredgeДокумент65 страницProbability Theory Nate Eldredgejoystick2inОценок пока нет

- Cramer RaoДокумент11 страницCramer Raoshahilshah1919Оценок пока нет

- Chapter 1 - Applied Analysis Homework SolutionsДокумент11 страницChapter 1 - Applied Analysis Homework SolutionsJoe100% (4)

- Answer: Given Y 3x + 1, XДокумент9 страницAnswer: Given Y 3x + 1, XBALAKRISHNANОценок пока нет

- Mathematical Economics: 1 What To StudyДокумент23 страницыMathematical Economics: 1 What To Studyjrvv2013gmailОценок пока нет

- CS 717: EndsemДокумент5 страницCS 717: EndsemGanesh RamakrishnanОценок пока нет

- HMWK 4Документ5 страницHMWK 4Jasmine NguyenОценок пока нет

- 3 Fall 2007 Exam PDFДокумент7 страниц3 Fall 2007 Exam PDFAchilles 777Оценок пока нет

- University of California, Los Angeles Department of Statistics Statistics 100A Instructor: Nicolas ChristouДокумент16 страницUniversity of California, Los Angeles Department of Statistics Statistics 100A Instructor: Nicolas ChristouDr-Rabia AlmamalookОценок пока нет

- Chapter 02Документ50 страницChapter 02Jack Ignacio NahmíasОценок пока нет

- BasicsДокумент61 страницаBasicsmaxОценок пока нет

- R300 Advanced Econometrics Methods Lecture SlidesДокумент362 страницыR300 Advanced Econometrics Methods Lecture SlidesMarco BrolliОценок пока нет

- UntitledДокумент9 страницUntitledAkash RupanwarОценок пока нет

- Mathematics Trial SPM 2015 P2 Bahagian BДокумент2 страницыMathematics Trial SPM 2015 P2 Bahagian BPauling ChiaОценок пока нет

- ChitsongChen, Signalsandsystems Afreshlook PDFДокумент345 страницChitsongChen, Signalsandsystems Afreshlook PDFCarlos_Eduardo_2893Оценок пока нет

- How Is Extra-Musical Meaning Possible - Music As A Place and Space For Work - T. DeNora (1986)Документ12 страницHow Is Extra-Musical Meaning Possible - Music As A Place and Space For Work - T. DeNora (1986)vladvaidean100% (1)

- Measuring Trend and TrendinessДокумент3 страницыMeasuring Trend and TrendinessLUCKYОценок пока нет

- SPQRДокумент8 страницSPQRCamilo PeraltaОценок пока нет

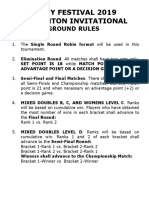

- Ground Rules 2019Документ3 страницыGround Rules 2019Jeremiah Miko LepasanaОценок пока нет

- Indus Valley Sites in IndiaДокумент52 страницыIndus Valley Sites in IndiaDurai IlasunОценок пока нет

- General Health Questionnaire-28 GHQ-28Документ3 страницыGeneral Health Questionnaire-28 GHQ-28srajanОценок пока нет

- (U) Daily Activity Report: Marshall DistrictДокумент6 страниц(U) Daily Activity Report: Marshall DistrictFauquier NowОценок пока нет

- Cognitive ApprenticeshipДокумент5 страницCognitive ApprenticeshipRandall RobertsОценок пока нет

- Far Eastern University-Institute of Nursing In-House NursingДокумент25 страницFar Eastern University-Institute of Nursing In-House Nursingjonasdelacruz1111Оценок пока нет

- Arctic Beacon Forbidden Library - Winkler-The - Thousand - Year - Conspiracy PDFДокумент196 страницArctic Beacon Forbidden Library - Winkler-The - Thousand - Year - Conspiracy PDFJames JohnsonОценок пока нет

- Rubrics For Field Trip 1 Reflective DiaryДокумент2 страницыRubrics For Field Trip 1 Reflective DiarycrystalОценок пока нет

- MOA Agri BaseДокумент6 страницMOA Agri BaseRodj Eli Mikael Viernes-IncognitoОценок пока нет

- Average Waves in Unprotected Waters by Anne Tyler - Summary PDFДокумент1 страницаAverage Waves in Unprotected Waters by Anne Tyler - Summary PDFRK PADHI0% (1)

- CogAT 7 PlanningImplemGd v4.1 PDFДокумент112 страницCogAT 7 PlanningImplemGd v4.1 PDFBahrouniОценок пока нет

- Crime Scene Manual FullДокумент19 страницCrime Scene Manual FullMuhammed MUZZAMMILОценок пока нет

- Test Statistics Fact SheetДокумент4 страницыTest Statistics Fact SheetIra CervoОценок пока нет

- TugasFilsS32019.AnthoniSulthanHarahap.450326 (Pencegahan Misconduct)Документ7 страницTugasFilsS32019.AnthoniSulthanHarahap.450326 (Pencegahan Misconduct)Anthoni SulthanОценок пока нет

- From Jest To Earnest by Roe, Edward Payson, 1838-1888Документ277 страницFrom Jest To Earnest by Roe, Edward Payson, 1838-1888Gutenberg.org100% (1)

- Adm Best Practices Guide: Version 2.0 - November 2020Документ13 страницAdm Best Practices Guide: Version 2.0 - November 2020Swazon HossainОценок пока нет

- Screening: of Litsea Salicifolia (Dighloti) As A Mosquito RepellentДокумент20 страницScreening: of Litsea Salicifolia (Dighloti) As A Mosquito RepellentMarmish DebbarmaОценок пока нет

- 1 - HandBook CBBR4106Документ29 страниц1 - HandBook CBBR4106mkkhusairiОценок пока нет

- German Monograph For CannabisДокумент7 страницGerman Monograph For CannabisAngel Cvetanov100% (1)

- Domestic and Foreign Policy Essay: Immigration: Salt Lake Community CollegeДокумент6 страницDomestic and Foreign Policy Essay: Immigration: Salt Lake Community Collegeapi-533010636Оценок пока нет

- EELE 202 Lab 6 AC Nodal and Mesh Analysis s14Документ8 страницEELE 202 Lab 6 AC Nodal and Mesh Analysis s14Nayr JTОценок пока нет

- Sayyid Jamal Al-Din Muhammad B. Safdar Al-Afghani (1838-1897)Документ8 страницSayyid Jamal Al-Din Muhammad B. Safdar Al-Afghani (1838-1897)Itslee NxОценок пока нет

- Ielts Reading Whale CultureДокумент4 страницыIelts Reading Whale CultureTreesa VarugheseОценок пока нет

- Binary SearchДокумент13 страницBinary SearchASasSОценок пока нет

- Peter Lehr Militant Buddhism The Rise of Religious Violence in Sri Lanka Myanmar and Thailand Springer International PDFДокумент305 страницPeter Lehr Militant Buddhism The Rise of Religious Violence in Sri Lanka Myanmar and Thailand Springer International PDFIloviaaya RegitaОценок пока нет

- Mental Math: How to Develop a Mind for Numbers, Rapid Calculations and Creative Math Tricks (Including Special Speed Math for SAT, GMAT and GRE Students)От EverandMental Math: How to Develop a Mind for Numbers, Rapid Calculations and Creative Math Tricks (Including Special Speed Math for SAT, GMAT and GRE Students)Оценок пока нет

- Quantum Physics: A Beginners Guide to How Quantum Physics Affects Everything around UsОт EverandQuantum Physics: A Beginners Guide to How Quantum Physics Affects Everything around UsРейтинг: 4.5 из 5 звезд4.5/5 (3)

- Images of Mathematics Viewed Through Number, Algebra, and GeometryОт EverandImages of Mathematics Viewed Through Number, Algebra, and GeometryОценок пока нет

- Basic Math & Pre-Algebra Workbook For Dummies with Online PracticeОт EverandBasic Math & Pre-Algebra Workbook For Dummies with Online PracticeРейтинг: 4 из 5 звезд4/5 (2)

- Build a Mathematical Mind - Even If You Think You Can't Have One: Become a Pattern Detective. Boost Your Critical and Logical Thinking Skills.От EverandBuild a Mathematical Mind - Even If You Think You Can't Have One: Become a Pattern Detective. Boost Your Critical and Logical Thinking Skills.Рейтинг: 5 из 5 звезд5/5 (1)

- A Mathematician's Lament: How School Cheats Us Out of Our Most Fascinating and Imaginative Art FormОт EverandA Mathematician's Lament: How School Cheats Us Out of Our Most Fascinating and Imaginative Art FormРейтинг: 5 из 5 звезд5/5 (5)

- Math Workshop, Grade K: A Framework for Guided Math and Independent PracticeОт EverandMath Workshop, Grade K: A Framework for Guided Math and Independent PracticeРейтинг: 5 из 5 звезд5/5 (1)

- Limitless Mind: Learn, Lead, and Live Without BarriersОт EverandLimitless Mind: Learn, Lead, and Live Without BarriersРейтинг: 4 из 5 звезд4/5 (6)

- Mathematical Mindsets: Unleashing Students' Potential through Creative Math, Inspiring Messages and Innovative TeachingОт EverandMathematical Mindsets: Unleashing Students' Potential through Creative Math, Inspiring Messages and Innovative TeachingРейтинг: 4.5 из 5 звезд4.5/5 (21)

- A Guide to Success with Math: An Interactive Approach to Understanding and Teaching Orton Gillingham MathОт EverandA Guide to Success with Math: An Interactive Approach to Understanding and Teaching Orton Gillingham MathРейтинг: 5 из 5 звезд5/5 (1)