02 LinearAlgebraReview

Uploaded by: Rashmi Gupta

Background Material

A computer vision "encyclopedia": CVonline.

http://homepages.inf.ed.ac.uk/rbf/CVonline/

Linear Algebra Review and Matlab Tutorial

Assigned Reading:

Eero Simoncelli, "A Geometric View of Linear Algebra"

http://www.cns.nyu.edu/~eero/NOTES/geomLinAlg.pdf

Linear Algebra:

Eero Simoncelli, "A Geometric View of Linear Algebra"

http://www.cns.nyu.edu/~eero/NOTES/geomLinAlg.pdf

Michael Jordan, a slightly more in-depth linear algebra review

http://www.cs.brown.edu/courses/cs143/Materials/linalg_jordan_86.pdf

Online Introductory Linear Algebra Book by Jim Hefferon.

http://joshua.smcvt.edu/linearalgebra/

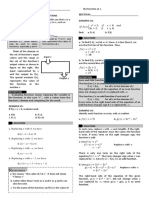

Notation

Standard math textbook notation:

- Scalars are italic Times Roman: $n$, $N$
- Vectors are bold lowercase: $\mathbf{x}$
- Row vectors are denoted with a transpose: $\mathbf{x}^T$
- Matrices are bold uppercase
- Tensors are calligraphic letters

Overview

- Vectors in $\mathbb{R}^2$
- Scalar product
- Outer product
- Bases and transformations
- Inverse transformations
- Eigendecomposition
- Singular Value Decomposition

Warm-up: Vectors in $\mathbb{R}^n$

We can think of vectors in two ways:

- As points in a multidimensional space with respect to some coordinate system
- As a translation of a point in a multidimensional space, e.g., a translation of the origin (0,0)

Length of a vector:

$$\|\mathbf{x}\| = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2} = \sqrt{\sum_{i=1}^{n} x_i^2}$$
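The length formula above can be sketched in a few lines of plain Python. The function name `norm` is an illustrative choice, not something defined in the slides.

```python
import math

def norm(x):
    """Euclidean length of a vector given as a sequence of numbers."""
    # Square every component, add them up, take the square root.
    return math.sqrt(sum(xi * xi for xi in x))

length = norm([3.0, 4.0])  # 5.0, the classic 3-4-5 right triangle
print(length)
```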

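Dot product or scalar product

The dot product is the product of two vectors. Example:

$$\mathbf{x} \cdot \mathbf{y} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \cdot \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = x_1 y_1 + x_2 y_2 = s$$

It is the projection of one vector onto another:

$$\mathbf{x} \cdot \mathbf{y} = \|\mathbf{x}\| \, \|\mathbf{y}\| \cos\theta$$

Notation:

- $\langle \mathbf{x}, \mathbf{y} \rangle$
- $\mathbf{x}^T \mathbf{y} = \begin{bmatrix} x_1 & \cdots & x_n \end{bmatrix} \begin{bmatrix} y_1 \\ \vdots \\ y_n \end{bmatrix}$
- $\mathbf{x} \cdot \mathbf{y}$

We will use the last two notations to denote the dot product.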
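As a minimal sketch, the dot product and the angle it implies can be computed as follows. The helper names `dot` and `angle` are assumptions made for this example, and `angle` assumes both vectors are non-zero.

```python
import math

def dot(x, y):
    """Sum of component-wise products x_1*y_1 + ... + x_n*y_n."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return sum(xi * yi for xi, yi in zip(x, y))

def angle(x, y):
    """Angle in radians between two non-zero vectors, from x.y = |x||y|cos(theta)."""
    cos_theta = dot(x, y) / (math.sqrt(dot(x, x)) * math.sqrt(dot(y, y)))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))

x, y = [1.0, 0.0], [1.0, 1.0]
s = dot(x, y)        # 1.0
theta = angle(x, y)  # close to pi/4
```

Solving the cosine relation for the angle is one common use of the dot product; when both vectors have unit length the dot product is the cosine itself.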

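Scalar Product

- Commutative: $\mathbf{x} \cdot \mathbf{y} = \mathbf{y} \cdot \mathbf{x}$
- Distributive: $(\mathbf{x} + \mathbf{y}) \cdot \mathbf{z} = \mathbf{x} \cdot \mathbf{z} + \mathbf{y} \cdot \mathbf{z}$
- Linearity: $(c\mathbf{x}) \cdot \mathbf{y} = c(\mathbf{x} \cdot \mathbf{y})$, $\mathbf{x} \cdot (c\mathbf{y}) = c(\mathbf{x} \cdot \mathbf{y})$, $(c_1\mathbf{x}) \cdot (c_2\mathbf{y}) = (c_1 c_2)(\mathbf{x} \cdot \mathbf{y})$
- Non-negativity: $\mathbf{x} \cdot \mathbf{x} \ge 0$
- Orthogonality: $\forall\, \mathbf{x} \ne \mathbf{0},\ \mathbf{y} \ne \mathbf{0}: \quad \mathbf{x} \cdot \mathbf{y} = 0 \iff \mathbf{x} \perp \mathbf{y}$

Norms in $\mathbb{R}^n$

Euclidean norm (sometimes called 2-norm):

$$\|\mathbf{x}\| = \|\mathbf{x}\|_2 = \sqrt{\mathbf{x} \cdot \mathbf{x}} = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2} = \sqrt{\sum_{i=1}^{n} x_i^2}$$

The length of a vector is defined to be its (Euclidean) norm. A unit vector is of length 1. Non-negativity also holds for the norm: $\|\mathbf{x}\| \ge 0$.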
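The norm and orthogonality statements above can be checked numerically. This is a small sketch; `normalize`, `is_orthogonal`, and the tolerance `tol` are illustrative names and values, not part of the slides.

```python
import math

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def normalize(x):
    """Return the unit vector pointing in the direction of a non-zero x."""
    length = math.sqrt(dot(x, x))  # Euclidean norm, sqrt(x . x)
    if length == 0.0:
        raise ValueError("the zero vector has no direction")
    return [xi / length for xi in x]

def is_orthogonal(x, y, tol=1e-12):
    """Two non-zero vectors are orthogonal when their dot product is zero."""
    return abs(dot(x, y)) <= tol

u = normalize([3.0, 4.0])                         # unit vector along (3, 4)
perpendicular = is_orthogonal([1.0, 1.0], [1.0, -1.0])
```

A tolerance is used instead of an exact comparison with zero because floating-point dot products rarely cancel exactly.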

Bases and Transformations

We will look at:

- Linear Independence
- Bases
- Orthogonality
- Change of basis (Linear Transformation)
- Matrices and Matrix Operations

Linear Dependence

Linear combination of vectors $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n$:

$$c_1 \mathbf{x}_1 + c_2 \mathbf{x}_2 + \cdots + c_n \mathbf{x}_n$$

A set of vectors $X = \{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n\}$ is linearly dependent if there exists a vector $\mathbf{x}_i \in X$ that is a linear combination of the rest of the vectors.

In $\mathbb{R}^n$:

- sets of $n+1$ vectors are always dependent
- there can be at most $n$ linearly independent vectors

[Figure: examples of bases in $\mathbb{R}^2$]
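One way to test a set of vectors for linear dependence is to count pivots during Gaussian elimination. The sketch below is one possible approach, not a method taken from the slides; the function names and the tolerance `tol` are assumptions for illustration.

```python
def rank(vectors, tol=1e-12):
    """Rank of the matrix whose rows are the given vectors (Gaussian elimination)."""
    rows = [list(map(float, v)) for v in vectors]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for col in range(n_cols):
        # Pick the row at or below r with the largest entry in this column.
        pivot = max(range(r, len(rows)), key=lambda i: abs(rows[i][col]), default=None)
        if pivot is None or abs(rows[pivot][col]) <= tol:
            continue  # no usable pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """True when no vector is a linear combination of the others."""
    return rank(vectors) == len(vectors)

two_in_r2 = linearly_independent([[1, 0], [0, 1]])            # True
three_in_r2 = linearly_independent([[1, 0], [0, 1], [1, 1]])  # False: n+1 vectors in R^n
```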

Bases

A basis is a linearly independent set of vectors that spans the whole space, i.e., we can write every vector in our space as a linear combination of vectors in that set. Every set of $n$ linearly independent vectors in $\mathbb{R}^n$ is a basis of $\mathbb{R}^n$.

A basis is called

- orthogonal, if every basis vector is orthogonal to all other basis vectors
- orthonormal, if additionally all basis vectors have length 1.

The standard basis in $\mathbb{R}^n$ is made up of a set of unit vectors:

$$\hat{\mathbf{e}}_1, \hat{\mathbf{e}}_2, \ldots, \hat{\mathbf{e}}_n$$

We can write a vector in terms of its standard basis:

$$\mathbf{x} = x_1 \hat{\mathbf{e}}_1 + x_2 \hat{\mathbf{e}}_2 + \cdots + x_n \hat{\mathbf{e}}_n$$

Observation: to find the coefficient for a particular basis vector, we project our vector onto it:

$$x_i = \hat{\mathbf{e}}_i \cdot \mathbf{x}$$
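The observation above, that each coefficient is a dot product with the corresponding basis vector, can be sketched for the standard basis as follows. The helper `standard_basis` and the sample vector are hypothetical, chosen only for illustration.

```python
def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def standard_basis(n):
    """The n unit vectors e_1 .. e_n of R^n, each as a list."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

x = [2.0, -1.0, 5.0]
basis = standard_basis(3)

# Coefficient for each basis vector: x_i = e_i . x
coeffs = [dot(e, x) for e in basis]

# Rebuild x as the linear combination sum_i x_i * e_i
rebuilt = [sum(c * e[k] for c, e in zip(coeffs, basis)) for k in range(3)]
```

For the standard basis the coefficients are just the components of the vector, which is why the round trip returns `x` unchanged.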

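Change of basis

Suppose we have a new basis $\mathbf{B} = [\mathbf{b}_1 \cdots \mathbf{b}_n]$, $\mathbf{b}_i \in \mathbb{R}^m$, and a vector $\mathbf{x} \in \mathbb{R}^m$ that we would like to represent in terms of $\mathbf{B}$.

[Figure: a vector $\mathbf{x}$ with components $x_1$, $x_2$ in the original axes and components $\tilde{x}_1$, $\tilde{x}_2$ along the new basis vectors $\mathbf{b}_1$, $\mathbf{b}_2$]

Compute the new components:

$$\tilde{\mathbf{x}} = \mathbf{B}^{-1} \mathbf{x}$$

When $\mathbf{B}$ is orthonormal:

$$\tilde{\mathbf{x}} = \begin{bmatrix} \mathbf{b}_1^T \mathbf{x} \\ \vdots \\ \mathbf{b}_n^T \mathbf{x} \end{bmatrix}$$

Each component $\tilde{x}_i$ is the projection of $\mathbf{x}$ onto $\mathbf{b}_i$. Note the use of a dot product.

Outer Product

$$\mathbf{x} \circ \mathbf{y} = \mathbf{x}\mathbf{y}^T = \begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix} \begin{bmatrix} y_1 & \cdots & y_m \end{bmatrix} = \mathbf{M}$$

A matrix $\mathbf{M}$ that is the outer product of two vectors is a matrix of rank 1.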
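For an orthonormal basis, the change of basis reduces to one dot product per basis vector. The following sketch uses a hypothetical basis (the standard axes rotated by 45 degrees) and a sample vector, neither of which comes from the slides.

```python
import math

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

# Hypothetical orthonormal basis: the standard axes rotated by 45 degrees.
c = math.sqrt(0.5)
b1 = [c, c]
b2 = [-c, c]

x = [2.0, 0.0]

# B is orthonormal, so its inverse is its transpose and each new
# component is simply the dot product b_i . x
x_new = [dot(b1, x), dot(b2, x)]

# Going back to the original coordinates: x = x~_1 * b1 + x~_2 * b2
x_back = [x_new[0] * b1[k] + x_new[1] * b2[k] for k in range(2)]
```

If the basis were not orthonormal, the dot products would no longer give the right components and a general inverse of the basis matrix would be needed instead.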

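Matrix Multiplication: dot product

Matrix multiplication can be expressed using dot products. Here $\mathbf{b}_i^T$ are the rows of $\mathbf{B}$ and $\mathbf{a}_j$ are the columns of $\mathbf{A}$:

$$\mathbf{B}\mathbf{A} = \begin{bmatrix} \mathbf{b}_1^T \\ \vdots \\ \mathbf{b}_m^T \end{bmatrix} \begin{bmatrix} \mathbf{a}_1 & \cdots & \mathbf{a}_n \end{bmatrix} = \begin{bmatrix} \mathbf{b}_1 \cdot \mathbf{a}_1 & \cdots & \mathbf{b}_1 \cdot \mathbf{a}_n \\ \vdots & \ddots & \vdots \\ \mathbf{b}_m \cdot \mathbf{a}_1 & \cdots & \mathbf{b}_m \cdot \mathbf{a}_n \end{bmatrix}$$

Matrix Multiplication: outer product

Matrix multiplication can be expressed using a sum of outer products. Here $\mathbf{b}_i$ are the columns of $\mathbf{B}$ and $\mathbf{a}_i^T$ are the rows of $\mathbf{A}$:

$$\mathbf{B}\mathbf{A} = \begin{bmatrix} \mathbf{b}_1 & \cdots & \mathbf{b}_n \end{bmatrix} \begin{bmatrix} \mathbf{a}_1^T \\ \vdots \\ \mathbf{a}_n^T \end{bmatrix} = \mathbf{b}_1 \mathbf{a}_1^T + \mathbf{b}_2 \mathbf{a}_2^T + \cdots + \mathbf{b}_n \mathbf{a}_n^T = \sum_{i=1}^{n} \mathbf{b}_i \circ \mathbf{a}_i$$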
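Both views of matrix multiplication give the same product, which a short sketch can confirm. Matrices are stored as lists of rows; the function names and the sample matrices are illustrative assumptions.

```python
def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def outer(x, y):
    """Outer product x y^T: a len(x)-by-len(y) matrix."""
    return [[xi * yj for yj in y] for xi in x]

def matmul_dot(B, A):
    """Entry (i, j) is the dot product of row i of B with column j of A."""
    cols_A = list(zip(*A))
    return [[dot(row, col) for col in cols_A] for row in B]

def matmul_outer(B, A):
    """Sum over k of (column k of B) outer (row k of A)."""
    m, n = len(B), len(A[0])
    result = [[0] * n for _ in range(m)]
    for col_B, row_A in zip(zip(*B), A):
        term = outer(col_B, row_A)  # one rank-1 contribution
        result = [[r + t for r, t in zip(res_row, term_row)]
                  for res_row, term_row in zip(result, term)]
    return result

B = [[1, 2], [3, 4]]
A = [[5, 6], [7, 8]]
product_dot = matmul_dot(B, A)      # [[19, 22], [43, 50]]
product_outer = matmul_outer(B, A)  # same matrix, built from two rank-1 terms
```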

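Rank of a Matrix

Singular Value Decomposition: $\mathbf{D} = \mathbf{U}\mathbf{S}\mathbf{V}^T$

A matrix $\mathbf{D} \in \mathbb{R}^{I_1 \times I_2}$ has a column space and a row space.

SVD orthogonalizes these spaces and decomposes $\mathbf{D}$:

$$\mathbf{D} = \mathbf{U}\mathbf{S}\mathbf{V}^T$$

- $\mathbf{U}$ contains the left singular vectors (the eigenvectors of $\mathbf{D}\mathbf{D}^T$)
- $\mathbf{V}$ contains the right singular vectors (the eigenvectors of $\mathbf{D}^T\mathbf{D}$)

Rewrite as a sum of a minimum number of rank-1 matrices, where $\sigma_r$ are the singular values on the diagonal of $\mathbf{S}$ and $R$ is the rank of $\mathbf{D}$:

$$\mathbf{D} = \sum_{r=1}^{R} \sigma_r \, \mathbf{u}_r \circ \mathbf{v}_r$$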
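The decomposition into rank-1 terms can be checked numerically. This sketch assumes NumPy is available and uses `numpy.linalg.svd`; the example matrix and the rank threshold `1e-10` are arbitrary choices for illustration.

```python
import numpy as np

# Hypothetical 2x3 example matrix.
D = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# full_matrices=False returns the compact form:
# U is 2x2, s holds the 2 singular values, Vt is 2x3 (rows are v_r^T).
U, s, Vt = np.linalg.svd(D, full_matrices=False)

# Sum of rank-1 terms sigma_r * (u_r outer v_r)
D_sum = sum(s[r] * np.outer(U[:, r], Vt[r, :]) for r in range(len(s)))

# The number of non-negligible singular values is the rank of D.
R = int(np.sum(s > 1e-10))
```

Keeping only the terms with the largest singular values in this sum is the usual way to build a low-rank approximation of a matrix.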

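Matrix SVD Properties

$$\mathbf{D} = \mathbf{U}\mathbf{S}\mathbf{V}^T$$

Rank Decomposition: sum of min. number of rank-1 matrices

$$\mathbf{D} = \sum_{r=1}^{R} \sigma_r \, \mathbf{u}_r \circ \mathbf{v}_r$$

[Figure: $\mathbf{D}$ drawn as a sum of $R$ rank-1 terms, each a column $\mathbf{u}_r$ times a row $\mathbf{v}_r^T$, for $r = 1, 2, \ldots, R$]

Multilinear Rank Decomposition:

$$\mathbf{D} = \sum_{r_1=1}^{R_1} \sum_{r_2=1}^{R_2} \sigma_{r_1 r_2} \, \mathbf{u}_{r_1} \circ \mathbf{v}_{r_2}$$

Matrix Inverse

Some matrix properties

Matlab Tutorial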
