CUDA May 09 TB L5

Lecture 5

Multi-GPU computing with CUDA and MPI

Tobias Brandvik

The story so far

- Getting started (Pullan)
- An introduction to CUDA for science (Pullan)
- Developing kernels I (Gratton)
- Developing kernels II (Gratton)
- CUDA with multiple GPUs (Brandvik)
- Medical imaging registration (Ansorge)

Agenda

- MPI overview
- The MPI programming model
- Heat conduction example (CPU)
- MPI and CUDA
- Heat conduction example (GPU)
- Performance measurements

MPI overview

- MPI is a specification of a Message Passing Interface
- The specification is a set of functions with prescribed behaviour
- It is not a library: there are multiple competing implementations of the specification
- Two popular open-source implementations are Open-MPI and MPICH2
- Most MPI implementations from vendors are customized versions of these

Why use MPI?

- Performance
- Scalability
- Stability

What hardware does MPI run on?

- Distributed memory clusters
  - MPI's popularity is in large part due to the rise of cheap clusters with commodity x86 nodes over the last 15 years
  - Ethernet or InfiniBand interconnects
- Shared memory
  - Some MPI implementations are also suitable for multi-core shared memory machines (e.g. high-end desktops)

MPI programming model

- An MPI program consists of several processes
- Each process can execute different instructions
- Each process has its own memory space
- Processes can only communicate by sending messages to each other

MPI programming model

[Figure: a communicator containing Rank 0 and Rank 1, each a CPU with its own memory]

- Rank: a unique integer identifier for a process
- Communicator: the collection of processes which may communicate with each other

A simple example in pseudo-code

We want to copy an array from one process to another. Here both ranks do so: each sends its array a to the other rank, which receives it into b.

rank 0:
    float a[10]; float b[10];
    recv(b, 10, float, 1, 200)
    send(a, 10, float, 1, 300)
    wait()

rank 1:
    float a[10]; float b[10];
    recv(b, 10, float, 0, 300)
    send(a, 10, float, 0, 200)
    wait()

A simple example

The arguments of recv(b, 10, float, 1, 200) on rank 0 are:

- b: memory location
- 10: message length
- float: datatype
- 1: sending rank
- 200: message tag

The only 7 MPI functions you'll ever need

- MPI-1 has more than 100 functions
- But most applications only use a small subset of these
- In fact, you can write production code using only 7 MPI functions
- But you'll probably use a few more

The seven functions are:

- MPI_Init
- MPI_Comm_size
- MPI_Comm_rank
- MPI_Isend
- MPI_Irecv
- MPI_Waitall
- MPI_Finalize

The MPI specification is defined for C, C++ and Fortran; we'll consider the C function prototypes.

A closer look at the functions

int MPI_Init(int *argc, char ***argv)
    Initialises the MPI execution environment
int MPI_Comm_size(MPI_Comm comm, int *size)
    Determines the size of the group associated with a communicator
int MPI_Comm_rank(MPI_Comm comm, int *rank)
    Determines the rank of the calling process in the communicator
int MPI_Waitall(int count, MPI_Request *array_of_requests, MPI_Status *array_of_statuses)
    Waits until all of the given communication requests have completed
int MPI_Finalize(void)
    Terminates the MPI execution environment

int MPI_Irecv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Request *request)

- buf: memory location for message
- count: number of elements in message
- datatype: type of elements in message (e.g. MPI_FLOAT)
- source: rank of source
- tag: message tag
- comm: communicator
- request: communication request (used for checking message status)

int MPI_Isend(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm, MPI_Request *request)

- buf: memory location for message
- count: number of elements in message
- datatype: type of elements in message (e.g. MPI_FLOAT)
- dest: rank of destination
- tag: message tag
- comm: communicator
- request: communication request (used for checking message status)

The structure of an MPI program

Startup

- MPI_Init
- MPI_Comm_size / MPI_Comm_rank
- Read in and initialise data based on the process rank

Inner loop

- Post all receives (MPI_Irecv)
- Post all sends (MPI_Isend)
- Wait for message passing to finish (MPI_Waitall)
- Perform computation

End

- Write out data
- MPI_Finalize

An actual MPI program

#include <mpi.h>

int main(int argc, char *argv[])
{
    /* Null requests make the waits below no-ops on ranks other than 0 and 1 */
    MPI_Request req_in = MPI_REQUEST_NULL, req_out = MPI_REQUEST_NULL;
    MPI_Status stat_in, stat_out;
    float a[10], b[10];
    int mpi_rank, mpi_size, i;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &mpi_rank);
    MPI_Comm_size(MPI_COMM_WORLD, &mpi_size);

    for (i = 0; i < 10; i++) a[i] = (float) mpi_rank;   /* data to send */

    if (mpi_rank == 0) {
        MPI_Irecv(b, 10, MPI_FLOAT, 1, 200, MPI_COMM_WORLD, &req_in);
        MPI_Isend(a, 10, MPI_FLOAT, 1, 300, MPI_COMM_WORLD, &req_out);
    }
    if (mpi_rank == 1) {
        MPI_Irecv(b, 10, MPI_FLOAT, 0, 300, MPI_COMM_WORLD, &req_in);
        MPI_Isend(a, 10, MPI_FLOAT, 0, 200, MPI_COMM_WORLD, &req_out);
    }

    /* Wait for the receive and the send to complete */
    MPI_Waitall(1, &req_in, &stat_in);
    MPI_Waitall(1, &req_out, &stat_out);

    MPI_Finalize();
    return 0;
}


Compiling and running MPI programs

- MPI implementations provide wrappers for popular compilers, normally named mpicc, mpicxx, mpif77, etc.
- An MPI program is normally run through mpirun -np N ./a.out
- So, for the previous example:
    mpicc mpi_example.c
    mpirun -np 2 ./a.out
- These commands are for Open-MPI; other implementations may differ slightly

Heat conduction example (CPU)

We'll modify the heat conduction example from earlier to work with multiple CPUs.

2D heat conduction

In 2D:

$$\frac{\partial T}{\partial t} = \frac{\partial^2 T}{\partial x^2} + \frac{\partial^2 T}{\partial y^2}$$

For which a possible finite difference approximation is:

$$\frac{\Delta T}{\Delta t} = \frac{T_{i+1,j} - 2T_{i,j} + T_{i-1,j}}{\Delta x^2} + \frac{T_{i,j+1} - 2T_{i,j} + T_{i,j-1}}{\Delta y^2}$$

where ΔT is the temperature change over a time Δt and i, j are indices into a uniform structured grid (see next slide).
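A minimal sketch of that update as an explicit (forward Euler) step in C, assuming a row-major array with one halo layer on each side, i along x and j along y, and unit diffusivity as in the equation above. The function name heat_step and the IDX layout are hypothetical illustrations, not the lecture's step_kernel.

```c
#include <stddef.h>

/* Index into an (ni+2) x (nj+2) row-major array that includes one halo
 * layer on each side; interior points are i = 1..ni, j = 1..nj.
 * (Hypothetical layout chosen for this sketch.) */
#define IDX(i, j, nj) ((size_t)(i) * (size_t)((nj) + 2) + (size_t)(j))

/* One explicit step of dT/dt = d2T/dx2 + d2T/dy2:
 * T_new = T + dt * (second difference in x + second difference in y).
 * Halo values are read but only interior points are written. */
void heat_step(const float *t, float *t_new, int ni, int nj,
               float dt, float dx, float dy)
{
    for (int i = 1; i <= ni; i++) {
        for (int j = 1; j <= nj; j++) {
            float d2x = (t[IDX(i + 1, j, nj)] - 2.0f * t[IDX(i, j, nj)]
                         + t[IDX(i - 1, j, nj)]) / (dx * dx);
            float d2y = (t[IDX(i, j + 1, nj)] - 2.0f * t[IDX(i, j, nj)]
                         + t[IDX(i, j - 1, nj)]) / (dy * dy);
            t_new[IDX(i, j, nj)] = t[IDX(i, j, nj)] + dt * (d2x + d2y);
        }
    }
}
```

Because the update only looks one point away in each direction, a single halo layer is enough. The explicit scheme is only stable for small enough time steps; the usual condition for this update is Δt (1/Δx² + 1/Δy²) ≤ 1/2.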

Stencil

Update red point using data from blue points (and red point)

Finding more parallelism

- In the previous lectures, we have tried to find enough parallelism in the problems for 1000s of threads
- This is fine-grained parallelism
- For MPI, we need another level of parallelism on top of this
- This is coarse-grained parallelism

Domain decomposition and halos


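The fictitious boundary nodes are called halos: each subdomain is padded with extra nodes that hold copies of its neighbours' edge values, so that the stencil can also be applied at the subdomain boundary.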
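With domain decomposition, each rank owns a contiguous block of grid lines. One hedged way to compute the blocks for a left-to-right split is shown below; it spreads any remainder over the first ranks. The helper name decompose and this particular split are assumptions, since the lecture does not say how the grid is divided.

```c
/* Split n grid lines as evenly as possible among `size` ranks.
 * Rank `rank` owns `count` lines starting at global index `start`;
 * the first (n % size) ranks get one extra line. Hypothetical helper. */
void decompose(int n, int size, int rank, int *start, int *count)
{
    int base  = n / size;
    int extra = n % size;

    *count = base + (rank < extra ? 1 : 0);
    *start = rank * base + (rank < extra ? rank : extra);
}
```

Each rank would then allocate count + 2 lines locally, the extra two being its left and right halos. For example, 10 lines over 3 ranks gives blocks of 4, 3 and 3 lines starting at 0, 4 and 7.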

Message passing pattern

- The left-most rank sends data to the right
- The inner ranks send data to both the left and the right
- The right-most rank sends data to the left

[Figure: Rank 0 | Rank 1 | Rank 2]
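The pattern above only depends on a rank's position in the chain. A small hypothetical helper can compute both neighbours once, using a sentinel value where a rank sits on the physical boundary:

```c
/* Sentinel for "no neighbour" (physical boundary). Hypothetical; real MPI
 * code would typically use MPI_PROC_NULL instead. */
#define NO_NEIGHBOUR (-1)

/* Neighbours of `rank` in a left-to-right chain of `size` ranks. */
void neighbours(int rank, int size, int *left, int *right)
{
    *left  = (rank > 0)        ? rank - 1 : NO_NEIGHBOUR;
    *right = (rank < size - 1) ? rank + 1 : NO_NEIGHBOUR;
}
```

In actual MPI code, setting a missing neighbour to MPI_PROC_NULL has a useful property: sends to and receives from MPI_PROC_NULL complete immediately without transferring data, so the separate left/inner/right branches can be written as one code path.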

Message buffers

- MPI can read and write directly from 2D arrays using an advanced feature called derived datatypes (but this is complicated and doesn't work for GPUs)
- Instead, we use 1D incoming and outgoing buffers
- The message-passing strategy is then:
  1. Fill outgoing buffers (2D -> 1D)
  2. Send from outgoing buffers, receive into incoming buffers
  3. Wait
  4. Fill arrays from incoming buffers (1D -> 2D)
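The 2D -> 1D and 1D -> 2D steps can be sketched as follows, assuming (hypothetically) a row-major local array whose left and right halos are columns 0 and ncols-1. A column is strided in memory, which is why it has to be copied into a contiguous buffer before it can be sent as a plain array of floats. The function names are illustrative only.

```c
#include <stddef.h>

/* 2D -> 1D: copy column `col` of an nrows x ncols row-major array into buf. */
void pack_column(const float *t, int nrows, int ncols, int col, float *buf)
{
    for (int r = 0; r < nrows; r++)
        buf[r] = t[(size_t)r * ncols + col];
}

/* 1D -> 2D: copy buf (nrows values) into column `col`. */
void unpack_column(float *t, int nrows, int ncols, int col, const float *buf)
{
    for (int r = 0; r < nrows; r++)
        t[(size_t)r * ncols + col] = buf[r];
}
```

Under this layout, the buffer sent to the right neighbour would be packed from column ncols-2 (the last interior column), and the buffer received from the right would be unpacked into column ncols-1 (the right halo); the left side uses columns 1 and 0.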

Heat conduction example (single CPU)

for (i = 0; i < nstep; i++) {
    step_kernel();
}

Heat conduction example (multi-CPU)

for (i = 0; i < nstep; i++) {
    fill_out_buffers();
    if (mpi_rank == 0) {                            // left
        receive_right();
        send_right();
    }
    if (mpi_rank > 0 && mpi_rank < mpi_size-1) {   // inner
        receive_left();
        receive_right();
        send_left();
        send_right();
    }
    if (mpi_rank == mpi_size-1) {                   // right
        receive_left();
        send_left();
    }
    wait_all();
    empty_in_buffers();
    step_kernel();
}
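To see what one pass of the exchange part of this loop does to the data, it can be emulated serially in a single process, with plain copies standing in for the MPI messages. This is a hypothetical test harness rather than MPI code: exchange_halos, MAX_ROWS and the column layout (halos in columns 0 and ncols-1 of a row-major array) are assumptions.

```c
#include <stddef.h>

/* Serial emulation of one halo exchange (no MPI).
 * sub[rank] is that rank's nrows x ncols row-major array; ranks are ordered
 * left to right. Assumes nrows <= MAX_ROWS. */
#define MAX_ROWS 256

void exchange_halos(float **sub, int nranks, int nrows, int ncols)
{
    float to_right[MAX_ROWS], to_left[MAX_ROWS];

    for (int rank = 0; rank + 1 < nranks; rank++) {
        float *lhs = sub[rank], *rhs = sub[rank + 1];

        /* fill_out_buffers(): last interior column of the left rank and
         * first interior column of the right rank (2D -> 1D) */
        for (int r = 0; r < nrows; r++) {
            to_right[r] = lhs[(size_t)r * ncols + (ncols - 2)];
            to_left[r]  = rhs[(size_t)r * ncols + 1];
        }

        /* send/receive + empty_in_buffers(): with MPI these buffers would
         * travel via MPI_Isend/MPI_Irecv; here they are copied straight into
         * the neighbours' halo columns (1D -> 2D) */
        for (int r = 0; r < nrows; r++) {
            rhs[(size_t)r * ncols]               = to_right[r];
            lhs[(size_t)r * ncols + (ncols - 1)] = to_left[r];
        }
    }
    /* The outer halos of the first and last ranks are left untouched:
     * they belong to the physical boundary conditions. */
}
```

Only halo columns are written and only interior columns are read, so handling the interfaces one after another gives the same result as a simultaneous exchange, mirroring why the real loop can post all receives and sends before a single wait.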

Heat conduction example (multi-GPU)

- How does all this work when we use GPUs?
- Just like with CPUs, except we need buffers on both the CPU and the GPU
- Use one MPI process per GPU

Message buffers with GPUs

Message-passing strategy with GPUs:

1. Fill outgoing buffers on the GPU using a kernel (2D -> 1D)
2. Copy buffers to the CPU: cudaMemcpy with cudaMemcpyDeviceToHost
3. Send from outgoing buffers, receive into incoming buffers
4. Wait
5. Copy buffers to the GPU: cudaMemcpy with cudaMemcpyHostToDevice
6. Fill arrays from incoming buffers on the GPU using a kernel (1D -> 2D)

Heat conduction example (multi-GPU)

Multi-CPU version, for comparison:

for (i = 0; i < nstep; i++) {
    fill_out_buffers_cpu();
    recv();
    send();
    wait();
    empty_in_buffers_cpu();
    step_kernel_cpu();
}

Multi-GPU version:

for (i = 0; i < nstep; i++) {
    fill_out_buffers_gpu();       // (2D -> 1D)
    cudaMemcpy(DeviceToHost);     // outgoing buffers: GPU -> CPU
    recv();
    send();
    wait();
    cudaMemcpy(HostToDevice);     // incoming buffers: CPU -> GPU
    empty_in_buffers_gpu();       // (1D -> 2D)
    step_kernel_gpu();
}
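The only difference between the two loops is the pair of host/device copies. A rough per-step accounting of that extra traffic for one rank, assuming (hypothetically) that every halo message is one grid line of line_len floats and that all of it is staged through host memory as in the GPU loop:

```c
#include <stddef.h>

/* Per-step halo traffic for one rank in a left-to-right chain of `size` ranks.
 * Assumes each halo message holds `line_len` floats (hypothetical model). */
typedef struct {
    size_t mpi_bytes;   /* bytes this rank sends over MPI */
    size_t pcie_bytes;  /* bytes this rank copies between GPU and CPU */
} halo_traffic;

halo_traffic gpu_halo_traffic(int rank, int size, size_t line_len)
{
    halo_traffic h;
    /* 1 neighbour at either end of the chain, 2 for inner ranks */
    size_t nbrs = (size_t)((rank > 0) + (rank < size - 1));
    size_t msg  = line_len * sizeof(float);

    h.mpi_bytes  = nbrs * msg;      /* one outgoing message per neighbour */
    h.pcie_bytes = 2 * nbrs * msg;  /* outgoing device->host + incoming host->device */
    return h;
}
```

In this model every byte that crosses the network also crosses the host/device link twice (once on each side of the exchange), which is the extra copy the scaling discussion refers to.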

Compiling code with CUDA and MPI

- Can use a .cu file and use nvcc like before, but need to include the MPI headers and library:
    nvcc mpi_example.cu -I $HOME/open-mpi/include -L $HOME/open-mpi/lib -lmpi
- Or, compile the C code with mpicc and the CUDA code with nvcc, and link the results together into an executable
- For simple examples, the first approach is fine, but for complicated applications the second approach is cleaner

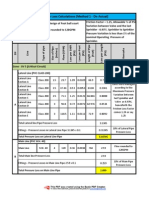

Scaling performance

When benchmarking MPI applications, we look at two issues:

- Strong scaling: how well does the application scale with multiple processors for a fixed problem size?
- Weak scaling: how well does the application scale with multiple processors for a fixed problem size per processor?
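Both questions are commonly summarised as a parallel efficiency computed from measured wall-clock times, where T1 is the time on one processor and Tp the time on p processors. The functions below are a small sketch of these standard definitions; the names are illustrative.

```c
/* Strong scaling (fixed total problem size): speedup S = T1 / Tp,
 * efficiency = S / p. Ideal scaling gives 1. */
double strong_efficiency(double t1, double tp, int p)
{
    return t1 / (p * tp);
}

/* Weak scaling (fixed problem size per processor): ideally Tp stays equal
 * to T1, so efficiency = T1 / Tp. Ideal scaling gives 1. */
double weak_efficiency(double t1, double tp)
{
    return t1 / tp;
}
```

For instance, a run that takes 100 s on one processor and 50 s on four has a strong-scaling efficiency of 0.5, i.e. half of the ideal speedup.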

GPU scaling issues

Achieving good scaling is more difficult with GPUs for two reasons:

1. There is an extra memory copy (cudaMemcpy()) involved for every message
2. The kernels are much faster, so the MPI communication becomes a larger fraction of the overall runtime
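Reason 2 can be made concrete with a one-line model of a time step as kernel time plus communication time. The function and the example timings are hypothetical and only illustrate the arithmetic.

```c
/* Fraction of a time step spent in halo exchange, given the kernel time
 * t_comp and the communication time t_comm (same units). */
double comm_fraction(double t_comp, double t_comm)
{
    return t_comm / (t_comp + t_comm);
}
```

With made-up timings of 9 units of kernel time and 1 unit of communication, the fraction is 0.1. Making the kernel ten times faster (0.9 units) while communication stays at 1 unit raises it to about 0.53, before even counting the extra copies from reason 1. In a left-to-right decomposition the halo size per rank does not shrink as ranks are added, while the kernel time does, so this fraction grows further under strong scaling.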

Typical scaling experience

[Figure: two panels, Weak scaling and Strong scaling, each plotting Performance against Procs for Ideal, CPU and GPU]

Summary

- MPI is a good approach to parallelism on distributed memory machines
- It uses an explicit message-passing model
- Grid problems can be solved in parallel by using halo nodes
- You don't need to change your kernels to use MPI, but you will need to add the message-passing logic
- MPI and CUDA can be used together by using both host and device message buffers
- Achieving good scaling is more difficult, since the kernels are faster on the GPU
