
Green Technologies: Smart and Efficient Management 2012, SLIET Longowal

High Availability of Clouds: Failover Strategies for Load Balancing Algorithms using Checkpointing

Dilbag Singh

Abstract: This paper deals with failover strategies in Cloud Computing using load balancing algorithms. Servers or virtual servers fail from time to time, which raises the question of how to handle such failures: the jobs running on a failed server may have to be restarted from the beginning, even if part of the work has already been completed successfully on other nodes or servers. One way to handle this is to keep multiple secondary virtual servers that are exact replicas of a primary server. They constantly monitor the primary server and wait to take over if it fails. In this basic form, at least one machine (server) stays in stand-by mode while the remaining ones are active, that is, executing work. However, without checkpoints it is difficult to determine the workload of a failed server, i.e., we do not know which work has to be transferred to other nodes or servers. In the proposed system, I have tried to keep all nodes or servers busy to achieve load balancing, and to implement checkpoints to achieve failover successfully. Although this research is based on Cloud computing, it deals only with failover and load balancing; security and management issues are not considered.
Keywords: Failover, Load Balancing, Virtual Servers, Stand-by, Endeavour.

Dilbag Singh is with the Department of Computer Science and Engineering, Guru Nanak Dev University, Amritsar, Punjab (dggill2@gmail.com).

I. INTRODUCTION

A typical cloud environment is a distributed system with a number of interconnected resources that can work independently or in cooperation with each other. A cloud consists of high-performance servers and other resources that actually perform the jobs of its clients. However, some servers may receive more load than others, while some may be lightly loaded or stay idle, which leads to underutilization of resources. Load balancing plays a key role in such an unbalanced environment. To minimize this imbalance (i.e., the time needed to perform all tasks), the workload should be distributed over all resources in proportion to their processing speed. The essential objective of load balancing is to optimize the average response time of applications, which often means keeping the workload proportionally equivalent across all resources of a system. This work focuses on load balancing in a cloud environment. To achieve high performance, we need to understand the factors that can affect the performance of an application, and load balancing is one of the most important of them. Although load balancing methods in conventional parallel and distributed systems have been studied intensively, they do not carry over directly to cloud architectures, because the architecture is different and clouds also require high availability and high performance. This raises two further questions: what happens when a failure occurs, and what happens when an out-of-order node recovers?

II. PROBLEM DEFINITION

In Cloud environments, the available resources are dynamic in nature, which in turn affects application performance. Workload and resource management are two essential functions provided at the service level of the Cloud software infrastructure. To improve the global throughput of these environments, effective and efficient load balancing algorithms are fundamentally important. Load sharing of jobs is one of the best ways to balance the load. This study considers the factors that can be used as decision-making criteria for initiating load balancing, since load balancing is one of the most important factors affecting the performance of a Cloud application. This research work focuses on providing high availability in the Cloud environment by refining the current load balancing mechanism with checkpoints and failover strategies. The two problems addressed in this research work are:

A. Failover Strategies
To provide high availability in the Cloud environment, we first focus on how to develop and implement failover strategies. Our main goal is a method that achieves high availability without using any backup node. To achieve this, we need an algorithm that uses load sharing to provide high availability by balancing the load across the active nodes.

B. Checkpoints
To provide high availability and consistency to clients, we focus on how to implement checkpoints in the cloud environment, as there is no other way for the central node of the Cloud service provider to know which job each node is executing. To share the load of a failed node with other nodes, it is necessary to know which jobs are currently executing on the failed node and which jobs are waiting in its queue.

III. LITERATURE SURVEY

A checkpoint is defined as a designated place in a program at which normal processing is interrupted specifically to preserve the status information necessary to allow processing to resume at a later time. By invoking the checkpointing process periodically, one can save the status of a program at regular intervals. If a failure occurs, computation can be restarted from the last checkpoint, which avoids repeating the computation from the beginning. The process of resuming computation by rolling back to a saved state is called rollback recovery [1,2].

Cloud vendors rely on automatic load balancing services, which allow entities to increase the number of CPUs or the amount of memory for their resources to scale with increased demand [3]. This service is optional and depends on the entity's business needs. Load balancers therefore serve two important needs: primarily to promote the availability of cloud resources and secondarily to promote performance. Cloud Computing is a kind of distributed computing in which massively scalable IT-related capabilities are provided to multiple external customers as a service using Internet technologies. Cloud Computing allows the selection, sharing and aggregation of large collections of geographically and organizationally distributed heterogeneous resources for solving large-scale data-intensive and compute-intensive problems [4]. Cloud providers need a large, general-purpose computing infrastructure, virtualized for different customers and services, in order to provide multiple application services. Furthermore, the Zeus company develops software that lets a cloud provider easily and cost-effectively offer every customer a dedicated application delivery solution [5].
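The rollback-recovery idea described above can be illustrated with a toy example. The sketch below is a minimal Python illustration and is not taken from [1,2]: the class `CheckpointedComputation`, its `interval` parameter and the simulated crash are hypothetical. State is saved every `interval` steps, and after a failure the computation resumes from the last checkpoint rather than from step 0.

```python
import copy
from typing import Optional


class CheckpointedComputation:
    """Toy long-running computation (summing 0..total_steps-1) that saves
    its state every `interval` steps and can roll back to that state."""

    def __init__(self, total_steps: int, interval: int) -> None:
        self.total_steps = total_steps
        self.interval = interval
        self.state = {"step": 0, "acc": 0}
        self.checkpoint = copy.deepcopy(self.state)  # last saved state
        self.steps_executed = 0                       # counts re-executed steps too

    def save_checkpoint(self) -> None:
        # Preserve the status information needed to resume processing later.
        self.checkpoint = copy.deepcopy(self.state)

    def rollback(self) -> None:
        # Rollback recovery: restore the last saved state instead of step 0.
        self.state = copy.deepcopy(self.checkpoint)

    def run(self, fail_at: Optional[int] = None) -> int:
        """Run to completion; raise RuntimeError at step `fail_at` to simulate a crash."""
        while self.state["step"] < self.total_steps:
            if self.state["step"] == fail_at:
                raise RuntimeError(f"simulated failure at step {fail_at}")
            self.state["acc"] += self.state["step"]
            self.state["step"] += 1
            self.steps_executed += 1
            if self.state["step"] % self.interval == 0:
                self.save_checkpoint()
        return self.state["acc"]


job = CheckpointedComputation(total_steps=100, interval=10)
try:
    job.run(fail_at=57)
except RuntimeError:
    job.rollback()            # resume from step 50, the last checkpoint
result = job.run()            # no failure this time
print(result, job.steps_executed)  # prints 4950 107
```

Only the steps after the last checkpoint (here steps 50 to 56) are repeated; a shorter interval repeats less work after a failure but saves state more often.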
The ZXTM software is much more than a shared load balancing service: it offers a low-cost starting point in hardware deployment, with a smooth and cost-effective upgrade path to scale as the service grows [4,5]. Availability is a recurring and growing concern in software-intensive systems. Cloud services can be taken offline by maintenance, power outages or denial-of-service attacks. Fundamentally, availability is determined by the time that the system is up and running correctly, the length of time between failures, and the length of time needed to resume operation after a failure. Availability needs to be analyzed through the use of presence information, forecasting of usage patterns and dynamic resource scaling [6].
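The quantities named above combine into the standard steady-state availability formula A = MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to resume operation after a failure. A small Python sketch follows; the failure and recovery times are illustrative assumptions, not measurements from this work (the roughly 10 s recovery time assumes detection at the next 5-second checkpoint rerun plus a few seconds to redistribute the load).

```python
def availability(mtbf: float, mttr: float) -> float:
    """Steady-state availability A = MTBF / (MTBF + MTTR).

    mtbf: mean time between failures; mttr: mean time to resume operation
    after a failure. Both must use the same time unit.
    """
    if mtbf <= 0 or mttr < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf / (mtbf + mttr)


def downtime_per_year(a: float) -> float:
    """Expected downtime in hours over a 365-day year for availability a."""
    return (1.0 - a) * 365 * 24


# Illustrative numbers only: a server that fails on average every 1000 h.
slow = availability(mtbf=1000.0, mttr=2.0)        # manual restart, 2 h
fast = availability(mtbf=1000.0, mttr=10 / 3600)  # hypothetical failover in ~10 s
print(f"{slow:.5f} {downtime_per_year(slow):.2f} h/yr")
print(f"{fast:.7f} {downtime_per_year(fast):.3f} h/yr")
```

This is why failover strategies target the recovery time: in this illustration, cutting MTTR from 2 h to about 10 s reduces expected yearly downtime from about 17.5 h to under 0.03 h.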

IV. PROPOSED FAILOVER TECHNIQUE USING CHECK-POINTING

The proposed technique aims to achieve high availability through failover strategies and uses check-pointing to provide accurate and reliable results. Load balancing is defined as the allocation or distribution of work to the most suitable resources, so that the execution time of the application is minimized. This section discusses the factors for initiating load balancing algorithms, a comparative study of existing load balancing algorithms, and the design of the proposed load balancing algorithm, which is able to provide failover strategies.
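Section VI describes what each periodic checkpoint rerun does: completed jobs go to a history table, a failed node's load is shared among the available servers, and a recovered node takes load back. A minimal Python sketch of one such pass follows. It is not the author's Visual Basic simulator; the classes `Node` and `Checkpoint`, the job-count load metric and the least-loaded placement rule are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    alive: bool = True
    running: list = field(default_factory=list)  # ids of jobs executing now
    waiting: list = field(default_factory=list)  # ids of jobs in the wait queue

    def load(self) -> int:
        return len(self.running) + len(self.waiting)


@dataclass
class Checkpoint:
    nodes: dict                                  # node name -> Node
    history: list = field(default_factory=list)  # ids of completed jobs
    was_alive: dict = field(default_factory=dict)

    def rerun(self, completed: set) -> None:
        """One periodic checkpoint pass (the simulator reruns every 5 s)."""
        # 1) Completed: move finished jobs to the history table.
        for node in self.nodes.values():
            for queue in (node.running, node.waiting):
                for job in [j for j in queue if j in completed]:
                    queue.remove(job)
                    self.history.append(job)
        live = [n for n in self.nodes.values() if n.alive]
        if not live:
            raise RuntimeError("Cloud not responding")
        for node in self.nodes.values():
            before = self.was_alive.get(node.name, True)
            if before and not node.alive:
                # 2) Failed node: the checkpoint recorded both its running and
                #    waiting jobs, so hand each one to the least-loaded live
                #    node (in this sketch the job simply restarts there).
                orphans = node.running + node.waiting
                node.running, node.waiting = [], []
                for job in orphans:
                    min(live, key=Node.load).waiting.append(job)
            elif not before and node.alive:
                # 3) Recovered node: pull waiting jobs from the busiest live
                #    node until no node with queued jobs is more than one
                #    job busier than the recovered node.
                while True:
                    donors = [n for n in live if n is not node and n.waiting]
                    if not donors:
                        break
                    donor = max(donors, key=Node.load)
                    if donor.load() - node.load() <= 1:
                        break
                    node.waiting.append(donor.waiting.pop())
            self.was_alive[node.name] = node.alive


nodes = {name: Node(name) for name in "abcde"}
nodes["a"].running = ["j1"]
nodes["c"].running, nodes["c"].waiting = ["j2"], ["j3", "j4"]
nodes["e"].running, nodes["e"].waiting = ["j5"], ["j6"]
cp = Checkpoint(nodes)

nodes["e"].alive = False      # fail node e from the "GUI"
cp.rerun(completed={"j1"})    # j1 -> history; j5 -> a, j6 -> b
nodes["e"].alive = True       # recover node e
cp.rerun(completed=set())     # e pulls j4 from c, the busiest node
```

Recording waiting jobs as well as running ones is what lets the whole workload of a failed node be reassigned (Section II-B); without that record the central node would not know what to move.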

Fig. 1. 3 Node Architecture

A. Factors for Initiating Load Balancing Algorithm

Certain decision-making events, such as the arrival and completion of jobs and the arrival and withdrawal of resources, initiate the load balancing algorithm. Machine failure and node overloading emerged from the literature survey as further triggers. These factors can be summarized as:
(a) Arrival of any new job: whenever a new job arrives at the scheduler, it demands a resource, which initiates the load balancing algorithm.
(b) Completion of execution of any job: whenever a job completes, it releases its resources, which are reassigned to another job.
(c) Arrival of any new resource: whenever an idle resource joins the system's resource pool, it is assigned to some job according to the job's requirements.
(d) Withdrawal of any existing resource: withdrawing a resource increases the computation overhead on the remaining resources.
(e) Machine failure at any node: the failure of a machine increases the overhead on the remaining resources, which initiates the load balancing algorithm.
(f) Node becomes overloaded: whenever a node becomes overloaded for any reason, the load balancing algorithm starts and manages the stalled jobs of the overloaded node.

The proposed load balancing algorithm is designed around key characteristics such as reliability, high availability, performance, throughput and resource utilization. To fulfil the requirements of a failover strategy, I propose two algorithms, named the Global Algorithm and the Local Algorithm. Fig. 1 shows the three-level framework for the 3-node Cloud computing environment, and Fig. 2 shows the flow chart of the Global Algorithm. The Local Algorithm belongs to the Service Manager and is shown in Fig. 3.
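The six triggers (a) to (f) can be read as events that each end with a rebalancing pass. The sketch below assumes a hypothetical pool that maps node names to lists of positive job costs, and uses a simple greedy balancer chosen for illustration (repeatedly move the smallest job from the busiest node to the idlest node while that narrows their gap). It illustrates the trigger structure only; it is not the paper's Global or Local Algorithm.

```python
from enum import Enum, auto
from typing import Optional


class Trigger(Enum):
    JOB_ARRIVED = auto()         # (a)
    JOB_COMPLETED = auto()       # (b)
    RESOURCE_ADDED = auto()      # (c)
    RESOURCE_WITHDRAWN = auto()  # (d)
    MACHINE_FAILED = auto()      # (e)
    NODE_OVERLOADED = auto()     # (f)


def least_loaded(pool: dict) -> str:
    return min(pool, key=lambda n: sum(pool[n]))


def rebalance(pool: dict) -> None:
    """Greedy pass: move the smallest job from the busiest node to the idlest
    node while that strictly narrows the gap between them (costs > 0)."""
    while len(pool) > 1:
        busiest = max(pool, key=lambda n: sum(pool[n]))
        idlest = least_loaded(pool)
        gap = sum(pool[busiest]) - sum(pool[idlest])
        if not pool[busiest] or min(pool[busiest]) >= gap:
            break
        job = min(pool[busiest])
        pool[busiest].remove(job)
        pool[idlest].append(job)


def on_trigger(pool: dict, trigger: Trigger, node: Optional[str] = None,
               cost: float = 0.0, threshold: float = 10.0) -> list:
    """Apply one trigger to `pool` (node name -> list of job costs), rerun
    the balancer, and return the nodes whose load is still above `threshold`."""
    if trigger is Trigger.JOB_ARRIVED:
        pool[least_loaded(pool)].append(cost)
    elif trigger is Trigger.JOB_COMPLETED:
        pool[node].remove(cost)      # jobs are identified by cost in this toy
    elif trigger is Trigger.RESOURCE_ADDED:
        pool[node] = []
    elif trigger in (Trigger.RESOURCE_WITHDRAWN, Trigger.MACHINE_FAILED):
        for job in sorted(pool.pop(node), reverse=True):
            pool[least_loaded(pool)].append(job)
    # NODE_OVERLOADED needs no bookkeeping: the rebalance below handles it.
    rebalance(pool)
    return [n for n in pool if sum(pool[n]) > threshold]


pool = {"a": [5.0, 3.0, 2.0], "b": [1.0]}
on_trigger(pool, Trigger.RESOURCE_ADDED, node="c")  # c joins and takes work
on_trigger(pool, Trigger.MACHINE_FAILED, node="a")  # a's jobs move to b and c
print(pool)  # prints {'b': [1.0, 3.0, 2.0], 'c': [5.0]}
```

The greedy rule always terminates for positive costs, because each move strictly lowers the sum of squared node loads, but it does not guarantee an optimal balance.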

V. EXPERIMENTAL SET-UP

To simulate the proposed work, I built the experimental set-up shown in Fig. 4, in which clients send their requests to the Cloud Service Provider (CSP), and the CSP divides these requests between two sub-clouds, each of which has three high-end servers.

Fig. 4. Local Algorithm
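Section VI states the two placement rules used in the simulator: the Global Checkpoint passes each new job to the sub-cloud with minimum load, and the Local Checkpoint assigns each thread to the server with the minimum completion time. A minimal Python sketch of that two-level dispatch for the set-up described above follows; the server speeds, the `busy_until` field and the assumption that all threads arrive at time 0 are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    speed: float             # work units per second (hypothetical)
    busy_until: float = 0.0  # time at which its current queue finishes


@dataclass
class SubCloud:
    name: str
    servers: list = field(default_factory=list)
    load: float = 0.0        # work dispatched so far (never decremented here)


def global_dispatch(subclouds: list, threads: list) -> SubCloud:
    """Global level: send the whole job to the sub-cloud with minimum load."""
    target = min(subclouds, key=lambda sc: sc.load)
    target.load += sum(threads)
    return target


def local_dispatch(subcloud: SubCloud, threads: list) -> list:
    """Local level: give each thread to the server on which it would
    complete earliest (minimum completion time)."""
    placement = []
    for work in threads:
        best = min(subcloud.servers, key=lambda s: s.busy_until + work / s.speed)
        best.busy_until += work / best.speed
        placement.append((work, best.name))
    return placement


cloud = [
    SubCloud("sub-cloud 1", [Server("a", 2.0), Server("b", 1.0), Server("c", 1.0)]),
    SubCloud("sub-cloud 2", [Server("d", 1.0), Server("e", 1.0), Server("f", 1.0)]),
]
for job in ([4.0, 2.0, 2.0], [3.0]):
    sc = global_dispatch(cloud, job)
    print(sc.name, local_dispatch(sc, job))
```

Using completion time rather than queue length at the local level lets a faster server (here `a`) absorb the largest thread.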

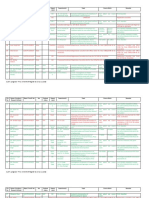

VI. EXPERIMENTAL RESULTS

Fig. 2. Global Algorithm

As described in the experimental set-up, a virtual environment has been built in Visual Basic. Whenever a client sends a request, the CSP divides it between the two sub-clouds and the Global Checkpoint is updated. The Local Algorithm then divides the jobs among the 3 available high-end virtual servers, and the Local Checkpoint is updated. The developed GUI (Graphical User Interface) provides options to fail either a high-end server or an entire sub-cloud; whenever a node fails or recovers, the Local Checkpoint is updated. The checkpoint is rerun periodically, every 5 seconds. If any node is found to have failed or recovered, the checkpoint rebalances the work among the available nodes; if all nodes have failed, the user is notified with a "Cloud not responding" message.

A. Global Checkpoint
Fig. 5 shows the Global Checkpoint, which displays information about the jobs and their division into threads. It also shows that whenever a new job arrives, it is passed to the sub-cloud with the minimum load, which performs load balancing at the initial stage.

B. Local Checkpoint
Fig. 6 shows the Local Checkpoint, in which threads are assigned to the available high-end servers: each incoming thread goes to the server with the minimum completion time, which achieves further load balancing. Only one local checkpoint is used in my simulator, giving local knowledge of all high-end servers, whether they belong to sub-cloud 1 or sub-cloud 2.

C. Failover Strategy
To exercise failover, I failed and recovered some of the nodes; the GUI is coded to update the Local and Global Checkpoints automatically. Every 5 seconds both checkpoints rerun and do the following:
1) Completed: each completed job is transferred to the History table, an acknowledgement is sent to its client, and the job is deleted from both the Local and Global Checkpoints.
2) Failed node: if any node fails, the checkpoint detects it on its next rerun and shares the node's load among the available servers, keeping load balancing in mind. In Fig. 7, Node e has failed, and its load has been shared with Node a, as Node a is lightly loaded.
3) Recovered node: if any node recovers, the checkpoint detects it on its next rerun and shares the load of the other servers with it, keeping load balancing in mind. In Fig. 8, Node e has recovered and has taken some load from Nodes a and c, as Nodes a and c are heavily loaded.

Fig. 3. Local Algorithm

Fig. 5. Global Algorithm

Fig. 6. Local checkpoint

Fig. 7. Local checkpoint

Fig. 8. Local checkpoint

VII. CONCLUSION

In this paper I have proposed a failover strategy for cloud computing that takes load balancing into account. The developed simulator shows how a cloud provider can offer high availability to its clients by using a failover strategy. The paper shows that failover can be achieved without any additional cost while keeping the load balanced.

ACKNOWLEDGMENT

It is a matter of great pleasure and satisfaction for me to present this paper, "Failover Strategies in Cloud Computing using Load Balancing", to the readers. Although the topic is not simple, as it is still an area of active research, I have tried my best to make it easy to follow. I would like to thank my guide, Mr. Jaswinder Singh, and the other persons involved who helped me complete this research paper and provided valuable suggestions and constant guidance. I am also very thankful to all the faculty members of my department and to the H.O.D., Dr. Gurwinder Singh, of the Department of Computer Science, Guru Nanak Dev University, Amritsar, who helped me with this research paper by providing various facilities and guidance. I would also like to thank my beloved parents, who frequently allowed me to work at odd hours to complete this research paper, and my friends for their helpful suggestions. I am immensely grateful to almighty God for enabling me to complete this task.

VIII. BIBLIOGRAPHY

[1] Y. J. Wen and S. D. Wang, "Minimizing Migration on Grid Environments: An Experience on Sun Grid Engine," National Taiwan University, Taipei, Taiwan, Journal of Information Technology and Applications, Mar. 2007, pp. 297-30.
[2] S. Kalaiselvi (Supercomputer Education and Research Centre (SERC), Indian Institute of Science, Bangalore) and V. Rajaraman (Jawaharlal Nehru Centre for Advanced Scientific Research, Indian Institute of Science Campus, Bangalore), "A Survey of Check-Pointing Algorithms for Parallel and Distributed Computers," Oct. 2000, pp. 489-510. [Online]. Available: www.ias.ac.in/sadhana/Pdf2000Oct/Pe838.pdf
[3] G. Reese, Cloud Application Architectures: Building Applications and Infrastructure in the Cloud (Theory in Practice), O'Reilly Media, 1st ed., 2009.
[4] "Load Balancing, Load Balancer," Jan. 2010. [Online]. Available: http://www.zeus.com/products/zxtmlb/index.html
[5] "ZXTM for Cloud Hosting Providers," Jan. 2010. [Online]. Available: http://www.zeus.com/cloud-computing/for-cloud-providers.html
[6] K. Stanoevska-Slabeva, T. W. S. Ristol, Grid and Cloud Computing and Applications, A Business Perspective on Technology, 1st ed., pp. 23-97, 2004.
