Академический Документы

Профессиональный Документы

Культура Документы

Quotient Spaces

Загружено:

Simon RampazzoИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Quotient Spaces

Загружено:

Simon RampazzoАвторское право:

Доступные форматы

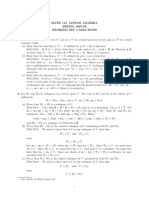

NOTES ON QUOTIENT SPACES

SANTIAGO CANEZ

Let V be a vector space over a eld F, and let W be a subspace of V . There is a sense in which we can divide V by W to get a new vector space. Of course, the word divide is in quotation marks because we cant really divide vector spaces in the usual sense of division, but there is still an analog of division we can construct. This leads the notion of whats called a quotient vector space. This is an incredibly useful notion, which we will use from time to time to simplify other tasks. In particular, at the end of these notes we use quotient spaces to give a simpler proof (than the one given in the book) of the fact that operators on nite dimensional complex vector spaces are upper-triangularizable. For each v V , denote by v + W the following subset of V : v + W = {v + w | w W }. So, v + W is the set of all vectors in V we can get by adding v to elements of W . Note that v itself is in v + W since v = v + 0 and 0 W . We call a subset of the form v + W a coset of W in V . The rst question to ask is: when are two such subsets equal? The answer is given by: Proposition 1. Let v, v V . Then v + W = v + W if and only if v v W . Proof. Suppose that v + W = v + W . Since v v + W , v must also be in v + W . Thus there exists w W so that v = v + w. Hence v v = w W as claimed. Conversely, suppose that v v W . Without loss of generality, it is enough to show that v v + W . Denote by w the element v v of W . Then v v = w, so v = v + w. Hence v v + W as required. Denition 1. The set V /W , pronounced V mod W , is the set dened by V /W = {v + W | v V }. That is, V /W is the collection of cosets of W in V . According to Proposition 1, two elements v and v of V determine the same element of V /W if and only if v v W . Because of this, it is also possible, and maybe better, to think of V /W as consisting of all elements of V under the extra condition that two elements of V are declared to be the same if their dierence is in W . This is the point of view taken when dening V /W be the set of equivalence classes of the equivalence relation on V dened by v v if v v W. For further information about equivalence relations, please consult the Notes on Relations paper listed on the website for my previous Math 74 course. We will not use this terminology here, and will just use the coset point of view when dealing with quotient spaces.

SANTIAGO CANEZ

We want to turn V /W into a vector space, so we want to dene an addition on V /W and a scalar multiplication. Since we already have such things dened on V , we have natural candidates for the required operations on V /W : Denition 2. The sum of two elements v + W and v + W of V /W is dened by (v + W ) + (v + W ) := (v + v ) + W. For a F, the scalar multiplication of a on v + W is dened by a (v + W ) := av + W. Now we run into a problem: namely, these operations should not depend on which element of v + W we choose to represent v + W . Meaning, we know that if v v W , then v + W = v + W . For the above denition of addition to make sense, it should be true that for any other v + W , we should have (v + W ) + (v + W ) = (v + W ) + (v + W ). After all, v + W and v + W are supposed to be equal, so computing sums with either one should give the same result. Similarly, performing scalar multiplication on either one should give the same result. Both of these requirements are true: Proposition 2. Suppose that v + W = v + W . Then (v + W )+(v + W ) = (v + W )+(v + W ) for any v + W V /W and a (v + W ) = a (v + W ) for any a F. Because of this, we say that addition and scalar multiplication on V /W are well-dened. Proof. We rst show that addition is well-dened. Without loss of generality, it is enough to show that (v + W ) + (v + W ) (v + W ) + (v + W ). To this end, let u (v + W ) + (v + W ) = (v + v ) + W. Then there exists w W such that u = (v + v ) + w. We want to show that u (v + W ) + (v + W ) = (v + v ) + W. Since v + W = v + W , we know that v v W . Denote this element by w , so then v = v + w. Thus u = (v + v ) + w = ((v + w ) + v ) + w = (v + v ) + (w + w), showing that u (v + v ) + W since w + w W . We conclude that the sets (v + v ) + W and (v + v ) + W are equal, so addition on V /W is well-dened. Now, let a F. To show that scalar multplication is well-dened, it is enough to show that a (v + W ) a (v + W ). So, let u a (v + W ) = av + W. Then u = av + w for some w W . Again, denote by w the element v v W . Then u = av + w = a(v + w ) + w = av + (aw + w) av + W, since aw + w W as W is closed under addition and scalar multiplication. Thus the sets a (v + W ) and a (v + W ) are equal, so scalar multiplication on V /W is well-dened.

NOTES ON QUOTIENT SPACES

Note that the above depends crucially on the assumption that W is a subspace of V ; if W were just an arbitrary subset of V , addition and scalar multiplication on V /W would not be well-dened. With these operations then, V /W becomes a vector space over F: Theorem 1. With addition and scalar multiplication dened as above, V /W is an F-vector space called the quotient space of V by W , or simply the quotient of V by W . Proof. All associative, commutative, and distributive laws follow directly from those of V . We only have to check the existence of an additive identity and of additive inverses. We claim that W = 0 + W is an additive identity. Indeed, let v + W V /W . Then (v + W ) + W = (v + 0) + W = v + W and W + (v + W ) = (0 + v ) + W = v + W, which is what we needed to show. Also, given v + W V /W , we have (v + W ) + (v + W ) = (v v ) + W = 0 + W = W and (v + W ) + (v + W ) = (v + W ) + W = 0 + W = W, so v + W is an additive inverse of v + W . We conclude that V /W is a vector space under the given operations. Just to emphasize, note that the zero vector of V /W is W itself, which is the coset corresponding to the element 0 of W . Now we move to studying these quotient spaces. The rst basic question is: when is V /W nite-dimensional, and what is its dimension? The basic result is the following: Proposition 3. Suppose that V is nite-dimensional. Then V /W is nite-dimensional and dim(V /W ) = dim V dim W. So, when everything is nite-dimemsional, we have an easy formula for the dimension of the quotient space. Of course, even if V is innite-dimesionsal, it is still possible to get a nitedimensional quotient. We will see an example of this after the proof of the above proposition. Proof of Proposition. We will construct an explicit basis for V /W . Let (w1 , . . . , wn ) be a basis of W , and extend it to a basis (w1 , . . . , wn , v1 , . . . , vk ) of V . Note that with this notation, dim W = n and dim V = n + k . We claim that (v1 + W, . . . , vk + W ) forms a basis of V /W . If so, we will then have dim(V /W ) = k = (n + k ) n = dim V dim W as claimed. First we check linear independence. Suppose that a1 (v1 + W ) + ak (vk + W ) = W for some scalars a1 , . . . , ak . Recall that W is the zero vector of V /W , which is why we have W on the right side of the above equation. We want to show that all ai are zero. We can rewrite the above equation as (a1 v1 + ak vk ) + W = W. By Proposition 1 then, it must be that a1 v1 + ak vk W,

SANTIAGO CANEZ

so we can write this vector in terms of the chosen basis of W ; i.e. a1 v1 + ak vk = b1 w1 + + bn wn for some b1 , . . . , bn F. But then a1 v1 + ak vk b1 w1 bn wn = 0, so all coecients are zero since (w1 , . . . , wn , v1 , . . . , vk ) is linearly independent. In particular, all the ai are zero, showing that (v1 + W, . . . , vk + W ) is linearly independent. Now, let v + W V /W . Since the wi s and vj s span V , we know that we can write v = a1 w1 + + an wn + b1 v1 + + bk vk for some scalars ai , bj F. We then have v + W = (a1 w1 + + an wn + b1 v1 + + bk vk ) + W = [(b1 v1 + + bk vk ) + (a1 w1 + + an wn )] + W = (b1 v1 + + bk vk ) + W = b1 (v1 + W ) + + bk (vk + W ), where the third equality follows from the fact that a1 w1 + + an wn W . Hence (v1 + W, . . . , vk + W ) spans V /W , so we conclude that this forms a basis of V /W . Example 1. Let W = {0}. Then two elements v and v of V determine the same element of V /W if and only if v v {0}; that is, if and only if v = v . Hence V /{0} is really just V itself. Similarly, if W is all of V , then any two things in V determine the same element of V /W , so we can say V /V is the vector space containing one element; i.e. V /V is {0}. Example 2. Let V = R2 and let W be the y -axis. We want to give a simple description of V /W . Recall that two elements (x, y ) and (x , y ) of R2 give the same element of V /W , meaning (x, y ) + W = (x , y ) + W , if and only if (x, y ) (x , y ) = (x x , y y ) W. This means that x x = 0 since W is the y -axis. Thus, two elements (x, y ) and (x , y ) of V determine the same element of V /W if and only if x = x . So, a vector of V /W is completely determined by specifying the x-coordinate since the value of the y -coordinate does not matter. In particular, any element of V /W is represented by exactly one element of the form (x, 0), so we can identify V /W with the set of vectors of the form (x, 0) i.e. with the x-axis. Of course, the precise meaning of identify is that the map from V /W to the x-axis which sends (x, y ) + W (x, 0) is an isomorphism. We will not spell out all the details of this here, but the point is that it really does make sense to think of the quotient V /W in this case as being the same as the x-axis.

NOTES ON QUOTIENT SPACES

Example 3. Let V = F and let W be the following subspace of V : W := {(0, x2 , x3 , . . .) | xi F}. As above, you can check that two elements of V determine the same element of V /W if and only if they have the same rst coordinate. Hence an element of V /W is just determined by the value of that rst coordinate x1 . This gives an identication of V /W with F itself (or, more precisely, with the x1 -axis of F ). Note that in this case, even though V and W are innite-dimensional, the quotient V /W is nite-dimsneional indeed it is in fact one-dimensional. Here is a fundamental fact which we can now prove: Theorem 2. Let T : V W be linear. Dene a map S : V / null T range T , by S (v + W ) = T v. Then S is well-dened and is an isomorphism. Proof. As in the case of the denition of addition and scalar multiplication, we may have dierent elements v and v of V giving the same element of V /W . For S to be well-dened, we must know that the denition of S does not depend on such v and v . So, suppose that v + null T = v + null T . Then v v null T , so T (v v ) = 0. Hence T v = T v , so S (v + null T ) = S (v + null T ), showing that S is well-dened. Also, linearity of S follows from that of T . Now, note that surjectivity of S follows from the fact that T is surjective onto its range. We claim that S is injective. Indeed, let v + null T null S . This means that 0 = S (v + null T ) = T v. Hence v null T , and thus v +null T = null T is the zero vector of V /W , showing that null S = {0}. Therefore S is injective, and we conclude that S is an isomorphism as claimed. To get a sense of the previous theorem, note that given any linear map T : V W , we can construct a surjective linear map T : V range T simply by restricting the target space. Now, this new map is not necessarily invertible since it may have a nonzero null space. However, by taking the quotient of V by null T , we are forcing the part that makes T possibly not injective to be zero. Thus the resulting map on V / null T should be injective. So, from any linear map T : V W we can construct an isomorphism between some possibly dierent spaces that still reects many of the properties of T . In particular, since we know what the dimension of a quotient space is, one can use this theorem (or more precisely the consequence that dim(V / null T ) = dim range T ) to give a dierent proof that when V and W are nite dimensional, dim V = dim null T + dim range T . Now, given an operator T L(V ), we can try to dene an operator on V /W , which by abuse of notation we will still denote by T , by setting T (v + W ) := T v + W. However, as should now be seen to be a common thing when dealing with quotient spaces, we must check that this is well-dened . To see when this is the case, rst recall that v +W = v +W if and only if v v W . Call this vector w. Then, we have v = v + w so T (v + W ) = T v + W = (T v + T w) + W, which should equal T v + W . We see that this is the case only when T w W . Thus, for T to descend to a well-dened operator on V /W , we must require that T w W for any w W .

SANTIAGO CANEZ

In other words, we have shown: Proposition 4. Let T L(V ). Dene T : V /W V /W by T (v + W ) = T v + W for any v + W V /W . Then T : V /W V /W is a well-dened operator if and only if W is T -invariant. One can also show that any operator on V /W arises in this way, so the operators on V /W come from operators on V under which W is invariant. Finally, we use this new fancy language of quotient spaces to give a relatively simple proof that any operator on a nite-dimensional complex vector space has an upper-triangular matrix in some basis. Theorem 3. Suppose that V is a nite dimensional complex vector space and let T L(V ). Then there exists a basis of V with respect to which M(T ) is upper-triangular. Proof. Say dim V = n. We know that T has at least one nonzero eigenvector, call it v1 . This will be the rst element of our basis. The idea of the proof is to construct the rest of the basis by taking eigenvectors of the operator induced by T on certain quotients of V . We proceed as follows. Since v1 is an eigenvector of T , span(v1 ) is T -invariant. Thus T descends to a well-dened operator T : V / span(v1 ) V / span(v1 ). This quotient space is again a nite dimensional complex vector space, so this induced map also has a nonzero eigenvector, call it v2 + span(v1 ). Then if is the eigenvalue of this eigenvector, we have T (v2 + span(v1 )) = (v2 + span(v1 )). Let us see what this equation really means. Rewriting both sides, we have T v2 + span(v1 ) = v2 + span(v1 ), so T v2 v2 span(v1 ). This implies that T v2 span(v1 , v2 ), so span(v1 , v2 ) is T -invariant. Note also that since this v2 + span(v1 ) is nonzero in V / span(v1 ), v2 / span(v1 ), so (v1 , v2 ) is linearly independent. This v2 V gives us the second basis element we are looking for. Now T gives a well-dened operator T : V / span(v1 , v2 ) V / span(v1 , v2 ). Pick an eigenvector v3 + span(v1 , v2 ) of this, and argue as above to show that span(v1 , v2 , v3 ) is T -invariant and that (v1 , v2 , v3 ) is linearly independent. Continuing this process, we construct a basis of V : (v1 , v2 , . . . , vn ), with the property that for any k , span(v1 , . . . , vk ) is T -invariant. By one of the characterizations of M(T ) being upper-triangular, we conclude that M(T ) is upper-triangular with respect to this basis. Compare this proof with the one given in the book. There it is not so clear where the proof comes from, let alone where the basis constructed itself really comes from. Here we see that this result is simply a consequence of the repeated application of the fact that operators on nite dimensional complex vector spaces have eigenvectors. The basis we constructed comes exactly from eigenvectors of successive quotients of V .

Вам также может понравиться

- Practice Exercises Advanced MicroДокумент285 страницPractice Exercises Advanced MicroSimon Rampazzo40% (5)

- CCA Selected AnswersДокумент84 страницыCCA Selected Answerswerywreyert83% (6)

- Homework #4, Sec 11.1 and 11.2Документ5 страницHomework #4, Sec 11.1 and 11.2Masaya Sato100% (3)

- Vector Space Over F2Документ7 страницVector Space Over F2Anonymous 8b4AuKDОценок пока нет

- Linalg Friedberg SolutionsДокумент32 страницыLinalg Friedberg SolutionsRyan Dougherty100% (7)

- Linear Algebra Done Right - SolutionsДокумент9 страницLinear Algebra Done Right - SolutionsfunkageОценок пока нет

- Linear AlgebraДокумент18 страницLinear AlgebraT BlackОценок пока нет

- Vector SpacesДокумент4 страницыVector SpacesAaron WelsonОценок пока нет

- Homework Solutions Week 5: Math H54 Professor Paulin Solutions by Manuel Reyes October 3, 2008Документ3 страницыHomework Solutions Week 5: Math H54 Professor Paulin Solutions by Manuel Reyes October 3, 2008FABIANCHO2210Оценок пока нет

- KConrad - Connectedness of Hyperplane ComplementsДокумент5 страницKConrad - Connectedness of Hyperplane ComplementsMarkoGeekyОценок пока нет

- MAT 217 Lecture 4 PDFДокумент3 страницыMAT 217 Lecture 4 PDFCarlo KaramОценок пока нет

- Lecture 6 - Vector Spaces, Linear Maps, and Dual SpacesДокумент5 страницLecture 6 - Vector Spaces, Linear Maps, and Dual Spacespichus123Оценок пока нет

- 4.2 Subspaces: 4.2.1 de Nitions and ExamplesДокумент5 страниц4.2 Subspaces: 4.2.1 de Nitions and ExamplesdocsdownforfreeОценок пока нет

- Santiago Ca NEZДокумент4 страницыSantiago Ca NEZSourav ShekharОценок пока нет

- 1 Orthogonality and Orthonormality: Lecture 4: October 7, 2021Документ3 страницы1 Orthogonality and Orthonormality: Lecture 4: October 7, 2021Pushkaraj PanseОценок пока нет

- 1 Linear Spaces: Summer Propaedeutic CourseДокумент15 страниц1 Linear Spaces: Summer Propaedeutic CourseJuan P HDОценок пока нет

- Test 1 SolutionsДокумент2 страницыTest 1 SolutionsDibya DillipОценок пока нет

- Linear Algebra 1A - Solutions of Ex. 6Документ8 страницLinear Algebra 1A - Solutions of Ex. 6SandraF.ChОценок пока нет

- Introduction To Continuum Mechanics Lecture Notes: Jagan M. PadbidriДокумент33 страницыIntroduction To Continuum Mechanics Lecture Notes: Jagan M. PadbidriManoj RajОценок пока нет

- M Tech NotesДокумент32 страницыM Tech NotesNikhil SinghОценок пока нет

- Appendix 1Документ4 страницыAppendix 1Priyatham GangapatnamОценок пока нет

- Notes On Elementary Linear Algebra: Adam Coffman July 30, 2007Документ33 страницыNotes On Elementary Linear Algebra: Adam Coffman July 30, 2007zachglОценок пока нет

- Linear Algebra, Fried Berg, 4th Editoion Solutions 1.5 (8a, 9, 10, 11, 13, 14, 16, 18) & 1.6 (10a, 16, 22, 23, 25, 29, 30, 31)Документ5 страницLinear Algebra, Fried Berg, 4th Editoion Solutions 1.5 (8a, 9, 10, 11, 13, 14, 16, 18) & 1.6 (10a, 16, 22, 23, 25, 29, 30, 31)MathMan22Оценок пока нет

- λ,µ ∈ F and all v,w ∈ V we have λ· (v +w) = λ·v +λ·w, (λ+µ) ·v = λ·v +λ·w, λ· (µ·v) = (λµ) ·v, and 1Документ1 страницаλ,µ ∈ F and all v,w ∈ V we have λ· (v +w) = λ·v +λ·w, (λ+µ) ·v = λ·v +λ·w, λ· (µ·v) = (λµ) ·v, and 1Tom DavisОценок пока нет

- 1 Self-Adjoint Transformations: Lecture 5: October 12, 2021Документ4 страницы1 Self-Adjoint Transformations: Lecture 5: October 12, 2021Pushkaraj PanseОценок пока нет

- Lecture 02Документ3 страницыLecture 02Dinesh BarwarОценок пока нет

- mat67-Lj-Inner Product SpacesДокумент13 страницmat67-Lj-Inner Product SpacesIndranil KutheОценок пока нет

- 2 SubspacesДокумент15 страниц2 SubspacesalienxxОценок пока нет

- WEEK 5 - Vector Space, SubspaceДокумент28 страницWEEK 5 - Vector Space, Subspacemira_stargirlsОценок пока нет

- 5 Tensor ProductsДокумент22 страницы5 Tensor ProductsEmkafsОценок пока нет

- Math110s Hw2solДокумент4 страницыMath110s Hw2solankitОценок пока нет

- Quotient Space PDFДокумент5 страницQuotient Space PDFritik dwivediОценок пока нет

- Assignment-2 Name-Asha Kumari Jakhar Subject code-ECN511 Enrollment No.-20915003Документ10 страницAssignment-2 Name-Asha Kumari Jakhar Subject code-ECN511 Enrollment No.-20915003Asha JakharОценок пока нет

- 1 Revision of Vector Spaces: September 7, 2012Документ4 страницы1 Revision of Vector Spaces: September 7, 2012Eduard DumiОценок пока нет

- Notas de Aula de Algebra Linear Aplicada - Aula 2: Vanessa BottaДокумент52 страницыNotas de Aula de Algebra Linear Aplicada - Aula 2: Vanessa BottaRaul VillalbaОценок пока нет

- Vector Space Interpretation of Random VariablesДокумент6 страницVector Space Interpretation of Random VariablesSiva Kumar GaniОценок пока нет

- Assign 2Документ10 страницAssign 2Asha JakharОценок пока нет

- Vectors PDFДокумент5 страницVectors PDFBIKILA DESSUОценок пока нет

- Linear Algebra NotesДокумент36 страницLinear Algebra Notesdeletia666Оценок пока нет

- Introduction To Continuum Mechanics Lecture Notes: Jagan M. PadbidriДокумент26 страницIntroduction To Continuum Mechanics Lecture Notes: Jagan M. PadbidriAshmilОценок пока нет

- Tutorial 6 So LnsДокумент2 страницыTutorial 6 So LnspankajОценок пока нет

- Linear AlgebraДокумент68 страницLinear AlgebraNaveen suryaОценок пока нет

- Lec# 4Документ4 страницыLec# 4Batool MurtazaОценок пока нет

- BasisДокумент8 страницBasisUntung Teguh BudiantoОценок пока нет

- Introduction To Continuum Mechanics Lecture Notes: Jagan M. PadbidriДокумент33 страницыIntroduction To Continuum Mechanics Lecture Notes: Jagan M. Padbidrivishnu rajuОценок пока нет

- Lectures On Linear AlgebraДокумент101 страницаLectures On Linear AlgebraMaciej PogorzelskiОценок пока нет

- MATH2611 Ass2Документ3 страницыMATH2611 Ass2Tshepo SeiteiОценок пока нет

- UCB Math 110, Fall 2010: Homework 1 Solutions To Graded ProblemsДокумент1 страницаUCB Math 110, Fall 2010: Homework 1 Solutions To Graded ProblemskevincshihОценок пока нет

- Vector SpacesДокумент16 страницVector SpacesRaulОценок пока нет

- 76 - Sample - Chapter Kunci M2K3 No 9Документ94 страницы76 - Sample - Chapter Kunci M2K3 No 9Rachmat HidayatОценок пока нет

- Tensor Product Dual SpaceДокумент2 страницыTensor Product Dual SpaceLuis OcañaОценок пока нет

- Multilinear Algebra - MITДокумент141 страницаMultilinear Algebra - MITasdОценок пока нет

- MAT240 Assignment 2 PDFДокумент2 страницыMAT240 Assignment 2 PDFchasingsunbeamsОценок пока нет

- Subtraction, Summary, and SubspacesДокумент5 страницSubtraction, Summary, and SubspacesMahimaОценок пока нет

- Kinds of Proofs Math 130 Linear AlgebraДокумент2 страницыKinds of Proofs Math 130 Linear AlgebraIonuț Cătălin SanduОценок пока нет

- Notas de Algebrita PDFДокумент69 страницNotas de Algebrita PDFFidelHuamanAlarconОценок пока нет

- Math 4310 Homework 3 Solutions: 1 N 1 N 1 N 1 N 1 NДокумент2 страницыMath 4310 Homework 3 Solutions: 1 N 1 N 1 N 1 N 1 NGag PafОценок пока нет

- Linalg Friedberg Solutions PDFДокумент32 страницыLinalg Friedberg Solutions PDFQuinn NguyenОценок пока нет

- Chapter5 (5 1 5 3)Документ68 страницChapter5 (5 1 5 3)Rezif SugandiОценок пока нет

- Fundamentals of Vector Spaces and Subspaces: Kevin JamesДокумент54 страницыFundamentals of Vector Spaces and Subspaces: Kevin JamesGustavo G Borzellino CОценок пока нет

- Generative Learning Algorithms: CS229 Lecture NotesДокумент14 страницGenerative Learning Algorithms: CS229 Lecture NotesSimon RampazzoОценок пока нет

- Support Vector Machines: CS229 Lecture NotesДокумент25 страницSupport Vector Machines: CS229 Lecture Notesmaki-toshiakiОценок пока нет

- Generative Learning Algorithms: CS229 Lecture NotesДокумент14 страницGenerative Learning Algorithms: CS229 Lecture NotesSimon RampazzoОценок пока нет

- Generative Learning Algorithms: CS229 Lecture NotesДокумент14 страницGenerative Learning Algorithms: CS229 Lecture NotesSimon RampazzoОценок пока нет

- Aging of A New Niobium-Modified MAR-M247 Nickel-Based SuperalloyДокумент6 страницAging of A New Niobium-Modified MAR-M247 Nickel-Based SuperalloySimon RampazzoОценок пока нет

- Aging of A New Niobium-Modified MAR-M247 Nickel-Based SuperalloyДокумент6 страницAging of A New Niobium-Modified MAR-M247 Nickel-Based SuperalloySimon RampazzoОценок пока нет

- Solution Heat-Treatment of Nb-Modified MAR-M247 SuperalloyДокумент6 страницSolution Heat-Treatment of Nb-Modified MAR-M247 SuperalloySimon RampazzoОценок пока нет

- Reaction SolutionsДокумент1 страницаReaction SolutionsSimon RampazzoОценок пока нет

- Advanced Microeconomics: Konstantinos Serfes September 24, 2004Документ102 страницыAdvanced Microeconomics: Konstantinos Serfes September 24, 2004Simon RampazzoОценок пока нет

- Reaction SolutionsДокумент1 страницаReaction SolutionsSimon RampazzoОценок пока нет

- 1 Functions On Finite SetsДокумент5 страниц1 Functions On Finite SetsSimon RampazzoОценок пока нет

- Binomial TheoremДокумент12 страницBinomial TheoremSamar VeeraОценок пока нет

- Ext 0 HomДокумент16 страницExt 0 HomDzoara Nuñez RamosОценок пока нет

- Pages From College Mathematic by ApollosДокумент75 страницPages From College Mathematic by ApollosSonal MulaniОценок пока нет

- Grade 10 - REAL NUMBERS AND POLYNOMIAL Case StudyДокумент4 страницыGrade 10 - REAL NUMBERS AND POLYNOMIAL Case StudyσмОценок пока нет

- Descriptive Statistics and Graphs: Statistics For PsychologyДокумент14 страницDescriptive Statistics and Graphs: Statistics For PsychologyiamquasiОценок пока нет

- Unit 3 Fourier Transforms Questions and Answers - Sanfoundry PDFДокумент3 страницыUnit 3 Fourier Transforms Questions and Answers - Sanfoundry PDFzohaibОценок пока нет

- Greatest Integer FunctionДокумент6 страницGreatest Integer FunctionKushal ParikhОценок пока нет

- Computational Fluid Dynamics IДокумент3 страницыComputational Fluid Dynamics Iapi-296698256Оценок пока нет

- Potions CompleteДокумент29 страницPotions Completeapi-376226040100% (1)

- Timoshenko1-s2.0-S0022460X73802767-mainДокумент16 страницTimoshenko1-s2.0-S0022460X73802767-mainristi nirmalasariОценок пока нет

- Week8 PDFДокумент31 страницаWeek8 PDFosmanfıratОценок пока нет

- The K Ahler Geometry of Toric Manifolds: E-Mail Address: Apostolov - Vestislav@uqam - CaДокумент61 страницаThe K Ahler Geometry of Toric Manifolds: E-Mail Address: Apostolov - Vestislav@uqam - CaIvan Coronel MarthensОценок пока нет

- Assgn 3 4Документ2 страницыAssgn 3 4Swaraj PandaОценок пока нет

- GEN MATH MODULE Final PDFДокумент103 страницыGEN MATH MODULE Final PDFDaniel Sigalat LoberizОценок пока нет

- Nek5000 Tutorial: Velocity Prediction, ANL MAX ExperimentДокумент99 страницNek5000 Tutorial: Velocity Prediction, ANL MAX ExperimentSmitten ClarkОценок пока нет

- Exam STCM-1Документ3 страницыExam STCM-1FarahОценок пока нет

- I Problems 1: PrefaceДокумент7 страницI Problems 1: PrefaceShanmuga SundaramОценок пока нет

- Finite Element AnalysisДокумент113 страницFinite Element AnalysisBruno CoelhoОценок пока нет

- Formal Report For Experiment 2 PDFДокумент7 страницFormal Report For Experiment 2 PDFJewelyn SeeОценок пока нет

- Ib Maths HL SL Exploration 200 IdeasДокумент2 страницыIb Maths HL SL Exploration 200 IdeasShruti KabraОценок пока нет

- Boris Stoyanov - The Universal Constructions of Heterotic Vacua in Complex and Hermitian Moduli SuperspacesДокумент38 страницBoris Stoyanov - The Universal Constructions of Heterotic Vacua in Complex and Hermitian Moduli SuperspacesBoris StoyanovОценок пока нет

- Mt1 Egyptian MultiplicationДокумент13 страницMt1 Egyptian MultiplicationfarahshazwaniОценок пока нет

- JOBS OmniTRANS MPS 2019Документ5 страницJOBS OmniTRANS MPS 2019Nikola JasikaОценок пока нет

- Sheet 3 DerivativesДокумент3 страницыSheet 3 DerivativeszakОценок пока нет

- DWDM Unit6-Data Similarity MeasuresДокумент40 страницDWDM Unit6-Data Similarity MeasuresmounikaОценок пока нет

- New Ideas in Recognition of Cancer and Neutrosophic SuperHyperGraph by Eulerian-Path-Cut As Hyper Eulogy-Path-Cut On Super EULA-Path-CutДокумент26 страницNew Ideas in Recognition of Cancer and Neutrosophic SuperHyperGraph by Eulerian-Path-Cut As Hyper Eulogy-Path-Cut On Super EULA-Path-CutHenry GarrettОценок пока нет

- History of Mathema 00 CA Jo RichДокумент536 страницHistory of Mathema 00 CA Jo RichBranko NikolicОценок пока нет