Spring 2013 EECS150 - Lec16-timing1 Page

EECS150 - Digital Design

Lecture 16 - Circuit Timing

March 14, 2013

John Wawrzynek


Performance, Cost, Power

How do we measure performance?

operations/sec? cycles/sec?

Performance is directly proportional to clock frequency.

Although it may not be the entire story:

Ex: CPU execution time

= # instructions × CPI × clock period
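As a rough illustration of the formula above, the sketch below compares two hypothetical design points. The instruction count, CPI values, and clock periods are made-up numbers chosen for illustration, not figures from the lecture.

```python
def cpu_time(instructions: int, cpi: float, clock_period_s: float) -> float:
    """Execution time = # instructions x CPI x clock period."""
    return instructions * cpi * clock_period_s

# Hypothetical design points (illustrative numbers only).
slow_clock = cpu_time(1_000_000, cpi=1.2, clock_period_s=10e-9)  # 100 MHz clock
fast_clock = cpu_time(1_000_000, cpi=2.0, clock_period_s=8e-9)   # 125 MHz clock

print(f"100 MHz, CPI 1.2: {slow_clock * 1e3:.1f} ms")
print(f"125 MHz, CPI 2.0: {fast_clock * 1e3:.1f} ms")
```

In this made-up case the design with the faster clock takes longer overall because its CPI is worse, which is the sense in which clock frequency "may not be the entire story".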


Timing Analysis

1600 IEEE JOURNAL OF SOLID-STATE CIRCUITS, VOL. 36, NO. 11, NOVEMBER 2001

Fig. 1. Process SEM cross section.

The process was raised from [1] to limit standby power. Circuit design and architectural pipelining ensure low voltage performance and functionality. To further limit standby current in handheld ASSPs, a longer poly target takes advantage of the versus dependence and source-to-body bias is used to electrically limit transistor in standby mode. All core nMOS and pMOS transistors utilize separate source and bulk connections to support this. The process includes cobalt disilicide gates and diffusions. Low source and drain capacitance, as well as 3-nm gate-oxide thickness, allow high performance and low-voltage operation.

III. ARCHITECTURE

The microprocessor contains 32-kB instruction and data caches as well as an eight-entry coalescing writeback buffer. The instruction and data cache fill buffers have two and four entries, respectively. The data cache supports hit-under-miss operation and lines may be locked to allow SRAM-like operation. Thirty-two-entry fully associative translation lookaside buffers (TLBs) that support multiple page sizes are provided for both caches. TLB entries may also be locked. A 128-entry branch target buffer improves branch performance a pipeline deeper than earlier high-performance ARM designs [2], [3].

A. Pipeline Organization

To obtain high performance, the microprocessor core utilizes a simple scalar pipeline and a high-frequency clock. In addition to avoiding the potential power waste of a superscalar approach, functional design and validation complexity is decreased at the expense of circuit design effort. To avoid circuit design issues, the pipeline partitioning balances the workload and ensures that no one pipeline stage is tight. The main integer pipeline is seven stages, memory operations follow an eight-stage pipeline, and when operating in thumb mode an extra pipe stage is inserted after the last fetch stage to convert thumb instructions into ARM instructions. Since thumb mode instructions [11] are 16 b, two instructions are fetched in parallel while executing thumb instructions. A simplified diagram of the processor pipeline is shown in Fig. 2, where the state boundaries are indicated by gray. Features that allow the microarchitecture to achieve high speed are as follows.

Fig. 2. Microprocessor pipeline organization.

The shifter and ALU reside in separate stages. The ARM instruction set allows a shift followed by an ALU operation in a single instruction. Previous implementations limited frequency by having the shift and ALU in a single stage. Splitting this operation reduces the critical ALU bypass path by approximately 1/3. The extra pipeline hazard introduced when an instruction is immediately followed by one requiring that the result be shifted is infrequent.

Decoupled Instruction Fetch. A two-instruction deep queue is implemented between the second fetch and instruction decode pipe stages. This allows stalls generated later in the pipe to be deferred by one or more cycles in the earlier pipe stages, thereby allowing instruction fetches to proceed when the pipe is stalled, and also relieves stall speed paths in the instruction fetch and branch prediction units.

Deferred register dependency stalls. While register dependencies are checked in the RF stage, stalls due to these hazards are deferred until the X1 stage. All the necessary operands are then captured from result-forwarding busses as the results are returned to the register file.

One of the major goals of the design was to minimize the energy consumed to complete a given task. Conventional wisdom has been that shorter pipelines are more efficient due to re-

Example (from Spring 2003 EECS150 Lec10-Timing, page 7)

Parallel to serial converter (inputs a, b; clock clk):

$T \ge \text{time}(clk \to Q) + \text{time}(mux) + \text{time}(setup)$

$T \ge \tau_{clk \to Q} + \tau_{mux} + \tau_{setup}$

Clock frequency f and period T:

f          T
1 MHz      1 µs
10 MHz     100 ns
100 MHz    10 ns
1 GHz      1 ns

What is the smallest T that produces correct operation?

ARM processor Microarch


Timing Analysis and Logic Delay

If T > worst-case delay through CL, does this ensure correct operation?


General C/L Cell Delay Model (CS152 / Kubiatowicz, UCB Spring 2004, 1/28/04, Lec3.9)

Combinational Cell (symbol) is fully specified by:
- functional (input -> output) behavior: truth-table, logic equation, VHDL
- Input load factor of each input
- Propagation delay from each input to each output for each transition:
  $T_{HL}(A, o)$ = Fixed Internal Delay + Load-dependent-delay x load

Linear model composes

[Figure: combinational logic cell with inputs A, B, ... and output X driving Cout; plot of delay (Va -> Vout) versus Cout, with the intercept labeled "Internal Delay", the slope labeled "delay per unit load", and a point labeled Ccritical.]
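The linear model can be sketched in a few lines of Python. The `Cell` class and all delay and load numbers below are hypothetical, chosen only to show how a fixed internal delay plus a load-dependent term composes along a path.

```python
from dataclasses import dataclass

@dataclass
class Cell:
    internal_delay_ns: float       # fixed internal delay
    delay_per_unit_load_ns: float  # load-dependent delay per unit of load
    input_load: float              # load this cell presents on each of its inputs

    def delay(self, load: float) -> float:
        """Delay = fixed internal delay + load-dependent delay x load."""
        return self.internal_delay_ns + self.delay_per_unit_load_ns * load

# Hypothetical cells (illustrative values only).
inv = Cell(internal_delay_ns=0.10, delay_per_unit_load_ns=0.05, input_load=1.0)
nand = Cell(internal_delay_ns=0.15, delay_per_unit_load_ns=0.08, input_load=1.5)

# The inverter drives two NAND inputs; the NAND drives an external load of 4 units.
path_delay = inv.delay(2 * nand.input_load) + nand.delay(4.0)
print(f"path delay: {path_delay:.2f} ns")
```

Because each cell's delay depends only on the load it drives, the path delay is simply the sum of the per-cell delays, which is what "linear model composes" suggests.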

Storage Elements Timing Model (CS152 / Kubiatowicz, Lec3.10)

[Figure: storage element with input D, output Q, and clock Clk. Timing diagram: D is "Don't Care" outside the Setup and Hold window around the clock edge; Q is Unknown until Clock-to-Q after the edge.]

Setup Time: Input must be stable BEFORE trigger clock edge

Hold Time: Input must REMAIN stable after trigger clock edge

Clock-to-Q time:
- Output cannot change instantaneously at the trigger clock edge
- Similar to delay in logic gates, two components:
  - Internal Clock-to-Q
  - Load dependent Clock-to-Q
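The setup and hold requirements amount to a window around the clock edge in which D must not change. A minimal sketch of that check follows, assuming a single clock edge and a list of times at which D changes; the function name and all numbers are hypothetical.

```python
def violates_setup_or_hold(data_change_times, clock_edge, t_setup, t_hold):
    """Return True if D changes inside the window (edge - setup, edge + hold)."""
    window_start = clock_edge - t_setup
    window_end = clock_edge + t_hold
    return any(window_start < t < window_end for t in data_change_times)

# Hypothetical flip-flop: clock edge at 10.0 ns, setup 0.5 ns, hold 0.2 ns.
EDGE, SETUP, HOLD = 10.0, 0.5, 0.2

print(violates_setup_or_hold([3.0, 9.0], EDGE, SETUP, HOLD))  # changes well before the window
print(violates_setup_or_hold([9.8], EDGE, SETUP, HOLD))       # change inside the setup window
print(violates_setup_or_hold([10.1], EDGE, SETUP, HOLD))      # change inside the hold window
```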

Clocking Methodology (CS152 / Kubiatowicz, Lec3.11)

[Figure: blocks of combination logic between storage elements, all clocked by Clk.]

All storage elements are clocked by the same clock edge

The combination logic blocks:
- Inputs are updated at each clock tick
- All outputs MUST be stable before the next clock tick

Critical Path & Cycle Time (CS152 / Kubiatowicz, Lec3.12)

[Figure: combination logic between storage elements clocked by Clk.]

Critical path: the slowest path between any two storage devices

Cycle time is a function of the critical path and must be greater than:

Clock-to-Q + Longest Path through Combination Logic + Setup
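A minimal numeric sketch of this bound, assuming every register shares the same clock-to-Q and setup times; the path delays are hypothetical values in nanoseconds.

```python
def min_cycle_time(clk_to_q, logic_path_delays, setup):
    """Clock-to-Q + longest path through combination logic + setup."""
    return clk_to_q + max(logic_path_delays) + setup

# Hypothetical register-to-register logic delays, in ns.
t_min = min_cycle_time(clk_to_q=0.3, logic_path_delays=[1.2, 2.5, 1.9], setup=0.2)
f_max_mhz = 1e3 / t_min  # a period in ns corresponds to 1000/period MHz

print(f"minimum cycle time: {t_min:.2f} ns")
print(f"maximum clock rate: {f_max_mhz:.0f} MHz")
```

Only the 2.5 ns path matters here; shortening the other two paths would not allow a faster clock.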


Limitations on Clock Rate

1 Logic Gate Delay

2 Delays in flip-flops

What must happen in one clock cycle for correct operation?

All signals connected to FF (or memory) inputs must be

ready and setup before rising edge of clock.

For now we assume perfect clock distribution (all flip-flops

see the clock at the same time).


What are typical delay values?

Both times contribute to

limiting the clock period.


Example

Parallel to serial converter circuit (inputs a, b; clock clk):

$T \ge \text{time}(clk \to Q) + \text{time}(mux) + \text{time}(setup)$

$T \ge \tau_{clk \to Q} + \tau_{mux} + \tau_{setup}$

In General ...

For correct operation:

$T \ge \tau_{clk \to Q} + \tau_{CL} + \tau_{setup}$

for all paths.

How do we enumerate all paths?

Any circuit input or register output to any register input or

circuit output?

Note:

setup time for outputs is a function of what it connects to.

clk-to-q for circuit inputs depends on where it comes from.
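One way to picture "all paths" is as a walk over the circuit graph from every start point (circuit input or register output) to every end point (register input or circuit output). The sketch below uses a small hypothetical netlist; the node names, gate delays, per-source launch delays, and per-destination setup times are all invented for illustration.

```python
# Hypothetical netlist (all names and delays are illustrative, in ns).
FANOUT = {
    "regA.Q": ["g1"],
    "in0": ["g1", "g2"],
    "g1": ["g2", "regB.D"],
    "g2": ["regB.D", "out0"],
}
GATE_DELAY = {"g1": 0.4, "g2": 0.3}
# clk-to-q of the source; for a circuit input it depends on where the input comes from.
LAUNCH = {"regA.Q": 0.3, "in0": 0.5}
# Setup of the destination; for a circuit output it depends on what it connects to.
CAPTURE = {"regB.D": 0.2, "out0": 0.4}

def all_paths(node):
    """Yield every path from node to an end point (register input or output)."""
    if node in CAPTURE:
        yield [node]
        return
    for nxt in FANOUT[node]:
        for rest in all_paths(nxt):
            yield [node] + rest

def required_period(path):
    """Launch delay + combinational logic delay + setup for one path."""
    logic = sum(GATE_DELAY.get(n, 0.0) for n in path)
    return LAUNCH[path[0]] + logic + CAPTURE[path[-1]]

paths = [p for start in LAUNCH for p in all_paths(start)]
min_period = max(required_period(p) for p in paths)
worst = max(paths, key=required_period)

print(f"{len(paths)} paths, T must be at least {min_period:.1f} ns")
print("limiting path:", " -> ".join(worst))
```

Explicit enumeration like this grows quickly with circuit size, which is one reason the search is normally left to tools.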


CMOS Delay: Transistors as water valves

If electrons are water molecules,

and a capacitor a bucket ...

An "on" p-FET fills up the capacitor with charge.

Delay Model: CMOS (CS152 / Kubiatowicz, UCB Spring 2004, 1/28/04, Lec3.29)

Review: General C/L Cell Delay Model (Lec3.30)

Combinational Cell (symbol) is fully specified by:
- functional (input -> output) behavior: truth-table, logic equation, VHDL
- load factor of each input
- critical propagation delay from each input to each output for each transition:
  $T_{HL}(A, o)$ = Fixed Internal Delay + Load-dependent-delay x load

Linear model composes

Basic Technology: CMOS (Lec3.31)

CMOS: Complementary Metal Oxide Semiconductor
- NMOS (N-Type Metal Oxide Semiconductor) transistors
- PMOS (P-Type Metal Oxide Semiconductor) transistors

NMOS Transistor (Vdd = 5V, GND = 0V)
- Apply a HIGH (Vdd) to its gate: turns the transistor into a conductor
- Apply a LOW (GND) to its gate: shuts off the conduction path

PMOS Transistor (Vdd = 5V, GND = 0V)
- Apply a HIGH (Vdd) to its gate: shuts off the conduction path
- Apply a LOW (GND) to its gate: turns the transistor into a conductor

Basic Components: CMOS Inverter (Lec3.32)

[Figure: inverter symbol (In, Out) and circuit (PMOS connected to Vdd, NMOS below it). Inverter operation: with the NMOS open, the PMOS charges Out; with the PMOS open, the NMOS discharges Out.]
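The switch behavior described above can be modeled at the logic level. The sketch below treats each transistor as an ideal switch, an assumption that ignores all analog behavior. The NAND arrangement (parallel PMOS pull-up, series NMOS pull-down) is the standard static CMOS structure and is added here for illustration.

```python
def nmos_conducts(gate: int) -> bool:
    """An NMOS transistor conducts when its gate is HIGH."""
    return gate == 1

def pmos_conducts(gate: int) -> bool:
    """A PMOS transistor conducts when its gate is LOW."""
    return gate == 0

def inverter(a: int) -> int:
    pull_up = pmos_conducts(a)    # PMOS connects Out to Vdd (charge)
    pull_down = nmos_conducts(a)  # NMOS connects Out to GND (discharge)
    assert pull_up != pull_down   # exactly one network conducts
    return 1 if pull_up else 0

def nand2(a: int, b: int) -> int:
    pull_up = pmos_conducts(a) or pmos_conducts(b)     # PMOS in parallel
    pull_down = nmos_conducts(a) and nmos_conducts(b)  # NMOS in series
    assert pull_up != pull_down
    return 1 if pull_up else 0

for a in (0, 1):
    print(f"NOT {a} = {inverter(a)}")
for a in (0, 1):
    for b in (0, 1):
        print(f"{a} NAND {b} = {nand2(a, b)}")
```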

An "on" n-FET empties the bucket.


Gate Switching Behavior (from Spring 2003 EECS150 Lec10-Timing, page 10)

[Figure: water level versus time for an inverter and a NAND gate, shown for both the 0-to-1 and the 1-to-0 output transitions.]

This model is often good enough ...
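One common way to put numbers on the bucket picture is a first-order RC model, which the slides do not spell out: the "on" transistor is treated as a resistor R filling a capacitor C. The sketch below assumes that model; the resistance and capacitance values are hypothetical.

```python
import math

def charge_level(t, r_ohm, c_farad, vdd=1.0):
    """Capacitor voltage at time t when charging from 0 toward vdd through R."""
    return vdd * (1.0 - math.exp(-t / (r_ohm * c_farad)))

def time_to_threshold(r_ohm, c_farad, fraction=0.5):
    """Time for the output to reach the given fraction of vdd."""
    return -r_ohm * c_farad * math.log(1.0 - fraction)

# Hypothetical values: 10 kOhm effective resistance, 10 fF load.
R, C = 10e3, 10e-15
t50 = time_to_threshold(R, C)

print(f"RC time constant: {R * C * 1e12:.0f} ps")
print(f"time to reach 50% of Vdd: {t50 * 1e12:.1f} ps")
```

In this model, doubling the load capacitance doubles the time to reach the switching threshold, which matches the intuition of a larger bucket taking longer to fill.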

Transistors as Conductors

Improved Transistor Model:

nFET

We refer to transistor "strength" as

the amount of current that flows for

a given Vds and Vgs.

The strength is linearly proportional

to the ratio of W/L.

pFET


Gate Delay is the Result of Cascading

Cascaded gates:

transfer curve for inverter.


Delay in Flip-flops

Setup time results from delay through first latch.

Clock to Q delay results from delay through second latch.

[Figure: flip-flop built from two latches in series, each controlled by clk.]

Wire Delay

Ideally, wires behave as

transmission lines:

signal wave-front moves close

to the speed of light

~1ft/ns

Time from source to

destination is called the

transit time.

In ICs most wires are short,

and the transit times are

relatively short compared to

the clock period and can be

ignored.

Not so on PC boards.
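A quick back-of-the-envelope check of this claim, using the ~1 ft/ns figure above. The wire lengths and the 1 GHz clock are hypothetical examples.

```python
FT_PER_NS = 1.0     # signal speed from the slide: roughly 1 ft per ns
MM_PER_FT = 304.8   # unit conversion

def transit_time_ns(length_ft: float) -> float:
    return length_ft / FT_PER_NS

def fraction_of_period(length_ft: float, clock_mhz: float) -> float:
    period_ns = 1e3 / clock_mhz
    return transit_time_ns(length_ft) / period_ns

# Hypothetical wires: a 1 mm on-chip wire and a 6 inch PC board trace, 1 GHz clock.
chip_wire = fraction_of_period(1.0 / MM_PER_FT, clock_mhz=1000.0)
board_trace = fraction_of_period(0.5, clock_mhz=1000.0)

print(f"1 mm on-chip wire: {chip_wire:.2%} of the clock period")
print(f"6 inch board trace: {board_trace:.0%} of the clock period")
```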


Wire Delay

Even in those cases where the

transmission line effect is

negligible:

Wires possess distributed

resistance and capacitance

Time constant associated with

distributed RC is proportional

to the square of the length

For short wires on ICs,

resistance is insignificant

(relative to effective R of

transistors), but C is important.

Typically around half of C of

gate load is in the wires.

For long wires on ICs:

busses, clock lines, global

control signal, etc.

Resistance is significant,

therefore distributed RC effect

dominates.

signals are typically rebuffered to reduce delay.

[Figure: a wire with nodes v1, v2, v3, v4 along its length, and the waveforms of v1 through v4 versus time.]
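The benefit of rebuffering follows from the square-law dependence on length. The sketch below assumes a simplified model in which wire delay is `k * length**2` and each inserted buffer adds a fixed delay; the constant `k`, the buffer delay, and the wire length are hypothetical, and buffer drive resistance and input capacitance are ignored.

```python
def unbuffered_delay(length_mm: float, k: float) -> float:
    """Distributed RC delay, proportional to the square of the length."""
    return k * length_mm ** 2

def rebuffered_delay(length_mm: float, k: float, n_segments: int, t_buf: float) -> float:
    """Wire split into equal segments with a buffer between adjacent segments."""
    seg = length_mm / n_segments
    return n_segments * k * seg ** 2 + (n_segments - 1) * t_buf

# Hypothetical numbers: k = 0.05 ns/mm^2, 10 mm wire, 0.1 ns per buffer.
K, LENGTH, T_BUF = 0.05, 10.0, 0.1

print(f"unbuffered: {unbuffered_delay(LENGTH, K):.2f} ns")
for n in (2, 4, 8):
    print(f"{n} segments: {rebuffered_delay(LENGTH, K, n, T_BUF):.2f} ns")
```

Splitting the wire turns one quadratic term into several much smaller ones, at the cost of the added buffer delays, so there is a point beyond which more buffers stop helping.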

Delay and Fan-out

The delay of a gate is proportional to its output capacitance.

Connecting the output of a gate to more than one other gate increases

its output capacitance. It takes increasingly longer for the output of

a gate to reach the switching threshold of the gates it drives as we

add more output connections.

Driving wires also contributes to fan-out delay.

What can be done to remedy this problem in large fan-out situations?
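To make the fan-out effect concrete, the sketch below reuses a linear delay model (fixed delay plus a per-load term) and compares driving 16 loads directly with driving them through one level of buffers. Inserting buffers is one commonly used remedy and is offered here as an assumption, not as the lecture's stated answer. All delay values are hypothetical.

```python
def gate_delay(fanout: int, t_internal: float = 0.1, t_per_load: float = 0.05) -> float:
    """Linear model: fixed internal delay plus a term proportional to fan-out (ns)."""
    return t_internal + t_per_load * fanout

# One gate driving 16 loads directly.
direct = gate_delay(16)

# The same gate driving 4 buffers, each of which drives 4 of the loads.
# Buffers are assumed to have the same delay parameters as the gate.
buffered = gate_delay(4) + gate_delay(4)

print(f"direct drive of 16 loads: {direct:.2f} ns")
print(f"two levels, fan-out of 4: {buffered:.2f} ns")
```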


Critical Path

Critical Path: the path in the entire design with the maximum

delay.

This could be from state element to state element, or from

input to state element, or state element to output, or from input

to output (unregistered paths).

For example, what is the critical path in this circuit?

Why do we care about the critical path?


Components of Path Delay

1. # of levels of logic

2. Internal cell delay

3. wire delay

4. cell input capacitance

5. cell fanout

6. cell output drive strength


Who controls the delay?

                          | Silicon foundry engineer | Cell library developer, FPGA-chip designer | CAD tools (logic synthesis, place and route) | Designer (you)
1. # of levels            |                          |                                            | synthesis                                    | RTL
2. Internal cell delay    | physical parameters      | cell topology, trans sizing                | cell selection                               |
3. Wire delay             | physical parameters      |                                            | place & route                                | layout generator
4. Cell input capacitance | physical parameters      | cell topology, trans sizing                | cell selection                               |
5. Cell fanout            |                          |                                            | synthesis                                    | RTL
6. Cell drive strength    | physical parameters      | transistor sizing                          | cell selection                               | instantiation (ASIC)

Searching for processor critical path

Must consider all connected register pairs, paths from input to register, register to output. Don't forget the controller.

Design tools help in the search.

Synthesis tools report delays on paths,

Special static timing analyzers accept

a design netlist and report path delays,

and, of course, simulators can be used

to determine timing performance.

Tools that are expected to do something

about the timing behavior (such as

synthesizers), also include provisions for

specifying input arrival times (relative to the

clock), and output requirements (set-up

times of next stage).
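The kind of report such tools produce can be sketched as a slack calculation: how much earlier than required the data arrives at each end point. The function and the path data below are hypothetical and do not reflect the interface of any particular tool.

```python
def setup_slack(input_arrival, logic_delay, clock_period, setup_time):
    """Slack = required arrival time - actual arrival time (negative means failing)."""
    data_arrival = input_arrival + logic_delay
    required = clock_period - setup_time
    return required - data_arrival

CLOCK_PERIOD = 5.0  # ns, hypothetical

# (path name, arrival at path start relative to the clock, logic delay, setup at end), in ns.
PATHS = [
    ("in_a -> reg1", 1.0, 2.5, 0.2),
    ("reg1 -> reg2", 0.3, 4.6, 0.2),
    ("reg2 -> out_y", 0.3, 1.0, 1.5),
]

report = sorted(
    ((name, setup_slack(arr, dly, CLOCK_PERIOD, su)) for name, arr, dly, su in PATHS),
    key=lambda item: item[1],
)
for name, slack in report:
    status = "VIOLATED" if slack < 0 else "met"
    print(f"{name:14s} slack {slack:+.2f} ns  {status}")
```

Here the start arrival time plays the role of an input arrival time (or clock-to-Q for a register), and the end setup value plays the role of an output requirement, matching the two kinds of constraints mentioned above.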


Real Stuff: Timing Analysis

From The circuit and physical design of the POWER4 microprocessor, IBM J Res and Dev, 46:1, Jan 2002, J.D. Warnock et al.

netlist. Of these, 121713 were top-level chip global nets, and 21711 were processor-core-level global nets. Against this model 3.5 million setup checks were performed in late mode at points where clock signals met data signals in latches or dynamic circuits. The total number of timing checks of all types performed in each chip run was 9.8 million. Depending on the configuration of the timing run and the mix of actual versus estimated design data, the amount of real memory required was in the range of 12 GB to 14 GB, with run times of about 5 to 6 hours to the start of timing-report generation on an RS/6000* Model S80 configured with 64 GB of real memory. Approximately half of this time was taken up by reading in the netlist, timing rules, and extracted RC networks, as well as building and initializing the internal data structures for the timing model. The actual static timing analysis typically took 2.5-3 hours. Generation of the entire complement of reports and analysis required an additional 5 to 6 hours to complete. A total of 1.9 GB of timing reports and analysis were generated from each chip timing run. This data was broken down, analyzed, and organized by processor core and GPS, individual unit, and, in the case of timing contracts, by unit and macro. This was one component of the 24-hour-turnaround time achieved for the chip-integration design cycle. Figure 26 shows the results of iterating this process: A histogram of the final nominal path delays obtained from static timing for the POWER4 processor.

The POWER4 design includes LBIST and ABIST (Logic/Array Built-In Self-Test) capability to enable full-frequency ac testing of the logic and arrays. Such testing on pre-final POWER4 chips revealed that several circuit macros ran slower than predicted from static timing. The speed of the critical paths in these macros was increased in the final design. Typical fast ac LBIST laboratory test results measured on POWER4 after these paths were improved are shown in Figure 27.

Summary

The 174-million-transistor 1.3-GHz POWER4 chip, containing two microprocessor cores and an on-chip memory subsystem, is a large, complex, high-frequency chip designed by a multi-site design team. The performance and schedule goals set at the beginning of the project were met successfully. This paper describes the circuit and physical design of POWER4, emphasizing aspects that were important to the project's success in the areas of design methodology, clock distribution, circuits, power, integration, and timing.

Figure 25: POWER4 timing flow. This process was iterated daily during the physical design phase to close timing.

[Flow diagram. Labels recoverable from the diagram: core or chip wiring; extraction (Dracula, Harmony); VIM; Chipbench / EinsTimer (timer files, reports, asserts); non-uplift timing; capacitance adjust; uplift analysis; noise impact on timing; Spice; GL/1; reports; analysis/update (wires, buffers). Turnaround labels: < 12 hr (three times), < 24 hr, < 48 hr.
Notes: executed 2-3 months prior to tape-out; fully extracted data from routed designs; hierarchical extraction; custom logic handled separately.
Extraction done for (early, late): extracted units (flat or hierarchical); incrementally extracted RLMs; custom NDRs; VIMs.]

Figure 26: Histogram of the POWER4 processor path delays.

[Plot: late-mode timing checks (thousands), 0 to 200, versus timing slack (ps), -40 to 280.]

Most paths have hundreds of

picoseconds to spare.

The critical path


Clock Skew

Unequal delay in distribution of the clock signal to various parts of

a circuit:

if not accounted for, can lead to erroneous behavior.

Comes about if:

clock wires have different delay,

circuit is designed with a different number of clock buffers from

the clock source to the various clock loads, or

buffers have unequal delay.

All synchronous circuits experience some clock skew:

more of an issue for high-performance designs operating with very

little extra time per clock cycle.

clock skew, delay in distribution


Clock Skew (cont.)

If clock period $T = T_{CL} + T_{setup} + T_{clk \to Q}$, circuit will fail.

Therefore:

1. Control clock skew

a) Careful clock distribution. Equalize path delay from clock source to all clock loads by controlling wire delay and buffer delay.

b) Don't gate clocks in a non-uniform way.

2. $T \ge T_{CL} + T_{setup} + T_{clk \to Q}$ + worst case skew.

Most modern large high-performance chips (microprocessors) control end to end clock skew to a small fraction of the clock period.

[Figure: two registers separated by CL, with a buffer in the clock path between them; CLK and delayed CLK waveforms show the clock skew (delay in distribution).]
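A small numeric sketch of the skew-adjusted bound, treating the worst-case skew as time lost from every cycle. The delay values are hypothetical, in nanoseconds.

```python
def min_period(t_cl, t_setup, t_clk_q, worst_skew=0.0):
    """T >= T_CL + T_setup + T_clkQ + worst-case skew."""
    return t_cl + t_setup + t_clk_q + worst_skew

def meets_timing(period, t_cl, t_setup, t_clk_q, worst_skew=0.0):
    return period >= min_period(t_cl, t_setup, t_clk_q, worst_skew)

# Hypothetical stage: 2.5 ns logic, 0.2 ns setup, 0.3 ns clock-to-Q, 0.15 ns skew.
T_CL, T_SETUP, T_CLKQ, SKEW = 2.5, 0.2, 0.3, 0.15

print(f"bound without skew: {min_period(T_CL, T_SETUP, T_CLKQ):.2f} ns")
print(f"bound with skew:    {min_period(T_CL, T_SETUP, T_CLKQ, SKEW):.2f} ns")
print("3.0 ns clock ok with skew?", meets_timing(3.0, T_CL, T_SETUP, T_CLKQ, SKEW))
print("3.2 ns clock ok with skew?", meets_timing(3.2, T_CL, T_SETUP, T_CLKQ, SKEW))
```

A period chosen with no margin beyond the three delay terms fails once any such skew is present, which is the failure case the slide describes.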

Clock Skew (cont.)

Note reversed buffer.

In this case, clock skew actually provides extra time (adds to

the effective clock period).

This effect has been used to help run circuits at higher clock rates. Risky business!

[Figure: two registers separated by CL, with the clock buffer reversed; CLK and delayed CLK waveforms show the clock skew (delay in distribution).]

1600 IEEE JOURNAL OF SOLID-STATE CIRCUITS, VOL. 36, NO. 11, NOVEMBER 2001

Fig. 1. Process SEM cross section.

The process was raised from [1] to limit standby power.

Circuit design and architectural pipelining ensure low voltage

performance and functionality. To further limit standby current

in handheld ASSPs, a longer poly target takes advantage of the

versus dependence and source-to-body bias is used

to electrically limit transistor in standby mode. All core

nMOS and pMOS transistors utilize separate source and bulk

connections to support this. The process includes cobalt disili-

cide gates and diffusions. Low source and drain capacitance, as

well as 3-nm gate-oxide thickness, allow high performance and

low-voltage operation.

III. ARCHITECTURE

The microprocessor contains 32-kB instruction and data

caches as well as an eight-entry coalescing writeback buffer.

The instruction and data cache fill buffers have two and four

entries, respectively. The data cache supports hit-under-miss

operation and lines may be locked to allow SRAM-like oper-

ation. Thirty-two-entry fully associative translation lookaside

buffers (TLBs) that support multiple page sizes are provided

for both caches. TLB entries may also be locked. A 128-entry

branch target buffer improves branch performance a pipeline

deeper than earlier high-performance ARM designs [2], [3].

A. Pipeline Organization

To obtain high performance, the microprocessor core utilizes a simple scalar pipeline and a high-frequency clock. In addition to avoiding the potential power waste of a superscalar approach, functional design and validation complexity is decreased at the expense of circuit design effort. To avoid circuit design issues, the pipeline partitioning balances the workload and ensures that no one pipeline stage is tight. The main integer pipeline is seven stages, memory operations follow an eight-stage pipeline, and when operating in thumb mode an extra pipe stage is inserted after the last fetch stage to convert thumb instructions into ARM instructions. Since thumb mode instructions [11] are 16 b, two instructions are fetched in parallel while executing thumb instructions. A simplified diagram of the processor pipeline is shown in Fig. 2, where the state boundaries are indicated by gray. Features that allow the microarchitecture to achieve high speed are as follows.

Fig. 2. Microprocessor pipeline organization.

The shifter and ALU reside in separate stages. The ARM instruction set allows a shift followed by an ALU operation in a single instruction. Previous implementations limited frequency by having the shift and ALU in a single stage. Splitting this operation reduces the critical ALU bypass path by approximately 1/3. The extra pipeline hazard introduced when an instruction is immediately followed by one requiring that the result be shifted is infrequent.
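
The trade-off described above can be put into rough numbers using the relation from earlier in the lecture (execution time = # instructions x CPI x clock period). The sketch below is illustrative only: it assumes the ALU bypass path sets the cycle time and that baseline CPI is 1. The 5% hazard frequency and one-cycle stall penalty are hypothetical values, not figures from the paper.

```python
def split_stage_speedup(path_reduction=1/3, hazard_fraction=0.05, stall_cycles=1):
    """Estimate speedup from splitting shift and ALU into separate stages.

    path_reduction: fractional cut in the critical path (about 1/3 per the text).
    hazard_fraction: assumed share of instructions hitting the new hazard (hypothetical).
    stall_cycles: assumed stall penalty per hazard, in cycles (hypothetical).
    """
    # Assumption: the clock period scales with the critical path length.
    relative_period = 1.0 - path_reduction
    # Assumption: baseline CPI is 1; each hazard adds stall_cycles.
    relative_cpi = 1.0 + hazard_fraction * stall_cycles
    # Time = instructions x CPI x period, so speedup is the inverse ratio.
    return 1.0 / (relative_period * relative_cpi)


if __name__ == "__main__":
    print(f"no hazards: {split_stage_speedup(hazard_fraction=0.0):.2f}x")
    print(f"5% hazards: {split_stage_speedup():.2f}x")
```

Under these assumptions the shorter cycle wins as long as the hazard stays rare, which is consistent with the paper's remark that the hazard is infrequent.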

Decoupled instruction fetch. A two-instruction deep queue is implemented between the second fetch and instruction decode pipe stages. This allows stalls generated later in the pipe to be deferred by one or more cycles in the earlier pipe stages, thereby allowing instruction fetches to proceed when the pipe is stalled, and also relieves stall speed paths in the instruction fetch and branch prediction units.

Deferred register dependency stalls. While register dependencies are checked in the RF stage, stalls due to these hazards are deferred until the X1 stage. All the necessary operands are then captured from result-forwarding busses as the results are returned to the register file.

One of the major goals of the design was to minimize the energy consumed to complete a given task. Conventional wisdom has been that shorter pipelines are more efficient due to re-

Real Stuff: Floorplanning Intel XScale 80200


the total wire delay is similar to the total buffer delay. A patented tuning algorithm [16] was required to tune the more than 2000 tunable transmission lines in these sector trees to achieve low skew, visualized as the flatness of the grid in the 3D visualizations. Figure 8 visualizes four of the 64 sector trees containing about 125 tuned wires driving 1/16th of the clock grid. While symmetric H-trees were desired, silicon and wiring blockages often forced more complex tree structures, as shown. Figure 8 also shows how the longer wires are split into multiple-fingered transmission lines interspersed with Vdd and ground shields (not shown) for better inductance control [17, 18]. This strategy of tunable trees driving a single grid results in low skew among any of the 15200 clock pins on the chip, regardless of proximity.

From the global clock grid, a hierarchy of short clock routes completed the connection from the grid down to the individual local clock buffer inputs in the macros. These clock routing segments included wires at the macro level from the macro clock pins to the input of the local clock buffer, wires at the unit level from the macro clock pins to the unit clock pins, and wires at the chip level from the unit clock pins to the clock grid.

Design methodology and results

This clock-distribution design method allows a highly productive combination of top-down and bottom-up design perspectives, proceeding in parallel and meeting at the single clock grid, which is designed very early. The trees driving the grid are designed top-down, with the maximum wire widths contracted for them. Once the contract for the grid had been determined, designers were insulated from changes to the grid, allowing necessary adjustments to the grid to be made for minimizing clock skew even at a very late stage in the design process. The macro, unit, and chip clock wiring proceeded bottom-up, with point tools at each hierarchical level (e.g., macro, unit, core, and chip) using contracted wiring to form each segment of the total clock wiring. At the macro level, short clock routes connected the macro clock pins to the local clock buffers. These wires were kept very short, and duplication of existing higher-level clock routes was avoided by allowing the use of multiple clock pins. At the unit level, clock routing was handled by a special tool, which connected the macro pins to unit-level pins, placed as needed in pre-assigned wiring tracks. The final connection to the fixed

Figure 6. Schematic diagram of global clock generation and distribution. [Diagram labels: PLL; Bypass; Reference clock in; Reference clock out; Clock distribution; Clock out.]

Figure 7. 3D visualization of the entire global clock network. The x and y coordinates are chip x, y, while the z axis is used to represent delay, so the lowest point corresponds to the beginning of the clock distribution and the final clock grid is at the top. Widths are proportional to tuned wire width, and the three levels of buffers appear as vertical lines. [Figure labels: Grid; Tuned sector trees; Sector buffers; Buffer level 2; Buffer level 1. Axes: x, y, Delay.]

Figure 8. Visualization of four of the 64 sector trees driving the clock grid, using the same representation as Figure 7. The complex sector trees and multiple-fingered transmission lines used for inductance control are visible at this scale. [Figure label: Multiple-fingered transmission line. Axes: x, y, Delay.]

J. D. WARNOCK ET AL., IBM J. RES. & DEV., VOL. 46, NO. 1, JANUARY 2002, p. 32

Clock Tree Delays, IBM Power CPU

Clock Tree Delays, IBM Power

clock grid was completed with a tool run at the chip level, connecting unit-level pins to the grid. At this point, the clock tuning and the bottom-up clock routing process still have a great deal of flexibility to respond rapidly to even late changes. Repeated practice routing and tuning were performed by a small, focused global clock team as the clock pins and buffer placements evolved to guarantee feasibility and speed the design process.

Measurements of jitter and skew can be carried out using the I/Os on the chip. In addition, approximately 100 top-metal probe pads were included for direct probing of the global clock grid and buffers. Results on actual POWER4 microprocessor chips show long-distance skews ranging from 20 ps to 40 ps (cf. Figure 9). This is improved from early test-chip hardware, which showed as much as 70 ps skew from across-chip channel-length variations [19]. Detailed waveforms at the input and output of each global clock buffer were also measured and compared with simulation to verify the specialized modeling used to design the clock grid. Good agreement was found. Thus, we have achieved a correct-by-design clock-distribution methodology. It is based on our design experience and measurements from a series of increasingly fast, complex server microprocessors. This method results in a high-quality global clock without having to use feedback or adjustment circuitry to control skews.
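
To see why skews of tens of picoseconds matter, the measured values can be dropped into the usual worst-case register-to-register constraint, T >= t_clk-to-q + t_logic + t_setup + t_skew. The sketch below is a rough illustration only: the clock-to-Q, logic, and setup delays are hypothetical placeholders, and only the 20, 40, and 70 ps skew figures come from the text.

```python
def min_clock_period_ps(t_clk_to_q, t_logic, t_setup, t_skew):
    """Worst-case minimum clock period (ps) for one register-to-register path."""
    # Skew is budgeted pessimistically: it is assumed to shorten the usable cycle.
    return t_clk_to_q + t_logic + t_setup + t_skew


def max_frequency_ghz(period_ps):
    """Convert a period in picoseconds to a frequency in GHz."""
    return 1000.0 / period_ps


if __name__ == "__main__":
    # Hypothetical path delays (ps); not taken from the paper.
    CLK_TO_Q, LOGIC, SETUP = 50, 600, 40
    for skew in (20, 40, 70):  # skews quoted in the text, in ps
        period = min_clock_period_ps(CLK_TO_Q, LOGIC, SETUP, skew)
        print(f"skew {skew:2d} ps -> period {period} ps, "
              f"{max_frequency_ghz(period):.3f} GHz")
```

With these placeholder delays, every picosecond of skew comes directly out of the time available for logic, which is why the reduction from 70 ps on early test chips is worth reporting.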

Circuit design

The cycle-time target for the processor was set early in the project and played a fundamental role in defining the pipeline structure and shaping all aspects of the circuit design as implementation proceeded. Early on, critical timing paths through the processor were simulated in detail in order to verify the feasibility of the design point and to help structure the pipeline for maximum performance. Based on this early work, the goal for the rest of the circuit design was to match the performance set during these early studies, with custom design techniques for most of the dataflow macros and logic synthesis for most of the control logic, an approach similar to that used previously [20]. Special circuit-analysis and modeling techniques were used throughout the design in order to allow full exploitation of all of the benefits of the IBM advanced SOI technology.

The sheer size of the chip, its complexity, and the number of transistors placed some important constraints on the design which could not be ignored in the push to meet the aggressive cycle-time target on schedule. These constraints led to the adoption of a primarily static-circuit design strategy, with dynamic circuits used only sparingly in SRAMs and other critical regions of the processor core. Power dissipation was a significant concern, and it was a key factor in the decision to adopt a predominantly static-circuit design approach. In addition, the SOI technology, including uncertainties associated with the modeling of the floating-body effect [21-23] and its impact on noise immunity [22, 24-27] and overall chip decoupling capacitance requirements [26], was another factor behind the choice of a primarily static design style. Finally, the size and logical complexity of the chip posed risks to meeting the schedule; choosing a simple, robust circuit style helped to minimize overall risk to the project schedule with most efficient use of CAD tool and design resources. The size and complexity of the chip also required rigorous testability guidelines, requiring almost all cycle boundary latches to be LSSD-compatible for maximum dc and ac test coverage.

Another important circuit design constraint was the limit placed on signal slew rates. A global slew rate limit equal to one third of the cycle time was set and enforced for all signals (local and global) across the whole chip. The goal was to ensure a robust design, minimizing the effects of coupled noise on chip timing and also minimizing the effects of wiring-process variability on overall path delay. Nets with poor slew also were found to be more sensitive to device process variations and modeling uncertainties, even where long wires and RC delays were not significant factors. The general philosophy was that chip cycle-time goals also had to include the slew-limit targets; it was understood from the beginning that the real hardware would function at the desired cycle time only if the slew-limit targets were also met.
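
The one-third rule above amounts to a simple per-net check. The following is a minimal sketch of such a check, not the tooling used on the chip: the function names, the net names, the 900 ps cycle time, and the slew values are all hypothetical, and slew is treated here as a transition time in picoseconds.

```python
def slew_limit_ps(cycle_time_ps):
    """Global slew limit: one third of the cycle time, as stated in the text."""
    return cycle_time_ps / 3.0


def slew_violations(cycle_time_ps, net_slews_ps):
    """Return the sorted names of nets whose slew exceeds the global limit."""
    limit = slew_limit_ps(cycle_time_ps)
    return sorted(name for name, slew in net_slews_ps.items() if slew > limit)


if __name__ == "__main__":
    # Hypothetical cycle time and per-net transition times, in ps.
    nets = {"alu_out": 250.0, "bypass_a": 320.0, "clk_en": 300.0}
    print("limit (ps):", slew_limit_ps(900.0))
    print("violations:", slew_violations(900.0, nets))
```

A net sitting exactly at the limit passes in this sketch; whether the real rule was strict or inclusive is not stated in the text.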

The following sections describe how these design constraints were met without sacrificing cycle time. The latch design is described first, including a description of the local clocking scheme and clock controls. Then the circuit design styles are discussed, including a description

Figure 9. Global clock waveforms showing 20 ps of measured skew. [Axes: Volts (V), 0.0 to 1.5, versus Time (ps), 0 to 2500. Annotation: 20 ps skew.]


Вам также может понравиться

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- Pharmeceutics IIIДокумент5 страницPharmeceutics III8148593856Оценок пока нет

- B-Form Data - 2016-17Документ2 страницыB-Form Data - 2016-178148593856100% (1)

- R FormДокумент23 страницыR FormChandradeep ReddyОценок пока нет

- Resultacio II Exe 231112Документ23 страницыResultacio II Exe 231112Sourabh SharmaОценок пока нет

- Medieval IndiaДокумент1 страницаMedieval India8148593856Оценок пока нет

- IES Syllabus For ETCДокумент5 страницIES Syllabus For ETCAnand ShankarОценок пока нет

- Medieval IndiaДокумент1 страницаMedieval India8148593856Оценок пока нет

- Indian Language and LiteratureДокумент1 страницаIndian Language and Literature8148593856Оценок пока нет

- Modern IndiaДокумент1 страницаModern India8148593856Оценок пока нет

- Ancient IndiaДокумент1 страницаAncient India8148593856Оценок пока нет

- Lesson 2 - Indian CultureLesson 2 - Indian Culture (58 KB)Документ9 страницLesson 2 - Indian CultureLesson 2 - Indian Culture (58 KB)Pradeep MeduriОценок пока нет

- Ancient India (160 KB) PDFДокумент26 страницAncient India (160 KB) PDFPraveen VermaОценок пока нет

- NEILIT Scientist-B MeitY 20160922Документ6 страницNEILIT Scientist-B MeitY 201609228148593856Оценок пока нет

- UPSC Engineering Exam NoticeДокумент25 страницUPSC Engineering Exam NoticeMohitSinhaОценок пока нет

- NITT Notice: M.Tech Students Must Reregister Courses by August 19thДокумент1 страницаNITT Notice: M.Tech Students Must Reregister Courses by August 19th8148593856Оценок пока нет

- BSPGCL Gate 2016Документ5 страницBSPGCL Gate 2016Ajay GoelОценок пока нет

- Form 2Документ2 страницыForm 2Sushant BakoreОценок пока нет

- BSPHCL 2016Документ2 страницыBSPHCL 20168148593856Оценок пока нет

- Euro 5 and Euro 6 Emissions Reg Light PassengercommvehiclesДокумент6 страницEuro 5 and Euro 6 Emissions Reg Light PassengercommvehiclesZvezdan DjurdjevicОценок пока нет

- Recruitment JE (ATC) AndJE (Electronics) 030915Документ11 страницRecruitment JE (ATC) AndJE (Electronics) 030915rajakumarrockzОценок пока нет

- WC Policy 2014Документ1 страницаWC Policy 20148148593856Оценок пока нет

- Writeup cgl2015Документ2 страницыWriteup cgl2015Nitin VashisthОценок пока нет

- Bluedart OfficeДокумент1 страницаBluedart Office8148593856Оценок пока нет

- Writeup cgl2015Документ2 страницыWriteup cgl2015Nitin VashisthОценок пока нет

- RH 2015Документ1 страницаRH 20158148593856Оценок пока нет

- April 2015 Current AffairsДокумент86 страницApril 2015 Current AffairsSagargn SagarОценок пока нет

- Model Question Paper NpcilДокумент19 страницModel Question Paper NpcilmanikandaprabhuОценок пока нет

- PH 2015Документ1 страницаPH 20158148593856Оценок пока нет

- 800xa Architecture OverviewДокумент12 страниц800xa Architecture OverviewmaulanayasirОценок пока нет

- BSNL Mobile Payment June 2014Документ1 страницаBSNL Mobile Payment June 2014ayush0403Оценок пока нет

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (119)

- A Capacitor-Free CMOS Low-Dropout Regulator With Damping-Factor-Control Frequency CompensationДокумент12 страницA Capacitor-Free CMOS Low-Dropout Regulator With Damping-Factor-Control Frequency Compensationtotoya38Оценок пока нет

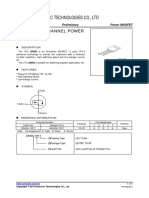

- Single N-Channel Trench MOSFET 30V, 100.0A, 2.9m : Features General DescriptionДокумент6 страницSingle N-Channel Trench MOSFET 30V, 100.0A, 2.9m : Features General DescriptionMatheus RizziОценок пока нет

- 25V 9.5mΩ N-Channel MOSFETДокумент5 страниц25V 9.5mΩ N-Channel MOSFETTankard_grОценок пока нет

- Isl95836 PDFДокумент33 страницыIsl95836 PDFKanthan KanthОценок пока нет

- Irjet V4i5125 PDFДокумент5 страницIrjet V4i5125 PDFAnonymous plQ7aHUОценок пока нет

- TN 2251Документ34 страницыTN 2251Pavan Singh TomarОценок пока нет

- Industrial Automation 1Документ41 страницаIndustrial Automation 1Chandan Chandan100% (2)

- A Voltage-Mode PWM Buck Regulator With End-Point PredictionДокумент5 страницA Voltage-Mode PWM Buck Regulator With End-Point PredictionWen DwenОценок пока нет

- RF Power Field Effect Transistors: N - Channel Enhancement - Mode Lateral MosfetsДокумент26 страницRF Power Field Effect Transistors: N - Channel Enhancement - Mode Lateral MosfetsJhonny ColmanОценок пока нет

- Rohini 37095079304Документ6 страницRohini 37095079304zga5v5Оценок пока нет

- University of Zimbabwe: Power Electronics and Motion Control: Ee420Документ10 страницUniversity of Zimbabwe: Power Electronics and Motion Control: Ee420shania msipaОценок пока нет

- UGC NET Electronic Science SyllabusДокумент3 страницыUGC NET Electronic Science Syllabuskumar HarshОценок пока нет

- CadenceДокумент129 страницCadenceSanjay NargundОценок пока нет

- Rutgers University, Department of Electrical and Computer Engineering Abet Course Syllabus COURSE: 14:332:366Документ3 страницыRutgers University, Department of Electrical and Computer Engineering Abet Course Syllabus COURSE: 14:332:366HUANG YIОценок пока нет

- Using DRV To Drive Solenoids - DRV8876/DRV8702-Q1/DRV8343-Q1Документ13 страницUsing DRV To Drive Solenoids - DRV8876/DRV8702-Q1/DRV8343-Q1cutoОценок пока нет

- 650V 7A N-Channel MOSFET SpecificationДокумент2 страницы650V 7A N-Channel MOSFET SpecificationAris RomdoniОценок пока нет

- Allen-Holberg - CMOS Analog Circuit DesignДокумент797 страницAllen-Holberg - CMOS Analog Circuit Designbsrathor75% (4)

- EE1303 Lab Manual: Power Electronics ExperimentsДокумент45 страницEE1303 Lab Manual: Power Electronics ExperimentsChaitanya SingumahantiОценок пока нет

- CMOS readout techniques for infrared focal-plane arraysДокумент12 страницCMOS readout techniques for infrared focal-plane arraysOmkar KatkarОценок пока нет

- EE5320 Analog IC Design Assignment - 3 Characterization and Amplifier DesignДокумент19 страницEE5320 Analog IC Design Assignment - 3 Characterization and Amplifier DesignSure AvinashОценок пока нет

- 1 1699487Документ14 страниц1 1699487SHUBHAM 20PHD0773Оценок пока нет

- Q 0765 RTДокумент22 страницыQ 0765 RTMark StewartОценок пока нет

- TOP204YAIДокумент16 страницTOP204YAIHuynh Ghi NaОценок пока нет

- Department of Electronics and Communication Engineering: Dhirajlal College of TechnologyДокумент5 страницDepartment of Electronics and Communication Engineering: Dhirajlal College of TechnologyRavi ChandranОценок пока нет

- 1513EДокумент28 страниц1513EMukesh ThakkarОценок пока нет

- Huayi-Hyg011n04ls1ta C2874971Документ9 страницHuayi-Hyg011n04ls1ta C2874971naridsonorio1Оценок пока нет

- 6N60 PDFДокумент7 страниц6N60 PDFRey TiburonОценок пока нет

- Unisonic Technologies Co., LTD: 20A, 500V N-Channel Power MosfetДокумент4 страницыUnisonic Technologies Co., LTD: 20A, 500V N-Channel Power MosfetMahmoud IssaОценок пока нет

- Ecen 3103Документ2 страницыEcen 3103gaurav kumarОценок пока нет