Ch7 Markov Chains Part II


241-460 Introduction to Queueing Networks: Engineering Approach

Chapter 7: Markov Chains

Assoc. Prof. Thossaporn Kamolphiwong
Centre for Network Research (CNR)
Department of Computer Engineering, Faculty of Engineering
Prince of Songkla University, Thailand
Email: kthossaporn@coe.psu.ac.th

Outline

Markov Chains Part II

Birth and Death Process

Transition Matrix

State Transition

Steady State

Flow-Based Approach

Example

Chapter 7 : Markov Chains


Birth-Death Process

A birth-death process is a special case of a Markov process in which transitions from state k are permitted only to the neighboring states k+1, k, and k-1.

[Figure: state transition diagram of a birth-death process]


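Birth-Death Process

A continuous-time Markov chain $\{X(t) \mid t \ge 0\}$ with state space $\{0, 1, 2, \dots\}$ is known as a birth-death process if there exist constants $\lambda_k$ ($k = 0, 1, \dots$) and $\mu_k$ ($k = 1, 2, \dots$) such that the transition rates are given by

$$q_{k,k+1} = \lambda_k, \qquad q_{k,k-1} = \mu_k, \qquad q_{kj} = 0 \ \text{for}\ |k-j| > 1, \qquad q_{kk} = -(\lambda_k + \mu_k)$$

The diagonal entry is minus the total rate of leaving state k, so each row of rates sums to zero. Over a short interval $\Delta t$, the corresponding probability of remaining in state k is $1-(\lambda_k+\mu_k)\Delta t$.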

Birth-Death Process Example

[Figure: state transition diagram with states 0, 1, 2, …, k-1, k and birth rates $\lambda_0, \lambda_1, \dots, \lambda_{k-1}$]

Birth-Death Process

Birth rate $\lambda_k$ is the rate at which births occur when the population is of size k.

Death rate $\mu_k$ is the rate at which deaths occur when the population is of size k.

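Transition Matrix

For a general continuous-time Markov chain, the transition rates form the matrix

$$Q = \begin{bmatrix} q_{00} & q_{01} & q_{02} & \cdots & q_{0k} & \cdots \\ q_{10} & q_{11} & q_{12} & \cdots & q_{1k} & \cdots \\ \vdots & \vdots & \vdots & & \vdots & \\ q_{k0} & q_{k1} & q_{k2} & \cdots & q_{kk} & \cdots \\ \vdots & \vdots & \vdots & & \vdots & \ddots \end{bmatrix}$$

For a birth-death process only the main diagonal and the two diagonals next to it are non-zero, so $Q$ is tridiagonal:

$$Q = \begin{bmatrix} -\lambda_0 & \lambda_0 & 0 & 0 & \cdots \\ \mu_1 & -(\lambda_1+\mu_1) & \lambda_1 & 0 & \cdots \\ 0 & \mu_2 & -(\lambda_2+\mu_2) & \lambda_2 & \cdots \\ 0 & 0 & \mu_3 & -(\lambda_3+\mu_3) & \cdots \\ \vdots & \vdots & \vdots & \vdots & \ddots \end{bmatrix}$$

Over a short interval $\Delta t$, the transition probability matrix is approximately $I + Q\,\Delta t$, whose diagonal entries are $1-\lambda_0\Delta t$, $1-(\lambda_1+\mu_1)\Delta t$, $1-(\lambda_2+\mu_2)\Delta t$, and so on.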
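To make the tridiagonal structure concrete, here is a minimal Python sketch that builds the generator of a birth-death chain truncated to states 0..N. The truncation to a finite N and the function name `birth_death_generator` are assumptions for illustration. The diagonal follows the generator convention $q_{kk} = -(\lambda_k + \mu_k)$, so every row sums to zero.

```python
from fractions import Fraction

def birth_death_generator(lam, mu):
    """Build the (N+1)x(N+1) generator Q of a birth-death chain on states 0..N.

    Hypothetical helper (assumed finite truncation at state N).
    lam[k] is the birth rate lambda_k for k = 0..N-1 (no births out of state N).
    mu[k-1] is the death rate mu_k for k = 1..N (no deaths out of state 0).
    """
    if len(mu) != len(lam):
        raise ValueError("need one death rate mu_k for each k = 1..N")
    n_states = len(lam) + 1
    Q = [[Fraction(0)] * n_states for _ in range(n_states)]
    for k in range(n_states):
        if k < n_states - 1:
            Q[k][k + 1] = Fraction(lam[k])      # birth: k -> k+1
        if k > 0:
            Q[k][k - 1] = Fraction(mu[k - 1])   # death: k -> k-1
        # diagonal = minus the total rate of leaving state k
        Q[k][k] = -sum(Q[k][j] for j in range(n_states) if j != k)
    return Q

# Example: lambda_0..lambda_2 = 1, 2, 3 and mu_1..mu_3 = 4, 5, 6
Q = birth_death_generator([1, 2, 3], [4, 5, 6])
for row in Q:
    print([str(x) for x in row])
```

Exact `Fraction` entries keep the zero row sums exact. A float list or NumPy array would work just as well for larger chains.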

State Distribution

[Figure: state transition diagram of the birth-death chain, states 0, 1, 2, …, k-1, k, k+1, …]

Let $X(t)$ be the number of customers in the system at time t, and let $P_k(t)$ be the probability of finding the system in state k at time t. Then

$$P_k(t) = P[X(t) = k]$$

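State Transition

Consider state k at time $t + \Delta t$. The system can be in state k at that time in one of three ways:

- there were k members in the population at time t and no state change occurred during $(t, t+\Delta t)$;
- there were k-1 members in the population at time t and one birth occurred during $(t, t+\Delta t)$;
- there were k+1 members in the population at time t and one death occurred during $(t, t+\Delta t)$.

Two or more events in a short interval have negligible probability, of order $o(\Delta t)$.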

State Transition

[Figure: paths into state k between time t and t + Δt: a death from state k+1, no change from state k, or a birth from state k-1]

$$P_k(t+\Delta t) = P_{k-1}(t)\,p_{k-1,k}(\Delta t) + P_k(t)\,p_{k,k}(\Delta t) + P_{k+1}(t)\,p_{k+1,k}(\Delta t)$$

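(Continued)

Let $\lambda_k$ be the birth rate in state k and $\mu_k$ the death rate in state k. Then, for a short interval $\Delta t$ (up to terms of order $o(\Delta t)$):

$$\begin{aligned}
P[\text{state } k \to \text{state } k-1 \text{ in } \Delta t] &= \mu_k \Delta t \\
P[\text{state } k \to \text{state } k+1 \text{ in } \Delta t] &= \lambda_k \Delta t \\
P[\text{state } k \to \text{state } k \text{ in } \Delta t] &= 1 - (\lambda_k + \mu_k)\Delta t \\
P[\text{state } k \to \text{any other state in } \Delta t] &= 0
\end{aligned}$$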

State Transition, k = 0

For k = 0 there is no state -1, and no death can occur in an empty system ($\mu_0 = 0$). State 0 can therefore be reached only from state 0 (no change) or from state 1 (a death):

$$\begin{aligned}
P_0(t+\Delta t) &= P_0(t)\,p_{00}(\Delta t) + P_1(t)\,p_{10}(\Delta t) \\
&= P_0(t)\,[1-(\lambda_0+\mu_0)\Delta t] + P_1(t)\,\mu_1\Delta t \\
&= P_0(t)\,[1-\lambda_0\Delta t] + P_1(t)\,\mu_1\Delta t \\
&= P_0(t) - \lambda_0\Delta t\,P_0(t) + \mu_1\Delta t\,P_1(t)
\end{aligned}$$

Rearranging:

$$\frac{P_0(t+\Delta t) - P_0(t)}{\Delta t} = -\lambda_0 P_0(t) + \mu_1 P_1(t)$$

(Continued), k ≥ 1

For k ≥ 1 all three paths are possible:

$$\begin{aligned}
P_k(t+\Delta t) &= P_k(t)\,p_{k,k}(\Delta t) + P_{k-1}(t)\,p_{k-1,k}(\Delta t) + P_{k+1}(t)\,p_{k+1,k}(\Delta t) \\
&= P_k(t)\,[1-(\lambda_k+\mu_k)\Delta t] + P_{k-1}(t)\,\lambda_{k-1}\Delta t + P_{k+1}(t)\,\mu_{k+1}\Delta t \\
&= P_k(t) - (\lambda_k+\mu_k)\Delta t\,P_k(t) + \lambda_{k-1}\Delta t\,P_{k-1}(t) + \mu_{k+1}\Delta t\,P_{k+1}(t)
\end{aligned}$$

Rearranging:

$$\frac{P_k(t+\Delta t) - P_k(t)}{\Delta t} = -(\lambda_k+\mu_k)P_k(t) + \lambda_{k-1}P_{k-1}(t) + \mu_{k+1}P_{k+1}(t)$$

(Continued)

Letting $\Delta t \to 0$:

$$\lim_{\Delta t \to 0} \frac{P_k(t+\Delta t) - P_k(t)}{\Delta t} = \frac{dP_k(t)}{dt}$$

The state probabilities therefore satisfy the differential equations

$$\frac{dP_0(t)}{dt} = -\lambda_0 P_0(t) + \mu_1 P_1(t), \qquad k = 0$$

$$\frac{dP_k(t)}{dt} = -(\lambda_k+\mu_k)P_k(t) + \lambda_{k-1}P_{k-1}(t) + \mu_{k+1}P_{k+1}(t), \qquad k \ge 1$$
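The differential equations $dP_0/dt = -\lambda_0 P_0 + \mu_1 P_1$ and $dP_k/dt = -(\lambda_k+\mu_k)P_k + \lambda_{k-1}P_{k-1} + \mu_{k+1}P_{k+1}$ can also be integrated numerically. The following minimal sketch applies forward-Euler steps of size `dt` on a chain truncated at state N. It assumes $\lambda_N = 0$, so no probability leaves the truncated state space. The function names, step size, and time horizon are illustrative choices, not part of the original notes. The rates are those of the gasoline-station example at the end of the chapter.

```python
def derivatives(P, lam, mu):
    """Right-hand side dP_k/dt of the birth-death equations (hypothetical helper).

    P has N+1 entries; lam[k] = lambda_k for k = 0..N-1, mu[k-1] = mu_k for k = 1..N.
    """
    N = len(P) - 1
    dP = [0.0] * (N + 1)
    for k in range(N + 1):
        birth_out = lam[k] if k < N else 0.0     # assumed truncation: lambda_N = 0
        death_out = mu[k - 1] if k > 0 else 0.0  # mu_0 = 0
        inflow = 0.0
        if k > 0:
            inflow += lam[k - 1] * P[k - 1]      # birth from state k-1
        if k < N:
            inflow += mu[k] * P[k + 1]           # death from state k+1 (rate mu_{k+1})
        dP[k] = inflow - (birth_out + death_out) * P[k]
    return dP

def integrate(P_init, lam, mu, t_end, dt=1e-3):
    """Forward-Euler integration from t = 0 to t_end."""
    P = list(P_init)
    for _ in range(round(t_end / dt)):
        dP = derivatives(P, lam, mu)
        P = [p + dt * d for p, d in zip(P, dP)]
    return P

# Start with an empty system; rates per hour as in the balking example.
lam = [20, 15, 10, 5]
mu = [20, 20, 20, 20]
P = integrate([1.0, 0, 0, 0, 0], lam, mu, t_end=5.0)
print([round(p, 4) for p in P], sum(P))
```

The derivatives always sum to zero, so total probability is conserved. As t grows, $P(t)$ settles at the steady-state solution, where every $dP_k/dt = 0$.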

Steady State

In steady state the probabilities no longer change with time:

$$\frac{dP_k(t)}{dt} = 0$$

Writing $P_k$ for the steady-state probabilities gives the balance equations

$$-\lambda_0 P_0 + \mu_1 P_1 = 0, \qquad k = 0$$

$$-(\lambda_k+\mu_k)P_k + \lambda_{k-1}P_{k-1} + \mu_{k+1}P_{k+1} = 0, \qquad k \ge 1$$

Steady State

Rearranging:

$$\mu_1 P_1 = \lambda_0 P_0, \qquad k = 0$$

$$\lambda_{k-1}P_{k-1} + \mu_{k+1}P_{k+1} = \lambda_k P_k + \mu_k P_k, \qquad k \ge 1$$

That is, for every state:

Flow rate into k = Flow rate out of k

Flow-Based Method

Flow rate into state k = $\lambda_{k-1}P_{k-1}(t) + \mu_{k+1}P_{k+1}(t)$

Flow rate out of state k = $(\lambda_k + \mu_k)P_k(t)$

Effective probability flow rate at k = Flow into state k - Flow out of state k, which equals $dP_k(t)/dt$.
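As a check on the flow-based view, the minimal sketch below computes the effective probability flow at each state, (flow in) minus (flow out), for a given distribution. In equilibrium every net flow should be zero. The inputs come from the gasoline-station example at the end of the chapter: $P_n = (32, 32, 24, 12, 3)/103$, with $\lambda_n = 20(1 - n/4)$ and $\mu_n = 20$. `net_flow` is a hypothetical helper name.

```python
from fractions import Fraction

def net_flow(P, lam, mu, k):
    """Flow into state k minus flow out of state k (hypothetical helper).

    lam[k] = lambda_k for k = 0..N-1; mu[k-1] = mu_k for k = 1..N.
    """
    N = len(P) - 1
    flow_in = Fraction(0)
    if k > 0:
        flow_in += lam[k - 1] * P[k - 1]   # births from state k-1
    if k < N:
        flow_in += mu[k] * P[k + 1]        # deaths from state k+1 (rate mu_{k+1})
    rate_out = (lam[k] if k < N else 0) + (mu[k - 1] if k > 0 else 0)
    return flow_in - rate_out * P[k]

lam = [20, 15, 10, 5]                       # lambda_n = 20(1 - n/4), n = 0..3
mu = [20, 20, 20, 20]                       # mu_n = 20 for n = 1..4
P = [Fraction(x, 103) for x in (32, 32, 24, 12, 3)]
print([net_flow(P, lam, mu, k) for k in range(len(P))])
```

For a distribution that is not in equilibrium, such as a uniform one, some net flows are non-zero. Those non-zero values are the rates at which the probabilities would still be changing.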

Flow-Based Approach

The flow-based approach solves for the equilibrium state probabilities directly from the state transition diagram. It is usable when the arrival process is a Poisson process and the service times are exponentially distributed, so that the system is a Markov chain.

Steps for the flow-based approach:

(1) Draw the state transition diagram.

(2) Draw closed boundaries and equate the flow across each boundary.

(3) Solve the equations in (2) to obtain the equilibrium state probability distribution.

Flow Balance Equations

- Draw a closed boundary around state k. Equating the total flow into and out of the boundary gives the global balance equation

$$\sum_{j \ne k} q_{jk} P_j = P_k \sum_{j \ne k} q_{kj}$$

- Draw a closed boundary between state k and state k+1. Equating the flow across it in each direction gives the detailed balance equation

$$q_{k,k+1} P_k = q_{k+1,k} P_{k+1}$$

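Detailed Balance Equation

For a birth-death process, $q_{k-1,k} = \lambda_{k-1}$ and $q_{k,k-1} = \mu_k$, so detailed balance leads to

$$\lambda_{k-1}P_{k-1} = \mu_k P_k, \qquad k = 1, 2, 3, \dots$$

Applying this repeatedly:

$$P_k = \frac{\lambda_{k-1}}{\mu_k}P_{k-1} = P_0 \prod_{i=0}^{k-1} \frac{\lambda_i}{\mu_{i+1}}, \qquad k = 1, 2, 3, \dots$$

The probabilities must sum to one, $\sum_{k=0}^{\infty} P_k = 1$, so the probability of the system being empty is

$$P_0 = \frac{1}{1 + \sum_{k=1}^{\infty} \prod_{i=0}^{k-1} \frac{\lambda_i}{\mu_{i+1}}}$$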
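The product-form solution translates directly into code. This is a minimal sketch for a chain with finitely many states 0..N. Finiteness is an assumption here: for an infinite chain the sum must converge and would have to be truncated or summed analytically. `steady_state` is a hypothetical name. As a sanity check, the example uses constant rates $\lambda_k = \lambda$, $\mu_k = \mu$. For these rates the product form reduces to $P_k = \rho^k P_0$ with $\rho = \lambda/\mu$, which gives $P_0 = (1-\rho)/(1-\rho^{N+1})$.

```python
from fractions import Fraction

def steady_state(lam, mu):
    """Equilibrium probabilities of a birth-death chain on states 0..N (sketch).

    Uses P_k = P_0 * prod_{i=0}^{k-1} lambda_i / mu_{i+1} and sum_k P_k = 1.
    lam[i] = lambda_i and mu[i] = mu_{i+1} for i = 0..N-1.
    """
    weights = [Fraction(1)]                  # P_k / P_0, starting with k = 0
    for lam_i, mu_next in zip(lam, mu):
        weights.append(weights[-1] * Fraction(lam_i) / Fraction(mu_next))
    p0 = 1 / sum(weights)                    # normalization gives P_0
    return [w * p0 for w in weights]

# Constant rates lambda = 1, mu = 2 on states 0..3, so rho = 1/2
P = steady_state([1, 1, 1], [2, 2, 2])
print(P[0])  # 8/15, matching (1 - 1/2) / (1 - (1/2)**4)
```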

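Pure Birth System

[Figure: states 0, 1, 2, …, k-1, k, k+1, … with every arc pointing to the right at rate $\lambda$]

Let

$$\mu_k = 0 \ \text{for all } k, \qquad \lambda_k = \lambda \ \text{for all } k = 0, 1, 2, \dots$$

The system begins at time 0 with 0 members:

$$P_k(0) = \begin{cases} 1, & k = 0 \\ 0, & k \ne 0 \end{cases}$$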

Pure Birth System

Substituting into the general equations

$$\frac{dP_k(t)}{dt} = -(\lambda_k+\mu_k)P_k(t) + \lambda_{k-1}P_{k-1}(t) + \mu_{k+1}P_{k+1}(t)$$

gives

$$\frac{dP_k(t)}{dt} = -\lambda P_k(t) + \lambda P_{k-1}(t), \qquad k \ge 1$$

$$\frac{dP_0(t)}{dt} = -\lambda P_0(t), \qquad k = 0$$

Solution for $P_0(t)$:

$$P_0(t) = e^{-\lambda t}$$

Pure Birth System

For k = 1:

$$\frac{dP_1(t)}{dt} = -\lambda P_1(t) + \lambda P_0(t) = -\lambda P_1(t) + \lambda e^{-\lambda t}$$

Solution: $P_1(t) = \lambda t\, e^{-\lambda t}$

Continuing in the same way (by induction on k), for $k \ge 0$, $t \ge 0$:

$$P_k(t) = \frac{(\lambda t)^k}{k!} e^{-\lambda t}$$

This is the Poisson distribution. $P_k(t)$ is the probability that k arrivals occur during the time interval (0, t), and $\lambda$ is the average rate at which customers arrive.
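The Poisson result can be checked numerically. The minimal sketch below integrates the pure-birth equations $dP_0/dt = -\lambda P_0$ and $dP_k/dt = -\lambda P_k + \lambda P_{k-1}$ with forward Euler and compares the result with $(\lambda t)^k e^{-\lambda t}/k!$. The values $\lambda = 2$ and $t = 1$, the truncation at K states, and the step size are arbitrary assumptions for illustration.

```python
import math

def pure_birth_euler(lam, t_end, K=30, dt=1e-4):
    """Forward-Euler solution of the pure-birth equations on states 0..K-1.

    Starts from P_0(0) = 1. Probability that would move past state K-1 is lost,
    which is negligible when K is well above lam * t_end (assumed truncation).
    """
    P = [1.0] + [0.0] * (K - 1)
    for _ in range(round(t_end / dt)):
        new = P[:]
        new[0] = P[0] - dt * lam * P[0]
        for k in range(1, K):
            new[k] = P[k] + dt * (-lam * P[k] + lam * P[k - 1])
        P = new
    return P

def poisson_pmf(k, lam, t):
    """(lam t)^k e^(-lam t) / k!"""
    return (lam * t) ** k * math.exp(-lam * t) / math.factorial(k)

lam, t = 2.0, 1.0
numeric = pure_birth_euler(lam, t)
for k in range(5):
    print(k, round(numeric[k], 4), round(poisson_pmf(k, lam, t), 4))
```

The two columns should agree to within the Euler discretization error, which shrinks in proportion to `dt`.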

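Pure Death System

[Figure: states 0, 1, 2, …, k-1, k, k+1, …, N with every arc pointing to the left at rate $\mu$]

Let

$$\mu_k = \mu \ \text{for all } k = 1, 2, \dots, N, \qquad \lambda_k = 0 \ \text{for all } k$$

The system begins with N members, so $P_N(0) = 1$.

Substituting into the general equations gives

$$\frac{dP_k(t)}{dt} = -\mu P_k(t) + \mu P_{k+1}(t), \qquad 0 < k < N$$

$$\frac{dP_N(t)}{dt} = -\mu P_N(t), \qquad k = N$$

$$\frac{dP_0(t)}{dt} = \mu P_1(t), \qquad k = 0$$

Solution for $P_k(t)$:

$$P_k(t) = \frac{(\mu t)^{N-k}}{(N-k)!} e^{-\mu t}, \qquad 0 < k \le N$$

$$\frac{dP_0(t)}{dt} = \frac{\mu (\mu t)^{N-1}}{(N-1)!} e^{-\mu t}, \qquad k = 0$$

This is the Erlang distribution. The time until the system empties is the sum of N exponential lifetimes with rate $\mu$, and $dP_0(t)/dt$ is its density.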
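The Erlang claim can be checked numerically. The minimal sketch below evaluates $P_k(t)$ for $0 < k \le N$ from the closed form and obtains $P_0(t) = 1 - \sum_{k=1}^{N} P_k(t)$. It then checks by a central difference that $dP_0/dt$ matches $\mu(\mu t)^{N-1}e^{-\mu t}/(N-1)!$. The parameter values N = 3, $\mu = 1.5$, t = 2 and the helper names are arbitrary illustrative choices.

```python
import math

def pure_death_pk(k, N, mu, t):
    """P_k(t) = (mu t)^(N-k) e^(-mu t) / (N-k)! for 0 < k <= N."""
    return (mu * t) ** (N - k) * math.exp(-mu * t) / math.factorial(N - k)

def pure_death_p0(N, mu, t):
    """P_0(t): probability that all N members have died by time t."""
    return 1.0 - sum(pure_death_pk(k, N, mu, t) for k in range(1, N + 1))

def erlang_density(N, mu, t):
    """mu (mu t)^(N-1) e^(-mu t) / (N-1)!, the claimed dP_0/dt."""
    return mu * (mu * t) ** (N - 1) * math.exp(-mu * t) / math.factorial(N - 1)

N, mu, t, h = 3, 1.5, 2.0, 1e-5
numeric_slope = (pure_death_p0(N, mu, t + h) - pure_death_p0(N, mu, t - h)) / (2 * h)
print(numeric_slope, erlang_density(N, mu, t))
```

At t = 0 the closed form gives $P_N(0) = 1$ and $P_0(0) = 0$, consistent with the system starting with N members.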

Birth-Death Process Example

A ticket reservation system has 2 computers, one on-line and one on standby. The operating computer fails after an exponentially distributed time with mean $t_f$ and is then replaced by the standby computer, i.e., at any time only 1 or 0 computers are operating. There is one repair facility, i.e., at any time only 1 or 0 computers can be under repair. The repair times are exponentially distributed with mean $t_r$. What fraction of the time will the system be down (that is, both computers have failed)?

Solution

State = number of working (not failed) computers.

[Figure: states 0, 1, 2; repairs move 0 → 1 and 1 → 2 at rate $1/t_r$; failures move 2 → 1 and 1 → 0 at rate $1/t_f$]

$P_0$: probability that both computers have failed.

Solution

Rate In = Rate Out for each state:

$$\begin{aligned}
&\text{State 0:} && (1/t_f)P_1 = (1/t_r)P_0 \\
&\text{State 1:} && (1/t_r)P_0 + (1/t_f)P_2 = (1/t_r)P_1 + (1/t_f)P_1 \\
&\text{State 2:} && (1/t_r)P_1 = (1/t_f)P_2
\end{aligned}$$

Substituting $(1/t_f)P_1 = (1/t_r)P_0$ into the state 1 equation gives $(1/t_r)P_0 + (1/t_f)P_2 = (1/t_r)P_1 + (1/t_r)P_0$, i.e., $(1/t_f)P_2 = (1/t_r)P_1$, which is the state 2 equation.

Finding Steady State Probabilities

Solution

From

$$(1/t_f)P_1 = (1/t_r)P_0, \qquad (1/t_f)P_2 = (1/t_r)P_1$$

we obtain

$$P_1 = (t_f/t_r)P_0, \qquad P_2 = (t_f/t_r)P_1 = (t_f/t_r)^2 P_0$$

Solution

Normalization requires

$$\sum_{n=0}^{2} P_n = P_0 + P_1 + P_2 = 1$$

so

$$P_0\left[1 + \frac{t_f}{t_r} + \left(\frac{t_f}{t_r}\right)^2\right] = 1 \quad\Longrightarrow\quad P_0 = \frac{t_r^2}{t_f^2 + t_f t_r + t_r^2}$$

This is the fraction of time the system is down.
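The downtime formula is easy to evaluate. The short sketch below computes $P_0 = t_r^2/(t_f^2 + t_f t_r + t_r^2)$. As a cross-check, it also derives the same value from the relations $P_1 = (t_f/t_r)P_0$ and $P_2 = (t_f/t_r)^2 P_0$ with normalization. The sample values $t_f = 10$ and $t_r = 1$ (in any common time unit) and the function names are made up for illustration.

```python
from fractions import Fraction

def downtime_fraction(t_f, t_r):
    """P_0 for the two-computer system: t_r^2 / (t_f^2 + t_f t_r + t_r^2)."""
    t_f, t_r = Fraction(t_f), Fraction(t_r)
    return t_r ** 2 / (t_f ** 2 + t_f * t_r + t_r ** 2)

def downtime_from_balance(t_f, t_r):
    """Same quantity via P_1 = (t_f/t_r) P_0, P_2 = (t_f/t_r)^2 P_0, sum = 1."""
    r = Fraction(t_f) / Fraction(t_r)
    return 1 / (1 + r + r ** 2)

print(downtime_fraction(10, 1), downtime_from_balance(10, 1))  # both 1/111
```

With failures ten times rarer than repairs take, the system is down about 1/111 of the time, just under 1%.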

Example

A gasoline station has only one pump. Cars arrive at a rate of 20/hour. However, if the pump is already in use, these potential customers may balk, i.e., drive on to another gasoline station. If there are n cars already at the station, the probability that an arriving car will balk is n/4 for n = 1, 2, 3, 4, and 1 for n > 4. The time required to service a car is exponentially distributed with mean 3 min.

What is the probability of no cars in the gasoline station?

(Continued)

Cars arrive at a rate of 20/hour. If there are n cars already at the station, an arriving car balks with probability n/4 for n = 1, 2, 3, 4, and 1 for n > 4, so it joins with probability 1 - n/4. The effective arrival (birth) rates are therefore

$$\lambda_0 = 20, \quad \lambda_1 = 20 \cdot \tfrac{3}{4} = 15, \quad \lambda_2 = 20 \cdot \tfrac{2}{4} = 10, \quad \lambda_3 = 20 \cdot \tfrac{1}{4} = 5 \ \text{per hour}$$

$$\lambda_n = 0 \quad \text{for } n \ge 4$$

The time required to service a car is exponentially distributed with mean 3 min, so

$$\mu_n = (1/3) \cdot 60 = 20/\text{hour} \quad \text{for all } n \ge 1$$

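Solution

[Figure: states 0 to 4; forward arcs at rates 20, 20(3/4), 20(2/4), 20(1/4); backward arcs at rate 20]

Rate In = Rate Out across the boundary between each pair of neighboring states:

$$\begin{aligned}
20P_0 &= 20P_1 \\
20(3/4)P_1 &= 20P_2 \\
20(2/4)P_2 &= 20P_3 \\
20(1/4)P_3 &= 20P_4
\end{aligned}$$

with

$$\sum_{n=0}^{4} P_n = P_0 + P_1 + P_2 + P_3 + P_4 = 1$$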

Solution

Solving the Rate In = Rate Out equations in terms of $P_0$:

$$P_1 = P_0, \quad P_2 = (3/4)P_0, \quad P_3 = (3/8)P_0, \quad P_4 = (3/4)(2/4)(1/4)P_0 = (3/32)P_0$$

with $P_0 + P_1 + P_2 + P_3 + P_4 = 1$.

Solution

$$P_0 + P_0 + (3/4)P_0 + (3/8)P_0 + (3/32)P_0 = 1$$

$$P_0 = 32/103 = 0.31068$$

$$P_1 = 0.31068, \quad P_2 = 0.23301, \quad P_3 = 0.116505, \quad P_4 = 0.029126$$

The probability that there are no cars at the station is therefore about 0.311.
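The gasoline-station numbers can be reproduced in a few lines. The minimal sketch below builds the effective arrival rates $\lambda_n = 20(1 - n/4)$ for n = 0..3 from the balking probabilities and uses $\mu = 60/3 = 20$ cars per hour. It then applies the cut equations $\lambda_n P_n = \mu P_{n+1}$ together with normalization. Exact fractions allow a direct comparison with 32/103. The constant names are illustrative.

```python
from fractions import Fraction

ARRIVAL_RATE = 20                    # cars per hour
SERVICE_RATE = Fraction(60, 3)       # mean service time 3 min -> 20 cars per hour
MAX_CARS = 4                         # an arriving car always balks when n >= 4

# Effective arrival rate in state n: the arrival rate times the probability
# that the car does not balk, i.e. 20 * (1 - n/4).
lam = [ARRIVAL_RATE * (1 - Fraction(n, 4)) for n in range(MAX_CARS)]

# Cut equations lambda_n P_n = mu P_{n+1}, written relative to P_0.
ratios = [Fraction(1)]
for rate in lam:
    ratios.append(ratios[-1] * rate / SERVICE_RATE)

p0 = 1 / sum(ratios)
probs = [r * p0 for r in ratios]
print(p0, [round(float(p), 6) for p in probs])
```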


Вам также может понравиться

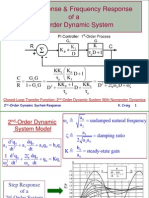

- Control Lecture 8 Poles Performance and StabilityДокумент20 страницControl Lecture 8 Poles Performance and StabilitySabine Brosch100% (1)

- Stochastic Models: Death Process, Equations of Motions, - Birth Equilibrium)Документ23 страницыStochastic Models: Death Process, Equations of Motions, - Birth Equilibrium)mod nodОценок пока нет

- Queueingtheory (1) 1Документ61 страницаQueueingtheory (1) 1ashwin josephОценок пока нет

- Ziegler-Nichols Controller Tuning ExampleДокумент6 страницZiegler-Nichols Controller Tuning ExampleChristine AvdikouОценок пока нет

- Chapter 8: Introduction To Systems Control: 8.1 System Stability From Pole-Zero Locations (S-Domain)Документ47 страницChapter 8: Introduction To Systems Control: 8.1 System Stability From Pole-Zero Locations (S-Domain)Sreedharachary SimhaaОценок пока нет

- EECE 301 Note Set 13 FS DetailsДокумент21 страницаEECE 301 Note Set 13 FS DetailsrodriguesvascoОценок пока нет

- Time Domain Analysis of 2nd Order SystemДокумент57 страницTime Domain Analysis of 2nd Order SystemNicholas NelsonОценок пока нет

- Classical Control System Design: Dutch Institute of Systems and ControlДокумент49 страницClassical Control System Design: Dutch Institute of Systems and Controlजनार्धनाचारि केल्लाОценок пока нет

- QueueingtheoryДокумент66 страницQueueingtheoryQi Nam WowwhstanОценок пока нет

- (EXE) Random Walknetwork SДокумент7 страниц(EXE) Random Walknetwork SChristian F. VegaОценок пока нет

- 14.1 Write The Characteristics Equation and Construct Routh Array For The Control System Shown - It Is Stable For (1) KC 9.5, (Ii) KC 11 (Iii) KC 12Документ12 страниц14.1 Write The Characteristics Equation and Construct Routh Array For The Control System Shown - It Is Stable For (1) KC 9.5, (Ii) KC 11 (Iii) KC 12Desy AristaОценок пока нет

- Second-Order Dynamic Systems KCC 2011Документ39 страницSecond-Order Dynamic Systems KCC 2011Naguib NurОценок пока нет

- MIT22 05F09 Lec05Документ31 страницаMIT22 05F09 Lec05dmp130Оценок пока нет

- Dynamic Behavior of More General SystemsДокумент32 страницыDynamic Behavior of More General Systemsjasonbkyle9108Оценок пока нет

- Molecular Dynamics Simulations: Che 520 Fall 2009Документ8 страницMolecular Dynamics Simulations: Che 520 Fall 2009Fabio OkamotoОценок пока нет

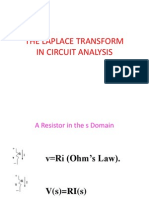

- CS - II (Laplace and M Modelling)Документ63 страницыCS - II (Laplace and M Modelling)abdul.baseerОценок пока нет

- Stochastic Models: M/M/1 Queuing Model)Документ12 страницStochastic Models: M/M/1 Queuing Model)mod nodОценок пока нет

- BME Capstone Final Examination - SolutionДокумент8 страницBME Capstone Final Examination - SolutionTín Đức ĐoànОценок пока нет

- Mechatronics Frequency Response Analysis & Design K. Craig 1Документ121 страницаMechatronics Frequency Response Analysis & Design K. Craig 1rub786Оценок пока нет

- Process Dynamics and Control, Ch. 8 Solution ManualДокумент12 страницProcess Dynamics and Control, Ch. 8 Solution ManualBen SpearmanОценок пока нет

- Convergence Analysis of Extended KalmanДокумент10 страницConvergence Analysis of Extended Kalmansumathy SОценок пока нет

- MAE653 Sp10 Lesson06Документ9 страницMAE653 Sp10 Lesson06Edwin MachacaОценок пока нет

- Estabilidad Interna y Entrada-Salida de Sistemas Continuos: Udec - DieДокумент10 страницEstabilidad Interna y Entrada-Salida de Sistemas Continuos: Udec - DieagustinpinochetОценок пока нет

- Liserre Lecture 7-1Документ36 страницLiserre Lecture 7-1Melissa TateОценок пока нет

- L2 - Modelling SystemsДокумент21 страницаL2 - Modelling SystemsOmer AhmedОценок пока нет

- Z TransformДокумент22 страницыZ Transformvignanaraj100% (1)

- Queuing Theory and Traffic Analysis CS 552 Richard Martin Rutgers UniversityДокумент149 страницQueuing Theory and Traffic Analysis CS 552 Richard Martin Rutgers UniversitySenthil SoundarajanОценок пока нет

- Lecture Note 650507181101410Документ20 страницLecture Note 650507181101410Rishu ShuklaОценок пока нет

- Peretmuan 12 Laplace in CircuitsДокумент56 страницPeretmuan 12 Laplace in CircuitsSando CrisiasaОценок пока нет

- Expt 3Документ5 страницExpt 3Vinit SinghОценок пока нет

- Ee 5307 HomeworksДокумент15 страницEe 5307 HomeworksManoj KumarОценок пока нет

- EE 312 Lecture 1Документ12 страницEE 312 Lecture 1دكتور كونوهاОценок пока нет

- Enee 660 HW #7Документ2 страницыEnee 660 HW #7PeacefulLion0% (1)

- Lec 14Документ39 страницLec 14Henrique Mariano AmaralОценок пока нет

- Chapter 3 - MatlabДокумент59 страницChapter 3 - MatlabZe SaОценок пока нет

- 4 Microsoft Word - L-12 - SS - IA - C - EE - NPTELДокумент10 страниц4 Microsoft Word - L-12 - SS - IA - C - EE - NPTELRicky RawОценок пока нет

- A Comparison Study of The Numerical Integration Methods in The Trajectory Tracking Application of Redundant Robot ManipulatorsДокумент21 страницаA Comparison Study of The Numerical Integration Methods in The Trajectory Tracking Application of Redundant Robot ManipulatorsEmre SarıyıldızОценок пока нет

- Matlab Project Section B1Документ50 страницMatlab Project Section B1Biruk TadesseОценок пока нет

- Polynomial Root Motion: Christopher Frayer and James A. SwensonДокумент6 страницPolynomial Root Motion: Christopher Frayer and James A. SwensonMarie.NgoОценок пока нет

- Some Past Exam Problems in Control Systems - Part 2Документ5 страницSome Past Exam Problems in Control Systems - Part 2vigneshОценок пока нет

- Control Systems: Dynamic ResponseДокумент35 страницControl Systems: Dynamic ResponseLovemore MakombeОценок пока нет

- Queensland University of Technology School of Electrical and Electronic Systems Engineering EEB511 - Tutorial 2Документ2 страницыQueensland University of Technology School of Electrical and Electronic Systems Engineering EEB511 - Tutorial 2karimi_mhОценок пока нет

- Poles Selection TheoryДокумент6 страницPoles Selection TheoryRao ZubairОценок пока нет

- Beat Phenomenon - Vibration - Sys. Analys.Документ5 страницBeat Phenomenon - Vibration - Sys. Analys.Miguel CervantesОценок пока нет

- Root Locus Diagram - GATE Study Material in PDFДокумент7 страницRoot Locus Diagram - GATE Study Material in PDFAtul Choudhary100% (1)

- Chapter 7: Quicksort: DivideДокумент18 страницChapter 7: Quicksort: DivideShaunak PatelОценок пока нет

- Spectrum AnalysisДокумент35 страницSpectrum AnalysisdogueylerОценок пока нет

- Logic Synthesis: Timing AnalysisДокумент33 страницыLogic Synthesis: Timing AnalysisAnurag ThotaОценок пока нет

- Higgs DiscoveryДокумент74 страницыHiggs DiscoveryAndy HoОценок пока нет

- Sparse Vector Methods: Lecture #13 EEE 574 Dr. Dan TylavskyДокумент24 страницыSparse Vector Methods: Lecture #13 EEE 574 Dr. Dan Tylavskyhonestman_usaОценок пока нет

- Phase Lead Compensator Design Project Mauricio Oñoro: Figure 1 Circuit To Be ControlledДокумент8 страницPhase Lead Compensator Design Project Mauricio Oñoro: Figure 1 Circuit To Be ControlledMauricio OñoroОценок пока нет

- Root LocusДокумент95 страницRoot LocusPiyooshTripathi100% (1)

- Control Systems Formula SheetДокумент12 страницControl Systems Formula SheetliamhrОценок пока нет

- Communication IITN Review1Документ28 страницCommunication IITN Review1Pankaj MeenaОценок пока нет

- Electronic Systems: Study Topics in Physics Book 8От EverandElectronic Systems: Study Topics in Physics Book 8Рейтинг: 5 из 5 звезд5/5 (1)

- Ten-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesОт EverandTen-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesОценок пока нет

- The Spectral Theory of Toeplitz Operators. (AM-99), Volume 99От EverandThe Spectral Theory of Toeplitz Operators. (AM-99), Volume 99Оценок пока нет

- Espring Roadshow2013Документ74 страницыEspring Roadshow2013BomezzZ Enterprises100% (1)

- Apsa EngДокумент55 страницApsa EngBomezzZ Enterprises100% (1)

- Espring Contaminant Reduction List: (Including Health Effects & Sources of Contamination)Документ14 страницEspring Contaminant Reduction List: (Including Health Effects & Sources of Contamination)BomezzZ EnterprisesОценок пока нет

- My Health - Product Cat Aug 13 - P6-39 LowДокумент34 страницыMy Health - Product Cat Aug 13 - P6-39 LowBomezzZ EnterprisesОценок пока нет

- Special Pay in UOBДокумент1 страницаSpecial Pay in UOBBomezzZ EnterprisesОценок пока нет

- NothingCompares ARTISTRYAntiAgeing AUДокумент3 страницыNothingCompares ARTISTRYAntiAgeing AUBomezzZ EnterprisesОценок пока нет

- SevenDays LostWeightДокумент3 страницыSevenDays LostWeightBomezzZ Enterprises100% (1)

- Achieve May 13Документ92 страницыAchieve May 13BomezzZ EnterprisesОценок пока нет

- Installment PscFeb13Документ1 страницаInstallment PscFeb13BomezzZ EnterprisesОценок пока нет

- Installment FirstChoiceFeb13Документ1 страницаInstallment FirstChoiceFeb13BomezzZ EnterprisesОценок пока нет

- 5 Achieve May 2012Документ96 страниц5 Achieve May 2012BomezzZ EnterprisesОценок пока нет