Академический Документы

Профессиональный Документы

Культура Документы

A Minimal Infrared Obstacle Detection Scheme

Загружено:

Lăcătuş DanielИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

A Minimal Infrared Obstacle Detection Scheme

Загружено:

Lăcătuş DanielАвторское право:

Доступные форматы

A Minimal Infrared Obstacle Detection Scheme

Department of Computing Science University of Alberta Edmonton, Alberta, Canada T6G 2H1 kube@cs.ualberta.ca October 23, 1998

C. Ronald Kube

Introduction

Obstacle detection is often the rst perceptual system we add to our mobile robots. After all, once the robot is moving around, constantly bumping into things doesn't make a very impressive demonstration of the robot's intellect 1 . A simple solution is a contact sensor in the form of a bump switch or whisker, but this approach to obstacle detection is not very impressive and reminiscent of a blindfolded C3PO of Star Wars fame. An alternate solution is near-proximity sensing, possible with an infrared emitter/detector pair. These optoelectronic infrared components are inexpensive, easy to use and widely available from several manufacturers. In this article we'll look at two such devices, the Siemens SFH484 infrared emitter and its matching detector, the BP103B infrared phototransistor. To demonstrate its use, we'll build a simple minimal obstacle detection system using just two sensors and show how to gather and plot data from them. Then we'll con gure the two sensors for a simple obstacle avoidance system and implement it on a reactive robot, reminiscent of Braitenberg's Vehicles 1], by connecting the sensor's output to a 68HC11. Finally, we'll show how this simple strategy has been used to create obstacle avoidance behaviors in our system of 10 mobile robots, and we'll discuss some possible enhancements and alternate uses for the sensors.

A previous version of this article appeared in The Robotics Practitioner, spring 1996. 1 If you're trying to generates laughs for the observers (or tears for the creators) then let your robot roam without obstacle avoidance.

Infrared Emitters and Detectors

Infrared radiation is a radiant energy longer than visible red with wavelengths between 770 and 1500 nanometers. Two optoelectronic devices that make use of this energy are the Infrared-Emitting Diode (IRED), and the Infrared Phototransistor. Two types of IRED radiant-energy sources are Gallium Arsenide (GaAs) and Gallium Aluminum Arsenide (GaAlAs), which emit in the 940 nm and 820 nm portion of the near-infrared spectrum respectively. Infrared phototransistors are simply transistors designed to be responsive to this radiant energy and are typically silicon bipolar NPN types without a base terminal. Figure 1 shows the relative spectral characteristics of the human eye, a Silicon Phototransistor, two types of Infrared Light Emitting Diodes, and a Tungsten light source.

RELATIVE SPECTRAL CHARACTERISTICS 1.2

Relative Spectral Response or Output

1.0 0.8 0.6 0.4

Response of Human Eye

Output of Tungsten Source at 2870 K

GaAlAs

0.2

Response of Silicon Phototransistors GaAs

0 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1.0 1.1 1.2

Wavelength m

Figure 1: The relative spectral characteristics of the human eye, a tungsten light source and a silicon phototransitor (adapted from 3]). To help you make sense of the data sheets that describe these components let's consider a few important terms: Acceptance Angle is measured from the optical 1

The Robotics Practitioner, 2(2):15-20, 1996. axis and is the maximum angle at which a phototransistor will detect a travelling infrared ray. Beam Angle is the total angle between the half intensity points of an IRED's emitted energy. Radiant Flux or Power output usually quoted for IREDs is the time rate of ow of radiant energy. Photocurrent is the current that ows when a photosensitive device is exposed to radiant energy. IRED emitter's typically come as GaAs, originally developed in the 1960's, or GaAlAs. Comparing Gallium Aluminum Arsenide with the older Gallium Arsenide we note that GaAlAs have 2]: 1.5 to 2 times the power output than GaAs for the same current Peak wavelength of 880nm versus 940nm Forward voltage (Vf ) is slightly higher Fewer failures, with failure de ned as a 50 percent drop in power output. The Siemens SFH484 is a very high power GaAlAs infrared emitter, with a power output of 20 mW at a forward current of 100 mA, and a beam angle of 16 degrees. The matching phototransistor is the BP103B-4 with a photocurrent 6.3 mA and an acceptance angle of 50 degrees. For our purpose, we will con gure the emitter/detector pair to measure the amount of re ected IR energy from obstacles in front of the robot. And we would like to correlate the re ected reading to a distance from the sensor to the obstacle. This will depend, among other things, on the emitter's radiant ux, the distance to the object, the nature of the object's surface re ectance, and the light current of the detector.

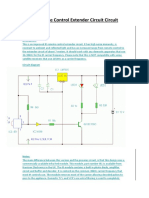

2 IR pairs to J 3 shown in Figure 3. Remember to install jumpers in all unused LED positions. Power and ground is brought in on J 4 and a shunt is normally installed in JP 1 and removed when adding an ammeter to adjust the IREDs forward current. The emitter/detector pair can be mounted using T 1;3=4 LED holders in 1=4 inch holes spaced 1=2 inch apart on a small piece of angle aluminum. Figure 3 shows three techniques for mounting IR pairs. In the bottom photo wires are soldered directly to the component with hot-glue added for mechanical strength. The middle photo shows the IR pair mounted to a small circuit board with a four position header. The top photo uses a plug/socket combination to connect to the component. Both the IRED and phototransistor are soldered to a two position machine pin plug, with the matching socket soldered to the data cable used to bring the signal to the control board. Each method allows the sensor to be easily positioned anywhere on the robot. To test the circuit connect one sensor pair to LED1/T1 watching for correct polarity. Remember to install jumpers in the unused IRED position (LED2). Connect an ammeter between JP 1 pins 1 and 2, add power and enable F ET 1 by connecting J 1 to +5. Adjust R5 to read 90 - 100 mA. Turn the power o and remove the ammeter, reinstalling the jumper in JP 1. Connect an ammeter between JP 2 pins 1 and 2, add power and enable F ET 1 by connecting J 1 to +5. Adjust R7 to read 90 - 100 mA. Measure the output voltage on pin 1 of J 2 with the power on, enable set to +5 and nothing in front of the sensor, you should read a low voltage (around 0.5 VDC) in a dimly lit room. As you move your hand in front of the sensor the voltage should rise and continue to rise as you approach, reading about 4.9 VDC at a distance of two inches from the sensor. With these tests complete we are now ready to perform a few simple experiments.

Implementation and Testing

The circuit shown in Figure 2 will allow you to connect up to four IR emitter/detector pairs. Table 1 lists the components used to construct the circuit. Header J 1 is connected to an output pin on the 6811 microcontroller (Port B pin 0 for example) and will be used to turn the IREDs on and o the extra pin is used to daisy-chain the enable signal to a second board. Header J 2 is connected to the A/D input port (Port E) on the 6811. The mechanical layout shown for the circuit makes use of male strip headers to connect the

Experimenting

Before writing an algorithm that uses the sensor's data it would help to get a feel for what the sensor output actually looks like. Towards this end, we'll plot both the voltage versus angle of a target passing in front of the sensor on a semicircular arc, and the voltage versus distance to a target for two di erent lighting conditions. Figure 4 shows the setup for the rst experiment, in which we'll determine the approximate acceptance angle of the phototransistor in a con guration using re-

The Robotics Practitioner, 2(2):15-20, 1996.

+5

3

Part Ref.

FET1

Qty.

IR3

2 1

IR1

2 1

PT1

1 2 1

PT2

1 2 1

PT3

1 2 1

PT4

1 2 1

IR4

2 1

IR2

2 1

+5 J4

R9

2 1

R8

2 1 2 2 3

R1

2

R2

2

R3

2

R4

2

J2 JP2

1 2 3 2

JP1

1 D

4 3 2 1

R7

R5

O4 O3 O2 O1

LED1-4 T1-4 R1-4 R5,R7 R6 R8,R8 JP1-2, J1 J2 J3 J4

4 4 4 2 1 2 3 1 1 1

J1 enable

R6

G S

FET1

IR Control

-+ LED1 T1 LED2 T2 LED3 T3 LED4 T4

IRF510 Motorola N-channel TMOS Power FET TO-220AB Siemens SFH 484 Infrared Emitter Siemens BP 103B-4 Phototransistor Resistor 100K 1/4 Watt Trimmer Potentiometer 100 Ohms Resistor 10K 1/4 Watt Resistor 15 Ohms 1/4 Watt Single Row Strip Header 2 contacts Male Straight Dual Row Strip Header 4 contacts Male Straight Dual Row Strip Header 16 contacts Male Straight Molex 0.100" Header 2 contacts Male Straight polarizing wall

Description

J3 J4 +5

1

+ S D G FET R1 1 1 2 R4 2 R5 1 2 3 R6 1 JP2 2 4 J2 3 R8 R9 1 JP1 2

1 1/2"

Table 1: Parts list for the IR obstacle detection module. Any commonly available IR pair may be substituted, just remember to adjust the forward LED current and measure the angle of acceptance of the phototransistor. with the IRED switched OFF. The \OFF" reading from the phototransistor is ambient light and if the reading is the same as the \ON" reading then chances are there is no obstacle to re ect infrared energy2 . If the readings are di erent, within a small error bar, then an obstacle has been detected. The next experiment measures the output voltage as a function of distance to a white target. Record the output voltage of the phototransistor (with the IRED ON) as you move a white paper envelope along a tape measure towards the sensor. Also try the same experiment with di erent targets and varying lighting conditions, then plot your results as voltage versus distance to target. Figure 6 shows an example using a white paper envelope with results for both the IRED ON and OFF conditions. In Figure 7 a comparison is made with another phototransistor commonly available at Radio Shack. Now that we have a feel for the type of data we can expect from our sensor, the next step is to design a con guration that will allow us to turn obstacle detection into avoidance. We'll test our obstacle detection scheme on a simple reactive robot that uses two wheel motors for steering and propulsion. By using a random-walk, two avoidance behaviours and a simple model for motion, the robot reliably wanders a cluttered room with relative ease using just two sensors.

2 Although this is also true of objects whose colour or surface characteristic doesn't re ect infrared, making them IR invisible

3 1 2

R7

2"

Figure 2: The schematic and mechanical layout of the IR obstacle detection module. The pot is used to adjust the forward current of the IR LEDs when switched ON by the FET. The analog voltage output from the IR phototransistors are sent to Port E (A/D input) of the 68HC11 where they are converted to digital values and compared with threshold functions. ected energy. The emitter and detector are mounted 1=2 inch apart and facing a small one inch square white target placed at the same height as the sensor and at a distance of ve inches. The target is moved on a semicircle in 10 degree increments and the voltage at the output of the phototransistor (J 21 ) recorded. Figure 5 shows the results plotted for two lighting conditions, a dimly lit room and one with sunlight from a nearby window. Note the e ect sunlight has on the overall readings. Since sunlight has natural infrared radiation it serves to increase the amount of noise the phototransistor sees. One method to help separate signal from noise, is to compare the output voltage of the phototransistor with the IRED switched ON to the output voltage

The Robotics Practitioner, 2(2):15-20, 1996.

4 Obstacle Sensor to Actuator Mapping Stimulus Obstacle Avoidance Behaviours

L R

A Simple Model for Motion

When we build robots that autonomously roam their environment, we create a mapping between perception and action that forms the basis of how the robot behaves for any given stimulus. This mapping might be xed in the case of a reactive robot or adaptive if some form of learning is used. Obstacle avoidance represents one such perception-to-action mapping necessary in any successful navigation scheme. To use our just created obstacle sensors we'll need to decide what the robot should do when an obstacle is detected. This will depend very much on the type of motion the robot is capable of generating. In a charming rendition of imaginary robotics3 , Valentino Braitenberg creates a set of 14 increasingly complex robots based on simple stimulus-response behaviours 1]. Braitenberg's second robot is capable of moving using a pair of left and right wheel motors. Both the direction and speed of each motor can be changed, resulting in a simple di erentially steered platform on which to mount an assortment of stimulus speci c sensors. Behaviour is created by \wiring" left and right sensors to either the left or right wheel motors of these hypothetical machines. To create a light-seeking robot the left and right light sensors are cross-connected to the left and right wheel motors, such that when a stimulus appears on the left side it causes the right wheel motor to turn forward causing the robot to turn left towards the light source. For our purpose here, we'll use the same simple model for motion and create a set of actions which result in the robot's movement in small xed increments. The robot pictured in Figure 9 is capable of the moving using the following motion commands: forward, left-turn, left-rotate, right-turn, right-rotate, stop and idle. The idle output simply means the behaviour is not competing for control of the robot's actuators. Since our plan is to use just two forward pointing sensors we won't include backward in our motion command set. All motion is in discrete increments of 1/8 inch linear or 2 angular movement. Thus to get the robot to move forward a constant stream of forward commands are issued on each iteration of the perception/action loop. We can create a WANDER behaviour that moves the robot forward and occassionally issues a random left or right turn. On the perception or input side of our model we'll divide our obstacles into near ( 5 inches) or far (> 5 but 10 inches) by thresholding the sensor's output to be used for CONTACT and AVOID behaviours shown

3

0 0 1 1

0 1 0 1

idle left-turn right-turn right-turn

AVOID

idle left-rotate right-rotate right-rotate

CONTACT

Table 2: The stimulus-response mappings for obstacle avoidance behaviours. Obstacle sensor data is thresholded to produce boolean outputs, which are divided into Left and Right stimuli to match the left and right division of the actuator model. The \idle" output means the behaviour doesn't compete for actuator control. in Figure 6. Triggering the thresholds will cause the robot to rotate or turn away from the obstacle depending on which threshold was tripped. Table 2 lists the stimulus-response mappings used to create our two avoidance behaviours. A \1" in the Stimulus column indicates a threshold voltage has been reached by either the robot's left or right obstacle sensor. If both sensors detect an obstacle we arbitrarily choose to go right to avoid it. The minimum straight-on detection range can be varied by the careful positioning of the left and right obstacle sensors. Figures 8 and 9 show how the left and right obstacle sensors are mounted and oriented on our circular robot. The sensors are pointed so that their eld-of-views overlap at approximately 24 inches from the robot, allowing for their diverging sensing cones to detect head-on collisions with another similar robot. To generate collision free motion a WANDER behaviour is added to the CONTACT and AVOID behaviours and processed in a prioritized loop with each behaviour issuing its own motion commands. The controller is then tested in a cluttered room and the sensors orientation adjusted until collision free navigation is achieved. To test the obstacle detection scheme in a more dynamic environment, 10 identical robots were initially placed together and switched on. Obstacle avoidance causes them to disperse while trying to maintain the minimum obstacle to robot distance in the forward looking view (see Figure 10). Of course not all obstacles will re ect infrared energy in an amount detectable by the phototransistor. Having said that, your obstacle avoidance system should also include contact sensors so that in the event of a hard collision your robot can still recover, possibly with an apologetic \oh sorry I didn't see you."

Required reading for any serious roboticist.

The Robotics Practitioner, 2(2):15-20, 1996.

Future Enhancements

A more omni-directional obstacle sensor can be had by either adding additional IR sensor pairs or by rotating a single sensor on a servo or stepper motor. For the later to be useful, you would also need to know the position the sensor is pointing when a reading is taken. Depending on the angular resolution you wish to obtain, you may avoid having to add a slotted encoder wheel to obtain motor position by using the step count when using a stepper motor, or the pulse width input to position the servo motor. In each case the angular resolution of your sensor readings should be greater than twice the motor's positional error. For example, if you command the servo, with a 2 degree position error, to a position of 5 degrees, then it may actually be anywhere from 3 - 7 degrees. In order for your sensor to \see" a stimulus source at 5 degrees the sensor's acceptance angle should be at least 4 degrees (or 2 degrees from center) so that if the position, say for example, is at 3 degrees then the sensor will detect a stimulus source radiating at an angle from 1 - 5 degrees. Since the phototransistor's spectral sensitivity is usually as low as 400 nanometers (see Figure 1) they can also be used to track other light sources. A simple game of Cops `N Robbers, played in our mobile robotics course two years ago, is possible by adding a bright light to the tops of two robots. Program the Cop to chase the light by using a seek-light behaviour, and the Robber should avoid the Cop by using a seekdark behaviour. Videotape the game and send us a copy and we'll send you a University of Alberta robot team shirt for your e ort.

References

1] Braitenberg, V. (1984). Vehicles: Experiments in Synthetic Psychology, MIT Press, Cambridge, MA. 2] Optoelectronics Infrared Products Catalog E26, Honeywell, 1993. 3] The Optoelectronics Data Book for Design Engineers, Fifth Edition, Texas Instruments Inc. 1978.

Figure 3: Shown are three techniques for connecting the IR sensor. The top photo uses a plug/socket connection. The middle photo solders both the IR LED and phototransistor to a small circuit board with female header. The bottom photo shows how hot-glue can be used to strengthen a wire to component solder connection.

The Robotics Practitioner, 2(2):15-20, 1996.

0 Target Motion

-25

+25

-90 IR Pair

5"

90

Obstacle Sensor Voltage of White Paper Target 4.6 Sun Light 4.4 Dim Lighting 4.2 4 3.8 3.6 3.4 3.2 3 2.8 2.6 2.4 2.2 2 1.8 1.6 1.4 1.2 1 0.8 0.6 -30 -25 -20 -15 -10 -5 0 5 10 15 20 25 30 Angle of White Paper Target to Sensor (degrees)

PhotoTransistor (BP103B) Output (volts DC)

Figure 4: Once you've mounted the emitter/detector you can determine its angle of acceptance by making a voltage as a function of angle plot. Place a small target at the height of the sensor and record the output voltage as you move the target along a semicircle.

Figure 5: The output voltage of a Siemens BP103B phototransistor as a function of angle to a small 1" by 1" white target. Shown are two room lighting conditions: Sun Light (which contains lots of IR noise) and a dimly lit room with low ambient IR noise.

The Robotics Practitioner, 2(2):15-20, 1996.

Figure 6: The output voltage of a Siemens BP103B phototransistor as a function of distance to a white target. Shown is the output of the phototransistor with the IR LED (a Siemens SFH484) on and o . Ambient IR noise can be eliminated by subtracting the \OFF" from the \ON" voltage.

Figure 8: For a minimal obstacle detection system, mount a left/right pair of IR sensors pointing at an angle such that their eld-of-view (angle of acceptance) overlaps at the minimum straight-on detection range.

Figure 7: Compared are the output voltages of a Radio Shack SY-54PTR and a Siemens BP103B phototransistor as a function of distance to a white target.

The Robotics Practitioner, 2(2):15-20, 1996.

Figure 9: The IR LED/phototransistor pair can be mounted in 1/4" hole using a standard LED mounting holder on an aluminum bracket as shown.

Figure 10: By adjusting the obstacle avoidance thresholds, you a ect the distance the robot maintains from detected obstacles. Shown here are the start and end positions for 10 robots trying to maintain a minimum avoidance distance. These robots only detect obstacles in the forward direction using two sensors.

Вам также может понравиться

- Optocoupler DevicesДокумент7 страницOptocoupler Devicesjimdigriz100% (2)

- Optical CouplersДокумент36 страницOptical CouplersSyed muhammad zaidiОценок пока нет

- Diode (Lab Report)Документ12 страницDiode (Lab Report)Benjamin Cooper50% (6)

- Obstacle Sensor PCB Project ReportДокумент13 страницObstacle Sensor PCB Project ReportHammad Umer0% (2)

- IR Sensor Project ReportДокумент14 страницIR Sensor Project ReportAbhijeet chaudhary100% (1)

- Newnes Electronics Circuits Pocket Book (Linear IC): Newnes Electronics Circuits Pocket Book, Volume 1От EverandNewnes Electronics Circuits Pocket Book (Linear IC): Newnes Electronics Circuits Pocket Book, Volume 1Рейтинг: 4.5 из 5 звезд4.5/5 (3)

- DiodeДокумент20 страницDiodeMahmoudKhedrОценок пока нет

- TheEconomist 2023 04 01Документ297 страницTheEconomist 2023 04 01Sh FОценок пока нет

- ABP - IO Implementing - Domain - Driven - DesignДокумент109 страницABP - IO Implementing - Domain - Driven - DesignddoruОценок пока нет

- Adi Design SolutionДокумент7 страницAdi Design SolutionMark John Servado AgsalogОценок пока нет

- Photo-Coupler and Touch Alarm Switch: Experiment #9Документ10 страницPhoto-Coupler and Touch Alarm Switch: Experiment #9Taha Khan 4AОценок пока нет

- Obstacle Sensed Switching in Industrial ApplicationsДокумент68 страницObstacle Sensed Switching in Industrial ApplicationsAbhijit PattnaikОценок пока нет

- Proximity Sensor TutorialДокумент13 страницProximity Sensor TutorialShubham AgrawalОценок пока нет

- Overview of IR SensorДокумент8 страницOverview of IR SensorMahesh KallaОценок пока нет

- Photo-Coupler and Touch Alarm SwitchДокумент9 страницPhoto-Coupler and Touch Alarm SwitchZeeshan RafiqОценок пока нет

- Ir SensorДокумент6 страницIr SensorCharming RizwanОценок пока нет

- Ir Trans and ReceiverДокумент13 страницIr Trans and ReceiverAnil KumarОценок пока нет

- 71M651x Energy Meter IC: Using IR Diodes and PhototransistorsДокумент7 страниц71M651x Energy Meter IC: Using IR Diodes and Phototransistorsagus wiyonoОценок пока нет

- Fire Alarms 497Документ6 страницFire Alarms 497MohanОценок пока нет

- Intro to sensors lab manualДокумент7 страницIntro to sensors lab manualTayyab HussainОценок пока нет

- 1.2 Block Diagram With Explanation:: Chapter-1Документ27 страниц1.2 Block Diagram With Explanation:: Chapter-1Vamshi KrishnaОценок пока нет

- Basic Electronics (18ELN14/18ELN24) - Special-Purpose Diodes and 7805 Voltage Regulator (Module 1)Документ6 страницBasic Electronics (18ELN14/18ELN24) - Special-Purpose Diodes and 7805 Voltage Regulator (Module 1)Shrishail Bhat100% (2)

- Optocouplers: Electrical Isolation and Transfer CharacteristicsДокумент7 страницOptocouplers: Electrical Isolation and Transfer CharacteristicsKainat MalikОценок пока нет

- Letterbox Letter CounterДокумент27 страницLetterbox Letter CounterSAMEULОценок пока нет

- Open Loop Control Using PWM for Motor and LightДокумент18 страницOpen Loop Control Using PWM for Motor and Lightafshan ishaqОценок пока нет

- Laser Security System Project ReportДокумент29 страницLaser Security System Project ReportAnkit baghelОценок пока нет

- Obstacle Detector: Name Roll No Name Roll No Name Roll No Name Roll NoДокумент9 страницObstacle Detector: Name Roll No Name Roll No Name Roll No Name Roll NoErole Technologies Pvt ltd Homemade EngineerОценок пока нет

- Applications of Ir Sensor:Smart DC Fan & Vehicle Collision AvoidanceДокумент17 страницApplications of Ir Sensor:Smart DC Fan & Vehicle Collision AvoidanceJayWinnerОценок пока нет

- Optical Communication Lab Manual 2022 Multitech KitsДокумент27 страницOptical Communication Lab Manual 2022 Multitech KitsGouthamiОценок пока нет

- Det01cfc High Speed Detector IngaasДокумент20 страницDet01cfc High Speed Detector Ingaasnithin_v90Оценок пока нет

- Chapter TwoДокумент39 страницChapter TwodanielОценок пока нет

- Opto Isolators Lesson 07-17-2012Документ35 страницOpto Isolators Lesson 07-17-2012whynot05Оценок пока нет

- Photoelectric Smoke Detector IC with InterconnectДокумент10 страницPhotoelectric Smoke Detector IC with InterconnectManu SeaОценок пока нет

- Procedure - AC Circuits and Signal Modulation - W20Документ6 страницProcedure - AC Circuits and Signal Modulation - W20ChocoОценок пока нет

- IR SensorДокумент8 страницIR Sensoraksaltaf9137100% (1)

- Proximity Sensor TutorialДокумент13 страницProximity Sensor TutorialKaran Banthia100% (1)

- Identifying Electronic ComponentsДокумент9 страницIdentifying Electronic ComponentsKshitij DudheОценок пока нет

- Experiment No.2Документ10 страницExperiment No.2Pankaj GargОценок пока нет

- PDA100A ManualДокумент17 страницPDA100A ManualMahmoud AllawiОценок пока нет

- (Type The Document Title) : Light Detector Using LDRДокумент7 страниц(Type The Document Title) : Light Detector Using LDRRishabh GoyalОценок пока нет

- 17 - Mini Project ReportДокумент16 страниц17 - Mini Project ReportPriyadarshi M67% (3)

- 4 Way Security System Major CorrectedДокумент72 страницы4 Way Security System Major CorrectedMarndi KashrayОценок пока нет

- Optocoupler Input Drive CircuitsДокумент7 страницOptocoupler Input Drive CircuitsLuis Armando Reyes Cardoso100% (1)

- Obstacle Avoider Using Atmega 8, IR Sensor, Embedded C Programming On AVR TutorialДокумент7 страницObstacle Avoider Using Atmega 8, IR Sensor, Embedded C Programming On AVR TutorialMUDAM ALEKYAОценок пока нет

- ElectronicsДокумент18 страницElectronicsSrinjay BhattacharyaОценок пока нет

- Exp 3Документ6 страницExp 3deek2711Оценок пока нет

- Scope of Work in The ProjectДокумент16 страницScope of Work in The Projectmeen19111087 KFUEITОценок пока нет

- Project Report On Infra Red Remote ControlДокумент19 страницProject Report On Infra Red Remote ControlDIPAK VINAYAK SHIRBHATE88% (8)

- Expt Write-UpДокумент37 страницExpt Write-UpTushar PatwaОценок пока нет

- Feedback-Based Automatic Tuner for Small Magnetic LoopДокумент16 страницFeedback-Based Automatic Tuner for Small Magnetic LoopIngo KnitoОценок пока нет

- IR Remote Control Extender Circuit GuideДокумент4 страницыIR Remote Control Extender Circuit GuideAhmedОценок пока нет

- Line Follower Using L293DДокумент8 страницLine Follower Using L293DPrithaj JanaОценок пока нет

- UIET Lab Manual Basic ElectronicsДокумент48 страницUIET Lab Manual Basic ElectronicsHarry HarryОценок пока нет

- Ref1 Characteristics of SensorsДокумент8 страницRef1 Characteristics of Sensorsosama.20en714Оценок пока нет

- High-Speed Op Amp Enables Infrared (IR) Proximity SensingДокумент3 страницыHigh-Speed Op Amp Enables Infrared (IR) Proximity SensingLucas Gabriel CasagrandeОценок пока нет

- ShaktiДокумент26 страницShaktisshakti006Оценок пока нет

- 1982 National AN301 Conditioning ElectronicsДокумент13 страниц1982 National AN301 Conditioning ElectronicsTelemetroОценок пока нет

- I. What Is IR SENSOR?Документ9 страницI. What Is IR SENSOR?Mạnh QuangОценок пока нет

- Ir SensorДокумент7 страницIr Sensoransh goelОценок пока нет

- Apd Intro JraДокумент8 страницApd Intro JraIftekher1175Оценок пока нет

- Optical Communication LabДокумент44 страницыOptical Communication LabSaivenkat BingiОценок пока нет

- Celsius To FarenheitДокумент1 страницаCelsius To FarenheitLăcătuş DanielОценок пока нет

- How To Login From An Internet Cafe Without Worrying About Keyloggers PDFДокумент2 страницыHow To Login From An Internet Cafe Without Worrying About Keyloggers PDFJose Arnaldo Bebita DrisОценок пока нет

- Mason Rule 1Документ10 страницMason Rule 1Lăcătuş DanielОценок пока нет

- Controller Area Network: Serial Network Technology For Embedded SolutionsДокумент67 страницController Area Network: Serial Network Technology For Embedded SolutionsVictor PradoОценок пока нет

- PcbdesignerДокумент69 страницPcbdesignerBishal KarkiОценок пока нет

- PSpice Notes v5.0Документ15 страницPSpice Notes v5.0Lăcătuş DanielОценок пока нет

- Infrared Headlights: Page 236 Robotics With The Boe-BotДокумент6 страницInfrared Headlights: Page 236 Robotics With The Boe-BotJeff MburuОценок пока нет

- Joule Thief Detailed ConstructionДокумент8 страницJoule Thief Detailed ConstructionLăcătuş Daniel100% (1)

- Conv VersationДокумент4 страницыConv VersationCharmane Barte-MatalaОценок пока нет

- Unit 1 TQM NotesДокумент26 страницUnit 1 TQM NotesHarishОценок пока нет

- Radio Frequency Transmitter Type 1: System OperationДокумент2 страницыRadio Frequency Transmitter Type 1: System OperationAnonymous qjoKrp0oОценок пока нет

- تاااتتاااДокумент14 страницتاااتتاااMegdam Sameeh TarawnehОценок пока нет

- Steam Turbine Theory and Practice by Kearton PDF 35Документ4 страницыSteam Turbine Theory and Practice by Kearton PDF 35KKDhОценок пока нет

- Accomplishment Report 2021-2022Документ45 страницAccomplishment Report 2021-2022Emmanuel Ivan GarganeraОценок пока нет

- Audit Acq Pay Cycle & InventoryДокумент39 страницAudit Acq Pay Cycle & InventoryVianney Claire RabeОценок пока нет

- 15142800Документ16 страниц15142800Sanjeev PradhanОценок пока нет

- Movement and Position: Question Paper 4Документ14 страницMovement and Position: Question Paper 4SlaheddineОценок пока нет

- Difference Between Mark Up and MarginДокумент2 страницыDifference Between Mark Up and MarginIan VinoyaОценок пока нет

- Table of Specification for Pig Farming SkillsДокумент7 страницTable of Specification for Pig Farming SkillsYeng YengОценок пока нет

- ITU SURVEY ON RADIO SPECTRUM MANAGEMENT 17 01 07 Final PDFДокумент280 страницITU SURVEY ON RADIO SPECTRUM MANAGEMENT 17 01 07 Final PDFMohamed AliОценок пока нет

- 8dd8 P2 Program Food MFG Final PublicДокумент19 страниц8dd8 P2 Program Food MFG Final PublicNemanja RadonjicОценок пока нет

- 2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTДокумент5 страниц2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTIbrahim JaberОценок пока нет

- Web Api PDFДокумент164 страницыWeb Api PDFnazishОценок пока нет

- GLF550 Normal ChecklistДокумент5 страницGLF550 Normal ChecklistPetar RadovićОценок пока нет

- Controle de Abastecimento e ManutençãoДокумент409 страницControle de Abastecimento e ManutençãoHAROLDO LAGE VIEIRAОценок пока нет

- Wi FiДокумент22 страницыWi FiDaljeet Singh MottonОценок пока нет

- STAT455 Assignment 1 - Part AДокумент2 страницыSTAT455 Assignment 1 - Part AAndyОценок пока нет

- Personalised MedicineДокумент25 страницPersonalised MedicineRevanti MukherjeeОценок пока нет

- AATCC 100-2004 Assesment of Antibacterial Dinishes On Textile MaterialsДокумент3 страницыAATCC 100-2004 Assesment of Antibacterial Dinishes On Textile MaterialsAdrian CОценок пока нет

- To Introduce BgjgjgmyselfДокумент2 страницыTo Introduce Bgjgjgmyselflikith333Оценок пока нет

- Resume of Deliagonzalez34 - 1Документ2 страницыResume of Deliagonzalez34 - 1api-24443855Оценок пока нет

- Prof Ram Charan Awards Brochure2020 PDFДокумент5 страницProf Ram Charan Awards Brochure2020 PDFSubindu HalderОценок пока нет

- DC Motor Dynamics Data Acquisition, Parameters Estimation and Implementation of Cascade ControlДокумент5 страницDC Motor Dynamics Data Acquisition, Parameters Estimation and Implementation of Cascade ControlAlisson Magalhães Silva MagalhãesОценок пока нет

- House Rules For Jforce: Penalties (First Offence/Minor Offense) Penalties (First Offence/Major Offence)Документ4 страницыHouse Rules For Jforce: Penalties (First Offence/Minor Offense) Penalties (First Offence/Major Offence)Raphael Eyitayor TyОценок пока нет

- Google Dorks For PentestingДокумент11 страницGoogle Dorks For PentestingClara Elizabeth Ochoa VicenteОценок пока нет

- PLC Networking with Profibus and TCP/IP for Industrial ControlДокумент12 страницPLC Networking with Profibus and TCP/IP for Industrial Controltolasa lamessaОценок пока нет