Computer Architecture Unit V - Advanced Architecture Part-A

Uploaded by: Jennifer Peter

COMPUTER ARCHITECTURE UNIT V ADVANCED ARCHITECTURE

PART-A

1. What is parallelism and pipelining in computer architecture?
2. What is instruction pipelining?
3. What is super pipelining?
4. Explain pipelining in CPU design.
5. Write a short note on the hazards of pipelining.
6. What is a multiprocessor?
7. Explain parallel computers.
8. What is parallelism?
9. What is pipelining in computer architecture?
10. What are RISC and CISC?

PART-B

1. What is parallel processing? Explain Flynn's classification of parallel processing.
2. What are the characteristics of RISC architecture?
3. What is a pipeline? Explain its simplified and expanded views.
4. Explain the multiprocessor.
5. What are the reasons for pipeline conflicts in a pipelined processor? How are they resolved?

ANSWER KEY: UNIT V ADVANCED ARCHITECTURE

PART-A

1. Look-ahead, parallelism, and pipelining in computer architecture. Look-ahead techniques were introduced to prefetch instructions so that I/E (instruction fetch/decode and execution) operations overlap, enabling functional parallelism. Functional parallelism was supported by two approaches: one is to use multiple functional units simultaneously, and the other is to practice pipelining at various processing levels. The latter includes pipelined instruction execution, pipelined arithmetic computation, and pipelined memory-access operations. Pipelining has proven especially attractive for performing identical operations repeatedly over vector data strings. Vector operations were originally carried out implicitly by software-controlled looping on scalar pipeline processors.

2. An instruction pipeline reads consecutive instructions from memory while previous instructions are being executed in other segments. Pipeline processing can thus occur not only in the data stream but in the instruction stream as well. This causes the instruction fetch and execute phases to overlap and proceed simultaneously. One complication arises when an instruction causes a branch out of sequence: the pipeline must then be emptied, and all instructions that were read from memory after the branch instruction must be discarded.
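The overlap described in answer 2, including the flush after a branch, can be illustrated with a toy two-segment (fetch, execute) pipeline. This is a minimal sketch under simplifying assumptions, not a model of any real processor: the `Instr` record, the `branch_to` field marking an always-taken branch, and the single buffer between the two segments are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Instr:
    name: str
    branch_to: Optional[int] = None  # hypothetical: target address of an always-taken branch


def run_two_stage(program: List[Instr]) -> List[str]:
    """Simulate a two-segment (fetch, execute) instruction pipeline.

    In every clock cycle the fetch segment reads the next instruction in
    sequence while the execute segment works on the one fetched previously.
    When a branch executes, the instruction already fetched behind it is
    discarded (the pipeline is emptied) and fetching resumes at the target.
    Toy assumption: the program must terminate (no endless backward branches).
    Returns a log of executed and flushed instructions per cycle.
    """
    log: List[str] = []
    pc = 0                                       # address of the next instruction to fetch
    fetched: Optional[Tuple[int, Instr]] = None  # buffer between the two segments
    cycle = 0
    while pc < len(program) or fetched is not None:
        cycle += 1
        executing = fetched
        # Fetch segment: overlaps with the execute segment in the same cycle.
        if pc < len(program):
            fetched = (pc, program[pc])
            pc += 1
        else:
            fetched = None
        # Execute segment.
        if executing is not None:
            _, ins = executing
            log.append(f"cycle {cycle}: execute {ins.name}")
            if ins.branch_to is not None:
                if fetched is not None:
                    log.append(f"cycle {cycle}: flush {fetched[1].name}")
                fetched = None        # empty the pipeline
                pc = ins.branch_to    # resume fetching at the branch target
    return log


if __name__ == "__main__":
    prog = [Instr("LOAD"), Instr("JUMP", branch_to=3), Instr("ADD"), Instr("STORE")]
    for line in run_two_stage(prog):
        print(line)
```

Without branches, n instructions finish in n + 1 cycles. In the example program, `ADD` is fetched behind the taken `JUMP` and flushed, so one cycle passes with nothing executed before `STORE` runs.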

3. Pipelining is the concept of overlapping the execution of multiple instructions. A pipeline splits one task into multiple subtasks, and the subtasks of two or more different tasks are executed in parallel by separate hardware units. A pipeline can be compared to a water tap: in steady flow, the amount of water coming out of the tap equals the amount of water entering the pipe. Suppose there are five tasks to be executed, and each task can be divided into four subtasks so that each subtask is executed by one stage of hardware; the five tasks can then overlap, with up to four of them in progress at once. Super pipelining carries this idea further by dividing each stage into finer sub-stages, so that more tasks are in flight at the same time and the clock can run faster.

4. Pipelining is a technique of decomposing a sequential process into sub-operations, with each sub-process executed in a special dedicated segment that operates concurrently with all other segments. A pipeline is a collection of processing segments through which binary information flows; each segment performs partial processing dictated by the way the task is partitioned. The result obtained from the computation in each segment is transferred to the next segment in the pipeline. The final result is obtained after the data have passed through all segments.
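The five-task, four-subtask example in answer 3 can be quantified with the usual space-time argument, assuming every segment takes exactly one clock cycle and no stalls occur: the first task needs k cycles to fill a k-stage pipeline, and each of the remaining n - 1 tasks completes one cycle after the previous one, so the pipelined time is k + n - 1 cycles versus n * k cycles without overlap. The helper names below are illustrative.

```python
from fractions import Fraction
from typing import List


def pipeline_cycles(n_tasks: int, k_stages: int) -> int:
    """Cycles for n tasks in a k-stage pipeline (one cycle per stage, no stalls)."""
    if n_tasks < 1 or k_stages < 1:
        raise ValueError("need at least one task and one stage")
    return k_stages + n_tasks - 1  # k cycles to fill the pipe, then one result per cycle


def sequential_cycles(n_tasks: int, k_stages: int) -> int:
    """Cycles without overlap: each task runs all k subtasks before the next starts."""
    return n_tasks * k_stages


def speedup(n_tasks: int, k_stages: int) -> Fraction:
    """Ratio of non-pipelined to pipelined cycle counts, kept as an exact fraction."""
    return Fraction(sequential_cycles(n_tasks, k_stages), pipeline_cycles(n_tasks, k_stages))


def space_time_diagram(n_tasks: int, k_stages: int) -> List[str]:
    """One row per stage; entry Tj means task j occupies that stage in that cycle."""
    rows = []
    for stage in range(k_stages):
        cells = []
        for cycle in range(pipeline_cycles(n_tasks, k_stages)):
            task = cycle - stage  # task i enters stage s in cycle i + s
            cells.append(f"T{task + 1}" if 0 <= task < n_tasks else "--")
        rows.append(f"S{stage + 1}: " + " ".join(cells))
    return rows


if __name__ == "__main__":
    for row in space_time_diagram(5, 4):
        print(row)
    print(pipeline_cycles(5, 4), sequential_cycles(5, 4), speedup(5, 4))
```

For n = 5 and k = 4 this gives 8 cycles instead of 20, a speedup of 5/2; as n grows, the speedup approaches k.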

5. Pipelining is a technique of decomposing a sequential process into sub-operations, with each sub-process executed in a special dedicated segment that operates concurrently with all other segments. A pipeline can be visualized as a collection of processing segments through which binary information flows. Each segment performs partial processing, and the result obtained from the computation in each segment is transferred to the next segment in the pipeline. The name implies a flow of information analogous to an industrial assembly line. The simplest way of viewing the pipeline structure is that each segment consists of an input register followed by a combinational circuit. The register holds the data, and the combinational circuit performs the sub-operation in that particular segment. The output of the combinational circuit is applied to the input register of the next segment. A clock is applied to all registers after enough time has elapsed to perform all segment activity.
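The register-plus-combinational-circuit view in answer 5 can be sketched as a clocked simulation. The three-segment computation `a[i] * b[i] + c[i]` and the register names R1 to R5 are assumptions chosen for illustration; the point being shown is that every register loads on the same clock pulse, so each segment works on a different element at once.

```python
from typing import List, Optional


def clocked_pipeline(a: List[int], b: List[int], c: List[int]) -> List[int]:
    """Three-segment pipeline computing a[i] * b[i] + c[i] (hypothetical example).

    Segment 1: R1 <- a[i], R2 <- b[i]        (input registers)
    Segment 2: R3 <- R1 * R2, R4 <- c[i]     (multiplier circuit)
    Segment 3: R5 <- R3 + R4                 (adder circuit)
    """
    if not (len(a) == len(b) == len(c)):
        raise ValueError("a, b and c must have the same length")
    n = len(a)
    r1: Optional[int] = None
    r2: Optional[int] = None
    r3: Optional[int] = None
    r4: Optional[int] = None
    results: List[int] = []
    for clock in range(n + 2):  # k + n - 1 pulses with k = 3 segments
        # Combinational circuits compute from the registers' current contents...
        next_r5 = r3 + r4 if r3 is not None and r4 is not None else None
        next_r3 = r1 * r2 if r1 is not None and r2 is not None else None
        next_r4 = c[clock - 1] if 1 <= clock <= n else None
        next_r1, next_r2 = (a[clock], b[clock]) if clock < n else (None, None)
        # ...then a single clock pulse loads every register at the same time.
        r1, r2, r3, r4 = next_r1, next_r2, next_r3, next_r4
        if next_r5 is not None:
            results.append(next_r5)  # R5 now holds a finished result
    return results


if __name__ == "__main__":
    print(clocked_pipeline([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

Three elements take five clock pulses (k + n - 1 with k = 3), and from the third pulse onward one finished result leaves the last segment on every pulse.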

6. A multiprocessor system has two or more processors, so it can execute more than one process at the same time. The main feature of a multiprocessor system is that main memory and other resources are shared by all processors.

7. Parallel computers provide parallelism, either within a uniprocessor or across multiple processors, to enhance computer performance. Concurrency in a uniprocessor or superscalar processor, through hardware and software implementation, can lead to faster execution of programs. Parallel processing provides simultaneous data processing to increase the computational speed of a computer.

8. Look-ahead, parallelism, and pipelining in computer architecture. Look-ahead techniques were introduced to prefetch instructions so that I/E (instruction fetch/decode and execution) operations overlap, enabling functional parallelism. Functional parallelism was supported by two approaches: one is to use multiple functional units simultaneously, and the other is to practice pipelining at various processing levels.

9. Pipelining is an implementation technique in which multiple instructions are overlapped in execution. The computer pipeline is divided into stages. Each stage completes a part of an instruction, in parallel with the other stages. The stages are connected one to the next to form a pipe: instructions enter at one end, progress through the stages, and exit at the other end.

10. RISC stands for Reduced Instruction Set Computing. RISC machines use simple addressing modes, and because the instruction set is small, the logic for implementing the instructions is simple. RISC machines mostly use a hardwired control unit. CISC stands for Complex Instruction Set Computing. It uses a wide range of instructions, and each instruction can do more work, so programs need fewer instructions. CISC machines use a microprogrammed control unit. Their instructions are closer to high-level language statements, which makes programs easier for human beings to understand.

PART-B

1.
Parallel processing is another method used to improve performance in a computer system: when a system processes two different instructions simultaneously, it is performing parallel processing.

Flynn's classification: Flynn's classification is based on the multiplicity of instruction streams and data streams observed by the CPU during program execution. Let Is and Ds be the minimum number of instruction streams and data streams flowing at any point in the execution; computer organizations can then be categorized as follows:

1) Single Instruction and Single Data stream (SISD)

In this organization, instructions are executed sequentially by one CPU containing a single processing element (PE), i.e., an ALU under one control unit. SISD machines are therefore conventional serial computers that process only one stream of instructions and one stream of data.

2) Single Instruction and Multiple Data stream (SIMD)

In this organization, multiple processing elements work under the control of a single control unit (CU). It has one instruction stream and multiple data streams. All the processing elements of this organization receive the same instruction broadcast from the CU. Main memory can also be divided into modules that act as a distributed memory and generate the multiple data streams. Therefore, all the processing elements simultaneously execute the same instruction and are said to be 'lock-stepped' together. Each processor takes its data from its own memory and hence operates on a distinct data stream. (Some systems also provide a shared global memory for communication.) Every processor must be allowed to complete its instruction before the next instruction is taken for execution; thus, the execution of instructions is synchronous. Examples of SIMD organization are ILLIAC-IV, PEPE, BSP, STARAN, MPP, DAP, and the Connection Machine (CM-1).

3) Multiple Instruction and Single Data stream (MISD)

In this organization, multiple processing elements are organized under the control of multiple control units. Each control unit handles one instruction stream, which is processed through its corresponding processing element, but each processing element processes only a single data stream at a time. Therefore, to handle multiple instruction streams and a single data stream, this classification organizes multiple control units and multiple processing elements together. All processing elements interact with a common shared memory that holds the single data stream. The only known example of a computer capable of MISD operation is the C.mmp built by Carnegie-Mellon University. This classification is not popular in commercial machines, as the concept of a single data stream executing on multiple processors is rarely applied. For specialized applications, however, the MISD organization can be very helpful.
For example, real-time computers need to be fault tolerant, so several processors execute on the same data to produce redundant results. This is also known as N-version programming. The redundant results are compared and should be identical; otherwise, the faulty unit is replaced. Thus, MISD machines can be applied to fault-tolerant real-time computers.

4) Multiple Instruction and Multiple Data stream (MIMD)

In this organization, multiple processing elements and multiple control units are organized as in MISD. The difference is that here multiple instruction streams operate on multiple data streams: multiple control units and multiple processing elements are organized so that the processing elements handle multiple data streams from the main memory. The processors work on their own data with their own instructions. Tasks executed by different processors can start or finish at different times; they are not lock-stepped, as in SIMD computers, but run asynchronously. This is the classification that corresponds to a parallel computer in the true sense: the MIMD organization is what is meant by a parallel computer. All multiprocessor systems fall under this classification.
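To contrast the SIMD, MISD, and MIMD organizations, here is a toy Python sketch in which plain functions stand in for instruction streams and threads stand in for independent processors. The names are hypothetical, and in CPython the threads illustrate independent, asynchronous streams rather than genuine hardware parallelism. The MISD function mirrors the N-version, fault-tolerant example above by taking a majority vote; a SISD machine would simply be an ordinary sequential loop.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

Instruction = Callable[[int], int]  # hypothetical: an "instruction stream" modeled as a function


def simd_step(instruction: Instruction, data: Sequence[int]) -> List[int]:
    """SIMD: one control unit broadcasts the same instruction; every processing
    element applies it to its own data element in lock-step."""
    return [instruction(x) for x in data]


def misd_vote(versions: Sequence[Instruction], datum: int) -> int:
    """MISD-style redundancy (N-version programming): several instruction streams
    process the same datum, and the majority result is accepted."""
    if not versions:
        raise ValueError("need at least one version")
    results = [version(datum) for version in versions]
    value, count = Counter(results).most_common(1)[0]
    if count <= len(results) // 2:
        raise RuntimeError(f"no majority among results {results}: faulty unit suspected")
    return value


def mimd_run(programs: Sequence[Instruction], data: Sequence[int]) -> List[int]:
    """MIMD: each processor runs its own instruction stream on its own data and may
    start or finish at a different time; results are gathered afterwards."""
    with ThreadPoolExecutor(max_workers=max(1, len(programs))) as pool:
        futures = [pool.submit(program, d) for program, d in zip(programs, data)]
        return [f.result() for f in futures]


if __name__ == "__main__":
    print(simd_step(lambda x: x * 2, [1, 2, 3, 4]))
    print(misd_vote([lambda x: x + 1, lambda x: x + 1, lambda x: x + 2], 5))
    print(mimd_run([lambda x: x + 1, lambda x: x * 10], [1, 2]))
```

In the MISD call, the third version disagrees with the other two, so the vote still returns the majority value, which corresponds to outvoting and replacing the faulty unit.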

Вам также может понравиться

- Deutz EMR3 210408 ENG System DescriptionДокумент65 страницDeutz EMR3 210408 ENG System DescriptionMircea Gilca100% (10)

- Parallel Architecture ClassificationДокумент41 страницаParallel Architecture ClassificationAbhishek singh0% (1)

- Operating System 2 Marks and 16 Marks - AnswersДокумент45 страницOperating System 2 Marks and 16 Marks - AnswersDiana Arun75% (4)

- OptiX PTN 960 V100R005C01 Hardware Description 02Документ228 страницOptiX PTN 960 V100R005C01 Hardware Description 02Michael WongОценок пока нет

- Unit 6 - Pipeline, Vector Processing and MultiprocessorsДокумент23 страницыUnit 6 - Pipeline, Vector Processing and MultiprocessorsPiyush KoiralaОценок пока нет

- Unit 5Документ29 страницUnit 5kiran281196Оценок пока нет

- Pipelining in Computer ArchitectureДокумент46 страницPipelining in Computer ArchitectureMag CreationОценок пока нет

- Parallel Computing (Unit5)Документ25 страницParallel Computing (Unit5)Shriniwas YadavОценок пока нет

- Introduction To Parallel ProcessingДокумент11 страницIntroduction To Parallel ProcessingSathish KumarОценок пока нет

- Chapter 08 - Pipeline and Vector ProcessingДокумент14 страницChapter 08 - Pipeline and Vector ProcessingBijay MishraОценок пока нет

- Advanced Computer ArchitectureДокумент28 страницAdvanced Computer ArchitectureSameer KhudeОценок пока нет

- 5 Marks Q. Describe Array Processor ArchitectureДокумент11 страниц5 Marks Q. Describe Array Processor ArchitectureGaurav BiswasОценок пока нет

- COA Chapter 6Документ6 страницCOA Chapter 6Abebe GosuОценок пока нет

- CA Classes-241-245Документ5 страницCA Classes-241-245SrinivasaRaoОценок пока нет

- Ca Unit 4 PrabuДокумент24 страницыCa Unit 4 Prabu6109 Sathish Kumar JОценок пока нет

- MIPS Report FileДокумент17 страницMIPS Report FileAayushiОценок пока нет

- Unit 5-2 COAДокумент52 страницыUnit 5-2 COAmy storiesОценок пока нет

- Aca Univ 2 Mark and 16 MarkДокумент20 страницAca Univ 2 Mark and 16 MarkmenakadevieceОценок пока нет

- Parallelism in Computer ArchitectureДокумент27 страницParallelism in Computer ArchitectureKumarОценок пока нет

- Chapter 9Документ28 страницChapter 9Hiywot yesufОценок пока нет

- Parallel Computig AssignmentДокумент15 страницParallel Computig AssignmentAnkitmauryaОценок пока нет

- CA Classes-76-80Документ5 страницCA Classes-76-80SrinivasaRaoОценок пока нет

- Concept of Pipelining - Computer Architecture Tutorial What Is Pipelining?Документ5 страницConcept of Pipelining - Computer Architecture Tutorial What Is Pipelining?Ayush KumarОценок пока нет

- Parallel Computer Models: PCA Chapter 1Документ61 страницаParallel Computer Models: PCA Chapter 1AYUSH KUMARОценок пока нет

- Parallel Archtecture and ComputingДокумент65 страницParallel Archtecture and ComputingUmesh VatsОценок пока нет

- Operating Systems-1Документ24 страницыOperating Systems-1M Jameel MydeenОценок пока нет

- Unit 3 NotesДокумент9 страницUnit 3 NotesPurvi ChaurasiaОценок пока нет

- COMPUTER ARCHITECTURE - Final - Set2Документ5 страницCOMPUTER ARCHITECTURE - Final - Set2anshika mahajanОценок пока нет

- Computer Architecture Assignment 1Документ5 страницComputer Architecture Assignment 1Etabo Moses KapusОценок пока нет

- COMP3231 80questionsДокумент16 страницCOMP3231 80questionsGenc GashiОценок пока нет

- ACA AssignmentДокумент18 страницACA AssignmentdroidОценок пока нет

- CA Unit IV Notes Part 1 PDFДокумент17 страницCA Unit IV Notes Part 1 PDFaarockiaabins AP - II - CSEОценок пока нет

- Cep 4Документ11 страницCep 4ShivamОценок пока нет

- OS Question Bank CS1252Документ21 страницаOS Question Bank CS1252Nancy PeterОценок пока нет

- Unit 7 - Parallel Processing ParadigmДокумент26 страницUnit 7 - Parallel Processing ParadigmMUHMMAD ZAID KURESHIОценок пока нет

- Swami Vivekananda Institute of Science &: TechnologyДокумент8 страницSwami Vivekananda Institute of Science &: Technologyamit BeraОценок пока нет

- Os-2 Marks-Q& AДокумент21 страницаOs-2 Marks-Q& ARaja MohamedОценок пока нет

- Types of Operating System SchedulersДокумент26 страницTypes of Operating System SchedulersVa SuОценок пока нет

- Ec 6009 - Advanced Computer Architecture 2 MarksДокумент8 страницEc 6009 - Advanced Computer Architecture 2 MarksNS Engg&Tech EEE DEPTОценок пока нет

- Hardware MultithreadingДокумент10 страницHardware MultithreadingFarin KhanОценок пока нет

- ACA Assignment 4Документ16 страницACA Assignment 4shresth choudharyОценок пока нет

- COA MidtermДокумент13 страницCOA MidtermAliaa TarekОценок пока нет

- Chapter 8Документ14 страницChapter 8Gemechis GurmesaОценок пока нет

- Sri Manakula Vinayagar Engineering CollegeДокумент21 страницаSri Manakula Vinayagar Engineering Collegenaveenraj_mtechОценок пока нет

- Computer PerformanceДокумент36 страницComputer PerformancemotazОценок пока нет

- Suyash Os NotesДокумент13 страницSuyash Os Notesakashcreation0199Оценок пока нет

- 3a FlynnsДокумент17 страниц3a FlynnsHitarth Anand RohraОценок пока нет

- 2mark and 16 OperatingSystemsДокумент24 страницы2mark and 16 OperatingSystemsDhanusha Chandrasegar SabarinathОценок пока нет

- Cs6303-Computer Architecture Unit-Iv Parallelism Part A: SvcetДокумент4 страницыCs6303-Computer Architecture Unit-Iv Parallelism Part A: SvcetKingsley SОценок пока нет

- Introduction To Os 2mДокумент10 страницIntroduction To Os 2mHelana AОценок пока нет

- Aos VivaДокумент14 страницAos VivaChaithanya DamerlaОценок пока нет

- 07 - Chapter 1 PDFДокумент27 страниц07 - Chapter 1 PDFنورالدنياОценок пока нет

- Architecture and OrganizationДокумент4 страницыArchitecture and OrganizationNickОценок пока нет

- OS - 2 Marks With AnswersДокумент28 страницOS - 2 Marks With Answerssshyamsaran93Оценок пока нет

- Design of 32-Bit Risc Cpu Based On Mips: Journal of Global Research in Computer ScienceДокумент5 страницDesign of 32-Bit Risc Cpu Based On Mips: Journal of Global Research in Computer ScienceAkanksha Dixit ManodhyaОценок пока нет

- Unit 9: Fundamentals of Parallel ProcessingДокумент16 страницUnit 9: Fundamentals of Parallel ProcessingLoganathan RmОценок пока нет

- PipeliningДокумент13 страницPipeliningCS20B1031 NAGALAPURAM DAATHWINAAGHОценок пока нет

- AssignmentДокумент4 страницыAssignmentMuqaddas PervezОценок пока нет

- 24-25 - Parallel Processing PDFДокумент36 страниц24-25 - Parallel Processing PDFfanna786Оценок пока нет

- Chapter 3-Processes: Mulugeta AДокумент34 страницыChapter 3-Processes: Mulugeta Amerhatsidik melkeОценок пока нет

- Cloud ComputingДокумент27 страницCloud ComputingmanojОценок пока нет

- Operating Systems Interview Questions You'll Most Likely Be AskedОт EverandOperating Systems Interview Questions You'll Most Likely Be AskedОценок пока нет

- VB ControlsДокумент8 страницVB ControlsJennifer PeterОценок пока нет

- Research Methodology Course MaterialДокумент30 страницResearch Methodology Course MaterialJennifer PeterОценок пока нет

- Waterfall ModelДокумент18 страницWaterfall ModelJennifer Peter100% (1)

- Sherwin Chan - RISC ArchitectureДокумент28 страницSherwin Chan - RISC ArchitectureArun Kumar DasariОценок пока нет

- Computer Architecture Unit I - Introduction To Digital Design Part-AДокумент1 страницаComputer Architecture Unit I - Introduction To Digital Design Part-AJenniferОценок пока нет

- Railway Reservation SystemДокумент17 страницRailway Reservation Systemnavmpi50% (2)

- Railway Reservation SystemДокумент53 страницыRailway Reservation SystemJennifer Peter53% (19)

- C C C C C C CДокумент19 страницC C C C C C CJennifer PeterОценок пока нет

- Arduino Part 1: Topics: Microcontrollers Programming Basics: Structure and Variables Digital OutputДокумент192 страницыArduino Part 1: Topics: Microcontrollers Programming Basics: Structure and Variables Digital OutputJenica NavarroОценок пока нет

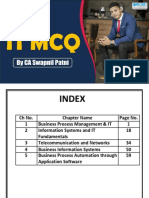

- It MCQДокумент77 страницIt MCQHEMANT PARMARОценок пока нет

- Indiabix Computer Fundamental Section 1-6Документ39 страницIndiabix Computer Fundamental Section 1-6SaimAlHasanОценок пока нет

- Low Power VLSI Circuits and Systems Prof. Ajit Pal Department of Computer Science and Engineering Indian Institute of Technology, KharagpurДокумент22 страницыLow Power VLSI Circuits and Systems Prof. Ajit Pal Department of Computer Science and Engineering Indian Institute of Technology, KharagpurDebashish PalОценок пока нет

- UNIT 5 RISC ArchitectureДокумент16 страницUNIT 5 RISC ArchitectureKESSAVAN.M ECE20Оценок пока нет

- BIC10503 Computer Architecture: Chap 1: IntroductionДокумент35 страницBIC10503 Computer Architecture: Chap 1: IntroductionAfiq DanialОценок пока нет

- Foundations of Computer Science - Chapter 1Документ34 страницыFoundations of Computer Science - Chapter 1Shaun NevilleОценок пока нет

- Computer Organization: Sandeep KumarДокумент117 страницComputer Organization: Sandeep KumarGaurav NОценок пока нет

- 16-05-04-131 Cpe 503 Assignment SolutionsДокумент5 страниц16-05-04-131 Cpe 503 Assignment Solutionssuleman idrisОценок пока нет

- R094 02 PDFДокумент4 страницыR094 02 PDFSergio Eu CaОценок пока нет

- COE 381 Microprocessors - Dr. Boateng v2Документ195 страницCOE 381 Microprocessors - Dr. Boateng v2CArl Simpson LennonОценок пока нет

- H AMACHERДокумент59 страницH AMACHERPushpavalli Mohan0% (1)

- FPGA Based 32 Bit ALU For Automatic Antenna Control Unit SM - Adnan21@Документ5 страницFPGA Based 32 Bit ALU For Automatic Antenna Control Unit SM - Adnan21@Edwin GeraldОценок пока нет

- RISC Vs CISC - The Post-RISC Era - Jon "Hannibal" StokesДокумент26 страницRISC Vs CISC - The Post-RISC Era - Jon "Hannibal" Stokesjlventigan100% (1)

- REF542plus Om 755869 ENgДокумент144 страницыREF542plus Om 755869 ENgcharlnnmdОценок пока нет

- Forouzan 5Документ41 страницаForouzan 5Angelo ViniciusОценок пока нет

- William Stallings Computer Organization and Architecture 7 Edition System BusesДокумент55 страницWilliam Stallings Computer Organization and Architecture 7 Edition System BusespuyОценок пока нет

- Electronic Engineering 23 March 2021Документ33 страницыElectronic Engineering 23 March 2021utkarsh mauryaОценок пока нет

- 8087Документ23 страницы8087nadar uthayakumarОценок пока нет

- Project 12springДокумент5 страницProject 12springFarzin GhotbiОценок пока нет

- About Computer System and Block DaigramДокумент3 страницыAbout Computer System and Block DaigramNaman KОценок пока нет

- LL GC223Документ4 страницыLL GC223petarlОценок пока нет

- C Programing Manual 2015 (Updated)Документ75 страницC Programing Manual 2015 (Updated)Smridhi ChoudhuryОценок пока нет

- COA - Chapter # 3Документ26 страницCOA - Chapter # 3Set EmpОценок пока нет

- Microprocessor: MICROPROCESSOR: Block Diagram and Features Ock Diagram and FeaturesДокумент2 страницыMicroprocessor: MICROPROCESSOR: Block Diagram and Features Ock Diagram and FeaturesYoyo MaitrayОценок пока нет

- Slides Adapted From: Foundations of Computer Science, Behrouz ForouzanДокумент21 страницаSlides Adapted From: Foundations of Computer Science, Behrouz Forouzanahmad alkasajiОценок пока нет

- 2.1.1 Computer Organization AutosavedДокумент15 страниц2.1.1 Computer Organization AutosavedSnigdha KodipyakaОценок пока нет

- Air Brake System Using The Application of Exhaust Gas in Ic EnginesДокумент6 страницAir Brake System Using The Application of Exhaust Gas in Ic EnginesVinayОценок пока нет