Of Two Minds When Making a Decision

We may make snap judgments, or mull things carefully. Why and when do we use the brain systems behind these decision-making styles?

Jun 3, 2008 | By Alan G. Sanfey and Luke J. Chang


One of the more enduring ideas in psychology, dating back to the time of William James a little more than a century ago, is the notion that human behavior is not the product of a single process, but rather reflects the interaction of different specialized subsystems. These systems, the idea goes, usually interact seamlessly to determine behavior, but at times they may compete. The end result is that the brain sometimes argues with itself, as these distinct systems come to different conclusions about what we should do.

The major distinction responsible for these internal disagreements is the one between automatic and controlled processes. System 1 is generally automatic, affective and heuristic-based, which means that it relies on mental shortcuts. It quickly proposes intuitive answers to problems as they arise. System 2, which corresponds closely with controlled processes, is slow, effortful, conscious and rule-based, and can also be employed to monitor the quality of the answer provided by System 1. If it's convinced that our intuition is wrong, then it's capable of correcting or overriding the automatic judgments.

One way to conceptualize these systems is to think of the processes involved in driving a car: the novice needs to rely on controlled processing, requiring focused concentration on a sequence of operations that require mental effort and are easily disrupted by any distractions. In contrast, the well-practiced driver, relying on automatic processes, can carry out the same task efficiently while engaged in other activities (such as chatting with a passenger or tuning in to a radio station). Of course, he or she can always switch to more deliberative processing when necessary, such as in conditions of extreme weather, heavy traffic or mechanical failure.

In terms of decision-making, the description of System 2 bears a close resemblance to the rational, general-purpose processor presupposed by standard economic theory. Although these economic models have provided a strong and unifying foundation for the development of theory about decision-making, several decades of research on these topics has produced a wealth of evidence demonstrating that, in practice, these models do not provide a satisfactory description of actual human behavior. For instance, it has been recognized for several decades that people are more sensitive to losses than to gains, a phenomenon known as loss aversion. This doesn't fit with economic theory, but it appears to be hard-wired into the brain. A major cause of these observed idiosyncrasies of decision-making may be that controlled processing accounts for only part of our overall behavioral repertoire, and in some circumstances can face stiff competition from domain-specific automatic processes that are part of System 1.

One recent compelling demonstration of this phenomenon comes from Princeton University psychologist Adam Alter and colleagues, who examined how subtle changes in contextual cues, such as altering the legibility of a font, can facilitate switching between System 1 and System 2 processing. In a series of clever experiments, the authors manipulated the perceptual fluency of various sets of stimuli. In other words, they made it harder for people to understand or decipher the scenarios they were asked to judge. For example, in one experiment participants were asked a series of questions, known as the Cognitive Reflection Test, designed to assess the degree to which System 1 intuitive processes are engaged in decision-making. In this test the gut-reaction answer is invariably incorrect. (An example: if a bat and a ball together cost $1.10, and the bat costs $1 more than the ball, how much does the ball cost? If you find yourself wanting to shout out 10 cents, then you're in the majority, but sadly also wrong.) Alter et al. found that by simply making the problem more difficult to read (by using a grayed-out, reduced-size font), participants seemed to shift to more considered, System 2 responses, and as a result answered more of the questions correctly.

The authors replicated this effect in various situations. For example, they degraded the byline of the author on a review of an MP3 player. As a result, participants were less influenced by the apparent competence of the reviewer, which would have been based on viewing a picture of him or her, and more by the actual content of the review. In an additional scenario, they asked participants to either furrow their brow or puff their cheeks while assessing statistical information. The former activity is a cue for cognitive effort and as such led to decreased reliance on (incorrect) intuition, and more on dispassionate analytic thinking.

These examples are important for several reasons. Most trivially, they are a good example of the ingenuity of researchers in finding interesting new ways to demonstrate the existence of the two purported systems. More important, however, they begin to address the issue, largely ignored until now, of exactly why and when the various systems are employed in judgments. The work can lead towards more accurate predictions of when the respective systems may be engaged. Finally, the examples illustrated here have the potential to contribute to how these systems may be usefully applied to construct environments that foster more sensible decisions.

In a similar vein, a recent movement in behavioral economics seeks to acknowledge the limitations of everyday decision-making (such as the apparent reluctance of workers to contribute to 401(k) plans) and therefore design institutions in such a way as to encourage better choices (such as introducing default options for retirement savings). Work led by Richard Thaler has demonstrated that, when people are asked to commit to saving money in the distant future (as opposed to right now), they end up making much more economically rational decisions. This is because System 2 seems to be in charge of making decisions that concern the future, while System 1 is more interested in the present moment.

Of course, there are still many outstanding questions regarding the multiple-system model, not least the degree to which these proposed systems actually exist and are truly separable. The welcome integration of neuroscience with traditional experimental psychology has led to some debate about how, and where, exactly, these systems are instantiated in the brain. Although there is a good deal of evidence for some level of dissociation between multiple systems that approximate controlled and automatic processing respectively, with parts of the brain such as areas of frontal cortex (controlled) and limbic regions (automatic) implicated in these processes, it seems highly unlikely that there are dedicated, independent subsystems at the neural level that are specific to these modes of processing. Therefore, one important question is whether the types of systems that have been described at the psychological level are a good analogue for the way information is organized and processed in the brain. Research such as Alter et al.'s work points to the importance of becoming increasingly specific about the situations and conditions that engage these distinct systems, which will prove to be essential in understanding how these multiple systems interact at a neural level.

Вам также может понравиться

- Nickel Experiment XWДокумент4 страницыNickel Experiment XWKhairul Anwar Abd HamidОценок пока нет

- Fire ExplosionДокумент2 страницыFire ExplosionKhairul Anwar Abd HamidОценок пока нет

- Introduction To Process Simulation of Plant DesignДокумент41 страницаIntroduction To Process Simulation of Plant DesignKhairul Anwar Abd HamidОценок пока нет

- Peer Teaching (Individu)Документ11 страницPeer Teaching (Individu)Khairul Anwar Abd HamidОценок пока нет

- 1 s2.0 S0141391014002535 Main PDFДокумент7 страниц1 s2.0 S0141391014002535 Main PDFKhairul Anwar Abd HamidОценок пока нет

- Microsoft Word - OvercomingSpeechAnxietyДокумент2 страницыMicrosoft Word - OvercomingSpeechAnxietyKonie LappinОценок пока нет

- Global and Local Outlook of Hydrogen ProductionДокумент2 страницыGlobal and Local Outlook of Hydrogen ProductionKhairul Anwar Abd HamidОценок пока нет

- Hydrogen ProductionДокумент3 страницыHydrogen ProductionKhairul Anwar Abd HamidОценок пока нет

- Smoking Habits Among Medical Students in A Private Institution - PublishДокумент8 страницSmoking Habits Among Medical Students in A Private Institution - PublishKhairul Anwar Abd HamidОценок пока нет

- Microsoft Word - OvercomingSpeechAnxietyДокумент2 страницыMicrosoft Word - OvercomingSpeechAnxietyKonie LappinОценок пока нет

- Apparatus For ScienceДокумент2 страницыApparatus For ScienceKhairul Anwar Abd HamidОценок пока нет

- Learning Issues Cs2 Dynamic ModelsДокумент4 страницыLearning Issues Cs2 Dynamic ModelsKhairul Anwar Abd HamidОценок пока нет

- Letter of Indemnity for Trainee InternshipДокумент3 страницыLetter of Indemnity for Trainee InternshipAhmad NaqiuddinОценок пока нет

- 1 Kalendar Perancangan 2013 Utm JBДокумент7 страниц1 Kalendar Perancangan 2013 Utm JBKhairul Anwar Abd HamidОценок пока нет

- Course Outline SKF 3323 (Separation 1)Документ5 страницCourse Outline SKF 3323 (Separation 1)Khairul Anwar Abd HamidОценок пока нет

- Course Outline SKF 3323 (Separation 1)Документ5 страницCourse Outline SKF 3323 (Separation 1)Khairul Anwar Abd HamidОценок пока нет

- Letter of Indemnity for Trainee InternshipДокумент3 страницыLetter of Indemnity for Trainee InternshipAhmad NaqiuddinОценок пока нет

- Homework1 SKKK1113 1112-2Документ1 страницаHomework1 SKKK1113 1112-2Khairul Anwar Abd HamidОценок пока нет

- MethodologiesДокумент2 страницыMethodologiesKhairul Anwar Abd HamidОценок пока нет

- Try Test 1Документ1 страницаTry Test 1Khairul Anwar Abd HamidОценок пока нет

- Try Test 1Документ1 страницаTry Test 1Khairul Anwar Abd HamidОценок пока нет

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (119)

- PSY1020 Foundation Psychology Essay Cover SheetДокумент7 страницPSY1020 Foundation Psychology Essay Cover SheetklediОценок пока нет

- Developmental Psychology Theories in ClassroomsДокумент5 страницDevelopmental Psychology Theories in ClassroomskarenОценок пока нет

- B F SkinnerДокумент34 страницыB F Skinnersangha_mitra_2100% (2)

- Smartick Vs KhanДокумент2 страницыSmartick Vs KhanMuhammad BasraОценок пока нет

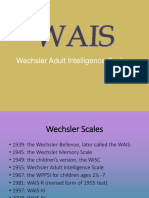

- Wechsler Adult Intelligence ScalesДокумент20 страницWechsler Adult Intelligence ScalesUkhtSameehОценок пока нет

- Theories of LearningДокумент18 страницTheories of Learningzahabia k.bОценок пока нет

- Students Perceptions of Teaching and LearningДокумент15 страницStudents Perceptions of Teaching and Learninganas0lОценок пока нет

- Gregersen Horwitz 2002Документ10 страницGregersen Horwitz 2002Júlia BánságiОценок пока нет

- Introduction To Linguistics Chapter 13 PsychoДокумент5 страницIntroduction To Linguistics Chapter 13 PsychoRantti AnwarОценок пока нет

- JNDДокумент13 страницJNDkuldeepedwa100% (2)

- OK Quiz 5Документ4 страницыOK Quiz 5ChiОценок пока нет

- Disorders of Memory 1Документ26 страницDisorders of Memory 1Sreeraj VsОценок пока нет

- Cognitive Neuroscience: A Very Short Introduction - Richard PassinghamДокумент5 страницCognitive Neuroscience: A Very Short Introduction - Richard Passinghamhefokiba100% (1)

- Deep Learning: A Visual IntroductionДокумент39 страницDeep Learning: A Visual IntroductionLuisОценок пока нет

- Applying Learning Theories and Instructional Design Models For Effective InstructionДокумент23 страницыApplying Learning Theories and Instructional Design Models For Effective InstructionSoren DescartesОценок пока нет

- Jezicki MozakДокумент36 страницJezicki MozakSinisa RisticОценок пока нет

- Classroom Observation Checklist Pre-School/KindergartenДокумент5 страницClassroom Observation Checklist Pre-School/KindergartenathyiraОценок пока нет

- Lesson 3 - Clarity of Learning TargetsДокумент19 страницLesson 3 - Clarity of Learning TargetsMila Gamido100% (4)

- Mixed Method: Guest Ratings and Comments of An Island Resort in Negros Occidental (Lakawon Resort)Документ4 страницыMixed Method: Guest Ratings and Comments of An Island Resort in Negros Occidental (Lakawon Resort)anngelyn legardaОценок пока нет

- Task 5Документ4 страницыTask 5api-307403208Оценок пока нет

- Motivation: Motivation Is The Act of Stimulating Someone or Oneself To Get A Desired Course of ActionДокумент13 страницMotivation: Motivation Is The Act of Stimulating Someone or Oneself To Get A Desired Course of Actionsalini sasiОценок пока нет

- WILLIS The Language Teacher Online 22.07 - Task-Based Learning - What Kind of AdventureДокумент5 страницWILLIS The Language Teacher Online 22.07 - Task-Based Learning - What Kind of AdventurelaukingsОценок пока нет

- Approaches To School CurriculumДокумент31 страницаApproaches To School CurriculumGerald Eledia NeulidОценок пока нет

- MoCA 7.2 ScoringДокумент5 страницMoCA 7.2 Scoringszhou52100% (2)

- Schema Theory HandoutДокумент3 страницыSchema Theory HandoutDinoDemetilloОценок пока нет

- Rainy Day Lesson Plan Edu 330Документ3 страницыRainy Day Lesson Plan Edu 330api-307989771Оценок пока нет

- Magro Gutiérrez, Carrascal Domínguez - 2019 - El Desing Thinking Como Recurso y Metodología para La Alfabetización Visual y El AprendizaДокумент25 страницMagro Gutiérrez, Carrascal Domínguez - 2019 - El Desing Thinking Como Recurso y Metodología para La Alfabetización Visual y El AprendizaJorge TriscaОценок пока нет

- Teaching Language To Children With Autism Developmental DisabilitiesДокумент4 страницыTeaching Language To Children With Autism Developmental DisabilitiesravibhargavaraamОценок пока нет

- Art Psychotherapy-Harriet WadesonДокумент3 страницыArt Psychotherapy-Harriet WadesonfasfasfОценок пока нет

- Sub TopicДокумент1 страницаSub TopicApril AcompaniadoОценок пока нет