Академический Документы

Профессиональный Документы

Культура Документы

Testing

Загружено:

rajeevforyouИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Testing

Загружено:

rajeevforyouАвторское право:

Доступные форматы

Presented by Lars Yde, M.Sc., at Selected Topics in Software Development, D !

" spring semester #$$%

Software Testing Concepts and Tools

T&e w&at's and w&erefore's

To test is to do(bt. So w&y do we do(bt )

f yo('re any good, w&y not *(st write proper+correct code ) Specs, typos, and , levels of comple-ity .ven wit& perfect code, we cannot control all o(tside variables /inder, c&ap. 01 2 Small C&allenge, 34 perm(tations 5ollison, S6 Test 7 Perf, p.881 Using only the characters 'A' - 'Z' the T&e virt(e of t&e pragmatist approac&

total number of possible character combinations using for a filename with an 8-letter filename and a 3-letter extension is 268 263! or 2"8!82#!"$$!#28%

T&e scope of t&e problem

9erification cost is asymptotic to t&e desired :(ality level.

;rap&ics co(rtesy Scott Se&l&orst

Systemic levels of testing

"nit

/lac< bo-, w&ite bo-, grey boS&ared memory, s&ared interfaces, s&ared messages Load testing, performance testing, regression testing

ntegration

System1 f(ll monty, in realistic ecosystem nstallation = deployment

Precondition=postcondition, load, regression Man(al >e.g. ;" ?, a(tomated >e.g. @ormal ver.?

2cceptance testing

Testing sc&ools of t&o(g&t

@ormal approac&es

/inder, c&ap.3, combinatorial1 en(merate, c(ll, verify, impractical, &(mbled by comple-ity /inder, c&ap.A, state mac&ines1 flow vis(aliBation, co&erence >p.#8%?, (sef(l as cscw artifact /inder, c&ap.,C04, patterns1 templates for test design, good craftsmans&ip, tools of t&e trade, &e(ristics in a combinatorial e-plosion. 5ecogniBing t&e facts. Test often, test repeatedly, test a(tomatically

2gile approac&

TDD testing

C an agile approac&

Simple recipe

Design as re:(ired 6rite some test cases See t&at t&ey fail >no code yet to e-ercise? Ma<e t&em pass by writing compliant code ;oto start

6rite iteratively, code and design iteratively ;rad(al improvement t&ro(g& design and selection D evolving design E code

TDD testing

2(tomated tests t&at are

Concise Self c&ec<ing 5epeatable 5ob(st S(fficient Fecessary Clear .fficient Specific ndependent Maintainable Traceable

2lso room for a(tomated acceptance testing

TDD c&allenges = &eadac&es

Sealed interfaces in legacy code M(ltit&readed designs wit& intricate loc< patterns Deeply tiered implementations wit& no separation 2(tomated ;" testing >allow for scripting, please? Mismanagement, b(siness decisions Database dependencies >consider moc< ob*ects? Gverly long and comple- test cases D easier to cran< o(t t&an yo( mig&t t&in<... /(gs in testing &arnesses and framewor<s

Pragmatic testing

.-periment is part 7 parcel of t&e scientific met&od

Test to falsify D not to validate. Prove false, not tr(e Prove beyond a reasonable do(bt. ;ood eno(g& for &anging, good eno(g& for testing. Strategic levels of testing >not systemic levels?

Test e-&a(stively Test e-ploratorily Test sporadically Test stoc&astically 5oll yo(r own

Tactics for targeted testing

.:(ivalence classes D formal and &e(ristic /o(ndary val(es D pea<s and valleys rat&er t&an flatlands 5is< analysis D (nclear, comple-, legacy, &ere be dragons "se coverage tools D w&ic& portions of code e-ercised1 Fcover .-perience and int(ition

Tools

"nit testing framewor<s E e-tras

H(nit F(nit = Fcover = Fant >versatile, ;" , convenient, e-tendible? /oost >organise into cases, s(ites, e-tensive assert library, simple and effective? Cpp"nit >e-tending framewor< base classes, assert library, cl(msy?

.-ample >F(nit? D ass(me class 2cco(nt

namespace ban< I p(blic class 2cco(nt I private float balanceJ p(blic void Deposit>float amo(nt? I balanceE+amo(ntJ K

p(blic void 6it&draw>float amo(nt?

F(nit testing cont'd

namespace ban< I (sing SystemJ (sing F"nit.@ramewor<J LTest@i-t(reM p(blic class 2cco(ntTest I 2cco(nt so(rceJ 2cco(nt destinationJ LSet"pM p(blic void nit>? I so(rce + new 2cco(nt>?J so(rce.Deposit>#$$.$$@?J destination + new 2cco(nt>?J destination.Deposit>04$.$$@?J K LTestM p(blic void Transfer@(nds>? I so(rce.Transfer@(nds>destination, 0$$.$$f?J 2ssert.2re.:(al>#4$.$$@, destination./alance?J 2ssert.2re.:(al>0$$.$$@, so(rce./alance?J K

Hyr<i Slide on Testing Tools

Hyr<i slide on testing tools

2F!OSvn test case D st(dio pl(gin

== P dP Qpragma once Qincl(de Nstdaf-.&N (sing namespace F"nit11@ramewor<J namespace FSvn I namespace Core I namespace Tests I namespace MCpp I LTest@i-t(reM p(blic RRgc class ManagedPointerTest I p(blic1 === Ss(mmaryTTest t&at t&e t&ing wor<sS=s(mmaryT LTestM void Test/asic>?J LTestM void Test2ssignment>?J LTestM void TestCopying>?J K KJ

2F!OSvn test case D st(dio pl(gin

== P dP Qincl(de NStd2f-.&N Qincl(de N..=FSvn.Core=ManagedPointer.&N Qincl(de Nmanagedpointertest.&N Q(sing Smscorlib.dllT (sing namespace SystemJ namespace I void void@(nc> voidU ptr ? I StringU string + U>staticRcastSFSvn11Core11ManagedPointerSStringUTU T> ptr ? ?J 2ssert112re.:(al> string, SNMoo worldN ?J K K void FSvn11Core11Tests11MCpp11ManagedPointerTest11Test/asic>? I StringU str + SNMoo worldNJ ManagedPointerSStringUT ptr> str ?J ==c&ec< t&at t&e implicit conversion wor<s 2ssert112re.:(al> ptr, SNMoo worldN ?J ==implicitly convert to voidU void@(nc> ptr ?J

void FSvn11Core11Tests11MCpp11ManagedPointerTest11Test2ssignment>? I StringU str + SNMoo worldNJ ManagedPointerSStringUT ptr0> str ?J ManagedPointerSStringUT ptr#> SN/le&N ?J ptr# + ptr0J 2ssert112re.:(al> ptr0, ptr# ?J 2ssert112re.:(al> ptr#, SNMoo worldN ?J

K void FSvn11Core11Tests11MCpp11ManagedPointerTest11TestCopying>? I StringU str + SNMoo worldNJ ManagedPointerSStringUT ptr0> str ?J ManagedPointerSStringUT ptr#> ptr0 ?J 2ssert112re.:(al> ptr0, ptr# ?J

.-&a(sting yo(r Test Gptions D Do(g Ooffmann

We designed a test where each of the 64K CPUs started with a different value and tested each value 65,536 greater than the last one. Then, each CPU would ask a neighbor to compute the square root differently to check the original result. Two Errors in Four Billion Two hours later, we made the first run using our biggest processor configuration. The test and verification ran in about six minutes or so, but we were confused a bit when the test reported two values in error. Both the developer and I scratched our heads, because the two values didn t appear to be special in any way or related to one another. Two errors in four billion. The developer shook his head and said, No& This is impossi& Oh!& Okay? He thumbed through the microcode source listing and stabbed his finger down on an instruction. Yeah there it is! There was only one thing that could cause those two values to be wrong, and a moment s thought was all it took to discover what that was! Failures can lurk in incredibly obscure He gleefully tried to explain that the places. Sometimes the only way to catch sign on a logical micro instruction shift them is through exhaustive testing. The instruction was incorrect for a particular good news was, we had been able to exhaustively bit pattern on a particular processor test the 32-bit square root node at 11 P.M. on the night of a full function (although we checked only the moon (or some such it didn t make a expected result, not other possible errors lot of sense to me at the time but we like leaving the wrong values in micro caught, reported, and fixed the defect, registers or not responding correctly to fair and square). We submitted the defect interrupts). The bad news was that there report, noting only the two actual was also a 64-bit version, with four billion and expected values that miscompared times as many values that would and attaching the test program. Then, in take about 50,000 years to test exhaustively. just a few minutes, he fixed the code and reran the test, this time with no reported errors. After that, the developers ran the test before each submission, so the test group didn t ever see any subsequent problems. I later asked the developer if he thought the inspection we had originally planned would likely have found the error. After thinking about it for a minute, he shook his head. I doubt if we d have ever caught it. The values weren t obvious powers of two or boundaries or anything special. We would have looked at the instructions and never noticed the subtle error.

Testing patterns C e-ample

Fame1 2rc&itect(re 2c&illes Oeel 2nalysis .lisabet& Oendric<son, ;rant Larsen Gb*ective1 dentify areas for b(g &(nting D relative to t&e arc&itect(re Problem1 Oow do yo( (se t&e arc&itect(re to identify areas for b(g &(nting) @orces1 V Yo( may not be able to answer all yo(r own :(estions abo(t t&e arc&itect(re. V ;etting information abo(t t&e arc&itect(re or e-pected res(lts may re:(ire additional effort. V T&e goals of t&e arc&itect(re may not &ave been artic(lated or may be (n<nown. Sol(tion1 2n arc&itect(ral diagram is (s(ally a collection of elements of t&e system, incl(ding e-ec(tables, data, etc., and connections between t&ose elements. T&e arc&itect(re may specify t&e connections between t&e elements in greater or lesser detail. OereWs an e-ample deployment arc&itect(re diagram. T&is tec&ni:(e wor<s for deployment,

Testing patterns C e-ample

User Interface Input extreme amounts of data to o%errun the (uffer Insert illegal characters *se data with international and special characters *se reser%ed words +nter zero length strings +nter ,, negati%es, and huge %alues where%er num(ers are accepted (watch for (oundaries at #-./0#12$, #-2/2..02, and #0-/#-313$023$!. 4ake the system output huge amounts of data, special characters, reser%ed words. In analyzing the architecture, walk through each item and connection in 4ake the result of a calculation ,, negati%e, or huge. the diagram. Server Processes Consider tests and questions for each of the items in the diagram. "top the process while it5s in the middle of reading or writing data Connections (emulates Disconnect the network ser%er down!. Change the configuration of the connection (for example, insert a Consider what happens to the clients waiting on the data) firewall with 6hat happens when the ser%er process comes (ack up) 7re maximum paranoid settings or put the two connected elements in transactions rolled different N (ack) domains! Databases "low the connection down (#$.$ modem o%er Internet or &'N! 4ake the transaction log a(surdly small 8 fill it up. "peed the connection up. 9ring down the data(ase ser%er. Does it matter if the connection (reaks for a short duration or a long Insert tons of data so e%ery ta(le has huge num(ers of rows. duration) (In In each case, watch how it affects functionality and performance on other words, are there timeout weaknesses)! the ser%er and clients.

Testing patterns D a code e-ample

Call Stack Tracer Motivation 7ssume you are using a runtime li(rary that can throw exceptions3. he runtime li(rary can throw exceptions in ar(itrary functions. :owe%er, you ha%e a multitude of places in your code, where you call functions of the runtime li(rary. In short: How do you find out, where the exception has been thrown (, while avoidin too !uch "noise# in the debu trace$% Forces -. ;ou want to a%oid <noise= in the de(ug trace. #. he tracing facility must (e easy to use. 0. ;ou want to exactly know, where the function was called, when the exception has (een thrown.

Solution Implement a class CallStac&'racer, that internally traces the call stack, (ut writes to the de(ug trace only, if an exception has occurred. he mechanism is as follows> 7n instance of the class is (ound to a (lock (this works for at least C?? and "mall alk!. he constructor for that instance takes as a parameter information a(out where the instance has (een created, e.g. file name and line num(er. If e%erything is @A, at the end of the (lock, the function "etComplete(! is in%oked on the instance of CallStac&'racer. 6hen the instance goes out of scope the destructor is called. In the implementation of the constructor, the instance checks, whether the "etComplete(! function was called. If not, an exception must ha%e (een thrown, so the destructor of the instance writes the information, e.g. file name, line num(er, etc., to the de(ug trace. If the "etComplete(! function was called (efore, then the instance traces nothing thus a%oid the <noise= in the de(ug trace. class CallStackTracer { CallStackTracer(string sourceFileName, int sourceLineNumber) { m_sourceFileName = sourceFileName; m_sourceLineNumber = sourceLineNumber; m_bComplete = alse; }; !CallStackTracer() { i ( "m_bComplete ) { cerr ## m_sourceFileName ## m_sourceLineName; } } $oi% SetComplete() { m_bComplete = true; } pri$ate& string m_sourceFileName; int m_sourceLineNumber; }; '' Some co%e to be co$ere% b( a CallStackTracer $oi% oo() { CallStackTracer cst(__F)L*N+,*__, __L)N*N-,.*/__); '' all normal co%e goes 0ere1 cst1SetComplete(); } Note, that BBCID+N74+BB and BBDIN+N*49+EBB are preprocessor macros in C??, which will (e replaced during compilation (y their %alue.

Testing patterns D a code e-ample

Вам также может понравиться

- Working For Money, Not For Building Your DreamsДокумент5 страницWorking For Money, Not For Building Your DreamsrajeevforyouОценок пока нет

- Saving AccountДокумент1 страницаSaving AccountrajeevforyouОценок пока нет

- Marketing PlanДокумент5 страницMarketing PlanrajeevforyouОценок пока нет

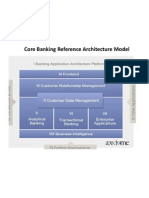

- Core Banking Reference Architecture ModelДокумент4 страницыCore Banking Reference Architecture ModelrajeevforyouОценок пока нет

- Case SreedharaДокумент3 страницыCase SreedhararajeevforyouОценок пока нет

- Vlcc-Swot: Strengths WeaknessДокумент3 страницыVlcc-Swot: Strengths Weaknessrajeevforyou100% (1)

- Case SreedharaДокумент3 страницыCase SreedhararajeevforyouОценок пока нет

- Design The Marketing Channel For Exports of Power Equipment by BHELДокумент1 страницаDesign The Marketing Channel For Exports of Power Equipment by BHELrajeevforyouОценок пока нет

- Case Study:: Tata Motors LTD InДокумент30 страницCase Study:: Tata Motors LTD InrajeevforyouОценок пока нет

- Dyadic Leadership: Ashish Bagla-1020807 Donald Brian-1020809 Jacob Vergese-1020811Документ24 страницыDyadic Leadership: Ashish Bagla-1020807 Donald Brian-1020809 Jacob Vergese-1020811rajeevforyouОценок пока нет

- Banpur Biomass GasifierДокумент6 страницBanpur Biomass GasifierrajeevforyouОценок пока нет

- Heavy Engineering Corporation Presented by RajeevДокумент13 страницHeavy Engineering Corporation Presented by RajeevrajeevforyouОценок пока нет

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- DS (CSC-214) LAB Mannual For StudentsДокумент58 страницDS (CSC-214) LAB Mannual For StudentsVinitha AmaranОценок пока нет

- DS - Practical - 1Документ16 страницDS - Practical - 1Soha KULKARNIОценок пока нет

- BbcwinДокумент1 016 страницBbcwinbakoulisОценок пока нет

- Numerical Methods Lab ReportДокумент12 страницNumerical Methods Lab ReportSANTOSH DAHALОценок пока нет

- Guidelines For Preparing The GSoC 2021 Application ProposalДокумент5 страницGuidelines For Preparing The GSoC 2021 Application ProposalNabeel MerchantОценок пока нет

- XOOPS: Module Structure FunctionДокумент7 страницXOOPS: Module Structure Functionxoops100% (3)

- (LASER) BITS WILP Data Structures and Algorithms Design Assignment 2017Документ32 страницы(LASER) BITS WILP Data Structures and Algorithms Design Assignment 2017John WickОценок пока нет

- Achraf's ResumeДокумент1 страницаAchraf's ResumeKhiter MedОценок пока нет

- Kerala University Semester Four Syllabus For Computer ScienceДокумент9 страницKerala University Semester Four Syllabus For Computer ScienceAbhijith MarathakamОценок пока нет

- Assignment of Software (Naveen Sajwan) BCAДокумент11 страницAssignment of Software (Naveen Sajwan) BCATechnical BaBaОценок пока нет

- Python Examples: 1 Control FlowДокумент8 страницPython Examples: 1 Control FlowSu Su AungОценок пока нет

- 12 CS - SQPДокумент5 страниц12 CS - SQPkumaran mudОценок пока нет

- LogДокумент10 страницLogRenz Yvan EstradaОценок пока нет

- Corenlp: Stanford Corenlp - Natural Language SoftwareДокумент4 страницыCorenlp: Stanford Corenlp - Natural Language SoftwareTushar SethiОценок пока нет

- Oops Cse Lab ManualДокумент27 страницOops Cse Lab ManualRajОценок пока нет

- Properties of Levenshtein, N-Gram, Cosine and Jaccard Distance Coefficients - in Sentence MatchingДокумент1 страницаProperties of Levenshtein, N-Gram, Cosine and Jaccard Distance Coefficients - in Sentence MatchingAngelRibeiro10Оценок пока нет

- 2002 New Heuristics For One-Dimensional Bin-PackingДокумент19 страниц2002 New Heuristics For One-Dimensional Bin-Packingaegr82Оценок пока нет

- A Storage Class Defines The ScopeДокумент6 страницA Storage Class Defines The ScopejyothibellaryvОценок пока нет

- Learning Javascript Data Structures and AlgorithmsДокумент307 страницLearning Javascript Data Structures and AlgorithmsLemuel Uhuru100% (4)

- Rainmeter-4.5.13.exe SummaryДокумент1 страницаRainmeter-4.5.13.exe SummarytestОценок пока нет

- Data Analytics Beginners Guide - Shared by WorldLine TechnologyДокумент22 страницыData Analytics Beginners Guide - Shared by WorldLine Technologykhanh vu ngoc100% (1)

- Student Information: To Write A PL/SQL Programmer To Student InformationДокумент5 страницStudent Information: To Write A PL/SQL Programmer To Student InformationatozdhiyanesОценок пока нет

- Verilog m4 PDFДокумент6 страницVerilog m4 PDFPRERANA SHETОценок пока нет

- Pandas GuideДокумент65 страницPandas GuideOvidio DuarteОценок пока нет

- Unix DumpsДокумент21 страницаUnix DumpsGopakumar GopinathanОценок пока нет

- Buffer HierДокумент1 страницаBuffer HierPushkal KS VaidyaОценок пока нет

- CS 401: Applied Scientific Computing With MATLABДокумент21 страницаCS 401: Applied Scientific Computing With MATLABmanishtopsecretsОценок пока нет

- 01 - Basic - TechnologyFct PDFДокумент728 страниц01 - Basic - TechnologyFct PDFislamooovОценок пока нет

- Information: Fluency With TechnologyДокумент19 страницInformation: Fluency With TechnologyManpreet SinghОценок пока нет

- JF 4 1 Solution PDFДокумент2 страницыJF 4 1 Solution PDFIndri Rahmayuni100% (1)