Академический Документы

Профессиональный Документы

Культура Документы

Calibrating Colorimeters For Display Measurements

Загружено:

JeransОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Calibrating Colorimeters For Display Measurements

Загружено:

JeransАвторское право:

Доступные форматы

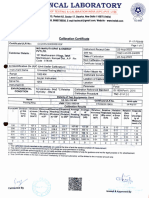

Article manuscript: Information Display 12/99 pp.

30-34

Calibrating Colorimeters for Display Measurements

S. W. Brown, Y. Zong and Y. Ohno

How red is red? Many display applications have stringent requirements for color accuracy where precision is critical, yet measuring that accuracy is more difficult than you might think. Medical and avionics displays must have reliable color to convey essential information. Color management systems must have accurate color data in order to perform effectively. The manufacturers who develop these products and the people responsible for purchasing them typically rely on results from commercial color measuring instruments: both colorimeters and spectroradiometers. In many cases, results from these instruments are used to accept or reject display products without much attention paid to the accuracy of their measurements. If the colorimetric measurements of these displays are inaccurate, a user may accept an inadequate product or reject a perfectly good one. In order to make effective use of any instrument, you need to know the measurement uncertainty, and this is true for colorimetry. Only then can you tell how well your colormeasurement system is performing, and whether or not it can do the job you need it to do. There is an increased appreciation of this issue in military and commercial fields; many applications now include requirements for the uncertainties for display color measurements. For example, some military specifications require expanded uncertainties (k =2) of 0.004 in chromaticity (x,y) for test equipment, while uncertainties of 0.005 or less in x,y are recommended in international standards for color measurements of cathode ray tubes (CRT) and liquid crystal displays (LCD). An expanded uncertainty of k =2 means that your measurements will agree with the true value to within the stated value 95.4 % of the time. Instrument manufacturers typically calibrate their colorimeters. Product data sheets routinely specify uncertainty values on the order of 0.002 in x,y, and 2% in luminance (Y). A note of caution: these values may be misleading, in particular for display measurements. They are often based on measurements of an incandescent source with a color temperature of approximately 2856 K. These sources approximate the spectral power distribution of the Commission Internationale de lEclairage (CIE) Standard

Illuminant A, which is a common standard source used in both photometry and colorimetry. There are problems with using just this type of source. If you measure an artifact with the same spectral distribution as the calibration source, the uncertainty may appear to be small. As long as you use the instrument to measure sources with spectral power distributions similar to that of Illuminant A, you could expect to get accurate results. However, we typically want to measure the color and luminance of displays having spectral distributions that are quite different from Illuminant A. Comparative testing shows that the accuracy of color measuring instruments can vary significantly, depending on the spectra of the colors measured. In the case of colorimeters utilizing three or four broadband detectors called "tristimulus colorimeters" the relative spectral responsivities of the individual channels to the CIE color matching functions (x-bar, y-bar, and z-bar) may not be matched well. This results in errors when the device is used to measure different spectral distributions. In the case of array spectroradiometers, a number of factors can contribute to the accuracy of color measurements, including stray light, wavelength scale errors, and the linearity of the detector array. No matter what type of device is used, the error analyses are complex and the uncertainties of color measuring instruments for various displays and for different colors are often not well known. So even if an instrument is calibrated accurately against an incandescent source, we dont know how well the instrument can subsequently measure the chromaticity and luminance of display colors. Empirical evidence demonstrates how significant this problem can be. We took a number of colorimeters and measured the chromaticity of various colors of a display. We found that inter-instrument variations in the measurements were as large as 0.01 in x,y and 10% in Y, depending on the color and type of display being measured. These variations are an order of magnitude larger than expected from the manufacturers specified calibration uncertainties, and imply that the uncertainty of some of the commercial instruments are at this level for display color measurements. Uncertainties in color measurements on this order are too large for many current commercial, industrial, and military applications. In many applications, calibration approaches relying solely on incandescent sources are not adequate to meet current needs in display measurements. The National Institute of Standards and Technology (NIST) has addressed this problem by developing a calibration facility in Gaithersburg, Maryland, for colorimeters tailored to display measurements.

During a calibration, test instruments colorimeters or spectroradiometers are calibrated in direct comparison with a reference instrument while measuring various colors of actual displays. Upon calibration, a colorimeter can be used to measure any color of the display with a known uncertainty in chromaticity and luminance.

NIST Calibration Facility

Both source-based and detector-based methods are commonly used as transfer standards in calibrations. Unfortunately, displays are not colorimetrically stable and reproducible enough over long time periods to be used as a source for transfer standards. As a result, we have employed a detector-based calibration strategy. The detector-based scheme starts with a reference instrument with known characteristics. This reference instrument and the instrument to be calibrated are used to measure various colors of a particular display. The differences in results determine the errors of a test instrument. Once these errors are known, they can be corrected when the test instrument is subsequently used. In general, the errors in both chromaticity and luminance vary depending on the color of the display. A lookup table for the errors with different colors would be difficult to maintain. We chose a more manageable approach, deriving a correction matrix for the test instrument based on measured errors. This matrix makes it possible to correct for any color of a particular display, transferring the calibration from the NIST reference instrument to the test instrument. In order for this strategy to work reliably, three cornerstones must be in place. We require a stable, well-characterized reference detector to be used for the display measurements, an effective matrix correction technique, and a display that is colorimetrically stable over the course of a typical calibration measurement sequence. The NIST Reference Instrument The reference spectroradiometer consists of imaging optics, a double-grating scanning monochromator for wavelength selection, and a photomultiplier tube (PMT) (Fig. 1). The output of the PMT is sent to a digital voltmeter and the voltage is recorded as a function of wavelength by a computer. The input optics include a depolarizer and ordersorting filters. The depolarizer reduces the polarization sensitivity of the instrument to less than 1% while order-sorting filters placed in front of the spectrometer entrance slits eliminate higher order grating diffraction effects. This design results in a reference instrument with suitable characteristics (Table 1). It can measure CRT colors with an expanded uncertainty of approximately 0.001 in chromaticity and 1% in luminance.

Parameter Wavelength uncertainty Slit scattering function Stray light factor Polarization Sensitivity Random Noise

Value 0.1 nm 5 0.1 nm; triangular < 2 x 10-6 < 1% < 0.2% of the peak signal

Table 1: Reference spectroradiometer characteristics. The Four-Color Matrix Correction Method

The second cornerstone is an effective correction matrix technique that you can use to improve the accuracy of tristimulus colorimeters for color display measurements. The first step is to measure the differences in results from the reference instrument and the instrument being tested, when both measure various colors of a display. This data can then be used to create a three-by-three correction matrix. The correction matrix can then be used to transform the test instruments tristimulus values to more closely approximate the reference values. The corrected values from the test instrument are thus expressed as linear combinations of the uncorrected values. The trick here is to create a correction matrix that accurately transforms the values. Several different approaches have been developed to derive the correction matrix. For example, the American Society of Testing and Materials (ASTM) recommends a method that minimizes the root-mean-square (RMS) difference between measured and reference tristimulus values for several different colors of a display. The chromaticity values are then calculated from the corrected tristimulus values. However, this method does not always work as well as expected. The ASTM method is based on the transformation of tristimulus values. It is therefore susceptible to luminance errors arising from display instabilities, and inconsistent alignment of the instruments that affect the accuracy of the corrected chromaticity values. In contrast, measurements of chromaticity values are normally more stable and reproducible than measurements of tristimulus values. They are relative measurements and many of the sources of luminance error tend to be reduced or canceled. Consequently, a matrix correction method the Four-Color Method was developed that reduces the difference between measured and reference chromaticity values rather than tristimulus values. Because the new method is based on chromaticity values, it is 4

insensitive to luminance errors and consequently tends to work better than previous methods. A comparison of correction by various matrix methods demonstrates the differences in residual errors after correction (Fig. 2). In a test with ten colors of a display, the FourColor Method reduced the corrected RMS chromaticity differences more than the other techniques. Matrix correction techniques assume that the spectral distributions of the three primary colors do not change. However, there are small variations in primary spectra between displays incorporating the same type of phosphors and, frequently, large variations between displays incorporating different types of phosphors. The Four-Color Method works well (within 0.001 in x,y) for small spectral variations in the primary colors within the same types of displays, but is not effective if there are large variations between the measured display and the display used in the derivation of the correction matrix. A calibrated instrument can therefore measure any number of different displays utilizing similar phosphors to the display used for the calibration, but a separate correction matrix should be obtained for each different type of display.

Calibration Results

At NIST, we calibrated five disparate commercial instruments including tristimulus colorimeters and array spectroradiometers for a CRT against the NIST reference instrument. We used a broadcast-quality color CRT as the calibration source. This display demonstrated negligible chromaticity changes over the course of a comparison measurement, which took about 30 minutes. The five test instruments and the reference instrument measured ten colors of the CRT, and the chromaticity differences between the NIST reference instrument and the test instruments were recorded (Fig. 3). We observed significant differences in the measured chromaticity values between the different units, with some instruments agreeing with the reference instrument to within 0.002 in x,y for all colors measured, and other instruments disagreeing by greater than 0.005 for certain colors. This is perhaps to be expected since these instruments varied greatly in design and complexity, and as a result, also in price. In general, they were all high-quality instruments; we would expect even larger differences with lower grade instruments. Using the primary color measurements and white, we derived the correction matrix for each instrument using the Four-Color Method. We then applied the corrections to the ten colors measured, and calculated the residual chromaticity differences between the NIST reference instrument and the calibrated test instruments (Fig. 4) The results provide a dramatic illustration of this calibration methods effectiveness.

We obtained similar results for the luminance measurements, with the calibrated instruments agreeing much better with the reference instrument results for four out of the five instruments. In the one exception, the difference in luminance measurements between the test and the reference instruments was not reduced upon application of the correction matrix. This result underscores that when experimental noise or luminance errors are significantly large, the Four-Color Method is not the best suitable for luminance correction. We know that we can measure various colors of the CRT with the reference instrument with an expanded uncertainty of 0.0012 or less in x,y and that the calibrated instrument agrees with the reference instrument to within 0.001 in x,y. Therefore, the expanded uncertainty of chromaticity measurements of the CRT using the calibrated instrument taking the maximum uncertainty in each case and taking the root-sum-square of the uncertainties is 0.0015 in x,y or less. Taking into account the repeatability of the test instrument as well, the expanded uncertainty of chromaticity measurements of a CRT using a NIST-calibrated instrument should be on the order of be 0.002 or less. Our results demonstrate that this calibration procedure can be used to correct measurements from different colorimeters, using a reference instrument for comparison, even when those test instruments produce large differences in uncalibrated measurements for the same display colors. This calibration can bring the expanded uncertainty for these devices to 0.002 in x,y or less well within the limits required for general test equipment and those recommended for international standards. The NIST facility will offer official calibration services using this technique early next year. As a result, display manufacturers and users will be able to know if the red on the screen is truly red.

Acknowledgments

We would like to thank Joe Velasquez and John Grangaard for useful discussions. This work was supported by the U. S. Air Force under CCG contract 97-422. Author Bios: Steven W. Brown is a Physicist, Yuqin Zong is a Guest Researcher, and Yoshi Ohno is a Physicist in the Optical Technology Division at the National Institute of Standards and Technology, 100 Bureau Drive, Gaithersburg, MD 20899. All correspondence should be addressed to Yoshi Ohno at 301-975-2321, or by email at ohno@nist.gov.

PMT Exit Slit Mirror Slit Drive Center Slit Grating Relay Lens Depolarizer Wavelength Drive

Grating Aperture Filter Wheel Entrance Slit

Figure 1. Schematic diagram of the NIST reference spectroradiometer.

0.010 0.008 0.006 0.004 0.002 y 0.000 x x y

ASTM96 Data Set

Colorimeter Experiment

Figure 2. Root-mean-square differences in chromaticy for ten CRT colors, after correction by various matrix methods.

0.006 0.004 0.002

x y

0 -0.002 -0.004 -0.006 -0.008 0.015 0.01 0.005 0 -0.005 -0.01

FULL WHITE

MAGENTA

YELLOW

GREEN

BLUE

RED

CYAN

COLOR 8

COLOR 9

Colors

Figure 3. Uncorrected chromaticity differences between five test instruments and the NIST reference instrument for ten colors of a CRT display.

COLOR 10

0.008 0.006 0.004

x y

0.002 0 -0.002 -0.004 -0.006 -0.008 0.015 0.01 0.005 0 -0.005 -0.01

FULL WHITE

MAGENTA

YELLOW

RED

GREEN

BLUE

COLOR 8

COLOR 9

CYAN

Colors

Figure 4. Residual chromaticity differences between five calibrated test instruments and the NIST reference instrument for ten colors of a CRT display. 10

COLOR 10

Вам также может понравиться

- 3D Optical Scanner Dimensional VerificatДокумент10 страниц3D Optical Scanner Dimensional VerificatVolca CmmОценок пока нет

- Colorimetric CalibrationДокумент16 страницColorimetric CalibrationRoberto RicciОценок пока нет

- Purpose of CalibrationДокумент2 страницыPurpose of Calibrationsakata_abera4Оценок пока нет

- Force Calibration Results of Force Tranducers According ISO 376Документ64 страницыForce Calibration Results of Force Tranducers According ISO 376Hi Tech Calibration ServicesОценок пока нет

- Microphone and Sound Level Meter Calibration in FR PDFДокумент7 страницMicrophone and Sound Level Meter Calibration in FR PDFumair2kplus492Оценок пока нет

- Use and Calibration of SpectrophotometerДокумент11 страницUse and Calibration of SpectrophotometerTinashe W MangwandaОценок пока нет

- Fluke - Counter - CalДокумент2 страницыFluke - Counter - CalpolancomarquezОценок пока нет

- Power Quality Analysis Calibration CertificateДокумент3 страницыPower Quality Analysis Calibration Certificateradha krishnaОценок пока нет

- ..Percentage Oxygen Analyzer Calibration ProcedureДокумент5 страниц..Percentage Oxygen Analyzer Calibration ProcedureVignesh KanagarajОценок пока нет

- Nabl News Letter 40Документ40 страницNabl News Letter 40Bala MuruОценок пока нет

- U 926 Muffle FurnaceДокумент11 страницU 926 Muffle FurnaceAmit KumarОценок пока нет

- (A Division of Testing & Calibration India (Opc) Pvt. LTD)Документ1 страница(A Division of Testing & Calibration India (Opc) Pvt. LTD)santanushee8Оценок пока нет

- Thermocouple Cable CatalogueДокумент12 страницThermocouple Cable CatalogueIrfan AshrafОценок пока нет

- Lab3 LVDT CalibrationДокумент14 страницLab3 LVDT CalibrationSaurabhОценок пока нет

- Nist TN 1900 PDFДокумент105 страницNist TN 1900 PDFAllan RosenОценок пока нет

- Gas CalibrationДокумент6 страницGas CalibrationSatiah WahabОценок пока нет

- Calibrating ThermometersДокумент7 страницCalibrating ThermometersAlexander MartinezОценок пока нет

- HUIDELIN NDT LTD Leeb Hardness Tester Calibration CertificateДокумент1 страницаHUIDELIN NDT LTD Leeb Hardness Tester Calibration Certificateশেখ আতিফ আসলামОценок пока нет

- NABL 122-05 W.E.F. 01.01.16-Specific Criteria For Calibration Laboratories in Mechanical Discipline - Density and Viscosity Measurement PDFДокумент36 страницNABL 122-05 W.E.F. 01.01.16-Specific Criteria For Calibration Laboratories in Mechanical Discipline - Density and Viscosity Measurement PDFzilangamba_s4535Оценок пока нет

- CIE Standards For Assessing Quality of Light SourcДокумент12 страницCIE Standards For Assessing Quality of Light SourcCamilo Andres Ardila OrtizОценок пока нет

- Magnetic Field IndicatorsДокумент2 страницыMagnetic Field IndicatorsdantegimenezОценок пока нет

- Jeep 112Документ7 страницJeep 112Ishan LakhwaniОценок пока нет

- Hydrometer Calibration by Hydrostatic Weighing With Automated Liquid Surface PositioningДокумент15 страницHydrometer Calibration by Hydrostatic Weighing With Automated Liquid Surface PositioningSebastián MorgadoОценок пока нет

- How To Convert Thermocouple Milivolts To TemperatureДокумент9 страницHow To Convert Thermocouple Milivolts To TemperaturesakthiОценок пока нет

- Infrared Thermometer Calibration - A Complete Guide: Application NoteДокумент6 страницInfrared Thermometer Calibration - A Complete Guide: Application NoteSomkiat K. DonОценок пока нет

- Sanas TR 79-03Документ9 страницSanas TR 79-03Hi Tech Calibration ServicesОценок пока нет

- SH750 Cross Hatch Cutter 2017 v1Документ2 страницыSH750 Cross Hatch Cutter 2017 v1abualamalОценок пока нет

- A New Ultrasonic Flow Metering Technique Using Two Sing-Around Paths, Along With The Criticism of The Disadvantages Inherent in Conventional Ultrasonic Flow Metering Transducers PDFДокумент10 страницA New Ultrasonic Flow Metering Technique Using Two Sing-Around Paths, Along With The Criticism of The Disadvantages Inherent in Conventional Ultrasonic Flow Metering Transducers PDFvitor_pedroОценок пока нет

- SOP For UV-Vis SpectrophotometerДокумент7 страницSOP For UV-Vis SpectrophotometerArchana PatraОценок пока нет

- Colorimetry: Understanding the CIE SystemОт EverandColorimetry: Understanding the CIE SystemJanos SchandaОценок пока нет

- Is 15575 2 2005 PDFДокумент42 страницыIs 15575 2 2005 PDFHVHBVОценок пока нет

- Miller 210 Contact Resistance Test SetДокумент1 страницаMiller 210 Contact Resistance Test SetjorgemichelaОценок пока нет

- HumedadДокумент49 страницHumedadCarlos Jose Sibaja CardozoОценок пока нет

- Lab1 Straightness Composite Lab 2 PDFДокумент9 страницLab1 Straightness Composite Lab 2 PDFawad91Оценок пока нет

- Water Level Calibration EZДокумент3 страницыWater Level Calibration EZHarry Wart WartОценок пока нет

- Uncertinity QuestionsДокумент19 страницUncertinity QuestionsShaikh Usman AiОценок пока нет

- MSL Technical Guide 2 Infrared Thermometry Ice Point: Introduction and ScopeДокумент2 страницыMSL Technical Guide 2 Infrared Thermometry Ice Point: Introduction and ScopeEgemet SatisОценок пока нет

- Calculating Correlated Color Temperatures Across The Entire Gamut of Daylight and Skylight ChromaticitiesДокумент7 страницCalculating Correlated Color Temperatures Across The Entire Gamut of Daylight and Skylight ChromaticitiesdeejjjaaaaОценок пока нет

- Color MeasurementДокумент2 страницыColor Measurementmanox007Оценок пока нет

- Specifying and Verifying The Performance of Color-Measuring InstrumentsДокумент11 страницSpecifying and Verifying The Performance of Color-Measuring InstrumentsEnriqueVeОценок пока нет

- MetrologyДокумент157 страницMetrologyVishwajit HegdeОценок пока нет

- Microscope CalibrationДокумент5 страницMicroscope CalibrationInuyashayahooОценок пока нет

- Theoretical Uncertainty of Orifice Flow Measurement 172KBДокумент7 страницTheoretical Uncertainty of Orifice Flow Measurement 172KBSatya Sai Babu YeletiОценок пока нет

- Calibration of Radiation Thermometers below Silver PointДокумент41 страницаCalibration of Radiation Thermometers below Silver PointebalideОценок пока нет

- A Study On Verification of SphygmomanometersДокумент3 страницыA Study On Verification of SphygmomanometerssujudОценок пока нет

- Iec 60068.2.48-82Документ15 страницIec 60068.2.48-82Antonio SanchezОценок пока нет

- EURAMET Cg-7 V 1.0 Calibration of Oscilloscopes 01Документ45 страницEURAMET Cg-7 V 1.0 Calibration of Oscilloscopes 01libijahansОценок пока нет

- Chapter 1 - Introduction and Basic ConceptsДокумент8 страницChapter 1 - Introduction and Basic ConceptsYeevön LeeОценок пока нет

- Fluorescent Light Source Color ChartsДокумент1 страницаFluorescent Light Source Color Chartsninadpujara2007Оценок пока нет

- Procedure For Calibration and Installation of InstrumentsДокумент62 страницыProcedure For Calibration and Installation of InstrumentsAbdul SammadОценок пока нет

- ISO 21501-4 Calibratio of Air Particle Counters From A Metrology Perpective PDFДокумент8 страницISO 21501-4 Calibratio of Air Particle Counters From A Metrology Perpective PDFManuel TorresОценок пока нет

- Calibrate Measuring Tape CertificateДокумент2 страницыCalibrate Measuring Tape CertificateKiranОценок пока нет

- Metrology: ME3190 Machine Tools and MetrologyДокумент64 страницыMetrology: ME3190 Machine Tools and MetrologySujit MuleОценок пока нет

- Case Study On Rapid PrototypingДокумент7 страницCase Study On Rapid PrototypingSachin KumbharОценок пока нет

- Mettler pj3000 manual download guideДокумент3 страницыMettler pj3000 manual download guideMehrdad AkbarifardОценок пока нет

- Uncertainty Budget TemplateДокумент4 страницыUncertainty Budget TemplateshahazadОценок пока нет

- Calibration Report / Certificate:: Qamr Qamv Qave Qals QPCT TQ76TДокумент3 страницыCalibration Report / Certificate:: Qamr Qamv Qave Qals QPCT TQ76TsrinimullapudiОценок пока нет

- Optical Measuring - Field Surveying With FARO Laser Tracker VantageДокумент1 страницаOptical Measuring - Field Surveying With FARO Laser Tracker VantageKОценок пока нет

- Introduction to Spectrophotometer for Fabric Color AnalysisДокумент3 страницыIntroduction to Spectrophotometer for Fabric Color AnalysisRakibul HasanОценок пока нет

- Instruments For Color Measurement - 72Документ11 страницInstruments For Color Measurement - 72Abhishek GuptaОценок пока нет

- Damn LiesДокумент10 страницDamn LiesJeransОценок пока нет

- Poynton Barsotti 3DLUT PDFДокумент8 страницPoynton Barsotti 3DLUT PDFJeransОценок пока нет

- Presence and Television: The Role of Screen SizeДокумент24 страницыPresence and Television: The Role of Screen SizeJeransОценок пока нет

- Windows 8 Resource GuideДокумент1 страницаWindows 8 Resource GuideJeransОценок пока нет

- Why Should YOU Calibrate PDFДокумент8 страницWhy Should YOU Calibrate PDFJeransОценок пока нет

- Not Plants or AnimalsДокумент10 страницNot Plants or AnimalsarcyriaОценок пока нет

- Image Resolution of 35mm Film in Theatrical PresentationДокумент10 страницImage Resolution of 35mm Film in Theatrical PresentationJeransОценок пока нет

- Time & Group Delay in Loudspeaker SystemsДокумент9 страницTime & Group Delay in Loudspeaker SystemsJeransОценок пока нет

- High Performance Diaphragm MaterialsДокумент5 страницHigh Performance Diaphragm MaterialsJeransОценок пока нет

- Visual Acuity Standards 1Документ18 страницVisual Acuity Standards 1Sandi MotmotОценок пока нет

- A Pixel Is Not A Little SquareДокумент11 страницA Pixel Is Not A Little SquareGreg SlepakОценок пока нет

- Linkwitz On DipolesДокумент32 страницыLinkwitz On DipolesJeransОценок пока нет

- B&W Nautilus DevelopmentДокумент50 страницB&W Nautilus DevelopmentJeransОценок пока нет

- Room Reflections MisunderstoodДокумент17 страницRoom Reflections MisunderstoodJeransОценок пока нет

- Display Measurement and EvaluationДокумент33 страницыDisplay Measurement and EvaluationJeransОценок пока нет

- All About Decibels, What's Your DB IQДокумент14 страницAll About Decibels, What's Your DB IQJeransОценок пока нет

- A New Loudspeaker Technology - Parametric Acoustic ModellingДокумент8 страницA New Loudspeaker Technology - Parametric Acoustic ModellingJerans100% (1)

- Video Calibration From The Inside - Volume I - 2nd EditionДокумент54 страницыVideo Calibration From The Inside - Volume I - 2nd EditionJeransОценок пока нет

- IST98.1 NIST Reference Spectroradiometer For Color Display CalibrationsДокумент4 страницыIST98.1 NIST Reference Spectroradiometer For Color Display CalibrationsJeransОценок пока нет

- Electronic Display Metrology - Not A Simple MatterДокумент10 страницElectronic Display Metrology - Not A Simple MatterJeransОценок пока нет

- The Relationship Among Video Quality, Screen Resolution, and Bit Rate - IEEE11Документ5 страницThe Relationship Among Video Quality, Screen Resolution, and Bit Rate - IEEE11JeransОценок пока нет

- Acoustics of Small RoomsДокумент44 страницыAcoustics of Small RoomseskilerdenОценок пока нет

- The Expression of Uncertainty and Confidence in Measurement (Edition 3, November 2012)Документ82 страницыThe Expression of Uncertainty and Confidence in Measurement (Edition 3, November 2012)JeransОценок пока нет

- CIE Color Space ExplainedДокумент29 страницCIE Color Space ExplainedJeransОценок пока нет

- B&W 800 Development PaperДокумент22 страницыB&W 800 Development PaperJeransОценок пока нет

- The Future Arrives For Four Clean Energy TechnologiesДокумент14 страницThe Future Arrives For Four Clean Energy TechnologiesJeransОценок пока нет

- IAEEE Journal reviews H.264 video coding standardДокумент10 страницIAEEE Journal reviews H.264 video coding standardJeransОценок пока нет

- Understanding TFT-LCD TechnologyДокумент11 страницUnderstanding TFT-LCD TechnologyAnonymous VWlCr439Оценок пока нет

- Physics 1 Pretest ReviewДокумент11 страницPhysics 1 Pretest ReviewKaren Talastas100% (1)

- ARMA Model Selection via Adaptive LassoДокумент9 страницARMA Model Selection via Adaptive LassoDAVI SOUSAОценок пока нет

- Thirishali ReportДокумент119 страницThirishali Reportthirishali karthikОценок пока нет

- New Light On Space and Time by Dewey B LarsonДокумент258 страницNew Light On Space and Time by Dewey B LarsonJason Verbelli100% (6)

- Review ExercisesДокумент18 страницReview ExercisesFgj JhgОценок пока нет

- Skittles Project 1Документ7 страницSkittles Project 1api-299868669100% (2)

- StataДокумент14 страницStataDiego RodriguezОценок пока нет

- Chapter 3 Morgan Integrating Qualitative and Quantitative Methods 2 PDFДокумент18 страницChapter 3 Morgan Integrating Qualitative and Quantitative Methods 2 PDFcsskwxОценок пока нет

- Leok 9Документ2 страницыLeok 9Ali RumyОценок пока нет

- IELTS Preparation Materials With2Документ312 страницIELTS Preparation Materials With2navdeep kaurОценок пока нет

- VGU Probability Study GuideДокумент22 страницыVGU Probability Study GuideAn Huynh Nguyen HoaiОценок пока нет

- Causal Comparative Research DefinedДокумент2 страницыCausal Comparative Research DefinedFitri handayaniОценок пока нет

- Business Analytics: Probability ModelingДокумент58 страницBusiness Analytics: Probability Modelingthe city of lightОценок пока нет

- Food Engineering Laboratory 2021Документ2 страницыFood Engineering Laboratory 2021Kamran AlamОценок пока нет

- Advertising in Context of ': A Project Report ONДокумент68 страницAdvertising in Context of ': A Project Report ONRahul Pansuriya100% (1)

- X Is Variable: Equal Marks and Figures Statistical PermitedДокумент4 страницыX Is Variable: Equal Marks and Figures Statistical PermitedTaio VamosОценок пока нет

- Prediksi Kelulusan Mahasiswa Menggunakan Algoritma Naive Bayes (Studi Kasus 5 PTS Di Banda Aceh)Документ5 страницPrediksi Kelulusan Mahasiswa Menggunakan Algoritma Naive Bayes (Studi Kasus 5 PTS Di Banda Aceh)Jurnal JTIK (Jurnal Teknologi Informasi dan Komunikasi)Оценок пока нет

- Robust Nonparametric Statistical MethodsДокумент505 страницRobust Nonparametric Statistical MethodsPetronilo JamachitОценок пока нет

- Advance Research in Textile EngineeringДокумент4 страницыAdvance Research in Textile EngineeringAustin Publishing GroupОценок пока нет

- Solomon Press S1GДокумент4 страницыSolomon Press S1GnmanОценок пока нет

- Analytical WritingДокумент4 страницыAnalytical Writingcellestial_knightОценок пока нет

- Chapter 8Документ30 страницChapter 8Handika's PutraОценок пока нет

- HPLC SOP for Chromatographic SeparationДокумент3 страницыHPLC SOP for Chromatographic SeparationRainMan75Оценок пока нет

- Introduction To SPSS and Epi-InfoДокумент129 страницIntroduction To SPSS and Epi-Infoamin ahmed0% (1)

- 6220010Документ37 страниц6220010Huma AshrafОценок пока нет

- BPS 1 - Ashlyn LaningДокумент2 страницыBPS 1 - Ashlyn Laningashlyn laningОценок пока нет

- Statistics and Probability: Quarter 4 - Week 4Документ8 страницStatistics and Probability: Quarter 4 - Week 4Elijah CondeОценок пока нет

- Math408 Incourse AssignmentДокумент2 страницыMath408 Incourse AssignmentMD. MAJIBUL HASAN IMRANОценок пока нет

- Statistical Analysis of Data With Report WritingДокумент16 страницStatistical Analysis of Data With Report WritingUsmansiddiq1100% (1)

- Time Series With EViews PDFДокумент37 страницTime Series With EViews PDFashishankurОценок пока нет