Error Correcting Codes


Hamid Mohammadi

Communication II

08/04/2014

Overview

As long as the information transfer rate over the channel is within the channel capacity, it is possible to construct a code such that the error probability can be made arbitrarily small.

Costs of increased reliability:

1- lower information transfer rate

2- more complex code

Types of errors (caused by distortion, noise, and interference)

Single-bit errors

Mostly in parallel communication (for example, corruption of one of the eight bits being sent)

Burst errors

Mostly in serial communication (the number of corrupted bits depends on the duration of the noise and on the data transfer rate)
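As a rough illustration of that dependence, the number of consecutive bits exposed to a noise burst is about the noise duration times the bit rate. The helper name and the 10 ms burst on 1 kbit/s and 1 Mbit/s links are illustrative assumptions, not values from the slides.

```python
def burst_length_bits(noise_duration_s: float, bit_rate_bps: float) -> int:
    """Approximate number of consecutive bits a noise burst can corrupt."""
    # Every bit transmitted while the noise lasts is exposed to it.
    return round(noise_duration_s * bit_rate_bps)

# The same 10 ms burst hits far more bits on a faster serial link.
print(burst_length_bits(0.01, 1_000))      # 10
print(burst_length_bits(0.01, 1_000_000))  # 10000
```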

The key to achieving error-free digital communication in the presence of noise is the addition of appropriate redundancy to the original data bits.

Block codes

Every block of k data digits is converted into a longer block of n digits (n > k).

Convolutional codes

The coded sequence of n digits depends not only on the k data digits but also on the previous (N - 1) data digits (the encoder has memory).

Shannon's Theorem

Shannon's noisy-channel coding theorem

For a noisy channel with capacity C, there exists a code of rate R < C such that:

Code length n: the number of bits in a codeword

Data digits: k

Check digits: m = n - k, n > k

Code rate: R = k/n

Such a code is called an (n, k) code.

The higher the number of check bits m (and hence the more reliable the code), the lower the information rate R.
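To see the trade-off numerically, the sketch below (hypothetical helper `code_parameters`) keeps k = 4 data digits fixed and adds more check digits; the (5,4) and (12,4) codes are illustrative choices, not codes from the slides.

```python
from fractions import Fraction

def code_parameters(n: int, k: int) -> tuple[int, Fraction]:
    """Return (m, R) for an (n, k) block code: m = n - k check digits, R = k/n."""
    if not 0 < k < n:
        raise ValueError("an (n, k) block code needs 0 < k < n")
    return n - k, Fraction(k, n)

# Same number of data digits, increasing number of check digits.
for n, k in [(5, 4), (7, 4), (12, 4)]:
    m, rate = code_parameters(n, k)
    print(f"({n},{k}) code: m = {m}, R = {rate}")
# (5,4) code: m = 1, R = 4/5
# (7,4) code: m = 3, R = 4/7
# (12,4) code: m = 8, R = 1/3
```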

Data digits $(d_1, d_2, d_3, \ldots, d_k)$: k-digit vector

Codeword $(c_1, c_2, c_3, \ldots, c_n)$: n-digit vector

Number of errors we want to detect or correct: t

Total number of n-digit words: $2^n$

Total number of data words (and hence of codewords): $2^k$

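We want to transmit $d_j$ using codeword $c_j$. If noise changes $c_j$ into a received word $c'_j$ with t errors or fewer, then $c'_j$ will lie somewhere inside the Hamming sphere of radius t centered at $c_j$.

In other words, if the received word lies inside the Hamming sphere of radius t centered at $c_j$, we decide that the transmitted codeword was $c_j$. This decision is unambiguous only if the spheres around different codewords do not overlap.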
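The decision rule above can be sketched as follows. This is a minimal illustration, not the slides' own procedure: words are bit strings, the helper names are hypothetical, and the example codebook is the (4,1) repetition code discussed below, with t = 1.

```python
from typing import Optional

def hamming_distance(a: str, b: str) -> int:
    """Number of positions in which two equal-length bit strings differ."""
    if len(a) != len(b):
        raise ValueError("words must have the same length")
    return sum(x != y for x, y in zip(a, b))

def sphere_decode(received: str, codebook: list[str], t: int) -> Optional[str]:
    """Return the codeword whose Hamming sphere of radius t contains `received`.

    Assumes the spheres do not overlap, so at most one codeword qualifies.
    Returns None when the word lies outside every sphere (more than t errors).
    """
    for c in codebook:
        if hamming_distance(received, c) <= t:
            return c
    return None

codebook = ["0000", "1111"]                   # (4,1) repetition code
print(sphere_decode("0100", codebook, t=1))   # 0000
print(sphere_decode("1101", codebook, t=1))   # 1111
print(sphere_decode("0110", codebook, t=1))   # None (two errors)
```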

Hamming distance

The Hamming distance between two codewords is the number of bit positions in which they differ.

Equivalently, it is the number of edges in a shortest path between the two vertices of the n-dimensional hypercube that correspond to the codewords.
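Both descriptions can be checked against each other with a short sketch: count differing bits with XOR, and separately run a breadth-first search on the n-cube, where each edge flips one bit. Words are stored as Python integers; the function names are illustrative.

```python
from collections import deque

def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which a and b differ (ones in a XOR b)."""
    return bin(a ^ b).count("1")

def hypercube_path_length(a: int, b: int, n: int) -> int:
    """Shortest path from a to b on the n-cube, found by breadth-first search.

    Vertices are n-bit words; an edge joins two words differing in one bit.
    """
    dist = {a: 0}
    queue = deque([a])
    while queue:
        v = queue.popleft()
        if v == b:
            return dist[v]
        for i in range(n):
            w = v ^ (1 << i)          # flip bit i: move along one edge
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    raise ValueError("b is not an n-bit word")

# The two measures agree for every pair of 3-bit words (the ordinary cube).
n = 3
assert all(hamming_distance(a, b) == hypercube_path_length(a, b, n)
           for a in range(2 ** n) for b in range(2 ** n))
print(hamming_distance(0b000, 0b111))  # 3
```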

The minimum distance $d_{min}$ of a code is the smallest distance between any two distinct codewords.

For the (3,2) parity check code, $d_{min} = 2$.

The (4,1) repetition code (consisting of codewords 0000 and 1111) has minimum distance 4, so it can correct any single error while still detecting any two errors.

In general, a code with minimum distance $d_{min}$ can detect up to $d_{min} - 1$ errors and can correct up to $t = \lfloor (d_{min} - 1)/2 \rfloor$ errors; equivalently, correcting t errors requires $d_{min} \ge 2t + 1$.
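A brute-force sketch of these rules for the two codes just mentioned. The (3,2) codewords assume even parity; `capability` gives the detection limit when the code is used only for detection, and the correction limit when it is used for correction.

```python
from itertools import combinations

def hamming_distance(a: str, b: str) -> int:
    return sum(x != y for x, y in zip(a, b))

def minimum_distance(code: list[str]) -> int:
    """Smallest Hamming distance between any two distinct codewords."""
    return min(hamming_distance(a, b) for a, b in combinations(code, 2))

def capability(d: int) -> tuple[int, int]:
    """(errors detectable, errors correctable) for minimum distance d."""
    return d - 1, (d - 1) // 2

parity_32 = ["000", "011", "101", "110"]   # (3,2) even-parity code (assumed even)
repetition_41 = ["0000", "1111"]           # (4,1) repetition code

for name, code in [("(3,2) parity", parity_32), ("(4,1) repetition", repetition_41)]:
    d = minimum_distance(code)
    detect, correct = capability(d)
    print(f"{name}: d = {d}, detects {detect}, corrects {correct}")
# (3,2) parity: d = 2, detects 1, corrects 0
# (4,1) repetition: d = 4, detects 3, corrects 1
```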

PARITY CHECK CODES

Example of a parity-check block code: the parity bit used in RS232 serial communication
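A minimal single-parity-check sketch, assuming even parity and 7 data bits per character. RS232 framing details (start and stop bits, the choice of odd or even parity) are not modeled, and the function names are illustrative.

```python
def add_even_parity(bits: list[int]) -> list[int]:
    """Append one check bit so that the total number of 1s is even."""
    return bits + [sum(bits) % 2]

def parity_ok(word: list[int]) -> bool:
    """An even-parity word passes the check iff its number of 1s is even."""
    return sum(word) % 2 == 0

data = [1, 0, 1, 1, 0, 0, 1]   # 7 data bits, e.g. one ASCII character
word = add_even_parity(data)   # (8,7) parity-check codeword
print(word, parity_ok(word))   # [1, 0, 1, 1, 0, 0, 1, 0] True

word[2] ^= 1                   # a single-bit error is detected ...
print(parity_ok(word))         # False
word[5] ^= 1                   # ... but a second error cancels it out
print(parity_ok(word))         # True
```

Because $d_{min} = 2$ for such a code, it detects any odd number of errors but cannot correct any.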

(n, k) block codes

Information rate: R = k/n

So, the higher the number of check bits (and hence the more reliable the code), the lower the information rate.

The problem is now to devise a code that maximizes both reliability and information rate while still allowing detection of transmission errors.

Hamming Bound

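The number of ways in which up to t errors can occur in an n-digit word is $\sum_{j=1}^{t} \binom{n}{j}$.

Thus for each codeword we must leave $\sum_{j=1}^{t} \binom{n}{j}$ vertices unused.

We have $2^k$ codewords, so we must leave a total of $2^k \sum_{j=1}^{t} \binom{n}{j}$ words unused.

Therefore the total number of words must be at least:

$2^k + 2^k \sum_{j=1}^{t} \binom{n}{j} = 2^k \sum_{j=0}^{t} \binom{n}{j}$

But the total number of words available is $2^n$.

So we require:

$2^n \ge 2^k \sum_{j=0}^{t} \binom{n}{j}$, or equivalently $2^{n-k} \ge \sum_{j=0}^{t} \binom{n}{j}$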
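The bound is easy to evaluate for concrete parameters. This sketch (illustrative function names) checks the necessary condition and whether it holds with equality; the (7,4) and (6,3) codes appear later in these slides, while (7,4) with t = 2 is included only as a case that fails.

```python
from math import comb

def hamming_bound_holds(n: int, k: int, t: int) -> bool:
    """Necessary condition for t-error correction: 2**(n-k) >= sum_{j=0}^{t} C(n, j)."""
    return 2 ** (n - k) >= sum(comb(n, j) for j in range(t + 1))

def is_perfect(n: int, k: int, t: int) -> bool:
    """True when the bound is met with equality (a perfect code)."""
    return 2 ** (n - k) == sum(comb(n, j) for j in range(t + 1))

print(hamming_bound_holds(7, 4, 1), is_perfect(7, 4, 1))  # True True
print(hamming_bound_holds(6, 3, 1), is_perfect(6, 3, 1))  # True False
print(hamming_bound_holds(7, 4, 2))                       # False
```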

The Hamming bound is a necessary but not sufficient condition.

For single-error-correcting codes, however, it is also sufficient.

Hamming Codes

A code for which this inequality becomes an equality is known as a perfect code.

Binary, single-error-correcting, perfect codes are called Hamming codes.

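With t = 1, $d_{min} = 2t + 1 = 3$.

From Eq. (15.3b), $2^{n-k} \ge \sum_{j=0}^{t} \binom{n}{j}$; setting t = 1 and m = n - k gives $2^m \ge 1 + n$. A perfect code meets this with equality, $2^m = 1 + n$, or $n = 2^m - 1$.

So Hamming codes are (n, k) codes with $n = 2^m - 1$ and $d_{min} = 3$.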
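Listing the first few members of the family follows directly from $n = 2^m - 1$ and $k = n - m$; the helper name is illustrative.

```python
def hamming_parameters(m: int) -> tuple[int, int]:
    """(n, k) of the binary Hamming code with m check digits: n = 2**m - 1."""
    if m < 2:
        raise ValueError("m must be at least 2")
    n = 2 ** m - 1
    return n, n - m

for m in range(2, 6):
    n, k = hamming_parameters(m)
    print(f"m = {m}: ({n}, {k}) code, R = {k}/{n}")
# m = 2: (3, 1) code, R = 1/3
# m = 3: (7, 4) code, R = 4/7
# m = 4: (15, 11) code, R = 11/15
# m = 5: (31, 26) code, R = 26/31
```

The rate approaches 1 as m grows, while every member still corrects exactly one error.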

Example: (7, 4) systematic Hamming code

$$ d\,P = [0\;1\;1\;0] \begin{bmatrix} 1 & 1 & 1 \\ 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \end{bmatrix} = [1\;1\;0] = [P_1\;P_2\;P_3] = c_p $$
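The same mod-2 product in code, using the P matrix of this example. The sketch also encodes all 16 data words and confirms that the smallest nonzero codeword weight is 3, which for a linear code equals $d_{min}$. Function names are illustrative.

```python
from itertools import product

# Parity submatrix P of the (7,4) systematic Hamming code in the example.
P = [[1, 1, 1],
     [0, 1, 1],
     [1, 0, 1],
     [1, 1, 0]]

def parity_digits(d: list[int], P: list[list[int]]) -> list[int]:
    """c_p = dP over GF(2): each check digit is a mod-2 sum of data digits."""
    return [sum(d[i] * P[i][j] for i in range(len(d))) % 2 for j in range(len(P[0]))]

def encode(d: list[int], P: list[list[int]]) -> list[int]:
    """Systematic codeword: the data digits followed by the check digits."""
    return d + parity_digits(d, P)

print(parity_digits([0, 1, 1, 0], P))  # [1, 1, 0]
print(encode([0, 1, 1, 0], P))         # [0, 1, 1, 0, 1, 1, 0]

# For a linear code, d_min equals the smallest weight of a nonzero codeword.
codewords = [encode(list(d), P) for d in product([0, 1], repeat=4)]
print(min(sum(c) for c in codewords if any(c)))  # 3
```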

Linear Block Codes

$c = (c_1, c_2, c_3, \ldots, c_k, \ldots, c_n)$: n-digit vector

$d = (d_1, d_2, d_3, \ldots, d_k)$: k-digit vector

$c_1 = d_1,\; c_2 = d_2,\; \ldots,\; c_k = d_k$

The remaining digits $c_{k+1}$ to $c_n$ are linear combinations of $d_1, d_2, \ldots, d_k$.

Systematic code:

The leading k digits of each codeword are the data digits, and the remaining m = n - k digits are parity-check digits formed by linear combinations of the data digits.

Generator matrix: $G = [I_k \;\; P]$, so that $c = dG$ (mod 2)

Note that the distance between any two codewords is at least 3. Since $d_{min} = 2t + 1$, the code can therefore correct any single error (t = 1).
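The generator matrix for this example did not survive extraction, so the sketch below assumes a (6,3) parity submatrix P. Only its first row is implied by the slides (data 100 gives codeword 100101 in the decoding example); the other two rows are a hypothetical choice. The code builds $G = [I_k \;\; P]$, encodes every data word as $c = dG$, and checks that $d_{min} = 3$.

```python
from itertools import product

# Hypothetical (6,3) parity submatrix: row 1 matches data 100 -> codeword 100101,
# rows 2 and 3 are assumed for illustration.
P = [[1, 0, 1],
     [0, 1, 1],
     [1, 1, 0]]
k, m = len(P), len(P[0])

# Generator matrix G = [I_k | P]
G = [[1 if i == j else 0 for j in range(k)] + P[i] for i in range(k)]

def encode(d: list[int]) -> list[int]:
    """c = dG over GF(2)."""
    return [sum(d[i] * G[i][j] for i in range(k)) % 2 for j in range(k + m)]

codewords = [encode(list(d)) for d in product([0, 1], repeat=k)]
for c in codewords:
    print("".join(map(str, c)))

# For a linear code, d_min equals the smallest weight of a nonzero codeword.
d_min = min(sum(c) for c in codewords if any(c))
print("d_min =", d_min, "-> corrects", (d_min - 1) // 2, "error(s)")
```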

Linear Block Codes, Decoding

$c_p = d\,P$ (15.8)

Parity check matrix: $H = [P^T \;\; I_m]$

So every codeword must satisfy Eq. (15.10a):

$c\,H^T = 0$

Assume the received word r contains an error, so that $r = c \oplus e$ with:

r = 1 0 1 1 0 1, c = 1 0 0 1 0 1, e = 0 0 1 0 0 0

Syndrome: $s = r\,H^T$

In case of an error, s will no longer be zero (unless the error pattern is itself a codeword).
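A sketch of the syndrome computation for the example above. It assumes the same hypothetical (6,3) parity submatrix P (first row consistent with c = 100101; the rest assumed) and builds $H^T = [P;\, I_m]$, so that every codeword has zero syndrome.

```python
# Assumed (6,3) parity submatrix; only the first row is implied by c = 100101.
P = [[1, 0, 1],
     [0, 1, 1],
     [1, 1, 0]]
k, m = len(P), len(P[0])

# H^T = [P ; I_m] (n x m), so that c H^T = 0 for every codeword c = [d, dP].
H_T = P + [[1 if i == j else 0 for j in range(m)] for i in range(m)]

def syndrome(word: list[int]) -> list[int]:
    """s = word . H^T over GF(2)."""
    return [sum(word[i] * H_T[i][j] for i in range(k + m)) % 2 for j in range(m)]

c = [1, 0, 0, 1, 0, 1]
e = [0, 0, 1, 0, 0, 0]
r = [ci ^ ei for ci, ei in zip(c, e)]   # r = c XOR e = 101101

print(syndrome(c))  # [0, 0, 0]  codeword: zero syndrome
print(syndrome(r))  # [1, 1, 0]  error present: nonzero syndrome
print(syndrome(e))  # [1, 1, 0]  same as s, since s = eH^T
```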

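Because $r = c \oplus e_i$ and $c\,H^T = 0$, the syndrome depends only on the error pattern:

$s = e_i H^T$

We want to find $e_i$, but solving this equation will not give a unique answer: it is satisfied by $2^k$ error vectors ($e_i$ added to each of the $2^k$ codewords).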
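A common way to resolve this ambiguity is syndrome-table decoding: for each syndrome, keep the lowest-weight error vector that produces it, since few errors are more likely than many. The sketch below is that standard technique, not necessarily the procedure on the slides that follow (their content did not survive extraction), and it again assumes the hypothetical (6,3) parity submatrix P.

```python
from itertools import combinations

P = [[1, 0, 1],
     [0, 1, 1],
     [1, 1, 0]]   # assumed (6,3) parity submatrix; row 1 matches c = 100101
k, m = len(P), len(P[0])
n = k + m
H_T = P + [[1 if i == j else 0 for j in range(m)] for i in range(m)]

def syndrome(word: list[int]) -> tuple[int, ...]:
    """s = word . H^T over GF(2), as a tuple so it can be a dict key."""
    return tuple(sum(word[i] * H_T[i][j] for i in range(n)) % 2 for j in range(m))

# Syndrome table: for each of the 2**m syndromes keep the lowest-weight error
# pattern that produces it (ties broken by enumeration order).
table: dict[tuple[int, ...], list[int]] = {}
for weight in range(n + 1):
    for positions in combinations(range(n), weight):
        e = [1 if i in positions else 0 for i in range(n)]
        table.setdefault(syndrome(e), e)
    if len(table) == 2 ** m:
        break

def decode(r: list[int]) -> list[int]:
    """Correct r by adding (XOR) the table's error pattern for its syndrome."""
    e = table[syndrome(r)]
    return [ri ^ ei for ri, ei in zip(r, e)]

print(decode([1, 0, 1, 1, 0, 1]))  # [1, 0, 0, 1, 0, 1]
```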

Cyclic Redundancy Check

Cyclic Codes

Systematic Cyclic Codes

Decoding

Вам также может понравиться

- Low Density Parity Check CodesДокумент21 страницаLow Density Parity Check CodesPrithvi Raj0% (1)

- CRCДокумент35 страницCRCsatishОценок пока нет

- L 02 Error Detection and Correction Part 01Документ35 страницL 02 Error Detection and Correction Part 01Matrix BotОценок пока нет

- Low Density Parity Check Codes1Документ41 страницаLow Density Parity Check Codes1Prithvi RajОценок пока нет

- Asst. Prof. Anindita Paul: Mintu Kumar Dutta Sudip Giri Saptarshi Ghosh Tanaka Sengupta Srijeeta Roy Utsabdeep RayДокумент38 страницAsst. Prof. Anindita Paul: Mintu Kumar Dutta Sudip Giri Saptarshi Ghosh Tanaka Sengupta Srijeeta Roy Utsabdeep RayUtsav Determined RayОценок пока нет

- CN Assignment No2Документ22 страницыCN Assignment No2sakshi halgeОценок пока нет

- DC Unit Test 2 Question BankДокумент4 страницыDC Unit Test 2 Question BankSiva KrishnaОценок пока нет

- Notes For Turbo CodesДокумент15 страницNotes For Turbo CodesMaria AslamОценок пока нет

- Linear Block CodingДокумент18 страницLinear Block CodingPavuluri SairamОценок пока нет

- Comm ch10 Coding en PDFДокумент67 страницComm ch10 Coding en PDFShaza HadiОценок пока нет

- Unit Iv Linear Block Codes: Channel EncoderДокумент26 страницUnit Iv Linear Block Codes: Channel EncoderSudhaОценок пока нет

- LDPC - Low Density Parity Check CodesДокумент6 страницLDPC - Low Density Parity Check CodespandyakaviОценок пока нет

- LBCДокумент14 страницLBCanililhanОценок пока нет

- Ec2301 Digital Communication Unit-3Документ5 страницEc2301 Digital Communication Unit-3parthidhanОценок пока нет

- Channel Coding: Binit Mohanty Ketan RajawatДокумент16 страницChannel Coding: Binit Mohanty Ketan Rajawatsam mohaОценок пока нет

- CH 3 DatalinkДокумент35 страницCH 3 DatalinksimayyilmazОценок пока нет

- A Short Course On Error-Correcting Codes: Mario Blaum C All Rights ReservedДокумент104 страницыA Short Course On Error-Correcting Codes: Mario Blaum C All Rights ReservedGaston GBОценок пока нет

- 4 Error Detection and CorrectionДокумент26 страниц4 Error Detection and CorrectionHasan AhmadОценок пока нет

- Coding Theory and ApplicationsДокумент5 страницCoding Theory and Applicationsjerrine20090% (1)

- 10 ErrorДокумент51 страница10 Errorベラ ジェークОценок пока нет

- ITC Mod-4 Ktunotes - in PDFДокумент88 страницITC Mod-4 Ktunotes - in PDFSivakeerthi SanthoshОценок пока нет

- EX - NO: 3b Design and Performance Analysis of Error Date: Control Encoder and Decoder Using Hamming CodesДокумент7 страницEX - NO: 3b Design and Performance Analysis of Error Date: Control Encoder and Decoder Using Hamming CodesbbmathiОценок пока нет

- Linear Block Coding: Presented byДокумент12 страницLinear Block Coding: Presented bypranay639Оценок пока нет

- Reed SolomonДокумент18 страницReed Solomonrampravesh kumarОценок пока нет

- Error Detection and CorrectionДокумент11 страницError Detection and CorrectionAfrina DiptiОценок пока нет

- 10 ErrorДокумент51 страница10 ErrorprogressksbОценок пока нет

- Design and Performance Analysis of Channel Coding Scheme Based On Multiplication by Alphabet-9Документ7 страницDesign and Performance Analysis of Channel Coding Scheme Based On Multiplication by Alphabet-9Ishtiaque AhmedОценок пока нет

- Error Unit3Документ15 страницError Unit3MOHAMMAD DANISH KHANОценок пока нет

- Convolutional CodesДокумент7 страницConvolutional CodesgayathridevikgОценок пока нет

- CS601 Update NotesДокумент110 страницCS601 Update NotesFarman Ali50% (2)

- Chapter-1: 1.1: Coding TheoryДокумент35 страницChapter-1: 1.1: Coding Theoryhari chowdaryОценок пока нет

- Data Communications: Error Detection and CorrectionДокумент38 страницData Communications: Error Detection and CorrectionAkram TahaОценок пока нет

- CH 10 NotesДокумент15 страницCH 10 NotesAteeqa KokabОценок пока нет

- 5CS3-01: Information Theory & Coding: Unit-3 Linear Block CodeДокумент75 страниц5CS3-01: Information Theory & Coding: Unit-3 Linear Block CodePratapОценок пока нет

- Agniel 2Документ14 страницAgniel 2Killer Boys7Оценок пока нет

- Ijert Ijert: FPGA Implementation of Orthogonal Code Convolution For Efficient Digital CommunicationДокумент7 страницIjert Ijert: FPGA Implementation of Orthogonal Code Convolution For Efficient Digital Communicationtariq76Оценок пока нет

- Performance Evaluation For Convolutional Codes Using Viterbi DecodingДокумент6 страницPerformance Evaluation For Convolutional Codes Using Viterbi Decodingbluemoon1172Оценок пока нет

- Hamming and CRCДокумент69 страницHamming and CRCturi puchhiОценок пока нет

- LDPC OptimizationДокумент32 страницыLDPC OptimizationShajeer KaniyapuramОценок пока нет

- 4 - Channel Coding 5 - 2019 - 02 - 23!08 - 37 - 49 - PMДокумент10 страниц4 - Channel Coding 5 - 2019 - 02 - 23!08 - 37 - 49 - PMتُحف العقولОценок пока нет

- Comparison of Convolutional Codes With Block CodesДокумент5 страницComparison of Convolutional Codes With Block CodesEminent AymeeОценок пока нет

- Detecting Bit Errors: EctureДокумент6 страницDetecting Bit Errors: EctureaadrikaОценок пока нет

- Module 4Документ20 страницModule 4ragavendra4Оценок пока нет

- Concatenation and Implementation of Reed - Solomon and Convolutional CodesДокумент6 страницConcatenation and Implementation of Reed - Solomon and Convolutional CodesEditor IJRITCCОценок пока нет

- Distance Properties of Block Codes (Cond..) Minimum Distance Decoding Some Bounds On The Code SizeДокумент28 страницDistance Properties of Block Codes (Cond..) Minimum Distance Decoding Some Bounds On The Code SizemailstonaikОценок пока нет

- Performance Analysis of Reed-Solomon Codes Concatinated With Convolutional Codes Over Awgn ChannelДокумент6 страницPerformance Analysis of Reed-Solomon Codes Concatinated With Convolutional Codes Over Awgn ChannelkattaswamyОценок пока нет

- Lecture Notes Sub: Error Control Coding and Cryptography Faculty: S Agrawal 1 Semester M.Tech, ETC (CSE)Документ125 страницLecture Notes Sub: Error Control Coding and Cryptography Faculty: S Agrawal 1 Semester M.Tech, ETC (CSE)Yogiraj TiwariОценок пока нет

- The Data Link LayerДокумент35 страницThe Data Link Layernitu2012Оценок пока нет

- UNIT5 Part 2Документ54 страницыUNIT5 Part 2Venkateswara RajuОценок пока нет

- UNIT5 Part 2-1-35Документ35 страницUNIT5 Part 2-1-35Venkateswara RajuОценок пока нет

- UNIT5 Part 2-1-19Документ19 страницUNIT5 Part 2-1-19Venkateswara RajuОценок пока нет

- 8 Reading MaterialsДокумент15 страниц8 Reading MaterialspriyeshОценок пока нет

- Experiment No.5: Title: Aim: Apparatus: Theory: (1) Explain Linear Block Codes in DetailДокумент8 страницExperiment No.5: Title: Aim: Apparatus: Theory: (1) Explain Linear Block Codes in Detailabdulla qaisОценок пока нет

- Design of Convolutional Encoder and Viterbi Decoder Using MATLABДокумент6 страницDesign of Convolutional Encoder and Viterbi Decoder Using MATLABThu NguyễnОценок пока нет

- Ex - No:3C Convolutional Codes Date AIMДокумент5 страницEx - No:3C Convolutional Codes Date AIMbbmathiОценок пока нет

- Data Link Layer:: Error Detection and CorrectionДокумент51 страницаData Link Layer:: Error Detection and CorrectionUjwala BhogaОценок пока нет

- Channel Coding ExerciseДокумент13 страницChannel Coding ExerciseSalman ShahОценок пока нет

- Experimental Verification of Linear Block Codes and Hamming Codes Using Matlab CodingДокумент17 страницExperimental Verification of Linear Block Codes and Hamming Codes Using Matlab CodingBhavani KandruОценок пока нет

- Viterbi DecodingДокумент4 страницыViterbi DecodingGaurav NavalОценок пока нет

- C++ LPCДокумент7 страницC++ LPCMostafa BayomeОценок пока нет

- Englis Phrases For Writing and SpeakingДокумент8 страницEnglis Phrases For Writing and SpeakinghmbxОценок пока нет

- Solution Guide, Differential EquationДокумент53 страницыSolution Guide, Differential EquationhmbxОценок пока нет

- DSP Chapter 10Документ70 страницDSP Chapter 10hmbxОценок пока нет

- Hydraulic Elevator Thyssenkrupp ElevatorsДокумент187 страницHydraulic Elevator Thyssenkrupp Elevatorshmbx100% (3)

- Chapter 07Документ110 страницChapter 07hmbxОценок пока нет

- NICE 1000 Elevator Integrated Controller User ManualДокумент172 страницыNICE 1000 Elevator Integrated Controller User Manualhmbx100% (4)

- TCBCДокумент29 страницTCBChmbx67% (12)

- 06laplac Ti 89 LaplasДокумент10 страниц06laplac Ti 89 LaplashmbxОценок пока нет

- DY-20 & 30 Manual Rev 1.1Документ141 страницаDY-20 & 30 Manual Rev 1.1hmbx67% (3)

- DY 20L ManualДокумент154 страницыDY 20L Manualhmbx100% (6)

- DTT IptvДокумент9 страницDTT Iptvpeter mureithiОценок пока нет

- IFM Speed Relay DD0203 Data SheetДокумент2 страницыIFM Speed Relay DD0203 Data SheetRaymund GatocОценок пока нет

- Lovepedal Purple Plexi 800Документ1 страницаLovepedal Purple Plexi 800Shibbit100% (1)

- Sucoflex 104 PBДокумент13 страницSucoflex 104 PBdspa123Оценок пока нет

- Rockewell Dynamix 1444-Um001 - En-P PDFДокумент458 страницRockewell Dynamix 1444-Um001 - En-P PDFronfrendОценок пока нет

- UPS Repairing PDFДокумент65 страницUPS Repairing PDF11111100% (2)

- Harmonics in Three Phase TransformersДокумент3 страницыHarmonics in Three Phase TransformersSaurabhThakurОценок пока нет

- Fractional-N Frequency SynthesizerДокумент5 страницFractional-N Frequency SynthesizerbaymanОценок пока нет

- Steady-State Analysis of A 5-Level Bidirectional Buck+Boost DC-DC ConverterДокумент6 страницSteady-State Analysis of A 5-Level Bidirectional Buck+Boost DC-DC Converterjeos20132013Оценок пока нет

- Sleek Performance.: SLIM 7 (14")Документ4 страницыSleek Performance.: SLIM 7 (14")RaditОценок пока нет

- D4 - Live SoundДокумент6 страницD4 - Live SoundGeorge StrongОценок пока нет

- Smart Metering Sensor Board 2.0engДокумент46 страницSmart Metering Sensor Board 2.0engStefan S KiralyОценок пока нет

- Circuit Analysis Open EndedДокумент8 страницCircuit Analysis Open EndedHuzaifaОценок пока нет

- Motorola Driver Log2Документ5 страницMotorola Driver Log2Alessio FiorentinoОценок пока нет

- Kre1052503 - 9 (Odv-065r15m18jj-G)Документ2 страницыKre1052503 - 9 (Odv-065r15m18jj-G)Jŕ MaiaОценок пока нет

- A2000 enДокумент48 страницA2000 enUsman SaeedОценок пока нет

- Mipi DsiДокумент79 страницMipi Dsiback_to_batteryОценок пока нет

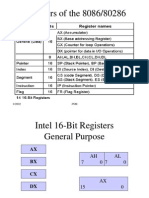

- RegisterДокумент18 страницRegisterWaqar AliОценок пока нет

- Architecture of Fpga Altera Cyclone: BY:-Karnika Sharma Mtech (2 Year)Документ29 страницArchitecture of Fpga Altera Cyclone: BY:-Karnika Sharma Mtech (2 Year)karnika143100% (1)

- Theoretical Analysis of DC Link Capacitor Current Ripple Reduction in The HEV DC-DC Converter and Inverter System Using A Carrier Modulation MethodДокумент8 страницTheoretical Analysis of DC Link Capacitor Current Ripple Reduction in The HEV DC-DC Converter and Inverter System Using A Carrier Modulation MethodVanHieu LuyenОценок пока нет

- 4C+26R - Amphenol 6878300Документ2 страницы4C+26R - Amphenol 6878300angicarОценок пока нет

- Ze Gcs04a20-Eng 247-270Документ24 страницыZe Gcs04a20-Eng 247-270ikrima BenОценок пока нет

- File - D - Desktop - DVD - Final - DVD - GAC Controller - Troubleshooting - PDFДокумент16 страницFile - D - Desktop - DVD - Final - DVD - GAC Controller - Troubleshooting - PDFNAVANEETHОценок пока нет

- 3G High-Speed Wan Interface Cards For Isrs: Chandrodaya PrasadДокумент52 страницы3G High-Speed Wan Interface Cards For Isrs: Chandrodaya PrasadgmondaiОценок пока нет

- Radwin Training Catalog v3.1Документ19 страницRadwin Training Catalog v3.1Beto De HermosilloОценок пока нет

- GA-770T-D3L: User's ManualДокумент100 страницGA-770T-D3L: User's Manualcap100usdОценок пока нет

- Brand Audit Nepal TelecomДокумент27 страницBrand Audit Nepal TelecomAmit PathakОценок пока нет

- Rfic & Mmic-0Документ12 страницRfic & Mmic-0PhilippeaОценок пока нет

- 23NM60ND STMicroelectronicsДокумент12 страниц23NM60ND STMicroelectronicskeisinhoОценок пока нет

- DYNA 70025 APECS Integrated Actuator: For Stanadyne "D" Series Injection PumpsДокумент16 страницDYNA 70025 APECS Integrated Actuator: For Stanadyne "D" Series Injection PumpsmichaeltibocheОценок пока нет