Академический Документы

Профессиональный Документы

Культура Документы

VM Inifinband

Загружено:

Jeff EdstromОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

VM Inifinband

Загружено:

Jeff EdstromАвторское право:

Доступные форматы

InfiniBand: Enhancing

Virtualization ROI With

New Data Center Efficiencies

Sujal Das

Director, Product Management

Agenda

Introduction to Mellanox Technologies and Products

Meeting Data Center Server and Storage Connectivity Needs

InfiniBand in VMware Community Source Development

Experiences

Enhancements and Optimizations

Performance Testing and Results

End User Benefits

Release Plan

Future Enhancements

Summary

Mellanox Technologies

A global leader in semiconductor-based server and storage connectivity

solutions

Leading provider of high performance InfiniBand solutions

10Gb/s and 20Gb/s Node-to-Node

30Gb/s and 60Gb/s Switch-to-Switch

2.25us latency

3.5W per HCA (host channel adapter) port

RDMA and hardware based connection reliability

Efficient and scalable I/O virtualization

Price-performance advantages

Converges clustering, communications, management and storage onto a

single link with Quality of Service

InfiniBand Interconnect Building Blocks

SERVERS STORAGE COMMUNICATIONS

INFRASTRUCTURE

EQUIPMENT

EMBEDDED

SYSTEMS

INFINIBAND

SWITCH SILICON** INFINIBAND ADAPTERS*

OEMs

END USERS

*Single-port and dual-port

** 8-port and 24-port

Enterprise

Data Centers

High Performance

Computing

Embedded

Systems

InfiniBand Software & OpenFabrics Alliance

SA Subnet

Administrator

MAD Management

Datagram

SMA Subnet Manager

Agent

IPoIB IP over InfiniBand

SDP Sockets Direct

Protocol

SRP SCSI RDMA

Protocol (Initiator)

iSER iSCSI RDMA

Protocol (Initiator)

UDAPL User Direct

Access

Programming Lib

HCA Host Channel

Adapter

Mellanox HCA

Hardware

Specific Driver

Connection

Manager

MAD

OpenFabrics Kernel Level Verbs / API

SA

Client

Connection Manager

Abstraction (CMA)

OpenFabrics User Level Verbs / API

SDP IPoIB SRP iSER

SDP Lib

User Level

MAD API

Open

SM

Diag

Tools

Hardware

Provider

Mid-Layer

Upper

Layer

Protocol

User

APIs

Kernel Space

User Space

Application

Level

SMA

Sockets

Based

Access

Various

MPIs

Block

Storage

Access

IP Based

App

Access

UDAPL

K

e

r

n

e

l

b

y

p

a

s

s

Components

are in kernel

2.6.11+

SLES 10

and RHEL 4

Distributions

Microsoft

WHQL

program

Components used

with VMware VI 3

Global Market Penetration

Strong Tier-1 OEM relationships - the key channel to end-users

Connectivity Needs in Server Farms

Front End Servers: Web

and other services

Application Servers:

Business Logic

Back End Servers:

Database systems

Storage Servers and

Systems

Server islands different connectivity needs

10/100 Mb/s, 1 Gb/s 10/100 Mb/s, 1 Gb/s

Ethernet Ethernet

1 Gb/s 1 Gb/s

Ethernet Ethernet

1 1- -10 Gb/s 10 Gb/s

Ethernet, InfiniBand Ethernet, InfiniBand

1 1- -10 Gb/s 10 Gb/s FC, FC,

InfiniBand, iSCSI InfiniBand, iSCSI

Uniform connectivity that serves most demanding apps

Need for higher resource utilization

Shared pool of resources

Maximum flexibility, minimum cost

Why 10Gb/s+ Connectivity?

More applications per More applications per

server and I/O server and I/O

More traffic per I/O with More traffic per I/O with

server I/O server I/O

consolidation consolidation

I/O capacity per server I/O capacity per server

dictated by the most dictated by the most

demanding apps demanding apps

10Gb/s+ 10Gb/s+

connectivity connectivity

for all data for all data

center center

servers servers

Multi-core CPUs mandating 10Gb/s+ connectivity

Multi Core

CPUs

SAN

Adoption

Shared

Resources

Emerging IT Objectives

IT as a

IT as a

Business

Business

Utility

Utility

IT as a

IT as a

Competitive

Competitive

Advantage

Advantage

Reliable service

High availability

Agility and Scalability

Quicker business results

Server and storage I/O takes on a new role

Applications just work

Low maintenance cost

Do more with less

Price-performance-power

Delivering Service Oriented I/O

Congestion control @ source

Resource allocation

End-to-End Quality

Of Service

I/O Consolidation

Optimal Path

Management

Multiple traffic types over one adapter

Up to 40% power savings

Packet drop prevention

Infrastructure scaling @ wire speed

Dedicated Virtual

Machine Services

Virtual machine partitioning

Near native performance

Guaranteed services under adverse conditions

Clustering

Communications

Storage

Management

Converged I/O

End-to-end Services

Quality of service

More than just 802.1p

Multiple link partitions

Per traffic class

Shaping

Resource allocation

Congestion control

L2 based

Rate control at source

Class based link level flow control

Protection against bursts to guarantee

no packet drops

Enables E2E traffic differentiation, maintains latency and performance

Link Partitions to Schedule

Queue Mapping

..

Link partitions for traffic class assignment

Shaper

..

Link Arbitration

HCA Port

With Virtual Lanes

Resource

allocation

Scalable E2E Services for VMs

Scaling to millions of VEPs

(virtual functions) per

adapter

Isolation per VEP

Switching between VEPs

Inter VM switching

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

VM

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

VM

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

VM

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

VM

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

A

p

p

l

i

c

a

t

i

o

n

VM

Virtualization Enabler/Intermediary

IB HCA IB HCA IB HCA IB HCA

QoS, congestion control per

VEP

End-to-end service delivery

per VEP

Virtual End Points (VEP)

20 Gb/s

3

rd

Generation Advantage

Power Efficient with leading Performance and Capabilities

Vendor A

Vendor B

Bandwidth

per port

Power/

port RDMA

Stateless

Offload

Full

Offload

~4-6W

~20W

12W

10 or

20Gb/s

Yes Yes Yes

10Gb/s No Yes Yes

10Gb/s No Yes No

Price-Performance Benefits

Source for GigE and 10GigE iWARP: Published press release adapter and switch pricing, from Chelsio, Dell, Force10

10Gb/ s

InfiniBand

Chelsio

10GigE iWARP

2

End User Price per MB/ s

(Adapter+Cable+Switch Port)/ Measured Throughput

$0.61

$2.16

$6.33

$1

$2

$4

$

p

e

r

M

B

/

s

$1.12

Lower is better

$0.63

20Gb/ s

InfiniBand

GigE

Industry leading price-performance

VMware Community Source Involvement

One of the first to join Community Source

Active involvement since

Motivations

Work with virtualization vendor with most market share

Spirit of partnership and excellent support

Eventual Virtual Infrastructure-based product deployments

Benefits over Xen

Real-world customer success stories

Proven multi-OS VM support

Go-to-market partnership possibilities

Pioneering role in the Community Source program

The InfiniBand Drivers

Linux based drivers used as basis

Device driver, IPoIB and SRP (SCSI RDMA Protocol)

Storage and Networking functionality

Subnet Management functionality

Sourced from OpenFabrics Alliance (www.openfabrics.org)

Uses latest 2.6.xx kernel API

Device Driver

IPoIB SRP

Net I/F SCSI I/F

TCP/IP

Stack

SCSI/FS

Stack

The Challenges

ESX Linux API is based on a 2.4 Linux Kernel

Not all the 2.4 APIs are implemented

Some 2.4 APIs are slightly different in ESX

Different memory management

New build environment

Proprietary management for networking and storage

Enhancements or Optimizations

ESX kernel changes

Common spinlock implementation for network and storage drivers

Enhancement to VMkernel loader to export Linux-like symbol

mechanism

New API for network driver to access internal VSwitch data

SCSI command with multiple scatter list of 512-byte aligned buffer

InfiniBand driver changes

Abstraction layer to map Linux 2.6 APIs to Linux 2.4 APIs

Module heap mechanism to support shared memory between

InfiniBand modules

Use of new API by network driver for seamless VMotion support

Limit one SCSI host and net device per PCI function

Effective collaboration to create compelling solution

InfiniBand with Virtual Infrastructure 3

VM-0

NIC HBA

InfiniBand Network

Driver (IPoIB)

InfiniBand Storage

Driver (SRP)

VM-2

NIC HBA

VM-3

NIC HBA

Virtual

Center

NIC HBA

SCSI/FS

Virtualization

Console

OS

IB HCA IB HCA IB HCA IB HCA

Network

Virtualization

(V-Switch)

Hypervisor

Transparent to VMs and Virtual Center

VM Transparent Server I/O Scaling & Consolidation

VM VM VM VM

Virtualization Layer

VM

GE FC GE GE GE FC

VM VM VM VM

Virtualization Layer

VM

IB

Typical Deployment Configuration

IB

With Mellanox InfiniBand Adapter

3X networking, 10X SAN performance

Per adapter performance. Based on comparisons with GigE and 2 Gb/s Fibre Channel

Using Virtual Center Seamlessly with InfiniBand

Storage configuration

vmhba2

Using Virtual Center Seamlessly with InfiniBand

Storage configuration

vmhba2

Using Virtual Center Seamlessly with InfiniBand

Network configuration

vmnic2 (shows as vmhba2:bug)

vSwitch1

Performance Testing Configuration

ESX Server:

2 Intel Dual Core

Woodcrest CPUs

4GB Memory

InfiniBand 20Gb/s HCA

Intel Woodcrest

Based Server

Intel CPU based

Storage Target

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

InfiniBand Switch

20Gb/s

10Gb/s

VM-0

NIC HBA

VM-1

NIC HBA

VM-3

NIC HBA

VMware ESX Virtualization Layer and Hypervisor

InfiniBand Network

Driver

InfiniBand Storage

Driver

Intel CPU based

Storage Target

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

10Gb/s

Intel Woodcrest

Based Server

Intel CPU based

Storage Target

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

InfiniBand Switch

20Gb/s

10Gb/s

VM-0

NIC HBA

VM-1

NIC HBA

VM-3

NIC HBA

VMware ESX Virtualization Layer and Hypervisor

InfiniBand Network

Driver

InfiniBand Storage

Driver

Intel CPU based

Storage Target

I

n

f

i

n

i

B

a

n

d

A

d

a

p

t

e

r

10Gb/s

Switch:

Flextronics 20 Gb/s 24 port Switch

Native InfiniBand Storage (SAN)

2 Mellanox MTD1000 Targets

(Reference Design)

www.mellanox.com

Performance Testing Results Sample - Storage

128KB Read

benchmark from

one VM

128KB Read

benchmarks from

two VMs

More Compelling Results

Same as four dedicated 4Gb/s FC HBAs

128KB Read

benchmarks from

four VMs

Tested Hardware

Mellanox 10 and 20 Gb/s InfiniBand Adapters

Cisco and Voltaire InfiniBand Switches

Cisco Fibre Channel Gateways with EMC back-end storage

LSI Native InfiniBand Storage Target

Compelling End User Benefits

Up to 40% savings

Lower Initial

Purchase Cost

Lower Per-port

Maintenance Cost

Lower I/O Power

Consumption

Up to 67% savings Up to 44% savings

Seamless

Transparent interfaces to VM

apps and Virtual Center

Management

Best of both worlds seamless + cost/power savings

Based on $150/port maintenance cost (source: VMware, IDC), end user per port cost (adapter port + cable + switch port)

comparisons between 20Gb/s IB HCA ($600/port), GigE NIC ($150/port) and 2Gb/s FC HBA ($725/port) (Source: IDC)

Typical VMware virtual server configuration (source: VMware), 2Wpower per GigE port, 5W power per FC port, 4-6W

power per 20Gb/s IB port (source: Mellanox)

Compelling End User Benefits (contd.)

VI 3 Component

Acceleration

VM Application

Acceleration

Backup, Recovery,

Cloning,

Virtual Appliance

deployment

Database, file system and

other storage intensive

applications

Best of both worlds seamless + I/O scaling

* Benchmark data not available yet

Experimental Release Plans

VMware Virtual Infrastructure (incl. ESX) Experimental Release

Mellanox InfiniBand drivers installation package

Targeted for late Q1 2007

For further details, contact:

J unaid Qurashi, Product Management, VMware,

jqurashi@vmware.com

Sujal Das, Product Management, Mellanox,

sujal@mellanox.com

Future Work

Evaluate experimental release feedback

GA product plans

Feature enhancements

Based on customer feedback

VMotion acceleration over RDMA

Networking performance improvements

Call to Action: Evaluate Experimental Release

InfiniBand: for high performance, reliable server & storage connectivity

Tier 1 OEM channels and global presence

Multi-core & virtualization driving 10Gb/s connectivity in the data center

I/O convergence & IT objectives driving stringent I/O requirements

InfiniBand delivers Service Oriented I/O with service guarantees

Pioneering role in community source program

VI 3 with InfiniBand seamless IT experience with I/O scale-out

Compelling cost, power and performance benefits

Up to 40% savings

Lower Initial

Purchase Cost

Lower Per-port

Maintenance Cost

Lower I/O Power

Consumption

Up to 67% savings Up to 44% savings

Presentation Download

Please remember to complete your

session evaluation form

and return it to the room monitors

as you exit the session

The presentation for this session can be downloaded at

http://www.vmware.com/vmtn/vmworld/sessions/

Enter the following to download (case-sensitive):

Username: cbv_rep

Password: cbvfor9v9r

Вам также может понравиться

- Radware LP - Alteon5224 ProductPresentationДокумент26 страницRadware LP - Alteon5224 ProductPresentationanas1988MAОценок пока нет

- Power Vault MD3600i Training PresentationДокумент59 страницPower Vault MD3600i Training PresentationAdam Van HarenОценок пока нет

- LP 1358Документ28 страницLP 1358sekenmahn.2Оценок пока нет

- Upgrading The Data Center To 10 Gigabit Ethernet: Arista WhitepaperДокумент4 страницыUpgrading The Data Center To 10 Gigabit Ethernet: Arista WhitepaperkuzzuloneОценок пока нет

- 10gb Ethernet Solution GuideДокумент7 страниц10gb Ethernet Solution GuideAtif RahilОценок пока нет

- thirdIO PDFДокумент13 страницthirdIO PDFAthanasios™Оценок пока нет

- HP 8-20q Fibre Channel SwitchДокумент17 страницHP 8-20q Fibre Channel SwitchJocelyn ÁlvarezОценок пока нет

- ProgrammableFlow Intro - Sep2011Документ39 страницProgrammableFlow Intro - Sep2011Serge StasovОценок пока нет

- 96 Switch Fibra HPДокумент20 страниц96 Switch Fibra HPtperugachi123Оценок пока нет

- Grid Computing Case Study 011409Документ4 страницыGrid Computing Case Study 011409Dinesh KumarОценок пока нет

- Peter Gaspar Consulting Systems Engineer, SP Mobility, MEAДокумент47 страницPeter Gaspar Consulting Systems Engineer, SP Mobility, MEAmansour14Оценок пока нет

- How Open-Source Networking Changes the Game for Service ProvidersДокумент31 страницаHow Open-Source Networking Changes the Game for Service ProvidersKun Nursyaiful Priyo PamungkasОценок пока нет

- HP Virtual Connect Family DatasheetДокумент12 страницHP Virtual Connect Family DatasheetOliver AcostaОценок пока нет

- Ericsson RedBack Smart Edge 800 DatasheetДокумент6 страницEricsson RedBack Smart Edge 800 DatasheetMannus PavlusОценок пока нет

- A10 Networks - AX5200Документ4 страницыA10 Networks - AX5200umairpbОценок пока нет

- Sonus SBC White PaperДокумент14 страницSonus SBC White PaperSharique NakhawaОценок пока нет

- Emulex Leaders in Fibre Channel Connectivity: Fibre Chanel Over EthernetДокумент29 страницEmulex Leaders in Fibre Channel Connectivity: Fibre Chanel Over Etherneteasyroc75Оценок пока нет

- Huawei - Sx700 - Series - Switch - Pre-Sales Training - Slides 2013-10-23 PDFДокумент56 страницHuawei - Sx700 - Series - Switch - Pre-Sales Training - Slides 2013-10-23 PDFArdhy BaskaraОценок пока нет

- Optimize Skype For Business User Experience Over Wi-Fi Admin Guide.Документ8 страницOptimize Skype For Business User Experience Over Wi-Fi Admin Guide.Gino AnticonaОценок пока нет

- Ethernet x520 Server Adapters BriefДокумент4 страницыEthernet x520 Server Adapters BriefFiroj KhanОценок пока нет

- Answer: Gigabit EthernetДокумент9 страницAnswer: Gigabit EthernetRaine LopezОценок пока нет

- Elx Ds All 554M NC553m HPДокумент2 страницыElx Ds All 554M NC553m HPyogesh_mmОценок пока нет

- Conquer The Cloud - Part 2 - Optimizing ApplicationДокумент41 страницаConquer The Cloud - Part 2 - Optimizing ApplicationYahya NursalimОценок пока нет

- Intel 10 Gigabit Af Da Dual Port Server Adapte Manual de UsuarioДокумент4 страницыIntel 10 Gigabit Af Da Dual Port Server Adapte Manual de UsuarioDavid NavarroОценок пока нет

- A Study of Hardware Assisted Ip Over Infiniband and Its Impact On Enterprise Data Center PerformanceДокумент10 страницA Study of Hardware Assisted Ip Over Infiniband and Its Impact On Enterprise Data Center PerformanceR.P.K. KOTAODAHETTIОценок пока нет

- Exinda 4061 Datasheet PDFДокумент2 страницыExinda 4061 Datasheet PDFrebicОценок пока нет

- Track Cisco Ucs SlidesДокумент81 страницаTrack Cisco Ucs Slidesshariqyasin100% (3)

- Brocade 10Gb CNA For IBM System XДокумент9 страницBrocade 10Gb CNA For IBM System XAlejandro BerardinelliОценок пока нет

- HP FlexFabric 20Gb 2-Port 650FLB AdapterДокумент11 страницHP FlexFabric 20Gb 2-Port 650FLB Adapterquangson3210Оценок пока нет

- Network-Based Application RecognitionДокумент6 страницNetwork-Based Application RecognitionGilles de PaimpolОценок пока нет

- Ds FX SeriesДокумент3 страницыDs FX SeriesarzeszutОценок пока нет

- Ceragon ManualДокумент56 страницCeragon ManualMarlon Biagtan100% (1)

- 4aa4 8302enwДокумент24 страницы4aa4 8302enwNguyễn Trọng TrườngОценок пока нет

- NerateallДокумент925 страницNerateallMiguel RivasОценок пока нет

- Preparing Your Network for 802.11ax (Wi-Fi 6Документ20 страницPreparing Your Network for 802.11ax (Wi-Fi 6chopanalvarezОценок пока нет

- ENA 12.6-ILT-Mod 01-Extreme's Solution-Rev02-111230Документ30 страницENA 12.6-ILT-Mod 01-Extreme's Solution-Rev02-111230Luis Tovalino BadosОценок пока нет

- Solving Server Bottlenecks With Intel Server AdaptersДокумент8 страницSolving Server Bottlenecks With Intel Server AdapterslakekosОценок пока нет

- IBM System Networking SAN24B-5 SwitchДокумент12 страницIBM System Networking SAN24B-5 SwitchAntonio A. BonitaОценок пока нет

- 11 LTE Radio Network Planning IntroductionДокумент41 страница11 LTE Radio Network Planning Introductionusman_arain_lhr80% (5)

- BRKCRS 2000Документ73 страницыBRKCRS 2000PankajОценок пока нет

- 01-HUAWEI DSLAM Pre-Sales Specialist Training V1.0Документ43 страницы01-HUAWEI DSLAM Pre-Sales Specialist Training V1.0rechtacekОценок пока нет

- Vodafone IP Transit Data Sheet 2019Документ2 страницыVodafone IP Transit Data Sheet 2019Lucas MagalhãesОценок пока нет

- Dell Poweredge Half-Height M610 and Full-Height M710 Blade ServersДокумент4 страницыDell Poweredge Half-Height M610 and Full-Height M710 Blade Serversswatikhosla25Оценок пока нет

- Emulex 10GBe and FC For IBM MatrixДокумент2 страницыEmulex 10GBe and FC For IBM MatrixJoi NewmanОценок пока нет

- Cisco For Managed Shared Services Frequently Asked QuestionsДокумент7 страницCisco For Managed Shared Services Frequently Asked QuestionsGirma MisganawОценок пока нет

- Versatile Routing and Services with BGP: Understanding and Implementing BGP in SR-OSОт EverandVersatile Routing and Services with BGP: Understanding and Implementing BGP in SR-OSОценок пока нет

- SOA Patterns with BizTalk Server 2013 and Microsoft Azure - Second EditionОт EverandSOA Patterns with BizTalk Server 2013 and Microsoft Azure - Second EditionОценок пока нет

- Google Anthos in Action: Manage hybrid and multi-cloud Kubernetes clustersОт EverandGoogle Anthos in Action: Manage hybrid and multi-cloud Kubernetes clustersОценок пока нет

- Set Up Your Own IPsec VPN, OpenVPN and WireGuard Server: Build Your Own VPNОт EverandSet Up Your Own IPsec VPN, OpenVPN and WireGuard Server: Build Your Own VPNРейтинг: 5 из 5 звезд5/5 (1)

- CISSP Domain 2 v2 CompleteДокумент29 страницCISSP Domain 2 v2 CompleteJeff EdstromОценок пока нет

- THM67 PDFДокумент96 страницTHM67 PDFJeff Edstrom100% (1)

- CISSP Domain 3 v2 CompleteДокумент178 страницCISSP Domain 3 v2 CompleteJeff Edstrom100% (1)

- CISSP Domain 1 v3 CompleteДокумент92 страницыCISSP Domain 1 v3 CompleteJeff Edstrom100% (1)

- Iny House: For Micro, Tiny, Small, and Unconventional House EnthusiastsДокумент77 страницIny House: For Micro, Tiny, Small, and Unconventional House EnthusiastsJeff Edstrom100% (1)

- Latacora - The SOC2 Starting SevenДокумент13 страницLatacora - The SOC2 Starting SevenJeff EdstromОценок пока нет

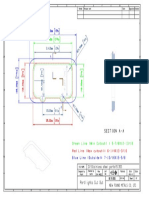

- 513SS-Dimension Drawing PDFДокумент1 страница513SS-Dimension Drawing PDFJeff EdstromОценок пока нет

- WiFly RN UM PDFДокумент79 страницWiFly RN UM PDFJeff EdstromОценок пока нет

- 513SS-Dimension Drawing PDFДокумент1 страница513SS-Dimension Drawing PDFJeff EdstromОценок пока нет

- HCIBench 2.0 User GuideДокумент23 страницыHCIBench 2.0 User GuideAasavari AwingОценок пока нет

- 317SS-Dimension Drawing PDFДокумент1 страница317SS-Dimension Drawing PDFJeff EdstromОценок пока нет

- LTRT-38127 Connecting Genesys SIP Server With AT&T IP Toll Free SIP Trunking Service With MIS, PNT or AT&T VPN Transport, Via Mediant E-SBCДокумент96 страницLTRT-38127 Connecting Genesys SIP Server With AT&T IP Toll Free SIP Trunking Service With MIS, PNT or AT&T VPN Transport, Via Mediant E-SBCJeff EdstromОценок пока нет

- Latacora - The SOC2 Starting SevenДокумент13 страницLatacora - The SOC2 Starting SevenJeff EdstromОценок пока нет

- THM49 PDFДокумент80 страницTHM49 PDFJeff Edstrom100% (2)

- Refrige TroubleshootingДокумент1 страницаRefrige TroubleshootingJeff EdstromОценок пока нет

- 2004 Jet Away Owner's ManualДокумент72 страницы2004 Jet Away Owner's ManualJeff EdstromОценок пока нет

- The Ashley Book of Knots by Clifford W AshleyДокумент638 страницThe Ashley Book of Knots by Clifford W Ashleyxenocid3rОценок пока нет

- EyeQueue User Guide Version 8.0.8Документ318 страницEyeQueue User Guide Version 8.0.8Jeff EdstromОценок пока нет

- AudioCodes-Genesys SIP Server Ver 8 GW InstallДокумент57 страницAudioCodes-Genesys SIP Server Ver 8 GW Installjsh118Оценок пока нет

- The Mass OrnamentДокумент209 страницThe Mass OrnamentJeff EdstromОценок пока нет

- NLP Ashida Kim Ninja Mind ControlДокумент70 страницNLP Ashida Kim Ninja Mind ControlFran CineОценок пока нет

- Infiniband™ Frequently Asked Questions: Document Number 2078giДокумент5 страницInfiniband™ Frequently Asked Questions: Document Number 2078giEdwin VargheseОценок пока нет

- PB SN2010 Fibre Switch PDFДокумент3 страницыPB SN2010 Fibre Switch PDFAnuj KumarОценок пока нет

- HP Cluster Platforms - Accelerate Innovation and Discovery With HP HPC Solutions - Family Data SheetДокумент8 страницHP Cluster Platforms - Accelerate Innovation and Discovery With HP HPC Solutions - Family Data SheetIsaias GallegosОценок пока нет

- MLNX-OS - IB - Version - 3.9.0900 - Release NotesДокумент25 страницMLNX-OS - IB - Version - 3.9.0900 - Release Notesdu2efsОценок пока нет

- LAN - MFT - User - Manual by MellanoxДокумент57 страницLAN - MFT - User - Manual by MellanoxNanard78Оценок пока нет

- Top500 HPCДокумент156 страницTop500 HPCrubenfv.cicaОценок пока нет

- Reference Guide: Dell and Mellanox Joint SolutionsДокумент3 страницыReference Guide: Dell and Mellanox Joint SolutionsMehmet GargınОценок пока нет

- Dell DCS XA90 Spec Sheet - November 2014Документ2 страницыDell DCS XA90 Spec Sheet - November 2014derushiОценок пока нет

- Nvidia DGX A100 DatasheetДокумент2 страницыNvidia DGX A100 DatasheettimonocОценок пока нет

- Deploying HPC Cluster With Mellanox Infiniband Interconnect SolutionsДокумент37 страницDeploying HPC Cluster With Mellanox Infiniband Interconnect Solutionskhero1967Оценок пока нет

- NVIDIA Networking Solutions for HPE Deliver Unmatched System PerformanceДокумент14 страницNVIDIA Networking Solutions for HPE Deliver Unmatched System PerformanceYUU100% (1)

- Connectx Vs Intel xxv710Документ2 страницыConnectx Vs Intel xxv710Bename DoostОценок пока нет

- DGX Pod Reference Design WhitepaperДокумент15 страницDGX Pod Reference Design WhitepaperAntoОценок пока нет

- Mellanox EVB BriefДокумент2 страницыMellanox EVB BriefИгорь ПодпальченкоОценок пока нет

- (12.1.4) BR - 4 - PB - Mellanox - NEOДокумент2 страницы(12.1.4) BR - 4 - PB - Mellanox - NEOKharisma MuhammadОценок пока нет

- Cluster Computing TutorialДокумент101 страницаCluster Computing TutorialJoão EliakinОценок пока нет

- Oracle RAC Over VPI Ref Design BAKДокумент19 страницOracle RAC Over VPI Ref Design BAKsherif hassan younisОценок пока нет

- LS-DYNA AnalysisДокумент29 страницLS-DYNA AnalysisJohn TerryОценок пока нет

- HP SSP Server Support GuideДокумент31 страницаHP SSP Server Support Guidepera_petronijevicОценок пока нет

- Understanding Up:Down InfiniBand Routing Algorithm - Mellanox Interconnect CommunityДокумент7 страницUnderstanding Up:Down InfiniBand Routing Algorithm - Mellanox Interconnect Communitysmamedov80Оценок пока нет

- What Is IO VirtualizationДокумент3 страницыWhat Is IO VirtualizationVijay KumarОценок пока нет

- RA 2114 Nutanix Enterprise Cloud For AIДокумент66 страницRA 2114 Nutanix Enterprise Cloud For AIDavid YehОценок пока нет

- RFD Tnavigator Analysis and Profiling Broadwell PDFДокумент15 страницRFD Tnavigator Analysis and Profiling Broadwell PDFYamal E Askoul TОценок пока нет

- Mellanox PartnerFIRST Cable GuideДокумент2 страницыMellanox PartnerFIRST Cable GuideMN Titas TitasОценок пока нет

- Mlnx-Os Switch-Based Management Software: HighlightsДокумент3 страницыMlnx-Os Switch-Based Management Software: HighlightsKharisma MuhammadОценок пока нет

- Dell NSS NFS Storage Solution Final PDFДокумент38 страницDell NSS NFS Storage Solution Final PDFtheunfОценок пока нет

- STAC Report HighlightsДокумент22 страницыSTAC Report HighlightsJuniper NetworksОценок пока нет

- Silicon Photonic SДокумент24 страницыSilicon Photonic SManojKarthikОценок пока нет

- Mellanox Ethernet Switch BrochureДокумент4 страницыMellanox Ethernet Switch BrochureTimucin DIKMENОценок пока нет