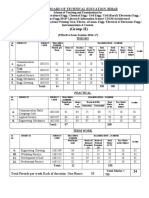

Pattern Recognition 2011

Lab Sheet 2

Solution

Exercise 1 Total probability and Bayes Theorem 5

Suppose that we have three coloured boxes r (red), b (blue), and g (green). Box r contains 3 apples (a), 4 oranges (o), and 3 limes (l); box b contains 1 apple, 1 orange, and 0 limes; and box g contains 3 apples, 3 oranges, and 4 limes. A box is chosen at random with probabilities p(r) = 0.2, p(b) = 0.2, p(g) = 0.6, and a piece of fruit is removed from the box with equal probability of selecting any of the items in the box.

a. What is the probability of selecting an apple? 2

b. If we observe that the selected fruit is in fact an orange, what is the probability that it came from the green box? 3

________________________________________________

a. $p(a) = p(a \mid r)\,p(r) + p(a \mid b)\,p(b) + p(a \mid g)\,p(g) = \frac{3}{10} \cdot 0.2 + \frac{1}{2} \cdot 0.2 + \frac{3}{10} \cdot 0.6 = 34\%$

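b. $p(g \mid o) = \dfrac{p(o \mid g)\,p(g)}{\sum_{\omega = r, b, g} p(o \mid \omega)\,p(\omega)} = \dfrac{0.3 \cdot 0.6}{0.4 \cdot 0.2 + 0.5 \cdot 0.2 + 0.3 \cdot 0.6} = \dfrac{0.18}{0.36} = 50\%$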
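Both results can be double-checked with exact rational arithmetic. The following is a minimal Python sketch, not part of the original lab sheet; the names `boxes`, `prior`, `likelihood`, `evidence`, and `posterior` are illustrative choices.

```python
from fractions import Fraction

# Box contents (a = apples, o = oranges, l = limes) and box priors from Exercise 1.
boxes = {
    "r": {"a": 3, "o": 4, "l": 3},
    "b": {"a": 1, "o": 1, "l": 0},
    "g": {"a": 3, "o": 3, "l": 4},
}
prior = {"r": Fraction(2, 10), "b": Fraction(2, 10), "g": Fraction(6, 10)}


def likelihood(fruit, box):
    """p(fruit | box): every item in the box is equally likely."""
    counts = boxes[box]
    return Fraction(counts[fruit], sum(counts.values()))


def evidence(fruit):
    """Total probability: p(fruit) = sum over boxes of p(fruit | box) p(box)."""
    return sum(likelihood(fruit, box) * prior[box] for box in boxes)


def posterior(box, fruit):
    """Bayes' theorem: p(box | fruit)."""
    return likelihood(fruit, box) * prior[box] / evidence(fruit)


p_apple = evidence("a")
p_green_given_orange = posterior("g", "o")
print(p_apple, p_green_given_orange)  # 17/50 1/2
```

Using `Fraction` instead of floats keeps the comparison with 34% and 50% exact.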

Exercise 2 Probability density and likelihood function 5

Suppose two one-dimensional likelihoods with equal prior probabilities are of the form $p(x \mid \omega_i) \propto e^{-|x - a_i| / b_i}$ for $i = 1, 2$ and $0 < b_i$.

a. Write an expression for each density. 2

b. Determine the likelihood ratio $p(x \mid \omega_1) / p(x \mid \omega_2)$. 1


c. Plot the likelihood ratio for $a_1 = 0$, $b_1 = 1$, $a_2 = 1$, and $b_2 = 2$. 2

________________________________________________

a. $p(x \mid \omega_i) = \dfrac{1}{2 b_i}\, e^{-|x - a_i| / b_i}$

b. $\dfrac{p(x \mid \omega_1)}{p(x \mid \omega_2)} = \dfrac{b_2}{b_1} \exp\left( \dfrac{|x - a_2|}{b_2} - \dfrac{|x - a_1|}{b_1} \right)$

c. [Plot: likelihood ratio p(x|w1)/p(x|w2) against x for x from -10 to 10; the vertical axis is marked from 0 to 3.]
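Since the plot itself is not reproduced here, the curve can be tabulated instead. The sketch below is an illustration in Python (standard library only), not the script used for the original figure; the function names and the grid of x values are assumptions. It checks the normalization from part a, compares the closed form from part b with the direct quotient of the two densities, and locates the maximum of the ratio on the plotted range.

```python
import math


def laplace_density(x, a, b):
    """Normalized density (1 / (2b)) * exp(-|x - a| / b), with b > 0."""
    return math.exp(-abs(x - a) / b) / (2.0 * b)


def likelihood_ratio(x, a1, b1, a2, b2):
    """Closed form (b2 / b1) * exp(|x - a2| / b2 - |x - a1| / b1)."""
    return (b2 / b1) * math.exp(abs(x - a2) / b2 - abs(x - a1) / b1)


# Parameter values from part c.
A1, B1, A2, B2 = 0.0, 1.0, 1.0, 2.0

# Riemann-sum check that the first density integrates to roughly 1 on [-40, 40].
step = 0.001
area = sum(laplace_density(-40.0 + k * step, A1, B1) for k in range(80001)) * step

# Tabulate the ratio on the plotted range; the closed form should agree
# with the quotient of the two normalized densities.
xs = [-10.0 + 0.5 * k for k in range(41)]
ratios = [likelihood_ratio(x, A1, B1, A2, B2) for x in xs]
quotients = [laplace_density(x, A1, B1) / laplace_density(x, A2, B2) for x in xs]

# The ratio peaks at x = a1 = 0 with value 2 * exp(0.5), roughly 3.30.
peak_x = max(xs, key=lambda x: likelihood_ratio(x, A1, B1, A2, B2))
print(peak_x, likelihood_ratio(peak_x, A1, B1, A2, B2))
```

Passing `xs` and `ratios` to any plotting tool should reproduce the shape of the curve in part c.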

Exercise 3 Minimum-error-rate classification 14

(For this exercise you may need to read Section 2.3 (Minimum-error-rate classification), which will be dealt with in the lecture on April 20th!)

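Generalize the minimax decision rule in order to classify patterns from three categories having triangle densities as follows:

$$p(x \mid \omega_i) = T(\mu_i, \delta_i) = \begin{cases} \dfrac{\delta_i - |x - \mu_i|}{\delta_i^2} & \text{for } |x - \mu_i| < \delta_i \\ 0 & \text{otherwise,} \end{cases}$$

where $\delta_i > 0$ is the half-width of the distribution ($i = 1, 2, 3$). Assume that $\mu_1 < \mu_2 < \mu_3$ and $\delta_i, \delta_{i+1} \le \mu_{i+1} - \mu_i \le \delta_i + \delta_{i+1}$ for $i = 1, 2$.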

a. Explain if and how the assumptions constrain the generality of the problem. 3

b. In terms of the priors $P(\omega_i)$, means $\mu_i$, and half-widths $\delta_i$, find the optimal decision points $x_1$ and $x_2$ under a zero-one (categorization) loss. 4


c. However, assume that the priors $P(\omega_i)$ are unknown. Find two decision points $x_1$ and $x_2$ so that the error rate is independent of the priors for such triangular distributions. Why are these decision points called the minimax decision points? (Consult Section 2.3.1 in the textbook if you don't know the answer.) 4

d. Let $\{\mu_i, \delta_i\} = \{0, 1\}$, $\{0.5, 0.5\}$, and $\{1, 1\}$. Find the minimax decision rule (i.e., $x_1$ and $x_2$) for this case. What is the minimax risk $R$? 3

________________________________________________

a. Assumption $\mu_1 < \mu_2 < \mu_3$: excludes the special case that two or all three of the means are equal. No further loss of generality; it only simplifies notation.

Assumption $\delta_i, \delta_{i+1} \le \mu_{i+1} - \mu_i \le \delta_i + \delta_{i+1}$ for $i = 1, 2$: ensures that there are exactly two decision points and that the probability density functions do not spread beyond the centers of the neighboring probability density functions.

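b. $x_i = \dfrac{P(\omega_i)\,\delta_{i+1}^2\,(\mu_i + \delta_i) + P(\omega_{i+1})\,\delta_i^2\,(\mu_{i+1} - \delta_{i+1})}{P(\omega_i)\,\delta_{i+1}^2 + P(\omega_{i+1})\,\delta_i^2}$ for $i = 1, 2$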
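The formula in b follows from equating the prior-weighted densities of two neighbouring classes on the interval where both triangles overlap. The Python sketch below checks this numerically; the priors, means, and half-widths are hypothetical values picked only so that the assumptions of the exercise hold, and the function names are illustrative.

```python
def triangle_density(x, mu, delta):
    """T(mu, delta): triangular density with center mu and half-width delta."""
    distance = abs(x - mu)
    return (delta - distance) / delta**2 if distance < delta else 0.0


def bayes_decision_point(prior_i, mu_i, delta_i, prior_j, mu_j, delta_j):
    """Boundary between neighbouring classes i and j = i + 1 under zero-one loss."""
    numerator = (prior_i * delta_j**2 * (mu_i + delta_i)
                 + prior_j * delta_i**2 * (mu_j - delta_j))
    denominator = prior_i * delta_j**2 + prior_j * delta_i**2
    return numerator / denominator


# Hypothetical parameters (not from the lab sheet) that satisfy
# delta_i, delta_{i+1} <= mu_{i+1} - mu_i <= delta_i + delta_{i+1}.
priors = [0.5, 0.3, 0.2]
mus = [0.0, 1.0, 2.0]
deltas = [0.8, 0.7, 1.0]

decision_points = [
    bayes_decision_point(priors[i], mus[i], deltas[i],
                         priors[i + 1], mus[i + 1], deltas[i + 1])
    for i in range(2)
]

# At each decision point the prior-weighted densities of the two
# neighbouring classes coincide, which is what defines the boundary.
for i, x in enumerate(decision_points):
    left = priors[i] * triangle_density(x, mus[i], deltas[i])
    right = priors[i + 1] * triangle_density(x, mus[i + 1], deltas[i + 1])
    print(f"x_{i + 1} = {x:.4f}: {left:.6f} vs {right:.6f}")
```

Each decision point is a weighted average of $\mu_i + \delta_i$ and $\mu_{i+1} - \delta_{i+1}$, so it always lies inside the overlap of the two triangles.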

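c. With $p_i(x) = p(x \mid \omega_i)$ and $\mathcal{R}_i$ denoting the decision region assigned to $\omega_i$, the risk is

$$
\begin{aligned}
R &= \int_{\mathcal{R}_1 + \mathcal{R}_2} P(\omega_3)\, p_3(x)\, dx + \int_{\mathcal{R}_1 + \mathcal{R}_3} P(\omega_2)\, p_2(x)\, dx + \int_{\mathcal{R}_2 + \mathcal{R}_3} \underbrace{P(\omega_1)}_{1 - P(\omega_2) - P(\omega_3)} p_1(x)\, dx \\
&= \int_{\mathcal{R}_2 + \mathcal{R}_3} p_1(x)\, dx
+ P(\omega_2) \Bigg[ \underbrace{\int_{\mathcal{R}_1 + \mathcal{R}_3} p_2(x)\, dx}_{1 - \int_{\mathcal{R}_2} p_2(x)\, dx} \; \underbrace{- \int_{\mathcal{R}_2 + \mathcal{R}_3} p_1(x)\, dx}_{\int_{\mathcal{R}_1} p_1(x)\, dx \,-\, 1} \Bigg]
+ P(\omega_3) \Bigg[ \underbrace{\int_{\mathcal{R}_1 + \mathcal{R}_2} p_3(x)\, dx}_{1 - \int_{\mathcal{R}_3} p_3(x)\, dx} \; \underbrace{- \int_{\mathcal{R}_2 + \mathcal{R}_3} p_1(x)\, dx}_{\int_{\mathcal{R}_1} p_1(x)\, dx \,-\, 1} \Bigg] \\
&= \int_{\mathcal{R}_2 + \mathcal{R}_3} p_1(x)\, dx
+ P(\omega_2) \underbrace{\Bigg[ \int_{\mathcal{R}_1} p_1(x)\, dx - \int_{\mathcal{R}_2} p_2(x)\, dx \Bigg]}_{=\,0 \text{ for minimax}}
+ P(\omega_3) \underbrace{\Bigg[ \int_{\mathcal{R}_1} p_1(x)\, dx - \int_{\mathcal{R}_3} p_3(x)\, dx \Bigg]}_{=\,0 \text{ for minimax}}
\end{aligned}
$$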


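Condition for minimax solution:

$$\int_{\mathcal{R}_1} p_1(x)\, dx = \int_{\mathcal{R}_2} p_2(x)\, dx = \int_{\mathcal{R}_3} p_3(x)\, dx$$

$$\iff 1 - \int_{\mathcal{R}_1} p_1(x)\, dx = 1 - \int_{\mathcal{R}_2} p_2(x)\, dx = 1 - \int_{\mathcal{R}_3} p_3(x)\, dx$$

$$\iff \frac{(\mu_1 + \delta_1 - x_1)^2}{\delta_1^2} = \frac{(x_1 - (\mu_2 - \delta_2))^2 + (\mu_2 + \delta_2 - x_2)^2}{\delta_2^2} = \frac{(x_2 - (\mu_3 - \delta_3))^2}{\delta_3^2}$$

Note that the first and the last terms imply $\dfrac{\mu_1 + \delta_1 - x_1}{\delta_1} = \dfrac{x_2 - (\mu_3 - \delta_3)}{\delta_3}$.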

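d. The above condition holds even if the constraint $\delta_i, \delta_{i+1} \le \mu_{i+1} - \mu_i \le \delta_i + \delta_{i+1}$ is replaced by $\mu_1 \le \mu_2 - \delta_2 \le \mu_3 - \delta_3$, $\mu_1 + \delta_1 \le \mu_2 + \delta_2 \le \mu_3$.

For the specific values, we obtain $1 - x_1 = x_2$ and $4 x_1^2 + 4\,(\underbrace{1 - x_2}_{=\,x_1})^2 = x_2^2$, hence $(x_1, x_2) = \left( \dfrac{1}{1 + \sqrt{8}}, \dfrac{\sqrt{8}}{1 + \sqrt{8}} \right) \approx (0.26, 0.74)$.

$$R = 1 - \int_{\mathcal{R}_1} p_1(x)\, dx = \frac{(\mu_1 + \delta_1 - x_1)^2}{2 \delta_1^2} = \frac{\left( 1 - \frac{1}{1 + \sqrt{8}} \right)^2}{2} = \frac{4}{(1 + \sqrt{8})^2} \approx 0.27$$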
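The minimax property can be checked numerically: at the decision points above, all three per-class error rates should coincide, so the risk should not change with the priors. The following Python sketch assumes decision regions of the form $x < x_1$, $x_1 < x < x_2$, $x > x_2$; the function names and the three sets of priors are illustrative, not taken from the lab sheet.

```python
import math


def triangle_cdf(x, mu, delta):
    """Cumulative distribution function of the triangle density T(mu, delta)."""
    if x <= mu - delta:
        return 0.0
    if x >= mu + delta:
        return 1.0
    if x <= mu:
        return (x - (mu - delta)) ** 2 / (2.0 * delta**2)
    return 1.0 - ((mu + delta) - x) ** 2 / (2.0 * delta**2)


def class_errors(x1, x2, params):
    """Per-class error 1 - P(x in R_i | class i) for regions split at x1 < x2."""
    (m1, d1), (m2, d2), (m3, d3) = params
    correct1 = triangle_cdf(x1, m1, d1)
    correct2 = triangle_cdf(x2, m2, d2) - triangle_cdf(x1, m2, d2)
    correct3 = 1.0 - triangle_cdf(x2, m3, d3)
    return [1.0 - correct1, 1.0 - correct2, 1.0 - correct3]


def risk(priors, errors):
    """Zero-one risk: prior-weighted average of the per-class errors."""
    return sum(p * e for p, e in zip(priors, errors))


params = [(0.0, 1.0), (0.5, 0.5), (1.0, 1.0)]  # {mu_i, delta_i} from part d
x1 = 1.0 / (1.0 + math.sqrt(8.0))
x2 = math.sqrt(8.0) / (1.0 + math.sqrt(8.0))
errors = class_errors(x1, x2, params)

# Arbitrary illustrative priors: the risk should be the same for all of them.
for priors in [(1 / 3, 1 / 3, 1 / 3), (0.7, 0.2, 0.1), (0.1, 0.1, 0.8)]:
    print(priors, round(risk(priors, errors), 4))
```

All three lines should report the same risk, $4 / (1 + \sqrt{8})^2 \approx 0.2729$, in agreement with the value derived above.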

Exercise 4 Descriptive statistics / Matlab 11

This exercise has been moved from Lab Sheet 1. Please familiarize yourself with MATLAB in case it is new to you!

Download the file pattern1.mat from the course website and load its content into your MATLAB session using the load command. In your MATLAB workspace, two variables must appear: patterns and targets. The first variable contains 580 patterns coded as 2D feature vectors. The second variable is a vector of 580 labels assigning the patterns to one of two classes, $w_0$ or $w_1$. Write a MATLAB script to accomplish the following tasks:

a. Display the patterns in a 2D plot with the help of the scatter command. Use different colors for the classes 0 and 1. 2

b. Use the hist command to visualize the 1D distributions of the $x_1$ and $x_2$ components and of the class variable $w$. 2


c. Usually, we are interested in the class-conditional distributions, such as $x_1 \mid w_0$ and $x_1 \mid w_1$. Use the output of the hist command to compute the class-conditional distributions for both components of the feature vector, that is, $x_1 \mid w_0$, $x_1 \mid w_1$ and $x_2 \mid w_0$, $x_2 \mid w_1$. Normalize the histograms to range from 0 to 1 and display the 4 class-conditional distributions in a single figure using the subplot command. Is it possible to use only one of the two components for the purpose of classification? 3

d. Write your own script (i.e., do not use the mean or cov commands) to compute the class-conditional mean vector, the covariance matrix, and the correlation coefficients for the two classes. Try to utilize matrix notation, avoiding explicit for loops whenever possible. 4
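No solution for this exercise is given on the sheet. As a language-neutral illustration of the quantities asked for in task d, the sketch below computes them in plain Python rather than MATLAB. It is only a sketch under stated assumptions: the six toy patterns are hypothetical stand-ins for pattern1.mat, the function name is illustrative, and the covariance uses the unbiased normalization by n - 1.

```python
import math


def class_statistics(patterns, targets, label):
    """Mean vector, sample covariance matrix and correlation matrix for one class."""
    rows = [p for p, t in zip(patterns, targets) if t == label]
    n, dim = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(dim)]
    centered = [[r[j] - mean[j] for j in range(dim)] for r in rows]
    # Unbiased estimate C = Xc^T Xc / (n - 1), with Xc the centered data matrix.
    cov = [[sum(c[j] * c[k] for c in centered) / (n - 1) for k in range(dim)]
           for j in range(dim)]
    # Correlation coefficients: rho_jk = C_jk / sqrt(C_jj * C_kk).
    corr = [[cov[j][k] / math.sqrt(cov[j][j] * cov[k][k]) for k in range(dim)]
            for j in range(dim)]
    return mean, cov, corr


# Hypothetical toy data standing in for pattern1.mat (not the course data).
patterns = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (0.0, 1.0), (1.0, 0.0), (2.0, 1.0)]
targets = [0, 0, 0, 1, 1, 1]

for label in (0, 1):
    mean, cov, corr = class_statistics(patterns, targets, label)
    print(label, mean, cov, corr)
```

The centered-data product in the comment is the matrix form that the exercise asks for; in MATLAB it can be written without explicit loops.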

________________________________________________

Total Score 35
