Ordinary Least Squares Regression and Regression Diagnostics

Ordinary Least Squares Regression

And Regression Diagnostics

University of Virginia

Charlottesville, VA.

April 20, 2001

Jim Patrie.

Department of Health Evaluation Sciences

Division of Biostatistics and Epidemiology

University of Virginia

Charlottesville, VA

jpatrie@virginia.edu

Presentation Outline

I) Overview of Regression Analysis.

II) Different Types of Regression Analysis.

III) The General Linear Model.

IV) Ordinary Least Squares Regression Parameter Estimation.

V) Statistical Inference for the OLS Regression Model.

VI) Overview of the Model Building Process.

VII) An Example Case Study.

Introduction

The term regression analysis describes a collection of statistical

techniques which serve as the basis for drawing inference as to

whether or not a relationship exists between two or more quantities

within a system, or within a population.

More specifically, regression analysis is a method to quantitatively

characterize the relationship between a response variable Y, which is

assumed to be random, and one or more explanatory variables (X),

which are generally assumed to have values that are fixed.

I) Regression analysis is typically utilized for one of the

following purposes.

Description

To assess whether or not a response variable, or perhaps

a function of the response variable, is associated with one

or more independent variables.

Control

To control for secondary factors which may influence the response variable, but are not considered to be the primary explanatory variables of interest.

Prediction

To predict the value of the response variable at specific values

of the explanatory variables.

II) Types of Regression Analysis

General Linear Regression.*

Non-Linear Regression.

Robust Regression.

Least median squares regression.

Least absolute deviation regression.

Weighted least squares.

Non-Parametric Regression.

Generalized Linear Regression.

Logistic regression.

Log-linear regression.

III) In regard to form, the general linear model is expressed as:

$$y_i = \beta_0 + \beta_1 x_{i,1} + \beta_2 x_{i,2} + \cdots + \beta_{p-1} x_{i,p-1} + \varepsilon_i$$

where

$y_i$ is the ith response.

$\beta_0, \beta_1, \ldots, \beta_{p-1}$ are the regression parameters.

$x_{i,1}, x_{i,2}, \ldots, x_{i,p-1}$ are known constants.

$\varepsilon_i$ is the independent random error associated with the ith response, typically assumed to be distributed $N(0, \sigma^2)$.

In matrix notation the general linear model is expressed as:

$$y = X\beta + \varepsilon$$

where

$y$ = n x 1 vector of response values.

$X$ = n x p matrix of known constants (covariates).

$\beta$ = p x 1 vector of regression parameters.

$\varepsilon$ = n x 1 vector of identically distributed random errors, typically assumed to be distributed $N(0, \sigma^2)$.

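In matrix notation, the general linear model components are:

$$
\underset{(n \times 1)}{y} = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}, \quad
\underset{(n \times p)}{X} = \begin{bmatrix}
1 & x_{11} & x_{12} & \cdots & x_{1,p-1} \\
1 & x_{21} & x_{22} & \cdots & x_{2,p-1} \\
\vdots & \vdots & \vdots & & \vdots \\
1 & x_{n1} & x_{n2} & \cdots & x_{n,p-1}
\end{bmatrix}, \quad
\underset{(p \times 1)}{\beta} = \begin{bmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_{p-1} \end{bmatrix}, \quad
\underset{(n \times 1)}{\varepsilon} = \begin{bmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_n \end{bmatrix}
$$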

The Y and X Model Components

The column vector y consists of random variables with a continuous

scale measure, while the column vectors of X may consist of:

continuous scale measures, or functions of continuous scale

measures (e.g. polynomials, splines).

binary indicators (e.g. gender).

nominal or ordinal classification variables (e.g. age class).

product terms computed between the values of two or more of

the columns of X; referred to as interaction terms.

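Examples of Linear Models

$$\text{a)}\quad y_i = \beta_0 + \beta_1 x_{i,1} + \beta_2 x_{i,2} + \cdots + \beta_{p-1} x_{i,p-1} + \varepsilon_i$$

$$\text{b)}\quad y_i = \beta_0 + \beta_1 x_{i,1} + \beta_2 x_{i,1}^2 + \beta_3 x_{i,2} + \beta_4 x_{i,1} x_{i,2} + \varepsilon_i$$

$$\text{c)}\quad y_i = \beta_0 + \beta_1 x_{i,1} + \beta_2 \log_e(x_{i,2}) + \beta_3 \exp(x_{i,3}) + \varepsilon_i$$

* Note that in each case the value $y_i$ is linear in the model parameters.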
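As a rough illustration of what "linear in the model parameters" means, the sketch below builds the design matrix for a model of the form b), with a squared term and an interaction term. The function name and the covariate and parameter values are hypothetical, chosen only for illustration; the point is that the mean response is still the matrix product of X and the parameter vector.

```python
import numpy as np

def design_matrix_model_b(x1, x2):
    """Columns: 1, x1, x1^2, x2, x1*x2 (the terms of example model b)."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    return np.column_stack([np.ones_like(x1), x1, x1**2, x2, x1 * x2])

# Hypothetical covariate values, for illustration only.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0])
X = design_matrix_model_b(x1, x2)

# Assumed parameter values (not from the presentation).
beta = np.array([1.0, 0.5, -0.2, 2.0, 0.1])

# The columns are non-linear functions of x1 and x2, but the mean
# response is a linear function of beta: E(y) = X beta.
mean_response = X @ beta
```

Doubling every parameter doubles the mean response, which is the sense in which the model is linear even though it is curved in x1.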

Examples of Non-Linear Models

a) Exponential Model

$$y_i = \beta_0 \exp(\beta_1 x_i) + \varepsilon_i$$

b) Logistic Model

$$y_i = \frac{\beta_0}{1 + \beta_1 \exp(\beta_2 x_i)} + \varepsilon_i$$

c) Weibull Model

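IV) Parameter Estimation for the Ordinary Least Squares Model.

a) For the estimation of the vector $\beta$, we minimize Q

$$Q = \sum_{i=1}^{n} \left(y_i - \beta_0 - \beta_1 x_{i1} - \cdots - \beta_{p-1} x_{i,p-1}\right)^2$$

by simultaneously solving the p normal equations.

$$\frac{\partial Q}{\partial \beta_0} = 0, \quad \frac{\partial Q}{\partial \beta_1} = 0, \quad \ldots, \quad \frac{\partial Q}{\partial \beta_{p-1}} = 0$$

In Matrix Notation

We minimize

$$Q = (y - X\beta)'(y - X\beta)$$

$$\frac{\partial}{\partial \beta}\left[(y - X\beta)'(y - X\beta)\right] = -2X'y + 2(X'X)\beta = 0$$

$$(X'X)\beta = X'y$$

with the resulting estimator for $\beta$ expressed as:

$$b = (X'X)^{-1}X'y$$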
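The estimator $b = (X'X)^{-1}X'y$ can be sketched in a few lines of NumPy. This is a minimal illustration, not the presenter's code: the function name `ols_fit` and the small noise-free data set are assumptions made for the example. The normal equations are solved directly rather than by forming the inverse explicitly.

```python
import numpy as np

def ols_fit(X, y):
    """Solve the normal equations (X'X) b = X'y for b."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.linalg.solve(X.T @ X, X.T @ y)

# Hypothetical data: a leading column of ones plus one covariate.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x          # noise-free, so b recovers (1, 2)

b = ols_fit(X, y)
```

In practice a least squares routine such as `np.linalg.lstsq` is numerically safer when the columns of X are nearly collinear; the direct solve is used here only because it mirrors the formula.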

b) Estimation of the variance of $y_i$; symbolically expressed as $\sigma^2\{y_i\}$.

Let e denote the vector of residuals from the model fit:

$$\underset{(n \times 1)}{e} = y - Xb = (y - \hat{y})$$

The sum of squares error (SSE) equals

$$\underset{(1 \times 1)}{SSE} = e'e = (y - \hat{y})'(y - \hat{y})$$

and the estimator for $\sigma^2\{y_i\}$ is expressed as:

$$\hat{\sigma}^2\{y_i\} = \frac{SSE}{n - p}, \quad \text{where } p = \text{number of regression parameters.}$$

Typically, $\hat{\sigma}^2\{y_i\}$ is referred to as the residual MSE.

c) Estimation of the variance of b; symbolically expressed as $\sigma^2\{b\}$.

Since

$$b = (X'X)^{-1}X'y$$

$$\mathrm{Var}(b) = [(X'X)^{-1}X']\,\mathrm{Var}(y)\,[(X'X)^{-1}X']' = \mathrm{Var}(y)\,[(X'X)^{-1}(X'X)(X'X)^{-1}] = \sigma^2\{y_i\}\,(X'X)^{-1}$$

the estimator for $\sigma^2\{b\}$ is expressed as:

$$\hat{\sigma}^2\{b\} = MSE\,(X'X)^{-1}$$

d) Estimation of the variance of $\hat{y}_i$; symbolically expressed as $\sigma^2\{\hat{y}_i\}$.

Since

$$\hat{y}_i = x'_{i,(1..p)}\, b$$

$$\sigma^2\{\hat{y}_i\} = x'_{i,(1..p)}\,\sigma^2\{b\}\,x_{i,(1..p)} = \sigma^2\{y_i\}\,[x'_{i,(1..p)}(X'X)^{-1}x_{i,(1..p)}]$$

the estimator for $\sigma^2\{\hat{y}_i\}$ is expressed as:

$$\hat{\sigma}^2\{\hat{y}_i\} = MSE\,[x'_{i,(1..p)}(X'X)^{-1}x_{i,(1..p)}]$$

where $x_{i,(1..p)}$, abbreviated $x_i$, is the p x 1 vector formed from the ith row of X.
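The three variance estimators above (residual MSE, the variance of b, and the variance of each fitted value) can be computed together. The following is a sketch under stated assumptions: `ols_variances` is a hypothetical helper name and the four data points are made up so that the results are easy to check by hand.

```python
import numpy as np

def ols_variances(X, y):
    """Return b, MSE, the estimated covariance matrix of b,
    and the estimated variance of each fitted value."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ X.T @ y
    e = y - X @ b                       # residual vector
    mse = float(e @ e) / (n - p)        # SSE / (n - p)
    cov_b = mse * XtX_inv               # MSE (X'X)^-1
    # x_i' (X'X)^-1 x_i for every row i of X
    quad = np.einsum("ij,jk,ik->i", X, XtX_inv, X)
    var_fitted = mse * quad
    return b, mse, cov_b, var_fitted

# Hypothetical data, for illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([1.0, 3.0, 2.0, 4.0])

b, mse, cov_b, var_fitted = ols_variances(X, y)
```

For these four points the fit is b = (1.3, 0.8) with SSE = 1.8, so MSE = 0.9 on n - p = 2 degrees of freedom.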

V) Statistical Inference for the Least Squares Regression Model.

a) ANOVA sum of squares decomposition.

$$\text{SS Total} = \text{SS Regression} + \text{SS Error}$$

$$\sum_{i=1}^{n}(y_i - \bar{y})^2 = \sum_{i=1}^{n}(\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{n}(y_i - \hat{y}_i)^2$$

Table 1. Regression ANOVA table.

| Source | SS | DF | MS | F-test |
|---|---|---|---|---|
| Regression | SSR | p-1 | SSR/(p-1) | MSR/MSE |
| Error | SSE | n-p | SSE/(n-p) | |
| Total | SST | n-1 | | |
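The entries of the regression ANOVA table can be computed directly from a fitted model. The sketch below is illustrative only: `regression_anova` is a hypothetical function name, the data are made up, and the F statistic is returned without a p-value because that would require an F distribution routine from outside NumPy.

```python
import numpy as np

def regression_anova(X, y):
    """Return the sums of squares, mean squares, and F statistic."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    b = np.linalg.lstsq(X, y, rcond=None)[0]
    fitted = X @ b
    y_bar = y.mean()
    sst = float(np.sum((y - y_bar) ** 2))        # total
    ssr = float(np.sum((fitted - y_bar) ** 2))   # regression
    sse = float(np.sum((y - fitted) ** 2))       # error
    msr = ssr / (p - 1)
    mse = sse / (n - p)
    return {"SST": sst, "SSR": ssr, "SSE": sse,
            "MSR": msr, "MSE": mse, "F": msr / mse}

# Hypothetical data, for illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([1.0, 3.0, 2.0, 4.0])

table = regression_anova(X, y)
```

The decomposition SST = SSR + SSE holds whenever the model includes an intercept, which is why X carries a leading column of ones.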

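[Figure: scatter plot of Y versus X with the fitted line, illustrating the decomposition of the total deviation $y_i - \bar{y}$ (SST) into the regression deviation $\hat{y}_i - \bar{y}$ (SSR) and the error deviation $y_i - \hat{y}_i$ (SSE).]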

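b) Hypothesis tests related to $\beta$

$$H_0: \beta_i = 0 \qquad H_a: \beta_i \neq 0$$

Test Statistic

$$t^* = \frac{b_i - 0}{\hat{\sigma}\{b_i\}} = \frac{b_i - 0}{\sqrt{MSE\,(X'X)^{-1}_{i,i}}}, \quad \text{where } t^* \sim t(n - p)$$

If $|t^*| \le t(1 - \alpha/2;\ n - p)$, conclude $H_0$.

If $|t^*| > t(1 - \alpha/2;\ n - p)$, conclude $H_a$.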
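A minimal sketch of the t-test for each coefficient follows. The helper name `t_statistics` and the data are hypothetical, and the critical value is supplied by hand from a standard t table because NumPy alone does not provide t quantiles.

```python
import numpy as np

def t_statistics(X, y):
    """Return b, the estimated standard errors of b, and t* = b_i / se(b_i)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ X.T @ y
    e = y - X @ b
    mse = float(e @ e) / (n - p)
    se = np.sqrt(mse * np.diag(XtX_inv))   # sqrt of MSE (X'X)^-1 diagonal
    return b, se, b / se

# Hypothetical data, for illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([1.0, 3.0, 2.0, 4.0])

b, se, t_star = t_statistics(X, y)

# t(0.975; n - p = 2) taken from a standard t table (alpha = 0.05).
t_crit = 4.303
conclude_ha = np.abs(t_star) > t_crit
```

With only two residual degrees of freedom the critical value is large, so neither coefficient is declared different from zero for this toy data set.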

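c) Confidence limits for $\hat{y}_i$ at a specific $x_i$.

$$(1 - \alpha)100\%\ \text{CL} = \hat{y}_i \pm t(1 - \alpha/2;\ n - p)\,\hat{\sigma}\{\hat{y}_i\} = \hat{y}_i \pm t(1 - \alpha/2;\ n - p)\sqrt{MSE\,(x_i'(X'X)^{-1}x_i)}$$

d) Prediction limits for a new $y_i$ at a specific $x_i$.

$$(1 - \alpha)100\%\ \text{PL} = \hat{y}_i \pm t(1 - \alpha/2;\ n - p)\,\hat{\sigma}\{y_{new,i}\} = \hat{y}_i \pm t(1 - \alpha/2;\ n - p)\sqrt{MSE\,(1 + x_i'(X'X)^{-1}x_i)}$$

Because $x_i$ includes the leading 1 for the intercept, the quadratic form $x_i'(X'X)^{-1}x_i$ already contains the $1/n$ term; the additional 1 in the prediction limit accounts for the variance of the new observation itself.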

e) Simultaneous confidence band for the regression line $Y = X\beta$.

$$(1 - \alpha)100\%\ \text{CB} = \hat{y}_i \pm \sqrt{p\,F_{(1-\alpha;\ p,\ n-p)}}\ \hat{\sigma}\{\hat{y}_i\} = \hat{y}_i \pm \sqrt{p\,F_{(1-\alpha;\ p,\ n-p)}\ MSE\,[x_i'(X'X)^{-1}x_i]}$$

where

p = the number of regression parameters.

F = the critical value of an F-distribution with p and n - p df, evaluated at the $(1 - \alpha)100$ percentile.
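The three interval types differ only in the multiplier and in the variance term, which the sketch below makes explicit. It is an illustration under stated assumptions: `interval_half_widths` is a hypothetical name, the data are made up, the critical values are read from standard t and F tables rather than computed, and the prediction limit uses $MSE(1 + x'(X'X)^{-1}x)$ with x including the leading 1 for the intercept.

```python
import numpy as np

def interval_half_widths(X, y, x0, t_crit, f_crit):
    """Return the fitted value at x0 and the half-widths of the
    confidence limit (CL), prediction limit (PL), and confidence band (CB)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x0 = np.asarray(x0, dtype=float)
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ X.T @ y
    e = y - X @ b
    mse = float(e @ e) / (n - p)
    q = float(x0 @ XtX_inv @ x0)                 # x0' (X'X)^-1 x0
    y0 = float(x0 @ b)
    cl = t_crit * np.sqrt(mse * q)               # mean response at x0
    pl = t_crit * np.sqrt(mse * (1.0 + q))       # one new observation at x0
    cb = np.sqrt(p * f_crit) * np.sqrt(mse * q)  # whole regression line
    return y0, cl, pl, cb

# Hypothetical data, for illustration only.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([1.0, 3.0, 2.0, 4.0])

# Table values for alpha = 0.05: t(0.975; 2) and F(0.95; 2, 2).
y0, cl, pl, cb = interval_half_widths(X, y, x0=[1.0, 1.5],
                                      t_crit=4.303, f_crit=19.0)
```

At any given point the prediction limit and the confidence band are both wider than the confidence limit, which matches the ordering of the three curves in a typical plot of these intervals.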

[Figure: plot of Y versus X showing the fitted regression line with the confidence limits (CL), the simultaneous confidence band (CB), and the prediction limits (PL).]

When to Use CL, CB, and PL.

Apply CL when your goal is to predict the expected value of $y_i$ for one specific vector of predictors $x_i$ within the range of the data.

Apply CB when your goal is to predict the expected value of $y_i$ for all vectors of predictors $x_i$ within the range of the data.

Apply PL when your goal is to predict the value of $y_i$ for one specific vector of predictors $x_i$ within the range of the data.

VI) Overview of the Model-Building Process

Data Collection → Data Reduction → Model Development → Model Diagnostics and Refinement → Model Validation → Interpretation

a) Data Collection

Controlled experiments.

Covariate information consists of explanatory variables that are under the experimenter's control.

Controlled experiments with supplemental variables.

Covariate information includes supplemental variables related to the characteristics of the experimental units, in addition to the variables that are under the experimenter's control.

Exploratory observational studies.

Covariate information may include a large number of variables related to the characteristics of the observational unit, none of which are under the investigator's control.

b) Data Reduction

Controlled experiments.

Variable reduction is typically not required because the

explanatory variables of interest are predetermined by the

experimenter.

Controlled experiments with supplemental variables.

Variable reduction is typically not required because the primary

explanatory variables of interest, as well as the supplemental

variables of interest are predetermined by the experimenter.

Exploratory observational studies.

Variable reduction is typically required because numerous sets of

explanatory variables will be examined. As a rule of thumb,

there should be at least 6-10 observations for every explanatory

variable in the model. (e.g. 5 predictors, 50 observations).

Data Reduction Methods.

Rank Predictors

Rank your predictors based on their general importance with respect

to the subject matter. Select the most important predictors using the

rule of thumb that for each predictor you need 6-10 independent

observations.

Cluster Predictors

Cluster your predictor variables based on a similarity measure, choosing one or perhaps two predictors from within each unique cluster (Cluster Analysis, in Johnson et al., 1999).

Create a Summary Composite Measure

Produce a composite summary measure (score) that is based on the original set of predictors and still retains the majority of the information contained within the original set of predictors (Principal Components Analysis, in Johnson et al., 1999).

c) Model Development.

Model development should first and foremost be driven by

your knowledge of the subject matter and by your hypotheses.

Graphics, such as a scatter-plot matrix can be utilized to

initially examine the univariate relationship between each

explanatory variable and the response, as well as the

relationship between each pair of the explanatory variables.

Constructing a correlation matrix may also be informative with

regard to quantitatively assessing the degree of the linear

association between each explanatory variable and the response

variable, as well as between each pair of the explanatory

variables.

Note that two explanatory variables that are highly correlated essentially provide the same information.
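A correlation matrix and a simple screen for highly correlated pairs can be sketched as follows. The function name, the variable names, the data, and the 0.9 cut-off are all assumptions made for illustration; the presentation does not prescribe a threshold.

```python
import numpy as np

def highly_correlated_pairs(data, names, threshold=0.9):
    """Return the Pearson correlation matrix and the pairs of columns
    whose absolute correlation is at least `threshold`."""
    r = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(r[i, j]) >= threshold:
                pairs.append((names[i], names[j], float(r[i, j])))
    return r, pairs

# Hypothetical predictors: x2 is nearly a multiple of x1.
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
x2 = 2.0 * x1 + np.array([0.1, -0.1, 0.05, -0.05, 0.0])
x3 = np.array([5.0, 1.0, 4.0, 2.0, 3.0])
data = np.column_stack([x1, x2, x3])

r, pairs = highly_correlated_pairs(data, ["x1", "x2", "x3"])
```

Here only the pair (x1, x2) is flagged, so one of the two would be a candidate for removal or for combination into a composite measure.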

[Figure: Scatter Plots. Scatter-plot matrix of the explanatory variables $x_1$, $x_2$, $x_3$, $x_4$ and the response y.]

d) Model Diagnostics and Refinement.

Once you have fit your initial regression model the things to assess

include the following:

The Assumption of Constant Variance.

The Assumption of Normality.

The Correctness of Functional Form.

The Assumption of Additivity.

The Influence of Individual Observations on Model Stability.

All these model assessments can be carried out by using standard residual diagnostics provided in statistical packages such as SAS.

[Figure: Model Assumptions. Plot of Y versus X showing the conditional distributions of y at $x_1$, $x_2$, $x_3$, and $x_4$, each centered on the regression line E(y|x).]

Assessment of the Assumption of Constant Variance.

Plot the residuals from your model versus the fitted values. Examine whether the variability of the residuals remains relatively constant across the range of the fitted values.

Assessment of the Assumption of Normality.

Plot the residuals from your model versus their expected value under normality (Normal Probability Plot). The expected value of the kth order residual ($e_k$) is determined by the formula:

$$E(e_k) = \sqrt{MSE}\ z\!\left(\frac{k - 0.375}{n + 0.25}\right)$$

where MSE is the estimated residual variance from the regression model, $z(\alpha)$ denotes the $\alpha$ quantile of the standard normal distribution, and n is the total sample size.
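The expected values used on the horizontal axis of a normal probability plot follow directly from the formula above. This sketch uses only the Python standard library; the function name `expected_residuals` and the example inputs are hypothetical.

```python
import math
from statistics import NormalDist

def expected_residuals(n, mse):
    """Expected value of the k-th ordered residual under normality,
    for k = 1, ..., n: sqrt(MSE) * z((k - 0.375) / (n + 0.25))."""
    z = NormalDist().inv_cdf          # standard normal quantile function
    scale = math.sqrt(mse)
    return [scale * z((k - 0.375) / (n + 0.25)) for k in range(1, n + 1)]

# Hypothetical inputs: five residuals and a residual MSE of 1.
expected = expected_residuals(n=5, mse=1.0)
```

Sorting the observed residuals and plotting them against these values should give an approximately straight line when the normality assumption holds.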

[Figure: Assumption of Constant Variance. Two plots of model residuals versus fitted values, one where the assumption holds and one where it fails.]

[Figure: Assumption of Normality. Two plots of model residuals versus quantiles of the standard normal, one where the assumption holds and one where it fails.]

Assessment of Correct Functional Form.

Plot the residuals from a model which excludes the explanatory

variable of interest against the residuals from a model in which the

explanatory variable of interest is regressed on the remaining

explanatory variables. This type of plot is referred to as a partial

regression plot (Neter et al. 1996). Examine whether there is a

non-linear relationship between the two sets of residuals. If there is

a non-linear relationship, fit a higher order term in addition to the

linear term.

Assessment of the Assumption of Additivity.

For each plausible interaction, use a partial residual plot to examine whether or not there is a systematic pattern in the residuals. If so, this may indicate that adding the interaction term to the model will enhance your model's predictive performance.
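The two sets of residuals plotted in a partial regression plot can be computed as sketched below. The function names and the simulated data are assumptions for illustration. A useful check, which is a standard property of least squares, is that the slope of the first set of residuals on the second equals the coefficient of the variable of interest in the full model.

```python
import numpy as np

def residuals(X, v):
    """Residuals from the least squares regression of v on the columns of X."""
    b = np.linalg.lstsq(X, v, rcond=None)[0]
    return v - X @ b

def partial_regression_residuals(X_rest, x_k, y):
    """Return e(x_k | X_rest) and e(y | X_rest), the two axes of the plot."""
    e_x = residuals(X_rest, x_k)   # variable of interest on the others
    e_y = residuals(X_rest, y)     # response on the others
    return e_x, e_y

# Simulated data, for illustration only.
rng = np.random.default_rng(1)
n = 30
x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)
y = 1.0 + 2.0 * x1 + 3.0 * x2 + rng.normal(scale=0.1, size=n)

X_rest = np.column_stack([np.ones(n), x1])      # model without x2
e_x, e_y = partial_regression_residuals(X_rest, x2, y)

slope = float(e_x @ e_y) / float(e_x @ e_x)     # line through the plot
b_full = np.linalg.lstsq(np.column_stack([X_rest, x2]), y, rcond=None)[0]
```

Curvature in the scatter of `e_y` against `e_x` would suggest adding a higher order term for the variable of interest.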

[Figure: Partial Regression Plots. Two panels plotting $e(Y \mid X_{-i})$ against $e(X_i \mid X_{-i})$, one showing a linear relationship and one showing a non-linear relationship.]

Assess the Influence of Individual Observations on Model Stability.

1) Identify outlying Y observations by examining the studentized residuals

$$r_i = \frac{e_i}{\sqrt{MSE\,(1 - h_{ii})}}$$

where $e_i$ is the value of the ith residual, MSE is the residual variance, and $h_{ii} = x_i'(X'X)^{-1}x_i$ (the ith diagonal element of the hat matrix).

2) Identify outlying X observations by examining what is referred to as the leverage.

$$h_{ii} = x_i'(X'X)^{-1}x_i$$

3) Evaluate the influence of the ith case on all n fitted values by examining the Cook's distance ($D_i$).

$$D_i = \frac{e_i^2}{p\,MSE}\left[\frac{h_{ii}}{(1 - h_{ii})^2}\right]$$

where p is the number of model parameters, and $e_i$, MSE, and $h_{ii}$ are defined as previously stated.
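The three influence measures can be computed from a single fit, as in this sketch. The function name `influence_measures` and the data are hypothetical; the last observation is deliberately placed far from the others in X so that its leverage stands out.

```python
import numpy as np

def influence_measures(X, y):
    """Return leverage h_ii, studentized residuals r_i, and Cook's distance D_i."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)   # x_i' (X'X)^-1 x_i
    b = XtX_inv @ X.T @ y
    e = y - X @ b
    mse = float(e @ e) / (n - p)
    r = e / np.sqrt(mse * (1.0 - h))
    d = (e ** 2 / (p * mse)) * h / (1.0 - h) ** 2
    return h, r, d

# Hypothetical data: the last case is an outlier in X.
x = np.array([0.0, 1.0, 2.0, 3.0, 10.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([1.0, 2.1, 2.9, 4.2, 6.0])

h, r, d = influence_measures(X, y)
```

The leverages always sum to p, the number of model parameters, which gives a quick sanity check on the computation.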

[Figure: Influence Measures. Scatter plot of Y versus X marking an observation with a large |studentized residual|, an observation with large leverage, and an observation with both a large Cook's distance and large leverage.]

[Figure: index plots, by residual index number, of the studentized residuals (observations 9, 22, and 38 labeled), the leverage (observations 28, 38, and 43 labeled), and the Cook's distance (observations 22 and 38 labeled).]

Some Remedial Measures

Non-Constant Variance

A transformation of the response variable to a new scale (e.g. log)

is often helpful in attaining equal residual variation across the range

of the predicted values. The Box-Cox transformation method

(Neter et al., 1996) can be utilized to determine the proper form of

the transformation. If there is no variance stabilizing transformation

which rectifies the situation an alternative approach is to use a more

robust estimator, such as iterative weighted least squares

(Myers, 1990).

Non-Normality

Generally, non-normality and non-constant variance go hand

in hand. An appropriate variance stabilizing transformation will

more than likely also be remedial in attaining normally distributed

residual error.

The Box-Cox Transformation

The Box-Cox procedure functions to identify a transformation from the family of power transformations on Y which corrects for skewness in the response distribution and for unequal error variance. The family of power transformations is of the form $Y' = Y^{\lambda}$, where $\lambda$ is determined from the data. This family encompasses the following simple transformations.

| Value of $\lambda$ | Transformation |
|---|---|
| 2.0 | $Y' = Y^2$ |
| 0.5 | $Y' = Y^{1/2}$ |
| 0 | $Y' = \log_e(Y)$ |
| -0.5 | $Y' = 1/Y^{1/2}$ |
| -1.0 | $Y' = 1/Y$ |

For each $\lambda$ value, the $Y_i$ observations are first standardized so that the magnitude of the error sum of squares does not depend on the value of $\lambda$.

$$W_i = \begin{cases} K_1\,(Y_i^{\lambda} - 1) & \lambda \neq 0 \\ K_2\,(\log_e Y_i) & \lambda = 0 \end{cases}$$

where

$$K_2 = \left(\prod_{i=1}^{n} Y_i\right)^{1/n}, \qquad K_1 = \frac{1}{\lambda\,K_2^{\lambda - 1}}$$

Once the standardized observations $W_i$ have been obtained for a given $\lambda$ value, they are regressed on the predictors X and the error sum of squares SSE is obtained. It can be shown that the maximum likelihood estimate for $\lambda$ is the value of $\lambda$ for which SSE is minimum. We therefore choose the value of $\lambda$ which produces the smallest SSE.
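The search described above can be sketched as a loop over a grid of candidate values. The function names, the grid, and the data are assumptions for illustration; the response is generated so that its logarithm is exactly linear in x, which means the search should select 0 (the log transformation).

```python
import numpy as np

def boxcox_standardize(y, lam):
    """Standardized Box-Cox transform W_i for a given lambda."""
    y = np.asarray(y, dtype=float)
    k2 = np.exp(np.mean(np.log(y)))          # geometric mean of the Y_i
    if lam == 0:
        return k2 * np.log(y)
    k1 = 1.0 / (lam * k2 ** (lam - 1.0))
    return k1 * (y ** lam - 1.0)

def boxcox_sse(X, y, lam):
    """SSE from regressing the standardized W on X."""
    w = boxcox_standardize(y, lam)
    b = np.linalg.lstsq(X, w, rcond=None)[0]
    e = w - X @ b
    return float(e @ e)

def best_lambda(X, y, grid):
    """Return the grid value with the smallest SSE."""
    return min(grid, key=lambda lam: boxcox_sse(X, y, lam))

# Hypothetical data: log(y) is exactly linear in x.
x = np.arange(1.0, 9.0)
X = np.column_stack([np.ones_like(x), x])
y = np.exp(1.0 + 0.5 * x)

grid = [-1.0, -0.5, 0.0, 0.5, 1.0, 2.0]
lam_hat = best_lambda(X, y, grid)
```

The response must be strictly positive for the transformation to be defined; a finer grid can be used once the coarse search has located the region of the minimum.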

Outliers

Outliers, either with respect to the response variable, or with respect

to the explanatory variables can have a major influence on the values

of the regression parameter estimates. Gross outliers should always

be checked first for data authenticity. In terms of the response

variable, if there is no legitimate reason to remove the offending

observations it may be informative to fit the regression model

with and without the outliers. If statistical inference changes

depending on the inclusion or the exclusion of the outliers, it is

probably best to use a robust form of regression, such as least

median squares or least absolute deviation regression (Myers, 1990).

For the explanatory variables, if your data set is reasonably large, it is generally recommended to use some form of truncation that reduces the range of the offending explanatory variable.

e) Model Validation

Model validation applies mainly to those models that will be utilized

as a predictive tool. Types of validation procedure include:

External Validation.

Predictive accuracy is determined by applying your model to a new

sample of data. External validation, when feasible should always

be your first choice for the method of model validation.

Internal Validation.

Predictive accuracy can be determined by first fitting your model

to a subset of the data and then applying your model to the data that

you withheld from the model building process (Cross-validation).

Alternatively, measures of predictive accuracy can be evaluated by a bootstrap re-sampling procedure. The bootstrap procedure provides a measure of the optimism that is induced by optimizing your model's fit to your sample of data.

VII) An Example Case Study.

A hospital surgical unit was interested in predicting survival in patients undergoing a particular type of liver operation. A random sample of 54 patients was available for analysis. For each patient, the following information was extracted from the patient's pre-operative records.

$x_1$: blood clotting score.

$x_2$: prognostic index, which includes the age of the patient.

$x_3$: enzyme function test score.

$x_4$: liver function test score.

Taken from Neter et al. (1996).

Summary Statistics

| Variable | n | Mean | SD | SE | Median | Min | Max |
|---|---|---|---|---|---|---|---|
| Bld Clotting | 54 | 5.78 | 1.60 | 0.22 | 5.80 | 2.60 | 11.20 |
| Prog Index | 54 | 63.24 | 16.90 | 2.30 | 63.00 | 8.00 | 96.00 |
| Enzyme Fun | 54 | 77.11 | 21.25 | 2.89 | 79.00 | 23.00 | 119.00 |
| Liver Fun | 54 | 2.74 | 1.07 | 0.15 | 2.60 | 0.74 | 6.40 |
| Survival | 54 | 197.17 | 145.30 | 19.77 | 155.50 | 34.00 | 830.00 |

[Figure: Scatter Plots. Scatter-plot matrix of Blood Clotting, Prognostic Index, Enzyme Function, Liver Function, and Survival Time.]

Pearson Correlation Matrix

| Correlate | Blood Clotting | Prognostic Index | Enzyme Function | Liver Function | Survival Time |
|---|---|---|---|---|---|
| Bld Clotting | 1.000 | 0.090 | -0.150 | 0.502 | 0.372 |
| p-value | | 0.517 | 0.280 | 0.000 | 0.000 |
| Prog. Index | | 1.000 | -0.024 | 0.369 | 0.554 |
| p-value | | | 0.865 | 0.006 | 0.000 |
| Enzyme Function | | | 1.000 | 0.416 | 0.580 |
| p-value | | | | 0.002 | 0.000 |
| Liver Function | | | | 1.000 | 0.722 |
| p-value | | | | | 0.000 |

Things to Consider.

Functional Form

Are the explanatory variables linearly related to the response? If not, is there a transformation of the explanatory or the response variable that leads to a linear relationship? If only a few of the relationships between the explanatory and response variables are non-linear, it is best to begin by transforming the Xs, or by modeling the non-linearity.

Multicollinearity

Are there pairs of explanatory variables that appear to be highly correlated? A high degree of collinearity may cause the parameter standard errors to be substantially inflated, as well as induce the regression coefficients to flip sign.

[Figure: Scatter Plot. Scatter-plot matrix of Blood Clotting, Prognostic Index, Enzyme Function, Liver Function, and log(Survival Time).]

Pearson Correlation Matrix

| Correlate | Blood Clotting | Prognostic Index | Enzyme Function | Liver Function | $\log_{10}$(S Time) |
|---|---|---|---|---|---|
| Bld Clotting | 1.000 | 0.090 | -0.150 | 0.502 | 0.346 |
| p-value | | 0.517 | 0.280 | 0.000 | 0.010 |
| Prog. Index | | 1.000 | -0.024 | 0.369 | 0.593 |
| p-value | | | 0.865 | 0.006 | 0.000 |
| Enzyme Function | | | 1.000 | 0.416 | 0.665 |
| p-value | | | | 0.002 | 0.000 |
| Liver Function | | | | 1.000 | 0.726 |
| p-value | | | | | 0.000 |

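Model Statement

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.}) + \beta_4(\text{Liver Function})$$

| Term | b | SE(b) | t | P(T ≥ t) |
|---|---|---|---|---|
| Intercept | 0.4888 | 0.0502 | 9.729 | <0.0001 |
| Bld. Clotting | 0.0685 | 0.0054 | 12.596 | <0.0001 |
| Prog. Index | 0.0098 | 0.0004 | 21.189 | <0.0001 |
| Enzyme Fun. | 0.0095 | 0.0004 | 23.910 | <0.0001 |
| Liver Fun. | 0.0019 | 0.0097 | 0.198 | 0.8436 |

| $\hat{\sigma}(y_i) = \sqrt{MSE}$ | df | $R^2$ | $R^2_a$ |
|---|---|---|---|
| 0.0473 | 49 | 0.9724 | 0.9701 |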

Regression ANOVA Table

| Term | df | SS | MS | f | P(F ≥ f) |
|---|---|---|---|---|---|
| Regression | 4 | 3.9727 | 0.9932 | 431.10 | <0.0001 |
| Bld. Clotting | 1 | 0.4767 | 0.4767 | 212.79 | <0.0001 |
| Prog. Index | 1 | 1.2635 | 1.2635 | 564.04 | <0.0001 |
| Enzyme Fun. | 1 | 2.1122 | 2.1126 | 947.51 | <0.0001 |
| Liver Fun. | 1 | 0.0000 | 0.0000 | 0.0393 | 0.8436 |
| Residual | 49 | 0.1097 | 0.0022 | | |
| Total | 53 | 4.0477 | | | |


[Figure: Residual Diagnostics. Studentized residuals versus fitted values, and studentized residuals versus quantiles of the standard normal (r = 0.952).]

[Figure: Partial Regression Plots (Functional Form). Four panels: e(Y | -Blood Clotting) versus e(Blood Clotting | X); e(Y | -Prognostic Index) versus e(Prognostic Index | X); e(Y | -Enzyme Function) versus e(Enzyme Function | X); e(Y | -Liver Function) versus e(Liver Function | X).]

[Figure: Partial Regression Plots (Additivity). Six panels, one per pairwise interaction, each plotting e(Y | -interaction) against e(interaction | X): Bld Clotting x Prog. Index; Bld Clotting x Enzyme Fun; Bld Clotting x Liver Fun; Prog Index x Enzyme Fun; Prog Index x Liver Fun; Enzyme Fun x Liver Fun.]

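Model Statement

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.}) + \beta_4(\text{Liver Fun}) + \beta_5(\text{Bld. Clotting} \times \text{Liver Fun.})$$

| Term | b | SE(b) | t | P(T ≥ t) |
|---|---|---|---|---|
| Intercept | 0.3671 | 0.0682 | 5.386 | 0.0028 |
| Bld. Clotting | 0.0876 | 0.0092 | 9.511 | <0.0001 |
| Prog. Index | 0.0093 | 0.0004 | 22.419 | <0.0001 |
| Enzyme Fun. | 0.0095 | 0.0004 | 25.262 | <0.0001 |
| Liver Fun | 0.0389 | 0.0175 | 2.231 | 0.0304 |
| Bld C. x Liver Fun | -0.0059 | 0.0024 | -2.500 | 0.0159 |

| $\hat{\sigma}(y_i) = \sqrt{MSE}$ | df | $R^2$ | $R^2_a$ |
|---|---|---|---|
| 0.0450 | 48 | 0.9756 | 0.9724 |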

[Figure: Residual Diagnostics. Studentized residuals versus fitted values, and studentized residuals versus quantiles of the standard normal (r = 0.872); observation 22 is labeled in both panels.]

[Figure: index plots, by residual index number, of the studentized residuals (observations 9, 22, and 38 labeled), the leverage (observations 28, 38, and 43 labeled), and the Cook's distance (observations 22 and 38 labeled).]

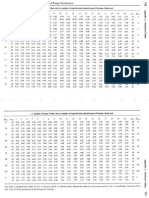

Summary of Influential Observations

| Index | Bld.C | Prog.Ind | Enzy.Fun | Liv.Fun | Surv | log.Surv |
|---|---|---|---|---|---|---|
| 9 | 6.00 | 67.00 | 93.00 | 2.50 | 202.00 | 2.31 |
| 22 | 3.40 | 83.00 | 53.00 | 1.12 | 136.00 | 2.13 |
| 28 | 11.20 | 76.00 | 90.00 | 5.59 | 574.00 | 2.76 |
| 38 | 4.30 | 8.00 | 119.00 | 2.85 | 120.00 | 2.08 |
| 43 | 8.80 | 86.00 | 88.00 | 6.40 | 483.00 | 2.68 |
| Mean | 5.78 | 63.24 | 77.11 | 2.74 | 197.16 | 19.77 |
| SD | 0.21 | 2.30 | 2.90 | 0.14 | 19.77 | 0.04 |

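Model Statement (excluding obs. 22, 38, and 43)

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.}) + \beta_4(\text{Liver Fun}) + \beta_5(\text{Bld. Clotting} \times \text{Liver Fun.})$$

| Term | b | SE(b) | t | P(T ≥ t) |
|---|---|---|---|---|
| Intercept | 0.2768 | 0.0574 | 4.822 | <0.0001 |
| Bld. Clotting | 0.0996 | 0.0081 | 12.343 | <0.0001 |
| Prog. Index | 0.0094 | 0.0004 | 24.684 | <0.0001 |
| Enzyme Fun. | 0.0096 | 0.0003 | 31.571 | <0.0001 |
| Liver Fun | 0.0566 | 0.0145 | 3.913 | 0.0003 |
| Bld C. x Liver Fun | -0.0084 | 0.0021 | -4.004 | 0.0002 |

| $\hat{\sigma}(y_i) = \sqrt{MSE}$ | df | $R^2$ | $R^2_a$ |
|---|---|---|---|
| 0.0432 | 44 | 0.9853 | 0.9832 |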

Bootstrap Validation

Original Index

Parameter estimate ($P_{org}$) obtained from the original fit of the OLS model.

$$E(Y_{org}) = X_{org}\,b$$

Train Index

For bootstrap random samples $i = 1, \ldots, B$, the parameter estimate ($P_{training,i}$) is obtained from an OLS model fit in which $X_{training,i} = X_{boot,i}$ and $Y_{training,i} = Y_{boot,i}$.

$$E(Y_{training,i}) = X_{training,i}\,b_{training,i}$$

Test Index

Parameter estimate ($P_{test,i}$) obtained from a model in which $X_{test,i} = X_{org}$ and $Y_{test,i} = Y_{org}$.

$$E(Y_{test,i}) = X_{test,i}\,b_{training,i}$$

Optimism

The optimism for the ith bootstrap sample is estimated by:

$$O_i = P_{training,i} - P_{test,i}$$

Index (Bias) Corrected

The bootstrap corrected estimate is computed as:

$$P_{corrected} = P_{org} - \frac{1}{B}\sum_{i=1}^{B} O_i$$
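The optimism correction can be sketched for one index, here R-square. This is an illustration under stated assumptions rather than the procedure used to produce the tables in this presentation: the function names, the simulated data, the seed, and the number of bootstrap samples are all hypothetical.

```python
import numpy as np

def r_squared(X, y, b):
    """R-square of coefficients b evaluated on the data (X, y)."""
    resid = y - X @ b
    return 1.0 - float(resid @ resid) / float(np.sum((y - y.mean()) ** 2))

def bootstrap_corrected_r2(X, y, n_boot=200, seed=0):
    """Return (P_org, P_corrected) for the R-square index."""
    rng = np.random.default_rng(seed)
    n = len(y)
    b_org = np.linalg.lstsq(X, y, rcond=None)[0]
    p_org = r_squared(X, y, b_org)
    optimism = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)           # sample rows with replacement
        X_boot, y_boot = X[idx], y[idx]
        b_train = np.linalg.lstsq(X_boot, y_boot, rcond=None)[0]
        p_train = r_squared(X_boot, y_boot, b_train)   # train index
        p_test = r_squared(X, y, b_train)              # test index on original data
        optimism.append(p_train - p_test)
    return p_org, p_org - float(np.mean(optimism))

# Simulated data, for illustration only.
rng = np.random.default_rng(42)
n = 40
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
X = np.column_stack([np.ones(n), x1, x2])
y = 1.0 + 2.0 * x1 - 1.0 * x2 + rng.normal(scale=0.5, size=n)

p_org, p_corrected = bootstrap_corrected_r2(X, y)
```

The same loop applies to any other index (for example MSE) by swapping out `r_squared` for the corresponding function.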

Bootstrap Model Validation

Full Model

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.}) + \beta_4(\text{Liver Fun}) + \beta_5(\text{Bld. Clotting} \times \text{Liver Fun.})$$

| Parameter | Index original | Index training | Index test | Optimism | Index corrected | N Bootstraps |
|---|---|---|---|---|---|---|
| R-square | 0.9756 | 0.9772 | 0.9712 | 0.0060 | 0.9695 | 500 |
| MSE | 0.0018 | 0.0016 | 0.0021 | -0.0005 | 0.0023 | 500 |
| Intercept | 0.0000 | 0.0000 | 0.0093 | -0.0093 | 0.0093 | 500 |
| Slope | 1.0000 | 1.0000 | 0.9959 | 0.0041 | 0.9959 | 500 |

Bootstrap Model Validation

Reduced Model

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.})$$

| Parameter | Index original | Index training | Index test | Optimism | Index corrected | N Bootstraps |
|---|---|---|---|---|---|---|
| R-square | 0.9723 | 0.9773 | 0.9691 | 0.0048 | 0.9678 | 500 |
| MSE | 0.0020 | 0.0018 | 0.0022 | -0.0005 | 0.0025 | 500 |
| Intercept | 0.0000 | 0.0000 | 0.0070 | -0.0070 | 0.0070 | 500 |
| Slope | 1.0000 | 1.0000 | 0.9966 | 0.0034 | 0.9966 | 500 |

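Model Statement

$$E(y \mid X) = \beta_0 + \beta_1(\text{Bld Clotting}) + \beta_2(\text{Prog. Index}) + \beta_3(\text{Enzyme Fun.})$$

| Term | b | SE(b) | t | P(T ≥ t) |
|---|---|---|---|---|
| Intercept | 0.4836 | 0.0426 | 11.345 | <0.0001 |
| Bld. Clotting | 0.0699 | 0.0041 | 16.975 | <0.0001 |
| Prog. Index | 0.0093 | 0.0003 | 24.300 | <0.0001 |
| Enzyme Fun. | 0.0095 | 0.0003 | 31.082 | <0.0001 |

| $\hat{\sigma}(y) = \sqrt{MSE}$ | df | $R^2$ | $R^2_a$ |
|---|---|---|---|
| 0.0432 | 50 | 0.9723 | 0.9694 |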

[Figure: Residual Diagnostics. Studentized residuals versus fitted values, and studentized residuals versus quantiles of the standard normal (r = 0.952).]

Interpretation

The model validation suggests that if we were to use the regression coefficients from the model which included terms for the patients' pre-operative blood clotting score, prognostic index, and enzyme function score on a new sample of patients, approximately 96.8% of the variation in postoperative survival time would be explained by this model.

If we were to use the regression coefficients from the model that also included the patients' pre-operative liver function score and a term for the blood clotting by liver function interaction on a new sample of patients, we would expect that approximately 96.9% of the variation in postoperative survival time would be explained by this model.

References

Johnson RA, Wichern DW. Applied Multivariate Statistical Analysis. (1999) Prentice Hall, Upper Saddle River, NJ.

Neter J, Kutner MH, Nachtsheim CJ, Wasserman W. Applied Linear Statistical Models. Fourth Edition. (1996) Irwin, Chicago, IL.

Myers RH. Classical and Modern Regression with Applications. Second Edition. (1990) Duxbury Press, Belmont, CA.

Вам также может понравиться

- Type 1 and Type 3 SS M&D TablesДокумент2 страницыType 1 and Type 3 SS M&D Tablesssheldon_222Оценок пока нет
