FACE RECOGNITION USING SUPPORT VECTOR MACHINES

Ashwin Swaminathan

ashwins@umd.edu

ENEE633: Statistical and Neural Pattern Recognition

Instructor : Prof. Rama Chellappa

Project 2, Part (b)

1. INTRODUCTION

Face recognition has a wide variety of applications, such as identity authentication, access control and surveillance. There has been a lot of research on face recognition over the past few years, dealing with different aspects of the problem. Algorithms have been proposed to recognize faces despite variations in viewpoint, illumination, pose and expression. This has led to increasingly sophisticated techniques for face recognition and has further enriched the literature on pattern classification. In this project, we study face recognition as a pattern classification problem. We extend the methods presented in Project 1 and use the Support Vector Machine [13] for classification. We consider three techniques in this work:

Principal Component Analysis

Fisher Linear Discriminant

Multiple Exemplar Discriminant Analysis

We apply these techniques to recognizing human faces and compare them in detail in terms of classification accuracy when the resulting features are classified with the SVM. Finally, we discuss tradeoffs and the reasons for the observed performance, and compare the results with those obtained in Project 1.

2. PRINCIPAL COMPONENT ANALYSIS FOR FACE RECOGNITION

2.1. Algorithm Description

The face is the primary focus of attention in society. The ability of human beings to remember and recognize faces is quite robust. Automating this process finds practical application in tasks such as criminal identification, security systems and human-computer interaction. Various attempts to implement this process have been made over the years.

Eigenface recognition is one such technique, and it relies on the method of principal components. The method is based on an information-theoretic approach that treats faces as intrinsically 2-dimensional entities spanning the feature space. It works well under carefully controlled experimental conditions but is error-prone in practical situations.

The method projects a face onto a multi-dimensional feature space that spans the gamut of human faces. A set of basis images is extracted from the database presented to the system by eigenvalue-eigenvector decomposition. Any face in the feature space is then characterized by a weight vector obtained by projecting it onto the set of basis images. When a new face is presented to the system, its weight vector is calculated and an SVM-based classifier is used for classification. The full details of the algorithm can be found in [1].
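The projection step described above can be sketched in a few lines of NumPy. This is only an illustration under our own assumptions, not the code used for the reported experiments: the function names `fit_eigenfaces` and `project` are hypothetical, the basis is obtained from an SVD of the mean-centered data (equivalent to the eigen-decomposition of the sample covariance), and the data are synthetic.

```python
import numpy as np

def fit_eigenfaces(train, n_basis):
    """Return (mean_face, basis) for flattened images stored as rows of `train`.

    train   : (n_images, n_pixels) array, one flattened face per row
    n_basis : number of basis images (eigenfaces) to keep
    """
    mean_face = train.mean(axis=0)
    centered = train - mean_face
    # Rows of vt are orthonormal eigenvectors of the sample covariance,
    # ordered by decreasing singular value (hence decreasing eigenvalue).
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:n_basis]

def project(faces, mean_face, basis):
    """Weight vectors: coordinates of each face in the eigenface basis."""
    return (faces - mean_face) @ basis.T

# Synthetic stand-in for a face database: 10 "faces" of 64 pixels each.
rng = np.random.default_rng(0)
train = rng.normal(size=(10, 64))
mean_face, basis = fit_eigenfaces(train, n_basis=5)
weights = project(train, mean_face, basis)   # one 5-dimensional weight vector per face
```

The weight vectors in `weights` are what a nearest neighbor rule or an SVM would then be trained on.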

2.2. Simulation Results

We study the performance of the PCA algorithm under varying illumination and expression conditions with both the nearest neighbor classifier and the SVM-based classifier. The provided sample dataset DATA.mat was used for simulation. This dataset contains images of 200 people with 3 pictures per person. The first image is the neutral face, the second shows the person with a facial expression, and the third has an illumination change. In our simulation, we used the neutral face for training and tested on the images of the person under expression and illumination change. The overall classification accuracy was 62% with the nearest neighbor classifier. The performance improved slightly, to around 65%, on using the SVM. We used four different kernel functions for the SVM, namely linear, polynomial, sigmoid and radial basis function (RBF). We noticed that the classification accuracy did not change much as the kernel type was changed for this dataset.
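For reference, the four kernel types named above can be written out in their common textbook forms. The parameter names `gamma`, `degree` and `coef0` and their default values are assumptions made for this sketch; the report does not state which parameter values were used.

```python
import math

def dot(x, y):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))

def linear_kernel(x, y):
    return dot(x, y)

def polynomial_kernel(x, y, degree=3, gamma=1.0, coef0=1.0):
    return (gamma * dot(x, y) + coef0) ** degree

def sigmoid_kernel(x, y, gamma=1.0, coef0=0.0):
    return math.tanh(gamma * dot(x, y) + coef0)

def rbf_kernel(x, y, gamma=1.0):
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

# Two toy feature vectors (for example, PCA weight vectors).
x, y = [1.0, 2.0], [3.0, 0.5]
values = {
    "linear": linear_kernel(x, y),
    "polynomial": polynomial_kernel(x, y),
    "sigmoid": sigmoid_kernel(x, y),
    "rbf": rbf_kernel(x, y),
}
```

An SVM only ever sees the data through such kernel values, which is why swapping the kernel can change the decision boundary without touching the features.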

When tested with illumination changes alone, the classification accuracy was around 65% with the nearest neighbor classifier and 68% with the SVM classifier. The corresponding results for expression variations were around 59% and 62%. This indicates that PCA is not a very good tool for face classification, mainly because it cannot handle illumination and expression variations. Further, for accurate and improved performance, PCA requires more images for training: the greater the number of images available for training, the better the performance. In this way, it can handle greater amounts of variation among individuals.

To better understand the performance of PCA, we study its detection accuracy when more images are available for training. In this case, we use the provided dataset POSE.mat. It contains images of 68 people, with 13 images per person under various illumination and pose conditions. Some sample images from this dataset are shown in Fig. 1, and the different poses for one particular person are shown in Fig. 2. For our analysis, we first consider the performance for all faces with the frontal pose. We noticed that image indices 3, 4, 9 and 10 correspond to a similar frontal pose, and we use these in our simulations. We train our classifier with two images per person and test it with the remaining two images. The results with both the nearest neighbor classifier and the SVM are shown in Fig. 3. We notice that the accuracy in this case is as high as 92% (with 20 basis vectors) and gradually increases with the number of basis vectors, up to around 97% (with 60 basis vectors). Thus, PCA performs better as the number of basis vectors is increased. We also notice that the nearest neighbor rule does not perform as well as the SVM: its detection accuracies are around 17% lower. This indicates the superiority of the SVM over the nearest neighbor rule for classification.

Next, we test the performance of PCA under the leave-one-out strategy. Here, out of the 13 images per person, we use 12 images for training and the remaining one for testing. The performance of the PCA algorithm under these conditions is shown in Table 1. The results with the nearest neighbor classifier are shown alongside those of the SVM-based classifier for comparison. In our experiments, we test two different types of SVM kernels, namely linear and polynomial.
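The leave-one-out protocol can be made concrete with a small index generator. It assumes, as our reading of the setup, that the same image index is left out for every subject at once; the function name and the `(subject, image_index)` identifiers are hypothetical.

```python
def leave_one_out_splits(n_subjects, n_images):
    """Yield (left_out, train_ids, test_ids) for each left-out image index.

    Samples are identified by (subject, image_index) pairs, both 1-based.
    """
    for left_out in range(1, n_images + 1):
        train_ids = [(s, k)
                     for s in range(1, n_subjects + 1)
                     for k in range(1, n_images + 1)
                     if k != left_out]
        test_ids = [(s, left_out) for s in range(1, n_subjects + 1)]
        yield left_out, train_ids, test_ids

# POSE.mat as described in the text: 68 subjects, 13 images each.
splits = list(leave_one_out_splits(n_subjects=68, n_images=13))
left_out, train_ids, test_ids = splits[6]   # the split that leaves out image 7
```

Each split then gives one row of a leave-one-out table: train on `train_ids`, test on `test_ids`, and report the fraction of test images assigned to the correct subject.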

We clearly observe from the results in the table that the SVM with a linear kernel outperforms the nearest neighbor rule in most cases when PCA is used to generate the features. We also note that the performance of the SVM classification method depends to a great extent on the choice of kernel function. Our results indicate that while the SVM with a linear kernel performs similarly to the SVM with a polynomial kernel, the SVMs trained with sigmoid and radial basis function kernels perform much worse. This leads us to conclude that the choice of kernel depends primarily on the kind of data to be classified. Next, we study the performance of Fisher Linear Discriminant Analysis.

Fig. 1. Sample Images from Pose.mat

3. FISHER DISCRIMINANT ANALYSIS

3.1. Algorithm Description

PCA takes advantage of the fact that, under admittedly idealized conditions, the variation within a class lies in a linear subspace of the image space [2]. Hence, the classes are convex and, therefore, linearly separable. One can perform dimensionality reduction using linear projection and still preserve linear separability. However, when the variation within classes is large, there is a lot of variance in the observed features, and this leads to wrong classification results.

This problem can be offset by considering a modified optimization problem that leads to a different set of basis vectors. Instead of finding the set of basis vectors that minimizes the representation error, we modify the cost function to improve classification accuracy. More specifically, the basic idea behind Fisher Linear Discriminant analysis is to find the set of vectors that minimizes the intra-cluster variability while maximizing the inter-cluster distances.

Fig. 2. Sample Pose Variations from Pose.mat

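The between-class scatter matrix is defined as

$$S_B = \sum_{i=1}^{c} N_i (\mu_i - \mu)(\mu_i - \mu)^T \qquad (1)$$

and the within-class scatter matrix is defined as

$$S_W = \sum_{i=1}^{c} \sum_{x_k \in X_i} (x_k - \mu_i)(x_k - \mu_i)^T \qquad (2)$$

where $\mu_i$ is the mean of the features in class $i$, $\mu$ is the overall mean, $N_i$ is the number of data points in class $i$, $X_i$ is the set of data points in class $i$, $x_k$ stands for the data points and $c$ represents the number of classes.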
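As a rough illustration of Eqs. (1) and (2), the two scatter matrices can be accumulated class by class. The sketch below follows the standard definitions from [2]; the function name `scatter_matrices` and the synthetic data are assumptions made for the example.

```python
import numpy as np

def scatter_matrices(X, labels):
    """Between-class S_B and within-class S_W, following Eqs. (1) and (2).

    X      : (n_samples, n_features) array of feature vectors
    labels : (n_samples,) array of class labels
    """
    mu = X.mean(axis=0)                      # overall mean
    d = X.shape[1]
    S_B = np.zeros((d, d))
    S_W = np.zeros((d, d))
    for c in np.unique(labels):
        X_i = X[labels == c]                 # data points of class i
        mu_i = X_i.mean(axis=0)              # class mean
        diff = (mu_i - mu)[:, None]
        S_B += len(X_i) * (diff @ diff.T)    # N_i (mu_i - mu)(mu_i - mu)^T
        centered = X_i - mu_i
        S_W += centered.T @ centered         # sum of (x_k - mu_i)(x_k - mu_i)^T
    return S_B, S_W

# Synthetic data: 3 classes, 10 samples each, 4 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 4))
labels = np.repeat(np.arange(3), 10)
S_B, S_W = scatter_matrices(X, labels)

# Total scatter about the overall mean, for a consistency check.
S_T = (X - X.mean(axis=0)).T @ (X - X.mean(axis=0))
```

With these definitions the total scatter splits exactly as S_T = S_B + S_W, which is a convenient check on any implementation.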

Fisher linear discriminant analysis finds the features that maximize the cost function given by

$$J = \frac{|W^T S_B W|}{|W^T S_W W|} \qquad (3)$$

where the columns of $W$ are the basis vectors.

Fig. 3. Percentage accuracy of PCA when tested with frontal images and classified using the nearest neighbor rule and the SVM. (Plot of detection accuracy versus number of basis vectors, 10 to 60, for the SVM classifier and the nearest neighbor rule.)

This can be solved as a generalized eigenvalue problem, and thus the final solution can be expressed as a solution of

$$S_B W_i = \lambda_i S_W W_i \qquad (4)$$

In our implementation, we first perform PCA on the input data to reduce its dimensionality. We then perform a Fisher analysis on the reduced features. The resulting FLD basis vectors are used in classification. For full details of the implementation, readers are referred to [2] and the references therein.
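One possible way to solve the generalized eigenvalue problem of Eq. (4) with plain NumPy is to whiten with a Cholesky factor of $S_W$ and then solve an ordinary symmetric eigenproblem. This is a sketch under the assumption that $S_W$ is symmetric positive definite (which the preceding PCA step is meant to ensure); the function name `fld_basis` and the synthetic data are hypothetical, and the report does not say which solver was actually used.

```python
import numpy as np

def fld_basis(S_B, S_W, n_basis):
    """Top generalized eigenpairs of S_B w = lambda S_W w.

    Assumes S_W is symmetric positive definite.
    """
    L = np.linalg.cholesky(S_W)                        # S_W = L L^T
    # Reduce to a symmetric standard problem: (L^-1 S_B L^-T) v = lambda v
    A = np.linalg.solve(L, np.linalg.solve(L, S_B).T)
    evals, V = np.linalg.eigh((A + A.T) / 2)           # symmetrize against round-off
    order = np.argsort(evals)[::-1][:n_basis]          # largest eigenvalues first
    W = np.linalg.solve(L.T, V[:, order])              # back-substitute: w = L^-T v
    return evals[order], W

# Synthetic data: 4 classes, 10 samples each, 5 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 5))
labels = np.repeat(np.arange(4), 10)
mu = X.mean(axis=0)
S_B = np.zeros((5, 5))
S_W = np.zeros((5, 5))
for c in range(4):
    X_i = X[labels == c]
    m = X_i.mean(axis=0)
    S_B += len(X_i) * np.outer(m - mu, m - mu)
    S_W += (X_i - m).T @ (X_i - m)

evals, W = fld_basis(S_B, S_W, n_basis=3)   # at most c - 1 = 3 useful directions
features = X @ W                            # FLD features for each sample
```

Only c - 1 eigenvalues can be non-zero because $S_B$ has rank at most c - 1, which is why the example keeps three directions for four classes.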

3.2. Simulation Results

The FLD algorithm was simulated. We observed that the resulting Fisher faces are very noisy for the POSE.mat dataset, which has a very high degree of pose variation across people. This is one of the problems that has been observed for Fisher analysis in the literature [5], and it has led to improved algorithms for finding the Fisher faces. Some works have proposed using a modified version of the within-class scatter matrix, replacing $S_W$ by $S_W + \epsilon I$, where $I$ is the identity matrix and $\epsilon$ is an appropriately chosen threshold. Our analysis with the modified definition of $S_W$ gave better results, with less noisy Fisher faces. Further details of the implementation can be found in [5].
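The effect of adding a scaled identity to the within-class scatter matrix can be seen on a small synthetic example in which there are fewer samples than feature dimensions. The threshold value `eps=1e-3` below is an arbitrary choice for illustration, not the value used in the experiments, and `regularize` is a hypothetical helper name.

```python
import numpy as np

def regularize(S_W, eps):
    """Return S_W plus eps times the identity (the modified within-class scatter)."""
    return S_W + eps * np.eye(S_W.shape[0])

# Fewer samples than feature dimensions makes S_W rank-deficient.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 10))             # 6 samples, 10 features, one class
centered = X - X.mean(axis=0)
S_W = centered.T @ centered              # rank at most 5, so not invertible

S_W_reg = regularize(S_W, eps=1e-3)
rank_before = np.linalg.matrix_rank(S_W)
rank_after = np.linalg.matrix_rank(S_W_reg)
L = np.linalg.cholesky(S_W_reg)          # succeeds only for positive definite input
```

After regularization the matrix has full rank, so the generalized eigenvalue problem is well posed even when the training set is small relative to the feature dimension.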

Table 1. Percentage accuracy of PCA under leave one out for POSE.mat

| Index of left-out sample | Nearest neighbor rule (%) | SVM, linear kernel (%) | SVM, polynomial kernel (%) |
|---|---|---|---|
| 1 | 80.88 | 100 | 98.53 |
| 2 | 83.82 | 100 | 98.53 |
| 3 | 92.65 | 88.24 | 86.76 |
| 4 | 100 | 100 | 100 |
| 5 | 92.65 | 97.06 | 92.65 |
| 6 | 91.18 | 97.06 | 97.06 |
| 7 | 26.47 | 33.82 | 32.35 |
| 8 | 86.76 | 76.47 | 69.12 |
| 9 | 98.53 | 100 | 100 |
| 10 | 92.65 | 100 | 100 |
| 11 | 94.12 | 100 | 94.12 |
| 12 | 23.53 | 32.53 | 20.21 |
| 13 | 67.65 | 67.65 | 67.65 |

The modified scheme was implemented. We observed that the performance of FLD for face recognition is better than that of PCA in most cases. In our implementation, we used the POSE.mat dataset containing 68 subjects, with 13 images per person. We used 12 images for training and the remaining one for testing. More specifically, we first performed PCA to reduce the dimensionality of the data from 1920 to 748 by retaining the projections along the most significant eigenvector directions. A Fisher analysis was then performed on these projection values, and a set of 67 features was obtained for each class. We then used the nearest neighbor rule for classification and compared its performance with that of the SVM-based classifiers.
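The dimensions quoted here (1920 to 748 to 67) are consistent with the Fisherfaces recipe in [2], in which PCA first reduces the data to $N - c$ dimensions so that $S_W$ becomes nonsingular, and FLD then yields at most $c - 1$ discriminant directions. Assuming every one of the $c = 68$ subjects contributes 12 training images (our reading of the leave-one-out setup), the arithmetic works out as:

$$N = 68 \times 12 = 816, \qquad N - c = 816 - 68 = 748, \qquad c - 1 = 68 - 1 = 67.$$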

Detailed studies were performed on the algorithm with the POSE.mat dataset. We observed that FLD on average performed much better than PCA in most cases. Moreover, we note that we used all the eigenvectors in the case of PCA, while we retained only the top 67 basis vectors in the case of FLD. This also provides much better data compression. To further study the performance improvement, we considered the leave-one-out case in greater detail. We obtained the percentage accuracy for different cases based on the index of the sample left out of training. The results are shown in Table 2. For the sake of comparison, we show again the corresponding results for PCA. We observe that the average performance of FLD is better than that of PCA in most cases, but we also note that in some cases PCA did better than FLD in classification.

Next, we compare the performance of the SVM with the nearest neighbor classifier. From the results presented in Table 2, we observe that the SVM with the linear kernel performs as well as or better than the nearest neighbor rule in most cases. Although the SVM performs better, we also remark that the nearest neighbor rule is the simplest of the classifiers and requires the least computation. The SVM, on the other hand, is computationally more complex and involves solving a high-dimensional optimization problem. Thus, we observe a tradeoff between computational complexity and detection accuracy, and in applications where computational complexity is not a primary concern, the SVM would be a better choice.

Table 2. Percentage accuracy of PCA and FLD under leave one out for POSE.mat

| Index of left-out sample | PCA, nearest neighbor rule (%) | PCA, SVM linear kernel (%) | FLD, nearest neighbor rule (%) | FLD, SVM linear kernel (%) |
|---|---|---|---|---|
| 1 | 80.88 | 100 | 63.24 | 61.76 |
| 2 | 83.82 | 100 | 98.53 | 100 |
| 3 | 92.65 | 88.24 | 91.18 | 89.71 |
| 4 | 100 | 100 | 100 | 100 |
| 5 | 92.65 | 97.06 | 95 | 100 |
| 6 | 91.18 | 97.06 | 60.29 | 63.24 |
| 7 | 26.47 | 33.82 | 7.35 | 7.35 |
| 8 | 86.76 | 76.47 | 61.47 | 61.47 |
| 9 | 98.53 | 100 | 100 | 100 |
| 10 | 92.65 | 100 | 92.65 | 100 |
| 11 | 94.12 | 100 | 65 | 65 |
| 12 | 23.53 | 32.53 | 33.24 | 33.24 |
| 13 | 67.65 | 67.65 | 70.59 | 70.59 |

4. MULTIPLE EXEMPLAR DISCRIMINANT ANALYSIS

4.1. Algorithm Description

In the previous section, we studied the performance of FLD on face recognition. Although FLD works better than PCA, we noticed that the accuracy is quite low in many cases. For example, when we train with all images except image 7 (for all faces) and test on the image with index 7, the average accuracy was very low, around 7%. This leaves a lot of room for improvement.

This was the basic motivation behind the algorithm proposed by Zhou and Chellappa [5]. In that paper, the authors argue that the main disadvantage of FLD is the use of a single exemplar, and they propose to use multiple exemplars instead. More specifically, the authors redefine the within-class and between-class scatter matrices to get improved performance. In this case, $S_B$ and $S_W$ are redefined as

$$S_W = \sum_{i=1}^{c} \frac{1}{N_i^2} \sum_{x_j \in X_i} \sum_{x_k \in X_i} (x_j - x_k)(x_j - x_k)^T \qquad (5)$$

$$S_B = \sum_{i=1}^{c} \sum_{j=1, j \neq i}^{c} \frac{1}{N_i N_j} \sum_{x_m \in X_i} \sum_{x_k \in X_j} (x_m - x_k)(x_m - x_k)^T \qquad (6)$$

Multiple-exemplar discriminant analysis then finds the optimal set of basis vectors that maximizes

$$J = \frac{|W^T S_B W|}{|W^T S_W W|} \qquad (7)$$

with the new definitions of $S_B$ and $S_W$. For further details, readers are referred to [5].
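A direct, unoptimized reading of Eqs. (5) and (6) is sketched below. It enumerates all sample pairs explicitly, which also makes the higher training cost of MEDA visible. The function names `pairwise_scatter` and `meda_scatter` and the synthetic data are assumptions for illustration and are not taken from [5].

```python
import numpy as np

def pairwise_scatter(A, B):
    """Sum of (a - b)(a - b)^T over all rows a of A and all rows b of B."""
    diffs = (A[:, None, :] - B[None, :, :]).reshape(-1, A.shape[1])
    return diffs.T @ diffs

def meda_scatter(X, labels):
    """Return (S_W, S_B) following Eqs. (5) and (6)."""
    classes = [X[labels == c] for c in np.unique(labels)]
    d = X.shape[1]
    S_W = np.zeros((d, d))
    S_B = np.zeros((d, d))
    for i, X_i in enumerate(classes):
        # Eq. (5): all pairs inside class i, weighted by 1 / N_i^2
        S_W += pairwise_scatter(X_i, X_i) / len(X_i) ** 2
        for j, X_j in enumerate(classes):
            if j != i:
                # Eq. (6): all pairs across classes i and j, weighted by 1 / (N_i N_j)
                S_B += pairwise_scatter(X_i, X_j) / (len(X_i) * len(X_j))
    return S_W, S_B

# Synthetic data: 3 classes, 4 samples each, 3 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(12, 3))
labels = np.repeat(np.arange(3), 4)
S_W, S_B = meda_scatter(X, labels)
```

As a sanity check, the pairwise within-class term for class $i$ equals $2/N_i$ times the usual mean-centered scatter of that class, so Eq. (5) differs from Eq. (2) only in how the classes are weighted; the between-class term of Eq. (6) is where the multiple exemplars change the result.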

4.2. Simulation Results and Discussions

We implemented the MEDA method and studied its performance on the POSE.mat dataset. We chose images from 20 subjects for our study. The first situation that we considered is the leave-one-out case. In this case, we left out one image out of the 13 for testing and used the remaining images for training. In Table 3, we show the performance results and compare them with those of the FLD method described in the previous section. We observe that MEDA performed better than or equal to FLD in most cases. This improved performance can be attributed to the multiple exemplars used in the MEDA approach compared to FLD.

Next, we study the effect of the type of classifier. We show the corresponding classification results obtained using the SVM classifier in Table 3. The results indicate that the SVM classifier performs as well as or better than the nearest neighbor rule in most cases. This again demonstrates the superiority of the SVM for classification.

Although MEDA seems to perform better in many cases, the computational complexity of training with the MEDA method is very high compared to the regular FLD method. This prohibits its use in situations where low computational complexity is desired. However, training is a one-time process, and therefore MEDA might be preferred in applications where this is not an issue. The computational complexity during testing is the same in both cases, as both techniques use the nearest neighbor rule (or the SVM, as the case may be) for classification.

Table 3. Percentage accuracy of MEDA and FLD under leave one out for POSE.mat with 20 Images

| Index of left-out sample | MEDA, nearest neighbor rule (%) | FLD, nearest neighbor rule (%) | MEDA, SVM linear kernel (%) | FLD, SVM linear kernel (%) |
|---|---|---|---|---|
| 1 | 95 | 90 | 100 | 100 |
| 2 | 90 | 85 | 100 | 100 |
| 3 | 92 | 89 | 100 | 100 |
| 4 | 100 | 95 | 100 | 97 |
| 5 | 100 | 100 | 100 | 100 |
| 6 | 100 | 100 | 100 | 100 |
| 7 | 15 | 15 | 15 | 15 |
| 8 | 95 | 95 | 95 | 95 |
| 9 | 90 | 90 | 100 | 100 |
| 10 | 100 | 95 | 100 | 95 |
| 11 | 75 | 70 | 75 | 70 |
| 12 | 45 | 40 | 50 | 45 |
| 13 | 95 | 95 | 95 | 95 |

5. NEAREST FEATURE LINE METHOD FOR FACE RECOGNITION

5.1. Algorithm Description

The nearest feature line method was proposed by Li and Lu in 1999 [10]. This method is an extension of the PCA method for face recognition. Here, the authors first perform PCA to reduce the dimensionality of the input data. They then propose the nearest feature line method to classify the data into the various classes.

The basic idea behind this algorithm is to consider any two points in the same class. Given these points, the authors hypothesize that all the points that lie on the line joining them also belong to the same class. Therefore, the distance between a third point (the test point) and the class can be written in terms of the distance between the point and the line joining the two chosen training points. For the case of multiple training points, the authors extend this method by considering all possible pairs of points in the same class.

Mathematically, given two points $x_1$ and $x_2$ of the same training class, the algorithm finds the distance between the test point $x$ and the line joining these two points as

$$d(x, \{x_1, x_2\}) = \|x - p\| \qquad (8)$$

where $p$ is the projection of $x$ onto the line joining $x_1$ and $x_2$, and can be expressed as $p = x_1 + \mu (x_2 - x_1)$ with

$$\mu = \frac{(x - x_1) \cdot (x_2 - x_1)}{(x_2 - x_1) \cdot (x_2 - x_1)} \qquad (9)$$

For further details of the algorithm, readers are referred to the paper [10].
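Eqs. (8) and (9) translate almost directly into code. The sketch below uses only the Python standard library; the function names, the toy 2-D training points and the class labels "A" and "B" are made up for illustration, and each pair of training points is assumed to be distinct (otherwise the denominator of Eq. (9) is zero).

```python
import math
from itertools import combinations

def feature_line_distance(x, x1, x2):
    """Distance from x to the line through x1 and x2, as in Eqs. (8) and (9)."""
    direction = [b - a for a, b in zip(x1, x2)]          # x2 - x1
    offset = [xi - a for xi, a in zip(x, x1)]            # x - x1
    mu = (sum(o * d for o, d in zip(offset, direction))
          / sum(d * d for d in direction))               # Eq. (9)
    p = [a + mu * d for a, d in zip(x1, direction)]      # projection point
    return math.dist(x, p)                               # Eq. (8)

def nfl_classify(x, training):
    """Return (label, distance) of the nearest feature line over all same-class pairs."""
    best_label, best_dist = None, math.inf
    for label, points in training.items():
        for x1, x2 in combinations(points, 2):
            dist = feature_line_distance(x, x1, x2)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label, best_dist

# Toy 2-D "weight vectors" for two classes.
training = {
    "A": [(0.0, 0.0), (2.0, 0.0), (1.0, 0.5)],
    "B": [(0.0, 5.0), (2.0, 5.0)],
}
label, dist = nfl_classify((1.0, 1.0), training)
```

Note that the loop visits every pair of training points in every class for each test point, which is the source of the extra cost in the testing phase.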

5.2. Simulation Results

This algorithm was implemented. The simulation results were obtained for the POSE.mat dataset. Again, the leave-one-out strategy was tested: out of the 13 images available per person, one was left out for testing and training was performed on the remaining images. The results of our testing are shown in Table 4. The results indicate that the nearest feature line (NFL) method performs slightly better than PCA with the nearest neighbor rule. This is because, in some ways, it considers all the points on the line between two data points of the same class, and can therefore be understood as effectively increasing the number of samples available during training. The corresponding results for the SVM with a linear kernel are also shown in the table for comparison.

Thus, we observe from the table that on the POSE.mat dataset, the NFL method provides only marginal improvement. However, like the SVM, it is computationally intensive in the testing phase. We also notice that the SVM performs better than the NFL method in most of the cases.

6. COMPARISON STUDY WITH DIFFERENT DISTANCE METRICS

In this section, we study the performance under various distance metrics used in the nearest neighbor rule and compare the results with those of the SVM. For our analysis, we use only the PCA method and test it on the POSE.mat dataset. We consider the leave-one-out case and study the performance of PCA under three distance measures, namely the L1, L2 and L3 norms. The results are shown in Table 5. We observe that the performance varies a lot depending on the choice of norm. Our comparison of the three norms indicates that, on average, the L2 norm is better able to capture the variability and thus has superior performance. We also notice that the SVM with a linear kernel performs the best.
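To see why the choice of norm can change the nearest neighbor decision, consider the following small sketch. The gallery points and labels are invented so that the L1 norm and the L2 and L3 norms disagree; the function names are hypothetical.

```python
def minkowski_distance(a, b, p):
    """L_p distance between two equal-length vectors (p = 1, 2, 3, ...)."""
    return sum(abs(u - v) ** p for u, v in zip(a, b)) ** (1.0 / p)

def nearest_neighbor(query, gallery, p):
    """Label of the gallery vector closest to `query` under the L_p norm."""
    return min(gallery, key=lambda item: minkowski_distance(query, item[1], p))[0]

# "s1" differs from the query along one axis only; "s2" differs a little along both.
gallery = [("s1", (3.0, 0.0)), ("s2", (1.6, 1.6))]
query = (0.0, 0.0)

decisions = {p: nearest_neighbor(query, gallery, p) for p in (1, 2, 3)}
```

Under L1 the two coordinate differences of "s2" add up to more than the single large difference of "s1", while higher-order norms are dominated by the largest coordinate difference and therefore prefer "s2".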

Table 4. Percentage accuracy of PCA with Nearest Neighbor, NFL and SVM under leave one out for POSE.mat

| Index of left-out sample | PCA, nearest neighbor (%) | NFL (%) | PCA with SVM (%) |
|---|---|---|---|
| 1 | 80.88 | 80.88 | 100 |
| 2 | 83.82 | 84.23 | 100 |
| 3 | 92.65 | 92.65 | 88.24 |
| 4 | 100 | 100 | 100 |
| 5 | 92.65 | 93 | 97.06 |
| 6 | 91.18 | 91.29 | 97.06 |
| 7 | 26.47 | 27.15 | 33.82 |
| 8 | 86.76 | 88.47 | 76.47 |
| 9 | 98.53 | 100 | 100 |
| 10 | 92.65 | 94 | 68 |
| 11 | 94.12 | 94.8 | 82.35 |
| 12 | 23.53 | 24.44 | 32.53 |
| 13 | 67.65 | 69.23 | 67.65 |

7. CONCLUSIONS

In this project, we considered four different methods for face recognition: (1) Principal Component Analysis, (2) Fisher Linear Discriminant Analysis, (3) Multiple Exemplar Discriminant Analysis and (4) the Nearest Feature Line method. We studied their performance with the SVM-based classifier and with the nearest neighbor rule. The simulations were performed under various illumination, expression and pose variations. The performance results indicate that MEDA has a slight upper hand among the four methods with regard to the choice of features for classification.

Our comparison of the nearest neighbor rule and the SVM indicates that the SVM performs better in most cases. Further, we notice that the performance of the SVM depends critically on the choice of kernel function. Our results with four different kernels (linear, polynomial, radial basis function and sigmoid) indicate that the linear and polynomial kernels perform best for the datasets that we tested on. Thus, we infer that the choice of kernel is highly data dependent. We conclude that the SVM classifier seems to perform best, but care needs to be taken to choose the best kernel for classification.

Table 5. Percentage accuracy of PCA under different distance metrics under Leave one out strategy. *NN indicates Nearest Neighbor rule

| Index of left-out sample | NN* - L1 norm | NN* - L2 norm | NN* - L3 norm | NFL | SVM |
|---|---|---|---|---|---|
| 1 | 76.47 | 80.88 | 61.76 | 80.88 | 100 |
| 2 | 94.12 | 83.82 | 64.71 | 84.23 | 100 |
| 3 | 94.12 | 92.65 | 80.88 | 92.65 | 88.24 |
| 4 | 100 | 100 | 98.53 | 100 | 100 |
| 5 | 91.18 | 92.65 | 83.82 | 93 | 97.06 |
| 6 | 83.82 | 91.18 | 89.71 | 91.29 | 97.06 |
| 7 | 13.24 | 26.47 | 26.47 | 27.15 | 33.82 |
| 8 | 94.12 | 86.76 | 72.06 | 88.47 | 76.47 |
| 9 | 98.53 | 98.53 | 97.06 | 100 | 100 |
| 10 | 89.71 | 92.65 | 86.76 | 94 | 68 |
| 11 | 88.24 | 94.12 | 91.18 | 94.8 | 82.35 |
| 12 | 22.06 | 23.53 | 19.12 | 24.44 | 32.53 |
| 13 | 64.71 | 67.65 | 47.06 | 69.23 | 67.65 |

8. REFERENCES

[1] M. Turk, A. Pentland, Eigenfaces for Recognition, Journal of Cognitive Neuroscience, vol. 3, pp. 72-86, 1991.

[2] P. Belhumeur, J. Hespanha, and D. Kriegman, Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection, IEEE Trans. PAMI, vol. 19, pp. 711-720, 1997.

[3] K. Etemad and R. Chellappa, Discriminant Analysis for Recognition of Human Face Images, Journal of Optical Society of America A, pp. 1724-1733, 1997.

[4] W. Zhao, R. Chellappa, A. Rosenfeld, and J. Phillips, Face Recognition: A Literature Survey, to appear ACM Computing Surveys, 2003.

[5] S. K. Zhou and R. Chellappa, Multiple-Exemplar Discriminant Analysis for Face Recognition, ICIP.

[6] M. S. Bartlett, J. R. Movellan, T. R. Sejnowski, Face Recognition by Independent Component Analysis, IEEE Trans. on Neural Networks, vol. 13, no. 6, Nov. 2002.

[7] B. Moghaddam, T. Jebara, A. Pentland, Bayesian Face Recognition, MERL research report, Feb. 2002.

[8] B. Moghaddam, T. Jebara, A. Pentland, Bayesian Modeling of Facial Similarity, Adv. in Neural Info. Processing Systems 11, MIT Press, 1999.

[9] B. Moghaddam, A. Pentland, Probabilistic Visual Learning for Object Representation, Early Visual Learning, Oxford University Press, 1996.

[10] S. Z. Li, J. Lu, Face Recognition Using Nearest Feature Line Method, IEEE Trans. on Neural Networks, vol. 10, no. 2, 1999.

[11] M. Ramachandran, S. K. Zhou, R. Chellappa, D. Jhalani, Methods to convert smiling face to neutral face with applications to face recognition, IEEE ICASSP, March 2005.

[12] R. Chellappa, C. Wilson, and S. Sirohey, Human and Machine Recognition of Faces: A Survey, Proceedings of IEEE, vol. 83, pp. 705-740, 1995.

[13] C. J. C. Burges, A Tutorial on Support Vector Machines for Pattern Recognition, Kluwer Academic Publishers, Boston.

Вам также может понравиться

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- اخبار ايران و جهان - خبرگزاري فارس - Fars News AgencyДокумент1 страницаاخبار ايران و جهان - خبرگزاري فارس - Fars News AgencyzzzxxccvvvОценок пока нет

- CheckoutДокумент19 страницCheckoutzzzxxccvvvОценок пока нет

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Pearson New International Edition by From PearsonДокумент1 страницаPearson New International Edition by From PearsonzzzxxccvvvОценок пока нет

- Make It Easier For Other People To Find Your Content by Providing More Information About It.Документ1 страницаMake It Easier For Other People To Find Your Content by Providing More Information About It.zzzxxccvvvОценок пока нет

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Help Creating A DjVu File - Wikimedia CommonsДокумент6 страницHelp Creating A DjVu File - Wikimedia CommonszzzxxccvvvОценок пока нет

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- Varzesh 3Документ1 страницаVarzesh 3zzzxxccvvvОценок пока нет

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- Discoverability ScoreДокумент1 страницаDiscoverability Scorezzzxxccvvv0% (1)

- دروس مصوب گرایش نانو مهندسی پلیمرДокумент16 страницدروس مصوب گرایش نانو مهندسی پلیمرzzzxxccvvvОценок пока нет

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- کتاب پراهمیت فقط برای دانلودДокумент1 страницаکتاب پراهمیت فقط برای دانلودzzzxxccvvvОценок пока нет

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- Wiley - 4th Edition - Ronald LДокумент1 страницаWiley - 4th Edition - Ronald LzzzxxccvvvОценок пока нет

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Wiley - , 4th Edition - Ronald LДокумент1 страницаWiley - , 4th Edition - Ronald LzzzxxccvvvОценок пока нет

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Documents Found MatchingДокумент1 страницаDocuments Found MatchingzzzxxccvvvОценок пока нет

- Top Categoriestop Audiobook Categories: SearchДокумент5 страницTop Categoriestop Audiobook Categories: SearchzzzxxccvvvОценок пока нет

- 25 Documents Found MatchingДокумент1 страница25 Documents Found MatchingzzzxxccvvvОценок пока нет

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Information About It.Документ1 страницаInformation About It.zzzxxccvvvОценок пока нет

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- Improve Discoverability!Документ1 страницаImprove Discoverability!zzzxxccvvv29% (7)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Improve Discoverability!Документ1 страницаImprove Discoverability!zzzxxccvvvОценок пока нет

- Add AdditionalДокумент1 страницаAdd AdditionalzzzxxccvvvОценок пока нет

- Improve Discoverability!Документ1 страницаImprove Discoverability!zzzxxccvvvОценок пока нет

- Improve Discoverability!Документ1 страницаImprove Discoverability!zzzxxccvvvОценок пока нет

- Make It Easier For Other People To Find Your Content by Providing More Information About It.Документ1 страницаMake It Easier For Other People To Find Your Content by Providing More Information About It.zzzxxccvvvОценок пока нет

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- 25 Documents Found MatchingДокумент1 страница25 Documents Found MatchingzzzxxccvvvОценок пока нет

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Documents Found MatchingДокумент1 страницаDocuments Found MatchingzzzxxccvvvОценок пока нет

- Improve Discoverability!Документ1 страницаImprove Discoverability!zzzxxccvvv29% (7)

- Required FieldДокумент1 страницаRequired FieldzzzxxccvvvОценок пока нет

- 0007 Book NewДокумент1 страница0007 Book NewzzzxxccvvvОценок пока нет

- Finance Careers MichiganStateUniversityДокумент12 страницFinance Careers MichiganStateUniversityzzzxxccvvvОценок пока нет

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Managing Soils in Pecan OrchardsДокумент4 страницыManaging Soils in Pecan OrchardszzzxxccvvvОценок пока нет

- 0006Документ1 страница0006zzzxxccvvvОценок пока нет

- 0005 Book NewДокумент1 страница0005 Book NewzzzxxccvvvОценок пока нет

- Rule Based ClassificationsДокумент14 страницRule Based ClassificationsAmrusha NaallaОценок пока нет

- Data Compression (RCS 087)Документ51 страницаData Compression (RCS 087)sakshi mishraОценок пока нет

- SLMДокумент10 страницSLMBejita AsseiyaОценок пока нет

- Line Drawing Algorithms: y MX + B B The y Intercept of A LineДокумент23 страницыLine Drawing Algorithms: y MX + B B The y Intercept of A LineKrishan Pal Singh RathoreОценок пока нет

- Q1) An Array Contains 25 Positive Integers - Write A Module Which: A) Finds All The Require of Elements Whose Sum Is 25. AnsДокумент12 страницQ1) An Array Contains 25 Positive Integers - Write A Module Which: A) Finds All The Require of Elements Whose Sum Is 25. AnsKabirul IslamОценок пока нет

- EML4312 Chap06Документ32 страницыEML4312 Chap06Kareem BadrОценок пока нет

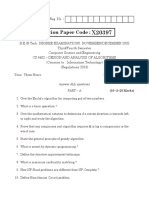

- Question Paper Code:: Reg. No.Документ3 страницыQuestion Paper Code:: Reg. No.Vijay VijayОценок пока нет

- Design: of AlgorithmsДокумент3 страницыDesign: of Algorithmspankaja_ssu3506Оценок пока нет

- Where's WallyДокумент4 страницыWhere's WallyFrank TangОценок пока нет

- A: Simplex Method: J I Ij BiДокумент4 страницыA: Simplex Method: J I Ij BiKimondo KingОценок пока нет

- Muhammad Akif Naeem Open Ended Lab Signals and SystemДокумент20 страницMuhammad Akif Naeem Open Ended Lab Signals and SystemMuhammad Akif NaeemОценок пока нет

- Final ExamДокумент4 страницыFinal ExamYingquan LiОценок пока нет

- 1 Non-Uniform Quantizer - PDFДокумент5 страниц1 Non-Uniform Quantizer - PDFABHISHEK BОценок пока нет

- 1-1 BasicsДокумент32 страницы1-1 BasicsRohan SrivastavaОценок пока нет

- Information Theory and CodingДокумент10 страницInformation Theory and CodingThe Anonymous oneОценок пока нет

- I Semester BCA Examination (NEP - SCHEME) : Subject: Computer ScienceДокумент2 страницыI Semester BCA Examination (NEP - SCHEME) : Subject: Computer Sciencechandan reddyОценок пока нет

- 10th CBSE (SA - 1) Revision Pack Booklet - 2 (Maths)Документ18 страниц10th CBSE (SA - 1) Revision Pack Booklet - 2 (Maths)anon_708612757Оценок пока нет

- ICPC Latin America Championship (Public)Документ3 страницыICPC Latin America Championship (Public)Ficapro Castro PariОценок пока нет

- A Comparative Study of K-Means and K-Medoid Clustering For Social Media Text MiningДокумент6 страницA Comparative Study of K-Means and K-Medoid Clustering For Social Media Text MiningIJASRETОценок пока нет

- Fundamentals of Algorithms - CS502 HandoutsДокумент4 страницыFundamentals of Algorithms - CS502 HandoutsPayal SharmaОценок пока нет

- Signals, Spectra, Signal Processing ECE 401 (TIP Reviewer)Документ40 страницSignals, Spectra, Signal Processing ECE 401 (TIP Reviewer)James Lindo100% (2)

- What Is Traversal?Документ14 страницWhat Is Traversal?deepinder singhОценок пока нет

- Galerkin MethodДокумент18 страницGalerkin MethodBurak Kılınç0% (1)

- The Characteristic Polynomial 1. The General Second Order Case and The Characteristic EquationДокумент3 страницыThe Characteristic Polynomial 1. The General Second Order Case and The Characteristic EquationVitor FelicianoОценок пока нет

- Tugas DDO 1 2021Документ4 страницыTugas DDO 1 2021RindangОценок пока нет

- Machine Learning LabДокумент13 страницMachine Learning LabKumaraswamy AnnamОценок пока нет

- DSP Lectures Full SetДокумент126 страницDSP Lectures Full SetAsad JaviedОценок пока нет

- L06 - Solution To A System of Linear Algebraic EquationsДокумент60 страницL06 - Solution To A System of Linear Algebraic EquationsSulaiman AL MajdubОценок пока нет

- Umass Lowell Computer Science 91.503: Graduate AlgorithmsДокумент46 страницUmass Lowell Computer Science 91.503: Graduate AlgorithmsShivam AtriОценок пока нет

- Laboratory Exercise 5: Digital Processing of Continuous-Time SignalsДокумент24 страницыLaboratory Exercise 5: Digital Processing of Continuous-Time SignalsPabloMuñozAlbitesОценок пока нет