
PowerCenter 9' Level 2 Developer Student Guide Lab


Informatica PowerCenter 8 Level II Developer Lab Guide

Version - PC8LIID 20060910

Informatica PowerCenter Level II Developer Lab Guide

Version 8.1

September 2006

Copyright (c) 1998-2006 Informatica Corporation.

All rights reserved. Printed in the USA.

This software and documentation contain proprietary information of Informatica Corporation and are provided under a license agreement containing restrictions on use and disclosure and are also protected by copyright law. Reverse engineering of the software is prohibited. No part of this document may be reproduced or transmitted in any form, by any means (electronic, photocopying, recording or otherwise) without prior consent of Informatica Corporation.

Use, duplication, or disclosure of the Software by the U.S. Government is subject to the restrictions set forth in the applicable software license agreement and as provided in DFARS 227.7202-1(a) and 227.7702-3(a) (1995), DFARS 252.227-7013(c)(1)(ii) (OCT 1988), FAR 12.212(a) (1995), FAR 52.227-19, or FAR 52.227-14 (ALT III), as applicable.

The information in this document is subject to change without notice. If you find any problems in the documentation, please report them to us in writing.

Informatica Corporation does not warrant that this documentation is error free. Informatica, PowerMart, PowerCenter, PowerChannel, PowerCenter Connect, MX, and SuperGlue are trademarks or registered trademarks of Informatica Corporation in the United States and in jurisdictions throughout the world. All other company and product names may be trade names or trademarks of their respective owners.

Portions of this software are copyrighted by DataDirect Technologies, 1999-2002.

Informatica PowerCenter products contain ACE (TM) software copyrighted by Douglas C. Schmidt and his research group at Washington University and University of California, Irvine, Copyright (c) 1993-2002, all rights reserved.

Portions of this software contain copyrighted material from The JBoss Group, LLC. Your right to use such materials is set forth in the GNU Lesser General Public License Agreement, which may be found at http://www.opensource.org/licenses/lgpl-license.php. The JBoss materials are provided free of charge by Informatica, as-is, without warranty of any kind, either express or implied, including but not limited to the implied warranties of merchantability and fitness for a particular purpose.

Portions of this software contain copyrighted material from Meta Integration Technology, Inc. Meta Integration is a registered trademark of Meta Integration Technology, Inc.

This product includes software developed by the Apache Software Foundation (http://www.apache.org/). The Apache Software is Copyright (c) 1999-2005 The Apache Software Foundation. All rights reserved.

This product includes software developed by the OpenSSL Project for use in the OpenSSL Toolkit and redistribution of this software is subject to terms available at http://www.openssl.org. Copyright 1998-2003 The OpenSSL Project. All Rights Reserved.

The zlib library included with this software is Copyright (c) 1995-2003 Jean-loup Gailly and Mark Adler.

The Curl license provided with this Software is Copyright 1996-200, Daniel Stenberg, <Daniel@haxx.se>. All Rights Reserved.

The PCRE library included with this software is Copyright (c) 1997-2001 University of Cambridge. Regular expression support is provided by the PCRE library package, which is open source software, written by Philip Hazel. The source for this library may be found at ftp://ftp.csx.cam.ac.uk/pub/software/programming/pcre.

InstallAnywhere is Copyright 2005 Zero G Software, Inc. All Rights Reserved.

Portions of the Software are Copyright (c) 1998-2005 The OpenLDAP Foundation. All rights reserved. Redistribution and use in source and binary forms, with or without modification, are permitted only as authorized by the OpenLDAP Public License, available at http://www.openldap.org/software/release/license.html.

This Software is protected by U.S. Patent Numbers 6,208,990; 6,044,374; 6,014,670; 6,032,158; 5,794,246; 6,339,775 and other U.S. Patents Pending.

DISCLAIMER: Informatica Corporation provides this documentation "as is" without warranty of any kind, either express or implied, including, but not limited to, the implied warranties of non-infringement, merchantability, or use for a particular purpose. The information provided in this documentation may include technical inaccuracies or typographical errors. Informatica could make improvements and/or changes in the products described in this documentation at any time without notice.


Informatica PowerCenter 8 Level II Developer iii

Table of Contents

Preface
  About This Guide
    Document Conventions
  Other Informatica Resources
    Obtaining Informatica Documentation
    Visiting Informatica Customer Portal
    Visiting the Informatica Web Site
    Visiting the Informatica Developer Network
    Visiting the Informatica Knowledge Base
    Obtaining Informatica Professional Certification
    Providing Feedback
    Obtaining Technical Support
Lab 1: Dynamic Lookup Cache
  Instructions
    Step 1: Create Mapping
    Step 2: Preview Target Data
    Step 3: View Source Data
    Step 4: Create Workflow
    Step 5: Run Workflow
    Step 6: Verify Statistics
    Step 7: Verify Results
Lab 2: Workflow Alerts
  Instructions
    Step 1: Setup
    Step 2: Mappings Required
    Step 3: Reusable Sessions Required
    Step 4: Create a Workflow
    Step 5: Create a Worklet in the Workflow
    Step 6: Create a Timer Task in the Worklet
    Step 7: Create an E-Mail Task in the Worklet
    Step 8: Create a Control Task in the Worklet
    Step 9: Add Reusable Session to the Worklet
    Step 10: Link Tasks in Worklet
    Step 11: Add Reusable Session to the Workflow
    Step 12: Link Tasks in Workflow
    Step 13: Run Workflow
Lab 3: Dynamic Scheduling
  Instructions
    Step 1: Setup
    Step 2: Mapping Required
    Step 3: Copy Reusable Sessions
    Step 4: Create Workflow
    Step 5: Create Workflow Variables
    Step 6: Add Session to Workflow
    Step 7: Create a Timer Task
    Step 8: Create an Assignment Task
    Step 9: Link Tasks
    Step 10: Run Workflow by Editing the Workflow Schedule
    Step 11: Monitor the Workflow
Lab 4: Recover a Suspended Workflow
  Instructions
    Step 1: Copy the Workflow
    Step 2: Edit the Workflow and Session for Recovery
    Step 3: Edit the Session to Cause an Error
    Step 4: Run the Workflow, Fix the Session, and Recover the Workflow
Lab 5: Using the Transaction Control Transformation
  Instructions
    Step 1: Create Mapping
    Step 2: Create Workflow
    Step 3: Run Workflow
    Step 4: Verify Statistics
    Step 5: Verify Results
Lab 6: Error Handling with Transactions
  Instructions
    Step 1: Create Mapping
    Step 2: Create Workflow
    Step 3: Run Workflow
    Step 4: Verify Statistics
    Step 5: Verify Results
Lab 7: Handling Fatal and Non-Fatal Errors
  Instructions
    Step 1: Create Mapping
    Step 2: Create Workflow
    Step 3: Run Workflow
    Step 4: Verify Statistics
    Step 5: Verify Results
Lab 8: Repository Queries
  Instructions
    Step 1: Create a Query to Search for Targets with Customer
    Step 2: Validate, Save, and Run the Query
    Step 3: Create A Query to Search For Mapping Dependencies
    Step 4: Validate, Save, and Run the Query
    Step 5: Modify and Run the Query
    Step 6: Run the Query Accessed by the Repository Manager
    Step 7: Create Your Own Queries
Lab 9: Performance and Tuning Workshop
  Workshop Details
    Overview
    Workshop Rules
    Establish ETL Baseline
    Documented Results
Lab 10: Partitioning Workshop
  Workshop Scenarios
    Scenario 1
    Scenario 2
    Scenario 3
    Scenario 4
    Answers


Preface

Welcome to PowerCenter, Informatica's software product that delivers an open, scalable data integration solution addressing the complete life cycle for all data integration projects including data warehouses and data marts, data migration, data synchronization, and information hubs. PowerCenter combines the latest technology enhancements for reliably managing data repositories and delivering information resources in a timely, usable, and efficient manner.

The PowerCenter metadata repository coordinates and drives a variety of core functions, including extracting, transforming, loading, and managing data. The Integration Service can extract large volumes of data from multiple platforms, handle complex transformations on the data, and support high-speed loads. PowerCenter can simplify and accelerate the process of moving data warehouses from development to test to production.

Preface

vii i Informati ca PowerCenter 8 Level II Devel oper

About This Guide

Welcome to the PowerCenter 8 Level II Developer course.

This course is designed for data integration and data warehousing implementers. You should be familiar with PowerCenter, data integration and data warehousing terminology, and Microsoft Windows.

Document Conventions

This guide uses the following formatting conventions:

If you see | It means | Example
> | Indicates a submenu to navigate to. | Click Repository > Connect. In this example, you should click the Repository menu or button and choose Connect.
boldfaced text | Indicates text you need to type or enter. | Click the Rename button and name the new source definition S_EMPLOYEE.
UPPERCASE | Database tables and column names are shown in all UPPERCASE. | T_ITEM_SUMMARY
italicized text | Indicates a variable you must replace with specific information. | Connect to the Repository using the assigned login_id.
Note: | The following paragraph provides additional facts. | Note: You can select multiple objects to import by using the Ctrl key.
Tip: | The following paragraph provides suggested uses or a Velocity best practice. | Tip: The m_ prefix for a mapping name is


Other Informatica Resources

In addition to the student guides, Informatica provides these other resources:

Informatica Documentation

Informatica Customer Portal

Informatica web site

Informatica Developer Network

Informatica Knowledge Base

Informatica Professional Certification

Informatica Technical Support

Obtaining Informatica Documentation

You can access Informatica documentation from the product CD or online help.

Visiting Informatica Customer Portal

As an Informatica customer, you can access the Informatica Customer Portal site at http://my.informatica.com. The site contains product information, user group information, newsletters, access to the Informatica customer support case management system (ATLAS), the Informatica Knowledge Base, and access to the Informatica user community.

Visiting the Informatica Web Site

You can access Informatica's corporate web site at http://www.informatica.com. The site contains information about Informatica, its background, upcoming events, and how to locate your closest sales office. You will also find product information, as well as literature and partner information. The services area of the site includes important information on technical support, training and education, and implementation services.

Visiting the Informatica Developer Network

The Informatica Developer Network is a web-based forum for third-party software developers. You can access the Informatica Developer Network at the following URL:

http://devnet.informatica.com

The site contains information on how to create, market, and support customer-oriented add-on solutions based on interoperability interfaces for Informatica products.

Visiting the Informatica Knowledge Base

As an Informatica customer, you can access the Informatica Knowledge Base at http://my.informatica.com. The Knowledge Base lets you search for documented solutions to known technical issues about Informatica products. It also includes frequently asked questions, technical white papers, and technical tips.

Obtaining Informatica Professional Certification

You can obtain Informatica Professional Certification by taking and passing exams provided by Informatica.

For more information, go to:

http://www.informatica.com/services/education_services/certification/default.htm


Providing Feedback

Email any comments on this guide to aconlan@informatica.com.

Obtaining Technical Support

There are many ways to access Informatica Technical Support. You can call or email your nearest Technical Support Center listed below, or you can use our WebSupport Service.

Use the following email addresses to contact Informatica Technical Support:

support@informatica.com for technical inquiries

support_admin@informatica.com for general customer service requests

WebSupport requires a user name and password. You can request a user name and password at http://my.informatica.com.

North America / South America

Informatica Corporation Headquarters
100 Cardinal Way
Redwood City, California 94063
United States
Toll Free: 877 463 2435
Standard Rate: United States: 650 385 5800

Europe / Middle East / Africa

Informatica Software Ltd.
6 Waltham Park
Waltham Road, White Waltham
Maidenhead, Berkshire
SL6 3TN
United Kingdom
Toll Free: 00 800 4632 4357
Standard Rate:
Belgium: +32 15 281 702
France: +33 1 41 38 92 26
Germany: +49 1805 702 702
Netherlands: +31 306 022 797
United Kingdom: +44 1628 511 445

Asia / Australia

Informatica Business Solutions Pvt. Ltd.
301 & 302 Prestige Poseidon
139 Residency Road
Bangalore 560 025
India
Toll Free:
Australia: 00 11 800 4632 4357
Singapore: 001 800 4632 4357
Standard Rate:
India: +91 80 5112 5738


Lab 1: Dynamic Lookup Cache

Technical Description

You have a customer table in your target database that contains existing customer information. You also have a flat file that contains new customer data. Some rows in the flat file contain new information on new customers, and some contain updated information on existing customers. You need to insert the new customers into your target table and update the existing customers.

The source file may contain multiple rows for a customer. It may also contain rows that contain updated information for some columns and NULLs for the columns that do not need to be updated.

To do this, you will use a Lookup transformation with a dynamic cache that looks up data on the target table. The Integration Service inserts new rows and updates existing rows in the lookup cache as it inserts and updates rows in the target table. If you configure the Lookup transformation properly, the Integration Service ignores NULLs in the source when it updates a row in the cache and target.
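The insert-or-update behaviour described above can be modelled in a few lines. The following is a minimal Python sketch, not Informatica's implementation: it assumes the cache is a dictionary keyed on CUST_ID, that NewLookupRow takes the values 0 (cache unchanged), 1 (row inserted), and 2 (row updated), and that Sequence-ID keys continue from the largest PK_KEY already in the cache. The names dynamic_lookup and make_row are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical row shape: column name -> value, with None standing in for NULL.
Row = Dict[str, Any]

COLS = ["CUST_ID", "FIRSTNAME", "LASTNAME", "ADDRESS", "CITY", "STATE", "ZIP"]
# Lookup/output ports configured with Ignore NULL Inputs for Updates.
IGNORE_NULL_COLS = COLS[1:]


def dynamic_lookup(cache: Dict[str, Row], source: List[Row]) -> List[Tuple[int, Row]]:
    """Simulate an Insert Else Update dynamic lookup cache keyed on CUST_ID.

    Returns one (NewLookupRow, row) pair per source row:
    0 = cache left unchanged, 1 = row inserted, 2 = row updated.
    """
    # Assumed Sequence-ID behaviour: new keys continue from the largest cached PK_KEY.
    next_key = max((row["PK_KEY"] for row in cache.values()), default=0)
    out: List[Tuple[int, Row]] = []
    for src in source:
        cust_id = src["CUST_ID"]
        cached = cache.get(cust_id)
        if cached is None:
            # Not in the cache yet: insert it with a generated key.
            next_key += 1
            new_row = dict(src, PK_KEY=next_key)
            cache[cust_id] = new_row
            out.append((1, dict(new_row)))
            continue
        merged = dict(cached)
        for col in IGNORE_NULL_COLS:
            # A NULL input keeps the cached value instead of overwriting it.
            if src.get(col) is not None:
                merged[col] = src[col]
        if merged == cached:
            out.append((0, dict(cached)))
        else:
            cache[cust_id] = merged
            out.append((2, dict(merged)))
    return out


def make_row(values: Tuple[Any, ...], pk: Any = None) -> Row:
    """Build a row dict from values in COLS order, optionally adding PK_KEY."""
    row: Row = dict(zip(COLS, values))
    if pk is not None:
        row["PK_KEY"] = pk
    return row


# Usage: two existing target rows, three source rows from this lab,
# and one row identical to an existing target row.
cache = {
    "55003": make_row(("55003", "J", "Anderson", "1538 Chantilly Dr Ne", "New York", "NY", "30324"), 111003),
    "55009": make_row(("55009", "Jeffrey", "Morton", "544 9th Ave", "San Francisco", "CA", "94118"), 111009),
}
source = [
    make_row(("67003", "Jean", "Carlson", "555 California St", "Menlo Park", "CA", "94025")),
    make_row(("55003", "Janice", "MacIntosh", None, None, None, None)),
    make_row(("67003", "Jean", "Carlson", "120 Villa St", "Mountain View", "CA", "94043")),
    make_row(("55009", "Jeffrey", "Morton", "544 9th Ave", "San Francisco", "CA", "94118")),
]
results = dynamic_lookup(cache, source)
for flag, row in results:
    print(flag, row["PK_KEY"], row["CUST_ID"], row["CITY"])
```

With these rows the NewLookupRow values come out as 1, 2, 2, 0: the first 67003 row is inserted, the second updates the cached copy instead of creating a duplicate, and the NULL columns in the 55003 row leave the cached address untouched. The generated key is 111010 here only because the usage loads two target rows rather than the whole table.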

Objectives

Use a dynamic lookup cache to update and insert rows in a customer table

Use a Router transformation to route rows based on the NewLookupRow value

Use an Update Strategy transformation to flag rows for update or insert

Duration

45 minutes

Mapping Overview


Velocity Deliverable: Mapping Specifications

Mapping Name: m_DYN_update_customer_list_xx
Source System: Flat file
Target System: EDWxx
Initial Rows: 8
Rows/Load: 8
Short Description: Update the existing customer list with new and updated information.
Load Frequency: On demand
Preprocessing: None
Post Processing: None
Error Strategy: None
Reload Strategy: None
Unique Source Fields: CUST_ID

Sources

Files
File Name: updated_customer_list.txt (create shortcut from DEV_SHARED folder)
File Location: In the Source Files directory on the Integration Service process machine.

Targets

Tables
Schema/Owner: EDWxx
Table Name: CUSTOMER_LIST (create shortcut from DEV_SHARED folder)
Update / Delete / Insert: yes
Unique Keys: PK_KEY, CUST_ID

Source To Target Field Matrix

Target Column Source File or Transformation Source Column

Ignore NULL Inputs for Updates

(Lookup Transformation)

PK_KEY LKP_CUSTOMER_LIST Sequence-ID

CUST_ID updated_customer_list.txt CUST_ID

FIRSTNAME updated_customer_list.txt FIRSTNAME Yes

LASTNAME updated_customer_list.txt LASTNAME Yes

ADDRESS updated_customer_list.txt ADDRESS Yes

CITY updated_customer_list.txt CITY Yes

Lab 1

Informatica PowerCenter 8 Level II Developer 3

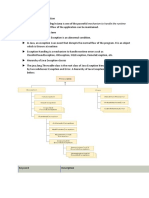

Detailed Overview

STATE updated_customer_list.txt STATE Yes

ZIP updated_customer_list.txt ZIP Yes

Repository Object Name | Object Type | Description and Instructions
m_DYN_update_customer_list_xx | Mapping | m_DYN_update_customer_list_xx
Shortcut_to_updated_customer_list | Source Definition | Flat file in the $PMSourceFileDir directory. Create shortcut from DEV_SHARED folder.
SQ_Shortcut_to_updated_customer_list | Source Qualifier | Connect to the input/output ports of the Lookup transformation, LKP_CUSTOMER_LIST.
LKP_CUSTOMER_LIST | Lookup | Lookup transformation based on the target definition Shortcut_to_CUSTOMER_LIST and the target table CUSTOMER_LIST.
  - Rename the input/output ports by prepending IN_ to their names.
  - Use dynamic caching.
  - Define the lookup condition using the customer ID ports.
  - Configure the Lookup properties so it inserts new rows and updates existing rows (Insert Else Update).
  - Ignore NULL inputs for all lookup/output ports except CUST_ID and PK_KEY.
  - For each lookup/output port, associate the input/output port with the similar name.
  - PK_KEY must be an integer in order to specify Sequence-ID as the Associated Port.
  - Connect the NewLookupRow port and all lookup/output ports to RTR_Insert_Update.
RTR_Insert_Update | Router | Create two output groups with the following names:
  - UPDATE_EXISTING: Condition is NewLookupRow=2. Connect output ports to UPD_Update_Existing.
  - INSERT_NEW: Condition is NewLookupRow=1. Connect output ports to UPD_Insert_New.
  Do not connect any of the NewLookupRow ports to any transformation.
  Do not connect the Default output group ports to any transformation.
UPD_Insert_New | Update Strategy | Update Strategy Expression: DD_INSERT. Connect all input/output ports to CUSTOMER_LIST_Insert.
UPD_Update_Existing | Update Strategy | Update Strategy Expression: DD_UPDATE. Connect all input/output ports to CUSTOMER_LIST_Update.
CUSTOMER_LIST_Insert | Target Definition | First instance of the target table definition in the EDWxx schema. Create a shortcut to the CUSTOMER_LIST target definition from the DEV_SHARED folder. In the mapping, rename the target instance to CUSTOMER_LIST_Insert.
CUSTOMER_LIST_Update | Target Definition | Second instance of the target table definition in the EDWxx schema. Create a shortcut to the CUSTOMER_LIST target definition from the DEV_SHARED folder. In the mapping, rename the target instance to CUSTOMER_LIST_Update.
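The Router and Update Strategy wiring in the table above can be sketched the same way. This is a hypothetical Python model, not PowerCenter code: route_and_flag assumes the lookup output arrives as (NewLookupRow, row) pairs and uses DD_INSERT = 0 and DD_UPDATE = 1 as the Update Strategy flag values; the group and target instance names are taken from the table.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]

# Update Strategy flag values assumed here: DD_INSERT = 0, DD_UPDATE = 1.
DD_INSERT = 0
DD_UPDATE = 1


def route_and_flag(pairs: List[Tuple[int, Row]]) -> Dict[str, List[Tuple[int, Row]]]:
    """Sketch of RTR_Insert_Update feeding UPD_Insert_New and UPD_Update_Existing.

    `pairs` holds (NewLookupRow, row) values leaving LKP_CUSTOMER_LIST. The result
    maps each target instance name to the (flag, row) pairs it would receive.
    """
    targets: Dict[str, List[Tuple[int, Row]]] = {
        "CUSTOMER_LIST_Insert": [],
        "CUSTOMER_LIST_Update": [],
    }
    for new_lookup_row, row in pairs:
        # NewLookupRow itself is not passed on, mirroring the unconnected ports.
        if new_lookup_row == 2:  # UPDATE_EXISTING group
            targets["CUSTOMER_LIST_Update"].append((DD_UPDATE, row))
        elif new_lookup_row == 1:  # INSERT_NEW group
            targets["CUSTOMER_LIST_Insert"].append((DD_INSERT, row))
        # Anything else (NewLookupRow = 0) lands in the unconnected default group.
    return targets


# Usage with hypothetical rows trimmed to CUST_ID.
routed = route_and_flag([
    (1, {"CUST_ID": "67001"}),
    (2, {"CUST_ID": "55002"}),
    (0, {"CUST_ID": "55009"}),
])
print(routed)
```

A real Router evaluates every group condition independently and can send one row to several groups; the if/elif form is equivalent here only because NewLookupRow=1 and NewLookupRow=2 cannot both be true for the same row.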


Instructions

Step 1: Create Mapping

1. Connect to the PC8A_DEV repository using Developerxx as the user name and developerxx as the password.

2. Create a mapping called m_DYN_update_customer_list_xx, where xx is your student number. Use the mapping details described in the Detailed Overview section as guidelines.

Figure 1-1 shows an overview of the mapping you must create:

Figure 1-1. m_DYN_update_customer_list_xx Mapping

Step 2: Preview Target Data

1. In the m_DYN_update_customer_list_xx mapping, preview the target data to view the rows that exist in the table.


2. Use the ODBC_EDW ODBC connection to connect to the target database. Use EDWxx as the user name and password.

The CUSTOMER_LIST table should contain the following data:

Figure 1-2. Preview Target Data for CUSTOMER_LIST Table Before Session Run

PK_KEY | CUST_ID | FIRSTNAME | LASTNAME | ADDRESS | CITY | STATE | ZIP
111001 | 55001 | Melvin | Bradley | 4070 Morning Trl | New York | NY | 30349
111002 | 55002 | Anish | Desai | 2870 Elliott Cir Ne | New York | NY | 30305
111003 | 55003 | J | Anderson | 1538 Chantilly Dr Ne | New York | NY | 30324
111004 | 55004 | Chris | Ernest | 2406 Glnrdge Strtford Dr | New York | NY | 30342
111005 | 55005 | Rudolph | Gibiser | 6917 Roswell Rd Ne | New York | NY | 30328
111006 | 55006 | Bianco | Lo | 146 W 16th St | New York | NY | 10011
111007 | 55007 | Justina | Bradley | 221 Colonial Homes Dr NW | New York | NY | 30309
111008 | 55008 | Monique | Freeman | 260 King St | San Francisco | CA | 94107
111009 | 55009 | Jeffrey | Morton | 544 9th Ave | San Francisco | CA | 94118


Step 3: View Source Data

1. Navigate to the $PMSourceFileDir directory. By default, the path is:

C:\Informatica\PowerCenter8.1.0\server\infa_shared\SrcFiles

2. Open updated_customer_list.txt in a text editor.

The updated_customer_list.txt source file contains the following data:

CUST_ID,FIRSTNAME,LASTNAME,ADDRESS,CITY,STATE,ZIP

67001,Thao,Nguyen,1200 Broadway Ave,Burlingame,CA,94010

67002,Maria,Gomez,390 Stelling Ave,Cupertino,CA,95014

67003,Jean,Carlson,555 California St,Menlo Park,CA,94025

67004,Chris,Park,13450 Saratoga Ave,Santa Clara,CA,95051

55002,Anish,Desai,400 W Pleasant View Ave,Hackensack,NJ,07601

55006,Bianco,Lo,900 Seville Dr,Clarkston,GA,30021

55003,Janice,MacIntosh,,,,

67003,Jean,Carlson,120 Villa St,Mountain View,CA,94043

3. Notice that the row for customer ID 55003 contains some NULL values. You do not want to write these NULL values to the target; you only want to update the other column values.

4. Notice that the file contains two rows with customer ID 67003. The second row must update the row that the first one inserts during the same session, so you must use a dynamic cache for the Lookup transformation.

5. Close the file.
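The two observations in items 3 and 4 can also be checked mechanically before running the session. A small Python sketch using the standard csv module; it assumes, as the lab does, that empty fields in the file are read as NULL, modelled here as None. The read_rows helper is hypothetical.

```python
import csv
import io
from collections import Counter
from typing import Dict, List, Optional

# Contents of updated_customer_list.txt as shown in Step 3.
SOURCE_TEXT = """CUST_ID,FIRSTNAME,LASTNAME,ADDRESS,CITY,STATE,ZIP
67001,Thao,Nguyen,1200 Broadway Ave,Burlingame,CA,94010
67002,Maria,Gomez,390 Stelling Ave,Cupertino,CA,95014
67003,Jean,Carlson,555 California St,Menlo Park,CA,94025
67004,Chris,Park,13450 Saratoga Ave,Santa Clara,CA,95051
55002,Anish,Desai,400 W Pleasant View Ave,Hackensack,NJ,07601
55006,Bianco,Lo,900 Seville Dr,Clarkston,GA,30021
55003,Janice,MacIntosh,,,,
67003,Jean,Carlson,120 Villa St,Mountain View,CA,94043
"""


def read_rows(text: str) -> List[Dict[str, Optional[str]]]:
    """Parse the comma-delimited text, mapping empty fields to None (NULL)."""
    reader = csv.DictReader(io.StringIO(text))
    return [{col: (val if val != "" else None) for col, val in rec.items()} for rec in reader]


rows = read_rows(SOURCE_TEXT)

# Customer IDs that appear more than once in the file.
counts = Counter(row["CUST_ID"] for row in rows)
duplicates = sorted(cust_id for cust_id, n in counts.items() if n > 1)

# Customer IDs whose row carries at least one NULL.
rows_with_nulls = [row["CUST_ID"] for row in rows if any(v is None for v in row.values())]

print("duplicate CUST_IDs:", duplicates)
print("rows with NULLs:", rows_with_nulls)
```

Reading every field as text also keeps the leading zero in ZIP 07601, which a numeric conversion would drop.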

Step 4: Create Workflow

1. Open the Workflow Manager and open your ~Developerxx folder.

2. Create a workflow named wf_DYN_update_customer_list_xx.

3. Create a session named s_m_DYN_update_customer_list_xx using the

m_DYN_update_customer_list_xx mapping.

4. In the session, verify that the target connection is EDWxx.

5. Verify that the Target load type is set to Normal and the Truncate target table option is not checked.

6. Verify the specified source file name is updated_customer_list.txt and the specified location is

$PMSourceFileDir.

Step 5: Run Workflow

Run workflow wf_DYN_update_customer_list_xx.


Step 6: Verify Statistics

Step 7: Verify Results

1. Preview the target data from the mapping to verify the results.

Figure 1-3 shows the Preview Data dialog box for the CUSTOMER_LIST table:

Figure 1-3. Preview Target Data for CUSTOMER_LIST Table After Session Run


The CUSTOMER_LIST table should contain the following data:

PK_KEY  CUST_ID  FIRSTNAME  LASTNAME   ADDRESS                   CITY           STATE  ZIP
111001  55001    Melvin     Bradley    4070 Morning Trl          New York       NY     30349
111002  55002    Anish      Desai      400 W Pleasant View Ave   Hackensack     NJ     07601
111003  55003    Janice     MacIntosh  1538 Chantilly Dr Ne      New York       NY     30324
111004  55004    Chris      Ernest     2406 Glnrdge Strtford Dr  New York       NY     30342
111005  55005    Rudolph    Gibiser    6917 Roswell Rd Ne        New York       NY     30328
111006  55006    Bianco     Lo         900 Seville Dr            Clarkston      GA     30021
111007  55007    Justina    Bradley    221 Colonial Homes Dr NW  New York       NY     30309
111008  55008    Monique    Freeman    260 King St               San Francisco  CA     94107
111009  55009    Jeffrey    Morton     544 9th Ave               San Francisco  CA     94118
111010  67001    Thao       Nguyen     1200 Broadway Ave         Burlingame     CA     94010
111011  67002    Maria      Gomez      390 Stelling Ave          Cupertino      CA     95014
111012  67003    Jean       Carlson    120 Villa St              Mountain View  CA     94043
111013  67004    Chris      Park       13450 Saratoga Ave        Santa Clara    CA     95051

2. Look at customer ID 55003. It should not contain any NULLs.

3. Look at customer ID 67003. It should contain data from the last row for customer ID 67003 in the source file.


Lab 2: Workflow Alerts

Business Purpose

A session usually runs for under an hour. Occasionally, it will run longer. The administrator would like to

be notified via an alert if the session runs longer than an hour. A second session is to run after the first

session completes.

Technical Description

A Worklet will be created with a Worklet variable to define the time the Workflow started plus one hour.

A Timer task will be created in the Worklet to wait for one hour before sending an email. If the session runs for less than an hour, a Control task will be issued to stop the timer.
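The race between the Timer task and the session is loosely analogous to a watchdog timer. The Python sketch below uses `threading.Timer` for that analogy; `run_with_alert` and its arguments are hypothetical, and the comments map each piece to the worklet task it stands in for. It does not model how the Integration Service actually schedules tasks.

```python
import threading
import time


def run_with_alert(session, timeout_s, alert):
    """Run session() while a watchdog timer waits in parallel.

    If the timer expires first, alert() runs (the Email task). When the
    session finishes, the timer is cancelled (the Control task)."""
    timer = threading.Timer(timeout_s, alert)  # tim_SESSION_RUN_TIME -> eml_SESSION_RUN_TIME
    timer.start()                              # Start links to the timer and the session
    try:
        session()                              # s_m_DIM_CUSTOMER_ACCT_xx
    finally:
        timer.cancel()                         # ctrl_STOP_SESS_TIMEOUT
        timer.join()                           # wait for the timer thread to finish


if __name__ == "__main__":
    sent = []
    # A "session" that outlasts its 0.05 s budget triggers the alert.
    run_with_alert(lambda: time.sleep(0.3), timeout_s=0.05, alert=lambda: sent.append("email"))
    print(sent)
```

Cancelling a `threading.Timer` only has an effect while it is still waiting, which mirrors the lab's design: the email is suppressed only if the session finishes before the hour is up.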

Objectives

Create a Workflow

Create a Worklet

Create a Timer Task

Create an Email Task

Create a Control Task

Create a condition to control the Email Task

Duration

30 minutes

Worklet Overview

Workflow Overview


Instructions

Step 1: Setup

Connect to the PC8A_DEV repository in the Designer and Workflow Manager.

Step 2: Mappings Required

If any of the following mappings do not exist in the ~Developerxx folder, copy them from the SOLUTIONS_ADVANCED folder. Rename the mappings so that _xx reflects your Developer number.

m_DIM_CUSTOMER_ACCT_xx

m_DIM_CUSTOMER_ACCT_STATUS_xx

Step 3: Reusable Sessions Required

If any of the following sessions do not exist in the ~Developerxx folder, copy them from the SOLUTIONS_ADVANCED folder. Resolve any conflicts that may occur. Rename the sessions so that _xx reflects your Developer number.

s_m_DIM_CUSTOMER_ACCT_xx

s_m_DIM_CUSTOMER_ACCT_STATUS_xx

Step 4: Create a Workflow

Create a Workflow called wf_DIM_CUSTOMER_ACCT_LOAD_xx.

Step 5: Create a Worklet in the Workflow

1. Create a Worklet called wl_DIM_CUSTOMER_ACCT_LOAD_xx.

2. Open the Worklet and create the following tasks.

Step 6: Create a Timer Task in the Worklet

1. Create a Timer task and name it tim_SESSION_RUN_TIME.

2. Edit the Timer task and click the Timer tab.

3. Select the Relative time: radio button.


4. Set Start after to 1 hour from the start time of this task.

Step 7: Create an E-Mail Task in the Worklet

1. Create an Email task and name it eml_SESSION_RUN_TIME.

2. Click the Properties tab.

3. For Email User Name, type administrator@anycompany.com.

4. For Email Subject, type: session s_m_DIM_CUSTOMER_ACCT_xx ran an hour or longer.

5. For the Email Text type an appropriate message.


Step 8: Create a Control Task in the Worklet

1. Create a Control task and name it ctrl_STOP_SESS_TIMEOUT.

2. Edit the Control task and click the Properties tab.

3. Set the Control Option attribute to Stop parent.

Step 9: Add Reusable Session to the Worklet

1. Add s_m_DIM_CUSTOMER_ACCT_xx to wl_DIM_CUSTOMER_ACCT_LOAD_xx.

2. Verify source connections are ODS and source file name is customer_type.txt.

3. Verify target connections are EDWxx.

4. Verify lookup connections are valid - DIM tables to EDWxx, ODS tables to ODS.

5. Truncate target table.

6. Ensure Target Load Type is Normal.

Step 10: Link Tasks in Worklet

1. Link Start to tim_SESSION_RUN_TIME and s_m_DIM_CUSTOMER_ACCT_xx.

2. Link tim_SESSION_RUN_TIME to eml_SESSION_RUN_TIME.

3. Link s_m_DIM_CUSTOMER_ACCT_xx to ctrl_STOP_SESS_TIMEOUT.

Step 11: Add Reusable Session to the Workflow

1. Add s_m_DIM_CUSTOMER_ACCT_STATUS_xx to wf_DIM_CUSTOMER_ACCT_LOAD_xx.

2. Verify source connections are ODS and source file name is customer_type.txt.

3. Verify target connections are EDWxx.


4. Verify lookup connections are valid - DIM tables to EDWxx, ODS tables to ODS.

5. Truncate target table.

6. Ensure Target Load Type is Normal.

Step 12: Link Tasks in Workflow

1. Link Start to wl_DIM_CUSTOMER_ACCT_LOAD_xx.

2. Link wl_DIM_CUSTOMER_ACCT_LOAD_xx to s_m_DIM_CUSTOMER_ACCT_STATUS_xx.

Step 13: Run Workflow

1. In the Workflow Monitor, click the Filter Tasks button in the toolbar, or select Filters > Tasks from

the menu.

2. Make sure to show all of the tasks.

3. When you run your workflow, the Task View should look as follows.


Lab 3: Dynamic Scheduling

Business Purpose

The Department Dimension table must load sales information on an hourly basis during the business day. It does not load during non-business hours (before 6 a.m. or after 6 p.m.). The start time of each load session is calculated from the workflow start time.

Technical Description

Use workflow variables to calculate when the session starts. The session must start at the top of an hour that is on or after 6 a.m. and before 6 p.m. To accomplish this, the workflow runs continuously.

Objectives

Create and use workflow variables

Create an Assignment Task

Create a Timer Task

Duration

30 minutes

Workflow Overview


Instructions

Step 1: Setup

Connect to PC8A_DEV Repository in the Designer and Workflow Manager.

Step 2: Mapping Required

This lab uses the following mapping. If it does not exist in the ~Developerxx folder, copy it from the SOLUTIONS_ADVANCED folder. Change the xx in the mapping name to reflect your Developer number.

m_SALES_DEPARTMENT_xx

Step 3: Copy Reusable Sessions

Copy the following reusable session from the SOLUTIONS_ADVANCED folder to the ~Developerxx

folder. Change the xx in the session name to reflect the Developer Number.

s_m_SALES_DEPARTMENT_xx

Step 4: Create Workflow

Create a Workflow called wf_SALES_DEPARTMENT_xx.

Step 5: Create Workflow Variables

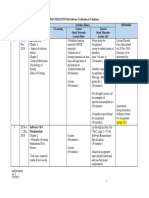

1. Add three workflow variables: $$TRUNC_START_TIME (date/time), $$HOUR_STARTED (integer), and $$NEXT_START_TIME (date/time).

2. Click OK.

3. Save.

Step 6: Add Session to Workflow

1. Add reusable session s_m_SALES_DEPARTMENT_xx to the Workflow.

2. Source Database Connection should be ODS.

3. Target Database Connection should be EDWxx.

4. Ensure Target Load Type is Normal.


5. Truncate the Target Table.

Step 7: Create a Timer Task

1. Create a Timer Task called tim_SALES_DEPARTMENT_START.

2. Edit the Timer task and click the Timer tab.

3. Select the Absolute time: radio button.

4. Select the Use this workflow date-time variable to calculate the wait radio button.

5. Select the ellipsis to browse variables.

6. Double-click wf_SALES_DEPARTMENT_xx.

7. Select $$NEXT_START_TIME as the workflow variable.

8. Save.

Step 8: Create an Assignment Task

1. Create an Assignment Task called asgn_SALES_DEPARTMENT_START_TIME.

2. Add the following expressions:

Truncates the workflow start time to the hour:

$$TRUNC_START_TIME = TRUNC(WORKFLOWSTARTTIME, 'HH')

Extracts the hour from the truncated start time:

$$HOUR_STARTED = GET_DATE_PART($$TRUNC_START_TIME, 'HH')

Calculates the start time of the session:

$$NEXT_START_TIME = IIF($$HOUR_STARTED >= 5 AND $$HOUR_STARTED < 17,
    ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 1),
    DECODE($$HOUR_STARTED,
        0, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 6),
        1, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 5),
        2, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 4),
        3, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 3),
        4, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 2),
        17, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 13),
        18, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 12),
        19, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 11),
        20, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 10),
        21, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 9),
        22, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 8),
        23, ADD_TO_DATE($$TRUNC_START_TIME, 'HH', 7)))

Note: The above functions could be nested together in one assignment expression if desired.
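The assignment logic can be checked outside PowerCenter. The Python function below is a sketch that mirrors the three expressions: `replace()` stands in for TRUNC(..., 'HH'), `.hour` for GET_DATE_PART, and the offsets `6 - hour` and `30 - hour` reproduce the DECODE table. The function name `next_start_time` is hypothetical.

```python
from datetime import datetime, timedelta


def next_start_time(workflow_start: datetime) -> datetime:
    """Python mirror of the asgn_SALES_DEPARTMENT_START_TIME expressions."""
    # $$TRUNC_START_TIME = TRUNC(WORKFLOWSTARTTIME, 'HH')
    trunc = workflow_start.replace(minute=0, second=0, microsecond=0)
    # $$HOUR_STARTED = GET_DATE_PART($$TRUNC_START_TIME, 'HH')
    hour = trunc.hour
    if 5 <= hour < 17:
        # Business hours: start at the next top of the hour (6 a.m. to 5 p.m.).
        return trunc + timedelta(hours=1)
    # Outside business hours: wait until 6 a.m.
    # Hours 0-4 -> 6 a.m. the same day; hours 17-23 -> 6 a.m. the next day.
    offset = 6 - hour if hour < 5 else 30 - hour
    return trunc + timedelta(hours=offset)
```

Running it against the times in the Step 11 log examples (a start at 16:05 gives 17:00; a start at 17:00 gives 6:00 the next morning) is a quick way to sanity-check the DECODE offsets.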

Step 9: Link Tasks

1. Create a link from the Start Task to asgn_SALES_DEPARTMENT_START_TIME.


2. Create a link from asgn_SALES_DEPARTMENT_START_TIME to

tim_SALES_DEPARTMENT_START.

3. Create a link from tim_SALES_DEPARTMENT_START to s_m_SALES_DEPARTMENT_xx.

4. Save the repository.

Step 10: Run Workflow by Editing the Workflow Schedule

Note: In order for the top of the hour to be calculated based on the workflow start time, the workflow

must be configured to execute continuously.

1. Edit workflow wf_SALES_DEPARTMENT_xx.

2. Click the SCHEDULER Tab.

3. Verify that the scheduler is Non Reusable.

4. Edit the schedule.

5. Click the Schedule Tab.

6. Click Run Continuously.

7. Click OK.

8. Click OK.

9. Save the repository. This will start the workflow.


Step 11: Monitor the Workflow

1. Open the Gantt Chart View.

Note: Notice that the assignment task has already executed and the timer task is running.

2. Browse the Workflow Log.

3. Verify the results of the Assignment expressions in the log file. Listed below are examples:

Variable [$$TRUNC_START_TIME], Value [05/23/2004 16:00:00].

Variable [$$HOUR_STARTED], Value [16].

Variable [$$NEXT_START_TIME], Value [05/23/2004 17:00:00].

4. Verify the Load Manager message that tells when the timer task will complete. Listed below is an

example message:

INFO : LM_36606 [Sun May 23 16:05:02 2004] : (2288|2004) Timer task instance

[TM_SALES_DEPARTMENT_START]: The timer will complete at [Sun May 23 17:00:00 2004].

5. Open Task View.

6. At or near the top of the hour, open the monitor to check the status of the session. Verify that it started at the desired time. Below is an example:

7. After the session completes, notice that the workflow automatically starts again.

8. If the workflow starts at or after 5 p.m., the timer message in the workflow log shows that the timer will end at 6 a.m. the following morning. Listed below is an example:


INFO : LM_36608 [Sun May 23 17:00:25 2004] : (2288|2392) Timer task instance

[TM_SALES_DEPARTMENT_START]: Timer task specified to wait until absolute time [Mon

May 24 06:00:00 2004], specified by variable [$$NEXT_START_TIME].

INFO : LM_36606 [Sun May 23 17:00:25 2004] : (2288|2392) Timer task instance

[TM_SALES_DEPARTMENT_START]: The timer will complete at [Mon May 24 06:00:00 2004].

9. Stop or abort the workflow at any time. Afterwards, edit the workflow scheduler and select RUN ON

DEMAND.

10. Save the repository.


Lab 4: Recover a Suspended Workflow

Technical Description

In this lab, you will configure a mapping and its related session and workflow for recovery. Then, you will

change a session property to create an error that causes the session to suspend when you run it. You will

fix the error and recover the workflow.

Objectives

Configure a mapping, session, and workflow for recovery.

Recover a suspended workflow.

Duration

30 minutes


Instructions

Step 1: Copy the Workflow

1. Open the Repository Manager.

2. Copy the wkf_Stage_Customer_Contacts_xx workflow from the SOLUTIONS_ADVANCED folder

to your folder.

3. In the Workflow Manager, open the wkf_Stage_Customer_Contacts_xx workflow.

4. Rename the workflow to replace xx with your student number.

5. Rename the session in the workflow to replace xx with your student number.

6. Save the workflow.

Step 2: Edit the Workflow and Session for Recovery

1. Open the wkf_Stage_Customer_Contacts_xx workflow.

2. Edit the workflow, and on the General tab, select Suspend on Error.

3. Edit the s_m_Stage_Customer_Contacts_xx session and click the Properties tab.

4. Scroll to the end of the General Options settings and select Resume from last checkpoint for the

Recovery Strategy.

5. Click the Mapping tab and change the target load type to Normal.

Note: When you configure a session for bulk load, the session is not recoverable using the resume

recovery strategy. You must use normal load.

6. Change the target database connection to EDWxx.


7. Save the workflow.
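The idea behind resuming from the last checkpoint can be modeled conceptually in Python. This is a sketch under assumptions, not the Integration Service's recovery mechanism: `run_session`, the `checkpoint` dictionary and the `SessionFailed` exception are hypothetical, and the checkpoint is simply a count of source rows whose commit succeeded.

```python
class SessionFailed(Exception):
    """Hypothetical stand-in for a session failure."""


def run_session(rows, target, checkpoint, commit_interval=2, fail_at=None):
    """Load rows into target, committing every commit_interval rows.

    checkpoint["committed"] records how many source rows are committed;
    a recovery run starts from that point instead of from the beginning."""
    pending = []
    for i in range(checkpoint.get("committed", 0), len(rows)):
        if i == fail_at:
            # Rows in `pending` were never committed, so they are lost (rolled back).
            raise SessionFailed(f"error on source row {i}")
        pending.append(rows[i])
        if len(pending) == commit_interval:
            target.extend(pending)           # commit the buffered rows...
            checkpoint["committed"] = i + 1  # ...and record the checkpoint with them
            pending = []
    target.extend(pending)                   # final commit at end of data
    checkpoint["committed"] = len(rows)


rows = [f"row{i}" for i in range(5)]
target, checkpoint = [], {}
try:
    run_session(rows, target, checkpoint, fail_at=3)  # first run fails on row 3
except SessionFailed:
    pass
committed_after_failure = list(target)
run_session(rows, target, checkpoint)                 # recovery run resumes at row 2
```

The recovery run writes each row exactly once because it skips the rows recorded as committed and reprocesses only the uncommitted tail.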

Step 3: Edit the Session to Cause an Error

In this step, you will edit the session so that when the Integration Service runs it, there will be an error.

1. Edit the s_m_Stage_Customer_Contacts_xx session, and click the Mapping tab.

The source in the mapping uses a file list, customer_list.txt. To make the session encounter an error,

you will change the value in the Source Filename session property.

2. On the Sources node, change the source file name to customer_list1234.txt.

3. Click the Config Object tab.

4. In the Error Handling settings, configure the session to stop on one error.

5. Save the workflow.

Step 4: Run the Workflow, Fix the Session, and Recover the Workflow

1. Run the workflow.

The Workflow Monitor shows that the Integration Service suspends the workflow and fails the

session.

2. Open the session log.

Suspended Workflow and Failed Session


3. Scroll to the end of the session log.

Notice that the Integration Service failed the session.

Next, you will fix the session.

4. In the Workflow Manager, edit the session.

5. On the Mapping tab, enter customer_list.txt as the source file name.

6. Save the workflow.

7. In the Workflow Manager, right-click the workflow, and choose Recover Workflow.

The Workflow Monitor shows that the Integration Service is running the workflow and that the

session is running as a recovery run.


Running Recovery Session Run


When the session and workflow complete, the Workflow Monitor shows that the session completed

successfully as a recovery run.

8. Open the session log.

9. Search for session run completed with failure.

Notice that the Integration Service continues to write log events to the same session log.

Successful Recovery Session Run


10. Search for recovery run.

The Integration Service writes recovery information to the session log.

11. Close the Log Viewer.


Lab 5: Using the Transaction Control Transformation

Business Purpose

Line item data is read and sorted by invoice number. The line items for each invoice number must be committed to the target database as a single transaction.

Technical Description

A flag will be created to tell PowerCenter when a new invoice number is found. A Transaction Control transformation will use that flag to tell the Integration Service when to issue a commit.

Objectives

Create a flag to check for new INVOICE_NOs

Commit upon seeing a new set of INVOICE_NOs

Duration

45 minutes

Mapping Overview


Velocity Deliverable: Mapping Specifications

Mapping Name m_DIM_LINE_ITEM_xx

Source System ODS Target System EDWxx

Initial Rows Rows/Load

Short Description Commit on a new set of INVOICE_NOs

Load Frequency On demand

Preprocessing None

Post Processing None

Error Strategy None

Reload Strategy None

Unique Source Fields LINE_ITEM_NO

Sources

Tables

Table Name Schema/Owner Selection/Filter

ODS_LINE_ITEM ODS

Create shortcut from DEV_SHARED folder

Targets

Tables Schema/Owner EDWxx

Table Name Update Delete Insert Unique Key

DIM_LINE_ITEM

Create shortcut from DEV_SHARED folder

yes LINE_ITEM_NO

Source To Target Field Matrix

Target Table Target Column Source Table Source Column Expression

DIM_LINE_ITEM LINE_ITEM_NO ODS_LINE_ITEM LINE_ITEM_NO Issue a commit upon a new set of Invoice Nos.

DIM_LINE_ITEM INVOICE_NO ODS_LINE_ITEM INVOICE_NO Issue a commit upon a new set of Invoice Nos.

DIM_LINE_ITEM PRODUCT_CODE ODS_LINE_ITEM PRODUCT_CODE Issue a commit upon a new set of Invoice Nos.

DIM_LINE_ITEM QUANTITY ODS_LINE_ITEM QUANTITY Issue a commit upon a new set of Invoice Nos.

DIM_LINE_ITEM PRICE ODS_LINE_ITEM PRICE Issue a commit upon a new set of Invoice Nos.

DIM_LINE_ITEM COST ODS_LINE_ITEM COST Issue a commit upon a new set of Invoice Nos.


Detailed Overview

Transformation Name Type Description

Mapping Mapping m_DIM_LINE_ITEM_xx

ODS_LINE_ITEM Source Definition Table Source definition in ODS schema.

Create shortcut from DEV_SHARED folder.

Shortcut_to_sq_ODS_LINE_ITEM Source Qualifier Send to srt_DIM_LINE_ITEM:

LINE_ITEM_NO, INVOICE_NO, PRODUCT_CODE, QUANTITY,

DISCOUNT, PRICE, COST

srt_DIM_LINE_ITEM Sorter Sort by INVOICE_NO

Send to exp_DIM_LINE_ITEM

INVOICE_NO

SEND to tc_DIM_LINE_ITEM:

LINE_ITEM_NO, INVOICE_NO, PRODUCT_CODE, QUANTITY,

DISCOUNT, PRICE, COST

exp_DIM_LINE_ITEM Expression Clear the output (O) check box for INVOICE_NO.

Create a variable called v_PREVIOUS_INVOICE_NO as a decimal

10,0 to house the value of the previous row's INVOICE_NO.

Expression:

INVOICE_NO

Create a variable called v_NEW_INVOICE_NO_FLAG as an Integer to

set a flag to check whether the current row's INVOICE_NO is the same

as the previous row's INVOICE_NO

Expression:

IIF(INVOICE_NO=v_PREVIOUS_INVOICE_NO, 0,1)

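Move v_NEW_INVOICE_NO_FLAG above v_PREVIOUS_INVOICE_NO. Variable ports are evaluated from top to bottom, so the flag must compare INVOICE_NO with the previous row's value before v_PREVIOUS_INVOICE_NO is overwritten with the current one.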

Create an output port called NEW_INVOICE_NO_FLAG_out as an integer to hold the value of the flag.

Expression:

v_NEW_INVOICE_NO_FLAG

SEND to tc_DIM_LINE_ITEM:

NEW_INVOICE_NO_FLAG_out

tc_DIM_LINE_ITEM Transaction Control On the Ports tab, remove the _out suffix from NEW_INVOICE_NO_FLAG_out so that the port name matches the condition below.

On the Properties tab, enter the following Transaction Control

Condition:

IIF(NEW_INVOICE_NO_FLAG=1,

TC_COMMIT_BEFORE,TC_CONTINUE_TRANSACTION)

SEND to DIM_LINE_ITEM:

LINE_ITEM_NO, INVOICE_NO, PRODUCT_CODE, QUANTITY,

DISCOUNT, PRICE, COST

Shortcut_to_DIM_LINE_ITEM Target Definition Target definition in the EDWxx schema.

Create a shortcut from DEV_SHARED folder.
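The combined effect of exp_DIM_LINE_ITEM and tc_DIM_LINE_ITEM can be sketched in Python to check the logic before building the mapping. The function name and the list-of-dicts row format are assumptions, and the final commit at the end assumes the session commits the last open transaction when the source is exhausted.

```python
def split_into_transactions(rows):
    """Group rows (already sorted by INVOICE_NO) into committed transactions.

    Mirrors v_NEW_INVOICE_NO_FLAG / v_PREVIOUS_INVOICE_NO and the condition
    IIF(NEW_INVOICE_NO_FLAG=1, TC_COMMIT_BEFORE, TC_CONTINUE_TRANSACTION)."""
    committed = []
    open_txn = []
    prev_invoice = None  # stand-in initial value that matches no real invoice
    for row in rows:
        # The flag is computed before the previous-value variable is overwritten.
        new_invoice_flag = 0 if row["INVOICE_NO"] == prev_invoice else 1
        prev_invoice = row["INVOICE_NO"]
        if new_invoice_flag == 1:
            # TC_COMMIT_BEFORE: commit what is open, then start a new
            # transaction that begins with the current row.
            if open_txn:
                committed.append(open_txn)
            open_txn = [row]
        else:
            open_txn.append(row)  # TC_CONTINUE_TRANSACTION
    if open_txn:
        committed.append(open_txn)  # assumed commit of the last transaction at end of data
    return committed
```

If the sorter were removed, rows for the same invoice could arrive non-adjacently and the sketch would produce several transactions per invoice, which is why srt_DIM_LINE_ITEM must come before the expression.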


Instructions

Step 1: Create Mapping

Create a mapping called m_DIM_LINE_ITEM_xx, where xx is your student number. Use the mapping

details described in the previous pages for guidelines.

Step 2: Create Workflow

1. Open ~Developerxx folder.

2. Create workflow named wf_DIM_LINE_ITEM_xx.

3. Create session named s_m_DIM_LINE_ITEM_xx.

4. In the session, edit Mapping tab and expand the Sources node. Under Connections verify that the

Connection Value is ODS.

5. Expand the Targets node and verify that the Connection value is correct, the Target load type is set to

Normal and the Truncate target table option is checked.

Step 3: Run Workflow

Run workflow wf_DIM_LINE_ITEM_xx.

Step 4: Verify Statistics


Step 5: Verify Results


Lab 6: Error Handling with Transactions

Business Purpose

The IT Department would like to prevent erroneous data from being committed into the DIM_VENDOR_PRODUCT table. They would also like to issue a commit every time a new group of VENDOR_IDs is written. If any record in a VENDOR_ID group has an error, the entire group is rolled back.

Technical Description

Records will be committed when a new group of VENDOR_IDs comes in. This will require a flag to be

set to determine whether a VENDOR_ID is new or not. Rows will need to be rolled back if an error

occurs. An error flag will be set when a business rule is violated.

Objectives

Use a Transaction Control Transformation to Commit based upon Vendor IDs and issue a rollback

based upon errors.

Duration

60 minutes

Mapping Overview


Velocity Deliverable: Mapping Specifications

Mapping Name m_DIM_VENDOR_PRODUCT_TC_xx

Source System Flat File Target System EDWxx

Initial Rows Rows/Load

Short Description

Issue a commit based upon VENDOR_ID, but only if the PRODUCT_CODE is not null and the CATEGORY is valid for all records in the group. A rollback of the entire group should occur if Informatica comes across a null PRODUCT_CODE or an invalid CATEGORY.

Load Frequency On demand

Preprocessing None

Post Processing None

Error Strategy None

Reload Strategy None

Unique Source Fields None

Sources

Files

File Name File Location

PRODUCT.txt

Create shortcut from DEV_SHARED folder

In the Source Files directory on the Integration Service process machine

Targets

Tables Schema/Owner EDWxx

Table Name Update Delete Insert Unique Key

DIM_VENDOR_PRODUCT

Create shortcut from DEV_SHARED folder

yes

Lookup Transformation Detail

Lookup Name lkp_ODS_VENDOR

Lookup Table Name ODS_VENDOR Location ODS

Description

The VENDOR_NAME, FIRST_CONTACT and VENDOR_STATE are needed to populate DIM_VENDOR_PRODUCT.

Match Condition(s) ODS.VENDOR_ID = PRODUCT.VENDOR_ID

Filter/SQL Override N/A

Return Value(s) VENDOR_NAME, FIRST_CONTACT and VENDOR_STATE


Source To Target Field Matrix

Target Table Target Column Source Table Source Column Expression

DIM_VENDOR_PRODUCT PRODUCT_CODE PRODUCT PRODUCT_CODE

DIM_VENDOR_PRODUCT VENDOR_ID PRODUCT VENDOR_ID

DIM_VENDOR_PRODUCT VENDOR_NAME PRODUCT Derived Value from lkp_ODS_VENDOR

Return VENDOR_NAME from lkp_ODS_VENDOR

DIM_VENDOR_PRODUCT VENDOR_STATE PRODUCT Derived Value from lkp_ODS_VENDOR

Return VENDOR_STATE from lkp_ODS_VENDOR

DIM_VENDOR_PRODUCT PRODUCT_NAME PRODUCT PRODUCT_NAME

DIM_VENDOR_PRODUCT CATEGORY PRODUCT CATEGORY

DIM_VENDOR_PRODUCT MODEL PRODUCT MODEL

DIM_VENDOR_PRODUCT PRICE PRODUCT PRICE

DIM_VENDOR_PRODUCT FIRST_CONTACT PRODUCT Derived Value from lkp_ODS_VENDOR

Return FIRST_CONTACT from lkp_ODS_VENDOR

Detailed Overview

Transformation Name Type Description

Mapping Mapping m_DIM_VENDOR_PRODUCT_TC_xx

PRODUCT.txt Source Definition Drag in Shortcut from DEV_SHARED

Sq_Shortcut_To_PRODUCT Source Qualifier Data Source Qualifier for flat file

SEND PORTS to exp_SET_ERROR_FLAG:

PRODUCT_CODE, VENDOR_ID, CATEGORY, PRODUCT_NAME,

MODEL, PRICE

exp_SET_ERROR_FLAG Expression Output port: ERROR_FLAG

Expression:

IIF(ISNULL(PRODUCT_CODE) OR ISNULL(CATEGORY),

TRUE, FALSE)

Send all output ports to srt_VENDOR_ID.

srt_VENDOR_ID Sorter Sort data ascending by VENDOR_ID & ERROR_FLAG. This puts any

error records at the end of each group.

SEND all PORTS to exp_SET_TRANS_TYPE.

SEND PORTS to lkp_ODS_VENDOR:

VENDOR_ID


exp_SET_TRANS_TYPE Expression 1. Create a variable called v_PREV_VENDOR_ID as a Decimal with

precision of 10 to house the value of the previous vendor.

Expression: VENDOR_ID

2. Create a variable port called v_NEW_VENDOR_ID_FLAG as an

integer to check and see if the current VENDOR_ID is new.

Expression:

IIF(VENDOR_ID != v_PREV_VENDOR_ID, TRUE,

FALSE)

Variables can be used to remember values across rows.

v_PREV_VENDOR_ID must always hold the value of the previous VENDOR_ID, so it must be placed after v_NEW_VENDOR_ID_FLAG (variable ports are evaluated from top to bottom).

3. Create an output port as a string(8) called TRANSACTION_TYPE

to tell the Transaction Control Transformation whether to CONTINUE,

COMMIT, or ROLLBACK.

Expression:

IIF(ERROR_FLAG = TRUE,

'ROLLBACK',

IIF(v_NEW_VENDOR_ID_FLAG = TRUE,

'COMMIT',

'CONTINUE'))

Since we sorted to put error records at the end of each group, when we

ROLLBACK, we'll be rolling back the whole group.

4. SEND all output PORTS to tc_DIM_VENDOR_PRODUCT.

lkp_ODS_VENDOR Lookup Create a connected lookup to ODS.ODS_VENDOR. Create an input

port for the source data field VENDOR_ID

Rename VENDOR_ID1 to VENDOR_ID_in

Set Lookup Condition:

VENDOR_ID = VENDOR_ID_in

SEND PORTS to tc_DIM_VENDOR_PRODUCT

VENDOR_NAME, FIRST_CONTACT, VENDOR_STATE

tc_DIM_VENDOR_PRODUCT Transaction Control

Expression:

DECODE(TRANSACTION_TYPE,

'COMMIT', TC_COMMIT_BEFORE,

'ROLLBACK', TC_ROLLBACK_AFTER,

'CONTINUE', TC_CONTINUE_TRANSACTION)

// If we're starting a new group, we need to COMMIT the

// prior group.

// If we hit an error, we need to ROLLBACK the current

// group including the current record.

PORTS to SEND to DIM_VENDOR_PRODUCT:

All ports except for TRANSACTION_TYPE

Shortcut_To_DIM_VENDOR_PRODUCT Target Table All data without errors will be routed here

Create shortcut from DEV_SHARED folder
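The interplay of exp_SET_ERROR_FLAG, srt_VENDOR_ID, exp_SET_TRANS_TYPE and tc_DIM_VENDOR_PRODUCT can be simulated in Python to see which rows end up committed. This is a sketch under assumptions: the function name and row format are hypothetical, the lookup is omitted, and the last open transaction is assumed to be committed at end of data.

```python
def load_vendor_products(rows):
    """Return the rows that end up committed in DIM_VENDOR_PRODUCT.

    rows: dicts with at least VENDOR_ID, PRODUCT_CODE and CATEGORY."""
    # exp_SET_ERROR_FLAG: ERROR_FLAG is TRUE if PRODUCT_CODE or CATEGORY is NULL.
    flagged = [dict(r, ERROR_FLAG=(r["PRODUCT_CODE"] is None or r["CATEGORY"] is None))
               for r in rows]
    # srt_VENDOR_ID: ascending by VENDOR_ID, then ERROR_FLAG (False sorts before
    # True), so error rows come last within each vendor group.
    flagged.sort(key=lambda r: (r["VENDOR_ID"], r["ERROR_FLAG"]))

    committed, open_txn = [], []
    prev_vendor = None  # stand-in initial value for v_PREV_VENDOR_ID
    for row in flagged:
        new_vendor = row["VENDOR_ID"] != prev_vendor  # v_NEW_VENDOR_ID_FLAG
        prev_vendor = row["VENDOR_ID"]                # v_PREV_VENDOR_ID
        if row["ERROR_FLAG"]:
            # 'ROLLBACK' -> TC_ROLLBACK_AFTER: the open transaction,
            # including this row, is discarded.
            open_txn = []
        elif new_vendor:
            # 'COMMIT' -> TC_COMMIT_BEFORE: commit the prior group,
            # then start a new transaction with this row.
            committed.extend(open_txn)
            open_txn = [row]
        else:
            open_txn.append(row)  # 'CONTINUE' -> TC_CONTINUE_TRANSACTION
    committed.extend(open_txn)    # assumed commit at end of data
    return committed
```

Tracing the sketch also makes one edge case visible: if every row for a VENDOR_ID is in error, that group's first row produces ROLLBACK rather than COMMIT, so the previous vendor's still-open rows are rolled back along with it. It may be worth testing the mapping with such data.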


Instructions

Step 1: Create Mapping

Create a mapping called m_DIM_VENDOR_PRODUCT_TC_xx, where xx is your student number.

Use the mapping details described in the previous pages for guidelines.

Step 2: Create Workflow

1. Open ~Developerxx folder.

2. Create workflow named wf_DIM_VENDOR_PRODUCT_TC_xx.

3. Create session named s_m_DIM_VENDOR_PRODUCT_TC_xx.

4. The source file is in the Source Files directory on the Integration Service machine.

5. Verify that the source filename is PRODUCT.txt (extension required).

6. Verify that the target database connection value is EDWxx.

7. Verify that the target load type is Normal.

8. Select Truncate target table for DIM_VENDOR_PRODUCT.

9. Set the Lookup connection to ODS.

Step 3: Run Workflow

Run workflow wf_DIM_VENDOR_PRODUCT_TC_xx.

Step 4: Verify Statistics


Step 5: Verify Results


Lab 7: Handling Fatal and Non-Fatal Errors

Business Purpose

ABC Incorporated would like to track which records fail when loading the PRODUCT flat file into the DIM_VENDOR_PRODUCT table. Developers have also noticed dirty data being loaded into the DIM_VENDOR_PRODUCT table, so users are seeing dirty data in their reports.

Technical Description

Instead of using a Transaction Control transformation, route fatal errors to a fatal error table and nonfatal errors to a nonfatal error table. All good data is sent to the EDW.

Objectives

Trap all database errors and load them to a table called ERR_FATAL.

Trap the dirty data coming through from the CATEGORY field and write it to a table called

ERR_NONFATAL.

Write all data without fatal or nonfatal errors to DIM_VENDOR_PRODUCT.
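The error-trapping and routing rules described in the specification that follows can be prototyped in Python before building the mapping. This is a sketch, not the mapping itself: `route_products`, `to_text`, the `FIELDS` order, the comma separator in ERR_RECORD and the counters standing in for the two sequence generators are all assumptions; the flag values FATAL, NONFATAL and GOOD DATA and the two error descriptions come from the mapping specification.

```python
from itertools import count

# Assumed order of the source ports concatenated into ERR_RECORD.
FIELDS = ["PRODUCT_CODE", "VENDOR_ID", "CATEGORY", "PRODUCT_NAME", "MODEL", "PRICE"]
DELIM = ","  # hypothetical separator; the lab does not specify one


def to_text(value):
    """Stand-in for TO_CHAR: non-strings become strings, NULL becomes ''."""
    return "" if value is None else str(value)


def route_products(rows, load_date):
    """Split rows into ERR_FATAL rows, ERR_NONFATAL rows and good rows."""
    fatal_ids, nonfatal_ids = count(1), count(1)  # stand-ins for the sequences
    fatal, nonfatal, good = [], [], []
    for row in rows:
        # exp_ERROR_TRAPPING output ports
        isnull_product_code = "FATAL" if row["PRODUCT_CODE"] is None else "GOOD DATA"
        invalid_category = "NONFATAL" if row["CATEGORY"] is None else "GOOD DATA"
        err_record = DELIM.join(to_text(row[f]) for f in FIELDS)
        # Router-style routing: a row goes to every group whose condition is true.
        if isnull_product_code == "FATAL":
            fatal.append({"ERR_ID": next(fatal_ids), "REC_NBR": row["REC_NUM"],
                          "ERR_RECORD": err_record,
                          "ERR_DESCRIPTION": "NULL VALUE IN KEY",
                          "LOAD_DATE": load_date})
        if invalid_category == "NONFATAL":
            nonfatal.append({"ERR_ID": next(nonfatal_ids), "REC_NBR": row["REC_NUM"],
                             "ERR_RECORD": err_record,
                             "ERR_DESCRIPTION": "INVALID CATEGORY",
                             "LOAD_DATE": load_date})
        if isnull_product_code == "GOOD DATA" and invalid_category == "GOOD DATA":
            good.append(row)  # default group -> DIM_VENDOR_PRODUCT
    return fatal, nonfatal, good
```

Because a Router passes a row to every group whose condition is true, a row with both a NULL PRODUCT_CODE and a NULL CATEGORY would be written to both error tables in this sketch; the router group conditions decide whether the real mapping does the same.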

Duration

60 minutes


Mapping Overview


Velocity Deliverable: Mapping Specifications

Mapping Name m_DIM_VENDOR_PRODUCT_xx

Source System Flat File Target System EDWxx

Initial Rows Rows/Load

Short Description

If a fatal error is found, route the data to a fatal error table. If a nonfatal error is found, route the data to a nonfatal error table. If the data is free of errors, route it to DIM_VENDOR_PRODUCT.

Load Frequency On demand

Preprocessing None

Post Processing None

Error Strategy Create a flag for both fatal errors and nonfatal errors. Route bad data to its respective table.

Reload Strategy None

Unique Source Fields None

Sources

Files

File Name File Location

PRODUCT.txt

Create shortcut from DEV_SHARED folder

In the Source Files directory on the Integration Service process machine.

Targets

Tables Schema/Owner EDWxx

Table Name Update Delete Insert Unique Key

DIM_VENDOR_PRODUCT

Create shortcut from DEV_SHARED folder

yes

Tables Schema/Owner EDWxx

Table Name Update Delete Insert Unique Key

ERR_NONFATAL

Create shortcut from DEV_SHARED folder

yes ERR_ID

Tables Schema/Owner EDWxx

Table Name Update Delete Insert Unique Key

ERR_FATAL

Create shortcut from DEV_SHARED folder

yes ERR_ID


Lookup Transformation Detail

Source To Target Field Matrix

Lookup Name lkp_ODS_VENDOR

Lookup Table Name ODS_VENDOR Location ODS

Description

The VENDOR_NAME, FIRST_CONTACT and VENDOR_STATE are needed to populate

DIM_VENDOR_PRODUCT.

Match Condi tion(s) ODS.VENDOR_ID = PRODUCT.VENDOR_ID

Filter/ SQL Override N/A

Return Value(s) VENDOR_NAME, FIRST_CONTACT and VENDOR_STATE

Target Table | Target Column | Source Table | Source Column | Expression

ERR_NONFATAL | ERR_ID | PRODUCT | Derived Value | Generated from seq_ERR_ID_ERR_NONFATAL

ERR_NONFATAL | REC_NBR | PRODUCT | REC_NUM | N/A

ERR_NONFATAL | ERR_RECORD | PRODUCT | Derived Value | The entire source record is concatenated.

ERR_NONFATAL | ERR_DESCRIPTION | PRODUCT | Derived Value | First, test the records for validity. Check whether PRODUCT_CODE is null and set a flag to True or False. Check whether CATEGORY is null and set a flag to True or False. Separate the rows into Fatal, Nonfatal, and Good Data. All nonfatal errors have the description INVALID CATEGORY.

ERR_NONFATAL | LOAD_DATE | PRODUCT | Derived Value | Date and time the session runs

ERR_FATAL | ERR_ID | PRODUCT | Derived Value | Generated from seq_ERR_ID_ERR_FATAL

ERR_FATAL | REC_NBR | PRODUCT | REC_NUM | N/A

ERR_FATAL | ERR_RECORD | PRODUCT | Derived Value | The entire record is concatenated and sent to the ERR_FATAL table.

ERR_FATAL | ERR_DESCRIPTION | PRODUCT | Derived Value | First, test the records for validity. Check whether PRODUCT_CODE is null and set a flag to True or False. Check whether CATEGORY is null and set a flag to True or False. Separate the rows into Fatal, Nonfatal, and Good Data. All fatal errors have the description NULL VALUE IN KEY.

ERR_FATAL | LOAD_DATE | PRODUCT | Derived Value | Date and time the session runs

DIM_VENDOR_PRODUCT | PRODUCT_CODE | PRODUCT | PRODUCT_CODE | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | VENDOR_ID | PRODUCT | VENDOR_ID | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | VENDOR_NAME | PRODUCT | Derived Value from lkp_ODS_VENDOR | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | VENDOR_STATE | PRODUCT | Derived Value from lkp_ODS_VENDOR | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | PRODUCT_NAME | PRODUCT | PRODUCT_NAME | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | CATEGORY | PRODUCT | CATEGORY | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | MODEL | PRODUCT | MODEL | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | PRICE | PRODUCT | PRICE | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.

DIM_VENDOR_PRODUCT | FIRST_CONTACT | PRODUCT | Derived Value from lkp_ODS_VENDOR | Rows must have a non-null PRODUCT_CODE and a valid CATEGORY.
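The ERR_DESCRIPTION rules above reduce to two null checks. The sketch below restates them in Python so the possible outcomes are easy to see. `None` stands in for NULL, and the function names are hypothetical mirrors of the ISNULL_PRODUCT_CODE_out and INVALID_CATEGORY_out ports defined in exp_ERROR_TRAPPING; it is an illustration of the rules, not PowerCenter code. Note that a row with both a null PRODUCT_CODE and a null CATEGORY fails both checks.

```python
from typing import List, Optional


def isnull_product_code_out(product_code: Optional[str]) -> str:
    """Mirrors IIF(ISNULL(PRODUCT_CODE), 'FATAL', 'GOOD DATA')."""
    return "FATAL" if product_code is None else "GOOD DATA"


def invalid_category_out(category: Optional[str]) -> str:
    """Mirrors IIF(ISNULL(CATEGORY), 'NONFATAL', 'GOOD DATA')."""
    return "NONFATAL" if category is None else "GOOD DATA"


# ERR_DESCRIPTION values taken from the mapping table.
ERR_DESCRIPTION = {
    "FATAL": "NULL VALUE IN KEY",
    "NONFATAL": "INVALID CATEGORY",
}


def error_descriptions(product_code: Optional[str], category: Optional[str]) -> List[str]:
    """Return every error description that applies to one PRODUCT row; [] means good data."""
    flags = [isnull_product_code_out(product_code), invalid_category_out(category)]
    return [ERR_DESCRIPTION[flag] for flag in flags if flag != "GOOD DATA"]
```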

Transformation Name | Type | Description

Mapping | Mapping | m_DIM_VENDOR_PRODUCT_xx

PRODUCT.txt | Flat File Source Definition | Drag in the shortcut from DEV_SHARED.

Shortcut_To_sq_PRODUCT | Source Qualifier | Source Qualifier for the flat file. Create a shortcut from the DEV_SHARED folder.

exp_ERROR_TRAPPING | Expression | Check whether PRODUCT_CODE is NULL. Derive ISNULL_PRODUCT_CODE_out by creating an output port:
    CODE: IIF(ISNULL(PRODUCT_CODE),'FATAL','GOOD DATA')
    Check whether CATEGORY is NULL. Derive INVALID_CATEGORY_out by creating an output port:
    CODE: IIF(ISNULL(CATEGORY), 'NONFATAL', 'GOOD DATA')
    Derive ERR_RECORD_out by creating an output port that concatenates the entire record. Use the TO_CHAR function to convert all non-string ports to strings.
    SEND PORTS to lkp_ODS_VENDOR: VENDOR_ID
    SEND PORTS to rtr_PRODUCT_DATA: PRODUCT_CODE, ISNULL_PRODUCT_CODE_out, VENDOR_ID, CATEGORY, INVALID_CATEGORY_out, PRODUCT_NAME, MODEL, PRICE, REC_NUM, ERR_RECORD_out

lkp_ODS_VENDOR | Lookup | Create a connected lookup to ODS.ODS_VENDOR. Create an input port for the source data field VENDOR_ID, and rename VENDOR_ID1 to VENDOR_ID_in.
    Set the lookup condition: VENDOR_ID = VENDOR_ID_in
    SEND PORTS to rtr_PRODUCT_DATA: VENDOR_NAME, FIRST_CONTACT, VENDOR_STATE

rtr_PRODUCT_DATA | Router | Create groups to route the data to different paths:
    Group = NONFATAL_ERRORS, CODE: INVALID_CATEGORY_out='NONFATAL'
    Group = FATAL_ERRORS, CODE: ISNULL_PRODUCT_CODE_out='FATAL'
    The default group contains the rows that match neither condition, that is, all good rows.
    PORTS to SEND to exp_ERR_NONFATAL: NONFATAL_ERRORS.PRODUCT_CODE
    PORTS to SEND to ERR_NONFATAL: NONFATAL_ERRORS.REC_NUM, NONFATAL_ERRORS.ERR_RECORD
    PORTS to SEND to exp_ERR_FATAL: FATAL_ERRORS.PRODUCT_CODE
    PORTS to SEND to ERR_FATAL: FATAL_ERRORS.REC_NUM, FATAL_ERRORS.ERR_RECORD
    PORTS to SEND to DIM_VENDOR_PRODUCT: DEFAULT.PRODUCT_CODE, DEFAULT.VENDOR_ID, DEFAULT.VENDOR_NAME, DEFAULT.VENDOR_STATE, DEFAULT.PRODUCT_NAME, DEFAULT.CATEGORY, DEFAULT.MODEL, DEFAULT.PRICE, DEFAULT.FIRST_CONTACT

exp_ERR_FATAL | Expression | Derive ERR_DESCRIPTION_out by creating an output port:
    CODE: 'NULL VALUE IN KEY'
    Derive LOAD_DATE_out by creating an output port:
    CODE: SESSSTARTTIME
    PORTS to SEND to ERR_FATAL: LOAD_DATE_out, ERR_DESCRIPTION_out

exp_ERR_NONFATAL | Expression | Derive ERR_DESCRIPTION_out by creating an output port:
    CODE: 'INVALID CATEGORY'
    Derive LOAD_DATE_out by creating an output port:
    CODE: SESSSTARTTIME
    PORTS to SEND to ERR_NONFATAL: LOAD_DATE_out, ERR_DESCRIPTION_out

seq_ERR_FATAL | Sequence Generator | Generate the ERR_ID for ERR_FATAL.

seq_ERR_NONFATAL | Sequence Generator | Generate the ERR_ID for ERR_NONFATAL.

ERR_FATAL | Target | Traps all FATAL ERRORS.

ERR_NONFATAL | Target | Traps all NONFATAL ERRORS.

DIM_VENDOR_PRODUCT | Target | Receives all good data.
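The Router and the two error expressions can be sketched the same way. A PowerCenter Router passes a row to every group whose condition is TRUE and sends it to the default group only when no condition matches, so a row that is both FATAL and NONFATAL reaches both error targets. The sketch below assumes the rows already carry the flag ports from exp_ERROR_TRAPPING. All function names are hypothetical. `itertools.count` is only a per-run stand-in for the Sequence Generator, which keeps its current value between runs. A single timestamp per run stands in for SESSSTARTTIME, and turning NULL into an empty string in ERR_RECORD is an assumption.

```python
import itertools
from datetime import datetime
from typing import Dict, Iterable, List, Optional

Row = Dict[str, object]


def to_err_record(row: Row, fields: List[str], sep: str = "") -> str:
    """Concatenate a record as text (TO_CHAR stand-in); None becomes '' by assumption."""
    return sep.join("" if row[f] is None else str(row[f]) for f in fields)


def route(rows: Iterable[Row]) -> Dict[str, List[Row]]:
    """Pass each row to every group whose condition holds; DEFAULT gets rows matching none."""
    groups: Dict[str, List[Row]] = {"NONFATAL_ERRORS": [], "FATAL_ERRORS": [], "DEFAULT": []}
    for row in rows:
        matched = False
        if row["INVALID_CATEGORY_out"] == "NONFATAL":
            groups["NONFATAL_ERRORS"].append(row)
            matched = True
        if row["ISNULL_PRODUCT_CODE_out"] == "FATAL":
            groups["FATAL_ERRORS"].append(row)
            matched = True
        if not matched:
            groups["DEFAULT"].append(row)
    return groups


def build_error_rows(group_rows: Iterable[Row], description: str, seq_start: int = 1,
                     load_date: Optional[datetime] = None) -> List[Row]:
    """Shape routed rows into the ERR_FATAL / ERR_NONFATAL target columns."""
    next_id = itertools.count(seq_start)  # per-run stand-in for the Sequence Generator
    if load_date is None:
        load_date = datetime.now()  # one timestamp for the whole run, like SESSSTARTTIME
    return [
        {
            "ERR_ID": next(next_id),
            "REC_NBR": row["REC_NUM"],
            "ERR_RECORD": row["ERR_RECORD_out"],
            "ERR_DESCRIPTION": description,
            "LOAD_DATE": load_date,
        }
        for row in group_rows
    ]
```

For example, `build_error_rows(route(rows)["FATAL_ERRORS"], "NULL VALUE IN KEY")` produces rows shaped like ERR_FATAL.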

Instructions

Step 1: Create Mapping

Create a mapping called m_DIM_VENDOR_PRODUCT_xx, where xx is your student number. Use the mapping details described on the previous pages as guidelines.

Step 2: Create Workflow

1. Open the ~Developerxx folder.

2. Create a workflow named wf_DIM_VENDOR_PRODUCT_xx.

3. Create a session named s_m_DIM_VENDOR_PRODUCT_xx.

The source file is in the Source Files directory on the Integration Service process machine.

4. Verify that the source file name is PRODUCT.txt (PRODUCT in all uppercase, with the .txt extension).

5. Verify that the target database connection is EDWxx.

6. Change the target load type to Normal.

7. Set the session to truncate DIM_VENDOR_PRODUCT before loading.

8. Set the lookup connection to ODS.

Step 3: Run Workflow

1. Run workflow wf_DIM_VENDOR_PRODUCT_xx.

Step 4: Verify Statistics


Step 5: Verify Results

ERR_NONFATAL

ERR_FATAL

DIM_VENDOR_PRODUCT


Lab 8: Repository Queries

Technical Description

In this lab, you will search for repository objects by creating and running object queries.

Objectives

Create object queries

Run object queries

Duration

15 minutes


Instructions

Step 1: Create a Query to Search for Targets with Customer

First, you will create a query that searches for target objects with the string "customer" in the target name.

1. In the Designer, choose Tools > Queries.

The Query Browser appears.

2. Click New to create a new query.

Figure 8-4 shows the Query Editor:

3. In the Query Name field, enter targets_customer.

4. In the Parameter Name column, select Object Type.

5. In the Operator column, select Is Equal To.

6. In the Value 1 column, select Target Definition.

7. Click the New Parameter button.

Notice that the Query Editor automatically adds an AND operator for the two parameters.

Figure 8-4. Query Editor (callouts: Add AND or OR operators; Add a new query parameter; Validate the query; Run the query; AND Operator)

8. Edit the new parameter to search for object names that contain the text "customer".
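The two parameter rows in targets_customer are joined by AND, so an object must satisfy both to appear in the results. As a rough model only, the predicate below expresses that query over an in-memory list. `RepoObject` and `targets_customer` are hypothetical, and the case-insensitive match on "customer" is an assumption rather than documented repository behavior.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class RepoObject:
    name: str
    object_type: str  # e.g. "Target Definition", "Source Definition"
    folder: str


def targets_customer(objects: Iterable[RepoObject]) -> List[RepoObject]:
    """Object Type Is Equal To Target Definition AND object name contains 'customer'."""
    return [
        obj
        for obj in objects
        if obj.object_type == "Target Definition"  # first parameter row
        and "customer" in obj.name.lower()  # second row; case-insensitive match is an assumption
    ]
```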

Step 2: Validate, Save, and Run the Query

1. Click the Validate button to validate the query.

The PowerCenter Client displays a dialog box indicating whether the query is valid. If the query is not valid, fix the error and validate it again.

2. Click Save.

The PowerCenter Client saves the query to the repository.

3. Click Run.

The Query Results window shows the results of the query you created. Your query results might include more objects than the following results show:

Some columns apply only to objects in a versioned repository, such as Version Comments, Label Name, and Purged By User.


Step 3: Create a Query to Search for Mapping Dependencies

Next, you will create a query that returns all dependent objects for a mapping. A dependent object is an object used by another object. The query will search for both parent and child dependent objects. For example, a source is a child object of a mapping, and a session is a parent object of a mapping.

1. Close the Query Editor, and create a new query.

2. Enter product_inventory_mapping_dependents as the query name.

3. Edit the first parameter so the object name contains product.

4. Add another parameter, and choose Include Children and Parents for the parameter name.

Note: When you search for children and parents, you enter the following information in the value

columns:

Value 1. Object type(s) for dependent object(s), the children and parents.

Value 2. Object type(s) for the object(s) you are querying.

Value 3. Reusable status of the dependent object(s).

The PowerCenter Client automatically chooses Where for the operator.

5. Click the arrow in the Value 1 column, select the following objects, and click OK:

Mapplet

Source Definition

Target Definition

Transformation

6. In the Value 2 column, choose Mapping.

Note: When you access the Query Editor from the Designer, you can search only for Designer repository objects. To search for all repository object types that use the mapping you are querying, create the query from the Repository Manager.

7. In the Value 3 column, choose Reusable Dependency.
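The Include Children and Parents parameter can be pictured as a search over a "uses" graph: children are objects the queried mapping uses, parents are objects that use it, and Value 1 and Value 3 filter the results by object type and reusability. This sketch models only direct (one-level) dependencies. The `Obj` type, the `uses` dictionary, and both functions are hypothetical, and the sketch makes no claim about how the repository resolves dependencies internally.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Obj:
    name: str
    object_type: str
    reusable: bool = True


Uses = Dict[Obj, List[Obj]]  # parent object -> objects it uses


def dependents(uses: Uses, queried: Obj, dependent_types: Set[str],
               reusable_only: bool) -> List[Tuple[str, Obj]]:
    """Direct children and parents of `queried`, filtered like Value 1 and Value 3."""
    children = [("child", c) for c in uses.get(queried, [])]
    parents = [("parent", p) for p, used in uses.items() if queried in used]
    return [
        (relation, obj)
        for relation, obj in children + parents
        if obj.object_type in dependent_types and (obj.reusable or not reusable_only)
    ]


def run_query(objects: Iterable[Obj], uses: Uses, name_contains: str, queried_type: str,
              dependent_types: Set[str], reusable_only: bool) -> List[Tuple[str, Obj]]:
    """Object name contains `name_contains` AND Include Children and Parents (Value 2 = queried_type)."""
    results: List[Tuple[str, Obj]] = []
    for obj in objects:
        if obj.object_type == queried_type and name_contains.lower() in obj.name.lower():
            results.extend(dependents(uses, obj, dependent_types, reusable_only))
    return results
```

With Value 1 limited to Designer object types, a session that uses the mapping is filtered out even though it is a parent, which matches the note that the Designer searches only Designer repository objects.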

Step 4: Validate, Save, and Run the Query

1. Validate the query.

2. Save and run the query.


Your query results might look similar to the following results:

The query returned objects in all folders in the repository. Next, you will modify the query so it only