Soft Computing Unit-2 by Arun Pratap Singh

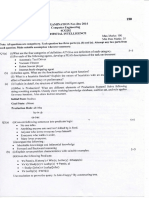

UNIT : II

SOFT COMPUTING

II SEMESTER (MCSE 205)

PREPARED BY ARUN PRATAP SINGH

NEURAL NETWORK:

Neural networks are simplified models of the biological nervous system: massively parallel, distributed processing systems made up of highly interconnected neural computing elements. Their ability to learn, that is, to acquire knowledge and make it available for use, is what makes them powerful and flexible. These networks are also called neural nets or artificial neural networks. A neural network does not need a hand-devised algorithm for each specific task; it learns the task from examples. Because of their parallel architecture, these networks offer short computation times and fast response, which makes them well suited to real-time systems.

LEARNING METHODOLOGIES:

ARTIFICIAL NEURAL NETWORK (ANN):

In computer science and related fields, artificial neural networks (ANNs) are computational models inspired by animal central nervous systems (in particular the brain) that are capable of machine learning and pattern recognition. They are generally presented as systems of interconnected "neurons" that compute values from their inputs.

For example, a neural network for handwriting recognition is defined by a set of input neurons

which may be activated by the pixels of an input image. After being weighted and transformed by

a function (determined by the network's designer), the activations of these neurons are then

passed on to other neurons. This process is repeated until finally, an output neuron is activated.

This determines which character was read.

Like other machine learning methods - systems that learn from data - neural networks have been

used to solve a wide variety of tasks that are hard to solve using ordinary rule-based

programming, including computer vision and speech recognition.

DIFFERENT ACTIVATION FUNCTION:

SINGLE LAYER PERCEPTRON:

In machine learning, the perceptron is an algorithm for supervised classification of an input into one of two classes, i.e. it is a binary classifier. It is a type of linear classifier: a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector. The algorithm allows for online learning, in that it processes elements in the training set one at a time.

The perceptron algorithm dates back to the late 1950s; its first implementation, in custom

hardware, was one of the first artificial neural networks to be produced.
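The online update described above can be sketched in a few lines of Python. This is a minimal illustration, not the notes' own listing: the function names, the learning rate of 1.0, the zero initial weights and the logical-AND training set are all assumptions chosen for the example.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum exceeds zero, else 0 (step activation)."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=100):
    """Online perceptron learning: update after each misclassified example."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


# Assumed toy data: logical AND, where only (1, 1) belongs to class 1.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]
and_weights, and_bias = train_perceptron(AND_INPUTS, AND_LABELS)
and_predictions = [predict(and_weights, and_bias, x) for x in AND_INPUTS]
print(and_predictions)
```

Because the AND data are linearly separable, the loop stops as soon as one full pass produces no mistakes; on inseparable data it would simply run until `max_epochs`.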

WIDROW-HOFF/DELTA LEARNING RULE:

DELTA LEARNING RULE:

In machine learning, the delta rule is a gradient descent learning rule for updating the weights of the inputs to artificial neurons in a single-layer neural network. It is a special case of the more general backpropagation algorithm. For a neuron $j$ with activation function $g(x)$, the delta rule for $j$'s $i$-th weight $w_{ji}$ is given by

$$\Delta w_{ji} = \eta\,(t_j - y_j)\,g'(h_j)\,x_i,$$

where

$\eta$ is a small constant called the learning rate

$g(x)$ is the neuron's activation function

$t_j$ is the target output

$h_j$ is the weighted sum of the neuron's inputs

$y_j$ is the actual output

$x_i$ is the $i$-th input.

It holds that $h_j = \sum_i x_i w_{ji}$ and $y_j = g(h_j)$.

The delta rule is commonly stated in simplified form for a neuron with a linear activation function as

$$\Delta w_{ji} = \eta\,(t_j - y_j)\,x_i.$$

While the delta rule is similar to the perceptron's update rule, the derivation is different. The perceptron uses the Heaviside step function as the activation function $g(h)$, which means that $g'(h)$ does not exist at zero and is equal to zero elsewhere. This makes the direct application of the delta rule impossible.
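As a rough sketch of the simplified (linear-activation) form, the update "change each weight by the learning rate times the output error times the corresponding input" can be applied repeatedly to a single linear neuron. The function name, the learning rate of 0.1, the epoch count and the toy data (generated from an assumed relation t = 2·x1 − x2 + 0.5) are illustrative assumptions.

```python
def train_delta_rule(samples, targets, learning_rate=0.1, epochs=200):
    """Widrow-Hoff (LMS) updates for one linear neuron, y = sum(w_i * x_i)."""
    weights = [0.0] * len(samples[0])
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            y = sum(w * xi for w, xi in zip(weights, x))  # linear activation
            # Delta rule: w_i <- w_i + learning_rate * (t - y) * x_i
            weights = [w + learning_rate * (t - y) * xi
                       for w, xi in zip(weights, x)]
    return weights


# Hypothetical data from t = 2*x1 - 1*x2 + 0.5; the constant third input
# of 1.0 plays the role of a bias.
SAMPLES = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0), (1.0, 1.0, 1.0)]
TARGETS = [0.5, 2.5, -0.5, 1.5]
learned_weights = train_delta_rule(SAMPLES, TARGETS)
print([round(w, 3) for w in learned_weights])
```

Since the targets are exactly linear in the inputs, the learned weights approach the generating coefficients; with noisy targets they would instead approach the least-squares fit.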

WINNER-TAKE-ALL LEARNING RULE:

Winner-take-all is a computational principle applied in computational models of neural networks by which neurons in a layer compete with each other for activation. In the classical form, only the neuron with the highest activation stays active while all other neurons shut down. Variations that allow more than one neuron to be active also exist, for example the soft winner-take-all, in which a power function is applied to the neurons' activations.

In the theory of artificial neural networks, winner-take-all networks are a case of competitive

learning in recurrent neural networks. Output nodes in the network mutually inhibit each other,

while simultaneously activating themselves through reflexive connections. After some time, only

one node in the output layer will be active, namely the one corresponding to the strongest input.

Thus the network uses nonlinear inhibition to pick out the largest of a set of inputs.

Winner-take-all is a general computational primitive that can be implemented using different types of neural

network models, including both continuous-time and spiking networks (Grossberg, 1973; Oster et

al. 2009).

Winner-take-all networks are commonly used in computational models of the brain, particularly

for distributed decision-making or action selection in the cortex. Important examples include

hierarchical models of vision (Riesenhuber et al. 1999), and models of selective attention and

recognition (Carpenter and Grossberg, 1987; Itti et al. 1998). They are also common in artificial

neural networks and neuromorphic analog VLSI circuits. It has been formally proven that the

winner-take-all operation is computationally powerful compared to other nonlinear operations,

such as thresholding (Maass 2000).

In many practical cases, not just a single neuron but exactly k neurons become active, for a fixed number k. This principle is referred to as k-winners-take-all.
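The end result of the competition can be sketched as a plain function, shown here in Python. It only computes which units survive; it does not simulate the recurrent inhibition dynamics described above. The function name and the tie-breaking rule (lower index wins) are assumptions.

```python
def k_winners_take_all(activations, k=1):
    """Keep the k largest activations and set every other unit to zero."""
    if not 0 < k <= len(activations):
        raise ValueError("k must be between 1 and the number of units")
    # Indices of the k most active units (ties broken by lower index).
    ranked = sorted(range(len(activations)), key=lambda i: (-activations[i], i))
    winners = set(ranked[:k])
    return [a if i in winners else 0.0 for i, a in enumerate(activations)]


layer = [0.2, 0.9, 0.4, 0.7]
print(k_winners_take_all(layer, k=1))
print(k_winners_take_all(layer, k=2))
```

With `k=1` this is the classical winner-take-all; larger `k` gives the k-winners-take-all variant.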

LINEAR SEPARABILITY:

Linear separability is an important concept in neural networks. The idea is to check whether two sets of points in an n-dimensional space can be separated by an (n-1)-dimensional hyperplane.

Lost it? Here's a simpler explanation.

One Dimension

Let's say you're on a number line. You take any two numbers. Now, there are two possibilities:

1. You choose two different numbers

2. You choose the same number

If you choose two different numbers, you can always find another number between them. This number separates the two numbers you chose.

So, you say that these two numbers are linearly separable.

But if both numbers are the same, you simply cannot separate them. They're the same. So, they're linearly inseparable. (Not just linearly; they aren't separable at all. You cannot separate something from itself.)

Two Dimensions

On extending this idea to two dimensions, some more possibilities come into existence. Consider

the following:

Here, we'd like to separate the point (1,1) from the other points. You can see that there exists a line that does this. In fact, there exist infinitely many such lines. So, these two classes of points are linearly separable. The first class consists of the point (1,1) and the other class has (0,1), (1,0) and (0,0).

Now consider this:

In this case, you just cannot use one single line to separate the two classes (one containing the black points and one containing the red points). So, they are linearly inseparable.
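A small Python sketch can make the two-dimensional case concrete by searching a coarse grid of candidate lines w1·x + w2·y + b = 0. The grid, the function names and the XOR-style labelling used for the inseparable case are assumptions (the original figure is not available). In general a failed grid search proves nothing, but for the XOR labelling no separating line exists at all.

```python
from itertools import product


def separates(w1, w2, b, positives, negatives):
    """True if the line w1*x + w2*y + b = 0 puts the classes on opposite sides."""
    return (all(w1 * x + w2 * y + b > 0 for x, y in positives)
            and all(w1 * x + w2 * y + b < 0 for x, y in negatives))


def find_separating_line(positives, negatives):
    """Search a coarse grid of (w1, w2, b); return the first hit or None."""
    grid = [i * 0.5 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
    for w1, w2, b in product(grid, repeat=3):
        if separates(w1, w2, b, positives, negatives):
            return (w1, w2, b)
    return None


# (1,1) against the other three corners, as in the first example above.
and_line = find_separating_line([(1, 1)], [(0, 0), (0, 1), (1, 0)])
# Assumed XOR-style labelling for the inseparable case.
xor_line = find_separating_line([(0, 1), (1, 0)], [(0, 0), (1, 1)])
print(and_line, xor_line)
```

The first search returns some line, the second returns `None`: requiring w1 + b > 0, w2 + b > 0 and b < 0 forces w1 + w2 + b > 0, which contradicts the fourth condition.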

Three dimensions

Extending the above example to three dimensions, you need a plane to separate the two classes.

The dashed plane separates the red point from the other blue points, so the classes are linearly separable. If the bottom right point on the opposite side were red too, they would become linearly inseparable.

Extending to n dimensions

Things go up to a lot of dimensions in neural networks. To separate classes in n dimensions, you need an (n-1)-dimensional hyperplane.

Multilayer Perceptron Neural Network Model

The following diagram illustrates a perceptron network with three layers:

This network has an input layer (on the left) with three neurons, one hidden layer (in the middle) with three neurons and an output layer (on the right) with three neurons.

There is one neuron in the input layer for each predictor variable. In the case of categorical variables, N-1 neurons are used to represent the N categories of the variable.

Input Layer: A vector of predictor variable values $(x_1, \ldots, x_p)$ is presented to the input layer. The input layer (or processing before the input layer) standardizes these values so that the range of each variable is -1 to 1. The input layer distributes the values to each of the neurons in the hidden layer. In addition to the predictor variables, there is a constant input of 1.0, called the bias, that is fed to each neuron in the hidden layer; the bias is multiplied by a weight and added to the sum going into the neuron.

Hidden Layer: Arriving at a neuron in the hidden layer, the value from each input neuron is multiplied by a weight ($w_{ji}$), and the resulting weighted values are added together, producing a combined value $u_j$. The weighted sum ($u_j$) is fed into a transfer function, which outputs a value $h_j$. The outputs from the hidden layer are distributed to the output layer.

Output Layer: Arriving at a neuron in the output layer, the value from each hidden layer neuron is multiplied by a weight ($w_{kj}$), and the resulting weighted values are added together, producing a combined value $v_k$. The weighted sum ($v_k$) is fed into a transfer function, which outputs a value $y_k$. The $y$ values are the outputs of the network.

If a regression analysis is being performed with a continuous target variable, then there is a single neuron in the output layer, and it generates a single $y$ value. For classification problems with categorical target variables, there are N neurons in the output layer producing N values, one for each of the N categories of the target variable.
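The flow from input layer to hidden layer to output layer can be sketched as a forward pass in Python. This is an illustration under assumptions: the logistic transfer function, the weight values and all function names are hypothetical, and the inputs are taken to be already standardized to the range -1 to 1.

```python
import math


def logistic(u):
    """Assumed transfer function; the text does not fix a particular one."""
    return 1.0 / (1.0 + math.exp(-u))


def layer_output(inputs, weights, bias_weights, transfer):
    """One fully connected layer: weighted sum plus bias, then transfer."""
    outputs = []
    for neuron_weights, bias_weight in zip(weights, bias_weights):
        # The bias input is the constant 1.0 multiplied by its own weight.
        total = bias_weight * 1.0 + sum(
            w * x for w, x in zip(neuron_weights, inputs))
        outputs.append(transfer(total))
    return outputs


def forward(x, hidden_w, hidden_b, output_w, output_b):
    """Input -> hidden values h_j -> output values y_k (one hidden layer)."""
    h = layer_output(x, hidden_w, hidden_b, logistic)
    return layer_output(h, output_w, output_b, logistic)


# Hypothetical weights: 3 inputs, 3 hidden neurons, 3 output neurons.
HIDDEN_W = [[0.2, -0.4, 0.1], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.6]]
HIDDEN_B = [0.1, -0.1, 0.0]
OUTPUT_W = [[0.7, -0.5, 0.2], [-0.6, 0.4, 0.9], [0.3, 0.3, -0.8]]
OUTPUT_B = [0.0, 0.2, -0.2]
y = forward([0.5, -1.0, 1.0], HIDDEN_W, HIDDEN_B, OUTPUT_W, OUTPUT_B)
print(y)
```

Each row of a weight matrix holds the incoming weights of one neuron, so adding a hidden layer would just mean one more `layer_output` call with its own weights.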

MULTILAYER PERCEPTRON ARCHITECTURE:

The network diagram shown above is a fully connected, three-layer, feed-forward perceptron neural network. "Fully connected" means that the output from each input and hidden neuron is distributed to all of the neurons in the following layer. "Feed-forward" means that the values only move from input to hidden to output layers; no values are fed back to earlier layers (a recurrent network allows values to be fed backward).

All neural networks have an input layer and an output layer, but the number of hidden layers may

vary. Here is a diagram of a perceptron network with two hidden layers and four total layers:

When there is more than one hidden layer, the output from one hidden layer is fed into the next

hidden layer and separate weights are applied to the sum going into each layer.

Training Multilayer Perceptron Networks

The goal of the training process is to find the set of weight values that will cause the output from

the neural network to match the actual target values as closely as possible. There are several

issues involved in designing and training a multilayer perceptron network:

- Selecting how many hidden layers to use in the network.

- Deciding how many neurons to use in each hidden layer.

- Finding a globally optimal solution that avoids local minima.

- Converging to an optimal solution in a reasonable period of time.

- Validating the neural network to test for overfitting.

Selecting the Number of Hidden Layers

For nearly all problems, one hidden layer is sufficient. Two hidden layers are required for modeling data with discontinuities such as a sawtooth wave pattern. Otherwise, using two hidden layers rarely improves the model, and it may introduce a greater risk of converging to a local minimum. There is no theoretical reason for using more than two hidden layers. DTREG can build models with one or two hidden layers. Three-layer models with one hidden layer are recommended.

Deciding how many neurons to use in the hidden layers

One of the most important characteristics of a perceptron network is the number of neurons in the

hidden layer(s). If an inadequate number of neurons are used, the network will be unable to model

complex data, and the resulting fit will be poor.

If too many neurons are used, the training time may become excessively long and, worse, the network may overfit the data. When overfitting occurs, the network begins to model random noise in the data. The result is that the model fits the training data extremely well but generalizes poorly to new, unseen data. Validation must be used to test for this.

DTREG includes an automated feature to find the optimal number of neurons in the hidden layer.

You specify the minimum and maximum number of neurons you want it to test, and it will build

models using varying numbers of neurons and measure the quality using either cross validation

or hold-out data not used for training. This is a highly effective method for finding the optimal

number of neurons, but it is computationally expensive, because many models must be built, and

each model has to be validated. If you have a multiprocessor computer, you can configure DTREG

to use multiple CPUs during the process.

The automated search for the optimal number of neurons only searches the first hidden layer. If

you select a model with two hidden layers, you must manually specify the number of neurons in

the second hidden layer.

Finding a globally optimal solution

A typical neural network might have a couple of hundred weights whose values must be found to produce an optimal solution. If neural networks were linear models like linear regression, it would be a breeze to find the optimal set of weights. But the output of a neural network as a function of the inputs is often highly nonlinear; this makes the optimization process complex.

If you plotted the error as a function of the weights, you would likely see a rough surface with

many local minima such as this:

This picture is highly simplified because it represents only a single weight value (on the horizontal

axis). With a typical neural network, you would have a 200-dimension, rough surface with many

local valleys.

Optimization methods such as steepest descent and conjugate gradient are highly susceptible to

finding local minima if they begin the search in a valley near a local minimum. They have no ability

to see the big picture and find the global minimum.

Several methods have been tried to avoid local minima. The simplest is just to try a number of

random starting points and use the one with the best value. A more sophisticated technique

called simulated annealing improves on this by trying widely separated random values and then

gradually reducing (cooling) the random jumps in the hope that the location is getting closer to

the global minimum.
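The simplest remedy mentioned above, trying several random starting points and keeping the best result, can be sketched in Python on a one-weight error curve. The curve itself, the step size, the search range and the fixed random seed are assumptions made for illustration; this is not DTREG's procedure.

```python
import math
import random


def error(w):
    """An illustrative bumpy error curve with several local minima."""
    return 0.1 * w * w + math.sin(3.0 * w)


def error_gradient(w):
    return 0.2 * w + 3.0 * math.cos(3.0 * w)


def steepest_descent(w, learning_rate=0.01, steps=2000):
    """Plain gradient descent; it settles in whichever valley it starts in."""
    for _ in range(steps):
        w -= learning_rate * error_gradient(w)
    return w


def best_of_random_starts(tries, rng):
    """Run descent from several random starting weights, keep the lowest error."""
    finals = [steepest_descent(rng.uniform(-6.0, 6.0)) for _ in range(tries)]
    return min(finals, key=error)


rng = random.Random(0)  # fixed seed so the run is repeatable
single = steepest_descent(4.0)
multi = best_of_random_starts(20, rng)
print(round(error(single), 3), round(error(multi), 3))
```

A single run started at w = 4.0 stays in a shallow nearby valley, while the multi-start search ends in a deeper one. Even so, nothing guarantees that the best of a finite number of starts is the global minimum.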

DTREG uses the Nguyen-Widrow algorithm to select the initial range of starting weight values. It

then uses the conjugate gradient algorithm to optimize the weights. Conjugate gradient usually

finds the optimum weights quickly, but there is no guarantee that the weight values it finds are

globally optimal. So it is useful to allow DTREG to try the optimization multiple times with different

sets of initial random weight values. The number of tries allowed is specified on the Multilayer

Perceptron property page.

MADALINE :

MADALINE (Many ADALINE) is a three-layer (input, hidden, output), fully connected, feed-forward artificial neural network architecture for classification that uses ADALINE units in its hidden and output layers, i.e. its activation function is the sign function. The three-layer network uses memistors. MADALINE networks cannot be trained using backpropagation because the sign function is not differentiable, so three different training algorithms have been suggested, called Rule I, Rule II and Rule III. The first of these dates back to 1962 and cannot adapt the weights of the hidden-output connection. The second training algorithm improved on Rule I and was described in 1988. The third "Rule" applied to a modified network with sigmoid activations instead of signum; it was later found to be equivalent to backpropagation.

The Rule II training algorithm is based on a principle called "minimal disturbance". It proceeds by looping over training examples, then for each example, it:

- finds the hidden layer unit (ADALINE classifier) with the lowest confidence in its prediction,

- tentatively flips the sign of the unit,

- accepts or rejects the change based on whether the network's error is reduced,

- stops when the error is zero.

Additionally, when flipping single units' signs does not drive the error to zero for a particular

example, the training algorithm starts flipping pairs of units' signs, then triples of units, etc.

DIFFERENCE BETWEEN HUMAN BRAIN AND ANN:

BACK PROPAGATION:

Backpropagation, an abbreviation for "backward propagation of errors", is a common method of training artificial neural networks used in conjunction with an optimization method such as gradient descent. The method calculates the gradient of a loss function with respect to all the weights in the network. The gradient is fed to the optimization method, which in turn uses it to update the weights in an attempt to minimize the loss function.

Backpropagation requires a known, desired output for each input value in order to calculate the loss function gradient. It is therefore usually considered to be a supervised learning method, although it is also used in some unsupervised networks such as autoencoders. It is a generalization of the delta rule to multi-layered feedforward networks, made possible by using the chain rule to iteratively compute gradients for each layer. Backpropagation requires that the activation function used by the artificial neurons (or "nodes") be differentiable.

DERIVATION OF ERROR BACK PROPAGATION ALGORITHM (EBPA) :

Derivation-

Since backpropagation uses the gradient descent method, one needs to calculate the derivative of the squared error function with respect to the weights of the network. The squared error function is:

$$E = \tfrac{1}{2}(t - y)^2,$$

$E$ = the squared error

$t$ = target output

$y$ = actual output of the output neuron

(The factor of $\tfrac{1}{2}$ is included to cancel the exponent when differentiating.) Therefore the error, $E$, depends on the output $y$. However, the output $y$ depends on the weighted sum of all its inputs:

$$y = \sum_{i=1}^{n} w_i x_i$$

$n$ = the number of input units to the neuron

$w_i$ = the $i$-th weight

$x_i$ = the $i$-th input value to the neuron

The above formula only holds true for a neuron with a linear activation function (that is, the output is solely the weighted sum of the inputs). In general, a non-linear, differentiable activation function, $g$, is used. Thus, more correctly:

$$y = g(h), \qquad h = \sum_{i=1}^{n} w_i x_i$$

This lays the groundwork for calculating the partial derivative of the error with respect to a weight $w_i$ using the chain rule:

$$\frac{\partial E}{\partial w_i} = \frac{dE}{dy}\,\frac{dy}{dh}\,\frac{\partial h}{\partial w_i}$$

$\frac{\partial E}{\partial w_i}$ = How the error changes when the weights are changed

$\frac{dE}{dy}$ = How the error changes when the output is changed

$\frac{dy}{dh}$ = How the output changes when the weighted sum changes

$\frac{\partial h}{\partial w_i}$ = How the weighted sum changes as the weights change

Since the weighted sum $h$ is just the sum over all products $w_i x_i$, the partial derivative of the sum with respect to a weight $w_i$ is just the corresponding input $x_i$. Similarly, the partial derivative of the sum with respect to an input value $x_i$ is just the weight $w_i$:

$$\frac{\partial h}{\partial w_i} = x_i, \qquad \frac{\partial h}{\partial x_i} = w_i$$

The derivative of the output $y$ with respect to the weighted sum $h$ is simply the derivative of the activation function $g$:

$$\frac{dy}{dh} = g'(h)$$

This is the reason why backpropagation requires the activation function to be differentiable. A commonly used activation function is the logistic function:

$$g(z) = \frac{1}{1 + e^{-z}}$$

which has a nice derivative of:

$$g'(z) = g(z)\,\bigl(1 - g(z)\bigr)$$

For example purposes, assume the network uses a logistic activation function, in which case the derivative of the output $y$ with respect to the weighted sum $h$ is the same as the derivative of the logistic function:

$$\frac{dy}{dh} = y\,(1 - y)$$

Finally, the derivative of the error $E$ with respect to the output $y$ is:

$$\frac{dE}{dy} = \frac{d}{dy}\,\tfrac{1}{2}(t - y)^2 = y - t$$

Putting it all together:

$$\frac{\partial E}{\partial w_i} = (y - t)\,y\,(1 - y)\,x_i$$

If one were to use a different activation function, the only difference would be that the term $y(1 - y)$ is replaced by the derivative of the newly chosen activation function.

To update the weight $w_i$ using gradient descent, one must choose a learning rate, $\eta$. The change in weight after learning is then the product of the learning rate and the gradient, with a minus sign so that the error decreases:

$$\Delta w_i = -\eta\,\frac{\partial E}{\partial w_i}$$

For a linear neuron, the derivative of the activation function is 1, which yields:

$$\Delta w_i = \eta\,(t - y)\,x_i$$

This is exactly the delta rule for perceptron learning, which is why the backpropagation algorithm is a generalization of the delta rule. In backpropagation and perceptron learning, when the output $y$ matches the desired output $t$, the change in weight is zero, which is exactly what is desired.
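One way to sanity-check the result of this derivation for a single logistic neuron, namely that the derivative of the squared error with respect to weight i equals (output − target) · output · (1 − output) · input i, is to compare it with a finite-difference estimate. The Python sketch below does that; the weights, inputs, target and function names are arbitrary assumptions.

```python
import math


def logistic(h):
    return 1.0 / (1.0 + math.exp(-h))


def squared_error(weights, inputs, target):
    """E = 1/2 * (t - y)^2 for one logistic neuron."""
    y = logistic(sum(w * x for w, x in zip(weights, inputs)))
    return 0.5 * (target - y) ** 2


def analytic_gradient(weights, inputs, target):
    """dE/dw_i = (y - t) * y * (1 - y) * x_i, from the chain rule."""
    y = logistic(sum(w * x for w, x in zip(weights, inputs)))
    return [(y - target) * y * (1.0 - y) * x for x in inputs]


def numeric_gradient(weights, inputs, target, eps=1e-6):
    """Central finite differences, used only to check the formula."""
    grads = []
    for i in range(len(weights)):
        up = list(weights)
        down = list(weights)
        up[i] += eps
        down[i] -= eps
        grads.append((squared_error(up, inputs, target)
                      - squared_error(down, inputs, target)) / (2 * eps))
    return grads


# Arbitrary illustrative values.
WEIGHTS = [0.3, -0.2, 0.5]
INPUTS = [1.0, 2.0, -1.5]
TARGET = 1.0
analytic = analytic_gradient(WEIGHTS, INPUTS, TARGET)
numeric = numeric_gradient(WEIGHTS, INPUTS, TARGET)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))
```

The two gradients should agree to within floating-point and truncation error. A large discrepancy would point to a mistake in one of the chain-rule factors.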

MOMENTUM:

Empirical evidence shows that the use of a term called momentum in the backpropagation algorithm can be helpful in speeding the convergence and avoiding local minima.

The idea behind momentum is to stabilize the weight change by making non-radical revisions, combining the gradient-descent term with a fraction of the previous weight change:

$$\Delta w(t) = -\eta\,\frac{\partial E_e}{\partial w(t)} + \alpha\,\Delta w(t-1)$$

where $\eta$ is the learning rate, the momentum coefficient $\alpha$ is taken as $0 \le \alpha \le 0.9$, and $t$ is the index of the current weight change.

This gives the system a certain amount of inertia, since the weight vector will tend to continue moving in the same direction unless opposed by the gradient term.

The momentum has the following effects:

- it smooths the weight changes and suppresses cross-stitching, that is, it cancels side-to-side oscillations across the error valley;

- when all weight changes are in the same direction, the momentum amplifies the learning rate, causing faster convergence;

- it enables the search to escape from small local minima on the error surface.

The hope is that the momentum will allow a larger learning rate and that this will speed convergence and avoid local minima. On the other hand, a learning rate of 1 with no momentum will be much faster when no problem with local minima or non-convergence is encountered.
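The effect of the momentum term can be sketched in Python on a simple elongated error valley. The quadratic error function, the learning rate of 0.02, the momentum of 0.9 and the starting point are assumptions picked for illustration.

```python
def descend(gradient, start, learning_rate, momentum, steps):
    """Each weight change is -learning_rate * gradient + momentum * previous change."""
    w = list(start)
    previous_change = [0.0] * len(w)
    for _ in range(steps):
        grad = gradient(w)
        change = [-learning_rate * g + momentum * p
                  for g, p in zip(grad, previous_change)]
        w = [wi + c for wi, c in zip(w, change)]
        previous_change = change
    return w


def valley_error(w):
    """Illustrative error surface: shallow along w[0], steep along w[1]."""
    return 0.5 * (w[0] ** 2 + 50.0 * w[1] ** 2)


def valley_gradient(w):
    return [w[0], 50.0 * w[1]]


START = [10.0, 1.0]
plain = descend(valley_gradient, START, learning_rate=0.02,
                momentum=0.0, steps=100)
with_momentum = descend(valley_gradient, START, learning_rate=0.02,
                        momentum=0.9, steps=100)
print(valley_error(plain), valley_error(with_momentum))
```

Along the shallow direction the successive changes all point the same way, so with momentum they accumulate and the run ends with a lower error after the same number of steps. With momentum set to zero the routine reduces to plain gradient descent.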

LIMITATIONS OF NEURAL NETWORK :

There are many advantages and limitations to neural network analysis, and to discuss this subject properly we would have to look at each individual type of network, which isn't necessary for this general discussion. In reference to backpropagational networks, however, there are some specific issues potential users should be aware of.

Backpropagational neural networks (and many other types of networks) are in a sense the ultimate 'black boxes'. Apart from defining the general architecture of a network and perhaps initially seeding it with random numbers, the user has no other role than to feed it input, watch it train and await the output. In fact, it has been said that with backpropagation, "you almost don't know what you're doing". Some freely available software packages (NevProp, bp, Mactivation) do allow the user to sample the network's 'progress' at regular time intervals, but the learning itself progresses on its own. The final product of this activity is a trained network that provides no equations or coefficients defining a relationship (as in regression) beyond its own internal mathematics. The network 'IS' the final equation of the relationship.

Backpropagational networks also tend to be slower to train than other types of networks and sometimes require thousands of epochs. If run on a truly parallel computer system this issue is not really a problem, but if the BPNN is being simulated on a standard serial machine (e.g. a single SPARC, Mac or PC), training can take some time. This is because the machine's CPU must compute the function of each node and connection separately, which can be problematic in very large networks with a large amount of data. However, the speed of most current machines is such that this is typically not much of an issue.

Вам также может понравиться

- Soft Computing Unit-5 by Arun Pratap SinghДокумент78 страницSoft Computing Unit-5 by Arun Pratap SinghArunPratapSingh100% (1)

- Advance Concept in Data Bases Unit-2 by Arun Pratap SinghДокумент51 страницаAdvance Concept in Data Bases Unit-2 by Arun Pratap SinghArunPratapSingh100% (1)

- Advance Concept in Data Bases Unit-1 by Arun Pratap SinghДокумент71 страницаAdvance Concept in Data Bases Unit-1 by Arun Pratap SinghArunPratapSingh100% (2)

- System Programming Unit-1 by Arun Pratap SinghДокумент56 страницSystem Programming Unit-1 by Arun Pratap SinghArunPratapSingh100% (2)

- Web Technology and Commerce Unit-5 by Arun Pratap SinghДокумент82 страницыWeb Technology and Commerce Unit-5 by Arun Pratap SinghArunPratapSingh100% (3)

- Information Theory, Coding and Cryptography Unit-2 by Arun Pratap SinghДокумент36 страницInformation Theory, Coding and Cryptography Unit-2 by Arun Pratap SinghArunPratapSingh100% (4)

- Soft Computing Unit-4 by Arun Pratap SinghДокумент123 страницыSoft Computing Unit-4 by Arun Pratap SinghArunPratapSingh67% (3)

- Web Technology and Commerce Unit-2 by Arun Pratap SinghДокумент65 страницWeb Technology and Commerce Unit-2 by Arun Pratap SinghArunPratapSinghОценок пока нет

- Advance Concept in Data Bases Unit-5 by Arun Pratap SinghДокумент82 страницыAdvance Concept in Data Bases Unit-5 by Arun Pratap SinghArunPratapSingh100% (1)

- Information Theory, Coding and Cryptography Unit-5 by Arun Pratap SinghДокумент79 страницInformation Theory, Coding and Cryptography Unit-5 by Arun Pratap SinghArunPratapSingh100% (2)

- Soft Computing Unit-1 by Arun Pratap SinghДокумент100 страницSoft Computing Unit-1 by Arun Pratap SinghArunPratapSingh100% (1)

- Information Theory, Coding and Cryptography Unit-1 by Arun Pratap SinghДокумент46 страницInformation Theory, Coding and Cryptography Unit-1 by Arun Pratap SinghArunPratapSingh67% (6)

- Web Technology and Commerce Unit-3 by Arun Pratap SinghДокумент32 страницыWeb Technology and Commerce Unit-3 by Arun Pratap SinghArunPratapSinghОценок пока нет

- System Programming Unit-4 by Arun Pratap SinghДокумент83 страницыSystem Programming Unit-4 by Arun Pratap SinghArunPratapSinghОценок пока нет

- Advance Concept in Data Bases Unit-3 by Arun Pratap SinghДокумент81 страницаAdvance Concept in Data Bases Unit-3 by Arun Pratap SinghArunPratapSingh100% (2)

- Information Theory, Coding and Cryptography Unit-3 by Arun Pratap SinghДокумент64 страницыInformation Theory, Coding and Cryptography Unit-3 by Arun Pratap SinghArunPratapSingh50% (4)

- System Programming Unit-2 by Arun Pratap SinghДокумент82 страницыSystem Programming Unit-2 by Arun Pratap SinghArunPratapSingh100% (1)

- Web Technology and Commerce Unit-4 by Arun Pratap SinghДокумент60 страницWeb Technology and Commerce Unit-4 by Arun Pratap SinghArunPratapSinghОценок пока нет

- Web Technology and Commerce Unit-1 by Arun Pratap SinghДокумент38 страницWeb Technology and Commerce Unit-1 by Arun Pratap SinghArunPratapSinghОценок пока нет

- Digital Electronics & Computer OrganisationДокумент17 страницDigital Electronics & Computer Organisationabhishek125Оценок пока нет

- Challenges InThreading A Loop - Doc1Документ6 страницChallenges InThreading A Loop - Doc1bsgindia82100% (2)

- Soft Computing Notes PDFДокумент69 страницSoft Computing Notes PDFSidharth Bastia100% (1)
