Agent Based Approach To Knowledge Discovery in Datamining


International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 3, Issue 6, June 2014 ISSN 2319 - 4847

Abstract

Data mining refers to extracting useful information from large amounts of data. Because huge amounts of data are widely available, data mining can be used for market analysis, fraud detection and customer retention. A network intrusion detection system (NIDS) is used to detect any intruder that might have entered a computer system. In this paper, a multi-agent based approach is used for network intrusion detection, together with an adaptive NIDS. The proposed method is an enhancement of previous methods: a larger number of agents continuously monitor the data to check for any intruder that might have entered the system. Each agent is trained so that it can check for any type of intruder entering the system. Verification through many agents helps ensure the safety of the data. Data mining technology has emerged as a means of identifying patterns and trends in large quantities of data. Agent computing, whose aim is to deal with complex systems, has revealed opportunities to improve distributed data mining systems in a number of ways. Multi-agent systems (MAS) often deal with complex applications that require distributed problem solving. In many applications, the individual and collective behavior of the agents depends on the observed data from distributed sources. Distributed data mining (DDM) originated from the need to mine decentralized data sources. Since multi-agent systems are often distributed, and agents have proactive and reactive features that are very useful for knowledge management systems, combining DDM with MAS for data-intensive applications is appealing.

This paper discusses the integration of multi-agent systems and distributed data mining, also known as multi-agent based distributed data mining, in terms of significance, system overview, existing systems, and research trends.

Keywords: Distributed Data Mining, Multi-Agent Systems, Multi Agent Data Mining, Agent Based Distributed Data Mining.

1. Introduction

Originating from knowledge discovery in databases (KDD), also known as data mining (DM), distributed data mining (DDM) mines data sources regardless of their physical locations. The need for this characteristic arises from the fact that data produced locally at each site often cannot be transferred across the network, because of the excessive amount of data and because of privacy issues. Recently, DDM has become a critical component of knowledge-based systems because its decentralized architecture reaches every networked business.

Data mining still poses many challenges to the research community. The main challenges in data mining are:

Data mining has to deal with huge amounts of data located at different sites. The amount of data can easily exceed the terabyte limit;

Data mining is a very computationally intensive process involving very large data sets. Usually, it is necessary to partition and distribute the data for parallel processing to achieve acceptable time and space performance;

Input data change rapidly. In many application domains, the data to be mined is either produced at a high rate or actually arrives in streams. In those cases, knowledge has to be mined quickly and efficiently in order to be usable and up to date;

Security is a major concern, in that companies or other organizations may be willing to release data mining results but not the source data itself.

The remaining sections of the paper are organized as follows. Section 2 explains why agents suit DDM. Section 3 describes agent-based distributed data mining and its learning strategies. Section 4 gives an overview of the general architecture of MADM systems and of distributed learning.

2. Why agents

DDM is a complex system that focuses on the distribution of resources over the network as well as on data mining processes. Scalability lies at the very core of DDM systems, since the system configuration may be altered from time to time; designing DDM systems therefore involves a great deal of software engineering detail, such as reusability, extensibility, and robustness. For these reasons, agent characteristics are desirable for DDM systems. Furthermore, the decentralization property fits the DDM requirement well. At each data site, a mining strategy is deployed specifically for that site's domain of data. However, there can be other existing or new strategies that the data miner would like to test. A data site should integrate seamlessly with external methods and test multiple strategies for further analysis. An autonomous agent can be treated as a computing unit that performs multiple tasks based on a dynamic configuration. The agent interprets the configuration and generates an execution plan to complete the tasks. [2], [11], [14], [10], 127], and [2] discuss the benefits of deploying agents in DDM systems. MAS is decentralized by nature, and therefore each agent has only a limited view of the system. This limitation allows better security, as agents do not need to observe irrelevant surroundings. Agents can thus be programmed to be as compact as possible, so that lightweight agents are transmitted across the network rather than the data, which can be far bulkier. Being able to transmit agents from one host to another allows dynamic organization of the system. For example, mining agent a1, located at site s1, possesses algorithm alg1. Data mining task t1 at site s2 is instructed to mine the data using alg1. In this setting, transmitting a1 to s2 is a more practical option than transferring all data from s2 to s1, where alg1 is available.
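The a1/s2 example above amounts to comparing how many bytes each option puts on the network. The following is a minimal sketch of that comparison; the `Site` class, the function name, and the sizes are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    data_bytes: int  # size of the data set held at this site


def cheaper_to_move_agent(agent_bytes: int, data_site: Site) -> bool:
    """Return True when shipping the agent costs less traffic than shipping the data."""
    return agent_bytes < data_site.data_bytes


s2 = Site(name="s2", data_bytes=5 * 10**9)  # hypothetical 5 GB data set at s2
a1_size = 200 * 10**3                       # hypothetical 200 KB agent carrying alg1

if cheaper_to_move_agent(a1_size, s2):
    decision = "move agent a1 to s2"
else:
    decision = "move data from s2 to s1"
print(decision)
```

A real system would also weigh privacy constraints and the compute capacity available at s2, which this sketch ignores.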

In addition, security, addressed by trust-based agents [11][12], is a critical issue in agent-based distributed data mining (ADDM). Rigid security models intended to ensure security may degrade system scalability. Agents offer alternative solutions, as they can travel through the system network. In [15], the authors present a framework in which mobile agents travel through the system network, allowing the system to maintain data privacy. The self-organization characteristic spares the system from transferring data across the network and therefore adds to the security of the data. As a trade-off with security, scalability is also a critical issue in a distributed system. Informing every unit in the system about a configuration update, such as a new data site joining, demands extra human intervention or a highly complex mechanism, either of which may degrade performance. To address this concern, collaborative learning agents [10][6] are capable of sharing information, in this case about changes to the system configuration, and of propagating it from one agent to another, so that adaptation occurs at the level of the individual agent. Furthermore, mobile agents, as discussed earlier, can help reduce the load on the network and on the DM application server, as in state-of-the-art systems [3][24].

3. AGENT-BASED DISTRIBUTED DATA MINING:

Applications of distributed data mining include credit card fraud detection, intrusion detection, health insurance, security-related applications, distributed clustering, market segmentation, sensor networks, customer profiling, evaluation of retail promotions, and credit risk analysis. These DDM applications can be further enhanced with agents. ADDM takes data mining as its foundation and enhances it with agents; this data mining technique therefore inherits the properties of agents and, as a result, yields desirable characteristics. In general, constructing an ADDM system concerns three key characteristics: interoperability, dynamic system configuration, and performance, discussed as follows. Interoperability concerns not only the collaboration of agents within the system but also external interaction, which allows new agents to enter the system seamlessly. The architecture of the system must be open and flexible so that it can support this interaction, including the communication protocol, integration policy, and service directory. The communication protocol covers message encoding, encryption, and transportation between agents; these are standardized by the Foundation for Intelligent Physical Agents (FIPA) and are publicly available. Most agent platforms, such as JADE and JACK, are FIPA compliant, so interoperability among them is possible. The integration policy specifies how a system behaves when an external component, such as an agent or a data site, requests to enter or leave. The issue is further discussed in [47] and [29]. In relation to interoperability, dynamic system configuration is a challenging issue because of the complexity of the planning and mining algorithms.

A mining task may involve several agents and data sources, in which agents are configured to equip with an algorithm

and deal with given data sets. Change in data affects the mining task as an agent may be still executing the algorithm.

Lastly, performance can be either improved or impaired because the distribution of data is a major constraint. In

distributed environment, tasks can be executed in parallel, in exchange, concurrency issues arise. Quality of service

control in performance of data mining and system perspectives is desired, however it can be derived from both data

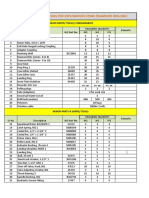

mining and agents fields. Next, we are now looking at the overview of our point of focus. An ADDM system can be

generalized into a set of components and viewed as depicted in figure 1.We may generalize activities of the system

into request and response, each of which involves a different set of components. Basic components of an ADDM

system are as follows.

Fig. 1: Overview of ADDM system.


Data: Data is the foundation layer of our interest. In a distributed environment, data can be hosted in various forms, such as online relational databases, data streams, and web pages, and the purpose of the data varies.

Communication: The system chooses the related resources from the directory service, which maintains a list of data sources, mining algorithms, data schemas, data types, etc. The communication protocols may vary depending on the implementation of the system, such as client-server or peer-to-peer.

Presentation: The user interface (UI) interacts with the user, receiving requests and returning responses. The interface simplifies a complex distributed system into user-friendly forms such as network diagrams and visual reporting tools.

When a user requests data mining through the UI, the following components are involved.

Query optimization: A query optimizer analyses the request to determine the type of mining tasks and chooses proper resources for the request. It also determines whether it is possible to parallelize the tasks, since the data is distributed and can be mined in parallel.

Discovery plan: A planner allocates sub-tasks to the related resources. At this stage, mediating agents play an important role in coordinating multiple computing units, since the mining sub-tasks are performed asynchronously and their results arrive asynchronously as well.

When a mining task is done, the following components take part.

Local knowledge discovery (KD): To transform data into patterns that adequately represent the data and are reasonable to transfer over the network, the mining process may take place locally at each data site, depending on the individual implementation.

Knowledge discovery: Also known as mining, it executes the algorithm required by the task to obtain knowledge from the specified data source.

Knowledge consolidation: To present the user with a compact and meaningful mining result, it is necessary to normalize the knowledge obtained from various sources. This component involves complex methodologies for combining knowledge/patterns from distributed sites. Consolidating homogeneous knowledge/patterns is promising, yet it remains difficult in the heterogeneous case.
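The request and response flow described by these components can be sketched in a few lines. This is only an illustration under simplifying assumptions: the site names and transactions are hypothetical, local knowledge discovery is reduced to counting items, and consolidation covers only the homogeneous case by summing the counts.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data sites, each holding a local list of transactions.
SITES = {
    "site_a": [["milk", "bread"], ["milk"], ["bread", "eggs"]],
    "site_b": [["milk", "eggs"], ["milk", "bread"]],
}


def local_discovery(transactions):
    """Local KD: reduce raw transactions to compact per-item counts."""
    counts = Counter()
    for items in transactions:
        counts.update(set(items))
    return counts


def consolidate(local_results):
    """Knowledge consolidation: merge homogeneous local patterns."""
    total = Counter()
    for counts in local_results:
        total += counts
    return total


def run_request(site_names):
    """Discovery plan: one sub-task per site, executed in parallel."""
    with ThreadPoolExecutor() as pool:
        local_results = list(pool.map(local_discovery, (SITES[n] for n in site_names)))
    return consolidate(local_results)


global_counts = run_request(["site_a", "site_b"])
print(global_counts.most_common(1))  # [('milk', 4)]
```

Only the compact counts cross the site boundary here, not the transactions themselves, which is the point of performing knowledge discovery locally.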

Central learning strategy: all the data is gathered at a central site and a single model is built. The only requirement is being able to move the data to a central location in order to merge it and then apply sequential DM algorithms. This strategy is used when the geographically distributed data is small. The strategy is generally very expensive but also more accurate. Gathering the data is in general not a simple merging step; it depends on the original distribution. For example, different records may be placed at different sites, different attributes of the same records may be distributed across different sites, or different tables may be placed at different sites; when gathering data it is therefore necessary to adopt the proper merging strategy. However, as pointed out before, this strategy is in general infeasible [10]. Agent technology is rarely preferred with this strategy.

Meta-learning strategy: this offers a way to mine classifiers from homogeneously distributed data. Meta-learning follows three main steps. The first is to generate base classifiers at each site using a classifier learning algorithm. The second is to collect the base classifiers at a central site and produce meta-level data from a separate validation set and the predictions generated by the base classifiers on it. The third is to generate the final classifier (meta-classifier) from the meta-level data via a combiner or an arbiter. Copies of the classifier agent exist on, or are deployed to, the nodes of the network being used. Perhaps the most mature agent-based meta-learning systems are the JAM system [11] and BODHI [11].

Hybrid learning strategy: a technique that combines local and centralized learning for model building [12]; for example, Papyrus [13] is designed to support both learning strategies. In contrast to JAM and BODHI, Papyrus can not only move models from site to site but can also move data when that strategy is desired. Papyrus is a specialized system designed for clustering, while JAM and BODHI are designed for data classification.

The major criticism of such systems is that it is not always possible to obtain an exact final result; that is, the global knowledge model obtained may differ from the one obtained by applying the single-model approach (if possible) to the same data.

Approximate results are not always a major concern, but it is important to be aware of them. Moreover, hardware resource usage is not optimized in these systems. If the heavy computational part is always executed local to the data, then when the same data is accessed concurrently, the benefits of the distributed environment might vanish because of the possible strong performance degradation. Another drawback is that, occasionally, these models are induced from databases that have different schemas and hence are incompatible.
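The three meta-learning steps described in this section can be sketched as follows. This is a toy illustration, not the JAM or BODHI implementation: the base learner is a one-dimensional threshold rule, the combiner is a lookup table learned from the meta-level data, and all data values are hypothetical.

```python
from collections import Counter, defaultdict


def train_stump(samples):
    """Base learner: pick the threshold on x that best separates labels 0/1."""
    best = None
    for threshold, _ in samples:
        correct = sum((x >= threshold) == bool(y) for x, y in samples)
        if best is None or correct > best[0]:
            best = (correct, threshold)
    threshold = best[1]
    return lambda x: int(x >= threshold)


def train_combiner(base_classifiers, validation):
    """Meta-learner: map each tuple of base predictions to its majority label."""
    votes = defaultdict(Counter)
    for x, y in validation:
        key = tuple(clf(x) for clf in base_classifiers)
        votes[key][y] += 1
    table = {key: counts.most_common(1)[0][0] for key, counts in votes.items()}

    def meta_classifier(x):
        key = tuple(clf(x) for clf in base_classifiers)
        # Fall back to a plain majority vote for unseen prediction patterns.
        return table.get(key, int(sum(key) * 2 >= len(key)))

    return meta_classifier


# Step 1: each (hypothetical) site trains a base classifier on its own data.
site_data = [
    [(1, 0), (2, 0), (6, 1), (7, 1)],
    [(0, 0), (3, 0), (4, 1), (9, 1)],
    [(2, 0), (5, 1), (8, 1)],
]
base = [train_stump(samples) for samples in site_data]

# Steps 2 and 3: build meta-level data on a validation set, then train the combiner.
validation = [(1, 0), (3, 0), (4, 1), (5, 1), (8, 1)]
meta = train_combiner(base, validation)
print([meta(x) for x in (0, 4, 10)])  # [0, 1, 1]
```

For x = 4 only one of the three base classifiers predicts 1, so a plain majority vote would output 0; the combiner outputs 1 because the validation set showed that this prediction pattern goes with label 1.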

4. MADM SYSTEMS GENERAL ARCHITECTURE OVERVIEW:

In distributed data mining, there is a fundamental trade-off between the accuracy and the cost of the computation. If our interest is in cost functions that reflect both computation costs and communication costs, especially the cost of wide-area communication, we can process all the data locally to obtain local results and then combine the local results at the root to obtain the final result. But if our interest is in an accurate result, we can ship all the data to a single node. We assume that this produces the most accurate result. In general, this is the most expensive approach, while the former is less expensive but also less accurate.

Fig. 2: MADM systems general architecture.

Architecture:

Most MADM frameworks adopt a similar architecture (see Figure 2) and provide common structural components. They use KQML or FIPA-ACL, which are standard agent communication languages that facilitate the interactions among agents. The following defines the most common agents used in MADM; the names might differ, but they share the same functionalities in most cases.

Interface Agent (or User Agent): This agent interacts with the user (or user agent). It asks the user to provide requirements and provides the user with the mined results, which may be visualized. Its interface module contains methods for inter-agent communication and for getting input from the user. The process module contains methods for capturing the user input and communicating it to the facilitator agent. In the knowledge module, the agent stores the history of user interaction and user profiles with their specific preferences.

Facilitator Agent (or Manager Agent): The facilitator agent is responsible for the activation and synchronization of the different agents. It elaborates a work plan and is in charge of ensuring that the work plan is fulfilled. It receives assignments from the interface agent, may seek the services of a group of agents, and synthesizes the final result and presents it to the interface agent. The interface module is responsible for inter-agent communication; the process module contains methods for the control and coordination of various tasks. The sequence of tasks to be executed is created from specific ontologies stored in the knowledge module, using a rule-based approach. The agent's tasks may include identifying relevant data sources, requesting services from agents, generating queries, etc. The knowledge module also contains meta-knowledge about the capabilities of other agents in the system.

Resource Agent (or Data Agent): The resource agent actively maintains meta-data about each of the data sources. It also provides predefined and ad hoc retrieval capabilities. It is responsible for retrieving the data sets requested by the data mining agent in preparation for a specific data mining operation. It takes into account the heterogeneity of the databases and resolves conflicts in data definition and representation. Its interface module supports inter-agent communication as well as the interface to existing data sources. The process module provides facilities for ad hoc and predefined data retrieval. Based on the user request, appropriate queries are generated and executed against the database, and the results are communicated back to the facilitator agent or to other agents.

Mining Agent: The data mining agent implements specific data mining techniques and algorithms. The interface module supports inter-agent communication. The process module contains methods for initiating and carrying out the data mining activity, capturing the results of data mining, and communicating them to the result agent or the facilitator agent. The knowledge module contains meta-knowledge about data mining methods, i.e., which method is suitable for which type of problem, the input requirements of each mining method, the format of input data, etc. This knowledge is used by the process module in initiating and executing a particular data mining algorithm for the problem at hand.
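How the four agent roles above hand work to one another can be sketched as follows. This is an illustration only: plain method calls stand in for KQML or FIPA-ACL messages, the mining technique is a trivial frequency count, and the class and method names and the `logins` data source are hypothetical.

```python
from collections import Counter


class ResourceAgent:
    """Holds the data sources and retrieves data sets on request."""

    def __init__(self, sources):
        self.sources = sources  # hypothetical: source name -> list of records

    def retrieve(self, source_name):
        return self.sources[source_name]


class MiningAgent:
    """Implements one mining technique; here, a trivial frequency count."""

    def mine(self, records):
        return Counter(records).most_common(1)[0]


class FacilitatorAgent:
    """Carries out the work plan by coordinating the other agents."""

    def __init__(self, resource_agent, mining_agent):
        self.resource_agent = resource_agent
        self.mining_agent = mining_agent

    def handle(self, request):
        records = self.resource_agent.retrieve(request["source"])
        return self.mining_agent.mine(records)


class InterfaceAgent:
    """Passes the user's requirements on and returns the mined result."""

    def __init__(self, facilitator):
        self.facilitator = facilitator
        self.history = []  # knowledge module: past user interactions

    def ask(self, source_name):
        request = {"task": "most_frequent", "source": source_name}
        self.history.append(request)
        return self.facilitator.handle(request)


resources = ResourceAgent({"logins": ["alice", "bob", "alice", "carol", "alice"]})
ui = InterfaceAgent(FacilitatorAgent(resources, MiningAgent()))
print(ui.ask("logins"))  # ('alice', 3)
```

In an agent platform each class would run as a separate agent and the calls would become asynchronous messages; the division of responsibilities stays the same.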

Distributed Learning

There are three ways learning can occur when data is distributed. These relate to when agents communicate with respect to the learning process:

The first approach gathers the data into one place. The use of distributed database management systems to provide a single set of data to an algorithm is an example of this (Simoudis, 1994). The problem with such an approach is that it does not make efficient use of the resources usually associated with distributed computer networks.


The second approach is for agents to exchange information whilst learning on local data. This is the approach taken by Sian (1991). No revision or integration is needed, as the agents are effectively working as a single, tightly coupled algorithm over the entire data. This restricts the agents to using learning algorithms that have been specially modified to work in this way. Thus the main disadvantage of this approach is that it does not allow the use of 'off-the-shelf' learning algorithms.

The third approach is for the agents to learn locally and then to share their results, which are then refined and integrated by other agents in light of their own data and knowledge. This model permits the use of standard algorithms, and also allows inter-operation between different algorithms. Brazdil & Torgo (1990), Svatek (1995) and Provost & Hennessy (1994) have all taken this approach. The main problem here is how to integrate the local results. We are adopting this third approach, as it provides distributed processing together with flexibility in deploying 'off-the-shelf' algorithms. The following section describes the relationship between theory revision, knowledge integration and incremental learning. We will then describe an empirical comparison of three different approaches to distributed learning, based on theory revision, knowledge integration, and a combination of theory revision and knowledge integration.

4.1 Theory Revision, Knowledge Integration & Incremental Learning

Theory refinement and knowledge integration are related techniques:

Theory refinement involves revising a theory with respect to new training examples. Knowledge integration involves combining two theories into a single unified theory. Although related, the two processes differ in certain respects. Theory revision generally involves the modification of individual rules; knowledge integration generally involves removing redundancy between different agents' sets of rules, then ordering the remaining rules.

Distributed learning can also be cast as an incremental learning problem. Mooney (1991) demonstrated that any theory revision system should be capable of incremental revision. This is achieved by feeding back a partial theory and then revising that theory with respect to a single example. In a distributed learning system, the problem is similar: an agent learns a local theory, then has it revised by another agent with respect to that agent's examples. This favours incremental learning algorithms, and algorithms with a theory revision component, for use in distributed learning.
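Knowledge integration as described above, removing redundancy between agents' rule sets and then ordering what remains, can be sketched as follows. The rule format, the ordering criterion (accuracy on a shared set of examples) and the data are assumptions made for illustration; the text does not specify them.

```python
def integrate(rule_sets, examples):
    """Merge rule sets: drop duplicate rules, then order the rest by accuracy."""
    unique = []
    for rules in rule_sets:
        for rule in rules:
            if rule not in unique:
                unique.append(rule)

    def accuracy(rule):
        attribute, value, label = rule
        covered = [ex for ex in examples if ex[attribute] == value]
        if not covered:
            return 0.0
        return sum(ex["label"] == label for ex in covered) / len(covered)

    return sorted(unique, key=accuracy, reverse=True)


# Hypothetical rules of the form (attribute, value, predicted label).
agent_1 = [("outlook", "sunny", "play"), ("windy", True, "stay")]
agent_2 = [("windy", True, "stay"), ("outlook", "rain", "stay")]

examples = [
    {"outlook": "sunny", "windy": False, "label": "play"},
    {"outlook": "sunny", "windy": True, "label": "stay"},
    {"outlook": "rain", "windy": True, "label": "stay"},
]

unified = integrate([agent_1, agent_2], examples)
print(unified)
```

The rule shared by both agents appears once in the unified theory, and the least accurate rule (sunny implies play, correct on only one of the two examples it covers) is ordered last.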

4.2 An Empirical Evaluation of Distributed Learning Techniques

In order to compare the efficiency of techniques for distributed learning, we have conducted a series of experiments based on FOCL. The 1984 Congressional Voting Records dataset (435 examples) was used. We used both the inductive and the theory revision components of FOCL. The simple knowledge integration component makes use of a simple accuracy measure: we count the number of correct positive and negative examples covered by each theory. Every theory is measured against all the training data, and the theory with the highest score is selected. We have evaluated four different distributed learning methods:

1 No Distribution. A conventional, non-distributed approach. All the available training examples are provided to FOCL.

2 Incremental Theory Revision. An agent learns a local theory from its share of the available training examples and then passes this theory to the next agent, and so on. Five agents were used. In each case the available training examples are split equally between the agents.

3 Simple Knowledge Integration. Each of five agents learns a local theory. The five resulting theories are tested against all the training examples; the best theory is selected and is then compared with the test set.

4 Theory Revision and Simple Knowledge Integration. As in the previous method, each of the five local agents learns a local theory. Every agent then receives all the other agents' theories. Each agent revises these four theories to fit its local data. As in the previous method, the best theory is found by testing against the entire training set.
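The simple knowledge integration method (method 3) can be sketched as follows. This is not FOCL: the local learner is a hypothetical stand-in that learns a single threshold, and the toy data replaces the voting records. Only the split between five agents and the accuracy-based selection follow the description above.

```python
def score(theory, examples):
    """Count the examples a theory classifies correctly (positive and negative)."""
    return sum(theory(x) == label for x, label in examples)


def split_equally(examples, n_agents):
    """Deal the training examples round-robin into n_agents local sets."""
    return [examples[i::n_agents] for i in range(n_agents)]


def learn_local_theory(local_examples):
    """Stand-in for FOCL: threshold at the smallest positive example seen locally."""
    positives = [x for x, label in local_examples if label]
    threshold = min(positives) if positives else float("inf")
    return lambda x: x >= threshold


training = [(x, x >= 10) for x in range(20)]  # hypothetical toy data
theories = [learn_local_theory(part) for part in split_equally(training, 5)]

# Every theory is scored against all the training data; the highest score wins.
scores = [score(theory, training) for theory in theories]
best = theories[scores.index(max(scores))]
print(scores)  # [20, 19, 18, 17, 16]
```

Each agent sees only four of the twenty examples, so the local theories differ in quality, and scoring them against the full training set is what identifies the best one.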

REFERENCES

[1] Agrawal, R., Imielinski, T., Swami, A.: Mining association rules between sets of items in large databases. Proc. ACM SIGMOD (1993) 207-216, Washington, D.C.

[2] Chen, M.S., Han, J., Yu, P.S. (1996) Data Mining: An Overview from a Database Perspective, IEEE Trans. Knowledge and Data Eng., 866-883.

[3] Agrawal, R., Imielinski, T., Swami, A. (1993) Database Mining: A Performance Perspective, IEEE Transactions on Knowledge and Data Engineering 5(6), 914-925.

[4] Agrawal, R., Srikant, R.: Fast Algorithms for Mining Association Rules. Proc. of the VLDB Conference (1994) 487-489, Santiago (Chile).

[5] Pei, M., Goodman, E.D., Punch, W.F. (2000) Feature extraction using Genetic Algorithm, Case Center for Computer-Aided Engineering and Manufacturing, Department of Computer Science.

[6] Russell, S.J., Norvig, P. (2008) Artificial Intelligence: A Modern Approach.

[7] Goldberg, D.E.: Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley (1989).

[8] Han, J., Kamber, M.: Data Mining: Concepts & Techniques, Morgan Kaufmann, 2000.

[9] Pujari, A.K.: Data Mining Techniques, Universities Press, 2001.


[10] Anandhavalli M, Suraj Kumar Sudhanshu, Ayush Kumar and Ghose M.K. (2009) Optimized Association Rule Mining using Genetic Algorithm, Advances in Information Mining, ISSN: 0975-3265, Volume 1, Issue 2, pp. 01-04.

[11] Hegland, M.: The Apriori Algorithm: A Tutorial, CMA, Australian National University, WSPC/Lecture Notes Series, 22-27, March 30, 2005.

[12] Pasquier, N., Bastide, Y., Taouil, R., Lakhal, L.: Discovering Frequent Closed Itemsets for Association Rules.

[13] Park, J.S., Chen, M.S., Yu, P.S.: An Effective Hash Based Algorithm for Mining Association Rules. Proc. of the ACM SIGMOD Intl Conf. on Management of Data (1995) San Jose, CA.

[14] Savasere, A., Omiecinski, E., Navathe, S.: An efficient algorithm for mining association rules in large databases. Proc. of the VLDB Conference, Zurich, Switzerland (1995).

[15] Srikant, R., Agrawal, R.: Mining Quantitative Association Rules in Large Relational Tables. Proc. of the ACM SIGMOD (1996) 1-12.

[16] GeneSight, BioDiscovery, El Segundo, CA 90245, www.biodiscovery.com/genesight.asp, viewed July 15, 2005.

[17] Ghosh, A. and Nath, B. (2004), Multi-objective rule mining using genetic algorithms, Information Sciences: An International Journal, 163(1-3), 123-133.

[18] Giráldez, R., Aguilar-Ruiz, J. and Riquelme, J. (2005), Knowledge-Based Fast Evaluation for Evolutionary Learning, IEEE Transactions on Systems, Man & Cybernetics: Part C, 35(2), 254-261.

[19] Guan, S. and Zhu, F. (2005), An Incremental Approach to Genetic-Algorithms-Based Classification, IEEE Transactions on Systems, Man & Cybernetics: Part B, 35(2), 227-239.

[20] Hardiman, G. (2003), Microarrays Methods and Applications: Nuts & Bolts, DNA Press, www.dnapress.net.

[21] Hoppe, C. (2005), Bioinformatics: Computers or clinicians for complex disease risk assessment? European Journal of Human Genetics, 13(8), 893-894.

[22] Krawetz, S.A. and Womble, D.D. (2003), Introduction to Bioinformatics, Humana Press, Totowa, NJ.

Li, R. and Wang, Z. (2004), Mining classification rules using rough sets and neural networks, European Journal of Operational Research, 157, 439-448.

AUTHOR

Addala Vasudeva Rao received the M.Tech. degree in Computer Science & Technology from Andhra University in 2008. He is an Associate Professor in the Department of Computer Science & Engineering, Dadi Institute of Engineering & Technology, Anakapalle. He is currently a Ph.D. scholar in the Computer Science & Engineering Department, JNTU Kakinada. His research interests are data mining and network security. He is a member of ACM, SIGKDD, CSTA and IAENG, and a life member of CSI.


- (IJCST-V3I6P17) :mr. Chandan B, Mr. Vinay Kumar KДокумент4 страницы(IJCST-V3I6P17) :mr. Chandan B, Mr. Vinay Kumar KEighthSenseGroupОценок пока нет

- reviewДокумент74 страницыreviewsethumadhavansasi2002Оценок пока нет

- Edge-Based Auditing Method For Data Security in Resource-ConstrainedДокумент10 страницEdge-Based Auditing Method For Data Security in Resource-Constrained侯建Оценок пока нет

- Securing Cloud Data Storage: S. P. Jaikar, M. V. NimbalkarДокумент7 страницSecuring Cloud Data Storage: S. P. Jaikar, M. V. NimbalkarInternational Organization of Scientific Research (IOSR)Оценок пока нет

- Data Sharing and Distributed Accountability in Cloud: Mrs. Suyoga Bansode, Prof. Pravin P. KalyankarДокумент7 страницData Sharing and Distributed Accountability in Cloud: Mrs. Suyoga Bansode, Prof. Pravin P. KalyankarIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalОценок пока нет

- Ntrusion D Etection S Ystem - Via F Uzzy Artmap in A Ddition With A Dvance S Emi S Upervised F Eature S ElectionДокумент15 страницNtrusion D Etection S Ystem - Via F Uzzy Artmap in A Ddition With A Dvance S Emi S Upervised F Eature S ElectionLewis TorresОценок пока нет

- Final Report ChaptersДокумент56 страницFinal Report ChaptersJai Deep PonnamОценок пока нет

- ProjectДокумент69 страницProjectChocko AyshuОценок пока нет

- Starring Role of Data Mining in Cloud Computing ParadigmДокумент4 страницыStarring Role of Data Mining in Cloud Computing ParadigmijsretОценок пока нет

- Securing Data With Blockchain and AIДокумент22 страницыSecuring Data With Blockchain and AIkrishna reddyОценок пока нет

- Database Security in Cloud Computing via Data FragmentationДокумент7 страницDatabase Security in Cloud Computing via Data FragmentationMadhuri M SОценок пока нет

- Cloud Computing Security and PrivacyДокумент57 страницCloud Computing Security and PrivacyMahesh PalaniОценок пока нет

- LSTM-based Memory Profiling For Predicting Data Attacks in Distributed Big Data SystemsДокумент9 страницLSTM-based Memory Profiling For Predicting Data Attacks in Distributed Big Data Systemsmindworkz proОценок пока нет

- Engineering Journal::A Fuzzy Intrusion Detection System For Cloud ComputingДокумент8 страницEngineering Journal::A Fuzzy Intrusion Detection System For Cloud ComputingEngineering JournalОценок пока нет

- Grid Computing: 1. AbstractДокумент10 страницGrid Computing: 1. AbstractARVINDОценок пока нет

- GRID COMPUTING INTRODUCTIONДокумент11 страницGRID COMPUTING INTRODUCTIONKrishna KumarОценок пока нет

- Csi Zero Trust Network Environment PillarДокумент12 страницCsi Zero Trust Network Environment PillarAzaelCarionОценок пока нет

- Sumathi Sangeetha2020 - Article - AGroup Key basedSensitiveAttriДокумент15 страницSumathi Sangeetha2020 - Article - AGroup Key basedSensitiveAttriultimatekp144Оценок пока нет

- Cloud Database Performance and SecurityДокумент9 страницCloud Database Performance and SecurityP SankarОценок пока нет

- Introduction to Grid Computing Characteristics, Models and ComponentsДокумент11 страницIntroduction to Grid Computing Characteristics, Models and ComponentsKrishna KumarОценок пока нет

- CPPPMUSABДокумент75 страницCPPPMUSABMusabОценок пока нет

- Robust Data Security For Cloud While Using Third Party AuditorДокумент5 страницRobust Data Security For Cloud While Using Third Party Auditoreditor_ijarcsseОценок пока нет

- Eh Unit 3Документ15 страницEh Unit 3tixove1190Оценок пока нет

- 1 ST Review DocumentДокумент37 страниц1 ST Review DocumentsumaniceОценок пока нет

- ID-DPDP Scheme For Storing Authorized Distributed Data in CloudДокумент36 страницID-DPDP Scheme For Storing Authorized Distributed Data in Cloudmailtoogiri_99636507Оценок пока нет

- Cloud ComputingДокумент6 страницCloud ComputingpandiОценок пока нет

- Compusoft, 2 (12), 396-399 PDFДокумент4 страницыCompusoft, 2 (12), 396-399 PDFIjact EditorОценок пока нет

- A Feature Selection Based On The Farmland Fertility Algorithm For Improved Intrusion Detection SystemsДокумент27 страницA Feature Selection Based On The Farmland Fertility Algorithm For Improved Intrusion Detection SystemsRashed ShakirОценок пока нет

- PGD/MSC Cyb - Jan20O - Cloud Security - Terminal Assignment Based AssessmentДокумент22 страницыPGD/MSC Cyb - Jan20O - Cloud Security - Terminal Assignment Based Assessmentmounika kОценок пока нет

- Clustering Cloud ComputingДокумент85 страницClustering Cloud ComputingSahil YadavОценок пока нет

- Improved Authentication Scheme For Dynamic Groups in The CloudДокумент3 страницыImproved Authentication Scheme For Dynamic Groups in The CloudseventhsensegroupОценок пока нет

- Data Security of Dynamic and Robust Role Based Access Control From Multiple Authorities in Cloud EnvironmentДокумент5 страницData Security of Dynamic and Robust Role Based Access Control From Multiple Authorities in Cloud EnvironmentIJRASETPublicationsОценок пока нет

- Seminar FogДокумент6 страницSeminar FogMr. KОценок пока нет

- Ijet V3i4p1Документ5 страницIjet V3i4p1International Journal of Engineering and TechniquesОценок пока нет

- A.V.C.Colege of Engineering Department of Computer ApplicationsДокумент35 страницA.V.C.Colege of Engineering Department of Computer Applicationsapi-19780718Оценок пока нет

- A Novel Datamining Based Approach For Remote Intrusion DetectionДокумент6 страницA Novel Datamining Based Approach For Remote Intrusion Detectionsurendiran123Оценок пока нет

- Big Data in Cloud Computing An OverviewДокумент7 страницBig Data in Cloud Computing An OverviewIJRASETPublicationsОценок пока нет

- Authorization and Access ControlДокумент6 страницAuthorization and Access Controlchrisbhandari73Оценок пока нет

- Network Data Management Model Based On Naà Ve Bayes Classiï Er and Deep Neural Networks in Heterogeneous WirelessДокумент11 страницNetwork Data Management Model Based On Naà Ve Bayes Classiï Er and Deep Neural Networks in Heterogeneous WirelessZekrifa Djabeur Mohamed SeifeddineОценок пока нет

- Distributed Data Sharing With Security in Cloud NetworkingДокумент11 страницDistributed Data Sharing With Security in Cloud NetworkingInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- THE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSДокумент7 страницTHE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Customer Satisfaction A Pillar of Total Quality ManagementДокумент9 страницCustomer Satisfaction A Pillar of Total Quality ManagementInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Detection of Malicious Web Contents Using Machine and Deep Learning ApproachesДокумент6 страницDetection of Malicious Web Contents Using Machine and Deep Learning ApproachesInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Analysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyДокумент6 страницAnalysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewДокумент9 страницAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Experimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterДокумент7 страницExperimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiДокумент12 страницStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- THE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSДокумент7 страницTHE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewДокумент9 страницAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Experimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterДокумент7 страницExperimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Detection of Malicious Web Contents Using Machine and Deep Learning ApproachesДокумент6 страницDetection of Malicious Web Contents Using Machine and Deep Learning ApproachesInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- The Mexican Innovation System: A System's Dynamics PerspectiveДокумент12 страницThe Mexican Innovation System: A System's Dynamics PerspectiveInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiДокумент12 страницStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Customer Satisfaction A Pillar of Total Quality ManagementДокумент9 страницCustomer Satisfaction A Pillar of Total Quality ManagementInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Analysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyДокумент6 страницAnalysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Staycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityДокумент10 страницStaycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Soil Stabilization of Road by Using Spent WashДокумент7 страницSoil Stabilization of Road by Using Spent WashInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Design and Detection of Fruits and Vegetable Spoiled Detetction SystemДокумент8 страницDesign and Detection of Fruits and Vegetable Spoiled Detetction SystemInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Advanced Load Flow Study and Stability Analysis of A Real Time SystemДокумент8 страницAdvanced Load Flow Study and Stability Analysis of A Real Time SystemInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Digital Record For Privacy and Security in Internet of ThingsДокумент10 страницA Digital Record For Privacy and Security in Internet of ThingsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- The Impact of Effective Communication To Enhance Management SkillsДокумент6 страницThe Impact of Effective Communication To Enhance Management SkillsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Impact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryДокумент8 страницImpact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Deep Learning Based Assistant For The Visually ImpairedДокумент11 страницA Deep Learning Based Assistant For The Visually ImpairedInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)Документ10 страницA Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)International Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Performance of Short Transmission Line Using Mathematical MethodДокумент8 страницPerformance of Short Transmission Line Using Mathematical MethodInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Challenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19Документ10 страницChallenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19International Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Synthetic Datasets For Myocardial Infarction Based On Actual DatasetsДокумент9 страницSynthetic Datasets For Myocardial Infarction Based On Actual DatasetsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Anchoring of Inflation Expectations and Monetary Policy Transparency in IndiaДокумент9 страницAnchoring of Inflation Expectations and Monetary Policy Transparency in IndiaInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Secured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpДокумент12 страницSecured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Predicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsДокумент10 страницPredicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Optical Packet SwitchingДокумент28 страницOptical Packet Switchingarjun c chandrathilОценок пока нет

- Principles of Failure AnalysisДокумент2 страницыPrinciples of Failure AnalysisLuis Kike Licona DíazОценок пока нет

- Print to PDF without novaPDF messageДокумент60 страницPrint to PDF without novaPDF messageAyush GuptaОценок пока нет

- Architectural Design of Bangalore Exhibition CentreДокумент24 страницыArchitectural Design of Bangalore Exhibition CentreVeereshОценок пока нет

- Caustic Soda Plant 27 000 Tpy 186Документ1 страницаCaustic Soda Plant 27 000 Tpy 186Riddhi SavaliyaОценок пока нет

- Manual Solis-RSD-1G - V1.0 9 0621 - 27 V6Документ9 страницManual Solis-RSD-1G - V1.0 9 0621 - 27 V6Long ComtechОценок пока нет

- Annotated BibliographyДокумент2 страницыAnnotated BibliographyWinston QuilatonОценок пока нет

- Presentation On Pre Bid MeetingДокумент23 страницыPresentation On Pre Bid MeetinghiveОценок пока нет

- Candlescript IntroductionДокумент26 страницCandlescript IntroductionLucasLavoisierОценок пока нет

- A Real-Time Face Recognition System Using Eigenfaces: Daniel GeorgescuДокумент12 страницA Real-Time Face Recognition System Using Eigenfaces: Daniel GeorgescuAlex SisuОценок пока нет

- HAZOPДокумент47 страницHAZOPMiftakhul Nurdianto100% (4)

- Cing - Common Interface For NMR Structure Generation: Results 1 - 10 of 978Документ3 страницыCing - Common Interface For NMR Structure Generation: Results 1 - 10 of 978Judap FlocОценок пока нет

- BC Zong ProjectДокумент25 страницBC Zong Projectshahbaz awanОценок пока нет

- ABB Motors for Hazardous AreasДокумент65 страницABB Motors for Hazardous Areaslaem269Оценок пока нет

- Toyota 1ZZ FE 3ZZ FE Engine Repair Manual RM1099E PDFДокумент141 страницаToyota 1ZZ FE 3ZZ FE Engine Repair Manual RM1099E PDFJhorwind Requena87% (15)

- UnlversДокумент55 страницUnlversCan AcarОценок пока нет

- As 61800.3-2005 Adjustable Speed Electrical Power Drive Systems EMC Requirements and Specific Test MethodsДокумент11 страницAs 61800.3-2005 Adjustable Speed Electrical Power Drive Systems EMC Requirements and Specific Test MethodsSAI Global - APAC0% (1)

- Kollmorgen PMA Series CatalogДокумент6 страницKollmorgen PMA Series CatalogElectromateОценок пока нет

- "The Land of Plenty?": Student's Name: Bárbara Milla. Semester: Third Semester. Professor: Miss Patricia TapiaДокумент11 страниц"The Land of Plenty?": Student's Name: Bárbara Milla. Semester: Third Semester. Professor: Miss Patricia TapiaAndrea José Milla OrtizОценок пока нет

- AART To Revitalize Aarhus Port District With TerracedДокумент4 страницыAART To Revitalize Aarhus Port District With TerracedaditamatyasОценок пока нет

- Becoming A Changemaker Introduction To Social InnovationДокумент14 страницBecoming A Changemaker Introduction To Social InnovationRabih SouaidОценок пока нет

- CamlabДокумент22 страницыCamlabviswamanojОценок пока нет

- Technological, Legal and Ethical ConsiderationsДокумент21 страницаTechnological, Legal and Ethical ConsiderationsAngela Dudley100% (2)

- Second Year Hall Ticket DownloadДокумент1 страницаSecond Year Hall Ticket DownloadpanditphotohouseОценок пока нет

- QMCS 16 - 2 Process CapabilityДокумент27 страницQMCS 16 - 2 Process CapabilityalegabipachecoОценок пока нет

- PP43C10-10.25-VS 43c10varДокумент2 страницыPP43C10-10.25-VS 43c10varJonas RachidОценок пока нет

- Muh Eng 50hzДокумент8 страницMuh Eng 50hzaleks canjugaОценок пока нет

- Catalog CabluriДокумент7 страницCatalog CabluriAdrian OprisanОценок пока нет

- Versidrain 150: Green RoofДокумент2 страницыVersidrain 150: Green RoofMichael Tiu TorresОценок пока нет

- Minimum drilling supplies stockДокумент3 страницыMinimum drilling supplies stockAsif KhanzadaОценок пока нет