
What Is Parallel Computing

Uploaded by: Laura Davis

Description: Parallel computing is the simultaneous use of multiple compute resources to solve a computational problem. A shared memory system is relatively easy to program, since all processors share a single view of data and communication between processors can be as fast as memory accesses to the same location. In a shared memory system, all processors can access the same memory, and each processor executes as if the other CPUs did not exist.

Original title: What is Parallel Computing (1).docx

Copyright: © All Rights Reserved


What is Parallel Computing?

Traditionally, software has been written for serial computation:

To be run on a single computer having a single Central Processing Unit (CPU);

A problem is broken into a discrete series of instructions.

Instructions are executed one after another.

Only one instruction may execute at any moment in time.

Parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

To be run using multiple CPUs

A problem is broken into discrete parts that can be solved concurrently

Each part is further broken down into a series of instructions

Instructions from each part execute simultaneously on different CPUs
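The serial and parallel approaches above can be contrasted in a short Python sketch. It is a minimal illustration, assuming a toy problem (summing the squares of 0..n-1) chosen only for this example; `split`, `partial_sum_of_squares` and the other names are hypothetical. It uses the standard `concurrent.futures.ThreadPoolExecutor`. In standard CPython the global interpreter lock keeps CPU-bound threads from executing truly simultaneously, so for a real speedup one would swap in `ProcessPoolExecutor`, which offers the same `map` interface.

```python
from concurrent.futures import ThreadPoolExecutor

def serial_sum_of_squares(n):
    # Serial: a single instruction stream visits every element in turn.
    total = 0
    for i in range(n):
        total += i * i
    return total

def partial_sum_of_squares(bounds):
    # One discrete part of the problem: sum i*i for i in [start, stop).
    start, stop = bounds
    return sum(i * i for i in range(start, stop))

def split(n, parts):
    # Break [0, n) into `parts` contiguous, nearly equal chunks.
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for p in range(parts):
        stop = start + base + (1 if p < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks

def parallel_sum_of_squares(n, workers=4):
    # Solve the chunks concurrently, then combine the partial results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(partial_sum_of_squares, split(n, workers))
    return sum(partials)

print(parallel_sum_of_squares(1000))  # 332833500
```

Both versions return the same value; only the way the work is divided and scheduled differs.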

What are the Resources for Parallel Computing?

The compute resources can include:

A single computer with multiple processors;

A single computer with one or more processors and some specialized compute resources (GPU, FPGA);

An arbitrary number of computers connected by a network;

A combination of both.

What are the applications of Parallel Computing?

weather and climate

chemical and nuclear reactions

biological, human genome

geological, seismic activity

mechanical devices - from prosthetics to spacecraft

electronic circuits

manufacturing processes

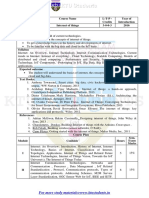

Flynn's classification

Shared Memory Multiprocessing

Shared memory systems form a major category of multiprocessors. In this category, all processors share a global memory.

Communication between tasks running on different processors is performed through writing to and reading from the global memory.

All interprocessor coordination and synchronization is also accomplished via the global memory.

The address space is identical in all processors.

The memory does not know which CPU is issuing a request.

Each CPU executes as if the other CPUs did not exist.

A shared memory system is relatively easy to program, since all processors share a single view of data and communication between processors can be as fast as memory accesses to the same location.
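A minimal sketch of communication and synchronization through shared memory, assuming Python threads stand in for processors: every thread sees the same global variable `counter` (one address space), and a `threading.Lock` that also lives in that memory coordinates their updates. The names `worker` and `run` are hypothetical.

```python
import threading

counter = 0                      # lives in the single, shared address space
counter_lock = threading.Lock()  # synchronization object, also in shared memory

def worker(increments):
    global counter
    for _ in range(increments):
        # The lock makes each read-modify-write atomic, so no thread's
        # update to the shared location is lost.
        with counter_lock:
            counter += 1

def run(num_threads=4, increments=10_000):
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(increments,))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter  # every thread's writes are visible through the same variable

print(run())  # 40000
```

No data is copied or sent between threads: results are communicated simply by writing to and reading from the shared variable.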

Two main problems need to be addressed when designing a shared memory system:

1. Performance degradation due to contention. Performance can degrade when multiple processors try to access the shared memory simultaneously. A typical design uses caches to reduce this contention.

2. Coherence problems. Having multiple copies of data spread throughout the caches can lead to a coherence problem. The copies in the caches are coherent if they all hold the same value. However, if one of the processors writes over the value of one of the copies, that copy becomes inconsistent because it no longer equals the value of the other copies.
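The coherence problem can be reproduced with a toy model of a global memory plus one private cache per processor (index 0 is P1, index 1 is P2). The text does not name a remedy; the sketch assumes a simple write-through, write-invalidate scheme as one common choice, and real protocols such as MESI track more states. `CachedSharedMemory` and `what_p2_sees` are hypothetical names.

```python
class CachedSharedMemory:
    """Toy model: one global memory plus a private cache per processor."""

    def __init__(self, num_cpus, invalidate):
        self.memory = {}
        self.caches = [{} for _ in range(num_cpus)]
        self.invalidate = invalidate   # True: write-invalidate; False: no coherence

    def read(self, cpu, addr):
        cache = self.caches[cpu]
        if addr not in cache:                  # miss: copy the value into this cache
            cache[addr] = self.memory.get(addr, 0)
        return cache[addr]                     # hit: served without touching memory

    def write(self, cpu, addr, value):
        self.caches[cpu][addr] = value
        self.memory[addr] = value              # write-through to global memory
        if self.invalidate:
            # Remove every other cached copy so its next read re-fetches the value.
            for other, cache in enumerate(self.caches):
                if other != cpu:
                    cache.pop(addr, None)

def what_p2_sees(invalidate):
    system = CachedSharedMemory(num_cpus=2, invalidate=invalidate)
    system.write(0, 0x10, 1)
    system.read(0, 0x10)
    system.read(1, 0x10)           # both caches now hold a copy equal to 1
    system.write(0, 0x10, 2)       # P1 writes over its copy
    return system.read(1, 0x10)    # P2 reads its own cached copy

print(what_p2_sees(invalidate=False))  # 1 (stale copy: incoherent)
print(what_p2_sees(invalidate=True))   # 2 (copy was invalidated and re-fetched)
```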

Scalability remains the main drawback of a shared memory system.

The simplest shared memory system consists of one memory module (M) that can be accessed from two processors, P1 and P2:

1. Requests arrive at the memory module through its two ports. An arbitration unit within the memory module passes requests through to a memory controller.

2. If the memory module is not busy and a single request arrives, then the arbitration unit passes that request to the memory controller and the request is satisfied.

3. The module is placed in the busy state while a request is being serviced. If a new request arrives while the memory is busy servicing a previous request, the memory module sends a wait signal, through the memory controller, to the processor making the new request.

4. In response, the requesting processor may hold its request on the line until the memory becomes free, or it may repeat its request some time later.

5. If the arbitration unit receives two requests, it selects one of them and passes it to the memory controller. Again, the denied request can either be held to be served next or be repeated some time later.
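The five steps can be traced with a small cycle-level simulation. This is a sketch under stated assumptions: every request takes a fixed `service_time` cycles, denied requests are held on the line (the first option in step 4), and the arbitration unit picks the oldest request, breaking ties in favour of the lower-numbered processor. None of these policies comes from the text; `simulate` is a hypothetical name.

```python
def simulate(requests, service_time=2):
    """Cycle-by-cycle model of a two-port memory module.

    requests: (arrival_cycle, processor) pairs, e.g. (0, 1) for P1 at cycle 0.
    Returns (processor, arrival_cycle, start_cycle) in the order served.
    """
    pending = sorted(requests)   # requests that have not arrived yet
    held = []                    # arrived, got a wait signal, still on the line
    busy_until = 0               # module is busy while cycle < busy_until
    served = []
    cycle = 0
    while pending or held:
        while pending and pending[0][0] <= cycle:
            held.append(pending.pop(0))
        if cycle >= busy_until and held:
            # Arbitration: oldest request first, lower processor number on a tie.
            held.sort()
            arrival, cpu = held.pop(0)
            served.append((cpu, arrival, cycle))
            busy_until = cycle + service_time   # module enters the busy state
        cycle += 1
    return served

print(simulate([(0, 1), (0, 2), (1, 1)]))
# [(1, 0, 0), (2, 0, 2), (1, 1, 4)]
```

P1 and P2 collide at cycle 0; P1 wins arbitration, P2 is held and served at cycle 2, and P1's second request, arriving while the module is busy, waits until cycle 4.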

In computer software, shared memory is either:

a method of inter-process communication (IPC), i.e. a way of exchanging data between programs running at the same time, in which one process creates an area in RAM that other processes can access; or

a method of conserving memory space by directing accesses to what would ordinarily be copies of a piece of data to a single instance instead, by using virtual memory mappings or with explicit support of the program in question. This is most often used for shared libraries.

Support on UNIX platforms

POSIX provides a standardized API for using shared memory, POSIX Shared Memory. This uses the function shm_open from sys/mman.h. [1]

POSIX interprocess communication (part of the POSIX:XSI Extension) includes the shared-memory functions shmat, shmctl, shmdt and shmget. UNIX System V provides an API for shared memory as well. This uses shmget from sys/shm.h. BSD systems provide "anonymous mapped memory" which can be used by several processes.
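Python's standard `multiprocessing.shared_memory` module wraps the POSIX API described above: on POSIX systems CPython creates a `SharedMemory` block with `shm_open` and maps it with `mmap`, and `unlink()` calls `shm_unlink`. The sketch below is a minimal illustration; to stay self-contained, a second handle in the same process stands in for the other process, which in practice would attach using only the segment's name. `share_bytes` is a hypothetical helper, and the payload must be non-empty because a new segment needs a positive size.

```python
from multiprocessing import shared_memory

def share_bytes(payload):
    # Create a new named segment; on POSIX this is backed by shm_open + mmap.
    owner = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        owner.buf[:len(payload)] = payload
        # Another process would attach with only the segment's name;
        # a second handle in this process stands in for it here.
        reader = shared_memory.SharedMemory(name=owner.name)
        try:
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()          # unmap this handle
    finally:
        owner.close()
        owner.unlink()              # remove the name (shm_unlink on POSIX)

print(share_bytes(b"hello"))  # b'hello'
```

The creator is responsible for `unlink()`; other attached processes only call `close()`.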

Uniform Memory Access (UMA)

In the UMA system, a shared memory is accessible by all processors through an interconnection network, in the same way a single processor accesses its memory. All processors have equal access time to any memory location. The interconnection network used in UMA can be a single bus, multiple buses, or a crossbar switch.

Figure: UMA organization, with several CPUs connected to a shared Memory through an Interconnection Network.

Because access to shared memory is balanced, these systems are also called SMP (symmetric multiprocessor) systems. Each processor has equal opportunity to read/write to memory, including equal access speed.

o A typical bus-structured SMP computer attempts to reduce contention for the bus by fetching instructions and data directly from each processor's individual cache as much as possible.

o In the extreme, bus contention might be reduced to zero once the cache memories are loaded from the global memory, because it is possible for all instructions and data to be completely contained within the caches.

This memory organization is the most popular among shared memory systems. Examples of this architecture are Sun Starfire servers, HP V series, Compaq AlphaServer GS, and Silicon Graphics Inc. multiprocessor servers.

Nonuniform Memory Access (NUMA)

In the NUMA system, each processor has part of the shared memory attached to it. The memory has a single address space, so any processor can access any memory location directly using its real address. However, the access time to a memory module depends on its distance from the processor, which results in nonuniform memory access times.

A processor can also have a built-in memory controller, as in Intel's QuickPath Interconnect (QPI) NUMA architecture.

Unlike in a distributed memory architecture, the memory of another processor is directly accessible, but the latency of accessing it is not the same as for local memory. Memory that is local to another processor is called remote memory or foreign memory.

A number of architectures are used to interconnect processors to memory modules in a NUMA system. Among these are the tree and the hierarchical bus networks.

Examples of NUMA architecture are BBN TC-2000, SGI Origin 3000, and Cray T3E.
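The effect of distance on access time can be made concrete with a small model. This is a sketch under loudly stated assumptions: the nodes form a hypothetical ring, each hop adds a fixed delay, and the 100 ns local and 50 ns per-hop figures are made-up illustrative numbers, not measurements of any machine listed above. Real systems report their own node distances (for example, `numactl --hardware` on Linux prints a node distance table). Both function names are hypothetical.

```python
def access_time_ns(cpu_node, mem_node, num_nodes, local_ns, per_hop_ns):
    # Hypothetical ring interconnect: distance is the fewest hops either way round.
    d = abs(cpu_node - mem_node)
    hops = min(d, num_nodes - d)
    return local_ns + hops * per_hop_ns

def average_access_time_ns(accesses, cpu_node, local_ns=100, per_hop_ns=50):
    # accesses[j] = number of accesses cpu_node makes to memory on node j.
    num_nodes = len(accesses)
    weighted = sum(count * access_time_ns(cpu_node, j, num_nodes, local_ns, per_hop_ns)
                   for j, count in enumerate(accesses))
    return weighted / sum(accesses)

# Mostly local accesses keep the average close to the local latency...
print(average_access_time_ns([90, 5, 0, 5], cpu_node=0))      # 105.0
# ...while spreading accesses evenly over all nodes costs much more.
print(average_access_time_ns([25, 25, 25, 25], cpu_node=0))   # 150.0
```

This is why placing data in the memory attached to the processor that uses it matters on NUMA machines.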

Distributed Memory Multiprocessing

Distributed memory refers to a multiple-processor computer system in which each processor has its own private memory.

Computational tasks can only operate on local data; if remote data is required, the computational task must communicate with one or more remote processors.

Each node typically has a processor and a memory, and some form of interconnection allows programs on different processors to interact with each other.

If a CPU needs data held in the local memory of another CPU, CPU-to-CPU communication takes place: the data is obtained from the other memory through the CPU that owns it.

The interconnection can be organized with point-to-point links, or separate hardware can provide a switching network.

A good organization keeps all the data a CPU needs in its local memory, so that the only communication through the interconnection network is messages between CPUs.
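The CPU-to-CPU request/reply exchange can be sketched with message passing. Real distributed memory machines typically use a library such as MPI (`MPI_Send`/`MPI_Recv`) over the interconnect; here, as an assumption for a self-contained example, threads stand in for nodes and `queue.Queue` inboxes stand in for the network. Node 0's dictionary is only ever read by node 0's own loop, so the other node must ask for data by message. All names are hypothetical.

```python
import queue
import threading

def owner_node(local_memory, inbox, network):
    # Runs on the node that owns `local_memory`. Only this function reads it;
    # other nodes obtain values by sending requests, which it answers.
    while True:
        msg = inbox.get()
        if msg[0] == "stop":
            return
        _, key, reply_to = msg
        network[reply_to].put(local_memory[key])

def remote_read(key, owner, me, network):
    # A CPU cannot load another node's memory directly: it sends a request
    # through the interconnect and waits for the owning CPU's reply.
    network[owner].put(("get", key, me))
    return network[me].get()

def demo():
    network = {0: queue.Queue(), 1: queue.Queue()}  # one inbox per node
    node0_memory = {"x": 42, "y": 7}                # private to node 0
    server = threading.Thread(target=owner_node,
                              args=(node0_memory, network[0], network))
    server.start()
    # The main thread plays node 1, which needs both values from node 0.
    total = remote_read("x", 0, 1, network) + remote_read("y", 0, 1, network)
    network[0].put(("stop",))
    server.join()
    return total

print(demo())  # 49
```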

The network topology is a key factor in determining how the multi-processor machine scales.

The key issue in programming distributed memory systems is how to distribute the data over the memories. Depending on the problem solved, the data can be distributed statically, or it can be moved through the nodes. Data can be moved on demand, or data can be pushed to the new nodes in advance.

Data can be kept statically in nodes if most computations happen locally and only changes on edges have to be reported to other nodes. An example of this is a simulation in which data is modeled using a grid, and each node simulates a small part of the larger grid. On every iteration, nodes inform all neighboring nodes of the new edge data.
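The grid example can be sketched for a one-dimensional grid split into equal blocks, one per node. Assumptions for illustration: the update rule (each interior point becomes the average of itself and its two neighbours, with fixed end points) is chosen only for this example, and the edge exchange is simulated by reading each neighbour's boundary value rather than by sending real messages. Checking against a serial run shows that exchanging only edge data is enough. All names are hypothetical.

```python
def step_serial(grid):
    # Reference: one processor updates the whole grid. End points stay fixed;
    # each interior point becomes the average of itself and its two neighbours.
    new = grid[:]
    for i in range(1, len(grid) - 1):
        new[i] = (grid[i - 1] + grid[i] + grid[i + 1]) / 3
    return new

def split(grid, nodes):
    # Static block distribution (assumes len(grid) is divisible by nodes).
    size = len(grid) // nodes
    return [grid[k * size:(k + 1) * size] for k in range(nodes)]

def step_distributed(blocks):
    nodes = len(blocks)
    # 1. Edge exchange: each node receives only the boundary value of each
    #    neighbour (None where there is no neighbour, i.e. the grid's ends).
    from_left = [blocks[k - 1][-1] if k > 0 else None for k in range(nodes)]
    from_right = [blocks[k + 1][0] if k < nodes - 1 else None for k in range(nodes)]
    # 2. Local update: each node touches only its own block plus two ghost cells.
    new_blocks = []
    for k, block in enumerate(blocks):
        padded = [from_left[k]] + block + [from_right[k]]
        new = block[:]
        for i in range(len(block)):
            left, mid, right = padded[i], padded[i + 1], padded[i + 2]
            if left is None or right is None:
                continue            # global end point: stays fixed
            new[i] = (left + mid + right) / 3
        new_blocks.append(new)
    return new_blocks

def run_distributed(grid, nodes, iterations):
    blocks = split(grid, nodes)
    for _ in range(iterations):
        blocks = step_distributed(blocks)
    return [x for block in blocks for x in block]   # gather for comparison

grid = [100.0] + [0.0] * 7
serial = grid
for _ in range(5):
    serial = step_serial(serial)
print(run_distributed(grid, nodes=4, iterations=5) == serial)  # True
```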

The advantage of (distributed) shared memory is that it offers a unified address space in which all data can be found.

The advantage of distributed memory is that it excludes race conditions on shared data, and that it forces the programmer to think about data distribution.

The advantage of distributed (shared) memory is that it is easier to design a machine that scales with the algorithm.

Distributed shared memory hides the mechanism of communication; it does not hide the latency of communication.

How is parallelism achieved in sequential machines?

1. Multiplicity of functional units

Use of multiple processing elements under one controller

Many of the ALU functions can be distributed to multiple specialized units

These functional units are independent of each other

Example:

The CDC 6600

10 functional execution units built into its CPU. The 6600 CP (central processor) included 10 parallel functional units, allowing multiple instructions to be worked on at the same time. Today this is known as a superscalar design, while at the time it was simply "unique". The system read and decoded instructions from memory as fast as possible, generally faster than they could be completed, and fed them to the units for processing. The units were:

floating point multiply (2 copies)

floating point divide

floating point add

"long" integer add

incrementers (2 copies; performed memory load/store)

shift

Boolean logic

branch

IBM 360/91

2 parallel execution units:

Fixed-point arithmetic

Floating-point arithmetic (2 functional units):

Floating-point add-subtract

Floating-point multiply-divide
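The benefit of multiple functional units can be estimated with a toy scheduling model. Assumptions, all made for this sketch: instructions are independent (the real CDC 6600 used a scoreboard to track dependencies, which is not modelled), at most one instruction issues per cycle in program order, a unit copy is busy for the whole latency of its instruction, and the latencies in the examples are hypothetical, not actual CDC 6600 timings. `completion_time` is a hypothetical name.

```python
def completion_time(program, units):
    """Cycles needed to finish a list of independent instructions.

    program: (unit_kind, latency) pairs in program order.
    units: unit_kind -> number of copies of that functional unit.
    """
    free_at = {kind: [0] * count for kind, count in units.items()}
    next_issue = 0   # in-order issue, at most one instruction per cycle
    finish = 0
    for kind, latency in program:
        copies = free_at[kind]
        i = min(range(len(copies)), key=copies.__getitem__)  # earliest-free copy
        start = max(next_issue, copies[i])   # stall until a copy is free
        copies[i] = start + latency          # that copy is busy until then
        next_issue = start + 1
        finish = max(finish, start + latency)
    return finish

multiplies = [("mul", 10)] * 4
print(completion_time(multiplies, {"mul": 1}))  # 40
print(completion_time(multiplies, {"mul": 2}))  # 21

mixed = [("mul", 10), ("add", 4), ("div", 29), ("mul", 10)]
print(completion_time(mixed, {"mul": 2, "add": 1, "div": 1}))  # 31
print(sum(latency for _, latency in mixed))  # 53 if one unit did everything in turn
```

Duplicating the multiply unit nearly halves the time for back-to-back multiplies, and separate add, divide and multiply units let different operations overlap.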

2. Parallelism and pipelining within the CPU

Parallelism is provided by building parallel adders in almost all ALUs.

Pipelining: each task is divided into subtasks (stages), and the stages of successive tasks are executed in parallel, overlapping in time.
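Pipeline timing follows a standard count, sketched here assuming a k-stage pipeline in which every stage takes one cycle and nothing stalls: the first of n tasks needs k cycles to fill the pipeline, after which one task completes per cycle, giving k + n - 1 cycles instead of n * k. The function names are hypothetical; `space_time` prints which task occupies each stage in each cycle.

```python
def pipeline_cycles(n_tasks, k_stages):
    # k cycles to fill the pipeline, then one task completes per cycle.
    return 0 if n_tasks == 0 else k_stages + n_tasks - 1

def sequential_cycles(n_tasks, k_stages):
    # Without pipelining each task occupies all k stages before the next starts.
    return n_tasks * k_stages

def speedup(n_tasks, k_stages):
    return sequential_cycles(n_tasks, k_stages) / pipeline_cycles(n_tasks, k_stages)

def space_time(n_tasks, k_stages):
    # Row s shows which task (1-based) is in stage s during each cycle; '.' = idle.
    rows = []
    for s in range(k_stages):
        row = []
        for c in range(pipeline_cycles(n_tasks, k_stages)):
            t = c - s                    # task t enters stage s at cycle t + s
            row.append(str(t + 1) if 0 <= t < n_tasks else ".")
        rows.append(" ".join(row))
    return rows

print(pipeline_cycles(100, 5), sequential_cycles(100, 5))  # 104 500
for row in space_time(3, 3):
    print(row)
# 1 2 3 . .
# . 1 2 3 .
# . . 1 2 3
```

As n grows, the speedup n * k / (k + n - 1) approaches the number of stages k.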
