Академический Документы

Профессиональный Документы

Культура Документы

Prog Assign 2 Decoder

Загружено:

elfzwolfИсходное описание:

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Prog Assign 2 Decoder

Загружено:

elfzwolfАвторское право:

Доступные форматы

A Greedy Decoder for Programming Assignment 2

- Some changes have been made to the decoder for PA2. If you've made changes to

these files, You might want to make a backup of your *.java files before you copy

the new ones over.

Files added/changed in /afs/ir/class/cs224n/pa2/

- java/src/cs224n/assignments/DecoderTester.java

- java/src/cs224n/decoder/Decoder.java

- java/src/cs224n/decoder/GreedyDecoder.java

- java/src/cs224n/decoder/Hypothesis.java

- java/src/cs224n/metrics/SegStats.java

- java/src/cs224n/util/Alignment.java

(The old starter code is in /afs/ir/class/cs224n/pa2/java.old in case you need them)

- Options added for "DecoderTest"

- "-lmweight" : a weight for the log(English LM prob) of a hypothesis. Default

= 0.6

- "-transweight" : a weight for the log(WordAlignment prob) of a hypothesis

(source, target and alignment). Default = 0.4

- "-lengthweight" : a weight for the length(e) of the final score. This is to

prevent the decoder to predict really short sentence in order to get higher

LM score. Default = 1.0

- Example usage:

- First, run

./run-dec

You'll notice that there are lots of "trans prob:-Infinity" in the output.

It's because the getAlignmentProb method in BaselineWordAligner

always return 0, and the "trans prob" in the output is the logarithmic of

getAlignmentProb.

- Once you have your Model 1 and Model 2 aligner implemented, try running:

./run-dec -wamodel cs224n.wordaligner.YourModel1WordAligner

./run-dec -wamodel cs224n.wordaligner.YourModel2WordAligner

- Output:

- At the end of each run, you'll get numbers like this:

WER: 0.6165982959924267

BLEU: 0.11349539343790904

--> log Ngram Scores: -0.5171279110178139/

-1.6198672087310861/-2.620151512608046/-3.3524072174927233

--> BP: 0.8619098752385267 (refLen=3169.0,hypLen=2759.0)

- Once your Word Aligner works reasonably on AER, you're required to compare the

performance your Model 1 and Model 2 on the decoder with the default weights and

the default LM. Other than that, there are more things you can try:

- Error analysis of the guessed English sentences you get

- Use different LMs you implement in PA1

- Tune the 3 weights to see what different results you get

- Run on different languages

- Look into the decoder and see if there's anything you can do to improve it

(speed, performance, anything you find interesting), etc.

Please note that some of the resources used in this assignment require

a Stanford Network Account and therefore may not be accessible.

- What numbers should we expect to get?

Here's the number I got with Model 1 and Model 2, using the

EmpiricalUnigramLanguageModel. The language listed are the different source

languages (translating into English). You might get different results based on your

implementation details. The BLEU scores here are quite low compared to

state-of-the-art systems. Keep in mind this is a simple greedy decoder, and the

original paper was using higher IBM models. The important point is to see if your

Model 2 performs better than Model 1, and see if you can find something interesting

on the error analysis.

BLEU french german spanish

Model 1 0.009614 0.006381 0.013086

Model 2 0.113495 0.06767 0.087263

Brevity Penalty french german spanish

Model 1 0.15646 0.128221 0.165661

Model 2 0.86191 0.557334 0.632112

WER french german spanish

Model 1 0.784475 0.796668 0.764453

Model 2 0.616598 0.649779 0.6125

Вам также может понравиться

- Projects With Microcontrollers And PICCОт EverandProjects With Microcontrollers And PICCРейтинг: 5 из 5 звезд5/5 (1)

- Interview Questions for IBM Mainframe DevelopersОт EverandInterview Questions for IBM Mainframe DevelopersРейтинг: 1 из 5 звезд1/5 (1)

- An Implementation of WHAM: The Weighted Histogram Analysis MethodДокумент18 страницAn Implementation of WHAM: The Weighted Histogram Analysis MethodWilliam AgudeloОценок пока нет

- Changes JumboДокумент295 страницChanges JumboPlumbi EncoreОценок пока нет

- Asic Lab3Документ11 страницAsic Lab3balukrish2018Оценок пока нет

- Lab 00Документ6 страницLab 00Abdul HadiОценок пока нет

- Tutorial JAGS PDFДокумент24 страницыTutorial JAGS PDFAlexNeumannОценок пока нет

- SPEED v.11.04: Release NotesДокумент19 страницSPEED v.11.04: Release NoteskhodabandelouОценок пока нет

- Applied Bayesian Modeling A Brief JAGS and R2jags TutorialДокумент22 страницыApplied Bayesian Modeling A Brief JAGS and R2jags TutorialAnonymous KsYY9NyОценок пока нет

- USB ThermometerДокумент40 страницUSB ThermometerTheodøros D' SpectrøømОценок пока нет

- Release Notes - READДокумент3 страницыRelease Notes - READHari KurniadiОценок пока нет

- FemtoRV32 Piplined Processor ReportДокумент25 страницFemtoRV32 Piplined Processor ReportRatnakarVarunОценок пока нет

- Creating A Matlab GUI For DC MOTORS: by Juan C. Cadena and Elizabeth Sandoval, EE444 Fall 2011Документ6 страницCreating A Matlab GUI For DC MOTORS: by Juan C. Cadena and Elizabeth Sandoval, EE444 Fall 2011Kalaichelvi KarthikeyanОценок пока нет

- Lab1 SpecДокумент6 страницLab1 Spec星期三的配音是對的Оценок пока нет

- Cs294a 2011 AssignmentДокумент5 страницCs294a 2011 AssignmentJoseОценок пока нет

- Ems-Pro Tuning GuideДокумент52 страницыEms-Pro Tuning GuideHenry CastellanosОценок пока нет

- How To Setup GRBL & Control CNC Machine With ArduinoДокумент19 страницHow To Setup GRBL & Control CNC Machine With ArduinoYasir Yasir KovanОценок пока нет

- E63 LM1-LM2Документ13 страницE63 LM1-LM2Bogdan CodoreanОценок пока нет

- ICC 201012 LG 06 FinishingДокумент14 страницICC 201012 LG 06 FinishingAparna TiwariОценок пока нет

- EEE347 CNG336 Lab1Документ6 страницEEE347 CNG336 Lab1Ilbey EvcilОценок пока нет

- Atmega TutorialДокумент18 страницAtmega TutorialEssel Jojo FlintОценок пока нет

- OpenDSS Custom ScriptingДокумент8 страницOpenDSS Custom ScriptingHassan Ali Al SsadiОценок пока нет

- Commands of PDДокумент18 страницCommands of PDRA NDYОценок пока нет

- V22LatheInstalling-Editing Post ProcessorsДокумент36 страницV22LatheInstalling-Editing Post ProcessorsCreo ParametricОценок пока нет

- Problem Project 1Документ4 страницыProblem Project 1Nandeesh GowdaОценок пока нет

- ExLlamaV2 - The Fastest Library To Run LLMsДокумент1 страницаExLlamaV2 - The Fastest Library To Run LLMstedsm55458Оценок пока нет

- NCS Dummy - Read MeДокумент10 страницNCS Dummy - Read MeJesper Meyland100% (1)

- Stinger GuideДокумент13 страницStinger GuidejrfjОценок пока нет

- Mentor Graphics Tutorial - From VHDL To Silicon Layout Design FlowДокумент7 страницMentor Graphics Tutorial - From VHDL To Silicon Layout Design FlowbipbulОценок пока нет

- Gmid RuidaДокумент7 страницGmid Ruida謝政谷Оценок пока нет

- ErgRace ManualДокумент71 страницаErgRace ManualLeo IeracitanoОценок пока нет

- STK500 User GuideДокумент16 страницSTK500 User GuideupeshalaОценок пока нет

- A Simple RS232 Guide: Posted On 12/12/2005 by Jon Ledbetter Updated 05/22/2007Документ30 страницA Simple RS232 Guide: Posted On 12/12/2005 by Jon Ledbetter Updated 05/22/2007zilooОценок пока нет

- Lab 5Документ8 страницLab 5robertoОценок пока нет

- Powertrain Tasks - : Sub-Juniors Practice TasksДокумент6 страницPowertrain Tasks - : Sub-Juniors Practice TasksAriana FrappeОценок пока нет

- SimpleScalar GuideДокумент4 страницыSimpleScalar GuideSaurabh ChaubeyОценок пока нет

- WINGRIDDS v5.15 Release NotesДокумент14 страницWINGRIDDS v5.15 Release NotesdardОценок пока нет

- mcb1700 LAB - Intro - ARM Cortex m3Документ22 страницыmcb1700 LAB - Intro - ARM Cortex m3RagulANОценок пока нет

- Lab 5 Motorola 68000Документ12 страницLab 5 Motorola 68000Ayozzet WefОценок пока нет

- Writing Code For Microcontrollers: A Rough Outline For Your ProgramДокумент14 страницWriting Code For Microcontrollers: A Rough Outline For Your ProgramSritam Kumar DashОценок пока нет

- MicroCap Info Fall2000Документ14 страницMicroCap Info Fall2000wayan.wandira8122Оценок пока нет

- Lab Experiment 3: Building A Complete ProjectДокумент4 страницыLab Experiment 3: Building A Complete ProjectArlene SalongaОценок пока нет

- hw03 SolДокумент8 страницhw03 SolmoienОценок пока нет

- Programación Módulo Confort Jetta MK4Документ8 страницProgramación Módulo Confort Jetta MK4Rulas Palacios0% (1)

- TWSДокумент81 страницаTWSjomadamourОценок пока нет

- Lab4 Testing DFTДокумент6 страницLab4 Testing DFTDeepuОценок пока нет

- Week2 - Assignment SolutionsДокумент16 страницWeek2 - Assignment Solutionsmobal62523Оценок пока нет

- Co-Simulation With SpectreRF Matlab Simulink TutorialДокумент34 страницыCo-Simulation With SpectreRF Matlab Simulink TutorialluminedinburghОценок пока нет

- Cadence Basic SimulationДокумент10 страницCadence Basic SimulationLarry FredsellОценок пока нет

- Frewq Part1Документ75 страницFrewq Part1Prasad ShuklaОценок пока нет

- DatasheetДокумент13 страницDatasheetAshok GudivadaОценок пока нет

- Alias - Wavefront OpenRenderДокумент67 страницAlias - Wavefront OpenRenderEdHienaОценок пока нет

- A GAL Programmer For LINUX PCsДокумент14 страницA GAL Programmer For LINUX PCsluciednaОценок пока нет

- Ho Moderation PDFДокумент5 страницHo Moderation PDFAhsan SheikhОценок пока нет

- User Guide For Auto-WEKA Version 2.2: Lars Kotthoff, Chris Thornton, Frank HutterДокумент15 страницUser Guide For Auto-WEKA Version 2.2: Lars Kotthoff, Chris Thornton, Frank HutterS M IbrahimОценок пока нет

- Atmega TutorialДокумент18 страницAtmega Tutorialsaran2012Оценок пока нет

- WINGRIDDS v5.12 Release NotesДокумент12 страницWINGRIDDS v5.12 Release NotesdardОценок пока нет

- Miniprog Users Guide and Example ProjectsДокумент22 страницыMiniprog Users Guide and Example ProjectsMarcos Flores de AquinoОценок пока нет

- Ns 2 LeachДокумент4 страницыNs 2 Leachsnc_90Оценок пока нет

- Analog Design and Simulation Using OrCAD Capture and PSpiceОт EverandAnalog Design and Simulation Using OrCAD Capture and PSpiceОценок пока нет

- Storage-Tanks Titik Berat PDFДокумент72 страницыStorage-Tanks Titik Berat PDF'viki Art100% (1)

- LP Pe 3Q - ShaynevillafuerteДокумент3 страницыLP Pe 3Q - ShaynevillafuerteMa. Shayne Rose VillafuerteОценок пока нет

- Random Questions From Various IIM InterviewsДокумент4 страницыRandom Questions From Various IIM InterviewsPrachi GuptaОценок пока нет

- The Limits of The Sectarian Narrative in YemenДокумент19 страницThe Limits of The Sectarian Narrative in Yemenهادي قبيسيОценок пока нет

- DeadlocksДокумент41 страницаDeadlocksSanjal DesaiОценок пока нет

- Effective TeachingДокумент94 страницыEffective Teaching小曼Оценок пока нет

- School Activity Calendar - Millsberry SchoolДокумент2 страницыSchool Activity Calendar - Millsberry SchoolSushil DahalОценок пока нет

- Do Now:: What Is Motion? Describe The Motion of An ObjectДокумент18 страницDo Now:: What Is Motion? Describe The Motion of An ObjectJO ANTHONY ALIGORAОценок пока нет

- Ferroelectric RamДокумент20 страницFerroelectric RamRijy LoranceОценок пока нет

- RESEARCHДокумент5 страницRESEARCHroseve cabalunaОценок пока нет

- Or HandoutДокумент190 страницOr Handoutyared haftu67% (6)

- Chrysler CDS System - Bulletin2Документ6 страницChrysler CDS System - Bulletin2Martin Boiani100% (1)

- MCC333E - Film Review - Myat Thu - 32813747Документ8 страницMCC333E - Film Review - Myat Thu - 32813747Myat ThuОценок пока нет

- 3DS 2017 GEO GEMS Brochure A4 WEBДокумент4 страницы3DS 2017 GEO GEMS Brochure A4 WEBlazarpaladinОценок пока нет

- Hackerearth Online Judge: Prepared By: Mohamed AymanДокумент21 страницаHackerearth Online Judge: Prepared By: Mohamed AymanPawan NaniОценок пока нет

- Debate Brochure PDFДокумент2 страницыDebate Brochure PDFShehzada FarhaanОценок пока нет

- Lab 1Документ51 страницаLab 1aliОценок пока нет

- STW 44 3 2 Model Course Leadership and Teamwork SecretariatДокумент49 страницSTW 44 3 2 Model Course Leadership and Teamwork Secretariatwaranchai83% (6)

- Chudamani Women Expecting ChangeДокумент55 страницChudamani Women Expecting ChangeMr AnantОценок пока нет

- Exemption in Experience & Turnover CriteriaДокумент4 страницыExemption in Experience & Turnover CriteriaVivek KumarОценок пока нет

- Gold Loan Application FormДокумент7 страницGold Loan Application FormMahesh PittalaОценок пока нет

- GR 9 Eng CodebДокумент6 страницGR 9 Eng CodebSharmista WalterОценок пока нет

- CBC DrivingДокумент74 страницыCBC DrivingElonah Jean ConstantinoОценок пока нет

- Periodic Table Lab AnswersДокумент3 страницыPeriodic Table Lab AnswersIdan LevyОценок пока нет

- Reinforced Concrete Design PDFДокумент1 страницаReinforced Concrete Design PDFhallelОценок пока нет

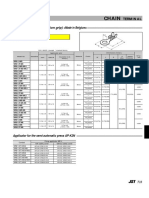

- Chain: SRB Series (With Insulation Grip)Документ1 страницаChain: SRB Series (With Insulation Grip)shankarОценок пока нет

- Ferrero A.M. Et Al. (2015) - Experimental Tests For The Application of An Analytical Model For Flexible Debris Flow Barrier Design PDFДокумент10 страницFerrero A.M. Et Al. (2015) - Experimental Tests For The Application of An Analytical Model For Flexible Debris Flow Barrier Design PDFEnrico MassaОценок пока нет

- PedagogicalДокумент94 страницыPedagogicalEdson MorenoОценок пока нет

- Structure of NABARD Grade AДокумент7 страницStructure of NABARD Grade ARojalin PaniОценок пока нет