
Elmer Open source finite element software for multiphysical problems

Elmer

Parallel computing with Elmer

Elmer Team

CSC IT Center for Science Ltd.

Elmer Course CSC, 9-10.1.2012

Outline

Parallel computing concepts

Parallel computing with Elmer

Preprocessing with ElmerGrid

Parallel solver ElmerSolver_mpi

Postprocessing with ElmerGrid and ElmerPost

Introductory example: Flow through pipe junction

Parallel computing concepts

Parallel computation means executing tasks concurrently.

A task encapsulates a sequential program and local data, and its interface to its environment.

Data belonging to other tasks is remote.

Data dependency means that the computation of one task requires data from another task in order to proceed.

Parallel computers

Shared memory

All cores can access the whole memory.

Distributed memory

All cores have their own memory.

Communication between cores is needed in order to access the memory of other cores.

Current supercomputers combine the distributed and shared memory approaches.

Parallel programming models

Message passing (MPI, for example OpenMPI)

Can be used both in distributed and shared memory computers.

Programming model allows good parallel scalability.

Programming is quite explicit.

Threads (pthreads, OpenMP)

Can be used only in shared memory computers.

Limited parallel scalability.

Simpler or less explicit programming.
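The difference between the two models can be sketched in Python. This is an emulation, not MPI or OpenMP code. Python threads always share memory, so the message-passing version simply restricts itself to explicit messages through a `queue.Queue`, which stands in for MPI send and receive. Both functions compute a sum of squares, a typical global reduction. The function names are hypothetical.

```python
import queue
import threading


def sum_squares_message_passing(data, nranks):
    """Each 'rank' gets a private copy of its slice and reports its partial
    result only by sending a message; rank 0 receives and reduces."""
    size = (len(data) + nranks - 1) // nranks
    mailbox = queue.Queue()  # stands in for MPI point-to-point messages

    def rank(r, local):
        mailbox.put((r, sum(x * x for x in local)))  # explicit "send"

    workers = [threading.Thread(target=rank, args=(r, list(data[r * size:(r + 1) * size])))
               for r in range(nranks)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    messages = [mailbox.get() for _ in workers]  # explicit "receive"
    return sum(partial for _, partial in sorted(messages))


def sum_squares_shared_memory(data, nthreads):
    """Threads read the shared input list in place and each writes its
    result into its own slot of a shared array."""
    size = (len(data) + nthreads - 1) // nthreads
    partial = [0] * nthreads  # shared by all threads

    def work(t):
        start, stop = t * size, min((t + 1) * size, len(data))
        partial[t] = sum(data[i] * data[i] for i in range(start, stop))

    threads = [threading.Thread(target=work, args=(t,)) for t in range(nthreads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(partial)


print(sum_squares_message_passing(list(range(10)), 3))
print(sum_squares_shared_memory(list(range(10)), 3))
```

The message-passing variant never touches another worker's data after the initial distribution. This explicitness is what allows MPI programs to scale across distributed memory.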

Execution model

A parallel program is launched as a set of independent, identical processes:

The same program code and instructions.

Can reside in different computation nodes.

Or even in different computers.

Current CPUs in your workstations

Six cores (AMD Opteron Shanghai)

Multi-threading

For example, OpenMP

High Performance Computing (HPC)

Message passing, for example OpenMPI

General remarks about parallel computing

Strong parallel scaling

The size of the problem remains constant.

Ideally, execution time decreases in proportion to the increase in the number of cores.

Weak parallel scaling

The size of the problem increases.

Ideally, execution time remains constant when the number of cores increases in proportion to the problem size.
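Both notions are usually quantified with speedup and parallel efficiency. Below is a small Python helper. It assumes T1 is the run time on one core and TN the run time on N cores. The timings in the usage lines are made-up numbers, not Elmer measurements.

```python
def strong_scaling(t1, tn, n):
    """Fixed problem size: speedup S = T1 / TN and efficiency E = S / N.
    Ideal strong scaling gives S = N and E = 1."""
    speedup = t1 / tn
    return speedup, speedup / n


def weak_scaling_efficiency(t1, tn):
    """Problem size grows in proportion to N: efficiency E = T1 / TN.
    Ideal weak scaling keeps TN = T1, i.e. E = 1."""
    return t1 / tn


# Hypothetical timings in seconds, for illustration only.
speedup, efficiency = strong_scaling(t1=1200.0, tn=100.0, n=16)
print(f"strong: speedup {speedup:.1f}, efficiency {efficiency:.2f}")  # 12.0, 0.75
print(f"weak: efficiency {weak_scaling_efficiency(50.0, 62.5):.2f}")  # 0.80
```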

Parallel computing with Elmer

Domain decomposition.

Additional pre-processing step called mesh partitioning.

Every domain is running its own ElmerSolver.

ElmerSolver_mpi parallel processes communicate via MPI.

Recombination of results to ElmerPost output.

Parallel computing with Elmer

Only selected linear algebra methods are available in parallel.

Iterative solvers (Krylov subspace methods) are basically the same as in serial.

Of the direct solvers, only MUMPS exists in parallel (uses MPI).

With the additional HYPRE package, some linear methods are available only in parallel.

Parallel computing with Elmer

Preconditioners required by iterative methods are not the same as in serial.

For example, ILUn.

This may deteriorate parallel performance.

The diagonal preconditioner is the same in serial and in parallel, and hence exhibits exactly the same behavior in both.
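The difference can be checked on a toy problem. The Python sketch below is an illustration, not Elmer's implementation. It uses a 1D Laplacian, and block Jacobi with exact local solves stands in for a local ILU factorization, because both ignore the matrix couplings that cross partition boundaries. The diagonal (Jacobi) preconditioner gives the same result for any number of partitions, while the block preconditioner changes with the partitioning. Exact fractions keep the comparison free of rounding effects.

```python
from fractions import Fraction


def laplacian_1d(n):
    """Tridiagonal 1D Laplacian (2 on the diagonal, -1 off it) as dense rows."""
    A = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        A[i][i] = Fraction(2)
        if i > 0:
            A[i][i - 1] = Fraction(-1)
        if i < n - 1:
            A[i][i + 1] = Fraction(-1)
    return A


def solve_dense(M, b):
    """Gaussian elimination without pivoting (adequate for this SPD matrix)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(M)]
    for k in range(n):
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= f * M[k][j]
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def partitions(n, nparts):
    """Contiguous index blocks; assumes nparts divides n."""
    assert n % nparts == 0
    size = n // nparts
    return [list(range(p * size, (p + 1) * size)) for p in range(nparts)]


def jacobi(A, r, nparts):
    """Diagonal preconditioner z = D^-1 r, applied partition by partition."""
    z = [None] * len(r)
    for part in partitions(len(r), nparts):
        for i in part:
            z[i] = r[i] / A[i][i]
    return z


def block_jacobi(A, r, nparts):
    """Solve with each partition's diagonal block only; couplings to other
    partitions are dropped, as in a purely local factorization."""
    z = [None] * len(r)
    for part in partitions(len(r), nparts):
        block = [[A[i][j] for j in part] for i in part]
        for i, zi in zip(part, solve_dense(block, [r[i] for i in part])):
            z[i] = zi
    return z


A = laplacian_1d(8)
r = [Fraction(1)] * 8
print(jacobi(A, r, 1) == jacobi(A, r, 4))              # partition-independent
print(block_jacobi(A, r, 1) == block_jacobi(A, r, 2))  # depends on partitions
```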

Parallel computing with Elmer

Grand challenges

Preprocessing

Development of automated meshing algorithms.

Postprocessing

Parallel postprocessing

ParaView

Parallel workflow

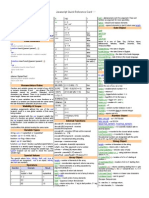

Scaling of wall clock time with dofs in the cavity lid case using GMRES+ILU0. Simulation by Juha Ruokolainen, CSC, and visualization by Matti Gröhn, CSC.

Examples of parallel scaling of Elmer

[Plot: Time (s) versus Cores, with cores from 0 to 144 and time from 0 to 4000 s]

Serial mesh structure of Elmer

Header file contains general dimensions: mesh.header.

Node file contains coordinates and ownership of nodes: mesh.nodes.

Elements file contains compositions of bulk elements and their ownerships (bodies): mesh.elements.

Boundary file contains compositions of boundary elements, their ownerships (boundaries) and dependencies (parents): mesh.boundary.
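The first two files can be illustrated with a small Python reader. It assumes the whitespace-separated layout described in the ElmerGrid documentation. In that layout, mesh.header starts with the node, element and boundary-element counts, followed by the number of element types and one "type-code count" line per type. Each mesh.nodes line is "node-id partition x y z", with partition -1 in a serial mesh. Treat this as a sketch and check it against the manual for your Elmer version. The function names are hypothetical.

```python
def parse_header(text):
    """Read mesh.header (assumed layout, see above)."""
    rows = [line.split() for line in text.strip().splitlines()]
    nodes, elements, boundary = (int(v) for v in rows[0][:3])
    ntypes = int(rows[1][0])
    types = {int(code): int(count) for code, count in rows[2:2 + ntypes]}
    return {"nodes": nodes, "elements": elements, "boundary": boundary, "types": types}


def parse_nodes(text):
    """Read mesh.nodes into {node_id: (x, y, z)}; the partition column is skipped."""
    coords = {}
    for line in text.strip().splitlines():
        node_id, _partition, x, y, z = line.split()
        coords[int(node_id)] = (float(x), float(y), float(z))
    return coords


# One 2D quadrilateral (type 404) with four line elements (type 202) on its
# boundary; hand-written example data, not actual ElmerGrid output.
header = "4 1 4\n2\n404 1\n202 4\n"
nodes = "1 -1 0.0 0.0 0.0\n2 -1 1.0 0.0 0.0\n3 -1 1.0 1.0 0.0\n4 -1 0.0 1.0 0.0\n"
print(parse_header(header))
print(parse_nodes(nodes))
```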

Parallel preprocessing with Elmer

Parallel mesh structure: partitioning.n/

Header files: part.1.header, part.2.header, ..., part.n.header

Nodes: part.1.nodes, part.2.nodes, ..., part.n.nodes

Elements: part.1.elements, part.2.elements, ..., part.n.elements

Boundary elements: part.1.boundary, part.2.boundary, ..., part.n.boundary

Shared nodes between partitions: part.1.shared, part.2.shared, ..., part.n.shared
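Before a parallel run, it can be useful to check that all of these files exist. Below is a minimal Python sketch that builds the expected names from the pattern above. The helper names are hypothetical, and the check covers only the five file types listed. The `exists` argument can be swapped out so the function can be tried without a real mesh.

```python
from pathlib import Path

SUFFIXES = ("header", "nodes", "elements", "boundary", "shared")


def expected_partition_files(mesh_dir, n):
    """part.1.<suffix> ... part.n.<suffix> inside <mesh_dir>/partitioning.<n>/."""
    part_dir = Path(mesh_dir) / f"partitioning.{n}"
    return [part_dir / f"part.{i}.{suffix}"
            for i in range(1, n + 1) for suffix in SUFFIXES]


def missing_partition_files(mesh_dir, n, exists=Path.is_file):
    """Return the expected files that are not present."""
    return [path for path in expected_partition_files(mesh_dir, n) if not exists(path)]


# After partitioning the mesh directory "flow" into 4 parts, this is expected
# to print an empty list.
print(missing_partition_files("flow", 4))
```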

Parallel preprocessing with Elmer

The best way to partition

Serial mesh → ElmerGrid → parallel mesh

General syntax

ElmerGrid 2 2 existing.mesh [partoption]

Two principal partitioning techniques

Along Cartesian axes (simple geometries or topologies)

METIS library

Parallel preprocessing with Elmer

Ideal mesh partitioning

Minimizes communication between computation nodes.

Minimizes the relative number of mesh elements at the interfaces between computation nodes.

Equal load between computation nodes.
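Two of these goals can be measured for a given partitioning. Communication roughly corresponds to the number of neighbouring element pairs split between partitions (the edge cut). Load balance corresponds to the largest partition size relative to the average. Below is a Python sketch with hypothetical names, applied to a chain of 8 elements. METIS's graph routines aim for a small edge cut under a balance constraint, but their internal measures are not reproduced here.

```python
from collections import Counter


def partition_quality(assignment, neighbours, nparts):
    """assignment: element id -> partition id; neighbours: (e1, e2) pairs of
    adjacent elements. Returns (edge_cut, imbalance), where imbalance is the
    largest partition load divided by the average load (1.0 is perfect)."""
    edge_cut = sum(1 for a, b in neighbours if assignment[a] != assignment[b])
    loads = Counter(assignment.values())
    average = len(assignment) / nparts
    return edge_cut, max(loads.values()) / average


chain = [(i, i + 1) for i in range(7)]               # 8 elements in a row
contiguous = {i: i // 4 for i in range(8)}           # 0000 1111
alternating = {i: i % 2 for i in range(8)}           # 0101 0101
lopsided = {i: 0 if i < 6 else 1 for i in range(8)}  # 0000 0011

print(partition_quality(contiguous, chain, 2))   # (1, 1.0)
print(partition_quality(alternating, chain, 2))  # (7, 1.0)
print(partition_quality(lopsided, chain, 2))     # (1, 1.5)
```

Only the contiguous split is ideal on both counts. The alternating split is balanced but communicates across every element face.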

Directional decomposition

ElmerGrid 2 2 dir -partition Nx Ny Nz F

-partition 2 2 1 0: element-wise

-partition 2 2 1 1: nodal
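The principle of directional decomposition can be sketched in Python. Sort the elements along x and cut them into Nx slabs of equal element count, then split each slab along y and each column along z. This is a simplified, hypothetical illustration, not ElmerGrid's algorithm. In particular, the partition numbering and the element-wise/nodal switch F are not modelled.

```python
def directional_partition(centroids, nx, ny, nz):
    """Map element index -> partition id for an nx * ny * nz decomposition.

    centroids: list of (x, y, z) element centre points.
    """
    def split(ids, axis, nparts):
        # Sort along one coordinate axis and cut into nparts equal-count groups.
        ids = sorted(ids, key=lambda e: centroids[e][axis])
        size, extra = divmod(len(ids), nparts)
        groups, start = [], 0
        for p in range(nparts):
            stop = start + size + (1 if p < extra else 0)
            groups.append(ids[start:stop])
            start = stop
        return groups

    parts = {}
    for ix, slab in enumerate(split(range(len(centroids)), 0, nx)):
        for iy, column in enumerate(split(slab, 1, ny)):
            for iz, block in enumerate(split(column, 2, nz)):
                for e in block:
                    parts[e] = ix + nx * (iy + ny * iz)  # one possible numbering
    return parts


# A 4 x 4 grid of unit squares split 2 x 2 x 1, as with "-partition 2 2 1 ...".
centroids = [(x + 0.5, y + 0.5, 0.0) for y in range(4) for x in range(4)]
parts = directional_partition(centroids, 2, 2, 1)
print([parts[e] for e in range(16)])
```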

Parallel computing with Elmer

Directional decomposition

ElmerGrid 2 2 dir -partition Nx Ny Nz F -partorder nx ny nz

Defines the ordering direction (components of a vector).

Parallel computing with Elmer

Using METIS library

ElmerGrid 2 2 dir -metis N Method

-metis 4 0: PartMeshNodal

-metis 4 1: PartMeshDual

Parallel computing with Elmer

METIS

ElmerGrid 2 2 dir -metis N Method

-metis 4 2: PartGraphRecursive

-metis 4 3: PartGraphKway

Parallel computing with Elmer

METIS

ElmerGrid 2 2 dir -metis N Method

-metis 4 4: PartGraphPKway
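When partitioning is scripted, the method numbers can be kept in one place. The hypothetical Python helper below only builds the argument list for ElmerGrid. Its code-to-routine table restates the slides above.

```python
METIS_METHODS = {
    0: "PartMeshNodal",
    1: "PartMeshDual",
    2: "PartGraphRecursive",
    3: "PartGraphKway",
    4: "PartGraphPKway",
}


def elmergrid_metis_cmd(mesh_dir, nparts, method):
    """Arguments for 'ElmerGrid 2 2 <mesh_dir> -metis <nparts> <method>'."""
    if method not in METIS_METHODS:
        raise ValueError(f"unknown METIS method {method}, expected one of {sorted(METIS_METHODS)}")
    return ["ElmerGrid", "2", "2", str(mesh_dir), "-metis", str(nparts), str(method)]


cmd = elmergrid_metis_cmd("flow", 4, 2)
print(" ".join(cmd), "#", METIS_METHODS[2])
```

If ElmerGrid is on the PATH, `subprocess.run(cmd, check=True)` would then run the partitioning.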

Parallel computing with Elmer

Halo elements

ElmerGrid 2 2 dir -metis N Method -halo

Necessary if using the discontinuous Galerkin method.

Puts a "ghost cell" on each side of the partition boundary.
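The idea of one layer of ghost cells can be sketched in Python. For a given partition, collect the elements owned by other partitions that touch it. Treating "touch" as "share at least one node" is an assumption of this sketch. ElmerGrid's exact halo definition is not reproduced here, and the function name is hypothetical.

```python
def halo_elements(elements, assignment, part):
    """Elements owned by other partitions that touch partition `part`.

    elements: element id -> tuple of node ids
    assignment: element id -> owning partition
    Touching is taken here as sharing at least one node (an assumption).
    """
    own_nodes = {n for e, nodes in elements.items() if assignment[e] == part for n in nodes}
    return sorted(e for e, nodes in elements.items()
                  if assignment[e] != part and own_nodes.intersection(nodes))


# Four line elements in a row, split into two partitions.
elements = {0: (0, 1), 1: (1, 2), 2: (2, 3), 3: (3, 4)}
assignment = {0: 0, 1: 0, 2: 1, 3: 1}
print(halo_elements(elements, assignment, 0))  # [2]
print(halo_elements(elements, assignment, 1))  # [1]
```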

Parallel computing with Elmer

More parallel options in ElmerGrid

-indirect: creates indirect connections.

-periodic Fx Fy Fz: declares the periodic coordinate directions for parallel meshes.

-partoptim: applies aggressive optimization to node sharing.

-partbw: minimizes the bandwidth of partition-partition couplings.

Parallel computing with Elmer

mpirun -np N ElmerSolver_mpi

The command might differ on other platforms.

Might need a hostfile.

Needs an N-partitioned mesh.

Needs ELMERSOLVER_STARTINFO, which contains the name of the command file.

Optional libraries

HYPRE

MUMPS
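These preparation steps can be scripted. Below is a minimal Python sketch with hypothetical helper names. It writes ELMERSOLVER_STARTINFO containing the command file name, checks for the partitioning directory and builds the generic mpirun line. On machines with a batch system the launch command will differ, for example `srun`.

```python
from pathlib import Path


def startinfo_text(sif_name):
    """Content of ELMERSOLVER_STARTINFO: the name of the command (sif) file."""
    return sif_name + "\n"


def write_startinfo(case_dir, sif_name):
    """Write ELMERSOLVER_STARTINFO into the directory the solver is started from."""
    path = Path(case_dir) / "ELMERSOLVER_STARTINFO"
    path.write_text(startinfo_text(sif_name))
    return path


def has_partitioning(mesh_dir, nprocs):
    """An N-process run needs the mesh partitioned into N parts (partitioning.N)."""
    return (Path(mesh_dir) / f"partitioning.{nprocs}").is_dir()


def launch_cmd(nprocs):
    """Generic MPI launch line; other platforms may need a different launcher."""
    return ["mpirun", "-np", str(nprocs), "ElmerSolver_mpi"]


# Typical use (not executed here):
#   if has_partitioning("flow", 4):
#       write_startinfo(".", "parallel_flow.sif")
#       subprocess.run(launch_cmd(4), check=True)
print(" ".join(launch_cmd(4)))
```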

Parallel version of ElmerSolver

Different behaviour of the ILU preconditioner:

The matrix parts at partition boundaries are not available.

Sometimes works. If not, use HYPRE:

Linear System Use Hypre = Logical True

Parallel version of ElmerSolver

Alternative preconditioners in HYPRE

ParaSails (sparse approximate inverse preconditioner)

Linear System Preconditioning = String "ParaSails"

BoomerAMG (Algebraic multigrid)

Linear System Preconditioning = String "BoomerAMG"

Parallel version of ElmerSolver

Alternative solvers

BoomerAMG (Algebraic Multigrid)

Linear System Solver = "Iterative"

Linear System Iterative Method = "BoomerAMG"

Multifrontal parallel direct solver (MUMPS)

Linear System Solver = "Direct"

Linear System Direct Method = Mumps

Parallel version of ElmerSolver

Elmer writes results partition-wise:

name.0.ep, name.1.ep, ..., name.(n-1).ep

ElmerGrid fuses the result files into one ElmerPost file:

ElmerGrid 15 3 name

Fuses all time steps (also non-existing ones) into a single file called name.ep (overwritten if it exists).

Special option for only partial fuse:

-saveinterval start end step

The first, last and the (time)step of fusing parallel data.
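In a script, the partition file names and the fuse command can be generated as shown below. The helper is hypothetical. Placing -saveinterval after the case name is an assumption about the argument order, so check it against ElmerGrid's own help output.

```python
def partition_result_files(name, n):
    """ElmerPost files written by an n-process run: name.0.ep ... name.(n-1).ep."""
    return [f"{name}.{i}.ep" for i in range(n)]


def fuse_cmd(name, interval=None):
    """Arguments for 'ElmerGrid 15 3 name', which fuses the partition files
    into name.ep; interval=(start, end, step) adds -saveinterval."""
    cmd = ["ElmerGrid", "15", "3", name]
    if interval is not None:
        start, end, step = interval
        cmd += ["-saveinterval", str(start), str(end), str(step)]
    return cmd


print(partition_result_files("parallel_flow", 4))
print(" ".join(fuse_cmd("parallel_flow")))
print(" ".join(fuse_cmd("parallel_flow", (1, 10, 2))))
```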

Parallel postprocessing

Introductory example: Flow through a pipe junction

Flow through a pipe junction.

Boundary conditions:

1. v_in = 1 cm/s

2. None

3. v_in = 1 cm/s

4. and 5. no-slip (u_i = 0 m/s) on walls.

Description of the problem

[Figure: pipe junction geometry with boundaries 1 to 5 marked]

Partition of mesh with ElmerGrid.

ElmerGrid 2 2 mesh -out part_mesh -scale 0.01 0.01 0.01 -metis 4 2

Scales the problem from cm to m.

Creates a mesh with 4 partitions by using the PartGraphRecursive option of the METIS library.

Preprocessing

Header
  Mesh DB "." "flow"
End

Simulation
  Coordinate System = "Cartesian 3D"
  Simulation Type = "Steady"
  Output Intervals = Integer 1
  Post File = File "parallel_flow.ep"
  Output File = File "parallel_flow.result"
  max output level = Integer 4
End

Solver input file

Solver 1
  Equation = "Navier-Stokes"
  Optimize Bandwidth = Logical True
  Linear System Solver = Iterative
  Linear System Direct Solver = Mumps
  Stabilization Method = Stabilized
  Nonlinear System Convergence Tolerance = Real 1.0E-03
  Nonlinear System Max Iterations = Integer 30
  Nonlinear System Newton After Iterations = Integer 1
  Nonlinear System Newton After Tolerance = Real 1.0E-03
End

Solver input file

Body 1
  Name = "fluid"
  Equation = 1
  Material = 1
  Body Force = 1
  Initial Condition = 1
End

Equation 1
  Active Solvers(1) = 1
  Convection = Computed
End

Solver input file

Initial Condition 1
  Velocity 1 = Real 0.0
  Velocity 2 = Real 0.0
  Velocity 3 = Real 0.0
  Pressure = Real 0.0
End

Body Force 1
  Flow BodyForce 1 = Real 0.0
  Flow BodyForce 2 = Real 0.0
  Flow BodyForce 3 = Real 0.0
End

Solver input file

Material 1
  Density = Real 1000.0
  Viscosity = Real 1.0
End

Boundary Condition 1
  Name = "largeinflow"
  Target Boundaries = 1
  Normal-Tangential Velocity = True
  Velocity 1 = Real -0.01
  Velocity 2 = Real 0.0
  Velocity 3 = Real 0.0
End

Solver input file

Outward pointing normal: with Normal-Tangential Velocity = True, Velocity 1 is the normal component, so an inflow velocity is negative.

Boundary Condition 2
  Name = "largeoutflow"
  Target Boundaries = 2
  Normal-Tangential Velocity = True
  Velocity 2 = Real 0.0
  Velocity 3 = Real 0.0
End

Boundary Condition 3
  Name = "smallinflow"
  Target Boundaries = 3
  Normal-Tangential Velocity = True
  Velocity 1 = Real -0.01
  Velocity 2 = Real 0.0
  Velocity 3 = Real 0.0
End

Solver input file

Boundary Condition 4
  Name = "pipewalls"
  Target Boundaries(2) = 4 5
  Normal-Tangential Velocity = False
  Velocity 1 = Real 0.0
  Velocity 2 = Real 0.0
  Velocity 3 = Real 0.0
End

Solver input file

Save the sif-file with the name parallel_flow.sif.

Write the name of the sif-file into ELMERSOLVER_STARTINFO.

Commands for vuori.csc.fi:

Parallel run

module switch PrgEnv-pgi PrgEnv-gnu

module load elmer/latest

salloc -n 4 --ntasks-per-node=4 --mem-per-cpu=1000 -t 00:10:00 -p interactive

srun ElmerSolver_mpi

On a usual MPI platform:

mpirun -np 4 ElmerSolver_mpi

Change into the mesh directory.

Run ElmerGrid to combine results:

ElmerGrid 15 3 parallel_flow

Launch ElmerPost.

Load parallel_flow.ep.

Combining the results

Adding heat transfer

Solver 2
  Exec Solver = Always
  Equation = "Heat Equation"
  Procedure = "HeatSolve" "HeatSolver"
  Steady State Convergence Tolerance = Real 3.0E-03
  Nonlinear System Max Iterations = Integer 1
  Nonlinear System Convergence Tolerance = Real 1.0e-6
  Nonlinear System Newton After Iterations = Integer 1
  Nonlinear System Newton After Tolerance = Real 1.0e-2
  Linear System Solver = Iterative
  Linear System Max Iterations = Integer 500
  Linear System Convergence Tolerance = Real 1.0e-6
  Stabilization Method = Stabilized
  Linear System Use Hypre = Logical True
  Linear System Iterative Method = BoomerAMG
  BoomerAMG Max Levels = Integer 25
  BoomerAMG Coarsen Type = Integer 0
  BoomerAMG Num Functions = Integer 1
  BoomerAMG Relax Type = Integer 3
  BoomerAMG Num Sweeps = Integer 1
  BoomerAMG Interpolation Type = Integer 0
  BoomerAMG Smooth Type = Integer 6
  BoomerAMG Cycle Type = Integer 1
End

Adding heat transfer

Material 1
  ...
  Heat Capacity = Real 1000.0
  Heat Conductivity = Real 1.0
End

Boundary Condition 1
  ...
  Temperature = Real 10.0
End

Boundary Condition 3
  ...

Temperature = 1eal @2.2

End

ParaView is an open-source, multi-platform data analysis and visualization application by Kitware.

Distributed under ParaView License Version 1.2

Elmer's ResultOutputSolve module provides output as VTK UnstructuredGrid files:

vtu

pvtu (parallel)

Postprocessing with ParaView

Output for ParaView

Solver 3
  Equation = "Result Output"
  Procedure = "ResultOutputSolve" "ResultOutputSolver"
  Exec Solver = After Saving
  Output File Name = String "flowtemp"
  Output Format = Vtu
  Show Variables = Logical True
  Scalar Field 1 = Pressure
  Scalar Field 2 = Temperature
  Vector Field 1 = Velocity
End

ParaView
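In parallel, each partition writes its own .vtu file, and the .pvtu file only lists those pieces. The short Python sketch below uses the standard library XML parser to report which piece files a .pvtu document refers to. The file names in the example are hypothetical, not the names ResultOutputSolve actually produces.

```python
import xml.etree.ElementTree as ET


def pvtu_pieces(pvtu_text):
    """Return the Source attribute of every <Piece> in a .pvtu document."""
    root = ET.fromstring(pvtu_text)
    return [piece.get("Source") for piece in root.iter("Piece")]


example = """<VTKFile type="PUnstructuredGrid" version="0.1">
  <PUnstructuredGrid GhostLevel="0">
    <Piece Source="flowtemp_part1.vtu"/>
    <Piece Source="flowtemp_part2.vtu"/>
  </PUnstructuredGrid>
</VTKFile>"""

print(pvtu_pieces(example))
```

Opening the .pvtu file in ParaView loads all listed pieces as one dataset. Each piece file must therefore sit where the Source path points.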
