1 Hassoun Chap3 Perceptron

Fundamentals of Artificial Neural Networks

Mohamad H. Hassoun

3 Learning Rules

One of the most significant attributes of a neural network is its ability to learn by

interacting with its environment or with an information source. Learning in a neural

network is normally accomplished through an adaptive procedure, known as a learn-

ing rule or algorithm, whereby the weights of the network are incrementally adjusted

so as to improve a predefined performance measure over time.

In the context of artificial neural networks, the process of learning is best viewed

as an optimization process. More precisely, the learning process can be viewed as

"search" in a multidimensional parameter (weight) space for a solution, which gradually

optimizes a prespecified objective (criterion) function. This view is adopted in this

chapter, and it allows us to unify a wide range of existing learning rules which other-

wise would have looked more like a diverse variety of learning procedures.

This chapter presents a number of basic learning rules for supervised, reinforced, and unsupervised learning tasks. In supervised learning (also known as learning with a teacher or associative learning), each input pattern/signal received from the environment is associated with a specific desired target pattern. Usually, the weights are synthesized gradually, and at each step of the learning process they are updated so that the error between the network's output and a corresponding desired target is reduced. On the other hand, unsupervised learning involves the clustering of (or the detection of similarities among) unlabeled patterns of a given training set. The idea here is to optimize (maximize or minimize) some criterion or performance function defined in terms of the output activity of the units in the network. Here, the weights and the outputs of the network are usually expected to converge to representations that capture the statistical regularities of the input data. Reinforcement learning involves updating the network's weights in response to an "evaluative" teacher signal; this differs from supervised learning, where the teacher signal is the "correct answer." Reinforcement learning rules may be viewed as stochastic search mechanisms that attempt to maximize the probability of positive external reinforcement for a given training set.

In most cases, these learning rules are presented in the basic form appropriate for single-unit training. Exceptions are cases involving unsupervised (competitive or feature mapping) learning schemes in which an essential competition mechanism necessitates the use of multiple units. For such cases, simple single-layer architectures are assumed. Later chapters in this book (Chapters 5, 6, and 7) extend some of the learning rules discussed here to networks with multiple units and multiple layers.

3.1 Supervised Learning in a Single-Unit Setting

Supervised learning is treated first. Here, two groups of rules are discussed: error-

correction rules and gradient-descent-based rules. By the end of this section it will be

established that all these learning rules can be systematically derived as minimizers of

an appropriate criterion function.

3.1.1 Error Correction Rules

Error-correction rules were proposed initially as ad hoc rules for single-unit training.

These rules essentially drive the output error of a given unit to zero. This section starts

with the classic perceptron learning rule and gives a proof for its convergence. Then

other error-correction rules such as Mays' rule and the α-LMS rule are covered.

Throughout this section an attempt is made to point out criterion functions that are

minimized by using each rule. These learning rules also will be cast as relaxation rules,

thus unifying them with the other gradient-based search rules such as the ones

presented in Section 3.1.2.

Perceptron Learning Rule Consider the linear threshold gate shown in Figure 3.1.1, which will be referred to as the perceptron. The perceptron maps an input vector $\mathbf{x} = [x_1\ x_2\ \cdots\ x_{n+1}]^T$ to a bipolar binary output $y$, and thus it may be viewed as a simple two-class classifier. The input signal $x_{n+1}$ is usually set to 1 and plays the role of a bias to the perceptron. We will denote by $\mathbf{w}$ the vector $\mathbf{w} = [w_1\ w_2\ \cdots\ w_{n+1}]^T \in R^{n+1}$ consisting of the free parameters (weights) of the perceptron. The input/output relation for the perceptron is given by $y = \mathrm{sgn}(\mathbf{x}^T\mathbf{w})$, where sgn is the "sign" function, which returns +1 or -1 depending on whether the sign of its scalar argument is positive or negative, respectively.
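The input/output relation above can be sketched in a few lines of Python. This is only an illustration: the function names and the example weights are hypothetical, and mapping sgn(0) to -1 is an assumption, since the text does not say what sgn returns for a zero argument.

```python
def sgn(s):
    """Sign function: +1 for a positive argument, -1 otherwise.

    Returning -1 for s == 0 is an assumption made for this sketch.
    """
    return 1 if s > 0 else -1


def perceptron_output(x, w):
    """Return y = sgn(x^T w) for an augmented input x whose last entry is 1."""
    return sgn(sum(xi * wi for xi, wi in zip(x, w)))


# Two inputs plus the bias input x_{n+1} = 1; the weights are illustrative.
w = [1.0, -1.0, 0.5]
y_pos = perceptron_output([2.0, 1.0, 1.0], w)  # net input 2 - 1 + 0.5 = 1.5
y_neg = perceptron_output([0.0, 2.0, 1.0], w)  # net input 0 - 2 + 0.5 = -1.5
```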

[Figure 3.1.1: The perceptron computational unit, $y = \mathrm{sgn}(\mathbf{x}^T\mathbf{w})$.]

Assume we are training this perceptron to load (learn) the training pairs $\{\mathbf{x}^1, d^1\}, \{\mathbf{x}^2, d^2\}, \ldots, \{\mathbf{x}^m, d^m\}$, where $\mathbf{x}^k \in R^{n+1}$ is the kth input vector and $d^k \in \{-1, +1\}$, $k = 1, 2, \ldots, m$, is the desired target for the kth input vector (usually the order of these training pairs is random). The entire collection of these pairs is called the training set. The goal, then, is to design a perceptron such that for each input vector $\mathbf{x}^k$ of the training set, the perceptron output $y^k$ matches the desired target $d^k$; that is, we require $y^k = \mathrm{sgn}(\mathbf{w}^T\mathbf{x}^k) = d^k$, for each $k = 1, 2, \ldots, m$. In this case we say that the perceptron correctly classifies the training set. Of course, "designing" an appropriate perceptron to correctly classify the training set amounts to determining a weight vector $\mathbf{w}^*$ such that the following relations are satisfied:

$$\begin{cases} (\mathbf{x}^k)^T\mathbf{w}^* > 0 & \text{if } d^k = +1 \\ (\mathbf{x}^k)^T\mathbf{w}^* < 0 & \text{if } d^k = -1 \end{cases} \tag{3.1.1}$$

Recall that the set of all $\mathbf{x}$ which satisfy $\mathbf{x}^T\mathbf{w}^* = 0$ defines a hyperplane in $R^n$. Thus, in the context of the preceding discussion, finding a solution vector $\mathbf{w}^*$ to Equation (3.1.1) is equivalent to finding a separating hyperplane that correctly classifies all vectors $\mathbf{x}^k$, $k = 1, 2, \ldots, m$. In other words, we desire a hyperplane $\mathbf{x}^T\mathbf{w}^* = 0$ that partitions the input space into two distinct regions, one containing all points $\mathbf{x}^k$ with $d^k = +1$ and the other region containing all points $\mathbf{x}^k$ with $d^k = -1$.

One possible incremental method for arriving at a solution w* is to invoke the

perceptron learning rule (Rosenblatt, 1962):

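$$\begin{cases} \mathbf{w}^1 \text{ arbitrary} \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \rho\,(d^k - y^k)\,\mathbf{x}^k, \quad k = 1, 2, \ldots \end{cases} \tag{3.1.2}$$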

where $\rho$ is a positive constant called the learning rate. The incremental learning process given in Equation (3.1.2) proceeds as follows: First, an initial weight vector $\mathbf{w}^1$ is selected (usually at random) to begin the process. Then, the m pairs $\{\mathbf{x}^k, d^k\}$ of the training set are used to successively update the weight vector until (hopefully) a solution $\mathbf{w}^*$ is found that correctly classifies the training set. This process of sequentially presenting the training patterns is usually referred to as cycling through the training set, and a complete presentation of the m training pairs is referred to as a cycle (or pass) through the training set. In general, more than one cycle through the training set is required to determine an appropriate solution vector. Hence, in Equation (3.1.2), the superscript k in $\mathbf{w}^k$ refers to the iteration number. On the other hand, the superscript k in $\mathbf{x}^k$ (and $d^k$) is the label of the training pair presented at the kth iteration. To be more precise, if the number of training pairs m is finite, then the superscripts in $\mathbf{x}^k$ and $d^k$ should be replaced by $[(k - 1) \bmod m] + 1$. Here, a mod b returns the remainder of the division of a by b (e.g., 5 mod 8 = 5, 8 mod 8 = 0, and 19 mod 8 = 3). This observation is valid for all incremental learning rules presented in this chapter.
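The cycling procedure of Equation (3.1.2) can be sketched as follows. This is a minimal illustration rather than a reference implementation: the function name `train_perceptron`, the `max_cycles` safeguard, the zero initial weight vector, the sgn(0) = -1 convention, and the bipolar AND training set are all assumptions introduced here.

```python
def sgn(s):
    return 1 if s > 0 else -1  # sgn(0) -> -1 is an assumption


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def train_perceptron(pairs, rho=0.5, w_init=None, max_cycles=100):
    """Perceptron learning rule of Equation (3.1.2).

    pairs: list of (x, d) with x augmented by a trailing 1 and d in {-1, +1}.
    Returns (w, corrections, converged).
    """
    m = len(pairs)
    w = list(w_init) if w_init is not None else [0.0] * len(pairs[0][0])
    corrections = 0
    k = 1  # iteration number, as in the superscript of w^k
    for _ in range(max_cycles):
        changed = False
        for _ in range(m):
            # Pair label [(k - 1) mod m] + 1, written as a zero-based index.
            x, d = pairs[(k - 1) % m]
            y = sgn(dot(x, w))
            if y != d:
                # (d - y) is +2 or -2 here, so rho = 0.5 adds or subtracts x.
                w = [wi + rho * (d - y) * xi for wi, xi in zip(w, x)]
                corrections += 1
                changed = True
            k += 1
        if not changed:  # a full cycle without corrections: training set loaded
            return w, corrections, True
    return w, corrections, False


# Bipolar AND problem (linearly separable); inputs carry the bias entry 1.
and_pairs = [
    ([0.0, 0.0, 1.0], -1),
    ([0.0, 1.0, 1.0], -1),
    ([1.0, 0.0, 1.0], -1),
    ([1.0, 1.0, 1.0], +1),
]
w, corrections, converged = train_perceptron(and_pairs)
```

Stopping after one correction-free cycle is a practical choice for a finite training set; the rule itself places no limit on the number of iterations.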

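Notice that for $\rho = 0.5$, the perceptron learning rule can be written as

$$\begin{cases} \mathbf{w}^1 \text{ arbitrary} \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \mathbf{z}^k & \text{if } (\mathbf{z}^k)^T\mathbf{w}^k \le 0 \\ \mathbf{w}^{k+1} = \mathbf{w}^k & \text{otherwise} \end{cases} \tag{3.1.3}$$

where

$$\mathbf{z}^k = \begin{cases} +\mathbf{x}^k & \text{if } d^k = +1 \\ -\mathbf{x}^k & \text{if } d^k = -1 \end{cases} \tag{3.1.4}$$

That is, a correction is made if and only if a misclassification, indicated by

$$(\mathbf{z}^k)^T\mathbf{w}^k \le 0 \tag{3.1.5}$$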

occurs. The addition of vector $\mathbf{z}^k$ to $\mathbf{w}^k$ in Equation (3.1.3) moves the weight vector directly toward and perhaps across the hyperplane $(\mathbf{z}^k)^T\mathbf{w}^k = 0$. The new inner product $(\mathbf{z}^k)^T\mathbf{w}^{k+1}$ is larger than $(\mathbf{z}^k)^T\mathbf{w}^k$ by the amount of $\|\mathbf{z}^k\|^2$, and the correction $\Delta\mathbf{w}^k = \mathbf{w}^{k+1} - \mathbf{w}^k$ is clearly moving $\mathbf{w}^k$ in a good direction, the direction of increasing $(\mathbf{z}^k)^T\mathbf{w}^k$, as can be seen from Figure 3.1.2.¹ Thus the perceptron learning rule attempts to find a solution $\mathbf{w}$ for the following system of inequalities:

$$(\mathbf{z}^k)^T\mathbf{w} > 0 \quad \text{for } k = 1, 2, \ldots, m \tag{3.1.6}$$

[Figure 3.1.2: Geometric representation of the perceptron learning rule with $\rho = 0.5$.]

1. The quantity $\|\mathbf{z}\|^2$ is given by $\mathbf{z}^T\mathbf{z}$ and is sometimes referred to as the energy of $\mathbf{z}$. $\|\mathbf{z}\|$ is the Euclidean norm (length) of vector $\mathbf{z}$ and is given by the square root of the sum of the squares of the components of $\mathbf{z}$ [note that $\|\mathbf{z}\| = \|\mathbf{x}\|$ by virtue of Equation (3.1.4)].

In an analysis of any learning algorithm, and in particular the perceptron learning algorithm of Equation (3.1.2), there are two main issues to consider: (1) the existence of solutions and (2) convergence of the algorithm to the desired solutions (if they exist). In the case of the perceptron, it is clear that a solution vector (i.e., a vector $\mathbf{w}$ that correctly classifies the training set) exists if and only if the given training set is linearly separable. Assuming, then, that the training set is linearly separable, we may proceed to show that the perceptron learning rule converges to a solution (Novikoff, 1962; Ridgway, 1962; Nilsson, 1965) as follows: Let $\mathbf{w}^*$ be any solution vector so that

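$$(\mathbf{z}^k)^T\mathbf{w}^* > 0 \quad \text{for } k = 1, 2, \ldots, m \tag{3.1.7}$$

Then, from Equation (3.1.3), if the kth pattern is misclassified, we may write

$$\mathbf{w}^{k+1} - \alpha\mathbf{w}^* = \mathbf{w}^k - \alpha\mathbf{w}^* + \mathbf{z}^k \tag{3.1.8}$$

where $\alpha$ is a positive scale factor, and hence

$$\|\mathbf{w}^{k+1} - \alpha\mathbf{w}^*\|^2 = \|\mathbf{w}^k - \alpha\mathbf{w}^*\|^2 + 2(\mathbf{z}^k)^T(\mathbf{w}^k - \alpha\mathbf{w}^*) + \|\mathbf{z}^k\|^2 \tag{3.1.9}$$

Since $\mathbf{z}^k$ is misclassified, we have $(\mathbf{z}^k)^T\mathbf{w}^k \le 0$, and thus

$$\|\mathbf{w}^{k+1} - \alpha\mathbf{w}^*\|^2 \le \|\mathbf{w}^k - \alpha\mathbf{w}^*\|^2 - 2\alpha(\mathbf{z}^k)^T\mathbf{w}^* + \|\mathbf{z}^k\|^2 \tag{3.1.10}$$

Now, let $\beta^2 = \max_i \|\mathbf{z}^i\|^2$ and $\gamma = \min_i (\mathbf{z}^i)^T\mathbf{w}^*$ [$\gamma$ is positive because $(\mathbf{z}^i)^T\mathbf{w}^* > 0$] and substitute into Equation (3.1.10) to get

$$\|\mathbf{w}^{k+1} - \alpha\mathbf{w}^*\|^2 \le \|\mathbf{w}^k - \alpha\mathbf{w}^*\|^2 - 2\alpha\gamma + \beta^2 \tag{3.1.11}$$

If we choose $\alpha$ sufficiently large, in particular $\alpha = \beta^2/\gamma$, we obtain

$$\|\mathbf{w}^{k+1} - \alpha\mathbf{w}^*\|^2 \le \|\mathbf{w}^k - \alpha\mathbf{w}^*\|^2 - \beta^2 \tag{3.1.12}$$

Thus the square distance between $\mathbf{w}^k$ and $\alpha\mathbf{w}^*$ is reduced by at least $\beta^2$ at each correction, and after k corrections, we may write Equation (3.1.12) as

$$0 \le \|\mathbf{w}^{k+1} - \alpha\mathbf{w}^*\|^2 \le \|\mathbf{w}^1 - \alpha\mathbf{w}^*\|^2 - k\beta^2 \tag{3.1.13}$$

It follows that the sequence of corrections must terminate after no more than $k_0$ corrections, where

$$k_0 = \frac{\|\mathbf{w}^1 - \alpha\mathbf{w}^*\|^2}{\beta^2} \tag{3.1.14}$$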

Therefore, if a solution exists, it is achieved in a finite number of iterations. When corrections cease, the resulting weight vector must classify all the samples correctly, since a correction occurs whenever a sample is misclassified, and since each sample appears infinitely often in the sequence. In general, a linearly separable problem admits an infinite number of solutions. The perceptron learning rule in Equation (3.1.2) converges to one of these solutions. This solution, though, is sensitive to the value of the learning rate $\rho$ used and to the order of presentation of the training pairs. This sensitivity is responsible for the varying quality of the perceptron-generated separating surface observed in simulations.

The bound on the number of corrections $k_0$ given by Equation (3.1.14) depends on the choice of the initial weight vector $\mathbf{w}^1$. If $\mathbf{w}^1 = \mathbf{0}$, we get

$$k_0 = \frac{\alpha^2\|\mathbf{w}^*\|^2}{\beta^2} = \frac{\beta^2\|\mathbf{w}^*\|^2}{\gamma^2}$$

or

$$k_0 = \frac{\max_i \|\mathbf{x}^i\|^2\,\|\mathbf{w}^*\|^2}{\left[\min_i (\mathbf{x}^i)^T\mathbf{w}^*\right]^2} \tag{3.1.15}$$

Here, $k_0$ is a function of the initially unknown solution weight vector $\mathbf{w}^*$. Therefore, Equation (3.1.15) is of no help for predicting the maximum number of corrections. However, the denominator of Equation (3.1.15) implies that the difficulty of the problem is essentially determined by the samples most nearly orthogonal to the solution vector.
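When a solution vector happens to be known, the bound for a zero initial weight vector can be evaluated directly. The sketch below is only illustrative: `correction_bound` is a hypothetical helper, the bipolar AND training set and the solution vector `w_star` are assumptions chosen by hand, and the denominator is computed with $\mathbf{z}^i = d^i\mathbf{x}^i$, following the definition $\gamma = \min_i (\mathbf{z}^i)^T\mathbf{w}^*$ used in the proof.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def correction_bound(pairs, w_star):
    """Upper bound k0 on the number of corrections when w^1 = 0.

    Computes beta^2 * ||w*||^2 / gamma^2 with beta^2 = max_i ||z^i||^2 and
    gamma = min_i (z^i)^T w*, where z^i = d^i x^i.
    """
    z_list = [[d * xi for xi in x] for x, d in pairs]
    gamma = min(dot(z, w_star) for z in z_list)
    if gamma <= 0:
        raise ValueError("w_star does not separate the training set")
    beta_sq = max(dot(z, z) for z in z_list)
    return beta_sq * dot(w_star, w_star) / gamma ** 2


# Bipolar AND problem with augmented inputs (assumed example data).
and_pairs = [
    ([0.0, 0.0, 1.0], -1),
    ([0.0, 1.0, 1.0], -1),
    ([1.0, 0.0, 1.0], -1),
    ([1.0, 1.0, 1.0], +1),
]
w_star = [2.0, 2.0, -3.0]  # one hand-picked separating vector
k0 = correction_bound(and_pairs, w_star)  # 3 * 17 / 1**2
```

Different choices of `w_star` give different bounds for the same data, which reflects the remark that the bound depends on the unknown solution vector.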

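Generalizations of the Perceptron Learning Rule The perceptron learning rule may be generalized to include a variable increment $\rho^k$ and a fixed positive margin b. This generalized learning rule updates the weight vector whenever $(\mathbf{z}^k)^T\mathbf{w}^k$ fails to exceed the margin b. Here, the algorithm for weight vector update is given by

$$\begin{cases} \mathbf{w}^1 \text{ arbitrary} \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \rho^k\mathbf{z}^k & \text{if } (\mathbf{z}^k)^T\mathbf{w}^k \le b \\ \mathbf{w}^{k+1} = \mathbf{w}^k & \text{otherwise} \end{cases} \tag{3.1.16}$$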

The margin b is useful because it gives a dead-zone robustness to the decision boundary. That is, the perceptron's decision hyperplane is constrained to lie in a region between the two classes such that sufficient clearance is realized between this hyperplane and the extreme points (boundary patterns) of the training set. This makes the perceptron robust with respect to noisy inputs. It can be shown (Duda and Hart, 1973) that if the training set is linearly separable and if the following three conditions are satisfied:

1. $\rho^k \ge 0$ (3.1.17a)

2. $\lim_{m \to \infty} \sum_{k=1}^{m} \rho^k = \infty$ (3.1.17b)

3. $\lim_{m \to \infty} \dfrac{\sum_{k=1}^{m} (\rho^k)^2}{\left(\sum_{k=1}^{m} \rho^k\right)^2} = 0$ (3.1.17c)

(e.g., $\rho^k = \rho/k$ or even $\rho^k = \rho k$), then $\mathbf{w}^k$ converges to a solution $\mathbf{w}^*$ that satisfies $(\mathbf{z}^i)^T\mathbf{w}^* > b$, for $i = 1, 2, \ldots, m$. Furthermore, when $\rho^k$ is fixed at a positive constant $\rho$, this learning rule converges in finite time.
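A minimal sketch of the margin rule in Equation (3.1.16) follows. The function name `train_with_margin`, the `rho_schedule` callable, the zero initial weight vector, the `max_cycles` safeguard, and the bipolar AND data are assumptions made for illustration. The example uses a fixed increment, the case for which the text states finite-time convergence.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def train_with_margin(z_list, b=1.0, rho_schedule=lambda k: 1.0, max_cycles=1000):
    """Perceptron rule with margin b and variable increment rho^k (Eq. 3.1.16).

    z_list holds the vectors z^i = d^i x^i. Returns (w, converged).
    """
    w = [0.0] * len(z_list[0])
    k = 1  # iteration number; rho_schedule(k) plays the role of rho^k
    for _ in range(max_cycles):
        changed = False
        for z in z_list:
            if dot(z, w) <= b:  # z^T w fails to exceed the margin
                rho_k = rho_schedule(k)
                w = [wi + rho_k * zi for wi, zi in zip(w, z)]
                changed = True
            k += 1
        if not changed:  # every pattern clears the margin
            return w, True
    return w, False


# z^i = d^i x^i for the bipolar AND problem with augmented inputs (assumed data).
z_and = [
    [0.0, 0.0, -1.0],
    [0.0, -1.0, -1.0],
    [-1.0, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]
w, converged = train_with_margin(z_and, b=1.0)
margins = [dot(z, w) for z in z_and]
```

A decreasing schedule such as `lambda k: 1.0 / k` can be passed as `rho_schedule`; in that case convergence may need far more cycles than with a fixed increment.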

Another variant of the perceptron learning rule is given by the batch update procedure

$$\begin{cases} \mathbf{w}^1 \text{ arbitrary} \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \rho \sum_{\mathbf{z} \in Z(\mathbf{w}^k)} \mathbf{z} \end{cases} \tag{3.1.18}$$

where $Z(\mathbf{w}^k)$ is the set of patterns $\mathbf{z}$ misclassified by $\mathbf{w}^k$. Here, the weight vector change $\Delta\mathbf{w} = \mathbf{w}^{k+1} - \mathbf{w}^k$ is along the direction of the resultant vector of all misclassified patterns. In general, this update procedure converges faster than the perceptron rule, but it requires more storage.
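The batch procedure of Equation (3.1.18) can be sketched as below. The name `train_batch`, the zero initial weight vector, the `max_steps` limit, and the example data are hypothetical; misclassification is tested as $\mathbf{z}^T\mathbf{w} \le 0$, matching Equation (3.1.5).

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def train_batch(z_list, rho=1.0, max_steps=1000):
    """Batch perceptron update of Equation (3.1.18). Returns (w, steps, converged)."""
    n = len(z_list[0])
    w = [0.0] * n
    for step in range(max_steps):
        # Z(w^k): all patterns misclassified by the current weight vector.
        misclassified = [z for z in z_list if dot(z, w) <= 0]
        if not misclassified:
            return w, step, True
        # Resultant vector of the misclassified patterns.
        resultant = [sum(z[i] for z in misclassified) for i in range(n)]
        w = [wi + rho * ri for wi, ri in zip(w, resultant)]
    return w, max_steps, False


# z^i = d^i x^i for the bipolar AND problem with augmented inputs (assumed data).
z_and = [
    [0.0, 0.0, -1.0],
    [0.0, -1.0, -1.0],
    [-1.0, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]
w, steps, converged = train_batch(z_and)
```

Each step scans the whole training set before changing the weights, which is where the extra storage for the resultant vector comes from.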

In the nonlinearly separable case, the preceding algorithms do not converge. Few theoretical results are available on the behavior of these algorithms for nonlinearly separable problems [see Minsky and Papert (1969) and Block and Levin (1970) for some preliminary results]. For example, it is known that the length of $\mathbf{w}$ in the perceptron rule is bounded, i.e., tends to fluctuate near some limiting value $\|\mathbf{w}^*\|$ (Efron, 1964). This information may be used to terminate the search for $\mathbf{w}^*$. Another approach is to average the weight vectors near the fluctuation point $\mathbf{w}^*$. Butz (1967) proposed the use of a reinforcement factor $\gamma$, $0 \le \gamma \le 1$, in the perceptron learning rule. This reinforcement places $\mathbf{w}$ in a region that tends to minimize the probability of error for nonlinearly separable cases. Butz's rule is as follows:

$$\begin{cases} \mathbf{w}^1 \text{ arbitrary} \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \rho\,\mathbf{z}^k & \text{if } (\mathbf{z}^k)^T\mathbf{w}^k \le 0 \\ \mathbf{w}^{k+1} = \mathbf{w}^k + \rho\gamma\,\mathbf{z}^k & \text{if } (\mathbf{z}^k)^T\mathbf{w}^k > 0 \end{cases} \tag{3.1.19}$$
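Butz's rule differs from the basic rule only in that correctly classified patterns also move the weights, scaled by $\gamma$. A short sketch, with hypothetical names (`butz_step`, `run_butz`), a zero initial weight vector, and a fixed iteration count chosen here because the rule never stops updating when $\gamma > 0$:

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def butz_step(w, z, rho, gamma):
    """One update of Equation (3.1.19) for pattern z and reinforcement factor gamma."""
    step = rho if dot(z, w) <= 0 else rho * gamma
    return [wi + step * zi for wi, zi in zip(w, z)]


def run_butz(z_list, rho=1.0, gamma=0.1, iterations=400):
    """Cycle through z_list for a fixed number of iterations, starting from w = 0."""
    w = [0.0] * len(z_list[0])
    for k in range(iterations):
        w = butz_step(w, z_list[k % len(z_list)], rho, gamma)
    return w


# Correctly classified pattern: the step is rho * gamma = 0.5.
w_reinforced = butz_step([1.0, 0.0, 0.0], [2.0, 0.0, 0.0], rho=1.0, gamma=0.5)

# With gamma = 0 the rule reduces to the error-correction form of Eq. (3.1.3).
z_and = [
    [0.0, 0.0, -1.0],
    [0.0, -1.0, -1.0],
    [-1.0, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]
w_gamma0 = run_butz(z_and, rho=1.0, gamma=0.0, iterations=400)
```

Because the weights keep growing along correctly classified patterns when $\gamma > 0$, a practical run needs its own stopping criterion; the fixed iteration count above is only a placeholder for one.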

The Perceptron Criterion Function It is interesting to see how the preceding error-correction rules can be derived by a gradient descent on an appropriate criterion (objective) function. For the perceptron, we may define the following criterion function (Duda and Hart, 1973):

$$J(\mathbf{w}) = -\sum_{\mathbf{z} \in Z(\mathbf{w})} \mathbf{z}^T\mathbf{w} \tag{3.1.20}$$

where $Z(\mathbf{w})$ is the set of samples misclassified by $\mathbf{w}$ (i.e., $\mathbf{z}^T\mathbf{w} \le 0$). Note that if $Z(\mathbf{w})$ is empty, then $J(\mathbf{w}) = 0$; otherwise, $J(\mathbf{w}) > 0$. Geometrically, $J(\mathbf{w})$ is proportional to the sum of the distances from the misclassified samples to the decision boundary. The smaller J is, the better the weight vector $\mathbf{w}$ will be.
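The criterion function is simple to evaluate numerically. In the sketch below, `perceptron_criterion` is a hypothetical helper and the data and weight vectors are assumed examples; it sums $-\mathbf{z}^T\mathbf{w}$ over the samples with $\mathbf{z}^T\mathbf{w} \le 0$.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def perceptron_criterion(z_list, w):
    """J(w) of Equation (3.1.20): minus the sum of z^T w over misclassified z."""
    return -sum(dot(z, w) for z in z_list if dot(z, w) <= 0)


# z^i = d^i x^i for the bipolar AND problem with augmented inputs (assumed data).
z_and = [
    [0.0, 0.0, -1.0],
    [0.0, -1.0, -1.0],
    [-1.0, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]

# z^T w = -1, -2, -2, 3: three misclassified samples contribute 1 + 2 + 2.
j_bad = perceptron_criterion(z_and, [1.0, 1.0, 1.0])

# A separating weight vector leaves Z(w) empty.
j_good = perceptron_criterion(z_and, [2.0, 2.0, -3.0])
```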

Given this objective function $J(\mathbf{w})$, the search point $\mathbf{w}^k$ can be incrementally improved at each iteration by sliding downhill on the surface defined by $J(\mathbf{w})$ in $\mathbf{w}$ space. Specifically, we may use J to perform a discrete gradient-descent search that updates $\mathbf{w}^k$ so that a step is taken downhill in the "steepest" direction along the search surface $J(\mathbf{w})$ at $\mathbf{w}^k$. This can be achieved by making $\Delta\mathbf{w}^k$ proportional to the gradient of J at the present location $\mathbf{w}^k$; formally, we may write²

$$\mathbf{w}^{k+1} = \mathbf{w}^k - \rho\,\nabla J(\mathbf{w})\big|_{\mathbf{w}=\mathbf{w}^k} = \mathbf{w}^k - \rho\left[\frac{\partial J}{\partial w_1}\ \ \frac{\partial J}{\partial w_2}\ \ \cdots\ \ \frac{\partial J}{\partial w_{n+1}}\right]^T\Bigg|_{\mathbf{w}=\mathbf{w}^k} \tag{3.1.21}$$

Here, the initial search point $\mathbf{w}^1$ and the learning rate (step size) $\rho$ are to be specified by the user. Equation (3.1.21) can be called the steepest gradient descent search rule or, simply, gradient descent. Next, substituting the gradient

$$\nabla J(\mathbf{w}^k) = -\sum_{\mathbf{z} \in Z(\mathbf{w}^k)} \mathbf{z} \tag{3.1.22}$$

into Equation (3.1.21) leads to the weight update rule

$$\mathbf{w}^{k+1} = \mathbf{w}^k + \rho \sum_{\mathbf{z} \in Z(\mathbf{w}^k)} \mathbf{z} \tag{3.1.23}$$

The learning rule given in Equation (3.1.23) is identical to the multiple-sample (batch) perceptron rule of Equation (3.1.18). The original perceptron learning rule of Equation (3.1.3) can be thought of as an "incremental" gradient descent search rule for minimizing the perceptron criterion function in Equation (3.1.20). Following a similar procedure as in Equations (3.1.21) through (3.1.23), it can be shown that

$$J(\mathbf{w}) = -\sum_{\mathbf{z}^T\mathbf{w} \le b} (\mathbf{z}^T\mathbf{w} - b) \tag{3.1.24}$$

is the appropriate criterion function for the modified perceptron rule in Equation (3.1.16).

2. Discrete gradient-search methods are generally governed by the following equation:

$$\mathbf{w}^{k+1} = \mathbf{w}^k - \alpha\,\mathbf{A}\,\nabla J\big|_{\mathbf{w}=\mathbf{w}^k}$$

Here, $\mathbf{A}$ is an n × n matrix and $\alpha$ is a real number; both are functions of $\mathbf{w}^k$. Numerous versions of gradient-search methods exist, and they differ in the way in which $\mathbf{A}$ and $\alpha$ are selected at $\mathbf{w} = \mathbf{w}^k$. For example, if $\mathbf{A}$ is taken to be the identity matrix, and if $\alpha$ is set to a small positive constant, the gradient "descent" search in Equation (3.1.21) is obtained. On the other hand, if $\alpha$ is a small negative constant, gradient "ascent" search is realized, which seeks a local maximum. In either case, though, a saddle point (nonstable equilibrium) may be reached. However, the existence of noise in practical systems prevents convergence to such nonstable equilibria.

It also should be noted that in addition to its simple structure, Equation (3.1.21) implements "steepest" descent (refer to Section 8.1 for additional qualitative analysis of gradient-based search). It can be shown (e.g., Kelley, 1962) that starting at a point $\mathbf{w}^0$, the gradient direction $\nabla J(\mathbf{w}^0)$ yields the greatest incremental increase of $J(\mathbf{w})$ for a fixed incremental distance $\Delta\mathbf{w}^0 = \mathbf{w} - \mathbf{w}^0$. The speed of convergence of steepest descent search is affected by the choice of $\alpha$, which is normally adjusted at each time step to make the most error correction subject to stability constraints. This topic is explored in Sections 5.2.2 and 5.2.3. Lapidus et al. (1961) compares six ways of choosing $\alpha$, each slightly different, by applying them to a common problem.

Finally, it should be pointed out that setting $\mathbf{A}$ equal to the inverse of the Hessian matrix $[\nabla\nabla J]^{-1}$ and $\alpha$ to 1 results in the well-known Newton's search method.

Before moving on, it should be noted that the gradient of J in Equation (3.1.22) is not mathematically precise. Owing to the piecewise linear nature of J, sudden changes in the gradient of J occur every time the perceptron output y goes through a transition at $(\mathbf{z}^k)^T\mathbf{w} = 0$. Therefore, the gradient of J is not defined at "transition" points $\mathbf{w}$ satisfying $(\mathbf{z}^k)^T\mathbf{w} = 0$, $k = 1, 2, \ldots, m$. However, because of the discrete nature of Equation (3.1.21), the likelihood of $\mathbf{w}^k$ overlapping with one of these transition points is negligible, and thus we may still express $\nabla J$ as in Equation (3.1.22). The reader is referred to Problem 3.1.3 for further exploration into gradient descent on the perceptron criterion function.
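Away from the transition points, the gradient expression of Equation (3.1.22) can be checked against a central finite difference of the criterion in Equation (3.1.20), and one gradient-descent step can be compared with the batch update of Equation (3.1.23). The sketch below is illustrative only: the helper names, the step `h`, and the example data and weights are assumptions.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def criterion(z_list, w):
    """J(w) = -sum of z^T w over samples with z^T w <= 0 (Eq. 3.1.20)."""
    return -sum(dot(z, w) for z in z_list if dot(z, w) <= 0)


def gradient(z_list, w):
    """Gradient of J as in Eq. (3.1.22): minus the sum of the misclassified z."""
    n = len(w)
    return [-sum(z[i] for z in z_list if dot(z, w) <= 0) for i in range(n)]


def finite_difference(z_list, w, h=1e-6):
    """Central-difference estimate of the gradient; valid away from transitions."""
    grad = []
    for i in range(len(w)):
        w_plus = list(w)
        w_minus = list(w)
        w_plus[i] += h
        w_minus[i] -= h
        grad.append((criterion(z_list, w_plus) - criterion(z_list, w_minus)) / (2 * h))
    return grad


# z^i = d^i x^i for the bipolar AND problem with augmented inputs (assumed data).
z_and = [
    [0.0, 0.0, -1.0],
    [0.0, -1.0, -1.0],
    [-1.0, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]
w = [1.0, 1.0, 1.0]  # z^T w = -1, -2, -2, 3: no sample sits on a transition

analytic = gradient(z_and, w)
numeric = finite_difference(z_and, w)

# One gradient-descent step (Eq. 3.1.21) with rho = 0.5 ...
rho = 0.5
w_next = [wi - rho * gi for wi, gi in zip(w, analytic)]
# ... matches the batch update w + rho * (sum of misclassified z) of Eq. (3.1.23).
```

At a weight vector where some $\mathbf{z}^T\mathbf{w}$ is exactly zero, the finite-difference estimate and the formula need not agree, which is the imprecision described above.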

Вам также может понравиться

- Competitive Mixtures of Simple Neurons: Karthik Sridharan Matthew J. Beal Venu GovindarajuДокумент4 страницыCompetitive Mixtures of Simple Neurons: Karthik Sridharan Matthew J. Beal Venu GovindarajuAbbé BusoniОценок пока нет

- Learning Rules of ANNДокумент25 страницLearning Rules of ANNbukyaravindarОценок пока нет

- AdalineДокумент28 страницAdalineAnonymous 05P3kMIОценок пока нет

- Learning Rules: This Definition of The Learning Process Implies The Following Sequence of EventsДокумент11 страницLearning Rules: This Definition of The Learning Process Implies The Following Sequence of Eventsshrilaxmi bhatОценок пока нет

- Multilayer Perceptron and Uppercase Handwritten Characters RecognitionДокумент4 страницыMultilayer Perceptron and Uppercase Handwritten Characters RecognitionMiguel Angel Beltran RojasОценок пока нет

- NIPS 1995 Learning With Ensembles How Overfitting Can Be Useful PaperДокумент7 страницNIPS 1995 Learning With Ensembles How Overfitting Can Be Useful PaperNightsbringerofwarОценок пока нет

- The Nearest Neighbour AlgorithmДокумент3 страницыThe Nearest Neighbour AlgorithmNicolas LapautreОценок пока нет

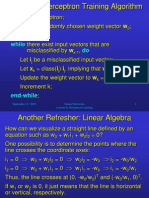

- Refresher: Perceptron Training AlgorithmДокумент12 страницRefresher: Perceptron Training Algorithmeduardo_quintanill_3Оценок пока нет

- Instructor's Solution Manual For Neural NetworksДокумент40 страницInstructor's Solution Manual For Neural NetworksshenalОценок пока нет

- instructor-solution-manual-to-neural-networks-and-deep-learning-a-textbook-solutions-3319944622-9783319944623_compressДокумент40 страницinstructor-solution-manual-to-neural-networks-and-deep-learning-a-textbook-solutions-3319944622-9783319944623_compressHassam HafeezОценок пока нет

- Name-Surya Pratap Singh Enrolment No. - 0829IT181023 Subject - Soft Computing Subject Code - IT701Документ17 страницName-Surya Pratap Singh Enrolment No. - 0829IT181023 Subject - Soft Computing Subject Code - IT701Surya PratapОценок пока нет

- Lecture 8 - Supervised Learning in Neural Networks - (Part 1)Документ7 страницLecture 8 - Supervised Learning in Neural Networks - (Part 1)Ammar AlkindyОценок пока нет

- DOS - ReportДокумент25 страницDOS - ReportSagar SimhaОценок пока нет

- Crammer, Kulesza, Dredze - 2009 - Adaptive Regularization of Weighted VectorsДокумент9 страницCrammer, Kulesza, Dredze - 2009 - Adaptive Regularization of Weighted VectorsBlack FoxОценок пока нет

- Section-3.8 Gauss JacobiДокумент15 страницSection-3.8 Gauss JacobiKanishka SainiОценок пока нет

- Finite-Element Methods With Local Triangulation R e F I N e M e N T For Continuous Reinforcement Learning ProblemsДокумент13 страницFinite-Element Methods With Local Triangulation R e F I N e M e N T For Continuous Reinforcement Learning ProblemsDanelysОценок пока нет

- A Tutorial on ν-Support Vector Machines: 1 An Introductory ExampleДокумент29 страницA Tutorial on ν-Support Vector Machines: 1 An Introductory Exampleaxeman113Оценок пока нет

- Fast Training of Multilayer PerceptronsДокумент15 страницFast Training of Multilayer Perceptronsgarima_rathiОценок пока нет

- Fundamentals of Artificial Neural NetworksДокумент27 страницFundamentals of Artificial Neural Networksbhaskar rao mОценок пока нет

- 9 Unsupervised Learning: 9.1 K-Means ClusteringДокумент34 страницы9 Unsupervised Learning: 9.1 K-Means ClusteringJavier RivasОценок пока нет

- Kernal Methods Machine LearningДокумент53 страницыKernal Methods Machine LearningpalaniОценок пока нет

- A Generalized Framework for Conflict Analysis in SAT SolversДокумент16 страницA Generalized Framework for Conflict Analysis in SAT Solverseliasox123Оценок пока нет

- Saxena Cs4758 Lecture4Документ2 страницыSaxena Cs4758 Lecture4Ivan AvramovОценок пока нет

- Machine Learning and Pattern Recognition Week 3 Intro_classificationДокумент5 страницMachine Learning and Pattern Recognition Week 3 Intro_classificationzeliawillscumbergОценок пока нет

- Ait AllДокумент83 страницыAit AllramasaiОценок пока нет

- Identification of Reliability Models For Non RepaiДокумент8 страницIdentification of Reliability Models For Non RepaiEr Zubair HabibОценок пока нет

- 6 A Dynamic LVQ Algorithm For Improving The Generalisation of Nearest Neighbour ClassifiersДокумент12 страниц6 A Dynamic LVQ Algorithm For Improving The Generalisation of Nearest Neighbour ClassifiersFadhillah AzmiОценок пока нет

- Linear Models: The Least-Squares MethodДокумент24 страницыLinear Models: The Least-Squares MethodAnimated EngineerОценок пока нет

- On Kernel-Target AlignmentДокумент7 страницOn Kernel-Target AlignmentJônatas Oliveira SilvaОценок пока нет

- Chapter 6 - Feedforward Deep NetworksДокумент27 страницChapter 6 - Feedforward Deep NetworksjustdlsОценок пока нет

- TutorialДокумент6 страницTutorialspwajeehОценок пока нет

- Artificial Neural Networks Unit 3: Single-Layer PerceptronsДокумент11 страницArtificial Neural Networks Unit 3: Single-Layer Perceptronsrashbari mОценок пока нет

- Regularization Networks and Support Vector MachinesДокумент53 страницыRegularization Networks and Support Vector MachinesansmechitОценок пока нет

- 3 Perceptron: Nnets - L. 3 February 10, 2002Документ31 страница3 Perceptron: Nnets - L. 3 February 10, 2002mtayelОценок пока нет

- ECE/CS 559 - Neural Networks Lecture Notes #6: Learning: Erdem KoyuncuДокумент13 страницECE/CS 559 - Neural Networks Lecture Notes #6: Learning: Erdem KoyuncuNihal Pratap GhanatheОценок пока нет

- Stability analysis and LMI conditionsДокумент4 страницыStability analysis and LMI conditionsAli DurazОценок пока нет

- Ps and Solution CS229Документ55 страницPs and Solution CS229Anonymous COa5DYzJwОценок пока нет

- CS 229, Public Course Problem Set #4: Unsupervised Learning and Re-Inforcement LearningДокумент5 страницCS 229, Public Course Problem Set #4: Unsupervised Learning and Re-Inforcement Learningsuhar adiОценок пока нет

- 2.1 The Process of Learning 2.1.1 Learning TasksДокумент25 страниц2.1 The Process of Learning 2.1.1 Learning TasksChaitanya GajbhiyeОценок пока нет

- Li Littman Walsh 2008 PDFДокумент8 страницLi Littman Walsh 2008 PDFKitana MasuriОценок пока нет

- 4 Multilayer Perceptrons and Radial Basis FunctionsДокумент6 страниц4 Multilayer Perceptrons and Radial Basis FunctionsVivekОценок пока нет

- Constraint Aggregation Principle Speeds Up Convex OptimizationДокумент21 страницаConstraint Aggregation Principle Speeds Up Convex OptimizationCarlos Eduardo Morales MayhuayОценок пока нет

- Optimization Based State Feedback Control Design For Impulse Elimination in Descriptor SystemsДокумент5 страницOptimization Based State Feedback Control Design For Impulse Elimination in Descriptor SystemsAvinash KumarОценок пока нет

- Networks With Threshold Activation Functions: NavigationДокумент6 страницNetworks With Threshold Activation Functions: NavigationsandmancloseОценок пока нет

- Topology Optimisation Example NastranДокумент12 страницTopology Optimisation Example Nastranjbcharpe100% (1)

- Presentation17 8 08Документ52 страницыPresentation17 8 08rdsrajОценок пока нет

- Perceptron PDFДокумент8 страницPerceptron PDFVel Ayutham0% (1)

- Csci567 Hw1 Spring 2016Документ9 страницCsci567 Hw1 Spring 2016mhasanjafryОценок пока нет

- CS229 Supplemental Lecture Notes: 1 BoostingДокумент11 страницCS229 Supplemental Lecture Notes: 1 BoostingLam Sin WingОценок пока нет

- ESL: Chapter 1: 1.1 Introduction To Linear RegressionДокумент4 страницыESL: Chapter 1: 1.1 Introduction To Linear RegressionPete Jacopo Belbo CayaОценок пока нет

- Variance CovarianceДокумент6 страницVariance CovarianceJoseph JoeОценок пока нет

- Learn Backprop Neural Net RulesДокумент19 страницLearn Backprop Neural Net RulesStefanescu AlexandruОценок пока нет

- Lecture Notes Stochastic Optimization-KooleДокумент42 страницыLecture Notes Stochastic Optimization-Koolenstl0101Оценок пока нет

- Lect Notes 9Документ43 страницыLect Notes 9Safis HajjouzОценок пока нет

- Optimal Stopping and Effective Machine Complexity in LearningДокумент8 страницOptimal Stopping and Effective Machine Complexity in LearningrenatacfОценок пока нет

- Evolutionary Game Theory and Multi-Agent Reinforcement LearningДокумент26 страницEvolutionary Game Theory and Multi-Agent Reinforcement LearningSumit ChakravartyОценок пока нет

- Difference Equations in Normed Spaces: Stability and OscillationsОт EverandDifference Equations in Normed Spaces: Stability and OscillationsОценок пока нет

- Date Sheet of Weekly Tests 2019-2020 2Документ3 страницыDate Sheet of Weekly Tests 2019-2020 2api-210356903Оценок пока нет

- A Rhetorical Analysis On Fidel Castro and Cory Aquino's Select National Speeches PDFДокумент26 страницA Rhetorical Analysis On Fidel Castro and Cory Aquino's Select National Speeches PDFDanah Angelica Sambrana67% (6)

- Group Decision MakingДокумент5 страницGroup Decision MakingSahilAviKapoorОценок пока нет

- Cultural Anthropology LectureДокумент18 страницCultural Anthropology LecturemlssmnnОценок пока нет

- Das Leben Einer Frau in Der Mittelalterl PDFДокумент339 страницDas Leben Einer Frau in Der Mittelalterl PDFaudubelaiaОценок пока нет

- Jonah Study GuideДокумент29 страницJonah Study GuideErik Dienberg100% (1)

- Understanding Civics, Ethics and Morality: Chapter OneДокумент82 страницыUnderstanding Civics, Ethics and Morality: Chapter Oneamanu kassahun100% (3)

- Prelim Quiz 2 - Attempt ReviewДокумент7 страницPrelim Quiz 2 - Attempt ReviewCharles angel AspacioОценок пока нет

- Constructivism As A Theory For Teaching and LearningДокумент4 страницыConstructivism As A Theory For Teaching and LearningShamshad Ali RahoojoОценок пока нет

- CBCP Monitor Vol. 17 No. 8Документ20 страницCBCP Monitor Vol. 17 No. 8Areopagus Communications, Inc.Оценок пока нет

- A Common Theme or Attitude To The Four Sections of The Poem 'Preludes' of T.S.Eliot.Документ4 страницыA Common Theme or Attitude To The Four Sections of The Poem 'Preludes' of T.S.Eliot.Rituparna Ray ChaudhuriОценок пока нет

- Poster DesignДокумент1 страницаPoster DesignSumair AzamОценок пока нет

- Priceless by William Poundstone Book ReviewДокумент3 страницыPriceless by William Poundstone Book ReviewAna-Maria Bivol50% (2)

- D1 PRE-BOARD PROF. EDUCATION - Flordeluna, An Education Student, Was Asked by Prof.Документ14 страницD1 PRE-BOARD PROF. EDUCATION - Flordeluna, An Education Student, Was Asked by Prof.Jorge Mrose26Оценок пока нет

- Ceremony - Candle Lighting PDFДокумент2 страницыCeremony - Candle Lighting PDFrafaelgsccОценок пока нет

- Art and Ecology in Ellen Meloy's The Last Cheater's WaltzДокумент25 страницArt and Ecology in Ellen Meloy's The Last Cheater's WaltzmrlОценок пока нет

- Characteristics of Business CommunicationДокумент3 страницыCharacteristics of Business Communicationrinky_trivediОценок пока нет

- 15 - The Death(s) of Baba YagaДокумент8 страниц15 - The Death(s) of Baba YagavalismedsenОценок пока нет

- Pathos Ethos and LogosДокумент2 страницыPathos Ethos and Logoshenry yandellОценок пока нет

- Cogito: Exploring Consciousness from Descartes to SartreДокумент19 страницCogito: Exploring Consciousness from Descartes to SartrePaulaSequeiraОценок пока нет

- Higher Education Strategy CenterДокумент88 страницHigher Education Strategy CentergetachewОценок пока нет

- Ei Workshop ProgrammeДокумент1 страницаEi Workshop Programmeapi-280263021Оценок пока нет

- The Purusha SuktaДокумент10 страницThe Purusha SuktapunarnavaОценок пока нет

- Count and Non Count NounsДокумент3 страницыCount and Non Count NounsSomSreyTouchОценок пока нет

- Byrne Context WithlabelsДокумент282 страницыByrne Context WithlabelssrobertjamesОценок пока нет

- BalajiДокумент1 страницаBalajiPranjal VarshneyОценок пока нет

- Pe PDFДокумент186 страницPe PDFEyob YimerОценок пока нет

- IBDP Economics HL Chapter 1 NotesДокумент6 страницIBDP Economics HL Chapter 1 NotesAditya Rathi100% (1)

- SantianoMaCheleenKrisna RealisticORJIДокумент2 страницыSantianoMaCheleenKrisna RealisticORJImeowgiduthegreatОценок пока нет

- Magnetic Braking System Report - 2nd Year ProjectДокумент11 страницMagnetic Braking System Report - 2nd Year Projectfransisca rumendeОценок пока нет