Some Slides of Lectures 11 and 12

Uploaded by: Stephen Bao

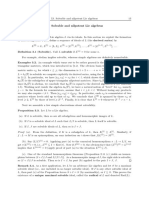

Revision: Eigenvalues and Eigenvectors

Let $A$ be a square matrix of order $n$.

A nonzero column vector $u$ in $\mathbb{R}^n$ is called an eigenvector of $A$ if $Au = \lambda u$ for some scalar $\lambda$.

The scalar $\lambda$ is called an eigenvalue of $A$, and $u$ is said to be an eigenvector of $A$ associated with the eigenvalue $\lambda$.

$\lambda$ is an eigenvalue of $A$ if and only if $\det(\lambda I - A) = 0$.

The polynomial $\det(\lambda I - A)$ is called the characteristic polynomial of $A$.

The solution space $E_\lambda$ of $(\lambda I - A)u = 0$ is called the eigenspace of $A$ associated with the eigenvalue $\lambda$.

Every nonzero vector in $E_\lambda$ is an eigenvector of $A$ associated with the eigenvalue $\lambda$.
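
A small worked example may help fix these definitions. The matrix below is chosen purely for illustration and does not appear in the slides.

```latex
\begin{align*}
A &= \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \\
\det(\lambda I - A) &= \begin{vmatrix} \lambda - 2 & -1 \\ -1 & \lambda - 2 \end{vmatrix}
  = (\lambda - 2)^2 - 1 = (\lambda - 1)(\lambda - 3), \\
E_1 &= \{\, u : (I - A)u = 0 \,\}
  = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ -1 \end{pmatrix} \right\}, \\
E_3 &= \{\, u : (3I - A)u = 0 \,\}
  = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ 1 \end{pmatrix} \right\}.
\end{align*}
```

So the eigenvalues of this matrix are 1 and 3, and every nonzero vector in either eigenspace is an eigenvector associated with the corresponding eigenvalue.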

Revision: Diagonalization

A square matrix $A$ is called diagonalizable if there exists an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix.

The matrix $P$ is said to diagonalize $A$.

An $n \times n$ square matrix $A$ is diagonalizable if and only if $A$ has $n$ linearly independent eigenvectors.

If $u_1, u_2, \dots, u_n$ are linearly independent eigenvectors of $A$, then $P = (u_1 \;\; u_2 \;\; \cdots \;\; u_n)$ diagonalizes $A$.

Suppose $Au_i = \lambda_i u_i$ for each $i$. Then

$$P^{-1}AP = \begin{pmatrix} \lambda_1 & & & 0 \\ & \lambda_2 & & \\ & & \ddots & \\ 0 & & & \lambda_n \end{pmatrix}.$$
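
The recipe above can be checked numerically. The following is a minimal sketch in plain Python with exact fractions; the matrix `A`, the eigenvector matrix `P`, and the helper names `matmul` and `inverse_2x2` are assumptions made for this illustration, not part of the slides.

```python
from fractions import Fraction


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Invert a 2x2 matrix exactly (raises ZeroDivisionError if singular)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


# Illustrative matrix (an assumption for this example).
A = [[2, 1], [1, 2]]

# u1 = (1, -1) satisfies A u1 = 1 * u1 and u2 = (1, 1) satisfies A u2 = 3 * u2.
# The eigenvectors are placed as the columns of P.
P = [[1, 1], [-1, 1]]

# P^{-1} A P should be diagonal, with the eigenvalues on the diagonal
# in the same order as the eigenvector columns of P.
D = matmul(matmul(inverse_2x2(P), A), P)
print(D)
```

Swapping the two columns of `P` swaps the order of the diagonal entries, which is why the order of the eigenvectors in $P$ matters.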

Matrix Multiplication in Blocks

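Suppose $A$ is an $r \times m$ matrix, $B$ is an $r \times n$ matrix, $C$ is an $s \times m$ matrix, $D$ is an $s \times n$ matrix, $E$ is an $m \times t$ matrix, $F$ is an $m \times u$ matrix, $G$ is an $n \times t$ matrix, and $H$ is an $n \times u$ matrix.

Then

$$\begin{pmatrix} A & B \\ C & D \end{pmatrix} \begin{pmatrix} E & F \\ G & H \end{pmatrix} = \begin{pmatrix} AE + BG & AF + BH \\ CE + DG & CF + DH \end{pmatrix}.$$

Warning: To do such a matrix multiplication, you need to make sure that the sub-matrices can be multiplied with each other, that is, that every product $AE$, $BG$, $AF$, $BH$, $CE$, $DG$, $CF$, $DH$ is defined.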
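
To see the block formula in action, here is a small sketch in plain Python that assembles two partitioned matrices and compares the ordinary product with the block-wise product. The block sizes ($r = 1$, $s = 2$, $m = 2$, $n = 1$, $t = 1$, $u = 2$), the entries, and the helper names are all assumptions chosen for illustration.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def matadd(X, Y):
    """Add two matrices of the same size."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def hstack(X, Y):
    """Place Y to the right of X (same number of rows)."""
    return [rx + ry for rx, ry in zip(X, Y)]


def vstack(X, Y):
    """Place Y below X (same number of columns)."""
    return X + Y


A = [[1, 2]]            # r x m = 1 x 2
B = [[3]]               # r x n = 1 x 1
C = [[4, 5], [6, 7]]    # s x m = 2 x 2
D = [[8], [9]]          # s x n = 2 x 1
E = [[1], [0]]          # m x t = 2 x 1
F = [[2, 1], [0, 3]]    # m x u = 2 x 2
G = [[5]]               # n x t = 1 x 1
H = [[1, 4]]            # n x u = 1 x 2

left = vstack(hstack(A, B), hstack(C, D))    # (r+s) x (m+n)
right = vstack(hstack(E, F), hstack(G, H))   # (m+n) x (t+u)

# Ordinary product of the assembled matrices.
full = matmul(left, right)

# Product computed block by block, following the formula.
blocks = vstack(
    hstack(matadd(matmul(A, E), matmul(B, G)),
           matadd(matmul(A, F), matmul(B, H))),
    hstack(matadd(matmul(C, E), matmul(D, G)),
           matadd(matmul(C, F), matmul(D, H))),
)

same = (full == blocks)
print(same)
```

If the column partition of the left matrix did not match the row partition of the right matrix, some of the products such as `matmul(A, E)` would not be defined, which is the situation the warning describes.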

A Theorem (to replace Theorem 9.3.10)

Let $T$ be a linear operator on a finite dimensional space $V$ and let $C$ be an ordered basis for $V$.

Then a square matrix $D$ is similar to $[T]_C$ if and only if $D = [T]_B$ for an ordered basis $B$ for $V$.

Proof

($\Leftarrow$) It follows from Discussion 9.3.8.

($\Rightarrow$) (The proof is the same as the last paragraph of the proof of Theorem 11.2.2 on p. 109.)

Let $C = \{u_1, u_2, \dots, u_n\}$ where $\dim(V) = n$.

Suppose $D = P^{-1}[T]_C P$ where $P = (p_{ij})$ is an $n \times n$ invertible matrix.

Define $B = \{v_1, v_2, \dots, v_n\}$ such that

$$v_j = p_{1j}u_1 + p_{2j}u_2 + \cdots + p_{nj}u_n \quad \text{for } j = 1, 2, \dots, n.$$

Using $B$ as an ordered basis for $V$, we have $[I_V]_{C,B} = P$.

Then by Discussion 9.3.8, $[T]_B = P^{-1}[T]_C P$.
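
The construction in the proof can be tried on a concrete case. The sketch below, in plain Python with exact fractions, takes $V = \mathbb{R}^2$ with $C$ the standard basis, builds $B$ from the columns of an invertible $P$, and checks that $D = P^{-1}[T]_C P$ really records the $B$-coordinates of each $T(v_j)$. The matrices `T_C` and `P` and all helper names are assumptions made for illustration.

```python
from fractions import Fraction


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Invert a 2x2 matrix exactly (raises ZeroDivisionError if singular)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def apply(M, v):
    """Apply the matrix M to the coordinate vector v."""
    return [sum(M[i][k] * v[k] for k in range(len(v))) for i in range(len(M))]


# [T]_C for a hypothetical operator T on R^2, with C the standard basis.
T_C = [[2, 1], [0, 3]]

# An invertible matrix P; column j holds the C-coordinates of v_j.
P = [[1, 1], [1, 2]]
basis_B = [[P[0][j], P[1][j]] for j in range(2)]   # v_1, v_2

# Candidate matrix for [T]_B.
D = matmul(matmul(inverse_2x2(P), T_C), P)

# D equals [T]_B exactly when T(v_j) = sum_i D[i][j] * v_i for every j.
checks = []
for j, v_j in enumerate(basis_B):
    image = apply(T_C, v_j)
    combo = [sum(D[i][j] * basis_B[i][k] for i in range(2)) for k in range(2)]
    checks.append(image == combo)

print(D, checks)
```

Here column $j$ of `D` lists the coefficients needed to write $T(v_j)$ in terms of $v_1$ and $v_2$, which is exactly what it means for `D` to be the matrix of $T$ relative to $B$.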

Triangular Forms

Let $T$ be a linear operator on a finite dimensional space $V$ over $F$. Suppose the characteristic polynomial of $T$ can be factorized into linear factors over $F$.

Take any basis $C$ for $V$.

Since the characteristic polynomial of the matrix $[T]_C$ can be factorized into linear factors over $F$, we can find an invertible matrix $P$ such that $P^{-1}[T]_C P$ is an upper triangular matrix.

By the result we have just proved, there exists an ordered basis $B$ for $V$ such that $[T]_B$ is an upper triangular matrix.
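
As an illustration, consider a matrix that is not diagonalizable but can still be brought to upper triangular form. The matrix and the choice of $P$ below are assumptions made for this example; they do not come from the slides.

```latex
\begin{align*}
A &= \begin{pmatrix} 1 & 1 \\ -1 & 3 \end{pmatrix}, \qquad
\det(\lambda I - A) = (\lambda - 1)(\lambda - 3) + 1 = (\lambda - 2)^2, \\
E_2 &= \operatorname{span}\left\{ \begin{pmatrix} 1 \\ 1 \end{pmatrix} \right\}
  \quad \text{(dimension 1, so $A$ is not diagonalizable)}, \\
P &= \begin{pmatrix} 1 & 0 \\ 1 & 1 \end{pmatrix}, \qquad
P^{-1} = \begin{pmatrix} 1 & 0 \\ -1 & 1 \end{pmatrix}, \\
P^{-1} A P &= \begin{pmatrix} 1 & 0 \\ -1 & 1 \end{pmatrix}
  \begin{pmatrix} 2 & 1 \\ 2 & 3 \end{pmatrix}
  = \begin{pmatrix} 2 & 1 \\ 0 & 2 \end{pmatrix}.
\end{align*}
```

The first column of $P$ is an eigenvector and the second column simply extends it to a basis. The characteristic polynomial factorizes into linear factors, so an upper triangular form exists even though a diagonal one does not.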
