Академический Документы

Профессиональный Документы

Культура Документы

2101 F 12 Bootstrap R

Загружено:

ngyncloud0 оценок0% нашли этот документ полезным (0 голосов)

29 просмотров5 страницBootstarp R

Оригинальное название

2101 f 12 Bootstrap r

Авторское право

© © All Rights Reserved

Доступные форматы

PDF, TXT или читайте онлайн в Scribd

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документBootstarp R

Авторское право:

© All Rights Reserved

Доступные форматы

Скачайте в формате PDF, TXT или читайте онлайн в Scribd

0 оценок0% нашли этот документ полезным (0 голосов)

29 просмотров5 страниц2101 F 12 Bootstrap R

Загружено:

ngyncloudBootstarp R

Авторское право:

© All Rights Reserved

Доступные форматы

Скачайте в формате PDF, TXT или читайте онлайн в Scribd

Вы находитесь на странице: 1из 5

1

Bootstiap Regiession with R

> # Bootstrap regression example

>

> kars =

read.table("http://www.utstat.toronto.edu/~brunner/appliedf12/data/mcars3.data",

+ header=T)

> kars[1:4,]

Cntry kpl weight length

1 US 5.04 2.1780 5.9182

2 Japan 10.08 1.0260 4.3180

3 US 9.24 1.1880 4.2672

4 US 7.98 1.4445 5.1054

> attach(kars) # Variables are now available by name

>

> # Before regression, a garden variety univariate bootstrap

> hist(kpl) # Right skewed

> # Small example for demonstration of R syntax

> set.seed(3244)

> x = kpl[1:10]; x

[1] 5.04 10.08 9.24 7.98 7.98 7.98 9.66 7.56 5.88 10.92

> n = length(x)

> # Sample of size n from the numbers 1:n, with replacement.

> choices = sample(1:n,size=n,replace=T); choices

[1] 2 7 5 1 4 6 9 8 4 10

> x[choices]

[1] 10.08 9.66 7.98 5.04 7.98 7.98 5.88 7.56 7.98 10.92

Histogram of kpl

kpl

F

r

e

q

u

e

n

c

y

4 6 8 10 12 14 16 18

0

5

1

0

1

5

2

0

2

5

3

0

3

5

2

> # Now bootstrap the mean of kpl

> n = length(kpl); B = 1000

> mstar = NULL # mstar will contain bootstrap mean values

>

> for(draw in 1:B) mstar = c(mstar,mean(kpl[sample(1:n,size=n,replace=T)]))

> hist(mstar)

Histogram of mstar

mstar

F

r

e

q

u

e

n

c

y

8.0 8.5 9.0 9.5

0

5

0

1

0

0

1

5

0

2

0

0

2

5

0

S

> # Look at a normal qq plot. That's a plot of the order statistics against

> # the corresponding quantiles of the (standard) normal. Should be roughly linear

> # if the data are from a normal distribution.

> qqnorm(mstar); qqline(mstar)

> # Quantile bootstrap CI for mu. Use ONLY if the bootstrap distribution is symmetric.

> sort(mstar)[25]; sort(mstar)[975]

[1] 8.3034

[1] 9.3492

> # Compare the usual CI

> t.test(kpl)

One Sample t-test

data: kpl

t = 32.9363, df = 99, p-value < 2.2e-16

alternative hypothesis: true mean is not equal to 0

95 percent confidence interval:

8.264966 9.324634

sample estimates:

mean of x

8.7948

-3 -2 -1 0 1 2 3

8

.

0

8

.

5

9

.

0

9

.

5

Normal Q-Q Plot

Theoretical Quantiles

S

a

m

p

l

e

Q

u

a

n

t

i

l

e

s

4

> # Now regression

> # Compute some polynomial terms

> wsq = weight^2; lsq = length^2; wl = weight*length

> # Bind it into a nice data frame

> datta = cbind(kpl,weight,length,wsq,lsq,wl)

> datta = as.data.frame(datta)

>

> model1 = lm(kpl ~ weight + length + wsq + lsq + wl, data=datta)

> summary(model1)

Call:

lm(formula = kpl ~ weight + length + wsq + lsq + wl, data = datta)

Residuals:

Min 1Q Median 3Q Max

-4.0861 -0.8702 0.0490 0.6898 4.4006

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 79.124 29.121 2.717 0.00784 **

weight 24.336 26.570 0.916 0.36204

length -33.764 19.350 -1.745 0.08427 .

wsq 11.377 8.531 1.334 0.18556

lsq 5.140 3.410 1.507 0.13508

wl -12.442 10.174 -1.223 0.22442

---

Signif. codes: 0 *** 0.001 ** 0.01 * 0.05 . 0.1 1

Residual standard error: 1.577 on 94 degrees of freedom

Multiple R-squared: 0.6689, Adjusted R-squared: 0.6513

F-statistic: 37.98 on 5 and 94 DF, p-value: < 2.2e-16

> betahat = coef(model1); betahat

(Intercept) weight length wsq lsq wl

79.124214 24.336110 -33.763782 11.376646 5.139649 -12.442449

>

> set.seed(3244)

> bstar = NULL # Rows of bstar will be bootstrap vectors of regression coefficients.

> n = length(kpl); B = 1000

> for(draw in 1:B)

+ {

+ # Randomly sample from the rows of kars, with replacement

+ Dstar = datta[sample(1:n,size=n,replace=T),]

+ model = lm(kpl ~ weight + length + wsq + lsq + wl, data=Dstar)

+ bstar = rbind( bstar,coef(model) )

+ } # Next draw

>

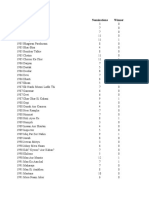

> bstar[1:5,]

(Intercept) weight length wsq lsq wl

[1,] 64.73852 15.322187 -25.549389 14.378022 4.437351 -12.779138

[2,] 270.35328 158.868074 -149.690584 26.298031 21.834487 -47.728186

[3,] 30.97446 -0.156246 -7.492504 10.815623 1.853871 -7.490135

[4,] 102.07061 48.638339 -50.062648 15.305469 7.844481 -19.669466

[5,] 74.31620 -4.632140 -23.726771 -1.497523 2.162598 1.070870

>

S

> Vb = var(bstar) # Approximate asymptotic covariance matrix of betahat

> Vb

(Intercept) weight length wsq lsq wl

(Intercept) 4009.5755 2805.7433 -2432.9272 403.57428 359.95762 -795.78318

weight 2805.7433 2337.0043 -1816.6783 434.68245 288.94772 -724.67663

length -2432.9272 -1816.6783 1511.4557 -292.33275 -229.91599 534.81663

wsq 403.5743 434.6825 -292.3327 117.92217 52.99175 -158.04873

lsq 359.9576 288.9477 -229.9160 52.99175 36.19566 -89.15841

wl -795.7832 -724.6766 534.8166 -158.04873 -89.15841 239.35661

>

> # Test individual coefficients. H0: betaj=0

> se = sqrt(diag(Vb)); Z = betahat/se

> rbind(betahat,se,Z)

(Intercept) weight length wsq lsq wl

betahat 79.124214 24.3361095 -33.7637822 11.376646 5.1396488 -12.4424491

se 63.321209 48.3425725 38.8774443 10.859198 6.0162826 15.4711541

Z 1.249569 0.5034095 -0.8684671 1.047651 0.8542898 -0.8042354

> # Now test the product terms all at once

>

> WaldTest = function(L,thetahat,Vn,h=0) # H0: L theta = h

+ # Note Vn is the asymptotic covariance matrix, so it's the

+ # Consistent estimator divided by n. For true Wald tests

+ # based on numerical MLEs, just use the inverse of the Hessian.

+ {

+ WaldTest = numeric(3)

+ names(WaldTest) = c("W","df","p-value")

+ r = dim(L)[1]

+ W = t(L%*%thetahat-h) %*% solve(L%*%Vn%*%t(L)) %*%

+ (L%*%thetahat-h)

+ W = as.numeric(W)

+ pval = 1-pchisq(W,r)

+ WaldTest[1] = W; WaldTest[2] = r; WaldTest[3] = pval

+ WaldTest

+ } # End function WaldTest

>

> Lprod = rbind( c(0,0,0,1,0,0),

+ c(0,0,0,0,1,0),

+ c(0,0,0,0,0,1) )

> WaldTest(Lprod,betahat,Vb)

W df p-value

9.463393 3.000000 0.023724

>

>

> # Normal test for comparison

> model0 = lm(kpl ~ weight + length) # No product terms

> anova(model0,model1) # p = 0.0133

Analysis of Variance Table

Model 1: kpl ~ weight + length

Model 2: kpl ~ weight + length + wsq + lsq + wl

Res.Df RSS Df Sum of Sq F Pr(>F)

1 97 261.81

2 94 233.72 3 28.095 3.7666 0.0133 *

---

Signif. codes: 0 *** 0.001 ** 0.01 * 0.05 . 0.1 1

Final comment: This is not a typical bootstiap iegiession. It's moie common to bootstiap the

iesiuuals. But that applies to a conuitional mouel in which the values of the explanatoiy vaiiables aie

fixeu constants.

Вам также может понравиться

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (120)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- CC2 CH 6 TVДокумент122 страницыCC2 CH 6 TVngyncloud100% (2)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Quantitative FinanceДокумент271 страницаQuantitative Financemkjh100% (3)

- CCC3 CH 1 SV 1Документ52 страницыCCC3 CH 1 SV 1ngyncloudОценок пока нет

- Pandas Cheat SheetДокумент2 страницыPandas Cheat Sheetngyncloud100% (4)

- Fixed Effects Lecture1 PDFДокумент40 страницFixed Effects Lecture1 PDFAnonymous 0sxQqwAIMBОценок пока нет

- STAT2, 2nd Edition, Ann Cannon: Full Chapter atДокумент5 страницSTAT2, 2nd Edition, Ann Cannon: Full Chapter atRaymond Mulinix96% (24)

- Forecasting PDFДокумент101 страницаForecasting PDFandresacastroОценок пока нет

- Variance Reduction TechniqueДокумент51 страницаVariance Reduction TechniquengyncloudОценок пока нет

- LondonR - BigR Data - Hadley Wickham - 20130716Документ56 страницLondonR - BigR Data - Hadley Wickham - 20130716ngyncloudОценок пока нет

- Google VisДокумент41 страницаGoogle VisAl TysonОценок пока нет

- Journal of Statistic PDFДокумент29 страницJournal of Statistic PDFsumantasorenОценок пока нет

- HW1 Last QuestionДокумент1 страницаHW1 Last QuestionngyncloudОценок пока нет

- Gubellini Fin 651 FL 12Документ10 страницGubellini Fin 651 FL 12ngyncloudОценок пока нет

- Chap 07 SlidesДокумент20 страницChap 07 SlidesngyncloudОценок пока нет

- Notes GeoДокумент4 страницыNotes GeongyncloudОценок пока нет

- Sas Simple Regression 2010Документ8 страницSas Simple Regression 2010ngyncloudОценок пока нет

- Simulation Models S AsДокумент7 страницSimulation Models S AsngyncloudОценок пока нет

- STAT 700 Homework 5Документ10 страницSTAT 700 Homework 5ngyncloudОценок пока нет

- 01 03Документ19 страниц01 03ngyncloudОценок пока нет

- Introduction To The R Language Introduction To The R LanguageДокумент7 страницIntroduction To The R Language Introduction To The R LanguageAugusto FerrariОценок пока нет

- Sas DianotisДокумент11 страницSas DianotisngyncloudОценок пока нет

- 01 01Документ15 страниц01 01ngyncloudОценок пока нет

- Coursework TemplateДокумент2 страницыCoursework TemplatengyncloudОценок пока нет

- Sta248 Lecture9Документ29 страницSta248 Lecture9ngyncloudОценок пока нет

- 03 04Документ9 страниц03 04ngyncloudОценок пока нет

- Anova Kuchroo LeeДокумент26 страницAnova Kuchroo LeengyncloudОценок пока нет

- 01 01Документ15 страниц01 01ngyncloudОценок пока нет

- Coding Standards For R Coding Standards For RДокумент6 страницCoding Standards For R Coding Standards For Ridoku2010Оценок пока нет

- Dummy Var Regression Lecture 2008Документ9 страницDummy Var Regression Lecture 2008ngyncloudОценок пока нет

- Computing New Variables in SASДокумент12 страницComputing New Variables in SASngyncloudОценок пока нет

- 580 Report TemplateДокумент2 страницы580 Report TemplatengyncloudОценок пока нет

- 10 Regression Analysis in SASДокумент12 страниц10 Regression Analysis in SASPekanhp OkОценок пока нет

- Save Columns: Prediction FormulaДокумент3 страницыSave Columns: Prediction FormulangyncloudОценок пока нет

- Peter H. Westfall, Andrea L. Arias - Understanding Regression Analysis - A Conditional Distribution Approach-Chapman and Hall - CRC (2020)Документ515 страницPeter H. Westfall, Andrea L. Arias - Understanding Regression Analysis - A Conditional Distribution Approach-Chapman and Hall - CRC (2020)jrvv2013gmailОценок пока нет

- EconometricsДокумент1 страницаEconometricsJessica SohОценок пока нет

- AssignmentДокумент9 страницAssignmentPrasanth Talluri40% (5)

- Cross-Validation, Regularization, and Principal Components Analysis (PCA)Документ47 страницCross-Validation, Regularization, and Principal Components Analysis (PCA)helloОценок пока нет

- Forecasting Product Sales - Dataset 1Документ9 страницForecasting Product Sales - Dataset 1hiteshОценок пока нет

- Lesson 15Документ43 страницыLesson 15Syaiful AlamОценок пока нет

- Chapter 1Документ31 страницаChapter 1Đồng DươngОценок пока нет

- Mathematics For EconomicsДокумент6 страницMathematics For Economicsdanu.permanaОценок пока нет

- Practice Set #6 Demand Management and ForecastingДокумент8 страницPractice Set #6 Demand Management and ForecastingFung CharlotteОценок пока нет

- Logistic NotaДокумент87 страницLogistic NotaMathsCatch Cg RohainulОценок пока нет

- Econometrics Assig 1Документ13 страницEconometrics Assig 1ahmed0% (1)

- English List 3BДокумент547 страницEnglish List 3BStrauss ReakОценок пока нет

- TH Ex wk2 PDFДокумент2 страницыTH Ex wk2 PDFAndré HilleniusОценок пока нет

- Ner LoveДокумент16 страницNer LoveRoger Asencios NuñezОценок пока нет

- Non Linear Probability ModelsДокумент18 страницNon Linear Probability ModelsSriram ThwarОценок пока нет

- How To Estimate Long-Run Relationships in EconomicsДокумент13 страницHow To Estimate Long-Run Relationships in EconomicsrunawayyyОценок пока нет

- Econometrics Chapter 1Документ10 страницEconometrics Chapter 1Nabila Haliza RahmadiaОценок пока нет

- Logistic Regression (2022)Документ44 страницыLogistic Regression (2022)Nadzmi NadzriОценок пока нет

- CW ReportДокумент15 страницCW ReportJoseph PalinОценок пока нет

- FMOLS ModelДокумент8 страницFMOLS ModelSayed Farrukh AhmedОценок пока нет

- 2k Factorials: Homework Solution (Page 36) : NotesДокумент9 страниц2k Factorials: Homework Solution (Page 36) : Noteslmartinez1010Оценок пока нет

- ARDL GoodSlides PDFДокумент44 страницыARDL GoodSlides PDFmazamniaziОценок пока нет

- SSRN Id2805602 PDFДокумент101 страницаSSRN Id2805602 PDFHari DharmarajanОценок пока нет

- Econ 140 - Spring 2017 - SyllanbusДокумент3 страницыEcon 140 - Spring 2017 - SyllanbusTomás VillenaОценок пока нет

- Forecasting For Economics and Business 1St Edition Gloria Gonzalez Rivera Solutions Manual Full Chapter PDFДокумент38 страницForecasting For Economics and Business 1St Edition Gloria Gonzalez Rivera Solutions Manual Full Chapter PDFmiguelstone5tt0f100% (12)

- Hayashi CH 1 AnswersДокумент4 страницыHayashi CH 1 AnswersLauren spОценок пока нет