
Lecture 01

Introduction to CSE 30321

Suggested reading: None.

My contact information

Michael Niemier (Mike is fine.)

Contact information:

380 Fitzpatrick Hall

mniemier@nd.edu

(574) 631-3858

Office hours:

Mon: noon-1:30

Thurs: 2:30-4:00

About me

Onto the syllabus

Fundamental lesson(s) for this lecture:

1. Why Computer Architecture is important for your major (CS, CPEG, EE, MATH, etc.)

2. What should you be able to do when you finish class?

Why should I care?

If you're interested in SW:

Understanding how processor HW operates, how it's organized, and the interface between HW and a HLL will make you a better programmer.

(See the matrix multiply and the 4- to 8-core examples.)

If you're interested in HW:

Technology is rapidly changing how microprocessor architectures are designed and organized, and has introduced new metrics like performance per Watt.

The material covered in this class is the baseline required both to understand and to help develop the state of the art.

(See the "technology drive to multi-core" material.)

How we process information hasn't changed much since the 1930s and 1940s

A little history... programs

The stored program model has been around for a long time...

[Figure: stored program model, with program memory, data memory, a program counter (index), and processing logic]

Stored Program (continued)

for (i = 0; i < 5; i++) {
    a = (a*b) + c;
}

A hypothetical translation (each instruction is shown first with variable names, then with registers, where r1 = temp, r2 = a, r3 = b, r4 = c):

MULT temp,a,b   # temp ← a*b

MULT r1,r2,r3   # r1 ← r2*r3

ADD a,temp,c    # a ← temp+c

ADD r2,r1,r4    # r2 ← r1+r4

Can define codes for MULT and ADD

Assume MULT = 110011 & ADD = 001110; the stored program becomes:

PC     110011 000001 000010 000011

PC+1   001110 000010 000001 000100
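The encoding above can be sketched in C. This is only an illustration: the opcodes come from the slide, but the four 6-bit fields packed into one 24-bit word, and the helper names `encode` and `field`, are assumptions made for the example.

```c
#include <assert.h>
#include <stdint.h>

/* Opcodes taken from the slide; the 6-bit field layout is an assumption. */
enum { OP_MULT = 0x33 /* 110011 */, OP_ADD = 0x0E /* 001110 */ };

/* Pack one instruction as [opcode | dest | src1 | src2], 6 bits each. */
static uint32_t encode(uint32_t opcode, uint32_t dest, uint32_t src1, uint32_t src2)
{
    assert(opcode < 64 && dest < 64 && src1 < 64 && src2 < 64);
    return (opcode << 18) | (dest << 12) | (src1 << 6) | src2;
}

/* Recover a 6-bit field; index 0 is the opcode, index 3 is src2. */
static uint32_t field(uint32_t word, int index)
{
    assert(index >= 0 && index <= 3);
    return (word >> (18 - 6 * index)) & 0x3F;
}
```

Under these assumptions, `encode(OP_MULT, 1, 2, 3)` gives 0xCC1083, which is the bit pattern 110011 000001 000010 000011 stored at PC, and `encode(OP_ADD, 2, 1, 4)` gives 0x382044, the pattern at PC+1.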

A little history... Zuse's paradigm

Konrad Zuse (1938) Z3 machine

Use binary numbers to encode information

Represent binary digits as on/off state of a current switch (on = 1)

The flow through one switch turns another on or off.

Exponential down-scaling: electromechanical relay, vacuum tubes, solid-state transistors, CMOS IC

Result: exponential transistor density increase

Transistors used to manipulate/store 1s & 0s

[Figure: NMOS transistor, shown as a switch-level representation (0 = 0 V, 1 = 5 V) and as a cross-sectional view (can think of as a capacitor storing charge)]

If we (i) apply a suitable voltage to the gate & (ii) then apply a suitable voltage between source and drain, current will flow.

Remember these!

Moore's Law

"Cramming more components onto integrated circuits."

- G.E. Moore, Electronics 1965

Observation: DRAM transistor density doubles annually

Became known as "Moore's Law"

Actually, a bit off:

Density doubles every 18 months (now more like 24)

(in 1965 they only had 4 data points!)

Corollaries:

Cost per transistor halves annually (18 months)

Power per transistor decreases with scaling

Speed increases with scaling

Of course, it depends on how small you try to make things

(i.e. no exponential lasts forever)

Moore's Law

Feature sizes...

Moore's Law

"Moore's Curve" is a self-fulfilling prophecy

2X every 2 years means ~3% per month

I.e. compounding 3% for 24 months: $1.03^{24} \approx 2$

Can use 3% per month to judge performance features

If a feature adds 9 months to the schedule, it should add at least 30% to performance

($1.03^{9} \approx 1.30$, i.e. 30%)
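The 3%-per-month arithmetic can be checked with a few lines of C. This is a minimal sketch; the function names `compound_growth` and `required_gain` are made up for the example.

```c
#include <assert.h>

/* Growth factor after `months` of compounding at `rate` per month. */
static double compound_growth(double rate, int months)
{
    assert(months >= 0);
    double factor = 1.0;
    for (int m = 0; m < months; m++)
        factor *= 1.0 + rate;
    return factor;
}

/* Minimum fractional performance gain a feature should deliver to justify
   a schedule slip of `months`, under the slide's 3%-per-month rule. */
static double required_gain(int months)
{
    return compound_growth(0.03, months) - 1.0;
}
```

`compound_growth(0.03, 24)` comes out slightly above 2, and `required_gain(9)` slightly above 0.30, matching the two figures on the slide.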

A bit on device performance...

One way to think about switching time:

Charge is carried by electrons

Time for charge to cross channel = length/speed = $L^2/(\mu V_{ds})$

Thus, to make a device faster, we want to either increase $V_{ds}$ or decrease feature sizes (i.e. $L$)

What about power (i.e. heat)?

Dynamic power is: $P_{dyn} = C_L V_{dd}^2 f_{0 \to 1}$

$C_L = (\varepsilon_{ox} W L)/d$

$\varepsilon_{ox}$ = dielectric, $WL$ = parallel plate area, $d$ = distance between gate and substrate

Summary of relationships

(+) If V increases, speed (performance) increases

(-) If V increases, power (heat) increases

(+) If L decreases, speed (performance) increases

(?) If L decreases, power (heat) does what?

P could improve because of lower C

P could increase because >> # of devices switch

P could increase because >> # of devices switch faster!

Need to carefully consider tradeoffs between speed and heat
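The two relationships above can be written as small C helpers to make the scaling visible. This is a sketch only; the function names are invented here, and the arguments are assumed to be in consistent units.

```c
#include <assert.h>

/* Channel transit time t = L^2 / (mu * Vds). */
static double transit_time(double length, double mobility, double vds)
{
    assert(mobility > 0.0 && vds > 0.0);
    return (length * length) / (mobility * vds);
}

/* Dynamic power P = C_L * Vdd^2 * f, where f is the 0-to-1 switching rate. */
static double dynamic_power(double c_load, double vdd, double freq)
{
    assert(c_load >= 0.0 && freq >= 0.0);
    return c_load * vdd * vdd * freq;
}
```

Doubling the voltage halves the transit time but quadruples the dynamic power at a fixed frequency and load, while halving L cuts the transit time by a factor of four. That is the tension the summary lists.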

So, what's happened?

Speed increases with scaling...

2005 projection was for 5.2 GHz, and we didn't make it in production. Further, we're still stuck at 3+ GHz in production.

So, what's happened?

Power decreases with scaling...

So, what's happened?

Speed increases with scaling...

Power decreases with scaling...

Why the clock flattening? POWER!!!!

So, what's happened?

What about scaling...

Critical portion of gate approaching dimensions that are just 1 atom thick!!! (Cross-sectional view)

Materials innovations were and still are needed

(Short term?) solution

Processor complexity is good enough

Transistor sizes can still scale

Slow processors down to manage power

Get performance from... parallelism

Consider:

A chip with 1 processor core that takes 1 ns to perform an operation

A chip with 2 processor cores, each of which takes 2 ns to perform an operation

(Both complete 1 operation per ns on average, provided the work can be split across the two cores.)

Oh, transistors used for memory too

DRAM: 1 transistor (dense) memory; DRAM transistors are deep trenches

SRAM: 6 transistor (fast) memory; SRAM transistors made with logic process

Image credits:

http://www.micromagazine.com/archive/02/06/0206MI22b.jpg

http://www.ieee.org/portal/site/sscs/menuitem.f07ee9e3b2a01d06bb9305765bac26c8/index.jsp?&pName=sscs_level1_article&TheCat=2171&path=sscs/08Winter&le=Sunami.xml

http://web.sfc.keio.ac.jp/~rdv/keio/sfc/teaching/architecture/architecture-2008/6t-SRAM-cell.png

Oh, transistors used for memory too

Why? Put faster memory closer to processing logic...

SRAM: avg. improvement: density +25%, speed +20%

DRAM: avg. improvement: density +60%, speed +4%

DISK: avg. improvement: density +25%, speed +4%

SRAM memory forms an on-chip cache

Problem: programs and data keep getting bigger, and the power problem isn't going away...

Leads to a "memory wall": can't get data to the processor fast enough

Scaling the memory wall

Create a memory hierarchy

| Level | Capacity | Access time | Cost |
|---|---|---|---|
| CPU registers | 100s of bytes | <10s ns | |
| Cache | KBytes | 10-100 ns | 1-0.1 cents/bit |
| Main memory | MBytes | 200-500 ns | $.0001-.00001 cents/bit |
| Disk | GBytes | 10 ms (10,000,000 ns) | $10^{-5}$ - $10^{-6}$ cents/bit |
| Tape | infinite | sec-min | $10^{-8}$ |

Put frequently used instructions and data in fast memory

Have larger memory, storage options for all, less frequently used information

The fallout

Just a few examples that highlight the importance of understanding a computer's architecture, whether you're interested in HW or SW

(many more throughout the semester)

Example 1: the memory wall

(Slide excerpt from CS 3200 Introduction to Scientific Computing. Instructor: Paul Rosen. Topic: Matrix Storage, Bandwidth, and Renumbering. Some slides from Chris Johnson and Mike Steffen. http://www.eng.utah.edu/~cs3200/notes/matrix_storage.pdf)

Scale of Dense Matrices

Example 2D problem

10,000 unknowns

Dense matrix containing floats: $10{,}000^2$ elements × 4 bytes = 400 MB

Same matrix containing doubles: $10{,}000^2$ elements × 8 bytes = 800 MB

And 10,000 unknowns isn't that large!

So, can this amount of data fit in fast, on-chip memory?

| Model | Cores | L1 Cache | L2 Cache | L3 Cache | Release Date | Release Price |
|---|---|---|---|---|---|---|
| i7 970 | 6 | 6 x 32 KB | 6 x 256 KB | 12 MB | 07/19/10 | $885 |
| i7 980 | 6 | 6 x 32 KB | 6 x 256 KB | 12 MB | 06/26/11 | $583 |
| i7 980X | 6 | 6 x 32 KB | 6 x 256 KB | 12 MB | 03/16/10 | $999 |
| i7 990X | 6 | 6 x 32 KB | 6 x 256 KB | 12 MB | 02/14/11 | $999 |

Not even close: the caches add up to roughly 14 MB. Optimizations can help, but still problematic.
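A short C sketch makes the size gap concrete. The cache sizes are read off the table above (six 32 KB L1 caches, six 256 KB L2 caches, one 12 MB L3); the function names are invented for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bytes needed for a dense n x n matrix with elem_size-byte elements. */
static uint64_t dense_matrix_bytes(uint64_t n, size_t elem_size)
{
    assert(elem_size > 0);
    return n * n * (uint64_t)elem_size;
}

/* Total on-chip cache, in bytes, for one of the 6-core parts in the table. */
static uint64_t i7_cache_bytes(void)
{
    const uint64_t KB = 1024, MB = 1024 * 1024;
    return 6 * 32 * KB + 6 * 256 * KB + 12 * MB;
}
```

With 4-byte floats, `dense_matrix_bytes(10000, 4)` is 400,000,000 bytes, more than 27 times the roughly 14 MB returned by `i7_cache_bytes()`.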

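Example 1: the memory wall

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, \quad B = \begin{bmatrix} e & f \\ g & h \end{bmatrix}, \quad \text{then } AB = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} e & f \\ g & h \end{bmatrix} = \begin{bmatrix} ae + bg & af + bh \\ ce + dg & cf + dh \end{bmatrix}$$

Need entire row, column of each per element.

Need entire row, columns to compute new row.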

Assume standard matrix multiply code:

/* x = y * z: each x[i][j] is the dot product of row i of y and column j of z */
for (i = 0; i < XSIZE; i = i + 1) {
    for (j = 0; j < YSIZE; j = j + 1) {
        r = 0;
        for (k = 0; k < XSIZE; k = k + 1) {
            r = r + y[i][k] * z[k][j];
        }
        x[i][j] = r;
    }
}

Ideally, (i) 1 row of 10,000 elements AND (ii) a $10{,}000^2$ matrix is in fast memory

(can apply more efficient storage techniques, but does not eliminate the problem)


Example 1: the memory wall

Changing how you write your code can dramatically improve execution time

Consider:

v1:

for (i = 0; i < XSIZE; i = i + 1) {
    for (j = 0; j < YSIZE; j = j + 1) {
        r = 0;
        for (k = 0; k < XSIZE; k = k + 1) {
            r = r + y[i][k] * z[k][j];
        }
        x[i][j] = r;
    }
}

Vs.

v2 (blocked, with block size B; x must be zeroed beforehand, and min() is assumed to return the smaller of its two arguments):

for (jj = 0; jj < YSIZE; jj = jj + B)
    for (kk = 0; kk < XSIZE; kk = kk + B)
        for (i = 0; i < XSIZE; i = i + 1)
            for (j = jj; j < min(jj + B, YSIZE); j = j + 1) {
                r = 0;
                for (k = kk; k < min(kk + B, XSIZE); k = k + 1)
                    r = r + y[i][k] * z[k][j];
                x[i][j] = x[i][j] + r;   /* accumulate partial sums across kk blocks */
            }

v2 requires 17% MORE instructions than v1, but takes 210% less time to run than v1!

Example 1: the memory wall

You'll learn why a minor code re-write could lead to 2010% performance improvements this semester

(and this is just for 1000 x 1000 matrices!)

Foreshadowing

An understanding of the underlying HW is essential
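For readers who want to try the two loop orders themselves, here is a self-contained C sketch of a straightforward and a blocked multiply. The matrix size `N`, the block size `BLOCK`, and the helper names are assumptions chosen to keep the example small; nothing here reproduces the timing figures quoted on the slides.

```c
#include <assert.h>
#include <string.h>

#define N 8      /* small illustrative matrix size (assumption) */
#define BLOCK 4  /* block size B (assumption) */

static int min_int(int a, int b) { return a < b ? a : b; }

/* Straightforward triple loop: x = y * z. */
static void matmul_naive(double x[N][N], double y[N][N], double z[N][N])
{
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            double r = 0.0;
            for (int k = 0; k < N; k++)
                r += y[i][k] * z[k][j];
            x[i][j] = r;
        }
}

/* Blocked loops: each (jj, kk) pass reuses one BLOCK x BLOCK tile of z. */
static void matmul_blocked(double x[N][N], double y[N][N], double z[N][N])
{
    assert(BLOCK > 0);
    memset(x, 0, sizeof(double) * N * N);  /* partial sums accumulate into x */
    for (int jj = 0; jj < N; jj += BLOCK)
        for (int kk = 0; kk < N; kk += BLOCK)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + BLOCK, N); j++) {
                    double r = 0.0;
                    for (int k = kk; k < min_int(kk + BLOCK, N); k++)
                        r += y[i][k] * z[k][j];
                    x[i][j] += r;
                }
}
```

Both functions compute the same product; only the order in which elements of `y` and `z` are touched differs. To see a cache effect, `N` would need to be large enough that the matrices no longer fit in cache, and each version would have to be timed.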

Example 2: multi-core extensions

Quad core chips... 7, 8, and 9 core chips... When will it stop?

Practical problems must be addressed!

Multi-core only as good as its algorithms

Performance improvement defined by Amdahl's Law:

$$\text{Speedup} = \frac{1}{\left[1 - \text{Fraction}_{\text{parallelizable}}\right] + \dfrac{\text{Fraction}_{\text{parallelizable}}}{N}}$$

If only 90% of a problem can be run in parallel, a 32-core chip gives less than an 8X speedup
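Amdahl's Law is easy to evaluate directly. The following C sketch (the function name `amdahl_speedup` is made up here) reproduces the 32-core figure.

```c
#include <assert.h>

/* Amdahl's Law: speedup on n cores when `fraction` of the work parallelizes. */
static double amdahl_speedup(double fraction, int n)
{
    assert(fraction >= 0.0 && fraction <= 1.0 && n >= 1);
    return 1.0 / ((1.0 - fraction) + fraction / n);
}
```

`amdahl_speedup(0.9, 32)` is about 7.8. However many cores are added, the speedup for a 90% parallel problem can never exceed 1/(1 - 0.9) = 10.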

Other issues

You may write nice parallel code, but implementation issues must also be considered

Example:

Assume N cores solve a problem in parallel

At times, N cores need to communicate with one another to continue computation

Overhead is NOT parallelizable... and can be significant!

$$\text{Speedup} = \frac{1}{\left[1 - \text{Fraction}_{\text{parallelizable}}\right] + \dfrac{\text{Fraction}_{\text{parallelizable}}}{N}}$$
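One simple way to see the effect of communication is to add a serial overhead term to the denominator of the speedup formula. The model below is a hypothetical illustration, not something the slides define: `overhead` is assumed to be extra non-parallelizable time, expressed as a fraction of the original single-core run time.

```c
#include <assert.h>

/* Hypothetical model: Amdahl's Law plus a serial communication term.
   `overhead` is extra non-parallelizable time as a fraction of the
   original single-core run time (an assumption for illustration). */
static double speedup_with_overhead(double fraction, int n, double overhead)
{
    assert(fraction >= 0.0 && fraction <= 1.0 && n >= 1 && overhead >= 0.0);
    return 1.0 / ((1.0 - fraction) + fraction / n + overhead);
}
```

Under this assumed model, a 90% parallel problem on 32 cores drops from a speedup of about 7.8 to about 5.6 once communication costs 5% of the original run time.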

Other issues

What if you write well-optimized, debugged code for a 4-core processor which is then replaced with an 8-core processor?

Does code need to be re-written, re-optimized?

How do you adapt?

Example #3: technology's changing

An Energy-Efficient Heterogeneous CMP based on Hybrid TFET-CMOS Cores

Vinay Saripalli, Asit K. Mishra, Suman Datta and Vijaykrishnan Narayanan

The Pennsylvania State University, University Park, PA 16802, USA

{vxs924,amishra,vijay}@cse.psu.edu, sdatta@engr.psu.edu

ABSTRACT

The steep sub-threshold characteristics of inter-band tunneling FETs (TFETs) make an attractive choice for low voltage operations. In this work, we propose a hybrid TFET-CMOS chip multiprocessor (CMP) that uses CMOS cores for higher voltages and TFETs for lower voltages by exploiting differences in application characteristics. Building from the device characterization to design and simulation of TFET based circuits, our work culminates with a workload evaluation of various single/multi-threaded applications. Our evaluation shows the promise of a new dimension to heterogeneous CMPs to achieve significant energy efficiencies (up to 50% energy benefit and 25% ED benefit with single-threaded applications, and 55% ED benefit with multi-threaded applications).

Categories and Subject Descriptors: C.1.3 [Processor Architectures]: Other Architecture Styles - Heterogeneous (hybrid) systems; B.7.1 [Integrated Circuits]: Types and Design Styles - Advanced technologies, Memory technologies

General Terms: Design, Experimentation, Performance.

Keywords: Tunnel FETs, Heterogeneous Multi-Core.

1. INTRODUCTION

Power consumption is a critical constraint hampering progress towards more sophisticated and powerful processors. A key challenge to reducing power consumption has been in reducing the supply voltage due to concerns of either reducing performance (due to reduced drive currents) or increasing leakage (when reducing threshold voltage simultaneously). The sub-threshold slope of the transistor is a key factor in influencing the leakage power consumption. With a steep sub-threshold device it is possible to obtain high drive currents (IOn) at lower voltages without increasing the off state current (IOff). In this work, we propose the use of Inter-band Tunneling Field Effect Transistors (TFETs) [11] that exhibit sub-threshold slopes steeper than the theoretical limit of 60 mV/decade found in CMOS devices. Consequently, TFETs can provide higher performance than CMOS based designs at lower voltages. However, at higher voltages, the IOn of MOSFETs are much larger than can be accomplished by the tunneling mechanism employed in existing TFET devices. This trade-off enables architectural innovations through use of heterogeneous systems that employ both TFET and CMOS based circuit elements.

Heterogeneous chip-multiprocessors that incorporate cores with different frequencies, micro-architectural resources, instruction-set architectures [6, 7] are already emerging. In all these works, the energy-performance optimizations are performed by appropriately mapping the application to a preferred core. In this work, we add a new technology dimensionality to this heterogeneity by using a mix of TFET and CMOS based cores. The feasibility of TFET cores is analyzed by showing design and circuit simulations of logic and memory components that utilize TFET based device structure characterizations.

Dynamic voltage and frequency scaling (DVFS) is widely used to reduce power consumption. Our heterogeneous architecture enables to extend the range of operating voltages possible by supporting TFET cores that are efficient at low voltages and CMOS cores that are efficient at high voltages. For an application that is constrained by factors such as I/O or memory latencies, low voltage operations is possible, sacrificing little performance. In such cases a TFET core may be preferable. However, for compute intensive performance critical applications, MOSFETs operating at higher voltages are necessary. Our study using two DVFS schemes show that the choice of TFET or CMOS for executing an application varies based on the intrinsic characteristics of the applications. In a multi-programmed environment which is common on platforms ranging from cell-phones to high-performance processors, our heterogeneous architectures can improve energy efficiencies by matching the varied characteristics of different applications.

The emerging multi-threaded workloads provide an additional dimension to this TFET-CMOS choice. Multi-threaded applications with good performance scalability can achieve much better energy efficiencies utilizing multiple cores operating at lower voltages. While energy efficiencies through parallelism is in itself not new, our choice of TFET vs. CMOS for the application will change based on the actual voltage at which the cores operate and the degree of parallelism (number of cores). Our explorations shows TFET based cores to become more preferred in multi-threaded applications from both energy and performance perspective.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

DAC 2011, June 5-10, 2011, San Diego, California, USA.

Copyright 2011 ACM ACM 978-1-4503-0636-2/11/06 ...$10.00.

[Figure 4 plots: IOn (uA/um) versus IOn/IOff on log axes, panel (A) VCC 0.8V and panel (B) VCC 0.3V, with the preferred corner marked. Curves: bulk-planar CMOS LG 22nm; In0.53Ga0.47As double-gate homojunction TFET LG 22nm; GaAs0.1Sb0.9-InAs double-gate heterojunction TFET LG 22nm]

Figure 4: Comparison of IOn versus IOn/IOff ratio for different operating points on the Id-Vg for (A) a VCC window of 0.8V and (B) a VCC window of 0.3V.

of $3 \times 10^{3}$ when operating at its nominal VCC of 0.8V. We also use a GaAs0.1Sb0.9/InAs HTFET which provides an IOn of 100 uA/um and an IOn/IOff of $2 \times 10^{5}$ at VCC 0.3V. In order to build logic gates, a pull-up device is also required. A p-HTFET can be constructed using a hetero-junction with InAs as the source, as shown in Fig 5(B). When a positive gate and drain voltage are applied to the n-HTFET (Fig 5(A)), electrons tunnel from the GaAs0.1Sb0.9 source into the InAs channel (Fig 5(B)). In contrast, when a negative gate and drain voltage is applied to the p-HTFET (Fig 5(C)), holes tunnel into the GaAs0.1Sb0.9 channel from the InAs source. By using the modelling techniques described in Section 2.1, we obtain the energy-delay characteristics of HTFET logic gates. The TCAD parameters used for simulating the HTFETs are shown in Table 1. The energy-delay performance curve of a HTFET 40-stage ring-oscillator, when compared to that of a CMOS ring-oscillator in Fig 6(A), shows a cross-over in the energy-delay characteristics. The CMOS ring-oscillator has a better energy-delay compared to the HTFET ring-oscillator at VCC > 0.65V and the HTFET ring-oscillator has a better energy-delay trade-off at VCC < 0.55V. Other logic gates, such as Or, Not and Xor (which are not shown here) also show a similar cross-over. This trend is consistent with the discussion in Section 2.2 where it has been illustrated that CMOS devices provide better operation at high VCC and HTFETs provide preferred operation at low VCC. Fig 6(B) shows the energy-delay performance of a 32-bit prefix-tree based Han-Carlson Adder has a similar crossover behavior for CMOS and HTFETs.
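The crossover described in the excerpt can be captured as a small decision rule. The sketch below is only an illustration: the 0.65V and 0.55V thresholds are the ring-oscillator figures quoted above, while treating them as a general selection rule, and the type and function names, are assumptions.

```c
#include <assert.h>

typedef enum { DEVICE_HTFET, DEVICE_EITHER, DEVICE_CMOS } device_choice;

/* Thresholds are the ring-oscillator crossover voltages quoted above;
   using them as a general selection rule is an assumption. */
static device_choice better_energy_delay(double vcc)
{
    assert(vcc > 0.0);
    if (vcc > 0.65)
        return DEVICE_CMOS;
    if (vcc < 0.55)
        return DEVICE_HTFET;
    return DEVICE_EITHER;  /* the excerpt does not rank the two in between */
}
```

A scheduler for a heterogeneous chip could use a rule of this shape to decide which kind of core runs a task at a given operating voltage.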

3.2 Tunnel FET Pass-Transistor Logic

Due to the asymmetric source-drain architecture (Fig 7(A-B)), HTFETs cannot function as bi-directional pass transistors. Though this may seem to limit the utility of TFETs in SRAM-cell design, several SRAM designs have been proposed to circumvent this problem [5, 13]. It is also important to consider a solution for logic, because of the ubiquitous usage of pass-transistors in logic design. We propose using a pass-transistor stack made of N-HTFETs, with a P-HTFET for precharging the output (Fig 7C). All the N-HTFET transistors in the pass-transistor stack will be oriented toward the output which allows them to drive the On current when the input signals are enabled. During the pre-charge phase, the P-HTFET precharges the output to VCC, and during the evaluate phase, the N-HTFET stack evaluates the output based on the inputs to the pass-transistor stack.

[Figure 5 diagrams: device cross-sections (A, C) and operation diagrams (B, D) for the double-gate GaAs0.1Sb0.9/InAs HTFETs, labelled with source, intrinsic channel, and drain materials, doping levels, 2.5 nm HiK gate dielectric, and region lengths of 40 nm, 22 nm, and 40 nm; electron and hole tunneling are marked]

Figure 5: (A-B) Double-Gate H-NTFET device structure and operation (C-D) Double-Gate H-PTFET device structure and operation.

Table 1: HTFET Simulation parameters.

| Parameter | GaAs0.1Sb0.9 | InAs |
|---|---|---|
| Band-Gap, EG [eV] (Including Quantization) | 0.84 | 0.53 |
| Electron Affinity, $\chi_0$ [eV] | 3.96 | 4.78 |
| Tunneling Parameter, APath | $1.58 \times 10^{20}$ | $1.48 \times 10^{20}$ |
| Tunneling Parameter, BPath | $6.45 \times 10^{6}$ | $2.4 \times 10^{6}$ |
| Tunneling Parameter, RPath | 1.12 | 1.13 |
| Electron DoS, NC [cm$^{-3}$] | $4.59 \times 10^{19}$ | $8.75 \times 10^{16}$ |
| Hole DoS, NV [cm$^{-3}$] | $6.84 \times 10^{18}$ | $6.69 \times 10^{18}$ |

The n-HTFET and p-HTFET are simulated separately using the Dynamic Non-local Tunneling model implemented in TCAD Sentaurus (Ver. C-2009-SP2). The work function used for n-HTFET is 4.9 eV and for p-HTFET is 4.66 eV. The temperature is set to 300 K and High-Field Velocity-Saturation is enabled.

[Figure 6 plots: (A) energy [fJ] versus delay [ns] for the CMOS and HTFET ring oscillators, with points labelled by VCC from 0.2V to 0.8V and the leakage energy (ELeakage) marked; (B) energy [fJ] versus frequency [MHz] for the 32-bit CMOS and 32-bit HTFET adders at activity factors 1 and 0.1]

Figure 6: Energy-Delay performance comparison for (A) a CMOS and a HTFET ring-oscillator and (B) a CMOS 32-bit and a TFET 32-bit Adder.

3.3 Tunnel FET SRAM Cache

In order to model TFET-based processor architectures, it is important to consider the characteristics of the L1 cache, which is an integral on-chip component of a processor. We use the analytical method implemented in the cache analysis tool CACTI [16], in order to evaluate the energy-delay performance of a TFET-based cache. As discussed in Section 3.2, in order to overcome the problem of asymmetric conduction in TFETs, a precharge-based pass-transistor mux (Fig 7C) is implemented in CACTI, and we also assume a 6-T SRAM Cell with virtual-ground [13].

To compute the gate delay, CACTI uses the Horowitz approximation [3] given by:

$$\text{Delay} = \tau_f \sqrt{(\log(V_s))^2 + 2\,(\tau_{in}/\tau_f)\, b\, (1 - V_s)}$$

New transistors can affect trends discussed earlier.

HW that adds 2 numbers together can be more energy efficient with lower V for the same delay

But if high performance is really needed, older devices are better

Future outlook?

Heterogeneous cores? If a task is critical, use CMOS; if not, use TFET

What about things like barrier synchronization?

You need to know what HW you have!

Let's discuss some course goals

(i.e. what you're going to learn)

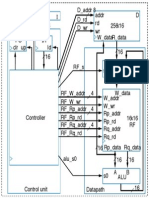

Goal #1

At the end of the semester, you should be able to...

...describe the fundamental components required in a single core of a modern microprocessor

(Also, explain how they interact with each other, with main memory, and with external storage media...)

Example: How do on-chip memory, processor logic, main memory, and disk interact?

Goal #2: (example: which plane is best?)

| Plane | People | Range (miles) | Speed (mph) | Avg. Cost (millions) | Passenger*Miles / $ (full range) | Passenger*Miles / $ (1500 mile trip) |
|---|---|---|---|---|---|---|
| 737-800 | 162 | 3,060 | 530 | 63.5 | 7806 | 3826 |
| 747-8I | 467 | 8000 | 633 | 257.5 | 14508 | 2720 |
| 777-300 | 368 | 5995 | 622 | 222 | 9945 | 2486 |
| 787-8 | 230 | 8000 | 630 | 153 | 12026 | 2254 |

Which is best? 737, 747, 777, or 787?

But what about fuel efficiency? Load time?

In architecture, lots of times performance (or what's best) depends on the application
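The last two columns of the table appear to be passengers times miles flown, divided by cost in millions of dollars. A small C sketch of that metric follows. The formula is inferred from the table values rather than stated on the slide, and the function name is invented.

```c
#include <assert.h>

/* Passenger-miles per million dollars of aircraft cost for one trip.
   The trip length is capped at the aircraft's range. */
static double passenger_miles_per_million(int passengers, double range_miles,
                                          double trip_miles, double cost_millions)
{
    assert(cost_millions > 0.0);
    double miles = trip_miles < range_miles ? trip_miles : range_miles;
    return passengers * miles / cost_millions;
}
```

With the 737-800 and 747-8I rows as input, this reproduces the tabulated values after truncation: about 7806 and 14508 at full range, and about 3826 and 2720 for a 1500 mile trip. The ranking flips between the two columns, which is the point of the slide: the best choice depends on the workload.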

Goal #2

At the end of the semester, you should be able to...

...compare and contrast different computer architectures to determine which one performs better...

Example: If you want to do X, which processor is best?

Goal #3: (motivation)

Which plane would you rather fly to South Bend?

But this is the one that you (uncomfortably) do fly

Photo Credits:

http://www.airliners.net/aircraft-data/stats.main?id=196

http://images.businessweek.com/ss/07/06/0615_boeing787/image/787interior.jpg

Goal #3

At the end of the semester, you should be able to...

...apply knowledge about a processor's datapath, different memory hierarchies, performance metrics, etc. to design a microprocessor that (a) meets a target set of performance goals and (b) is realistically implementable

Example:


Climate Agency Awards $350 Million For Supercomputers

The National Oceanic and Atmospheric Administration will pay CSC and Cray to plan, build, and

operate high-performance computers for climate prediction research.

By J. Nicholas Hoover

InformationWeek

May 24, 2010 04:11 PM

The National Oceanic and Atmospheric Administration plans to spend as

much as $354 million on two new supercomputing contracts aimed at

improving climate forecast models.

Supercomputing plays an important role at NOAA, as supercomputers

power its dozens of models and hundreds of variants for weather,

climate, and ecological predictions. However, a recently released

59-page, multi-year strategic plan for its high-performance computing

found NOAA needs "new, more flexible" supercomputing power to

address the needs of researchers and members of the public who

access, leverage, and depend on those models.

In terms of a research and

development computer, NOAA found it requires one the power of which

will be ultimately measured in petaflops, which would make the future

machine one of the world's most powerful supercomputers.

The new supercomputer would support NOAA's environmental

modeling program by providing a test-bed for the agency to help

improve the accuracy, geographic reach, and time length of NOAA's

climate models and weather forecasting capabilities.

The more expensive of the two contracts, which goes to CSC, will cost

NOAA $317 million over nine years if the agency exercises all of the

contract options, including $49.3 million in funding over the next year

from the Obama administration's economic stimulus package, CSC

announced earlier this month.

CSC's contract includes requirements analysis, studies, benchmarking,

architecture work, and actual procurement of the new system, as well

as ongoing operations and support when the system is up and running.

In addition, CSC will do some application and modeling support.

One of the goals is to build the system in such a way as to integrate

formerly separate systems and to more easily transfer the research and

development work into the operational forecasting systems, Mina

Samii, VP and general manager of CSC's business services division's

civil and health services group, said in an interview.

This isn't the first major government supercomputing contract for CSC. The company has a dedicated

high-performance computing group and contracts with NASA Goddard Space Flight Center's computational

sciences center as well as the NASA Ames Research Center.

Cray announced last Thursday that it will lead the other contract, which stems from a partnership between

NOAA and the Oak Ridge National Laboratory. The $47 million Cray contract is also for a research

supercomputer, the forthcoming Climate Modeling and Research System, and includes a Cray XT6

supercomputer and a future upgrade to Cray's next-generation system, codenamed Baker.

"The deployment of this system will allow NOAA scientists and their collaborators to study systems of greater

complexity and at higher resolution, and in the process will hopefully improve the fidelity of global climate

modeling simulations," James Hack, director of climate research at Oak Ridge and of the National Center for

Computational Sciences, said in a statement.

While the two contracts are both related to climate research, it's unclear exactly how the two are related to

one another. NOAA did not respond to requests for comment.


http://www.informationweek.com/news/government/enterprise-apps/showArticle.jhtml?articleID=225000152

Image Gallery: Government's 10

Most Powerful Supercomputers

(click for larger image and for full

photo gallery)

Whitepapers

The Know-IT-Alls Guide to

eDiscovery (ebook)

Enterprise Content

Management: From Strategy To

Solution

Videos

More Government Insights

Apple's iPhone gets a battery boost

with the Mophie Juice Pack.

InformationWeek's Alex Wolfe

demos the hot new accessory.

E-mail | Print | | |

Climate Agency Awards $350 Million For Supercomputers

The National Oceanic and Atmospheric Administration will pay CSC and Cray to plan, build, and

operate high-performance computers for climate prediction research.

By J. Nicholas Hoover

InformationWeek

May 24, 2010 04:11 PM

The National Oceanic and Atmospheric Administration plans to spend as

much as $354 million on two new supercomputing contracts aimed at

improving climate forecast models.

Supercomputing plays an important role at NOAA, as supercomputers

power its dozens of models and hundreds of variants for weather,

climate, and ecological predictions. However, a recently released

59-page, multi-year strategic plan for its high-performance computing

found NOAA needs "new, more flexible" supercomputing power to

address the needs of researchers and members of the public who

access, leverage, and depend on those models.

In terms of a research and

development computer, NOAA found it requires one the power of which

will be ultimately measured in petaflops, which would make the future

machine one of the world's most powerful supercomputers.

The new supercomputer would support NOAA's environmental

modeling program by providing a test-bed for the agency to help

improve the accuracy, geographic reach, and time length of NOAA's

climate models and weather forecasting capabilities.

The more expensive of the two contracts, which goes to CSC, will cost

NOAA $317 million over nine years if the agency exercises all of the

contract options, including $49.3 million in funding over the next year

from the Obama administration's economic stimulus package, CSC

announced earlier this month.

CSC's contract includes requirements analysis, studies, benchmarking,

architecture work, and actual procurement of the new system, as well

as ongoing operations and support when the system is up and running.

In addition, CSC will do some application and modeling support.

One of the goals is to build the system in such a way as to integrate

formerly separate systems and to more easily transfer the research and

development work into the operational forecasting systems, Mina

Samii, VP and general manager of CSC's business services division's

civil and health services group, said in an interview.

This isn't the first major government supercomputing contract for CSC. The company has a dedicated

high-performance computing group and contracts with NASA Goddard Space Flight Center's computational

sciences center as well as the NASA Ames Research Center.

Cray announced last Thursday that it will lead the other contract, which stems from a partnership between

NOAA and the Oak Ridge National Laboratory. The $47 million Cray contract is also for a research

supercomputer, the forthcoming Climate Modeling and Research System, and includes a Cray XT6

supercomputer and a future upgrade to Cray's next-generation system, codenamed Baker.

"The deployment of this system will allow NOAA scientists and their collaborators to study systems of greater

complexity and at higher resolution, and in the process will hopefully improve the fidelity of global climate

modeling simulations," James Hack, director of climate research at Oak Ridge and of the National Center for

Computational Sciences, said in a statement.

While the two contracts are both related to climate research, it's unclear exactly how the two are related to

one another. NOAA did not respond to requests for comment.


No matter how fast or how massive the parallel processing, a computer is only as good

as the model it is asked to compute. Garbage in, garbage out is still very relevant here,

especially regarding something as complex as the Earth's climate. In fact, some

mathematicians say the problem is too complex for humans to correctly model using the

mathematics we have at our disposal.

I wish them luck in using these machines to get closer to the truth, but I caution them to

moonwatcher

commented on May 25, 2010 11:41:58 AM

Climate Agency Awards $350 Million For Supercomputers -- S... http://www.informationweek.com/news/government/enterprise-...

2 of 4 5/31/10 3:25 PM

The Lowest Power MP3 Playback

<6 MHz MP3 Decode

Tensilica optimized the MP3 decoder for its HiFi DSPs. This MP3 decoder now runs at the lowest power and is the most

efficient in the industry, requiring just 5.7 MHz when running at 128 Kbps, 44.1 KHz and dissipating 0.45 mW in TSMC's

65nm LP process (including memories). This makes Tensilica's Xtensa HiFi 2 Audio Engine ideal for adding MP3

playback to cellular phones, where current carrier requirements are for 100 hours of playback time on a battery charge,

and increasing to 200 hours in the near future.

This 5.7 MHz requirement includes the entire MP3 decode functionality, including MPEG container parsing and variable

length decoding (VLD, also known as Huffman decoding). Some competing offerings are merely accelerator blocks that

exclude portions of the complex control code in MP3 such as VLD, and thus rely on a processor to perform VLD

decoding. Tensilica's 5.7 MHz figure is all inclusive.

And Tensilica's MP3 decoder is 100% implemented in C. No special assembly level coding is required.


http://www.tensilica.com/products/audio/low-power-mp3-playback.htm

40

Goal #4

At the end of the semester, you should be able to...

...understand how code written in a high-level language

(e.g. C) is eventually executed on-chip...

Example

Both programs could be run on the same processor...

How does this happen?

In Java:

public static void insertionSort(int[] list, int length) {
    int firstOutOfOrder, location, temp;

    // Everything to the left of firstOutOfOrder is already sorted.
    for (firstOutOfOrder = 1; firstOutOfOrder < length; firstOutOfOrder++) {
        if (list[firstOutOfOrder] < list[firstOutOfOrder - 1]) {
            temp = list[firstOutOfOrder];
            location = firstOutOfOrder;

            // Shift larger elements one slot right to open a gap for temp.
            do {
                list[location] = list[location - 1];
                location--;
            } while (location > 0 && list[location - 1] > temp);

            list[location] = temp;
        }
    }
}

In C:

Goal #5: (motivation, part 1)

41

Image source: www.cs.unc.edu/~pmerrell/comp575/Z-Buffering.ppt

For this image, and this perspective, which

pixels should be rendered?

Solution provided by z-buffering algorithm

Depth of each object in 3D scene used to paint 2D image

Algorithm steps through list of polygons

# of polygons tends to be >> (for more detailed scene)

# of pixels/polygon tends to be small

Goal #5: (motivation, part 2)

42

Image source: http://en.wikipedia.org/wiki/Z-buffering

Often a dynamic data structure

(e.g. linked list)

Goal #5: (motivation, part 3)

43

Level          Capacity     Access time              Cost
CPU Registers  100s Bytes   <10s ns
Cache          K Bytes      10-100 ns                1-0.1 cents/bit
Main Memory    M Bytes      200 ns - 500 ns          $.0001-.00001 cents/bit
Disk           G Bytes      10 ms (10,000,000 ns)    10^-5 - 10^-6 cents/bit
Tape           infinite     sec-min                  10^-8

Data structure may not fit in fast memory

44

Goal #5

At the end of the semester, you should be able to...

...use knowledge about a microprocessor's underlying

hardware (or architecture) to write more efficient

software

Example

Write this code so it always fits in fast, on-chip memory

45

Goal #6

At the end of the semester, you should be able to...

...explain and articulate why modern microprocessors

now have more than 1 core and how SW must adapt to

accommodate the now prevalent multi-core approach to

computing

Why?

For 8, 16 core chips to be practical, we have to be able to

use them

46

#1: Processor components

vs.

#2: Processor comparison

University of Notre Dame

CSE 30321 - Lecture 01 - Introduction to CSE 30321 44

vs.

for (i = 0; i < 5; i++) {
    a = (a*b) + c;
}

MULT r1,r2,r3 # r1 ← r2*r3

ADD r2,r1,r4 # r2 ← r1+r4

110011 000001 000010 000011

001110 000010 000001 000100

or

#3: HLL code translation

#4:

The right HW for the

right application

#5:

Writing more

efficient code

#6

Multicore processors

and programming

CSE 30321
