Chapter 12 Testing For Autocorrelation (EC220)


Christopher Dougherty

EC220 - Introduction to econometrics

(chapter 12)

Slideshow: testing for autocorrelation

Original citation:

Dougherty, C. (2012) EC220 - Introduction to econometrics (chapter 12). [Teaching Resource]

© 2012 The Author

This version available at: http://learningresources.lse.ac.uk/138/

Available in LSE Learning Resources Online: May 2012

This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 License. This license allows the user to remix, tweak, and build upon the work even for commercial purposes, as long as the user credits the author and licenses their new creations under the identical terms.

http://creativecommons.org/licenses/by-sa/3.0/

http://learningresources.lse.ac.uk/

TESTS FOR AUTOCORRELATION

Simple autoregression of the residuals

We will initially confine the discussion of the tests for autocorrelation to its most common form, the AR(1) process. If the disturbance term follows the AR(1) process, it is reasonable to hypothesize that, as an approximation, the residuals will conform to a similar process:

$$u_t = \rho u_{t-1} + \varepsilon_t$$

$$e_t = \rho e_{t-1} + \text{error}$$


After all, provided that the conditions for the consistency of the OLS estimators are

satisfied, as the sample size becomes large, the regression parameters will approach their

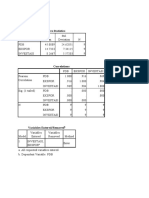

true values, the location of the regression line will converge on the true relationship, and

the residuals will coincide with the values of the disturbance term.


Hence a regression of $e_t$ on $e_{t-1}$ is sufficient, at least in large samples. Of course, there is the issue that, in this regression, $e_{t-1}$ is a lagged dependent variable, but that does not matter in large samples.
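The following is a minimal Python sketch that uses only the standard library. The no-intercept regression of $e_t$ on $e_{t-1}$ has the closed-form slope $\hat{\rho} = \sum e_t e_{t-1} / \sum e_{t-1}^2$. The helper names `simulate_ar1` and `rho_hat_simple` are hypothetical. The example applies the estimator to a simulated AR(1) series, which corresponds to the large-sample case in which the residuals coincide with the disturbances.

```python
import math
import random


def simulate_ar1(T, rho, sigma=1.0, seed=None):
    """Generate u_t = rho * u_{t-1} + eps_t with eps_t ~ N(0, sigma^2).

    u_1 is drawn from the stationary distribution N(0, sigma^2 / (1 - rho^2)),
    so |rho| < 1 is required.
    """
    rng = random.Random(seed)
    u = [rng.gauss(0.0, sigma / math.sqrt(1.0 - rho ** 2))]
    for _ in range(T - 1):
        u.append(rho * u[-1] + rng.gauss(0.0, sigma))
    return u


def rho_hat_simple(e):
    """Slope from the OLS regression of e_t on e_{t-1}, with no intercept."""
    current = e[1:]   # e_t for t = 2, ..., T
    lagged = e[:-1]   # e_{t-1} for t = 2, ..., T
    num = sum(a * b for a, b in zip(current, lagged))
    den = sum(b * b for b in lagged)
    return num / den


# If the residuals coincided with the disturbances, the estimate should be close to rho
u = simulate_ar1(T=10_000, rho=0.7, seed=1)
print(round(rho_hat_simple(u), 2))
```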


This is illustrated with the simulation shown in the figure. The true model is as shown, with $u_t$ being generated as an AR(1) process with $\rho = 0.7$.

$$Y_t = 10 + 2.0t + u_t, \qquad u_t = 0.7u_{t-1} + \varepsilon_t, \qquad \hat{e}_t = \hat{\rho} e_{t-1}$$

[Figure: distributions of the estimator of $\rho$ for T = 25, 50, 100 and 200, plotted over the range −0.5 to 1, with the true value 0.7 marked]


The values of the parameters in the model for $Y_t$ make no difference to the distributions of the estimator of $\rho$.


As can be seen, when $e_t$ is regressed on $e_{t-1}$, the distribution of the estimator of $\rho$ is left skewed and heavily biased downwards for T = 25. The mean of the distribution is 0.47.

T      Mean of the estimator of $\rho$
25     0.47
50     0.59
100    0.65
200    0.68


However, as the sample size increases, the downward bias diminishes, and it is clear that the estimator is converging on 0.7 as the sample becomes large. Inference in finite samples will be approximate, given the autoregressive nature of the regression.
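Here is a rough Monte Carlo sketch of this experiment using NumPy. It assumes standard normal $\varepsilon_t$ and a stationary starting value for $u_t$. Neither of these is specified in the slides, so the means it produces should follow the same pattern as the table above (rising towards 0.7 as T grows) rather than match it digit for digit. `mean_rho_hat` is a hypothetical name.

```python
import numpy as np


def mean_rho_hat(T, rho=0.7, reps=1000, seed=0):
    """Average estimate of rho from regressing e_t on e_{t-1} (no intercept),
    where e_t are OLS residuals from fitting Y_t = b1 + b2*t to data generated by
    Y_t = 10 + 2.0*t + u_t,  u_t = rho*u_{t-1} + eps_t,  eps_t ~ N(0, 1).
    """
    rng = np.random.default_rng(seed)
    t = np.arange(1, T + 1, dtype=float)
    X = np.column_stack([np.ones(T), t])           # intercept and time trend
    estimates = np.empty(reps)
    for r in range(reps):
        eps = rng.standard_normal(T)
        u = np.empty(T)
        u[0] = eps[0] / np.sqrt(1.0 - rho ** 2)    # stationary starting value
        for i in range(1, T):
            u[i] = rho * u[i - 1] + eps[i]
        y = 10.0 + 2.0 * t + u                     # true model from the slides
        b, *_ = np.linalg.lstsq(X, y, rcond=None)  # OLS fit of the original model
        e = y - X @ b                              # residuals
        estimates[r] = (e[1:] @ e[:-1]) / (e[:-1] @ e[:-1])
    return estimates.mean()


for T in (25, 50, 100, 200):
    print(T, round(mean_rho_hat(T), 2))
```

The estimator of $\rho$ is invariant to the scale of the residuals, and residuals from a regression on an intercept and a trend do not depend on the values of the regression parameters. Changing 10, 2.0 or the variance of $\varepsilon_t$ should therefore leave the results unchanged, in line with the remark that the parameter values make no difference.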

Breusch–Godfrey test

The simple estimator of the autocorrelation coefficient depends on Assumption C.7 part (2) being satisfied when the original model (the model for $Y_t$) is fitted. Generally, one might expect this not to be the case.

$$Y_t = \beta_1 + \sum_{j=2}^{k} \beta_j X_{jt} + u_t$$


If the original model contains a lagged dependent variable as a regressor, or violates Assumption C.7 part (2) in any other way, the estimates of the parameters will be inconsistent if the disturbance term is subject to autocorrelation.


$$e_t = \gamma_1 + \sum_{j=2}^{k} \gamma_j X_{jt} + \rho e_{t-1}$$


As a consequence, a simple regression of $e_t$ on $e_{t-1}$ will produce an inconsistent estimate of $\rho$. The solution is to include all of the explanatory variables in the original model in the residuals autoregression.


If the original model is the first equation where, say, one of the X variables is $Y_{t-1}$, then the residuals regression would be the second equation.


The idea is that, by including the X variables, one is controlling for the effects of any endogeneity on the residuals.


The underlying theory is complex and relates to maximum-likelihood estimation, as does the test statistic. The test is known as the Breusch–Godfrey test.


Several asymptotically equivalent versions of the test have been proposed. The most popular involves the computation of the Lagrange multiplier statistic $nR^2$ when the residuals regression is fitted, n being the actual number of observations in the regression.


Test statistic: $nR^2$, distributed as $\chi^2(1)$ when testing for first-order autocorrelation


Asymptotically, under the null hypothesis of no autocorrelation, $nR^2$ is distributed as a chi-squared statistic with one degree of freedom.


A simple t test on the coefficient of $e_{t-1}$ has also been proposed, again with asymptotic validity.


Alternatively, simple t test on the coefficient of $e_{t-1}$


The procedure can be extended to test for higher-order autocorrelation. If AR(q) autocorrelation is suspected, the residuals regression includes q lagged residuals.


$$e_t = \gamma_1 + \sum_{j=2}^{k} \gamma_j X_{jt} + \sum_{s=1}^{q} \rho_s e_{t-s}$$


For the Lagrange multiplier version of the test, the test statistic remains $nR^2$ (with n smaller than before, the inclusion of the additional lagged residuals leading to a further loss of initial observations).


Test statistic: $nR^2$, distributed as $\chi^2(q)$


Under the null hypothesis of no autocorrelation, $nR^2$ has a chi-squared distribution with q degrees of freedom.
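The Lagrange multiplier version can be sketched with NumPy as follows. This is an illustration under stated assumptions, not a reference implementation; statsmodels, for example, offers `acorr_breusch_godfrey`. The function name is hypothetical. `X` is assumed to contain a column of ones for the intercept, plus $Y_{t-1}$ if the original model includes it. The first q observations are dropped, so n = T − q.

```python
import numpy as np


def breusch_godfrey_lm(y, X, q=1):
    """Return (n * R^2, n) for the Breusch-Godfrey test of AR(q) autocorrelation.

    y: shape (T,) dependent variable of the original model.
    X: shape (T, k) regressors of the original model, including a column of ones.
    Under H0 of no autocorrelation, n * R^2 is asymptotically chi-squared(q).
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    T = len(y)
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ b                                   # residuals of the original model
    # Residuals regression: e_t on all original regressors and e_{t-1}, ..., e_{t-q},
    # using only t = q+1, ..., T, where every lag is defined
    lags = np.column_stack([e[q - s:T - s] for s in range(1, q + 1)])
    Z = np.column_stack([X[q:], lags])
    dep = e[q:]
    g, *_ = np.linalg.lstsq(Z, dep, rcond=None)
    resid = dep - Z @ g
    r2 = 1.0 - (resid @ resid) / np.sum((dep - dep.mean()) ** 2)
    n = T - q
    return n * r2, n


# Illustration with artificial data: AR(1) disturbances with rho = 0.8
rng = np.random.default_rng(42)
T = 200
x = rng.normal(size=T)
u = np.zeros(T)
for t in range(1, T):
    u[t] = 0.8 * u[t - 1] + rng.normal()
y = 1.0 + 2.0 * x + u
X = np.column_stack([np.ones(T), x])
stat, n = breusch_godfrey_lm(y, X, q=1)
print(n, round(stat, 2))
```

With these artificial data the statistic should lie far above 10.83, the 0.1 percent critical value of $\chi^2(1)$. With `q=2` it would be compared with critical values of $\chi^2(2)$ instead.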


The t test version becomes an F test comparing RSS for the residuals regression with RSS for the same specification without the residual terms. Again, the test is valid only asymptotically.
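The F version can be sketched with NumPy in the same spirit. It compares the RSS of the residuals regression with the RSS of the same specification without the lagged residuals, both fitted over the same observations. The function names are hypothetical. `X` is assumed to include a column of ones, and the first q observations are dropped. For q = 1, the F statistic equals the square of the t statistic on $e_{t-1}$.

```python
import numpy as np


def rss(A, b):
    """Residual sum of squares from the OLS regression of b on the columns of A."""
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    r = b - A @ coef
    return r @ r


def breusch_godfrey_f(y, X, q=1):
    """Return (F, (q, df)) for H0: rho_1 = ... = rho_q = 0 in the residuals regression.

    X must include a column of ones; the first q observations are dropped.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    T, k = X.shape
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ b                                       # residuals of the original model
    lags = np.column_stack([e[q - s:T - s] for s in range(1, q + 1)])
    dep = e[q:]
    rss_u = rss(np.column_stack([X[q:], lags]), dep)   # with the lagged residuals
    rss_r = rss(X[q:], dep)                             # without the lagged residuals
    df = (T - q) - (k + q)
    F = ((rss_r - rss_u) / q) / (rss_u / df)
    return F, (q, df)


# Illustration with artificial data: two regressors, AR(1) disturbances with rho = 0.6
rng = np.random.default_rng(7)
T = 120
x1, x2 = rng.normal(size=T), rng.normal(size=T)
u = np.zeros(T)
for t in range(1, T):
    u[t] = 0.6 * u[t - 1] + rng.normal()
y = 0.5 + x1 - x2 + u
X = np.column_stack([np.ones(T), x1, x2])
F, dfs = breusch_godfrey_f(y, X, q=2)
print(dfs, round(F, 2))
```

The degrees of freedom are q and n − k − q, where n = T − q is the number of observations used in the residuals regression.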


Alternatively, F test on the lagged residuals:

$$H_0: \rho_1 = \dots = \rho_q = 0, \qquad H_1: \text{not } H_0$$


The Lagrange multiplier version of the test has been shown to be asymptotically valid for the case of MA(q) moving average autocorrelation.


Test statistic: $nR^2$, distributed as $\chi^2(q)$, valid also for MA(q) autocorrelation


Durbin–Watson test

The first major test to be developed and popularised for the detection of autocorrelation was the Durbin–Watson test for AR(1) autocorrelation, based on the Durbin–Watson d statistic calculated from the residuals using the expression shown.

$$d = \frac{\sum_{t=2}^{T} (e_t - e_{t-1})^2}{\sum_{t=1}^{T} e_t^2}$$
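Below is a direct Python transcription of the formula, as a sketch assuming the residuals are supplied in time order. `durbin_watson` is a hypothetical name here, although statsmodels provides a function with the same purpose, `statsmodels.stats.stattools.durbin_watson`. The two toy residual series illustrate the extremes. Perfectly persistent residuals give d = 0, and alternating residuals push d towards 4 (only to 3 here, because the series is very short).

```python
def durbin_watson(e):
    """Durbin-Watson d: sum_{t=2}^T (e_t - e_{t-1})^2 / sum_{t=1}^T e_t^2."""
    num = sum((e[t] - e[t - 1]) ** 2 for t in range(1, len(e)))
    den = sum(x * x for x in e)
    return num / den


print(durbin_watson([1.0, 1.0, 1.0, 1.0]))     # residuals never change: d = 0
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))   # residuals alternate in sign: d = 3
```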


It can be shown that in large samples d tends to $2 - 2\rho$, where $\rho$ is the parameter in the AR(1) relationship $u_t = \rho u_{t-1} + \varepsilon_t$.
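A heuristic sketch of why this holds, rather than a formal proof: expanding the square in the numerator of d gives three sums, and each can be compared with the denominator.

```latex
d = \frac{\sum_{t=2}^{T} e_t^2 + \sum_{t=2}^{T} e_{t-1}^2 - 2\sum_{t=2}^{T} e_t e_{t-1}}{\sum_{t=1}^{T} e_t^2}
  \approx 1 + 1 - 2\,\frac{\sum_{t=2}^{T} e_t e_{t-1}}{\sum_{t=1}^{T} e_t^2}
  \approx 2 - 2\hat{\rho}
```

The first two sums each differ from the denominator by a single squared residual. The remaining ratio differs from the slope of the regression of $e_t$ on $e_{t-1}$ by only one term in its denominator. In large samples it therefore behaves like $\hat{\rho}$, which tends to $\rho$.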


In large samples: $d \to 2 - 2\rho$


If there is no autocorrelation, $\rho$ is 0 and d should be distributed randomly around 2.


If there is severe positive autocorrelation, $\rho$ will be near 1 and d will be near 0.


Likewise, if there is severe negative autocorrelation, $\rho$ will be near −1 and d will be near 4.


In large samples: $d \to 2 - 2\rho$
No autocorrelation: $d \to 2$
Severe positive autocorrelation: $d \to 0$
Severe negative autocorrelation: $d \to 4$


Thus d behaves as illustrated graphically:

[Figure: scale of d from 0 to 4, with positive autocorrelation near 0, no autocorrelation around 2 and negative autocorrelation near 4]

To perform the Durbin–Watson test, we define critical values of d. The null hypothesis is $H_0: \rho = 0$ (no autocorrelation). If d lies between these values, we do not reject the null hypothesis.


The critical values, at any significance level, depend on the number of observations in the sample and the number of explanatory variables.


Unfortunately, they also depend on the actual data for the explanatory variables in the sample, and thus vary from sample to sample.


However, Durbin and Watson determined upper and lower bounds, $d_U$ and $d_L$, for the critical values, and these are presented in standard tables.

[Figure: scale of d from 0 to 4, with the lower and upper bounds $d_L$ and $d_U$ bracketing the critical value of d for positive autocorrelation]


If d is less than $d_L$, it must also be less than the critical value of d for positive autocorrelation, and so we would reject the null hypothesis and conclude that there is positive autocorrelation.


If d is above $d_U$, it must also be above the critical value of d, and so we would not reject the null hypothesis. (Of course, if it were above 2, we should consider testing for negative autocorrelation instead.)


If d lies between $d_L$ and $d_U$, we cannot tell whether it is above or below the critical value, and so the test is indeterminate.


Here are $d_L$ and $d_U$ for 45 observations and two explanatory variables, at the 5% significance level.

$d_L = 1.43$, $d_U = 1.62$ (n = 45, k = 3, 5% level)


There are similar bounds for the critical value in the case of negative autocorrelation. They are not given in the standard tables because negative autocorrelation is uncommon, but it is easy to calculate them because they are located symmetrically to the right of 2, at $4 - d_U$ and $4 - d_L$:

$4 - d_U = 2.38$, $4 - d_L = 2.57$


So if d < 1.43, we reject the null hypothesis and conclude that there is positive autocorrelation.


If 1.43 < d < 1.62, the test is indeterminate and we do not come to any conclusion.


If 1.62 < d < 2.38, we do not reject the null hypothesis of no autocorrelation.


If 2.38 < d < 2.57, we do not come to any conclusion.


If d > 2.57, we conclude that there is significant negative autocorrelation.
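The decision rule can be written as a small Python function. This is a sketch assuming that the bounds for negative autocorrelation are obtained by symmetry as $4 - d_U$ and $4 - d_L$. The name `dw_decision` is hypothetical. Because the text states the rule with strict inequalities, the handling of values that fall exactly on a bound is an arbitrary choice.

```python
def dw_decision(d, d_lower, d_upper):
    """Classify a Durbin-Watson d using the tabulated bounds d_L and d_U.

    Bounds for negative autocorrelation are taken as 4 - d_U and 4 - d_L.
    """
    if d < d_lower:
        return "reject H0: positive autocorrelation"
    if d < d_upper:
        return "indeterminate"
    if d <= 4 - d_upper:
        return "do not reject H0"
    if d <= 4 - d_lower:
        return "indeterminate"
    return "reject H0: negative autocorrelation"


# Bounds for n = 45, k = 3 at the 5% level, as given in the text
for d in (1.2, 1.5, 2.0, 2.45, 2.7):
    print(d, dw_decision(d, 1.43, 1.62))
```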


Here are the bounds for the critical values for the 1% test, again with 45 observations and two explanatory variables.

$d_L = 1.24$, $d_U = 1.42$, $4 - d_U = 2.58$, $4 - d_L = 2.76$ (n = 45, k = 3, 1% level)


The Durbin–Watson test is valid only when all the explanatory variables are deterministic. This is a serious limitation in practice, since interactions and dynamics in a system of equations usually cause Assumption C.7 part (2) to be violated.


In particular, if the lagged dependent variable is used as a regressor, the statistic is biased towards 2 and therefore will tend to under-reject the null hypothesis. It is also restricted to testing for AR(1) autocorrelation.


Despite these shortcomings, it remains a popular test, and some major applications produce the d statistic automatically as part of the standard regression output.


It does have the appeal of the test statistic being part of standard regression output. Further, it is appropriate for finite samples, subject to the zone of indeterminacy and the deterministic regressor requirement.


Durbin's h test

Durbin proposed two tests for the case where the use of the lagged dependent variable as a regressor made the original Durbin–Watson test inapplicable. One was a precursor to the Breusch–Godfrey test.

$$h = \hat{\rho}\sqrt{\frac{n}{1 - n s^2_{b_{Y(-1)}}}}$$


The other is the Durbin h test, appropriate for the detection of AR(1) autocorrelation.


The Durbin h statistic is defined as shown, where $\hat{\rho}$ is an estimate of $\rho$ in the AR(1) process, $s^2_{b_{Y(-1)}}$ is an estimate of the variance of the coefficient of the lagged dependent variable, and n is the number of observations in the regression.


There are various ways in which one might estimate $\rho$, but, since this test is valid only for large samples, it does not matter which is used. The most convenient is to take advantage of the fact that d tends to $2 - 2\rho$ in large samples. The estimator is then $\hat{\rho} = 1 - 0.5d$.


$$d \to 2 - 2\rho, \qquad \hat{\rho} = 1 - 0.5d$$


The estimate of the variance of the coefficient of the lagged dependent variable is obtained by squaring its standard error.


Thus h can be calculated from the usual regression results. In large samples, under the null hypothesis of no autocorrelation, h is distributed as a normal variable with zero mean and unit variance.


An occasional problem with this test is that the h statistic cannot be computed if $n s^2_{b_{Y(-1)}}$ is greater than 1, which can happen if the sample size is not very large.


An even worse problem occurs when $n s^2_{b_{Y(-1)}}$ is near to, but less than, 1. In such a situation the h statistic could be enormous, without there being any problem of autocorrelation.
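Here is a minimal Python sketch of the h statistic, using the convenient estimator $\hat{\rho} = 1 - 0.5d$. The function name and the regression results fed into it are hypothetical. They were chosen only to show a well-behaved case and the blow-up that occurs as $n s^2_{b_{Y(-1)}}$ approaches 1.

```python
import math


def durbin_h(d, se_lagged_y, n):
    """Durbin's h = rho_hat * sqrt(n / (1 - n * s^2)), with rho_hat = 1 - 0.5 * d.

    d: Durbin-Watson statistic; se_lagged_y: standard error of the coefficient
    of the lagged dependent variable (s^2 is its square); n: observations.
    """
    rho_hat = 1.0 - 0.5 * d
    denom = 1.0 - n * se_lagged_y ** 2
    if denom <= 0:
        raise ValueError("n * s^2 >= 1, so h cannot be computed")
    return rho_hat * math.sqrt(n / denom)


# Hypothetical regression results, for illustration only
print(round(durbin_h(d=1.6, se_lagged_y=0.1, n=50), 3))     # n * s^2 = 0.5
print(round(durbin_h(d=1.6, se_lagged_y=0.1413, n=50), 1))  # n * s^2 close to 1: h explodes
```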


The output shown in the table gives the result of a logarithmic regression of expenditure on food on disposable personal income and the relative price of food.

============================================================

Dependent Variable: LGFOOD

Method: Least Squares

Sample: 1959 2003

Included observations: 45

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 2.236158 0.388193 5.760428 0.0000

LGDPI 0.500184 0.008793 56.88557 0.0000

LGPRFOOD -0.074681 0.072864 -1.024941 0.3113

============================================================

R-squared 0.992009 Mean dependent var 6.021331

Adjusted R-squared 0.991628 S.D. dependent var 0.222787

S.E. of regression 0.020384 Akaike info criter-4.883747

Sum squared resid 0.017452 Schwarz criterion -4.763303

Log likelihood 112.8843 Hannan-Quinn crite-4.838846

F-statistic 2606.860 Durbin-Watson stat 0.478540

Prob(F-statistic) 0.000000

============================================================


The plot of the residuals is shown. All the tests indicate highly significant autocorrelation.

[Figure: Residuals, static logarithmic regression for FOOD, 1959–2003]


============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1960 2003

Included observations: 44 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

ELGFOOD(-1) 0.790169 0.106603 7.412228 0.0000

============================================================

R-squared 0.560960 Mean dependent var 3.28E-05

Adjusted R-squared 0.560960 S.D. dependent var 0.020145

S.E. of regression 0.013348 Akaike info criter-5.772439

Sum squared resid 0.007661 Schwarz criterion -5.731889

Log likelihood 127.9936 Durbin-Watson stat 1.477337

============================================================


ELGFOOD in the regression above is the residual from the LGFOOD regression. A simple regression of ELGFOOD on ELGFOOD(-1) yields a coefficient of 0.79 with standard error 0.11.

$$\hat{e}_t = 0.79\,e_{t-1}$$


Technical note for EViews users: EViews places the residuals from the most recent regression in a pseudo-variable called resid. resid cannot be used directly, so the residuals were saved as ELGFOOD using the genr command:

genr ELGFOOD = resid


When an intercept, LGDPI and LGPRFOOD are added to the specification, the coefficient of the lagged residuals becomes 0.81 with standard error 0.11. $R^2$ is 0.5720, so $nR^2$ is 25.17.

============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1960 2003

Included observations: 44 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.175732 0.265081 0.662936 0.5112

LGDPI -7.36E-05 0.006180 -0.011917 0.9906

LGPRFOOD -0.037373 0.049496 -0.755058 0.4546

ELGFOOD(-1) 0.805744 0.110202 7.311504 0.0000

============================================================

R-squared 0.572006 Mean dependent var 3.28E-05

Adjusted R-squared 0.539907 S.D. dependent var 0.020145

S.E. of regression 0.013664 Akaike info criter-5.661558

Sum squared resid 0.007468 Schwarz criterion -5.499359

Log likelihood 128.5543 F-statistic 17.81977

Durbin-Watson stat 1.513911 Prob(F-statistic) 0.000000

============================================================

$$\hat{e}_t = \ldots + 0.81\,e_{t-1}$$

$$R^2 = 0.5720, \qquad nR^2 = 44 \times 0.5720 = 25.17, \qquad \chi^2_{0.1\%}(1) = 10.83$$


(Note that here n = 44. There are 45 observations in the regression in Table 12.1, and one fewer in the residuals regression.) The critical value of chi-squared with one degree of freedom at the 0.1 percent level is 10.83.
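The arithmetic of this example can be checked with the Python standard library. The one step not in the text is obtaining the $\chi^2(1)$ critical value without tables, using the fact that a $\chi^2(1)$ variable is the square of a standard normal variable. The sketch also compares the Durbin–Watson statistic of 0.478540 from the original LGFOOD regression with the 1% bound $d_L = 1.24$ given earlier for the same configuration (45 observations, an intercept plus two explanatory variables).

```python
from statistics import NormalDist

# Figures from the residuals regression with intercept, LGDPI and LGPRFOOD
n = 44
r_squared = 0.572006
lm = n * r_squared                      # Breusch-Godfrey LM statistic nR^2

# A chi-squared(1) variable is the square of a standard normal variable, so its
# upper 0.1% point is the square of the standard normal's upper 0.05% point
crit = NormalDist().inv_cdf(1 - 0.001 / 2) ** 2

print(round(lm, 2), round(crit, 2), lm > crit)

# Durbin-Watson d from the LGFOOD regression against d_L at the 1% level (n = 45, k = 3)
dw, d_lower_1pct = 0.478540, 1.24
print(dw < d_lower_1pct)
```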


Technical note for EViews users: one can perform the test simply by following the LGFOOD regression with the command auto(1). EViews allows itself to use resid directly.

============================================================

Breusch-Godfrey Serial Correlation LM Test:

============================================================

F-statistic 54.78773 Probability 0.000000

Obs*R-squared 25.73866 Probability 0.000000

============================================================

Test Equation:

Dependent Variable: RESID

Method: Least Squares

Presample missing value lagged residuals set to zero.

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.171665 0.258094 0.665124 0.5097

LGDPI 9.50E-05 0.005822 0.016324 0.9871

LGPRFOOD -0.036806 0.048504 -0.758819 0.4523

RESID(-1) 0.805773 0.108861 7.401873 0.0000

============================================================

R-squared 0.571970 Mean dependent var-1.85E-18

Adjusted R-squared 0.540651 S.D. dependent var 0.019916

S.E. of regression 0.013498 Akaike info criter-5.687865

Sum squared resid 0.007470 Schwarz criterion -5.527273

Log likelihood 131.9770 F-statistic 18.26258

Durbin-Watson stat 1.514975 Prob(F-statistic) 0.000000

============================================================


The argument in the auto command relates to the order of autocorrelation being tested. At the moment we are concerned only with first-order autocorrelation. This is why the command is auto(1).


When we performed the test, resid(-1), and hence ELGFOOD(-1), were not defined for the first observation in the sample, so we had 44 observations from 1960 to 2003.


EViews uses the first observation by assigning a value of zero to resid(-1) for that observation. Hence the test results are very slightly different.


============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1960 2003

Included observations: 44 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.175732 0.265081 0.662936 0.5112

LGDPI -7.36E-05 0.006180 -0.011917 0.9906

LGPRFOOD -0.037373 0.049496 -0.755058 0.4546

ELGFOOD(-1) 0.805744 0.110202 7.311504 0.0000

============================================================

R-squared 0.572006 Mean dependent var 3.28E-05

Adjusted R-squared 0.539907 S.D. dependent var 0.020145

S.E. of regression 0.013664 Akaike info criterion -5.661558

Sum squared resid 0.007468 Schwarz criterion -5.499359

Log likelihood 128.5543 F-statistic 17.81977

Durbin-Watson stat 1.513911 Prob(F-statistic) 0.000000

============================================================


We can also perform the test with a t test on the coefficient of the lagged variable.


The corresponding output using the auto command built into EViews is the Breusch-Godfrey LM test output shown above. The test is presented as an F statistic. Of course, when there is only one lagged residual, the F statistic is the square of the t statistic.
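As a quick arithmetic check (a sketch, not EViews code), both statistics in the auto(1) output can be reproduced from the test equation: squaring the t statistic on RESID(-1) gives the F statistic, and 45 observations times R-squared gives Obs*R-squared. The helper names are hypothetical.

```python
def f_from_t(t_stat):
    """With one restriction (a single lagged residual), F equals t squared."""
    return t_stat ** 2


def lm_from_r2(n_obs, r_squared):
    """Breusch-Godfrey LM statistic: observations in the residual regression times its R^2."""
    return n_obs * r_squared


# Figures from the auto(1) output: t = 7.401873 on RESID(-1),
# 45 observations (presample residual set to zero), R^2 = 0.571970
print(f"F = {f_from_t(7.401873):.5f}")
print(f"Obs*R-squared = {lm_from_r2(45, 0.571970):.5f}")
```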


The Durbin-Watson statistic is 0.48. $d_L$ is 1.24 for a 1 percent significance test (2 explanatory variables, 45 observations), so the null hypothesis of no autocorrelation is rejected.
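A minimal Python sketch of the Durbin-Watson calculation and the bounds decision rule for positive autocorrelation. The residual series is hypothetical, and since the text gives only $d_L$, the upper bound $d_U$ would have to be read from the same Durbin-Watson table; the function names are illustrative assumptions.

```python
def durbin_watson(residuals):
    """d = sum_{t=2}^{n} (e_t - e_{t-1})^2 / sum_{t=1}^{n} e_t^2."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


def dw_positive_test(d, d_lower, d_upper):
    """Decision for H0: no autocorrelation against H1: positive autocorrelation."""
    if d < d_lower:
        return "reject H0"
    if d > d_upper:
        return "do not reject H0"
    return "inconclusive"


# Hypothetical, smoothly trending residuals, so d comes out far below 2
toy = [0.02, 0.018, 0.015, 0.01, 0.004, -0.003, -0.009, -0.014, -0.017, -0.019]
print(round(durbin_watson(toy), 3))
```

With $d = 0.48$ below $d_L = 1.24$, the value of $d_U$ does not affect the conclusion here.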

============================================================

Dependent Variable: LGFOOD

Method: Least Squares

Sample: 1959 2003

Included observations: 45

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 2.236158 0.388193 5.760428 0.0000

LGDPI 0.500184 0.008793 56.88557 0.0000

LGPRFOOD -0.074681 0.072864 -1.024941 0.3113

============================================================

R-squared 0.992009 Mean dependent var 6.021331

Adjusted R-squared 0.991628 S.D. dependent var 0.222787

S.E. of regression 0.020384 Akaike info criterion -4.883747

Sum squared resid 0.017452 Schwarz criterion -4.763303

Log likelihood 112.8843 Hannan-Quinn criter. -4.838846

F-statistic 2606.860 Durbin-Watson stat 0.478540

Prob(F-statistic) 0.000000

============================================================


$d_L = 1.24$ (1% level, 2 explanatory variables, 45 observations)


The Breusch-Godfrey test for higher-order autocorrelation is a straightforward extension of the first-order test. If we are testing for order q, we add q lagged residuals to the right side of the residuals regression. We will perform the test for second-order autocorrelation.


$u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \varepsilon_t$
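The mechanics of the order-q test can be sketched in plain Python, assuming a small hand-rolled OLS routine (normal equations solved by Gauss-Jordan elimination, adequate for illustration but not a numerically robust library routine). Following the manual approach in the text, the first q observations are dropped; EViews' auto(q) instead sets the presample residuals to zero, so its results differ slightly. The data generator `simulate` and its parameters are hypothetical, not the FOOD data.

```python
import random


def ols(y, X):
    """OLS via the normal equations (X'X) b = X'y, solved by Gauss-Jordan elimination.

    X is a list of rows; include 1.0 in each row for the intercept.
    Returns (coefficients, residuals).
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        # Partial pivoting: bring the largest remaining entry in this column to the diagonal
        p = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[p] = A[p], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    b = [A[i][k] / A[i][i] for i in range(k)]
    resid = [yi - sum(bj * xj for bj, xj in zip(b, r)) for r, yi in zip(X, y)]
    return b, resid


def centered_r2(y, resid):
    """Conventional (centered) R^2 of a regression with an intercept."""
    ybar = sum(y) / len(y)
    tss = sum((yi - ybar) ** 2 for yi in y)
    return 1.0 - sum(e * e for e in resid) / tss


def breusch_godfrey(y, X, q):
    """Breusch-Godfrey LM statistic n * R^2 for autocorrelation up to order q.

    The first q observations are dropped because their lagged residuals are
    undefined. Returns (lm_statistic, observations_used); compare with chi-squared(q).
    """
    _, e = ols(y, X)
    # Residual regression: e_t on the original regressors and e_{t-1}, ..., e_{t-q}
    rows = [X[t] + [e[t - j] for j in range(1, q + 1)] for t in range(q, len(e))]
    dep = e[q:]
    _, u = ols(dep, rows)
    return len(dep) * centered_r2(dep, u), len(dep)


def simulate(n, rho, seed):
    """Hypothetical data: y = 1 + 0.5 x + u, with AR(1) disturbances u_t = rho u_{t-1} + eps_t."""
    rng = random.Random(seed)
    u, y, X = 0.0, [], []
    for _ in range(n):
        x = rng.uniform(0.0, 10.0)
        u = rho * u + rng.gauss(0.0, 1.0)
        X.append([1.0, x])
        y.append(1.0 + 0.5 * x + u)
    return y, X


if __name__ == "__main__":
    y, X = simulate(45, 0.8, seed=1)
    lm, n_used = breusch_godfrey(y, X, q=2)
    print(f"n = {n_used}, nR^2 = {lm:.2f}, chi-squared(2) 0.1% critical value = 13.82")
```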


Here is the regression for ELGFOOD with two lagged residuals. The Breusch-Godfrey test statistic is 25.89. With two lagged residuals, the test statistic has a chi-squared distribution with two degrees of freedom under the null hypothesis. It is significant at the 0.1 percent level.

============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1961 2003

Included observations: 43 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.071220 0.277253 0.256879 0.7987

LGDPI 0.000251 0.006491 0.038704 0.9693

LGPRFOOD -0.015572 0.051617 -0.301695 0.7645

ELGFOOD(-1) 1.009693 0.163240 6.185318 0.0000

ELGFOOD(-2) -0.289159 0.171960 -1.681548 0.1009

============================================================

R-squared 0.602010 Mean dependent var 0.000149

Adjusted R-squared 0.560117 S.D. dependent var 0.020368

S.E. of regression 0.013509 Akaike info criterion -5.661981

Sum squared resid 0.006935 Schwarz criterion -5.457191

Log likelihood 126.7326 F-statistic 14.36996

Durbin-Watson stat 1.892212 Prob(F-statistic) 0.000000

============================================================


$nR^2 = 43 \times 0.6020 = 25.89 \qquad \chi^2_{0.1\%}(2) = 13.82$
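The chi-squared comparison can also be turned into a p-value using only the standard library. This sketch assumes an integer number of degrees of freedom and uses the textbook recurrence for the chi-squared upper tail; `chi2_sf` is a hypothetical helper, not a library function.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-squared variable with integer df.

    Uses Q(nu + 2) = Q(nu) + (x/2)^(nu/2) e^(-x/2) / Gamma(nu/2 + 1),
    starting from Q(1) = erfc(sqrt(x/2)) or Q(2) = exp(-x/2).
    """
    nu = 2 if df % 2 == 0 else 1
    q = math.exp(-x / 2) if nu == 2 else math.erfc(math.sqrt(x / 2))
    while nu < df:
        q += (x / 2) ** (nu / 2) * math.exp(-x / 2) / math.gamma(nu / 2 + 1)
        nu += 2
    return q


# Second-order test from the text: n = 43, R^2 = 0.602010, two lagged residuals
lm = 43 * 0.602010
print(f"nR^2 = {lm:.2f}, p-value = {chi2_sf(lm, 2):.2e}")
```

With SciPy installed, `scipy.stats.chi2.sf(x, df)` gives the same tail probabilities.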


We will also perform an F test, comparing the RSS with the RSS for the same regression

without the lagged residuals. We know the result, because one of the t statistics is very

high.


Here is the regression for ELGFOOD without the lagged residuals. Note that the sample

period has been adjusted to 1961 to 2003, to make RSS comparable with that for the

previous regression.

============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample: 1961 2003

Included observations: 43

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.027475 0.412043 0.066680 0.9472

LGDPI -0.001074 0.009986 -0.107528 0.9149

LGPRFOOD -0.003948 0.076191 -0.051816 0.9589

============================================================

R-squared 0.000298 Mean dependent var 0.000149

Adjusted R-squared -0.049687 S.D. dependent var 0.020368

S.E. of regression 0.020868 Akaike info criterion -4.833974

Sum squared resid 0.017419 Schwarz criterion -4.711100

Log likelihood 106.9304 F-statistic 0.005965

Durbin-Watson stat 0.476550 Prob(F-statistic) 0.994053

============================================================


The F statistic is 28.72. This is significant at the 0.1 percent level: the critical value of F(2,35) at that level is 8.47, and that for F(2,38) must be slightly lower.


$F(2,38) = \dfrac{(0.017419 - 0.006935)/2}{0.006935/38} = 28.72 \qquad F_{\text{crit},0.1\%}(2,35) = 8.47$
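The F statistic can be reproduced from the two residual sums of squares, with q = 2 restrictions (the two lagged residuals) and 43 - 5 = 38 degrees of freedom in the unrestricted regression. When the numerator has 2 degrees of freedom the F upper tail has a closed form, which this sketch uses to confirm that 8.47 is, to rounding, the 0.1 percent critical value of F(2,35). The helper names are assumptions.

```python
def f_stat_from_rss(rss_restricted, rss_unrestricted, q, df_unrestricted):
    """F = ((RSS_R - RSS_U) / q) / (RSS_U / df_U)."""
    return ((rss_restricted - rss_unrestricted) / q) / (rss_unrestricted / df_unrestricted)


def f2_sf(f, df2):
    """P(F > f) for F(2, df2); this closed form holds only for numerator df = 2."""
    return (df2 / (df2 + 2.0 * f)) ** (df2 / 2.0)


F = f_stat_from_rss(0.017419, 0.006935, q=2, df_unrestricted=38)
print(f"F(2,38) = {F:.2f}, p-value = {f2_sf(F, 38):.1e}")
print(f"P(F(2,35) > 8.47) = {f2_sf(8.47, 35):.4f}")
```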


Here is the output using the auto(2) command in EViews. The conclusions for the two

tests are the same.

============================================================

Breusch-Godfrey Serial Correlation LM Test:

============================================================

F-statistic 30.24142 Probability 0.000000

Obs*R-squared 27.08649 Probability 0.000001

============================================================

Test Equation:

Dependent Variable: RESID

Method: Least Squares

Presample missing value lagged residuals set to zero.

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.053628 0.261016 0.205460 0.8383

LGDPI 0.000920 0.005705 0.161312 0.8727

LGPRFOOD -0.013011 0.049304 -0.263900 0.7932

RESID(-1) 1.011261 0.159144 6.354360 0.0000

RESID(-2) -0.290831 0.167642 -1.734833 0.0905

============================================================

R-squared 0.601922 Mean dependent var -1.85E-18

Adjusted R-squared 0.562114 S.D. dependent var 0.019916

S.E. of regression 0.013179 Akaike info criterion -5.715965

Sum squared resid 0.006947 Schwarz criterion -5.515225

Log likelihood 133.6092 F-statistic 15.12071

Durbin-Watson stat 1.894290 Prob(F-statistic) 0.000000

============================================================


The table below gives the result of a parallel logarithmic regression with the addition of

lagged expenditure on food as an explanatory variable. Again, there is strong evidence that

the specification is subject to autocorrelation.

============================================================

Dependent Variable: LGFOOD

Method: Least Squares

Sample (adjusted): 1960 2003

Included observations: 44 after adjustments

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.985780 0.336094 2.933054 0.0055

LGDPI 0.126657 0.056496 2.241872 0.0306

LGPRFOOD -0.088073 0.051897 -1.697061 0.0975

LGFOOD(-1) 0.732923 0.110178 6.652153 0.0000

============================================================

R-squared 0.995879 Mean dependent var 6.030691

Adjusted R-squared 0.995570 S.D. dependent var 0.216227

S.E. of regression 0.014392 Akaike info criterion -5.557847

Sum squared resid 0.008285 Schwarz criterion -5.395648

Log likelihood 126.2726 Hannan-Quinn criter. -5.497696

F-statistic 3222.264 Durbin-Watson stat 1.112437

Prob(F-statistic) 0.000000

============================================================


Here is a plot of the residuals.

[Figure: Residuals, ADL(1,0) logarithmic regression for FOOD; years 1959 to 2003 on the horizontal axis, residuals from -0.03 to 0.04 on the vertical axis]


A simple regression of the residuals on the lagged residuals yields a coefficient of 0.43 with

standard error 0.14. We expect the estimate to be adversely affected by the presence of the

lagged dependent variable in the regression for LGFOOD.
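The simple autoregression of the residuals is a regression through the origin, so its coefficient and standard error have closed forms. A sketch, using a hypothetical residual series rather than the ELGFOOD data; the function name is illustrative.

```python
import math


def residual_autoregression(e):
    """Regress e_t on e_{t-1} with no intercept; return (coefficient, standard error)."""
    lagged, current = e[:-1], e[1:]
    sxx = sum(x * x for x in lagged)
    rho = sum(x * y for x, y in zip(lagged, current)) / sxx
    rss = sum((y - rho * x) ** 2 for x, y in zip(lagged, current))
    s2 = rss / (len(current) - 1)  # one estimated parameter
    return rho, math.sqrt(s2 / sxx)


# Hypothetical residuals (not the FOOD data)
rho, se = residual_autoregression([0.01, 0.012, 0.006, -0.002, -0.008, -0.004, 0.003, 0.007])
print(f"rho = {rho:.3f}, se = {se:.3f}")
```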

============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1961 2003

Included observations: 43 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

ELGFOOD(-1) 0.431010 0.143277 3.008226 0.0044

============================================================

R-squared 0.176937 Mean dependent var 0.000276

Adjusted R-squared 0.176937 S.D. dependent var 0.013922

S.E. of regression 0.012630 Akaike info criterion -5.882426

Sum squared resid 0.006700 Schwarz criterion -5.841468

Log likelihood 127.4722 Durbin-Watson stat 1.801390

============================================================


$\hat{e}_t = 0.43\, e_{t-1}$


With an intercept, LGDPI, LGPRFOOD, and LGFOOD(-1) added to the specification, the coefficient of the lagged residuals becomes 0.60 with standard error 0.17. $R^2$ is 0.2469, so $nR^2$ is 10.62, not quite significant at the 0.1 percent level. (Note that here n = 43.)

============================================================

Dependent Variable: ELGFOOD

Method: Least Squares

Sample(adjusted): 1961 2003

Included observations: 43 after adjusting endpoints

============================================================

Variable Coefficient Std. Error t-Statistic Prob.

============================================================

C 0.417342 0.317973 1.312507 0.1972

LGDPI 0.108353 0.059784 1.812418 0.0778

LGPRFOOD -0.005585 0.046434 -0.120279 0.9049

LGFOOD(-1) -0.214252 0.116145 -1.844700 0.0729

ELGFOOD(-1) 0.604346 0.172040 3.512826 0.0012

============================================================

R-squared 0.246863 Mean dependent var 0.000276

Adjusted R-squared 0.167586 S.D. dependent var 0.013922

S.E. of regression 0.012702 Akaike info criterion -5.785165

Sum squared resid 0.006131 Schwarz criterion -5.580375

Log likelihood 129.3811 F-statistic 3.113911

Durbin-Watson stat 1.867467 Prob(F-statistic) 0.026046

============================================================


$nR^2 = 43 \times 0.2469 = 10.62 \qquad \chi^2_{0.1\%}(1) = 10.83$


The Durbin-Watson statistic is 1.11. From this one obtains an estimate of $\rho$ as $1 - 0.5d = 0.445$. The standard error of the coefficient of the lagged dependent variable is 0.1102. Hence the h statistic is as shown.


$h = \hat{\rho}\sqrt{\dfrac{n}{1 - n s^2_{b_{Y(-1)}}}} = 0.445\sqrt{\dfrac{44}{1 - 44 \times 0.1102^2}} = 4.33$
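Durbin's h can be computed directly from the three numbers used in the text. This sketch assumes $\hat{\rho}$ is taken from the Durbin-Watson statistic as $1 - 0.5d$, and it refuses to compute h when n times the squared standard error is 1 or more, since the square root is then undefined. The function names are illustrative.

```python
import math


def rho_from_dw(d):
    """Approximate first-order autocorrelation implied by the Durbin-Watson statistic."""
    return 1.0 - 0.5 * d


def durbin_h(rho_hat, n, se_lagged_dep):
    """h = rho_hat * sqrt(n / (1 - n * s^2)), where s is the standard error
    of the coefficient of the lagged dependent variable."""
    denom = 1.0 - n * se_lagged_dep ** 2
    if denom <= 0:
        raise ValueError("h is undefined when n * s^2 >= 1")
    return rho_hat * math.sqrt(n / denom)


rho = rho_from_dw(1.11)
h = durbin_h(rho, 44, 0.1102)
# Two-sided 0.1 percent critical value of the standard normal distribution: 3.29
print(f"rho = {rho:.3f}, h = {h:.2f}, exceeds 3.29: {abs(h) > 3.29}")
```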


Under the null hypothesis of no autocorrelation, the h statistic asymptotically has a

standardized normal distribution, so this value is above the critical value at the 0.1 percent

level, 3.29.


Copyright Christopher Dougherty 2011.

These slideshows may be downloaded by anyone, anywhere for personal use.

Subject to respect for copyright and, where appropriate, attribution, they may be

used as a resource for teaching an econometrics course. There is no need to

refer to the author.

The content of this slideshow comes from Section 12.2 of C. Dougherty,

Introduction to Econometrics, fourth edition 2011, Oxford University Press.

Additional (free) resources for both students and instructors may be

downloaded from the OUP Online Resource Centre

http://www.oup.com/uk/orc/bin/9780199567089/.

Individuals studying econometrics on their own and who feel that they might

benefit from participation in a formal course should consider the London School

of Economics summer school course

EC212 Introduction to Econometrics

http://www2.lse.ac.uk/study/summerSchools/summerSchool/Home.aspx

or the University of London International Programmes distance learning course

20 Elements of Econometrics

www.londoninternational.ac.uk/lse.

2012.02.23
