DynamicModelling_Tricks&Tips_July2010.doc

Dynamic Modelling Tricks and Tips

Anthony Peacock, ODC, RE Discipline Principal

July 2010

Summary

This document is a compilation of useful tricks and tips for running DYNAMO (MoReS). Although designed for the SPDC operating environment, the material should be generally applicable to any Shell operating unit with only minor modifications. The aim is to provide a consistent framework for the practising Reservoir Engineer performing dynamic modelling. The note gives practical guidance on how to set up a computing environment on both Windows and Unix platforms and on how to run DYNAMO on both. It also sets out recommendations for dynamic model naming convention, storage and archive, and describes how to construct production and pressure data tables from OFM and the BHP Database.

This note should be of general interest to all Reservoir Engineers in SPDC, but primarily to those engaged in dynamic modelling in the ODC.

This document will reside on the SPDC RE webpage to be easily accessible by everyone. It is intended that this document will be updated as other useful tricks and tips become available.

Contents

1 Computing Environment
    1.1 Getting Started with DYNAMO in Linux
    1.2 Use of Environment variables (Windows and Unix)
    1.3 Useful Unix commands
2 Running Dynamo
    2.1 Running MoReS in batch on a PC
    2.2 Running Dynamic Models on Linux Cluster in parallel
3 Naming Convention, Storage and Archive of Dynamic models
4 Generating Historical Data for MoReS from OFM
    4.1 Production Data
    4.2 Required edits to Production Data files
    4.3 Down-hole Pressure Data from OFM: Datum Pressure, FBHP, SBHP
    4.4 Surface Pressure Data from OFM: THP
5 Generating Pressure Data Tables from BHP Database

Attachments

The files described in this report are included in the attached ZIP files.

A) Files for computing (MoReS_Files.zip)

1. MoReSBatchonPC.bat       File for running MoReS in batch on a PC
2. execute.exe              File for running MoReS in batch on a PC
3. .setenv                  File containing an example of how to set up environment variables

B) Files for extracting data from OFM (MoReS_data_OFM.zip)

1. Production DataT3.rpt    OFM report file for production data
2. PressureData.rpt         OFM report file for Datum Pressure, FBHP, SBHP
3. PressureDataTHP.rpt      OFM report file for THP pressure data
4. Header format.txt        Example header file for MoReS table definition

C) Example file for generating pressure data for MoReS from the BHP Database (NEMC_PRESSURE.zip)

References

1. DYNAMO Reference Manuals, http://sww-geo.shell.com/pro/dynamo/doc/html/

1 Computing Environment

1.1 Getting Started with DYNAMO in Linux

The following is a quick guide to running and managing DYNAMO processes in Linux. A full description can be found in the DYNAMO User Manuals.

DYNAMO command line options

Start an interactive DYNAMO session (current default installed version)

    DYNAMO

Start an interactive DYNAMO session in a specific version

    DYNAMO -v2009.1

Start an interactive DYNAMO session and immediately load an existing runfile

    DYNAMO -r RunFile.run

List the currently installed and available versions

    DYNAMO --list

Start a process in batch from an input file and send output to an output file and an error file

    DYNAMO < InputFile.inp > OutputFile.out 2> OutputFile.err &

    DYNAMO -i InputFile.inp -o OutputFile.out -e OutputFile.err &

A full list of options is displayed by typing

    DYNAMO --help

Start Parallel MoReS and specify the number of processes to use

    DYNAMO -paripc 2 -i InputFile.inp -o OutputFile.out -e OutputFile.err &
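When many batch runs have to be launched, it can help to build the command line programmatically. The following is a minimal Python sketch, not part of the original note. It uses only the -i, -o and -paripc options listed above and assumes that the error-file option is -e. The function name dynamo_batch_args is hypothetical.

```python
from typing import List, Optional


def dynamo_batch_args(input_file: str, output_file: str, error_file: str,
                      processes: Optional[int] = None) -> List[str]:
    """Build the argument list for a DYNAMO batch run.

    Hypothetical helper: the option names follow the command lines quoted
    in this section (-i, -o, -paripc); -e is assumed for the error file.
    """
    args = ["DYNAMO"]
    if processes is not None:
        # -paripc <n> starts Parallel MoReS with n processes
        args += ["-paripc", str(processes)]
    args += ["-i", input_file, "-o", output_file, "-e", error_file]
    return args


if __name__ == "__main__":
    # Print the command line instead of running it, so the sketch is safe
    # to try on a machine where DYNAMO is not installed.
    print(" ".join(dynamo_batch_args("InputFile.inp", "OutputFile.out",
                                     "OutputFile.err", processes=2)))
```

The list can be passed to subprocess.Popen to start the run in the background on a machine where DYNAMO is on the path.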

___________________________________________________________________

Managing DYNAMO processes

To monitor the progress of a DYNAMO process running in the background

    bjobs -w (or bjobs -l)

    options: -w creates a short listing of the processes
             -l creates a long listing of the processes

To stop a DYNAMO process running in the background (JOBID# is the job ID reported by the bjobs command)

    bkill JOBID#

To stop all DYNAMO processes running in the background

    bkill 0

1.2 Use of Environment variables (Windows and Unix)

Environment variables are very useful and can be used for:

- Navigating the Unix directory structure more easily

- Transferring and running the same input file between Windows and Unix

Windows

On Windows, environment variables are defined from the System Properties menu. Right-click the My Computer icon on the Desktop and select Properties > Advanced > Environment Variables, then click New to create a new variable.

In the example shown below, the environment variable is used to define a shortcut to a specific directory on the C:\ drive of the PC.

Vista

On Vista, environment variables are defined from the Control Panel > User Accounts page. The option Change my environment variables is found on the left-hand side of the panel.

Unix

Environment variables can be defined in Unix by creating an executable file in the user's home directory that lists the required variables, and then running the file as follows:

1. Open an interactive terminal window on Linux

2. Go to T-Shell

    tcsh

3. Run the executable file

    source .setenv

An example .setenv file is included in the attached MoReS_Files.ZIP file. Note that the user needs to be in the T-Shell for the setenv keyword to be recognised; the K-Shell uses the export keyword instead. The example also shows the use of aliases, which are useful Unix shortcuts.

#
# Script to define useful aliases and environment variables in Linux
#
echo "\
-------------------------------------------------------------------------------\
Set Environment Variables and Aliases\
\
-------------------------------------------------------------------------------\
"
# Set useful aliases
alias dy91 'DYNAMO -v2009.1'
# Set useful environment variables
setenv EPU "/glb/af/spdc/data/mid_project/GBAR_PH2_0108/Dynamo/Work/EPU"

How to define an environment variable in a Windows operating system.
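Because the T-Shell uses setenv and the K-Shell uses export, the same set of variables has to be written differently for each shell. The Python sketch below is an illustration only and is not one of the attached files. It generates the definition lines for either shell from a single dictionary. The function name shell_definitions is hypothetical; the EPU variable and its path are taken from the example .setenv file above.

```python
from typing import Dict, List


def shell_definitions(variables: Dict[str, str], shell: str = "tcsh") -> List[str]:
    """Return one variable definition line per entry for the chosen shell.

    Hypothetical helper: 'tcsh' produces setenv lines, 'ksh' produces
    export lines, matching the keywords named in this section.
    """
    lines = []
    for name, value in variables.items():
        if shell == "tcsh":
            lines.append(f'setenv {name} "{value}"')
        elif shell == "ksh":
            lines.append(f'export {name}="{value}"')
        else:
            raise ValueError(f"unsupported shell: {shell}")
    return lines


if __name__ == "__main__":
    example = {"EPU": "/glb/af/spdc/data/mid_project/GBAR_PH2_0108/Dynamo/Work/EPU"}
    for shell in ("tcsh", "ksh"):
        print("\n".join(shell_definitions(example, shell)))
```

Keeping the variables in one place and generating both forms avoids the two definition files drifting apart.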

1.3 Useful Unix commands

The following is a list of basic Unix commands helpful to anyone using a Unix/Linux computing environment. The list is far from complete and contains only the commands most useful for running DYNAMO.

ls {-al}                    List a directory {all files (a), in long format (l)}
cp <file1> <file2>          Copy a file
mv <file1> <file2>          Rename a file
cd <dir>                    Change to another directory; if <dir> is omitted, change to the user's home directory
rm {-r} <file/dir>          Remove a file or directory {recursively, i.e. including sub-directories}
pwd                         Show the present directory
mkdir {-p} <new_dir>        Create a new directory {including the entire path when not present}
who                         Show all users presently logged on to the system
whoami                      Show the username of the present user
finger <uid>                Display information about the user <uid>
id                          Show the id (uid & gid) of the present user
hostname                    Show the name of the current machine
cat <file>                  Print the contents of a file to standard output (normally the screen)
man <command>               Show the manual pages for <command>
man -k <keyword>            Show which manual pages contain the specified <keyword>
setenv                      Create an environment variable (in T-Shell)
echo                        Display a line of text (e.g. echo $env displays the contents of the environment variable env)
vi                          Text editor in Unix (view is the read-only version of this editor)
grep {-i} <string> <file1>  Find a certain <string> in <file1> {ignoring upper/lowercase}
more                        Display output screen by screen
ps {-ef / -u <user>}        Show the processes running at that moment {all, or those of a specific <user>}
|                           Pipe; redirects the output of one command to the input of another [e.g. ps -ef | grep <user> shows all processes of <user>]
chmod {ugorwx} <file>       Change permissions on <file> (or directory) using various options (specifying user, group, others; adding (+) or removing (-) permissions rwx). chmod -R 777 * will give rwx permissions on all files to all users.
chown <uid> <file>          Change the owner of <file> to user-id <uid>
chgrp <gid> <file>          Change the group of <file> to group-id <gid>
file <file>                 Determine the file type of <file> (i.e. ascii, executable, tar-archive, etc.)
which <command/file>        Display the entire path to the command or file intended
passwd {user}               Change password {of user}
df {-k, dir}                Show free/used space {in kB, on the file system involved with the specified dir}
date                        Show the system time and date
uname {-a}                  Show (more) information about the operating system
ssh -X username@phc-n-s01taw.africa-me.shell.com
                            Log on to the system as a specific user
bjobs {-w,-l}               Return a list of active jobs on the system; -l for a long listing

2 Running Dynamo

2.1 Running MoReS in batch on a PC

It can be useful to run DYNAMO jobs in batch on a PC. To do this, the following files included in the attached ZIP file are needed:

1. execute.exe

2. A *.bat file which runs one or more DYNAMO jobs by calling the DYNAMO executable with appropriate options

Examples can be found in the following directory and are described below.

/glb/af/spdc/data/mid_project/Templates/MoReSBatchonPC

The .bat file contains commands to run DYNAMO on a PC using the dynamo.exe installed in a specific directory. Environment variables can be used within the DYNAMO input decks in the same way as in Unix, so setting a common variable on both Windows and Unix allows the same input deck to be used on each system. The .bat file can contain multiple commands to run several jobs one after another (e.g. overnight). Note that environment variables in DOS are recognised by having % signs before and after the keyword, whereas Unix uses a $ sign before the keyword only.
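The difference between the two notations matters when a command line is copied between a .bat file and a Unix script. The Python sketch below is an illustration that is not part of the attached files. It rewrites %NAME% references as $NAME and back. The function names are hypothetical, and the sketch deliberately ignores path separators and quoting, which also differ between the two systems.

```python
import re

# A variable name: a letter or underscore followed by letters, digits or underscores.
_NAME = r"[A-Za-z_][A-Za-z0-9_]*"


def windows_to_unix(text: str) -> str:
    """Rewrite DOS-style %NAME% references as Unix-style $NAME."""
    return re.sub(rf"%({_NAME})%", r"$\1", text)


def unix_to_windows(text: str) -> str:
    """Rewrite Unix-style $NAME references as DOS-style %NAME%."""
    return re.sub(rf"\$({_NAME})", r"%\1%", text)


if __name__ == "__main__":
    print(windows_to_unix("cd %EPU%"))
    print(unix_to_windows("cd $EPU"))
```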

To run the file, simply double-click the MoReSBatchonPC.bat file in the Windows directory.

Figure: Double-click the .bat file to run the model.

2.2 Running Dynamic Models on Linux Cluster in parallel

SPDC currently has a 32-node Linux Cluster available that can be used for dynamic modelling. The advantage of the cluster is that several dynamic models can be run in parallel, significantly speeding up the turnaround time for a sensitivity study.

The procedure to do this is outlined below.

The following files are needed in the Unix directory for the specific model:

- All the necessary DYNAMO input decks

- A batch file with commands to run the decks

An example of a batch file is shown below.

The batch file is processed by typing:

    ./BELE_D1D2_HM_BB.BATCH &

In this example the batch file will submit 10 DYNAMO jobs to the cluster, which will be distributed across different nodes depending on availability. All jobs will run in parallel. The jobs can be monitored by typing bjobs -w to determine which node they are running on.
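For a sensitivity study with many decks, the batch file itself can be generated rather than typed. The Python sketch below shows one way this might be done. It is not a reproduction of the example batch file. It assumes that jobs are submitted through the scheduler's bsub command (the scheduler is inferred from the use of bjobs and bkill), that one DYNAMO job is submitted per input deck, and that the error-file option is -e. The function name and deck names are hypothetical.

```python
from typing import Iterable, List


def cluster_batch_lines(deck_names: Iterable[str],
                        submit_command: str = "bsub") -> List[str]:
    """Return one job submission line per DYNAMO input deck.

    Assumption: each line is '<submit_command> DYNAMO -i deck -o out -e err'.
    Check the submission command against the site's own batch file template.
    """
    lines = []
    for deck in deck_names:
        stem = deck.rsplit(".", 1)[0]  # deck name without its extension
        lines.append(
            f"{submit_command} DYNAMO -i {deck} -o {stem}.out -e {stem}.err"
        )
    return lines


if __name__ == "__main__":
    # Ten hypothetical sensitivity decks, run01.inp to run10.inp.
    decks = [f"run{number:02d}.inp" for number in range(1, 11)]
    print("\n".join(cluster_batch_lines(decks)))
```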

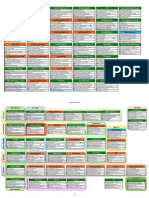

3 Naming Convention, Storage and Archive of Dynamic models

DYNAMO models can be run in either a PC (Windows) or a Linux environment. Models can also be run over a 32-node distributed Linux Cluster. While this flexibility takes advantage of the distributed computing environment and allows many sensitivities to be made, the result is that dynamic model input decks are not centralised and data is stored on individual hard drives and not backed up. The pros and cons of each environment are outlined below:

PC
- Can only run one process at a time
- Files located on a local hard drive are not backed up
- No one else can access the files

Linux
- Greater storage space available
- Files are centrally backed up and archived
- Files can be accessed and shared amongst other REs
- Can run multiple DYNAMO processes simultaneously on the Linux Cluster
- Samba can be used to access the Linux drives from Windows

Recommendation

It is strongly recommended to adhere to the following guidelines for storage and naming convention of all dynamic models in SPDC:

- All dynamic models should be stored in (or copied to) the Unix directories.

- Models which are currently being worked on should be stored in the Work directory. Finalised models that were used as the basis for a review or key decision (e.g. DG2) should be copied to the Final directory.

- Models can be developed, tested and run on a PC (Windows) at any time, provided that at suitable intervals the models are copied to the Unix directories for backup.

- An example of the recommended file naming convention and directory structure is outlined below. Note that only the Work directory has been expanded. A mirror image also exists in the Final directory. However, it is expected that the Final directories only contain a limited number of key models used for decision making. All sensitivity work should be contained in the Work directory.

- A directory structure that mirrors the Dynamic Modelling workflow is strongly encouraged to help organise packages of work into easily identified areas (e.g. separate directories for Experimental Design, History matching, Upscale sensitivities, etc.).

- DYNAMO input decks should be constructed to be able to run in both Unix and Windows environments. Environment variables in both Windows and Unix should be used to do this. In this way it is always possible to swap between the systems in case of problems.

- Access rights to the directories need to be actively managed so that models can be quality checked appropriately.

- The use of spaces in file names should be avoided as this can cause problems in Unix.

A diagram of the recommended directory structure is outlined below.

Benefits

The benefits of centralised storage are:

- Access to increased storage space

- Improved management of dynamic models

- Better archiving of models and projects

- Hibernated projects can be restarted quickly

- Sharing of common templates, scripts and workflows

Unix Directories

The following Unix directories are available to store dynamic model data:

Mid belt reservoir models:
    Linux directory: /glb/af/spdc/data/mid_project
    Samba link:      \\phc-n-s00051\mid\project\

West belt reservoir models:
    Linux directory: /glb/af/spdc/data/west_project
    Samba link:      \\phc-n-s00051\west\project\

Dynamic Model Naming Convention

Dynamic model input decks and run files should be named in a consistent manner to ease identification of key models and sensitivities. The recommended naming convention is outlined below and is consistent with Global standards and static model naming conventions.

Each file should have a Field, Reservoir, Description and Mode identifier.

Field          Four-character field abbreviation, e.g. BELE for Belema
Reservoir      Reservoir description, e.g. D1000A
Description    Text abbreviation to describe what the run is about, e.g. ED for Experimental Design
Mode           Optional addition to the Description identifier, e.g. rpp for Reduce++ runfile
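The convention can be checked automatically before a file is saved. The Python sketch below is an illustration rather than an SPDC tool. It assumes that the four identifiers are joined with underscores, as in the batch file name BELE_D1D2_HM_BB.BATCH used in section 2.2. The function name model_name and the default extension are hypothetical.

```python
from typing import Optional


def model_name(field: str, reservoir: str, description: str,
               mode: Optional[str] = None, extension: str = "inp") -> str:
    """Assemble a file name from Field, Reservoir, Description and Mode.

    Assumptions: identifiers are joined with underscores, the field code
    is upper case, and Mode is omitted when not given.
    """
    if len(field) != 4:
        raise ValueError("Field must be a four-character abbreviation")
    parts = [field.upper(), reservoir, description]
    if mode:
        parts.append(mode)
    name = "_".join(parts)
    if " " in name:
        # Spaces in file names can cause problems in Unix.
        raise ValueError("file names must not contain spaces")
    return f"{name}.{extension}"


if __name__ == "__main__":
    print(model_name("BELE", "D1000A", "ED"))
    print(model_name("BELE", "D1000A", "ED", mode="rpp", extension="run"))
```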

The following directory structure is recommended for all dynamic modelling in SPDC. The structure is compliant with global standards on data storage and is consistent with a similar directory structure being used for static models (Petrel).

The structure has the following features:

- The top level directory name describes a field and activity, e.g. BELE for Belema, FDP_0909 for FDP update September 2009.

- Beneath the top level directory, sub-directories of Dynamo and Work exist.

- An Include sub-directory exists which contains all the individual include files being used by MoReS. This allows easy access to and quality checking of the data.

- A series of directories exists below the Work directory which contain dynamic model data for each specific reservoir. Where reservoirs are combined, the name should reflect this. In the example directory structure, the D1D2A directory refers to the analysis for the combined D1000A and D2000A reservoirs.

- Beneath the reservoir level directory, several sub-directories can be created to organise specific items of work. In the example directory structure, the ED directory refers to Experimental Design, with further sub-directories for PlackettBurman and BoxBehnken analyses, HM refers to History matching and PVT to PVT sensitivities.

- The static model is normally transferred to DYNAMO via an Open Petrel Binary file (OPB file). It is recommended to store all OPB files in the Petrel sub-directory under the Include directory.

- Historical production data (from OFM) should be stored inside the History sub-directory.
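The features listed above can be turned into a small script that creates an empty directory skeleton for a new project. The Python sketch below is illustrative only. The directory names come from the list above, but their exact nesting is an assumption, because the original diagram is not reproduced here. The sketch places Include under Work, History and Petrel under Include, and Final beside Work. The project name BELE_FDP_0909 and the function name are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import List


def create_model_tree(root: Path, project: str, reservoir: str) -> List[Path]:
    """Create the directory skeleton for one reservoir and return the leaves.

    Assumed layout: <root>/<project>/Dynamo/{Work,Final}, with Include
    and the reservoir directory placed beneath Work.
    """
    dynamo = root / project / "Dynamo"
    work = dynamo / "Work"
    leaves = [
        dynamo / "Final",
        work / "Include" / "Petrel",    # OPB files from the static model
        work / "Include" / "History",   # historical production data from OFM
        work / reservoir / "ED" / "PlackettBurman",
        work / reservoir / "ED" / "BoxBehnken",
        work / reservoir / "HM",
        work / reservoir / "PVT",
    ]
    for leaf in leaves:
        # parents=True creates intermediate directories as needed
        leaf.mkdir(parents=True, exist_ok=True)
    return leaves


if __name__ == "__main__":
    # Build the tree in a scratch directory so nothing permanent is created.
    with tempfile.TemporaryDirectory() as scratch:
        for path in create_model_tree(Path(scratch), "BELE_FDP_0909", "D1D2A"):
            print(path.relative_to(scratch))
```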

4 Generating Historical Data for MoReS from OFM

Oil Field Manager (OFM) software from Schlumberger is used in SPDC to store production and pressure data. The data is stored at a completion level (Field -> Reservoir -> Well -> Zone). Data at a perforation level is not stored. This section explains how to extract production and pressure data in a tabular format suitable for direct input to MoReS.

At the time of writing this report, the template files outlined below only work with OFM 2005. The templates will NOT work with OFM 2007.3.1. Until advised otherwise, the user is recommended to use only OFM 2005 to perform these tasks.

4.1 Production Data

A template (Production DataT3.rpt) is available for extracting production data from OFM in a format that can be directly loaded into MoReS. The template file is included in the attached MoReS_Data_OFM.ZIP file at the beginning of the report. It can be extracted and stored in an appropriate place on the PC, e.g. C:\Apps\OFM.

The procedure to generate the MoReS data table is outlined below:

1. Open OFM and load the latest MS Access database with the relevant production data (East or West).

2. Filter on the required production dataset, e.g. BELE -> D1000B.

3. Open a report: Report -> Analysis -> OK.

4. Load the template production data report file: File -> Open, and navigate to the location where the template file is stored.

5. The report produces a table of the production data for the filtered dataset.

6. Production data for the individual completion zones can be generated by selecting View -> Summary -> By Item.

7. The data can be output to a text file. Right-click in the top left-hand corner of the central screen and select Text. In the File: dialog box, define the name of an output file and select Close and Update. The output file will appear in the appropriate directory.

4.2 Required edits to Production Data files

The tables generated by the OFM report files described above need to be edited by hand to work properly in MoReS. This section describes the edits required.

1. Inclusion of header information to define tables

A MoReS header file is required to define the necessary table format. An example is attached. The file generated by OFM should be included inside the header file.

Header format.txt

2. Addition of a zero rate/cumulative row at the start of each table

It is important to ensure that MoReS is synchronised with the history data table. The start time in MoReS is set using the TIME command in the SIMDATA dictionary. For example,

SIMDATA.TIME = DATE(31,08,1993) ! (day, month, year)

MoReS will start simulating from this time. The time in the first row of a history match table must correspond to the time that the well is opened for the first time, and the cumulatives and rates in this row must be zero.

In the example table below, the first row must be inserted by hand with zero rates and cumulatives. It is generally recommended that liquid rate constraints are applied to the production wells. Well BELE001S will start simulating from 31-Aug-1993 at a liquid production constraint of 288.97 b/d until 30-Sep-1993. The liquid rate constraint will then be increased to 1954.52 b/d and the simulation progressed to 31-Oct-1993, and so on.

3. Addition of a zero rate row at the end of the history period

A particular well will continue to simulate as described above, being controlled by the relevant constraint in the history data table. For situations where the well stops production before the end of the simulation run, a row must be inserted at the end of the table with zero rates. This will stop the well producing; otherwise it will continue to produce beyond the history period. The scripts will work correctly in most cases; however, the user needs to check that a row with zero rates and ratios is present. The cumulative volumes should be the same as for the previous month.
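Edits 2 and 3 are mechanical and can be checked with a short script. The Python sketch below is a simplified illustration and does not read or write the actual MoReS table format. It assumes each row holds a date label, a liquid rate and a cumulative liquid volume, and that the row dated at the end of a month carries the rate for that month. The rates and dates are those quoted for BELE001S above. The cumulative values, the shut-in date and the function name pad_history are hypothetical.

```python
from typing import List, Optional, Tuple

# (date label, liquid rate in b/d, cumulative liquid in bbl)
Row = Tuple[str, float, float]


def pad_history(rows: List[Row], start_date: str,
                shut_in_date: Optional[str] = None) -> List[Row]:
    """Add the zero rows required at the start and end of a history table."""
    if not rows:
        return []
    padded = list(rows)
    _, first_rate, first_cum = padded[0]
    if first_rate != 0.0 or first_cum != 0.0:
        # Edit 2: zero rate and zero cumulative at the time the well opens.
        padded.insert(0, (start_date, 0.0, 0.0))
    if shut_in_date is not None and padded[-1][1] != 0.0:
        # Edit 3: zero rate at the end, cumulative unchanged from the last row.
        padded.append((shut_in_date, 0.0, padded[-1][2]))
    return padded


if __name__ == "__main__":
    # Cumulatives are illustrative only: rate multiplied by days in the month.
    history = [("30-Sep-1993", 288.97, 8669.1),
               ("31-Oct-1993", 1954.52, 69259.22)]
    for row in pad_history(history, "31-Aug-1993", shut_in_date="30-Nov-1993"):
        print(row)
```

Running the function on a table that already has both zero rows leaves it unchanged, so it can also serve as a check after hand editing.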

Figure: exporting the OFM report to a text file (right-click in the top left-hand box, then select Text).

4.3 Down-hole Pressure Data from OFM: Datum Pressure, FBHP, SBHP

A template (PressureData.rpt) is available for extracting down-hole pressure data on a drainage point level from OFM in a format that can be directly loaded into MoReS. The template file is included in the ZIP file above. It can be extracted and stored in an appropriate place on the PC, e.g. C:\Apps\OFM.

The OFM report file will generate a table of well completion name, date, Datum BHP, Flowing BHP and Static BHP as shown below.

The procedure to generate the MoReS data table is similar to that described for production data above.

1. Open OFM and load the latest MS Access database with the relevant production data (East or West).

2. Filter on the required production dataset, e.g. BELE -> D1000B.

3. Open a report: Report -> Analysis -> OK.

4. Load the template report file (PressureData.rpt): File -> Open, and navigate to the location where the template file is stored.

5. Select a particular completion from the lower left-hand window.

6. The pressure data for that completion are displayed in the central window.

7. The data can be output to a text file. Right-click in the top left-hand corner of the central screen and select Text. In the File: dialog box, define the name of an output file and select Close and Update. The output file will appear in the appropriate directory.

4.4 Surface Pressure Data from OFM: THP

A template (PressureDataTHP.rpt) is available for extracting surface pressure data on a drainage point level from OFM in a format that can be directly loaded into MoReS. The template file is included in the ZIP file above. It can be extracted and stored in an appropriate place on the PC, e.g. C:\Apps\OFM.

The OFM report file will generate a table of well completion name, date and THP as shown below. THP data is reported on a monthly basis. This is different from the down-hole pressure data, which is only reported at dates when specific down-hole pressure surveys were conducted.

The procedure to generate the MoReS data table is identical to that described for down-hole pressure data in section 4.3.

5 Generating Pressure Data Tables from BHP Database

The BHP Database is used to store a range of pressure test data. This section describes the process of extracting the data in a form that can be used by MoReS. Data from the Nembe Creek Field will be used as the example. It is assumed that the user has access to the BHP Database (ID and Password).

The procedure to generate pressure data tables for MoReS is outlined below:

1. Open the BHP Database with ID and Password.

2. From the menu bar select Report -> Summary Reports -> Pdatum re-calculated.

3. Specify the field name in the dialog box.

4. The BHP Summary Report screen will appear, containing well test data and parameters for all wells in the Nembe Creek field. The data is ordered by Reservoir / Well / Date.

5. Extract the data to an Excel sheet: Report -> Save to File... -> Save as type (Excel with headers).

6. Save the Excel file to a location on the C: drive.

7. The pressure data Excel file will have data in columns A to AB. The attached template file contains Excel functions in columns AC and AD to extract data tables in a form suitable for MoReS. Copy the functions from the template file into columns AC and AD of the file extracted from the database.

    a. Column AC: Well data (FTHP, FBHP, SBHP, Pdatum)

    b. Column AD: Reservoir data (Pdatum)

8. The data can be used to create MoReS input tables. Table definition statements are also included in row 1.


- Pneumatic Actuator InformationДокумент24 страницыPneumatic Actuator Informationsapperbravo52Оценок пока нет

- Macam-Macam Artificial LiftДокумент52 страницыMacam-Macam Artificial LiftRichard Arnold SimbolonОценок пока нет

- Petex - Digital Oil Field Brochure PDFДокумент15 страницPetex - Digital Oil Field Brochure PDFmkwende100% (1)

- Flowing Well PerformanceДокумент57 страницFlowing Well PerformancemkwendeОценок пока нет
