SOLUTIONS

Загружено:

mm8871Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

SOLUTIONS

Загружено:

mm8871Авторское право:

Доступные форматы

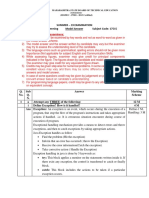

Hints and Answers to Selected Problems of the Exercises

Chapter 1.

Introduction

1.1

Hint : See the first and second paragraph of section 1.2 (Fuzzy Systems).

1.2

Hint : See Table 1.2 (Soft Computing Techniques) and the subsequent text.

Chapter 2.

Fuzzy Sets

2.1.

Hint : Apply Venn diagram.

2.3 & 2.4.

Hint : Use graph paper. For various values of x, find the value of μ(x), and then map this value to the new value using the transformation function.

2.7

A = { 0, 1, 2, 3 }, B = { 2, 3, 5 }

R = { ( 0, 2 ), ( 0, 3 ), ( 0, 5 ), ( 1, 2 ), ( 2, 3 ), ( 2, 5 ), ( 3, 2 ) }

S = { ( 0, 2 ), ( 0, 3 ), ( 0, 5 ), ( 1, 3 ), ( 2, 5 ), ( 3, 5 ) }

T = { ( 2, 0 ), ( 3, 0 ), ( 3, 2 ), ( 3, 4 ), ( 5, 0 ), ( 5, 2 ) }

2.9

See Example 2.28 (α-cut).

2.10

F = 0.6/a + 0.2/b + 0.3/c + 0.9/d

F_0.2 = { a, b, c, d } and 0.2·F_0.2 = 0.2/a + 0.2/b + 0.2/c + 0.2/d

F_0.3 = { a, c, d } and 0.3·F_0.3 = 0.3/a + 0.3/c + 0.3/d

F_0.6 = { a, d } and 0.6·F_0.6 = 0.6/a + 0.6/d

F_0.9 = { d } and 0.9·F_0.9 = 0.9/d
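The α-cuts listed for 2.10 can be checked mechanically. The following is a minimal sketch, assuming the fuzzy set is held as a Python dictionary from element to membership value; the function names `alpha_cut`, `scaled_cut` and `recompose` are illustrative and do not come from the text.

```python
# Fuzzy set F from Problem 2.10: element -> membership value.
F = {"a": 0.6, "b": 0.2, "c": 0.3, "d": 0.9}

def alpha_cut(fuzzy, alpha):
    """Crisp set of elements whose membership is at least alpha."""
    return {x for x, mu in fuzzy.items() if mu >= alpha}

def scaled_cut(fuzzy, alpha):
    """Fuzzy set alpha * F_alpha: every member of the cut gets membership alpha."""
    return {x: alpha for x in alpha_cut(fuzzy, alpha)}

def recompose(fuzzy):
    """Union (element-wise max) of alpha * F_alpha over all membership levels."""
    result = {}
    for alpha in sorted(set(fuzzy.values())):
        for x, mu in scaled_cut(fuzzy, alpha).items():
            result[x] = max(result.get(x, 0.0), mu)
    return result

for level in sorted(set(F.values())):
    print(level, sorted(alpha_cut(F, level)))
```

Taking the union of the scaled cuts over all four levels gives back F itself, which is a quick consistency check on the answer.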

2.11

See Example 2.32 (Fuzzy Cardinality).

2.12 & 2.13

See Examples 2.32 (Fuzzy Cardinality) and 2.33 (Fuzzy Extension principle).

Chapter 3.

Fuzzy Logic

3.1

Hint : Realize AND and OR using , and .

3.2

Hint : Apply truth table method.

3.3 & 3.4

See Example 3.3 (Validity of an argument).

3.5

Hint : Recall that a collection of statements is said to be consistent if they can all be true

simultaneously. Construct the truth table and check.

3.10

See Example 3.19 (Zadeh's interpretation of fuzzy rule).

3.11 & 3.12

See Example 3.21 (Fuzzy reasoning with the help of Generalized Modus Ponens).

Chapter 4.

Fuzzy Inference Systems

4.1

See Sections 4.7.1 (Fuzzy air conditioner controller) and 4.7.2 (Fuzzy cruise controller).

Chapter 5.

Rough Sets

5.1

Proof follows from the definitions of equivalence relations and indiscernibility.

5.2

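Let $x \in \underline{B}(U - X)$. Then $[x]_B \subseteq U - X \Rightarrow [x]_B \cap X = \emptyset \Rightarrow x \notin \overline{B}(X) \Rightarrow x \in U - \overline{B}(X)$. Therefore, $\underline{B}(U - X) \subseteq U - \overline{B}(X)$. Similarly prove $U - \overline{B}(X) \subseteq \underline{B}(U - X)$.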

5.5

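The reduced table is

| # | Customer Name | Gender (GD) | Amount (A) | Payment Mode (P) |
|---|---------------|-------------|------------|------------------|
| 1 | Mili | F | High | CC |
| 2 | Bill | M | Low | Cash |
| 3 | Rita | F | High | CC |
| 4 | Pam | F | High | CC |
| 5 | Maya | F | Medium | Cash |
| 6 | Bob | M | Medium | CC |
| 7 | Tony | M | Low | Cash |
| 8 | Gaga | F | High | CC |
| 9 | Sam | M | Low | Cash |
| 10 | Abu | M | Low | Cash |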

Considering the indiscernible set of objects { 1, 3, 4, 8 } we derive the rule : IF (Gender = F) AND (Amount = High) THEN (Payment Mode = Credit Card). Obtain the other rules similarly.
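The remaining rules for 5.5 can be obtained by grouping objects that agree on the condition attributes. The sketch below assumes the conditions are the Gender and Amount columns of the reduced table and the decision is the Payment Mode; the `ROWS` layout and the function name `derive_rules` are illustrative only.

```python
from collections import defaultdict

# Reduced table: (object #, name, gender, amount, payment mode).
ROWS = [
    (1, "Mili", "F", "High", "CC"), (2, "Bill", "M", "Low", "Cash"),
    (3, "Rita", "F", "High", "CC"), (4, "Pam", "F", "High", "CC"),
    (5, "Maya", "F", "Medium", "Cash"), (6, "Bob", "M", "Medium", "CC"),
    (7, "Tony", "M", "Low", "Cash"), (8, "Gaga", "F", "High", "CC"),
    (9, "Sam", "M", "Low", "Cash"), (10, "Abu", "M", "Low", "Cash"),
]

def derive_rules(rows):
    """Group objects indiscernible on (gender, amount); emit a rule when
    every object in a group has the same payment mode."""
    groups = defaultdict(list)
    for obj, _name, gender, amount, payment in rows:
        groups[(gender, amount)].append((obj, payment))
    rules = []
    for condition, members in groups.items():
        decisions = {payment for _obj, payment in members}
        if len(decisions) == 1:  # consistent group, so a certain rule
            rules.append((condition, decisions.pop(), [o for o, _p in members]))
    return rules

for (gender, amount), payment, objects in derive_rules(ROWS):
    print(f"IF Gender = {gender} AND Amount = {amount} "
          f"THEN Payment Mode = {payment}   (objects {objects})")
```

Groups whose members disagree on the decision would be skipped by this sketch; in this table every group is consistent.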

5.7

See Example 5.17 (Data Clustering).

Chapter 6.

Artificial Neural Networks : Basic Concepts

6.2

3 input, 1 output net with w1 = w2 = w3 = 1, and activation function

$$
y_{out} = f(y_{in}) =
\begin{cases}
1, & \text{if } y_{in} \geq 2 \\
0, & \text{otherwise}
\end{cases}
$$
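The net in 6.2 is small enough to tabulate. The following sketch assumes binary inputs and reads the threshold condition as "y_in is at least 2" (the comparison symbol is an assumption here); the function name `neuron` is illustrative.

```python
from itertools import product

def neuron(x1, x2, x3, weights=(1, 1, 1), threshold=2):
    """Single threshold unit: fires when the weighted input sum reaches
    the threshold (assumed comparison: y_in >= threshold)."""
    y_in = weights[0] * x1 + weights[1] * x2 + weights[2] * x3
    return 1 if y_in >= threshold else 0

# Tabulate the response for all 8 binary input combinations.
for inputs in product((0, 1), repeat=3):
    print(inputs, neuron(*inputs))
```

Under these assumptions the unit outputs 1 exactly when at least two of the three inputs are 1.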

6.3

Hint : Draw two straight lines separating the sets A and B.

6.4

Hint : What is the equation of a plane that separates the two classes?

6.6

See Example 6.6 (Realizing the logical AND function through Hebb learning).

6.7

See Example 6.7 (Learning the logical AND function by a perceptron).

6.9

See Example 6.9 (Competitive learning through winner-takes-all strategy).

Chapter 7.

Elementary Pattern Classifiers

7.1

Hint : Take the training data in bipolar form and then apply Hebb learning rule.

7.3

See Example 7.4 (ADALINE training for the AND-NOT function).

7.5

See Example 7.5 (MADALINE training for the XOR function).

Chapter 8.

Pattern Associators

8.1

An n-input auto-associative net can store at most n - 1 patterns. So,

1. Check whether the number of patterns <= n - 1
2. Check orthogonality of the input patterns
3. Obtain the weight matrix of each individual pattern [See Sec. 8.1.1]
4. Obtain the weight matrix for storage of multiple patterns

See Examples 8.5 (Storage of multiple bipolar patterns in auto-associative neural nets) and 8.6 (Recognizing patterns by auto-associative nets storing multiple patterns).
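The four steps for 8.1 can be sketched as follows. This is only an illustration: it assumes bipolar patterns, takes the weight matrix of a single pattern to be its outer product with itself (Hebb rule), leaves the diagonal as it is, and uses two made-up patterns rather than the ones from the exercise. The names `store` and `recall` are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def store(patterns):
    """Build the overall weight matrix for a list of bipolar patterns."""
    n = len(patterns[0])
    # Step 1: capacity check, at most n - 1 patterns.
    if len(patterns) > n - 1:
        raise ValueError("too many patterns for an n-input net")
    # Step 2: the patterns must be mutually orthogonal.
    for i in range(len(patterns)):
        for j in range(i + 1, len(patterns)):
            if dot(patterns[i], patterns[j]) != 0:
                raise ValueError("patterns are not orthogonal")
    # Steps 3 and 4: sum the outer products of the individual patterns.
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for r in range(n):
            for c in range(n):
                weights[r][c] += p[r] * p[c]
    return weights

def recall(weights, x):
    """Present x to the net and apply a bipolar step activation."""
    n = len(x)
    y_in = [sum(x[r] * weights[r][c] for r in range(n)) for c in range(n)]
    return [1 if v > 0 else -1 for v in y_in]

# Two illustrative orthogonal patterns (not taken from the exercise).
P1, P2 = [1, 1, -1, -1], [1, -1, 1, -1]
W = store([P1, P2])
print(recall(W, P1), recall(W, P2))
```

Because the stored patterns are orthogonal, presenting either one returns it unchanged.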

8.2

See Example 8.9 (Recognition of noisy input by hetero-associative net) for single pair

pattern association. Multiple associations are stored by adding up the individual weight

matrices.

8.3

Hint: Use bipolar representation of input string. See Example 8.10 (Computing the weight

matrix of a Hopfield net and testing its performance).

8.4

Find weight matrix for individual pattern association. Add weight matrices of individual

patterns to determine overall weight matrix. See Example 8.13 (Storage of multiple

associations on a BAM).

Chapter 9.

Competitive Neural Nets

9.1

See Example 9.1 (Clustering by MAXNET).

9.2

See Example 9.2 (Learning by Kohonen's self-organizing map).

9.3

See Examples 9.3 (Learning by LVQ net) and 9.4 (Clustering application of LVQ net).

9.4

See Examples 9.5 (Learning by ART1 net) and 9.6 (ART1 net operation).

Chapter 10. Backpropagation

10.2. Hint : You may consider a network with 8 nodes at the input layer corresponding to the 8 letters of the word 'computer'. A single output is sufficient for the Yes/No decision. Design a procedure to map a given word to some numerical expression suitable to feed the proposed net. Train the net with the given training set. Test the trained net with the given test words. Does the outcome tally with your intuitive solution?
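One possible word-to-number mapping for 10.2 is sketched below. It is only one of many reasonable choices, not the book's procedure: each of the 8 input nodes receives one letter scaled into (0, 1], and words are truncated or padded with zeros to 8 positions. The function name `encode_word` and the scaling are assumptions.

```python
def encode_word(word, length=8):
    """Map a word to `length` numbers in [0, 1], one per input node.

    Letters a..z become 1/26 .. 26/26; padding and any other
    character become 0.0. Longer words are truncated.
    """
    letters = word.lower()[:length].ljust(length)
    return [
        (ord(ch) - ord("a") + 1) / 26 if "a" <= ch <= "z" else 0.0
        for ch in letters
    ]

print(encode_word("computer"))
print(encode_word("cat"))
```

Any encoding that keeps similar words numerically close would serve equally well; comparing a few alternatives is part of the exercise.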

Chapter 11. Elementary Search Techniques

11.1

Hint: Consider the entire X-Y plane as a 2-dimensional array of cells, each cell having unit

length and breadth. Then take g ( n ) = length of the actual path from the starting cell to the

current cell n, and h ( n ) = the Manhattan distance between n and the destination. See

Example 11.6 (An A* algorithm to solve a maze problem).
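The choice of g(n) and h(n) in this hint can be tried out on a small grid. The sketch below is a generic A* search over unit cells with 4-neighbour moves, using the Manhattan distance as h(n); the grid, the 0/1 encoding of free and blocked cells, and the function names are illustrative and not taken from the exercise.

```python
import heapq

def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def a_star(grid, start, goal):
    """Return the length of a shortest path from start to goal, or None.

    grid[r][c] == 0 marks a free cell, 1 a blocked cell.
    g = path length so far, h = Manhattan distance to the goal.
    """
    rows, cols = len(grid), len(grid[0])
    best_g = {start: 0}
    frontier = [(manhattan(start, goal), 0, start)]  # entries are (f, g, cell)
    while frontier:
        _f, g, cell = heapq.heappop(frontier)
        if cell == goal:
            return g
        if g > best_g.get(cell, float("inf")):
            continue  # stale queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                heapq.heappush(frontier, (new_g + manhattan(nxt, goal), new_g, nxt))
    return None

MAZE = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(a_star(MAZE, (0, 0), (2, 0)))
```

With unit steps and no diagonal moves the Manhattan distance never overestimates the remaining cost, so the path length returned is optimal.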

11.5

Hint: Include additional rules, e.g., <adverb> → very <adverb>. However, this will enable you to put as many 'very's as you want. If at most one 'very' is to be allowed, then include production rules such as <adverb> → very <adverb> etc.

11.6

See Problem 11.7 (Solving the Satisfiability problem using AND-OR graph).

11.7

Hint : The production rules will be expanded as AND arcs. When there is a choice of

production rules, i.e. there are several rules with the same string on the left hand side, use

OR arcs.

11.10 See Problem 11.10 (Applying constraint satisfaction to solve cryptarithmetic puzzle).

11.11 See Problem 11.9 (Applying constraint satisfaction to solve crossword puzzle).

11.12 See the text on the Map colouring problem in Subsection 11.4.8.

11.13 See Problem 11.2 (Monkey and Banana problem).

Chapter 12. Advanced Search Strategies

12.1

Hint: Each chromosome will be a binary string of length n × k, where n is the number of nodes and k = ceiling ( log2 n ). Given such a chromosome, it is divided into n parts, each consisting of k bits. The first k-bit substring encodes node 1, the 2nd k-bit substring encodes node 2, and so on. As the function f is to be minimized, we may take 1/f as the fitness function. The initial population is generated randomly.
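The encoding described in this hint can be sketched as follows. The objective f is not given here, so the example passes in a made-up positive function purely for illustration; the names `bits_per_node`, `decode`, `random_population` and `fitness` are likewise assumptions.

```python
import random

def bits_per_node(n):
    """k = ceiling(log2 n), computed with integers; at least 1 bit."""
    return max(1, (n - 1).bit_length())

def decode(chromosome, n):
    """Split an n*k-bit string into n integers, one per node."""
    k = bits_per_node(n)
    if len(chromosome) != n * k:
        raise ValueError("chromosome must have n * k bits")
    return [int(chromosome[i * k:(i + 1) * k], 2) for i in range(n)]

def random_population(size, n, rng):
    """Generate `size` random chromosomes for an n-node problem."""
    k = bits_per_node(n)
    return ["".join(rng.choice("01") for _ in range(n * k)) for _ in range(size)]

def fitness(chromosome, n, f):
    """Fitness 1/f for a minimisation objective f (assumed strictly positive)."""
    return 1.0 / f(decode(chromosome, n))

def toy_f(values):
    # Hypothetical objective, used only to make the sketch runnable.
    return 1 + sum(values)

rng = random.Random(0)
population = random_population(4, 3, rng)
print([(c, decode(c, 3), fitness(c, 3, toy_f)) for c in population])
```

If f can reach zero for the actual problem, a variant such as 1/(1 + f) avoids division by zero.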

12.2

Hint : Encode the solutions as in Ex. 12.1. Use f directly as the energy function. Try with Tmax = 100, Tmin = 0.01, α = 0.8. Tune the parameters if necessary.
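A skeleton for 12.2 is sketched below. It assumes that 0.8 is a geometric cooling factor (T is multiplied by 0.8 after each temperature level), that a neighbour is produced by flipping one random bit, and that a fixed number of moves is tried per level; the energy function used at the end is a toy stand-in for f. All names are illustrative.

```python
import math
import random

def cooling_schedule(t_max=100.0, t_min=0.01, alpha=0.8):
    """Temperatures t_max, alpha*t_max, ... down to the last value >= t_min."""
    temps = []
    t = t_max
    while t >= t_min:
        temps.append(t)
        t *= alpha
    return temps

def flip_one_bit(state, rng):
    """Neighbour move: flip one randomly chosen bit of a binary string."""
    i = rng.randrange(len(state))
    return state[:i] + ("1" if state[i] == "0" else "0") + state[i + 1:]

def anneal(initial, energy, rng, moves_per_temp=20):
    """Simulated annealing; returns the best state seen."""
    current = best = initial
    for t in cooling_schedule():
        for _ in range(moves_per_temp):
            candidate = flip_one_bit(current, rng)
            delta = energy(candidate) - energy(current)
            # Always accept improvements; accept worse moves with prob e^(-delta/T).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current = candidate
                if energy(current) < energy(best):
                    best = current
    return best

def toy_energy(state):
    # Hypothetical energy: number of 1 bits (stands in for f).
    return state.count("1")

result = anneal("11111111", toy_energy, random.Random(1))
print(result, toy_energy(result), len(cooling_schedule()))
```

With the suggested parameters the schedule has 42 temperature levels, so raising `moves_per_temp` is the simplest way to give the search more time before tuning the temperatures themselves.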

Вам также может понравиться

- Advance Algorithms PDFДокумент2 страницыAdvance Algorithms PDFEr Umesh Thoriya0% (1)

- Basic Questions and Answers Digital NetworksДокумент2 страницыBasic Questions and Answers Digital NetworksGuillermo Fabian Diaz Lankenau100% (1)

- Lab 5 Best First Search For SchedulingДокумент3 страницыLab 5 Best First Search For SchedulingchakravarthyashokОценок пока нет

- PC FileДокумент57 страницPC FileAvinash VadОценок пока нет

- Fundamentals of Apache Sqoop NotesДокумент66 страницFundamentals of Apache Sqoop Notesparamreddy2000Оценок пока нет

- CH1 - Introduction To Soft Computing TechniquesДокумент25 страницCH1 - Introduction To Soft Computing Techniquesagonafer ayeleОценок пока нет

- Lab Manual Soft ComputingДокумент44 страницыLab Manual Soft ComputingAnuragGupta100% (1)

- Java Sample Papers With AnswersДокумент154 страницыJava Sample Papers With AnswersRenu Deshmukh100% (1)

- Galgotias University Datascience Lab ManualДокумент39 страницGalgotias University Datascience Lab ManualRaj SinghОценок пока нет

- MA7155-Applied Probability and Statistics Question BankДокумент15 страницMA7155-Applied Probability and Statistics Question Bankselvakrishnan_sОценок пока нет

- VTU Network Lab Experiment Manual - NetSimДокумент89 страницVTU Network Lab Experiment Manual - NetSimshuliniОценок пока нет

- CS2259 Set1Документ3 страницыCS2259 Set1Mohanaprakash EceОценок пока нет

- Structured vs Object-Oriented Programming ReportДокумент10 страницStructured vs Object-Oriented Programming ReportMustafa100% (1)

- Dce Lab ManualДокумент21 страницаDce Lab ManualSarathi Nadar0% (1)

- Lab Manual B.Sc. (CA) : Department of Computer Science Ccb-2P2: Laboratory Course - IiДокумент31 страницаLab Manual B.Sc. (CA) : Department of Computer Science Ccb-2P2: Laboratory Course - IiJennifer Ledesma-PidoОценок пока нет

- Matlab Colour Sensing RobotДокумент5 страницMatlab Colour Sensing Robotwaqas67% (3)

- Signals and SystemsДокумент72 страницыSignals and Systemsajas777BОценок пока нет

- A Complete Note On OOP (C++)Документ155 страницA Complete Note On OOP (C++)bishwas pokharelОценок пока нет

- RAP Protocol Explained - Route Access ProtocolДокумент1 страницаRAP Protocol Explained - Route Access ProtocolRough MetalОценок пока нет

- 3 Sem - Data Structure NotesДокумент164 страницы3 Sem - Data Structure NotesNemo KОценок пока нет

- Iare DWDM and WT Lab Manual PDFДокумент69 страницIare DWDM and WT Lab Manual PDFPrince VarmanОценок пока нет

- Ann FLДокумент102 страницыAnn FLdpkfatnani05Оценок пока нет

- 15csl47 Daa Lab Manual-1Документ53 страницы15csl47 Daa Lab Manual-1Ateeb ArshadОценок пока нет

- HPC: High Performance Computing Course OverviewДокумент2 страницыHPC: High Performance Computing Course OverviewHarish MuthyalaОценок пока нет

- Infytq-Practice Sheet ProblemsДокумент22 страницыInfytq-Practice Sheet ProblemsKhushi SaxenaОценок пока нет

- 05-Adaptive Resonance TheoryДокумент0 страниц05-Adaptive Resonance TheoryCHANDRA BHUSHANОценок пока нет

- OOPs in C++ UNIT-IДокумент135 страницOOPs in C++ UNIT-IAnanya Gupta0% (1)

- Industrial RevolutionДокумент13 страницIndustrial RevolutionRenu Yadav100% (1)

- Tutorial Bank: Unit-I The Cellular Concept-System Design FundamentalsДокумент7 страницTutorial Bank: Unit-I The Cellular Concept-System Design Fundamentalsvictor kОценок пока нет

- Opencv Interview QuestionsДокумент3 страницыOpencv Interview QuestionsYogesh YadavОценок пока нет

- FLNN Lab Manual 17-18Документ41 страницаFLNN Lab Manual 17-18PrabakaranОценок пока нет

- Local Area Networks: Ethernet Chapter SolutionsДокумент2 страницыLocal Area Networks: Ethernet Chapter Solutionsluda392Оценок пока нет

- FDSP SДокумент22 страницыFDSP SYogesh Anand100% (1)

- Computer Arithmetic in Computer Organization and ArchitectureДокумент41 страницаComputer Arithmetic in Computer Organization and ArchitectureEswin AngelОценок пока нет

- CS34 Digital Principles and System DesignДокумент107 страницCS34 Digital Principles and System DesignSara PatrickОценок пока нет

- Digital Signal Processing QuantumДокумент332 страницыDigital Signal Processing QuantumSudhir MauryaОценок пока нет

- IoT NotesДокумент91 страницаIoT NotesVishu JaiswalОценок пока нет

- Anatomy of A MapReduce JobДокумент5 страницAnatomy of A MapReduce JobkumarОценок пока нет

- OFDM Complete Block DiagramДокумент2 страницыOFDM Complete Block Diagramgaurav22decОценок пока нет

- Open Book TestДокумент2 страницыOpen Book Testjoshy_22Оценок пока нет

- RGPV 7th Sem Scheme CSE.Документ1 страницаRGPV 7th Sem Scheme CSE.Ankit AgrawalОценок пока нет

- Assignment # 1 (Solution) : EC-331 Microprocessor and Interfacing TechniquesДокумент7 страницAssignment # 1 (Solution) : EC-331 Microprocessor and Interfacing TechniquesJacob Odongo Onyango100% (2)

- The FIS StructureДокумент3 страницыThe FIS StructureManea CristiОценок пока нет

- Department of Computer Science and Engineering 18Cs43: Operating Systems Lecture Notes (QUESTION & ANSWER)Документ8 страницDepartment of Computer Science and Engineering 18Cs43: Operating Systems Lecture Notes (QUESTION & ANSWER)Manas Hassija100% (1)

- Introduction to Arduino and Raspberry Pi for IoT ProjectsДокумент23 страницыIntroduction to Arduino and Raspberry Pi for IoT Projects4JK18CS031 Lavanya PushpakarОценок пока нет

- Mobile Computing Viva Voce QuestionsДокумент10 страницMobile Computing Viva Voce QuestionsMohamed Rafi100% (1)

- Case Study of Various Routing AlgorithmДокумент25 страницCase Study of Various Routing AlgorithmVijendra SolankiОценок пока нет

- ADA Week 5Документ3 страницыADA Week 5swaraj bhatnagar100% (1)

- Electronically Tunable Chaotic Oscillators Using OTA-C Derived From Jerk ModelДокумент23 страницыElectronically Tunable Chaotic Oscillators Using OTA-C Derived From Jerk ModelKhunanon KarawanichОценок пока нет

- Tutorial On Network Simulator (NS2)Документ24 страницыTutorial On Network Simulator (NS2)RakhmadhanyPrimanandaОценок пока нет

- Multi Way Merge SortДокумент20 страницMulti Way Merge SortDurga Lodhi100% (2)

- Neural Representation of AND, OR, NOT, XOR and XNOR Logic Gates (Perceptron Algorithm)Документ14 страницNeural Representation of AND, OR, NOT, XOR and XNOR Logic Gates (Perceptron Algorithm)MehreenОценок пока нет

- CS535 Assignment QuestionДокумент2 страницыCS535 Assignment QuestionhackermlfОценок пока нет

- Hints and Answers To Selected Problems of The Exercises: Chapter 1. IntroductionДокумент4 страницыHints and Answers To Selected Problems of The Exercises: Chapter 1. IntroductionayeniОценок пока нет

- C Se 150 Assignment 3Документ5 страницC Se 150 Assignment 3VinhTranОценок пока нет

- Javaplex TutorialДокумент37 страницJavaplex Tutorialphonon770% (1)

- ME730A - Modal Analysis: Theory and PracticeДокумент5 страницME730A - Modal Analysis: Theory and PracticePankaj KumarОценок пока нет

- Homework Verification and Stiffness Matrix DerivationДокумент14 страницHomework Verification and Stiffness Matrix DerivationShehbazKhanОценок пока нет

- A Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"От EverandA Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"Рейтинг: 2.5 из 5 звезд2.5/5 (2)

- Class5 PDFДокумент16 страницClass5 PDFSohaib MasoodОценок пока нет

- Hasse Diagram Practice WorksheetДокумент2 страницыHasse Diagram Practice Worksheetmm8871Оценок пока нет

- Class5 PDFДокумент16 страницClass5 PDFSohaib MasoodОценок пока нет

- 03 Life Processes Chapter Wise Important QuestionsДокумент17 страниц03 Life Processes Chapter Wise Important Questionsmm8871100% (1)

- Instruction For Photo & Signature: Sign With Black Ink PenДокумент2 страницыInstruction For Photo & Signature: Sign With Black Ink Penmm8871Оценок пока нет

- Nmbtetstjjj PDFДокумент175 страницNmbtetstjjj PDFmm8871Оценок пока нет

- AghabozorgiSeyedShirkhorshidiYingWah 2015 Time SeriesclusteringAdecadereviewДокумент23 страницыAghabozorgiSeyedShirkhorshidiYingWah 2015 Time SeriesclusteringAdecadereviewmm8871Оценок пока нет

- Information Technology (402) Class X (NSQF) : Time: 2 Hours Max. Marks: 50Документ1 страницаInformation Technology (402) Class X (NSQF) : Time: 2 Hours Max. Marks: 50citsОценок пока нет

- FinalReport PDFДокумент45 страницFinalReport PDFmm8871Оценок пока нет

- IT Class X 2019-2020 SyllabusДокумент1 страницаIT Class X 2019-2020 SyllabusanilОценок пока нет

- List of Skill Subjects Offered At: Secondary Level (For Ix & X)Документ1 страницаList of Skill Subjects Offered At: Secondary Level (For Ix & X)dilshadОценок пока нет

- Carbon and It's Compounds Theory and Worksheet Class 10Документ15 страницCarbon and It's Compounds Theory and Worksheet Class 10subham kumarОценок пока нет

- Pandas Data Wrangling Cheatsheet Datacamp PDFДокумент1 страницаPandas Data Wrangling Cheatsheet Datacamp PDFPamungkas AjiОценок пока нет

- CS IT ListДокумент1 страницаCS IT Listmm8871Оценок пока нет

- 01 Metals and Non Metals Imp Question and AnswersДокумент16 страниц01 Metals and Non Metals Imp Question and Answersmm8871Оценок пока нет

- G2 2Документ3 страницыG2 2mm8871Оценок пока нет

- Weekly Schedule Template 6-7Документ2 страницыWeekly Schedule Template 6-7zazasanОценок пока нет

- Credit CommitteeДокумент4 страницыCredit Committeemm8871Оценок пока нет

- Bill No Date Issued by Towards Amount (RS)Документ4 страницыBill No Date Issued by Towards Amount (RS)mm8871Оценок пока нет

- CO Class Test 1Документ1 страницаCO Class Test 1mm8871Оценок пока нет

- Fundamentals of Computer Design: Bina Ramamurthy CS506Документ25 страницFundamentals of Computer Design: Bina Ramamurthy CS506mm8871Оценок пока нет

- Ooad Lab ManualДокумент20 страницOoad Lab Manualmm8871Оценок пока нет

- Basics of Computers: Presentation by Trisha Kulhaan IX - DДокумент32 страницыBasics of Computers: Presentation by Trisha Kulhaan IX - Dmm8871Оценок пока нет

- Roll No. Paper Code:: Other Than MBAДокумент1 страницаRoll No. Paper Code:: Other Than MBAmm8871Оценок пока нет

- Student Achievements & Extracurricular ActivitiesДокумент1 страницаStudent Achievements & Extracurricular Activitiesmm8871Оценок пока нет

- Reviewers Response SheetДокумент1 страницаReviewers Response Sheetmm8871Оценок пока нет

- CS/IT Faculty Members Counselling Duties For 2 and 3 June 2018 B.Tech BlockДокумент1 страницаCS/IT Faculty Members Counselling Duties For 2 and 3 June 2018 B.Tech Blockmm8871Оценок пока нет

- B.Tech III Sem Student Details SheetДокумент2 страницыB.Tech III Sem Student Details Sheetmm8871Оценок пока нет

- Modalities MEДокумент2 страницыModalities MEmm8871Оценок пока нет

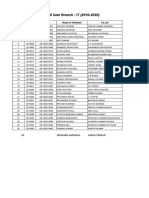

- B.Tech III Sem Branch: IT (2016-2020) : SN Roll No. En. No. Name of Students Fat - IntДокумент1 страницаB.Tech III Sem Branch: IT (2016-2020) : SN Roll No. En. No. Name of Students Fat - Intmm8871Оценок пока нет

- Describe Your Ideal Company, Location and JobДокумент9 страницDescribe Your Ideal Company, Location and JobRISHIОценок пока нет

- Quantifying Technology Leadership in LTE Standard DevelopmentДокумент35 страницQuantifying Technology Leadership in LTE Standard Developmenttech_geekОценок пока нет

- WHODAS 2.0 Translation GuideДокумент8 страницWHODAS 2.0 Translation Guidecocoon cocoonyОценок пока нет

- Edtpa LessonsДокумент12 страницEdtpa Lessonsapi-304588377100% (1)

- Marzano Teacher Evaluation ModelДокумент77 страницMarzano Teacher Evaluation ModelJosè ManuelОценок пока нет

- BTL CI Conference Interpreting Trainers Guide IndexДокумент12 страницBTL CI Conference Interpreting Trainers Guide IndexRosario GarciaОценок пока нет

- Telling Story Lesson PlanДокумент3 страницыTelling Story Lesson PlanivyredredОценок пока нет

- In Praise of Plain EnglishДокумент3 страницыIn Praise of Plain EnglishrahmathsОценок пока нет

- Storacles of ProphecyДокумент48 страницStoracles of ProphecyJorge Enrique Quispe AtoccsaОценок пока нет

- Inductive Approach Is Advocated by Pestalaozzi and Francis BaconДокумент9 страницInductive Approach Is Advocated by Pestalaozzi and Francis BaconSalma JanОценок пока нет

- Ayodhya501 563Документ63 страницыAyodhya501 563ss11yОценок пока нет

- On Hitler's Mountain by Ms. Irmgard A. Hunt - Teacher Study GuideДокумент8 страницOn Hitler's Mountain by Ms. Irmgard A. Hunt - Teacher Study GuideHarperAcademicОценок пока нет

- Conference-Proceedings Eas 2016Документ137 страницConference-Proceedings Eas 2016paperocamilloОценок пока нет

- Templars and RoseCroixДокумент80 страницTemplars and RoseCroixrobertorobe100% (2)

- The Fractal's Edge Basic User's GuideДокумент171 страницаThe Fractal's Edge Basic User's Guideamit sharmaОценок пока нет

- BRM Full NotesДокумент111 страницBRM Full Notesshri_chinuОценок пока нет

- Corporate Environmental Management SYSTEMS AND STRATEGIESДокумент15 страницCorporate Environmental Management SYSTEMS AND STRATEGIESGaurav ShastriОценок пока нет

- PCOM EssayДокумент6 страницPCOM EssayrobertОценок пока нет

- Short Report AstrologyДокумент9 страницShort Report Astrologyadora_tanaОценок пока нет

- Factors Affecting The Decision of Upcoming Senior High School Students On Choosing Their Preferred StrandДокумент7 страницFactors Affecting The Decision of Upcoming Senior High School Students On Choosing Their Preferred StrandJenikka SalazarОценок пока нет

- Feminine Aspects in Bapsi Sidhwa's The Ice-Candy - ManДокумент6 страницFeminine Aspects in Bapsi Sidhwa's The Ice-Candy - ManAnonymous CwJeBCAXp100% (3)

- Basic Concepts of ManagementДокумент30 страницBasic Concepts of ManagementMark SorianoОценок пока нет

- PHIL 103 SyllabusДокумент5 страницPHIL 103 SyllabusjenniferespotoОценок пока нет

- Good-LifeДокумент34 страницыGood-LifecmaryjanuaryОценок пока нет

- Poemandres Translation and CommentaryДокумент59 страницPoemandres Translation and Commentarynoctulius_moongod6002Оценок пока нет

- Power Evangelism Manual: John 4:35, "Say Not Ye, There Are Yet Four Months, and Then ComethДокумент65 страницPower Evangelism Manual: John 4:35, "Say Not Ye, There Are Yet Four Months, and Then Comethdomierh88Оценок пока нет

- Continuity and Changes: A Comparative Study On China'S New Grand Strategy Dongsheng DiДокумент12 страницContinuity and Changes: A Comparative Study On China'S New Grand Strategy Dongsheng DiFlorencia IncaurgaratОценок пока нет

- People's Participation for Good Governance in BangladeshДокумент349 страницPeople's Participation for Good Governance in BangladeshRizza MillaresОценок пока нет

- Extraverted Sensing Thinking PerceivingДокумент5 страницExtraverted Sensing Thinking PerceivingsupernanayОценок пока нет

- 71 ComsДокумент23 страницы71 ComsDenaiya Watton LeehОценок пока нет