Академический Документы

Профессиональный Документы

Культура Документы

Fast Generation of Video Panorama: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Fast Generation of Video Panorama: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Авторское право:

Доступные форматы

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 3, Issue 6, November-December 2014

ISSN 2278-6856

Fast Generation of Video Panorama

Saif Salah Abood 1, Abdulamir Abdullah Karim 2

1,2

University of Technology, School, Computer Science Dept.

Abstract

This paper discusses the possibility of stitching two video

stream to create a panoramic view in shortest possible time,

The challenge with constructing Panorama Video is the timeconsuming in stitching images. Image alignment didn't need

to detect all interest point, only few well distributed point is

enough to calculate good projection matrix, and the great

similarity between the frames in video stream provides

temporal information which can be used to reduce the number

of selected points. In this paper the stitching images method

using Harris Corners Detector has been customized to

accelerate the generation of Panorama Video, through reduce

the number of Interest Points in stitching each pair of frames.

Reducing the number of Interest Points has been done by

fragmentation of images into numerous regions and getting a

feedback information from the stitching of the previous frame

to limitate the search space based on previous area of

overlapping. Results shows that (i) panoramas generated from

the proposed algorithm feature a smooth transition in image

overlapping areas and satisfy human visual requirements; and

(ii) the speed of the generated panorama satisfies the

commonly accepted requirement in video panorama stitching.

Keywords: Panorama Video, Image Stitching, Corners

Detector

1. Introduction

Image stitching is based on finding the similarity between

adjacent images and determination of overlapping regions.

Image stitching (Image Registration or Image Alignment)

algorithms can be classified into three different categories:

Intensity-based algorithms which need a large amount of

computation and in case of do not give good result

rotation and scaling [1] [2]. Frequency-domain-based

algorithms are faster with small translation, rotation, and

scaling, but it fail with small overlapping regions [3] .

Feature-based

algorithms,

which

reduced

the

computational complexity by processed a small amount of

information (points, lines, or edges) to align images, and

they are robust to changes in image intensity [4] [5] .

Therefore, most of the research has been focused on

feature-based algorithms. one of the most commonly used

feature-based algorithms is the one which has been shown

in (Figure 1) below, in which Harris Corners Detector

detect the common points (Interest Points) between the

two images, these Points (Corners) have a large intensity

variations between the directions around. then these points

are matched by (Normalized-Cross-Correlation) to

generate a candidate pairs. It found that some of these

pairs are wrong and must be eliminated, so the RANSAC

algorithm has been used, which predicts a mathematical

model representing the largest number of these pairs and

remove all the pairs that do not fit with this model. After

Volume 3, Issue 6, November-December 2014

that Projection one of the two images overlaid on top of

the other and calculate Matrix (Homography Transform

Matrix) to transform this image, finally the two images

blended together. This algorithm is success with small

overlapping areas but with small difference in rotation,

and scaling.

Figure 1: the structure of the traditional images stitching

system

In this work ,the above traditional image stitching system

has been improved through two main orientations , first

one is the Fragmentation of images into regions, which

can reduce the number of Interest Points and thus reduce

the time of matching and correlation, while maintaining

the distribution and avoid clustering of the Interest Points

in one side of the frame. And the second one is the

Feedback information from previous stitching, which can

reduce the number of Interest Points by limiting the search

space based on previous Area of overlapping. The rest of

this paper is organized as: Section 2 Related Work

briefly discusses related work; Section 3 Concepts of the

Proposed Video Stitching Algorithm describes two basic

concept of the solution; Section 4 Structure of the

Proposed Video Stitching Algorithm Introduces the

Proposed Algorithm; Section 5 Performance Evaluation

and Results Discussion describes experimental results;

and finally Section 6 Conclusions.

2. Related Work

When studying the subject of video and image stitching ,

it is clear that there are many works relating image

stitching, on the other side very little works has been done

on the video stitching, especially considering the time of

stitching. Note that in this work will not used the

terminology "Video Mosaics" like other researchers [6] [7]

because it is refer to the construction of a static panoramic

image from a video stream, Alternatively, The

Page 80

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 3, Issue 6, November-December 2014

terminology "Panoramic Video" will be used to refer to

the stream of panoramic frames constructed from two or

more video streams. The authors in [8] [4], proposed a

video stitching system combine multiple video feeds from

ordinary cameras or webcams into a single panoramic

video. And to satisfy the real-time requirements , the

parameters for stitching are calculated only once, during

the system initialization. So their system requires an

initialization phase that is not real-time and is needed

whenever the cameras or webcams positions change. In

[9]. The authors presented a new improved algorithm,

self-adaptive selection of Harris corners by fragment the

image into regions and select corners according to the

normalized variance of region grayscales. they apply

multiple constraints, e.g., their midpoints, distances, and

slopes, on every two candidate pairs to remove incorrectly

matched pairs. Their algorithm, solved the video stitching

problem on a frame-by-frame basis, and does not fully

exploit the correlation between video frames, and requires

to identify which of the two images on the right and which

is on the left, and matching only the right-third of the left

image with the left-third of the right image. finally they

fixed the total number of points that are matching in each

stitching. The authors in [5] proposed a system for

stitching videos streamed previously recorded by freely

moving mobile phones cameras. however, the proposed

system treats the video stitching problem essentially as an

image stitching problem on individual frames independent

of each other. In [10] the authors exploits temporal

information to avoid solving the stitching problem fully on

a frame by frame basis. by employ previous frames

stitching results such as tracking interest points using

optical flow and using areas of overlap to limit the search

space for interest points. In all these works it was found

that the most time-consuming steps is the computation of

detection and matching large number of Interest Points, it

takes more than 85% of the time in stitching. While only

four well distributed Interest Points are enough to

calculate the Homography Transform Matrix. Hence, most

of the study approaches in this paper are aiming at

detection and matching less number of Interest Point.

ISSN 2278-6856

(1)

Where i is the current frame stitching. If the number of

detected corners in the previous stitching are few, then the

fragments number will be increased and reduced its size,

and vice versa. This fragmentation will enable to reduce

the time of matching and correlation, while maintaining

the distribution and avoid clustering of the corners in one

side of the frame.

3.2Exploit the area of overlap from previous frame

The area of overlap from frames (i) can potentially limit

the search space for Interest Points in frames (i+1). The

stitching algorithm performs the same steps as in the case

of the first frame pairs but instead of trying to detect

Interest Points in the whole frame (i+1), they are detected

in the area of overlap found in frames (i) only, plus some

buffer region in the frames (i+1) (best value

experimentally determined to be a 7 pixel band). The

efficiency of the buffer based approach performance is

inversely proportional to the size of the overlap area

between frames (n-1) and (n).

4. Structure of the Proposed Video Stitching

Algorithm

As previously mentioned the proposed algorithm for

stitching operations, contain six steps Fragmentation,

Detection, Matching and Estimation & Projecting,

Blending and Feedback. As in the (Figure 2).

3. Concepts of the Proposed Video Stitching

Algorithm

The performance of the proposed video stitching

algorithm is based on two basic concepts:

3.1Fragmentation

With Harris corners detector, the corners tend to cluster

around regions with richer texture, whereas fewer corners

will be selected in regions with less texture information.

That mean, selected corners are not evenly distributed.

Therefore, each frame will be fragmented into (nn)

regions where n is the number of fragments in the one side

of frame, and select one corner from each region by sorted

all candidate corners in their values, The number of all

selected corners will not be more than the number of

fragments (nn), this number of fragments will be

modified depending on the feedback from stitching the

previous frame, (Eq. (1))

Volume 3, Issue 6, November-December 2014

Figure 2: the structure of the proposed image stitching

system

Feedback contains three key information: the result of

previous stitching (success or failure), the overlap area

boundaries, and the number of the Interest Points in the

previous frame. Inability of the algorithm to stitch frame

pairs can be due to either: (i) there is no enough overlap

between frames (ii) enough correlation pairs between

frames, cant be found (iii) the algorithm computes an

incorrect geometric alignment. In the Blending step the

degradation method has been used, by linear gradient

Transparency from the center of one image to the other

Page 81

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 3, Issue 6, November-December 2014

5. Performance

Discussion

Evaluation

and

Results

Experiments were conducted on an Intel Core2 Duo

CPU T6500@ 2.10GHZ, with 4.0 GB RAM, NVIDIA

GeForce G 105M video card and two Logitech C905

webcam HD720p , USB 2.0, Ultra-smooth, light

correction, Auto Focus with Carl Zeiss optics and 2megapixel resolution for video and photos up to 8megapixels. The application was built using C#.Net

framework 4.0 and Accord.NET 2013 library. The details

of images pairs that have been used to test the algorithm

are shown in (Table 1) and (Figure 3).

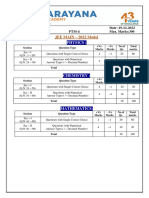

Table 1: Details of the testing images pairs.

Name

Dimension

Size

Overlapping

Corners

Horizontal

POV

Scene

Lake

640640

73KB

82KB

486 pixel

= 76 %

clustering

similar

similar

too far

House

640640

121KB

132KB

235 pixel

= 36 %

distribution

different

different

close

indoor

School

1024768

151KB

157KB

747 pixel

= 73 %

clustering

different

similar

nearby

outdoor

Nature

16701024

846KB

914KB

775 pixel

= 46 %

distribution

similar

similar

far

ISSN 2278-6856

5.1Evaluation of Fragmentation

The experimental results on the (House) image pair shown

in (Figure 4). The result of applying the traditional Harris

detector on the original image, (Figure 4.a ), is shown in

(Figure 4.b ) give 351361 corners, and the result with

fragmentation 1010 in (Figure 4.c ) give 5557 corners ,

also with fragmentation 2020 in (Figure 4.d ) give

131132 corners. To be more specific, with increasing the

value of n, the number of corners will be increased and the

result will be closer to that of The Traditional Harris

Detector. On the other hand, if the value of n is small, the

number of corners will be decreased, Knowing that the

execution time has not changed. The (Table 2) and (Chart

1) contains the results of Applying the Fragmentation on

the four images pairs with different value of n .

Table 2: Details of testing Fragmentation on four images

pairs

Harris detector

Fragmentation

with n=20

reduced corners

Fragmentation

with n=15

reduced corners

Fragmentation

with n=10

reduced corners

Lake

77132

House

351361

School

524519

Nature

26224404

4058

131132

151125

295324

53 %

63 %

73 %

91 %

3040

9888

11191

177194

66 %

73 %

80 %

94 %

1822

5557

6856

8893

80 %

84 %

88 %

97 %

Chart 1: Details of testing Fragmentation on four images pairs

It is clear from the above, that benefit of fragmentation

increases with the large-size and high-resolution images.

5.2Evaluation of Limitation the search space

The results of limitation the search space based on the

area of overlap in the previous frame on the image pair

(House) (without using the Fragmentation) is shown in

(Figure 5). Where the number of the selected corners

become 249295 corners instead of 351361 corner in

traditional Harris detector. as well as the time of the

search became 0.34 seconds instead of 0.82 seconds.

Figure 5. Limitation the search space on House image:

Figure 3: images pairs which used to test the algorithm.

Volume 3, Issue 6, November-December 2014

The (Chart 2) and (Table 3) illustrate the Change in

selected Corners limitation the search space on the four

images pairs, and how the usefulness of limitation is

directly proportional to the ratio of overlap between

images

Page 82

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 3, Issue 6, November-December 2014

ISSN 2278-6856

(A): Change in selected Corners

(B): Change in Time

Chart 2: Details of testing Limitation on four images pairs

Figure 6. stitching the image pair (House) using the proposed

algorithm:

Table 3: Details of testing Limitation on four images

pairs

Table (4) show details of outputs and execution time of

each stitching operations in proposed algorithm compared

with the outputs and execution time in the traditional

image stitching.

Table 4: Details of outputs and execution time of each stitching

operation on the four images pairs

5.3Final Result

the implementation of both fragmentation and limitation

on the pair (House) will decrease the total execution time

of all stitching operations from 0.95sec. to 0.36sec.

without any change in the quality of the resulting

panorama. (Figure 6) show the four stitching operations

on the image pair (House) with using the proposed

algorithm.

5.4Discussion

From compared the percentage of reducing time between

the execution time of the traditional algorithm and the

execution time of the proposed algorithm with the

percentage of overlapping area in each image pairs, find

that the percentage of reducing time (i) directly

proportional to the size and resolution of the image, in

other words, percentage of reducing time increases with

larger and high resolution images, because these image

have a lot of interest points that can be discarded, (ii) an

inverse proportional to the area of the overlapping , in

other words, percentage of reducing time increases with

small overlap area, because the search in a smaller space

needs less time.

Volume 3, Issue 6, November-December 2014

Page 83

International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)

Web Site: www.ijettcs.org Email: editor@ijettcs.org

Volume 3, Issue 6, November-December 2014

ISSN 2278-6856

6. Conclusions

A new algorithm has been presented to handle challenges

in real-time video panoramic Construction, which is timeconsuming of stitching processes. the contribution can be

summarized by improving images stitching algorithm to

reduce the number of Interest Points in each stitching

process through: (i) Employ Fragmentation to avoid the

points clustering as much as possible, (ii) Employ

Feedback to update the Fragmentation and limitate the

search space by exploit the previous overlapping area. (iii)

Employ Parallel Processing which has been resulted in

more efficient panorama stitching process. Experimental

results shows that (i) panoramas generated from the

proposed algorithm feature a smooth transition in image

overlapping areas and satisfy human visual requirements;

and (ii) the preview speed of the generated panorama

satisfies the real-time requirement that are commonly

accepted in video panorama stitching.

[10] M. El-Saban, M. Izz and A. Kaheel, Fast stitching of

videos captured from freely moving devices by

exploiting temporal redundancy, ICIP 2010.

[11] Harris CG, Stephen M "A combined corner and edge

detector", 4th Alvey vision conference. Manchester,

147151(1998).

[12] M. Fischler and R. Bolles, Random sample

consensus: A paradigm for model fitting with

applications to image analysis and automated

cartography, Communications of the ACM, 24(6),

1981.

[13] Feng Zhao, Qingming Huang and Wen Gao,

IMAGE MATCHING BY NORMALIZED CROSSCORRELATION 142440469X/ 2006 IEEE II 729

ICASSP 2006

References

[1] Hartley R, Gupta R ,"Linear pushbroom cameras.

IEEE Transactions on Pattern Analysis and Machine

Intelligence", 19: 963975, 1997.

[2] Chalechale A, Naghdy G, Mertins ," A Sketch-based

image matching using angular partitioning systems",

IEEE Trans on Man and Cybernetics and Part A 35:

2841. , 2005.

[3] Sivic J, Zisserman A ," Video google: A text retrieval

approach to object matching in videos". In:

Proceedings of the 9th IEEE International Conference

on Computer Vision. IEEE Computer Society

Washington DC and USA: Computer Vision-Volume

2, 2738, 2003.

[4] M. Zheng, X. Chen and L. Guo, Stitching Video

from Webcams, Lecture Notes In Computer Science;

Vol. 5359 archive Proceedings of the 4th

International Symposium on Advances in Visual

Computing, Part II, 2008.

[5] A. Kaheel, M. El-Saban, M. Refaat, and M. Izz,

Mobicast - A system for collaborative event casting

using mobile phones, in ACM MUM '09.

[6] Manuel Guillen Gonzalez, Phil Holifield, and Martin

Varley," Improved video mosaic construction by

accumulated alignment error distribution". In

Proceedings of the British Machine Vision

Conference, 1998.

[7] Richard Szeliski. "Video mosaics for virtual

environments", Virtual Reality, pages 2230, March

1996.

[8] W. Tang, T. Wong and P. Heng, "A System for Realtime Panorama Generation and Display in Teleimmersive Applications ", Multimedia, IEEE

Transactions on (Volume:7 , Issue: 2 ) April 2005.

[9] Several researchers from the Fuzhou University in

China and University of South Alabama in United

States of America published " Efficient Video

Panoramic Image Stitching Based on an Improved

Selection of Harris Corners and a Multiple-Constraint

Corner Matching", 2013.

Volume 3, Issue 6, November-December 2014

Page 84

Вам также может понравиться

- All Layer MEДокумент21 страницаAll Layer MEparamkusamОценок пока нет

- DEPTH MAPstereo-Pair VideoДокумент7 страницDEPTH MAPstereo-Pair VideoNk OsujiОценок пока нет

- Real-Time Stereo Vision For Urban Traffic Scene UnderstandingДокумент6 страницReal-Time Stereo Vision For Urban Traffic Scene UnderstandingBasilОценок пока нет

- A Foss Motion Estimation AlgorithmДокумент5 страницA Foss Motion Estimation AlgorithmVijay VijitizerОценок пока нет

- Time Stamp Extracting From CCTV FootageДокумент10 страницTime Stamp Extracting From CCTV FootageSakib ShahriyarОценок пока нет

- To Propose An Improvement in Zhang-Suen Algorithm For Image Thinning in Image ProcessingДокумент8 страницTo Propose An Improvement in Zhang-Suen Algorithm For Image Thinning in Image ProcessingIJSTEОценок пока нет

- Short Listing Likely Images Using Proposed Modified-Sift Together With Conventional Sift For Quick Sketch To Photo MatchingДокумент9 страницShort Listing Likely Images Using Proposed Modified-Sift Together With Conventional Sift For Quick Sketch To Photo MatchingijfcstjournalОценок пока нет

- School of Electrical, Computer and Energy Engineering, Arizona State University, Tempe, AZ Qianxu@asu - Edu, Chaitali@asu - Edu, Karam@asu - EduДокумент6 страницSchool of Electrical, Computer and Energy Engineering, Arizona State University, Tempe, AZ Qianxu@asu - Edu, Chaitali@asu - Edu, Karam@asu - Edumadhu.nandha9285Оценок пока нет

- Fast Video Motion Estimation Algorithm For Mobile Devices: Ho-Jae Lee, Panos Nasiopoulos, Victor C.M. LeungДокумент4 страницыFast Video Motion Estimation Algorithm For Mobile Devices: Ho-Jae Lee, Panos Nasiopoulos, Victor C.M. Leungamirsaid463Оценок пока нет

- Target Detection Based On Normalized Cross Co-Relation: Niladri Shekhar Das, S. Arunvinthan, E. GiriprasadДокумент7 страницTarget Detection Based On Normalized Cross Co-Relation: Niladri Shekhar Das, S. Arunvinthan, E. GiriprasadinventionjournalsОценок пока нет

- Camera Calibration ThesisДокумент5 страницCamera Calibration Thesislaurahallportland100% (2)

- Cvpr91-Debby-Paper Segmentation and Grouping of Object Boundaries Using Energy MinimizationДокумент15 страницCvpr91-Debby-Paper Segmentation and Grouping of Object Boundaries Using Energy MinimizationcabrahaoОценок пока нет

- Thesis BayerДокумент5 страницThesis Bayercarlamolinafortwayne100% (2)

- SIFT Feature MatchingДокумент12 страницSIFT Feature MatchingethanrabbОценок пока нет

- Transaccion de ImagenДокумент11 страницTransaccion de ImagenMatthew DorseyОценок пока нет

- EE698B-Digital Video Processing 2006-2007/I Term Project Hawk-Eye Tracking of A Cricket BallДокумент9 страницEE698B-Digital Video Processing 2006-2007/I Term Project Hawk-Eye Tracking of A Cricket BallTarun PahujaОценок пока нет

- Fractal Image Compression by Local Search and Honey Bee Mating OptimizationДокумент6 страницFractal Image Compression by Local Search and Honey Bee Mating OptimizationMaria MoraОценок пока нет

- An Efficient Video Segmentation Algorithm With Real Time Adaptive Threshold TechniqueДокумент4 страницыAn Efficient Video Segmentation Algorithm With Real Time Adaptive Threshold Techniquekhushbu7104652Оценок пока нет

- Algorithm For SegmentationДокумент28 страницAlgorithm For SegmentationKeren Evangeline. IОценок пока нет

- Natural Image Inpainting Through Examplar-Based Approach Using Local and Non Local Patch SparserДокумент5 страницNatural Image Inpainting Through Examplar-Based Approach Using Local and Non Local Patch SparsernagaidlОценок пока нет

- A New Fast Motion Estimation Algorithm - Literature Survey Instructor: Brian L. EvansДокумент8 страницA New Fast Motion Estimation Algorithm - Literature Survey Instructor: Brian L. EvanssssuganthiОценок пока нет

- Improved SIFT-Features Matching For Object Recognition: Emails: (Alhwarin, Wang, Ristic, Ag) @iat - Uni-Bremen - deДокумент12 страницImproved SIFT-Features Matching For Object Recognition: Emails: (Alhwarin, Wang, Ristic, Ag) @iat - Uni-Bremen - dethirupathiОценок пока нет

- Scene Detection in Videos Using Shot Clustering and Sequence AlignmentДокумент12 страницScene Detection in Videos Using Shot Clustering and Sequence AlignmentNivedha MsseОценок пока нет

- Automated Video Segmentation: Wei Ren, Mona Sharma, Sameer SinghДокумент11 страницAutomated Video Segmentation: Wei Ren, Mona Sharma, Sameer SinghtastedifferОценок пока нет

- Bundle Adjustment - A Modern Synthesis: Bill - Triggs@Документ71 страницаBundle Adjustment - A Modern Synthesis: Bill - Triggs@EmidioFernandesBuenoNettoОценок пока нет

- Design and Analysis of Motion Content Classifier For Block Matching Algorithm in H.264/AvcДокумент6 страницDesign and Analysis of Motion Content Classifier For Block Matching Algorithm in H.264/Avcrayar_sОценок пока нет

- Accurate Real-Time Disparity Map Computation Based On Variable Support WindowДокумент13 страницAccurate Real-Time Disparity Map Computation Based On Variable Support WindowAdam HansenОценок пока нет

- Dynamic Resolution of Image Edge Detection Technique Among Sobel, Log, and Canny AlgorithmsДокумент5 страницDynamic Resolution of Image Edge Detection Technique Among Sobel, Log, and Canny AlgorithmsijsretОценок пока нет

- Fast Stereo Matching Using Adaptive Guided Filtering: KeywordsДокумент22 страницыFast Stereo Matching Using Adaptive Guided Filtering: Keywordsthomas cyriacОценок пока нет

- An Efficient and Robust Algorithm For Shape Indexing and RetrievalДокумент5 страницAn Efficient and Robust Algorithm For Shape Indexing and RetrievalShradha PalsaniОценок пока нет

- 5614sipij05 PDFДокумент20 страниц5614sipij05 PDFsipijОценок пока нет

- Block Matching Algorithms For Motion EstimationДокумент6 страницBlock Matching Algorithms For Motion EstimationApoorva JagadeeshОценок пока нет

- Efficient Motion Estimation by Fast Threestep Search X8ir95p9y3Документ6 страницEfficient Motion Estimation by Fast Threestep Search X8ir95p9y3Naveen KumarОценок пока нет

- Hiep SNAT R2 - FinalДокумент15 страницHiep SNAT R2 - FinalTrần Nhật TânОценок пока нет

- Bayesian Stochastic Mesh Optimisation For 3D Reconstruction: George Vogiatzis Philip Torr Roberto CipollaДокумент12 страницBayesian Stochastic Mesh Optimisation For 3D Reconstruction: George Vogiatzis Philip Torr Roberto CipollaneilwuОценок пока нет

- Jordan1996 516 PDFДокумент12 страницJordan1996 516 PDFDeni ChanОценок пока нет

- Latex SubmissionДокумент6 страницLatex SubmissionguluОценок пока нет

- Efficient Graph-Based Image SegmentationДокумент15 страницEfficient Graph-Based Image SegmentationrooocketmanОценок пока нет

- A Dynamic Search Range Algorithm For H.264/AVC Full-Search Motion EstimationДокумент4 страницыA Dynamic Search Range Algorithm For H.264/AVC Full-Search Motion EstimationNathan LoveОценок пока нет

- Performance Evaluation of The Masking Based Watershed SegmentationДокумент6 страницPerformance Evaluation of The Masking Based Watershed SegmentationInternational Journal of computational Engineering research (IJCER)Оценок пока нет

- Point Pair Feature-Based Pose Estimation With Multiple Edge Appearance Models (PPF-MEAM) For Robotic Bin PickingДокумент20 страницPoint Pair Feature-Based Pose Estimation With Multiple Edge Appearance Models (PPF-MEAM) For Robotic Bin PickingBích Lâm NgọcОценок пока нет

- A Distributed Canny Edge Detector and Its Implementation On F PGAДокумент5 страницA Distributed Canny Edge Detector and Its Implementation On F PGAInternational Journal of computational Engineering research (IJCER)Оценок пока нет

- A Novel Adaptive Rood Pattern Search Algorithm: Vanshree Verma, Sr. Asst. Prof. Ravi MishraДокумент5 страницA Novel Adaptive Rood Pattern Search Algorithm: Vanshree Verma, Sr. Asst. Prof. Ravi MishraInternational Organization of Scientific Research (IOSR)Оценок пока нет

- Efficient Hierarchical Graph-Based Video Segmentation: Matthias Grundmann Vivek Kwatra Mei Han Irfan EssaДокумент8 страницEfficient Hierarchical Graph-Based Video Segmentation: Matthias Grundmann Vivek Kwatra Mei Han Irfan EssaPadmapriya SubramanianОценок пока нет

- A Fragment Based Scale Adaptive Tracker With Partial Occlusion HandlingДокумент6 страницA Fragment Based Scale Adaptive Tracker With Partial Occlusion HandlingJames AtkinsОценок пока нет

- Marker Detection For Augmented Reality Applications: Martin HirzerДокумент27 страницMarker Detection For Augmented Reality Applications: Martin HirzerSarra JmailОценок пока нет

- An Advanced Canny Edge Detector and Its Implementation On Xilinx FpgaДокумент7 страницAn Advanced Canny Edge Detector and Its Implementation On Xilinx FpgalambanaveenОценок пока нет

- An Image-Based Approach To Video Copy Detection With Spatio-Temporal Post-FilteringДокумент10 страницAn Image-Based Approach To Video Copy Detection With Spatio-Temporal Post-FilteringAchuth VishnuОценок пока нет

- Image CompressionДокумент7 страницImage CompressionVeruzkaОценок пока нет

- MlaaДокумент8 страницMlaacacolukiaОценок пока нет

- Finite Element Mesh SizingДокумент12 страницFinite Element Mesh SizingCandace FrankОценок пока нет

- Image SegmentationДокумент18 страницImage SegmentationAlex ChenОценок пока нет

- Efficiency Issues For Ray Tracing: Brian Smits University of Utah February 19, 1999Документ12 страницEfficiency Issues For Ray Tracing: Brian Smits University of Utah February 19, 19992hello2Оценок пока нет

- A Distributed Genetic Algorithm For Restoration of Vertical Line ScratchesДокумент8 страницA Distributed Genetic Algorithm For Restoration of Vertical Line ScratchesDamián Abalo MirónОценок пока нет

- Image Segmentation Based On Histogram Analysis and Soft ThresholdingДокумент6 страницImage Segmentation Based On Histogram Analysis and Soft ThresholdingSaeideh OraeiОценок пока нет

- IJETR031551Документ6 страницIJETR031551erpublicationОценок пока нет

- Tracking with Particle Filter for High-dimensional Observation and State SpacesОт EverandTracking with Particle Filter for High-dimensional Observation and State SpacesОценок пока нет

- Detection of Malicious Web Contents Using Machine and Deep Learning ApproachesДокумент6 страницDetection of Malicious Web Contents Using Machine and Deep Learning ApproachesInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Customer Satisfaction A Pillar of Total Quality ManagementДокумент9 страницCustomer Satisfaction A Pillar of Total Quality ManagementInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Analysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyДокумент6 страницAnalysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiДокумент12 страницStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewДокумент9 страницAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Experimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterДокумент7 страницExperimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- THE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSДокумент7 страницTHE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Detection of Malicious Web Contents Using Machine and Deep Learning ApproachesДокумент6 страницDetection of Malicious Web Contents Using Machine and Deep Learning ApproachesInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiДокумент12 страницStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Analysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyДокумент6 страницAnalysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- THE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSДокумент7 страницTHE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Experimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterДокумент7 страницExperimental Investigations On K/s Values of Remazol Reactive Dyes Used For Dyeing of Cotton Fabric With Recycled WastewaterInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Customer Satisfaction A Pillar of Total Quality ManagementДокумент9 страницCustomer Satisfaction A Pillar of Total Quality ManagementInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- The Mexican Innovation System: A System's Dynamics PerspectiveДокумент12 страницThe Mexican Innovation System: A System's Dynamics PerspectiveInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Staycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityДокумент10 страницStaycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Soil Stabilization of Road by Using Spent WashДокумент7 страницSoil Stabilization of Road by Using Spent WashInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Design and Detection of Fruits and Vegetable Spoiled Detetction SystemДокумент8 страницDesign and Detection of Fruits and Vegetable Spoiled Detetction SystemInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Digital Record For Privacy and Security in Internet of ThingsДокумент10 страницA Digital Record For Privacy and Security in Internet of ThingsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Deep Learning Based Assistant For The Visually ImpairedДокумент11 страницA Deep Learning Based Assistant For The Visually ImpairedInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewДокумент9 страницAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- The Impact of Effective Communication To Enhance Management SkillsДокумент6 страницThe Impact of Effective Communication To Enhance Management SkillsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Performance of Short Transmission Line Using Mathematical MethodДокумент8 страницPerformance of Short Transmission Line Using Mathematical MethodInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- A Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)Документ10 страницA Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)International Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Challenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19Документ10 страницChallenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19International Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Secured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpДокумент12 страницSecured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Anchoring of Inflation Expectations and Monetary Policy Transparency in IndiaДокумент9 страницAnchoring of Inflation Expectations and Monetary Policy Transparency in IndiaInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Advanced Load Flow Study and Stability Analysis of A Real Time SystemДокумент8 страницAdvanced Load Flow Study and Stability Analysis of A Real Time SystemInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Synthetic Datasets For Myocardial Infarction Based On Actual DatasetsДокумент9 страницSynthetic Datasets For Myocardial Infarction Based On Actual DatasetsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Impact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryДокумент8 страницImpact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- Predicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsДокумент10 страницPredicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsInternational Journal of Application or Innovation in Engineering & ManagementОценок пока нет

- BS7430 Earthing CalculationДокумент14 страницBS7430 Earthing CalculationgyanОценок пока нет

- Content Analysis of Studies On Cpec Coverage: A Comparative Study of Pakistani and Chinese NewspapersДокумент18 страницContent Analysis of Studies On Cpec Coverage: A Comparative Study of Pakistani and Chinese NewspapersfarhanОценок пока нет

- Frequency Polygons pdf3Документ7 страницFrequency Polygons pdf3Nevine El shendidyОценок пока нет

- Workplace Risk Assessment PDFДокумент14 страницWorkplace Risk Assessment PDFSyarul NizamzОценок пока нет

- In Search of Begum Akhtar PDFДокумент42 страницыIn Search of Begum Akhtar PDFsreyas1273Оценок пока нет

- HyperconnectivityДокумент5 страницHyperconnectivityramОценок пока нет

- Barack ObamaДокумент3 страницыBarack ObamaVijay KumarОценок пока нет

- Cultural Practices of India Which Is Adopted by ScienceДокумент2 страницыCultural Practices of India Which Is Adopted by ScienceLevina Mary binuОценок пока нет

- APPSC GROUP 4 RESULTS 2012 - Khammam District Group 4 Merit ListДокумент824 страницыAPPSC GROUP 4 RESULTS 2012 - Khammam District Group 4 Merit ListReviewKeys.comОценок пока нет

- EE360 - Magnetic CircuitsДокумент48 страницEE360 - Magnetic Circuitsبدون اسمОценок пока нет

- S010T1Документ1 страницаS010T1DUCОценок пока нет

- Gauss' Law: F A FAДокумент14 страницGauss' Law: F A FAValentina DuarteОценок пока нет

- Riveted JointsДокумент28 страницRiveted Jointsgnbabuiitg0% (1)

- Quemador BrahmaДокумент4 страницыQuemador BrahmaClaudio VerdeОценок пока нет

- Certified Vendors As of 9 24 21Документ19 страницCertified Vendors As of 9 24 21Micheal StormОценок пока нет

- Information Brochure: (Special Rounds)Документ35 страницInformation Brochure: (Special Rounds)Praveen KumarОценок пока нет

- Apps Android StudioДокумент12 страницApps Android StudioDaniel AlcocerОценок пока нет

- Soil Liquefaction Analysis of Banasree Residential Area, Dhaka Using NovoliqДокумент7 страницSoil Liquefaction Analysis of Banasree Residential Area, Dhaka Using NovoliqPicasso DebnathОценок пока нет

- Hawassa University Institute of Technology (Iot) : Electromechanical Engineering Program Entrepreneurship For EngineersДокумент133 страницыHawassa University Institute of Technology (Iot) : Electromechanical Engineering Program Entrepreneurship For EngineersTinsae LireОценок пока нет

- Surge Arrester PresentationДокумент63 страницыSurge Arrester PresentationRamiro FelicianoОценок пока нет

- Bosch Injectors and OhmsДокумент6 страницBosch Injectors and OhmsSteve WrightОценок пока нет

- Guia Instalacion APP Huawei Fusion HmeДокумент4 страницыGuia Instalacion APP Huawei Fusion Hmecalinp72Оценок пока нет

- Bio 104 Lab Manual 2010Документ236 страницBio 104 Lab Manual 2010Querrynithen100% (1)

- Methods of Estimation For Building WorksДокумент22 страницыMethods of Estimation For Building Worksvara prasadОценок пока нет

- Xii - STD - Iit - B1 - QP (19-12-2022) - 221221 - 102558Документ13 страницXii - STD - Iit - B1 - QP (19-12-2022) - 221221 - 102558Stephen SatwikОценок пока нет

- Warning: Shaded Answers Without Corresponding Solution Will Incur Deductive PointsДокумент1 страницаWarning: Shaded Answers Without Corresponding Solution Will Incur Deductive PointsKhiara Claudine EspinosaОценок пока нет

- AnimDessin2 User Guide 01Документ2 страницыAnimDessin2 User Guide 01rendermanuser100% (1)

- The Practice Book - Doing Passivation ProcessДокумент22 страницыThe Practice Book - Doing Passivation ProcessNikos VrettakosОценок пока нет

- Lifecycle of A Butterfly Unit Lesson PlanДокумент11 страницLifecycle of A Butterfly Unit Lesson Planapi-645067057Оценок пока нет

- BS 07533-3-1997Документ21 страницаBS 07533-3-1997Ali RayyaОценок пока нет