Memo from Analytix.is

Data Lake Architecture:

Delivering Insight and Scale from Hadoop as an

Enterprise-Wide Shared Service

EXECUTIVE SUMMARY

Business Drivers for Hadoop 2.x

Our whitepaper Data Lake Business Value laid out why Apache¹ Hadoop™ 2.x

is well suited to enable revenue growth and cost savings across the enterprise.

Traditional solutions were not designed to extract full value from the flood of data

arriving in the enterprise today. Apache Hadoop 2.x overcomes the limitations of

traditional solutions by delivering unprecedented insight and scale. This

creates significant value both at the application and infrastructure level:

Application level: Allows simultaneous access and timely insights for all

your users across all your data irrespective of the processing engine. This

is possible because Hadoop 2.x allows you to store data first and query it in

the moment or later in a flexible fashion.

Infrastructure level: Allows you to acquire all data in its original format and

store it in one place, cost effectively and for an unlimited time. This is

possible because Hadoop 2.x delivers 20x cheaper data storage than

alternatives.

This step change in effectiveness and efficiency allows you to extract maximum

business value from the rapid growth in data volume, variety and velocity.

Business Drivers for a Data Lake

We posit that deploying Hadoop 2.x as an enterprise-wide shared service is the

best way of turning data into profit. We call this shared service a data lake.

The value created by Hadoop 2.x grows exponentially as data from more

applications lands in the data lake. More and more of that data will be retained

for decades. For many enterprises, data becomes possibly as important as

capital and talent in the quest for profit. Therefore, it is important to future-proof

your investments in big data. Even your first Hadoop 2.x project should consider

the data lake as the target architecture.

In this white paper, you will learn about the technology that makes the data lake

a reality in your environment. We will introduce the modular target architecture

for the data lake, detail its functional requirements and highlight how the

enterprise-grade capabilities of Hadoop 2.x deliver on these expectations. As

more use cases join the data lake, more of the enterprise-grade functionality

that the second generation of Hadoop brings comes into focus.

¹ Apache, Apache Hadoop and any Apache projects are trademarks of the Apache Software

Foundation.

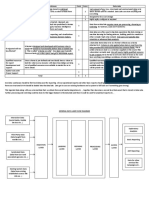

Data Lake Architecture

Deploying Apache Hadoop 2.x as an enterprise-wide shared service requires

more than just the ability to deliver insights and scale. Five enabling

capabilities of Hadoop 2.x deliver on the functional requirements of a data lake:

1. Allows a seamless, well-orchestrated flow of data between existing

environments and Hadoop 2.x.

2. Implements enterprise-grade security requirements such as authentication,

authorization, accountability, encryption.

3. Provides highly automated operations, including multi-tenancy, both

natively in Hadoop 2.x and integrated with existing management tools.

4. Runs anywhere, across operating systems, deployment form factors, on-premise and in the cloud.

5. Enables both existing and new applications to provide value to the

organization.

Benefits of a Data Lake

Deploying an enterprise-wide Apache Hadoop data lake brings a series of

benefits relative to spinning up dedicated clusters for each project. Most

importantly, larger questions can be asked, yielding deeper insights because

any authorized user can interact with the pool of data in multiple ways. More

data typically leads to better answers.

The data lake, as a shared service across the organization, also brings

operational benefits that are very similar to a private infrastructure cloud:

Speed of provisioning and de-provisioning.

Fast learning curve and reduced operational complexity.

Consistent enforcement of data security, privacy and governance.

Optimal capital efficiency.

BUSINESS DRIVERS FOR HADOOP 2.X

Many enterprises are overwhelmed by the variety, velocity and volume of data.

Extracting value from that data requires the ability to store data first and query it

in the moment or later in a flexible fashion. Traditional solutions however do not

provide timely or actionable insights. Questions have to be determined before

new data even arrives. Defining schemas on write severely limits your ability to

take advantage of the wealth of new data types available. Moreover, traditional

solutions do not scale well technically or economically. Data is locked into

operational silos of proprietary technology. Retaining all your historical data for

analysis is unaffordable. Therefore, enterprises run out of budget long before

they run out of useful data.

For the business, extracting value from big data is all about asking the right

questions. Often the combination of data sources that is most valuable and the

questions that yield real insights only become apparent after multiple rounds of

exploration and iteration. In other words, questions are not always known in

advance and the data to answer these questions could be of varying types, likely

including unstructured data. What the business requires is an ability to store

data first and query it in the moment or later, in a flexible fashion.

Apache Hadoop 2.x excels at doing exactly that. It is the open source

technology at the center of the big data revolution. Apache Hadoop 2.x has

become the leading platform for capturing, storing, managing and processing

vast quantities of structured and unstructured data in a cost-efficient and scalable

manner, unleashing analytic opportunities to grow revenue and reduce cost.

Apache Hadoop 2.x overcomes some of the challenges of traditional solutions by

delivering unprecedented insight and scale. Hadoop 2.x allows you to store data

first and query it in the moment or later in a flexible fashion. The key reason

Hadoop 2.x allows you to capture more data is that the marginal cost of

retaining data is less than the marginal value. In fact, Hadoop provides 20x

cheaper data storage than alternatives. That results in order-of-magnitude better

insights and business value compared to legacy solutions. This step change in

effectiveness and efficiency completely transforms the value of your data,

allowing you to take full advantage of the rapid growth in data volume, variety

and velocity. Exceptionally powerful analyses are now possible, whether using

existing tools or entirely new applications.

Therefore, only Hadoop 2.x is able to provide distributed storage and processing

that is

Affordable and performing well into the 100+ petabyte scale.

Flexible enough to deal with increasing data variety and velocity.

BUSINESS DRIVERS FOR A DATA LAKE

We posit that deploying Hadoop 2.x as an enterprise-wide shared service is the

best way of turning data into profit. We call this shared service a data lake. The

attributes of a data lake are:

Delivers maximum scale and insight to the entire enterprise.

Allows authorized users across all business units to refine, explore and enrich

data.

Contains data from transactions, interactions and observations.

Enables multiple data access patterns across a shared infrastructure: batch,

interactive, online, search, in-memory and other processing engines.

Many enterprises are making Hadoop 2.x available throughout the organization

in a very thoughtful manner to avoid the fragmentation of the legacy world.

Instead of deploying one Hadoop 2.x cluster per project, they are moving to

Hadoop 2.x as an enterprise-wide shared service for all projects, for all analytic

workloads and for all business units. Clearly it does not make sense for an

enterprise to have one cluster per team, or one cluster for each of Storm, HBase,

MapReduce and Lucene. In other words, these customers are avoiding data ponds

and moving toward a unified data lake.

The value created by Hadoop 2.x grows exponentially as data from more

applications lands in the data lake. More and more of that data will be retained

for decades. For many enterprises, data becomes possibly as important as

capital and talent in the quest for profit. Therefore, it is important to future-proof

your investments in big data. Even your first Hadoop 2.x project should consider

the data lake as the target architecture.

In this white paper, you will learn about the technology that makes the data lake

a reality in your environment. We will introduce the modular target architecture

for the data lake and detail its functional requirements.

DATA LAKE ARCHITECTURE

Hadoop 2.x creates significant value both at the application and infrastructure

level:

Application level: Allows simultaneous access and timely insights for all

your users across all your data irrespective of the processing engine.

Infrastructure level: Allows you to acquire all data in its original format and

store it in one place, cost effectively and for an unlimited time, subject only

to constraints imposed by your compliance policies.

Two essential capabilities of Hadoop make this possible:

1. Infrastructure-level data management:

Storage: Hadoop Distributed File System (HDFS) is a Java-based file system

that provides scalable and reliable data storage that is designed to span large

clusters of commodity servers. In production clusters, HDFS has demonstrated

scalability of up to 200 PB of storage and a single cluster of 4,500 servers,

supporting close to a billion files and blocks. In other words, HDFS provides

distributed, redundant storage. It is now in its second major release, called

HDFS2. HDFS is also able to distinguish between different storage media.

Processing operating system: Apache Hadoop YARN is the data operating

system for Hadoop 2.x. YARN enables a user to interact with all data in multiple

ways simultaneously, making Hadoop 2.x a true multi-use data platform. In

essence, YARN is the operating system responsible for resource management in

the cluster. YARN plays a fundamental role in the data lake since it achieves two

objectives: scheduling resource usage across a cluster and the ability to

accommodate any data processing engine.

2. Data access for a variety of applications:

As we said above, Hadoop 2.x allows simultaneous access and timely insights

for all your users across all your data, irrespective of the processing engine or

analytical application. In other words, one pool of data can be analyzed for many

different purposes using a wide range of processing engines such as batch,

interactive, streaming, search, graph, machine learning, and NoSQL databases.

Much more than just a stagnant pool of stored data, the Hadoop 2.x data lake

comes alive through analytics.

One important reason these processing engines allow enterprises to ask larger

questions and obtain fresher insights is that semantic schemas are only defined

on read. Any and all data can flow into the lake without the upfront effort that

would be required in a legacy solution to define which questions will be asked of

the data. With Hadoop 2.x, enterprises can store first, ask questions later.

More recently, customers can even store first, ask questions in the moment as

the second generation of Hadoop enables low latency queries.
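The store-first, schema-on-read idea can be illustrated with a minimal Python sketch. It does not use any Hadoop API; the sample records, the field names and the helper read_with_schema are all hypothetical and serve only to show two different questions being asked of the same raw data after it has been stored.

```python
import json

# Raw events land in the lake exactly as they arrived; no schema is enforced on write.
# (Hypothetical sample data for illustration only.)
RAW_EVENTS = [
    '{"user": "alice", "amount": "19.99", "channel": "web", "ts": "2014-03-01"}',
    '{"user": "bob", "amount": "5.00", "ts": "2014-03-02"}',
    '{"user": "alice", "amount": "42.50", "channel": "store", "ts": "2014-03-02"}',
]


def read_with_schema(raw_lines, schema):
    """Project a schema onto raw records at read time.

    schema maps a field name to a (converter, default) pair. Fields that are
    absent from a record fall back to the default instead of failing.
    """
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: convert(record[field]) if field in record else default
            for field, (convert, default) in schema.items()
        }


# Two analyses ask different questions of the same stored records.
revenue_schema = {"user": (str, None), "amount": (float, 0.0)}
channel_schema = {"channel": (str, "unknown"), "ts": (str, None)}

revenue_by_user = {}
for row in read_with_schema(RAW_EVENTS, revenue_schema):
    revenue_by_user[row["user"]] = revenue_by_user.get(row["user"], 0.0) + row["amount"]

channels = [row["channel"] for row in read_with_schema(RAW_EVENTS, channel_schema)]
```

Note that the second record has no channel field. With a schema defined on write it would have to be rejected or the schema changed; here the reader simply decides how to treat the gap at query time.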

This second essential capability is enabled by the following Apache modules at

the data access layer:

Metadata management: HCatalog is a table management layer that

exposes SQL metadata to a wide range of Hadoop 2.x applications and tools,

thereby enabling users with different data processing tools (for example Pig

and MapReduce, among others) to more easily read and

write data on the data lake. HCatalog's table abstraction presents users with a

relational view of data in HDFS and ensures that users need not worry about

where or in what format their data is stored. HCatalog displays data from

ORCFile or RCFile formats, JSON (text) files, CSV files and sequence files in a

tabular view. It also provides REST APIs so that external systems can access

these tables' metadata.

Batch processing: Apache Hadoop MapReduce is a framework for writing

applications that process large amounts of structured and unstructured data in

parallel across a cluster of thousands of machines, in a reliable and fault-tolerant

manner.
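As an illustration of the programming model, the following single-process Python sketch walks through the map, shuffle and reduce steps for a word count. It is not the Hadoop MapReduce API: the function names are hypothetical, and a real job would run the map and reduce tasks in parallel across the cluster with the framework handling the shuffle and any task failures.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run the three phases in sequence over a list of input splits."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["data lake", "data pond", "lake of data"])
```

Because each map call sees only its own split and each reduce call sees only one key, both phases can be spread over many machines without coordination, which is what makes the model scale.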

Interactive processing: Apache Tez. While Apache Hadoop MapReduce

has been the batch-oriented data processing backbone for Hadoop 2.x, Tez

enables projects in the Apache Hadoop ecosystem such as Apache Hive and

Apache Pig, as well as commercial solutions originally built on Apache Hadoop

MapReduce, to meet the demands for fast response times and extreme

throughput at petabyte scale. Together with HDFS2, YARN and MapReduce

described above, Tez extends the speed of data processing that is possible from

the core of Apache Hadoop.

SQL: Apache Hive is a data warehouse built on top of Apache Hadoop for

providing data summarization, ad-hoc queries, and analysis of large datasets.

Hive provides a mechanism to project structure onto the data in Hadoop 2.x and

to query that data using standard SQL syntax, easing integration between

Hadoop 2.x and mainstream tools for business intelligence and visualization.

Scripting: Apache Pig is a scripting platform for processing and analyzing large

data sets. Apache Pig allows customers to write complex data processing

transformations using a simple scripting language. Pig Latin (the language)

defines a set of transformations on a data set such as aggregate, join and

sort. Pig translates the Pig Latin script into Apache Hadoop MapReduce jobs

(and soon Apache Tez jobs) so that it can be executed within Hadoop. Pig is

ideal for standard extract-transform-load (ETL) data pipelines, which enables the

enterprise data warehouse rightsizing discussed below. It is also used for

research on raw data and iterative processing of data.

Online database: Apache HBase is a non-relational (NoSQL) database

that runs on top of HDFS. It is column-oriented and provides quick access to

large quantities of sparse data. It also adds row-level transactional capabilities to Hadoop

2.x, allowing users to conduct updates, inserts and deletes. Because of this

capability, it is often used for online applications.

Online database: Hortonworks also supports Apache Accumulo, a sorted,

distributed key-value store with cell-based access control. Cell-level access

control is important for organizations with complex policies governing who is

allowed to see data. It enables the intermingling of different data sets with

different access control policies and proper handling of individual data sets that

have some sensitive portions.
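The effect of cell-level access control can be sketched in a few lines of Python. This is a simplified model rather than the Accumulo API: here a cell's label is a plain set of required authorizations, whereas Accumulo visibility labels are boolean expressions that can combine terms with AND and OR. The rows, labels and the scan helper are hypothetical.

```python
# Each cell: (row, column, required_labels, value). An empty label set means public.
# (Hypothetical sample data for illustration only.)
CELLS = [
    ("patient-17", "name", frozenset({"pii"}), "J. Doe"),
    ("patient-17", "diagnosis", frozenset({"pii", "medical"}), "asthma"),
    ("patient-17", "region", frozenset(), "EMEA"),
]


def scan(cells, authorizations):
    """Return only the cells whose required labels are all held by the reader."""
    auths = frozenset(authorizations)
    return {
        (row, column): value
        for row, column, required, value in cells
        if required <= auths
    }


billing_view = scan(CELLS, {"pii"})
doctor_view = scan(CELLS, {"pii", "medical"})
public_view = scan(CELLS, set())
```

All three readers query the same row, yet each sees a different subset of its cells. This is how data sets with sensitive portions can share one table instead of being split into separate stores.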

Streaming: Apache Storm is a distributed system for quick processing of large

streams of data in real time.

Machine Learning: Apache Mahout is a library of scalable machine-learning

algorithms. Machine learning is a discipline of artificial intelligence focused on

enabling machines to learn without being explicitly programmed. Once data is

stored on HDFS, Mahout provides the data science tools to automatically find

meaningful patterns in those big data sets.

But deploying Apache Hadoop 2.x as an enterprise-wide shared service requires

more than just the ability to deliver insights and scale. Five enabling

capabilities of Hadoop 2.x deliver on the functional requirements of a data lake.

These enabling capabilities create the trust that is needed for Hadoop 2.x to

become the standard platform for enterprise data. We will refer to this target

architecture by the term data lake.

1. Data integration & governance

Batch Ingestion: Apache Sqoop is a tool designed for efficiently transferring

bulk data between Apache Hadoop and structured datastores such as relational

databases. Sqoop imports data from external structured datastores into HDFS or

related systems like Hive and HBase. Sqoop can also be used to extract data

from Hadoop and export it to external structured datastores such as relational

databases and enterprise data warehouses. Sqoop works with relational

databases such as: Teradata, Netezza, Oracle, MySQL, Postgres, and HSQLDB.

API access to Hadoop: WebHDFS defines a public HTTP REST API, which

permits clients to access Hadoop 2.x from multiple languages without installing

Hadoop 2.x.
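To make the REST access path concrete, the sketch below builds WebHDFS request URLs using only the Python standard library. WebHDFS URLs follow the pattern http://host:port/webhdfs/v1/path?op=OPERATION; the host name, the port 50070 (the usual NameNode HTTP port in Hadoop 2.x, assumed here), the paths and the helper webhdfs_url are illustrative assumptions. The sketch only constructs the URLs and does not contact a cluster.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, port, path, op, **params):
    """Build a WebHDFS URL: http://<host>:<port>/webhdfs/v1/<path>?op=<OP>&..."""
    if not path.startswith("/"):
        raise ValueError("HDFS path must be absolute")
    query = urlencode({"op": op, **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


# Hypothetical cluster address and paths.
list_url = webhdfs_url("namenode.example.com", 50070, "/data/raw/clicks", "LISTSTATUS")
open_url = webhdfs_url(
    "namenode.example.com", 50070, "/data/raw/clicks/part-0", "OPEN",
    **{"user.name": "analyst"},
)
```

Any HTTP client in any language can issue a GET against such a URL, which is why no Hadoop installation is needed on the client side. On a secured cluster the user.name parameter would be replaced by Kerberos or gateway-based authentication.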

NFS access to Hadoop: With NFS access to HDFS, customers can mount the

HDFS cluster as a volume on client machines and have native command line,

scripts or file explorer UI to view HDFS files and load data into HDFS. NFS thus

enables file-based applications to perform file read and write operations directly

to Hadoop 2.x.

Streaming: Apache Flume is a service for efficiently collecting, aggregating,

and moving large amounts of streaming data into HDFS.

Real Time Ingestion: Apache Storm is a distributed system for processing fast,

large streams of data in real time. (See above)

Data Workflow and Lifecycle: Apache Falcon is a framework that enables

users to automate the movement and processing of datasets for ingest,

pipelines, disaster recovery and data retention use cases. Crucially for the data

lake, Falcon enables import, scheduling & coordination, replication, lifecycle

policies, SLA & multi-cluster management, and compaction. Falcon will in the

near future also address lineage and audit trails, implemented via a time series

traversal of logs and data.

These capabilities are complemented by data integration tools, for instance

connectors enabling data transfer between HDFS and EDW, in-memory DB,

relational DB, scale-out relational DB, and NoSQL DB.

2. Security

Hadoop 2.x supports enterprise-grade security requirements such as

authentication, authorization, accountability, and data protection / encryption.

Multi-tenancy is also supported and a core security requirement. Given the

prominent role of security in discussions around any shared service in IT, we will

go into a bit more detail than on some of the other capabilities. We discuss multi-tenancy in the section on Operations below.

Authentication and authorization: The Knox Gateway (Knox) is a single

security perimeter for REST/HTTP access to one or more Hadoop clusters. It

provides secure authentication and authorization in a way that is fully integrated

with enterprise and cloud identity management environments.

Authentication verifies the identity of a system or user accessing the system.

Hadoop 2.x provides two modes of authentication. The first, simple or pseudo

authentication, essentially places trust in users' assertions about who they are.

The second, Kerberos, provides a fully secure Hadoop cluster. In line with best

practice, Hadoop 2.x provides these capabilities while relying on widely accepted

corporate user-identity-stores (such as LDAP or Active Directory) so that a single

source can be used for a credential catalog across Hadoop 2.x and existing

systems.

Authorization specifies access privileges for a user or system. Hadoop 2.x

provides fine-grained authorization via file permissions in HDFS and

resource level access control (via ACL) for Apache Hadoop MapReduce and

coarser-grained access control at a service level. For data, HBase provides

authorization with ACL on tables and column families, and Accumulo extends this

even further to cell-level control. Also, Apache Hive provides coarse-grained

access control on tables.

Accounting provides the ability to track resource use within a system. Within

Hadoop 2.x, insight into usage and data access is critical for compliance or

forensics. As part of core Apache Hadoop 2.x, HDFS and MapReduce provide

base audit support. Additionally, Apache Hive metastore records audit

(who/when) information for Hive interactions. Finally, Apache Oozie, the workflow

engine, provides an audit trail for services.

Data Protection ensures privacy and confidentiality of information. The Hortonworks

Data Platform (HDP) provides encryption capability for various

channels such as Remote Procedure Call (RPC), HTTP, JDBC/ODBC, and Data

Transfer Protocol (DTP) to protect data in motion. Finally, HDFS supports

operating system-level encryption. Current capabilities also include encryption

with SSL for NameNode, JobTracker and data encryption for HDFS, HBase &

Hive. In the near future, HDP will support wire encryption for Shuffle, HDFS Data

Transfer and JDBC/ODBC access to Hive.

As Hadoop 2.x evolves, so do the solutions to support enterprise security

requirements. Much of the focus is on weaving the security

frameworks together and making them even simpler to manage.

3. Operations

Hadoop 2.x supports highly automated operations whether natively in Hadoop or

integrated with existing management tools. In a data lake architecture, data as

well as the underlying infrastructure are shared across the enterprise. This

shared infrastructure pool can span different data centers and is managed by a

unified data management operating system. The data in this pool is accessible

to all authorized users across the enterprise through advanced multi-tenancy

features. Every department or user in the organization connects via their own

sandbox, with dedicated SLAs and differentiated views of the data, which are

defined by data protection rules.

Provisioning, Management, Monitoring: Apache Ambari is a 100-percent

open source operational framework for provisioning, managing and monitoring

Apache Hadoop 2.x clusters. Ambari includes an intuitive collection of operator

tools and a robust set of APIs that hide the complexity of Hadoop, simplifying the

operation of clusters. The APIs provide connectivity to market-leading

management tools including Microsoft System Center, Teradata Viewpoint and

OpenStack.

Scheduling: Apache Oozie is a Java Web application used to schedule Apache

Hadoop jobs on a recurring basis. Oozie combines multiple jobs sequentially into

one logical unit of work. It is integrated with the Hadoop 2.x stack and supports

Hadoop jobs for Apache Hadoop MapReduce, Apache Pig, Apache Hive, and

Apache Sqoop. It can also be used to schedule jobs specific to a system, like

Java programs or shell scripts.

Multi-tenancy - Apps: YARN. A multi-tenant architecture shares core resources

while isolating services and data. Apache Hadoop YARN is a multi-application,

multi-workload general-purpose data operating system which enables multi-tenancy. One important feature of YARN that avoids contention between

workloads is the Capacity Scheduler. The Capacity Scheduler assigns

minimum guaranteed capacities to users, applications or entire business units

sharing a cluster. At the same time, if the cluster has some idle capacity, then

users and applications are allowed to consume more of the cluster than their

guaranteed minimum share, maximizing overall cluster utilization. This approach

is superior to both the first-in-first-out (FIFO) job scheduler and the Fair Scheduler

found in other Hadoop distributions, since the latter can result in catastrophic

cluster failures (for instance due to user error) whereby all workloads are stuck

waiting rather than running.
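The two rules described above, a guaranteed minimum per tenant plus the ability to borrow idle capacity, can be modeled with a short Python sketch. This is a deliberately simplified model and not the actual YARN Capacity Scheduler algorithm, which also involves hierarchical queues, maximum capacities, user limits and preemption. The function allocate, the queue names and the numbers are hypothetical.

```python
def allocate(total, queues):
    """Split `total` capacity among queues.

    queues maps a name to (guaranteed_share, demand); shares are fractions
    that sum to at most 1.0, demand is in the same units as total.
    """
    # Step 1: every queue receives its demand, up to its guaranteed minimum.
    alloc = {
        name: min(demand, share * total)
        for name, (share, demand) in queues.items()
    }
    idle = total - sum(alloc.values())

    # Step 2: lend idle capacity to queues that still want more, in proportion
    # to their guaranteed shares, until capacity or demand runs out.
    while idle > 1e-9:
        hungry = {
            name: share
            for name, (share, demand) in queues.items()
            if demand - alloc[name] > 1e-9
        }
        weight = sum(hungry.values())
        if weight == 0:
            break
        granted = 0.0
        for name, share in hungry.items():
            extra = min(idle * share / weight, queues[name][1] - alloc[name])
            alloc[name] += extra
            granted += extra
        idle -= granted
    return alloc


# Hypothetical 100-node cluster shared by three business units.
shares = allocate(
    100,
    {"marketing": (0.5, 20), "finance": (0.3, 60), "research": (0.2, 50)},
)
```

In this example marketing needs only 20 of its guaranteed 50 nodes, so the remaining 30 are lent to finance and research, which end up with roughly 48 and 32 nodes instead of their guaranteed 30 and 20. No tenant ever drops below the smaller of its demand and its guarantee.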

Multi-tenancy - Data: HDFS. HDFS is a scalable, flexible and fault tolerant data

storage system that enables data of varying types to co-exist in the same

architecture. HDFS provides mechanisms for data access control and storage

capacity management to enable multiple tenants to manage data in the same

physical cluster. HDFS utilizes a familiar POSIX based permission model to

assign read and write access privileges for each dataset to a set of users. This

enables strong data access isolation across datasets in the data lake. HDFS

provides quota management functionality to set limits on storage capacity used

by tenants' datasets. This ensures that all tenants in the system get their share of

storage space in the shared storage system.
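The combination of POSIX-style permissions and storage quotas can be sketched as follows. This is an in-memory model under stated assumptions, not the HDFS client API: the Dataset class, the user and group names, and the byte figures are hypothetical, and only the read and write bits of the owner, group and other classes are modeled.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    owner: str
    group: str
    mode: int          # POSIX-style permission bits, e.g. 0o750
    quota_bytes: int   # space limit for the tenant's directory
    used_bytes: int = 0


def can_access(ds, user, groups, want):
    """want is 'r' or 'w'. Pick the owner, group or other class, in that order."""
    bit = {"r": 4, "w": 2}[want]
    if user == ds.owner:
        perms = (ds.mode >> 6) & 7
    elif ds.group in groups:
        perms = (ds.mode >> 3) & 7
    else:
        perms = ds.mode & 7
    return bool(perms & bit)


def write(ds, user, groups, nbytes):
    """Accept a write only if permissions allow it and the quota is not exceeded."""
    if not can_access(ds, user, groups, "w"):
        raise PermissionError(f"{user} may not write to this dataset")
    if ds.used_bytes + nbytes > ds.quota_bytes:
        raise RuntimeError("space quota exceeded")
    ds.used_bytes += nbytes


# Hypothetical tenant directory: owner rwx, group r-x, others nothing.
sales = Dataset(owner="etl_sales", group="sales", mode=0o750, quota_bytes=1000)
write(sales, "etl_sales", set(), 600)
```

With mode 0o750 a member of the sales group can read but not write, a user outside the group sees nothing, and a second 600-byte write by the owner would be rejected because it would exceed the 1000-byte quota.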

High Availability & Disaster Recovery: YARN, HDFS, Falcon. YARN enables

re-use of key platform services for reliability, redundancy and security across

multiple workloads. The raw storage layer in HDFS is fully distributed and fault-tolerant. Prior to Hadoop 2.x, however, the file system metadata was stored in a

single master server called NameNode. Hadoop 2.x provides hot standby,

automatic failover and file system journaling for the NameNode. This improves

the overall service availability both for planned and unplanned downtime of the

NameNode. It also eliminates the need for external HA frameworks and highly

available external shared storage for storing journals. Disaster recovery is

orchestrated via Falcon and leverages the capability of DistCp (distributed copy), which copies

data from one cluster to another. Vendors like WANdisco complement this.

4. Environment and deployment model

Enterprises evaluating a Hadoop distribution should look at the broadest range of

deployment options for Hadoop, from Windows Server or Linux to virtualized

cloud deployments. A portable Hadoop distribution allows you to easily and reliably

migrate from one deployment type to another. A portable Hadoop distribution

also delivers choice and flexibility irrespective of physical location, deployment

form factor or storage medium, augmenting your current operational data stores.

5. Presentation and application

Hadoop 2.x is of value to both existing and new applications. It allows all

authorized users in the organization to ask larger questions and get deeper

insights using familiar business intelligence and data warehouse solutions.

At the presentation layer, it is easy for business users and analysts to access

and visualize all the data via familiar tools ranging from Microsoft Excel to

Tableau. In Excel for instance, simply set the data source to Hadoop.

At the application layer, it is easy for developers to write applications that take

advantage of one enterprise-wide shared pool of data, the data lake.

Jointly, these five categories of enabling capabilities form the reference

architecture for the data lake.

BENEFITS

Hadoop 2.x is the foundation for the big data and analytics revolution that is

unfolding because of its superior economics and transformational capabilities

compared to legacy solutions. Enterprises moving away from fragmented data

storage and analytics silos stand to realize numerous benefits:

Deeper insights. Larger questions can be asked because all of the data is

available for analysis, including all time periods, all data formats and from all

sources. And semantic schemas are only defined at the time of analysis. The

combinatorial effect of analyzing all data produces a much deeper level of insight

than what is available in the stove-piped legacy world. Having a single 360-degree view of the customer relationship across time, across interaction

channels, products and organizational boundaries is a perfect example.

Actionable insights. Hadoop 2.x enables closed-loop analytics by bringing the

time to insight as close to real time as possible.

The data lake, as a shared service across the organization, also brings benefits

that are very similar to a private infrastructure cloud:

Agile provisioning and de-provisioning of both capacity and users. With

the data lake, your business users can be up and running in minutes. Simply tap

into the pool of capacity available, instead of setting up a dedicated Hadoop

cluster for every project. Moreover, should a project no longer be required, the

capex is not stranded since the physical assets can be repurposed. This means

no minimum investment is required to get started. Likewise, on-ramping a new

user is a matter of minutes both operationally and skills-wise. The single

infrastructure pool works seamlessly with a range of BI and analytics tools. For a

new user, this means they may already have different analytic lenses configured

for them the moment they log in for the first time. And they can use Microsoft

Excel as a familiar tool, simply selecting Hadoop as the data source.

Faster learning curve and reduced operational complexity. Hadoop is a new

topic to many IT professionals. Many organizations have found it beneficial to

create a Hadoop center of excellence in the organization, forming either a central

pool of expertise or a coordinated set of teams that can easily learn from each

other. A data lake provides the natural setting for this to happen. Having

dedicated technical and organizational Hadoop silos for every project would

multiply the effort of supporting and managing Hadoop and ensuring currency

with one release across the enterprise. Interoperability with existing

environments would suffer due to additional testing and integration, notably since

not every project would run on the latest stable release. A data lake on the other

hand avoids inconsistent approaches and a skills mismatch between projects.

Consistent data security, privacy and governance. Physically distinct silos

would make it very hard in practice to enforce policies across boundaries even

within one large organization.

Optimal capital efficiency driven by scale and the ability to load balance. The

opex and capex required to deploy and manage several small ponds of Hadoop

is higher than that for one big lake of identical capacity. Not all Hadoop jobs are

busy at the same time, and some may work on the same data sets. Therefore, a

common pool of capacity can be smaller than capacity spread over several

smaller ponds. Silos will show low average utilization. In one lake, data can be

de-duplicated.
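The capacity argument can be checked with simple arithmetic. The Python sketch below compares the capacity needed for dedicated clusters, each sized for its own peak, with a shared pool sized for the peak of the combined load. The project names and demand figures are hypothetical and chosen only to show the effect of peaks that do not coincide.

```python
# Hypothetical demand (in nodes) for three projects over four time slots.
demand = {
    "fraud": [10, 40, 15, 10],
    "marketing": [35, 10, 10, 15],
    "reporting": [10, 10, 45, 10],
}


def silo_capacity(demand):
    """Each dedicated cluster must be sized for its own peak."""
    return sum(max(series) for series in demand.values())


def lake_capacity(demand):
    """A shared pool must be sized for the peak of the combined load."""
    combined = [sum(slot) for slot in zip(*demand.values())]
    return max(combined)


silos = silo_capacity(demand)    # 40 + 35 + 45 = 120 nodes
lake = lake_capacity(demand)     # max(55, 60, 70, 35) = 70 nodes
saving = 1 - lake / silos        # fraction of capacity avoided by pooling
```

With these figures the three ponds need 120 nodes in total while one lake needs 70. The saving shrinks as the workloads' peaks coincide more closely, and it disappears entirely if all projects peak in the same slot.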

CONCLUSION

To summarize, a data lake allows you to

Store every shred of data throughout the enterprise in original fidelity.

Provide access to this data across the organization.

Explore, discover and mine your data for value using multiple engines.

Secure and govern all data in accordance with enterprise policies.

This is enabled by the core value proposition of Hadoop 2.x:

Performance scales in linear fashion due to its distributed compute and

storage model, making exabyte-scale possible.

Cost is 20x lower than traditional solutions due to open source software and

industry standard hardware.

Integration with your existing environment makes it easy to ingest, analyze

and visualize data, and to develop applications that take advantage of

Hadoop 2.x.


- Data Science - Hierarchy of NeedsДокумент20 страницData Science - Hierarchy of NeedsLamis AhmadОценок пока нет

- Defining The Data Lake White PaperДокумент7 страницDefining The Data Lake White Papernaga_yalamanchili0% (1)

- DW Vs Data LakeДокумент5 страницDW Vs Data LakepetreОценок пока нет

- Durgesh Sr. Data Architect / Modeler/BigdataДокумент5 страницDurgesh Sr. Data Architect / Modeler/BigdataMadhav Garikapati100% (1)

- BigQuery - A Serverless Data WarehouseДокумент2 страницыBigQuery - A Serverless Data WarehouseGaurav SainiОценок пока нет

- Snowflake Certification SyllabusДокумент4 страницыSnowflake Certification SyllabusWeAre1Оценок пока нет

- Implemententerprise Data LakeДокумент9 страницImplemententerprise Data LakeK Kunal RajОценок пока нет

- DWH Fundamentals (Training Material)Документ21 страницаDWH Fundamentals (Training Material)svdonthaОценок пока нет

- Notes of Azure Data BricksДокумент16 страницNotes of Azure Data BricksVikram sharmaОценок пока нет

- ILT-Fundamentals 4-Day - DatasheetДокумент4 страницыILT-Fundamentals 4-Day - DatasheetResolehtmai DonОценок пока нет

- Solution Design & Target Architecture For Hadoop As An Enterprise-Wide Shared ServiceДокумент1 страницаSolution Design & Target Architecture For Hadoop As An Enterprise-Wide Shared Servicejuergen_urbanskiОценок пока нет

- Introduction to Airflow Platform Programmatically Author Schedule Monitor Data PipelinesДокумент9 страницIntroduction to Airflow Platform Programmatically Author Schedule Monitor Data PipelinesParesh BhatiaОценок пока нет

- Cloudera Developer Training For Apache Spark: Hands-On ExercisesДокумент61 страницаCloudera Developer Training For Apache Spark: Hands-On ExercisesPavan KumarОценок пока нет

- HOL Informatica DataQuality 9.1Документ48 страницHOL Informatica DataQuality 9.1vvmexОценок пока нет

- Architecting Data Lakes Zaloni PDFДокумент63 страницыArchitecting Data Lakes Zaloni PDFSaurabh NolakhaОценок пока нет

- Azure Cosmos DB WorkshopДокумент147 страницAzure Cosmos DB WorkshopspringleeОценок пока нет

- The Definitive Guide to Azure Data Engineering: Modern ELT, DevOps, and Analytics on the Azure Cloud PlatformОт EverandThe Definitive Guide to Azure Data Engineering: Modern ELT, DevOps, and Analytics on the Azure Cloud PlatformОценок пока нет

- Data Lake or Data Swamp?Документ16 страницData Lake or Data Swamp?Kira HoffmanОценок пока нет

- Data Processing FundamentalsДокумент81 страницаData Processing FundamentalsvisОценок пока нет

- How To Work With Apache AirflowДокумент111 страницHow To Work With Apache AirflowSakshi ArtsОценок пока нет

- Brought To You in Partnership WithДокумент49 страницBrought To You in Partnership WithKP SОценок пока нет

- Choosing Technologies For A Big Data Solution in The Cloud: James SerraДокумент58 страницChoosing Technologies For A Big Data Solution in The Cloud: James SerraGautham Ram RajendiranОценок пока нет

- Day1 MainДокумент188 страницDay1 MainaissamemiОценок пока нет

- Data Lake StorageДокумент237 страницData Lake Storagevishwah22100% (1)

- Resume-Senior Data Engineer-Etihad Airways-Kashish SuriДокумент4 страницыResume-Senior Data Engineer-Etihad Airways-Kashish SuriAviraj kalraОценок пока нет

- Data Warehousing AND Data MiningДокумент90 страницData Warehousing AND Data MiningDevendra Prasad Murala100% (1)

- Data Migration To HadoopДокумент26 страницData Migration To Hadoopkrishna100% (2)

- Data Warehousing With GreenplumДокумент95 страницData Warehousing With Greenplumcosdoo0% (1)

- Reference Architecture Big DataДокумент3 страницыReference Architecture Big Datajuergen_urbanski100% (1)

- AWS Big Data Specialty Study Guide PDFДокумент13 страницAWS Big Data Specialty Study Guide PDFarjun.ec633Оценок пока нет

- Cheat Sheet DP900Документ7 страницCheat Sheet DP900Amir LehmamОценок пока нет

- Trivago PipelineДокумент18 страницTrivago Pipelinebehera.eceОценок пока нет

- Thomas Aquinas - Contemporary Philosophical Perspectives (PDFDrive)Документ415 страницThomas Aquinas - Contemporary Philosophical Perspectives (PDFDrive)MartaОценок пока нет

- Hadoop Data Lake: Hadoop Log Files JsonДокумент5 страницHadoop Data Lake: Hadoop Log Files JsonSrinivas GollanapalliОценок пока нет

- Three Data Lake Blueprints: A Data Architects' Guide To Building A Data Lake For SuccessДокумент20 страницThree Data Lake Blueprints: A Data Architects' Guide To Building A Data Lake For SuccessArsenij KroptyaОценок пока нет

- Fundamentals of Big Data Engineering: A Guide To TheДокумент14 страницFundamentals of Big Data Engineering: A Guide To Thehemanth katkamОценок пока нет

- DeZyre - Apache - SparkДокумент12 страницDeZyre - Apache - SparkMadhuОценок пока нет

- Understanding Data Lakes EMCДокумент1 страницаUnderstanding Data Lakes EMCpedro_luna_43Оценок пока нет

- Big Data With Hadoop & Spark - IntroductionДокумент42 страницыBig Data With Hadoop & Spark - IntroductionCit AssocDean RosarioОценок пока нет

- StreamSets: An Open Source Data Operations PlatformДокумент23 страницыStreamSets: An Open Source Data Operations PlatformRekha GОценок пока нет

- RDBMS To MongoDB MigrationДокумент19 страницRDBMS To MongoDB Migrationphurtado1112Оценок пока нет

- Build A True Data Lake With A Cloud Data WarehouseДокумент15 страницBuild A True Data Lake With A Cloud Data WarehouseRadhouane ZahraОценок пока нет

- Whitepaper BigData PDFДокумент21 страницаWhitepaper BigData PDFAnonymous ul5cehОценок пока нет

- Complete Data Engineering Career Path: Step-by-Step Roadmap in 2022Документ43 страницыComplete Data Engineering Career Path: Step-by-Step Roadmap in 2022Tarun bhattОценок пока нет

- Velocity v8 Data Warehousing MethodologyДокумент1 106 страницVelocity v8 Data Warehousing MethodologySaketh GaddeОценок пока нет

- Reference Architecture MarketingДокумент1 страницаReference Architecture Marketingjuergen_urbanskiОценок пока нет

- Spark Streaming Versus Storm: Comparing Systems For Processing Fast and Large Streams of Data in Real TimeДокумент4 страницыSpark Streaming Versus Storm: Comparing Systems For Processing Fast and Large Streams of Data in Real Timejuergen_urbanski100% (1)

- Analytix Industry White Paper - Big Data Accelerates Earnings Growth in Banking and InsuranceДокумент9 страницAnalytix Industry White Paper - Big Data Accelerates Earnings Growth in Banking and Insurancejuergen_urbanski100% (1)

- Top Questions For Evaluating Hadoop Platform VendorsДокумент3 страницыTop Questions For Evaluating Hadoop Platform Vendorsjuergen_urbanski100% (1)

- Building A High-Performing Marketing Function For A Global IT Services PlayerДокумент4 страницыBuilding A High-Performing Marketing Function For A Global IT Services Playerjuergen_urbanski100% (1)

- Driving Success With Hadoop at The World's Largest TelcosДокумент29 страницDriving Success With Hadoop at The World's Largest Telcosjuergen_urbanskiОценок пока нет

- Hadoop Summit - 21 Telco Use Cases - UrbanskiДокумент14 страницHadoop Summit - 21 Telco Use Cases - Urbanskijuergen_urbanskiОценок пока нет

- Plural and Singular Rules of NounsДокумент6 страницPlural and Singular Rules of NounsDatu rox AbdulОценок пока нет

- Progress Test-Clasa A IV-AДокумент2 страницыProgress Test-Clasa A IV-Alupumihaela27Оценок пока нет

- Shad Dharan YogДокумент10 страницShad Dharan YogSanita Anjayya EppalpalliОценок пока нет

- English 8thДокумент153 страницыEnglish 8thCarlos Carreazo MОценок пока нет

- M18 - Communication À Des Fins Professionnelles en Anglais - HT-TSGHДокумент89 страницM18 - Communication À Des Fins Professionnelles en Anglais - HT-TSGHIbrahim Ibrahim RabbajОценок пока нет

- Laser WORKS 8 InstallДокумент12 страницLaser WORKS 8 InstalldavicocasteОценок пока нет

- Precis Writing Class AssignemntДокумент2 страницыPrecis Writing Class AssignemntRohit KorpalОценок пока нет

- Grammar 19 Countable and Uncountable NounsДокумент7 страницGrammar 19 Countable and Uncountable NounsHà Anh Minh LêОценок пока нет

- Grade-7 TOSДокумент6 страницGrade-7 TOSmerlyn m romerovОценок пока нет

- Gatsby's False Prophecy of the American DreamДокумент14 страницGatsby's False Prophecy of the American DreamDorottya MagyarОценок пока нет

- Algorithms and Data Structures-Searching AlgorithmsДокумент15 страницAlgorithms and Data Structures-Searching AlgorithmsTadiwanashe GanyaОценок пока нет

- English Week PaperworkДокумент6 страницEnglish Week PaperworkYusnamariah Md YusopОценок пока нет

- Identity Politics Challenges To Psychology S UnderstandingДокумент12 страницIdentity Politics Challenges To Psychology S UnderstandingDiego GonzalezОценок пока нет

- Government Polytechnic, Solapur: Micro Project Proposal OnДокумент10 страницGovernment Polytechnic, Solapur: Micro Project Proposal OnRitesh SulakheОценок пока нет

- TagThatPhotoUserGuide LatestДокумент37 страницTagThatPhotoUserGuide Latestob37Оценок пока нет

- Solutions - Principle of Mathematical Induction: Exercise # IДокумент12 страницSolutions - Principle of Mathematical Induction: Exercise # Inucleus unacademyОценок пока нет

- Jesus Is AliveДокумент3 страницыJesus Is AliveNatalie MulfordОценок пока нет

- Daily Story Simile Stella Snow QueenДокумент3 страницыDaily Story Simile Stella Snow Queenapi-300281604Оценок пока нет

- X++ RDP ReportДокумент18 страницX++ RDP ReportosamaanjumОценок пока нет

- Works: Oland ArthesДокумент27 страницWorks: Oland ArthesManuel De Damas Morales100% (1)

- Mini Python - BeginnerДокумент82 страницыMini Python - BeginnerBemnet GirmaОценок пока нет

- Most Famous Paintings of The RenaissanceДокумент4 страницыMost Famous Paintings of The RenaissanceKathy SarmientoОценок пока нет

- Peace at Home, Peace in The World.": M. Kemal AtatürkДокумент12 страницPeace at Home, Peace in The World.": M. Kemal AtatürkAbdussalam NadirОценок пока нет

- UntitledДокумент2 страницыUntitledrajuronaldОценок пока нет

- Schools Division of Camarines Sur Learning Activity Sheet in Computer Systems Servicing NC IiДокумент12 страницSchools Division of Camarines Sur Learning Activity Sheet in Computer Systems Servicing NC IiBula NHS (Region V - Camarines Sur)Оценок пока нет