Chapter 16

Uploaded by: maustro

ECON1203 Statistics

Chapter 16 Simple Linear Regression & Correlation

Contents

1. Model
2. Estimating the Coefficients
3. Error Variable: Required Conditions
4. Assessing the Model
5. Using the Regression Equation
6. Regression Diagnostics (Part 1)

Introduction

Regression analysis predicts one variable based on other variables.

The dependent variable ($Y$) is the variable to be forecast.

The statistics practitioner believes that it is related to the independent variables ($X_1, X_2, \ldots, X_k$).

Correlation analysis determines whether a relationship exists:

o Scatter diagram

o Coefficient of correlation

o Covariance

16.1 Model

Deterministic models determine the dependent variable exactly from the independent variables. They are unrealistic because there may be other influencing variables.

Probabilistic models include the randomness of real life (e.g. an error variable).

The error variable ($\varepsilon$) is the difference between the actual value of the dependent variable and the value given by the deterministic part of the model.

The first-order linear model (or simple linear regression model) is a straight-line model with one independent variable:

o $y = \beta_0 + \beta_1 x + \varepsilon$

$y$ = dependent variable

$x$ = independent variable

$\beta_0$ = y-intercept

$\beta_1$ = slope of the line

$\varepsilon$ = error variable
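The contrast between the two kinds of model can be sketched in a few lines of Python. This is only an illustration: the parameter values (`BETA0`, `BETA1`, `SIGMA`), the seed, and the function names are hypothetical and do not come from the notes, and the error is assumed to be normal with mean 0.

```python
import random

def deterministic(x, beta0, beta1):
    """Deterministic part of the model: the straight line beta0 + beta1 * x."""
    return beta0 + beta1 * x

def probabilistic(x, beta0, beta1, sigma, rng):
    """First-order linear model: the line plus a random error term."""
    epsilon = rng.gauss(0.0, sigma)  # error variable, assumed normal with mean 0
    return deterministic(x, beta0, beta1) + epsilon

# Hypothetical parameter values, chosen only for illustration.
BETA0, BETA1, SIGMA = 2.0, 0.5, 1.0
rng = random.Random(42)  # fixed seed so that repeated runs give the same draws

xs = [1, 2, 3, 4, 5]
line = [deterministic(x, BETA0, BETA1) for x in xs]
observed = [probabilistic(x, BETA0, BETA1, SIGMA, rng) for x in xs]

for x, m, y in zip(xs, line, observed):
    print(f"x={x}  line={m:.2f}  observed y={y:.2f}  error={y - m:+.2f}")
```

Every run of the deterministic function returns the same value for a given x, while the probabilistic version scatters the observations around the line.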

16.2 Estimating the Coefficients

The least squares line ($\hat{y} = b_0 + b_1 x$) uses the least squares method to minimise the sum of squared deviations ($\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$).

The sum of squares for error (SSE) is the minimised sum of squared deviations.

Residuals are the deviations between the actual data points and the line:

o $e_i = y_i - \hat{y}_i$

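Least squares line coefficients:

o $b_1 = \frac{s_{xy}}{s_x^2}$

o $b_0 = \bar{y} - b_1 \bar{x}$

o $s_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n-1}$

o $s_x^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n-1}$

o $\bar{x} = \frac{\sum_{i=1}^{n} x_i}{n}$

o $\bar{y} = \frac{\sum_{i=1}^{n} y_i}{n}$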

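Shortcuts:

o $s_{xy} = \frac{1}{n-1} \left[ \sum_{i=1}^{n} x_i y_i - \frac{\sum_{i=1}^{n} x_i \sum_{i=1}^{n} y_i}{n} \right]$

o $s_x^2 = \frac{1}{n-1} \left[ \sum_{i=1}^{n} x_i^2 - \frac{\left( \sum_{i=1}^{n} x_i \right)^2}{n} \right]$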
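As a rough check on the formulas above, the coefficients can be computed directly in Python. The five data points are made up for illustration and the function names are hypothetical; the sketch uses the definitional formulas for $b_0$ and $b_1$ and then confirms that the shortcut formulas give the same covariance and variance.

```python
def least_squares(x, y):
    """Return (b0, b1) for the least squares line y_hat = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sample covariance and sample variance of x (both use the divisor n - 1).
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    b1 = s_xy / s2_x
    b0 = y_bar - b1 * x_bar
    return b0, b1

def shortcut_s_xy(x, y):
    """Shortcut form of the sample covariance."""
    n = len(x)
    return (sum(xi * yi for xi, yi in zip(x, y)) - sum(x) * sum(y) / n) / (n - 1)

def shortcut_s2_x(x):
    """Shortcut form of the sample variance of x."""
    n = len(x)
    return (sum(xi ** 2 for xi in x) - sum(x) ** 2 / n) / (n - 1)

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

b0, b1 = least_squares(x, y)
print(f"b0={b0:.3f}  b1={b1:.3f}")
print(f"s_xy={shortcut_s_xy(x, y):.3f}  s2_x={shortcut_s2_x(x):.3f}")
```

For this data the line works out to roughly $\hat{y} = 2.2 + 0.6x$, with $s_{xy} = 1.5$ and $s_x^2 = 2.5$ from either form of the formulas.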

Excel:

o Have two columns of data: one for the dependent variable; the other for the independent variable.

o Click Data, Data Analysis, and Regression.

o Specify the Input Y Range and the Input X Range.

16.3 Error Variable: Required Conditions

Assumptions of the Classical Linear Regression Model

1. The model is linear in the coefficients ($\beta_0$ and $\beta_1$).

2. The observed variables ($x_i, y_i$) are randomly sampled.

3. There is sample variation in $x_i$; they are not all equal.

4. The mean of $\varepsilon$ is 0, regardless of $x$: $E(\varepsilon_i \mid x_i) = 0$.

a. Therefore, $\varepsilon_i$ and $x_i$ are uncorrelated.

5. The variance of $\varepsilon_i$ is a constant: $\operatorname{Var}(\varepsilon_i) = \sigma^2$.

a. In reality this is not necessarily true (e.g. people with higher incomes may show greater variance in expenditure because they have a greater range of choices).

6. The error variables are uncorrelated: $\operatorname{Cov}(\varepsilon_i, \varepsilon_j) = 0$ for $i \neq j$.

7. The error variables are normally distributed: $\varepsilon_i \sim N(0, \sigma^2)$.

16.4 Assessing the Model

There are three ways to assess how well the linear model fits the data:

1. The standard error of estimate
2. The $t$-test of the slope
3. The coefficient of determination

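Sum of Squares for Error (SSE)

$SSE = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = (n-1) \left( s_y^2 - \frac{s_{xy}^2}{s_x^2} \right)$

Standard Error of Estimate ($s_\varepsilon$)

The standard error of estimate, $s_\varepsilon = \sqrt{\frac{SSE}{n-2}}$, is usually compared with $\bar{y}$: the smaller $s_\varepsilon$ is relative to $\bar{y}$, the better the fit.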
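The two expressions for SSE can be compared numerically. The sketch below uses made-up data and computes SSE both from the residuals and from the shortcut formula, then the standard error of estimate; the variable names are illustrative only.

```python
import math

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n

s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
s2_y = sum((yi - y_bar) ** 2 for yi in y) / (n - 1)

b1 = s_xy / s2_x
b0 = y_bar - b1 * x_bar

# SSE from the definition: the sum of squared residuals.
sse_direct = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
# SSE from the shortcut formula.
sse_shortcut = (n - 1) * (s2_y - s_xy ** 2 / s2_x)

# Standard error of estimate, with n - 2 degrees of freedom.
s_eps = math.sqrt(sse_direct / (n - 2))

print(f"SSE (direct)={sse_direct:.4f}  SSE (shortcut)={sse_shortcut:.4f}")
print(f"s_eps={s_eps:.4f}  s_eps / y_bar={s_eps / y_bar:.4f}")
```

Both routes give SSE of about 2.4 here, and the last ratio is the comparison of $s_\varepsilon$ with $\bar{y}$ described above.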

Testing the Slope

We can use hypothesis testing to draw inferences about the population slope ($\beta_1$) from the sample slope ($b_1$).

If $\beta_1 = 0$, there is no linear relationship (but there may be a quadratic relationship).

The sample slope ($b_1$) is an unbiased estimator of the population slope ($\beta_1$): $E(b_1) = \beta_1$. It is also consistent, because the estimated standard error of $b_1$, $s_{b_1} = \frac{s_\varepsilon}{\sqrt{(n-1) s_x^2}}$, decreases as $n$ increases.

Test statistic for $\beta_1$: $t = \frac{b_1 - \beta_1}{s_{b_1}}$ [where $\nu = n-2$]

Confidence interval estimator of $\beta_1$: $b_1 \pm t_{\alpha/2} \, s_{b_1}$ [where $\nu = n-2$]
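A minimal sketch of the slope test and confidence interval, using only the standard library. The data are made up, the function name `slope_inference` is hypothetical, and the critical value is assumed to be looked up by the reader (3.182 is the tabulated $t_{0.025}$ for 3 degrees of freedom) rather than computed.

```python
import math

def slope_inference(x, y, t_crit, beta1_null=0.0):
    """Return (b1, s_b1, t_stat, ci) for the slope of a simple linear regression.

    t_crit is the critical value t_{alpha/2} with n - 2 degrees of freedom,
    supplied by the caller (for example from a t table).
    """
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    b1 = s_xy / s2_x
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    s_eps = math.sqrt(sse / (n - 2))            # standard error of estimate
    s_b1 = s_eps / math.sqrt((n - 1) * s2_x)    # estimated standard error of b1
    t_stat = (b1 - beta1_null) / s_b1
    ci = (b1 - t_crit * s_b1, b1 + t_crit * s_b1)
    return b1, s_b1, t_stat, ci

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
T_CRIT = 3.182  # t_{0.025} with n - 2 = 3 degrees of freedom, from a t table

b1, s_b1, t_stat, ci = slope_inference(x, y, T_CRIT)
reject_h0 = abs(t_stat) > T_CRIT  # two-tail test of H0: beta1 = 0 at the 5% level

print(f"b1={b1:.3f}  s_b1={s_b1:.4f}  t={t_stat:.3f}")
print(f"95% CI=({ci[0]:.3f}, {ci[1]:.3f})  reject H0: {reject_h0}")
```

With only five observations the test statistic (about 2.12) falls short of the critical value, so the null hypothesis of no linear relationship is not rejected, and the confidence interval contains 0.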

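Coefficient of Determination

$R^2 = \frac{s_{xy}^2}{s_x^2 s_y^2} = 1 - \frac{SSE}{\sum (y_i - \bar{y})^2} = \frac{\sum (y_i - \bar{y})^2 - SSE}{\sum (y_i - \bar{y})^2} = \frac{\text{Explained variation}}{\text{Variation in } y}$

The decomposition follows from adding and subtracting $\hat{y}_i$:

$(y_i - \bar{y}) = (y_i - \bar{y}) + \hat{y}_i - \hat{y}_i$

$(y_i - \bar{y}) = \underbrace{(y_i - \hat{y}_i)}_{\text{Unexplained residual}} + \underbrace{(\hat{y}_i - \bar{y})}_{\text{Explained variation}}$

Squaring and summing over $i$ (the cross-product term is zero for the least squares line):

$\sum (y_i - \bar{y})^2 = \sum (y_i - \hat{y}_i)^2 + \sum (\hat{y}_i - \bar{y})^2$

Variation in $y$ = SSE + SSR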
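The decomposition of the variation in $y$ can be verified numerically. The sketch below, on made-up data, computes the three sums of squares and then $R^2$ by each of the three equivalent expressions; the variable names are illustrative.

```python
# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n

s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
s2_y = sum((yi - y_bar) ** 2 for yi in y) / (n - 1)

b1 = s_xy / s2_x
b0 = y_bar - b1 * x_bar
y_hat = [b0 + b1 * xi for xi in x]

total = sum((yi - y_bar) ** 2 for yi in y)             # variation in y
sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # unexplained variation
ssr = sum((fi - y_bar) ** 2 for fi in y_hat)           # explained variation

r2_from_moments = s_xy ** 2 / (s2_x * s2_y)
r2_from_sse = 1 - sse / total
r2_from_ssr = ssr / total

print(f"total={total:.4f}  SSE={sse:.4f}  SSR={ssr:.4f}")
print(f"R^2: {r2_from_moments:.4f}, {r2_from_sse:.4f}, {r2_from_ssr:.4f}")
```

Here the total variation of 6 splits into SSE of about 2.4 and SSR of about 3.6, so all three expressions give $R^2$ of about 0.6.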

Coefficient of Correlation

We can use hypothesis testing to draw inferences about the population coefficient of correlation ($\rho$) from the sample coefficient of correlation ($r$).

Sample coefficient of correlation: $r = \frac{s_{xy}}{s_x s_y}$

Test statistic for $\rho$ (under $H_0: \rho = 0$): $t = r \sqrt{\frac{n-2}{1-r^2}}$ [where $\nu = n-2$ and the variables are bivariate normally distributed]
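The sample correlation and its test statistic can be sketched as follows. The data are made up and the function name `correlation_test` is hypothetical; the sketch does not handle the degenerate case $r = \pm 1$, where the denominator $1 - r^2$ is zero.

```python
import math

def correlation_test(x, y):
    """Return (r, t_stat) for testing H0: rho = 0 with n - 2 degrees of freedom."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    s_x = math.sqrt(sum((xi - x_bar) ** 2 for xi in x) / (n - 1))
    s_y = math.sqrt(sum((yi - y_bar) ** 2 for yi in y) / (n - 1))
    r = s_xy / (s_x * s_y)
    # Raises ZeroDivisionError if r is exactly +1 or -1.
    t_stat = r * math.sqrt((n - 2) / (1 - r ** 2))
    return r, t_stat

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

r, t_stat = correlation_test(x, y)
print(f"r={r:.4f}  t={t_stat:.4f}")
```

In simple linear regression this statistic equals the $t$ statistic for testing $\beta_1 = 0$ computed from the same data, so the two tests lead to the same conclusion.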

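16.5 Using the Regression Equation

$\hat{y}_i = b_0 + b_1 x_i$ is a point estimator.

There are two interval estimators, each evaluated at a given value $x_g$:

1. Prediction interval: $\hat{y} \pm t_{\alpha/2,\, n-2} \, s_\varepsilon \sqrt{1 + \frac{1}{n} + \frac{(x_g - \bar{x})^2}{(n-1) s_x^2}}$

2. Confidence interval estimator of the expected value of $y$: $\hat{y} \pm t_{\alpha/2,\, n-2} \, s_\varepsilon \sqrt{\frac{1}{n} + \frac{(x_g - \bar{x})^2}{(n-1) s_x^2}}$

The farther the given value $x_g$ is from $\bar{x}$, the greater the estimated error, because of the term $\frac{(x_g - \bar{x})^2}{(n-1) s_x^2}$.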
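The two intervals differ only by the extra 1 under the square root, which the following sketch makes concrete. The data are made up, the function name `interval_half_widths` is hypothetical, and the critical value is assumed to be supplied from a $t$ table (3.182 is the tabulated $t_{0.025}$ for 3 degrees of freedom).

```python
import math

def interval_half_widths(x, y, x_g, t_crit):
    """Return (y_hat, prediction_half_width, confidence_half_width) at x_g."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    b1 = s_xy / s2_x
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    s_eps = math.sqrt(sse / (n - 2))
    # Term that grows as x_g moves away from the sample mean of x.
    distance_term = (x_g - x_bar) ** 2 / ((n - 1) * s2_x)
    y_hat = b0 + b1 * x_g
    prediction = t_crit * s_eps * math.sqrt(1 + 1 / n + distance_term)
    confidence = t_crit * s_eps * math.sqrt(1 / n + distance_term)
    return y_hat, prediction, confidence

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
T_CRIT = 3.182  # t_{0.025} with n - 2 = 3 degrees of freedom, from a t table

for x_g in (3, 4, 5):
    y_hat, pred, conf = interval_half_widths(x, y, x_g, T_CRIT)
    print(f"x_g={x_g}  y_hat={y_hat:.2f}  prediction +/- {pred:.3f}  mean +/- {conf:.3f}")
```

The prediction interval is always wider than the confidence interval for the mean, and both widen as `x_g` moves from the sample mean (3) towards the edge of the data (5).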

16.6 Regression Diagnostics (Part 1)

Residual analysis

Standard deviation of the $i$th residual: $s_{\varepsilon_i} = s_\varepsilon \sqrt{1 - h_i}$

where $h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{(n-1) s_x^2}$
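As an illustration of the residual formulas, the sketch below computes $h_i$, the standard deviation of each residual, and the resulting standardised residuals (each residual divided by its standard deviation) for made-up data. Dividing the residual by $s_{\varepsilon_i}$ is a common convention assumed here, not something stated in the notes.

```python
import math

# Made-up data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n

s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
s2_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
b1 = s_xy / s2_x
b0 = y_bar - b1 * x_bar

residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
s_eps = math.sqrt(sum(e ** 2 for e in residuals) / (n - 2))

# h_i is largest for the x values farthest from the sample mean.
h = [1 / n + (xi - x_bar) ** 2 / ((n - 1) * s2_x) for xi in x]
resid_sd = [s_eps * math.sqrt(1 - hi) for hi in h]
standardised = [e / sd for e, sd in zip(residuals, resid_sd)]

for xi, e, hi, z in zip(x, residuals, h, standardised):
    print(f"x={xi}  residual={e:+.2f}  h={hi:.2f}  standardised={z:+.3f}")
```

Points at the ends of the x range have the largest $h_i$ and therefore the smallest residual standard deviation, so the same raw residual counts for more there.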

Normality

The residuals should be normally distributed.

Homoscedasticity

The variance of the error variable should be constant.

Independence of the error variable

The error variable should be independent.

Outliers

Outliers may be:

1. Recording errors

2. Points that should not have been included in the sample

3. Valid and should belong to the sample

Influential observations

Some points are influential in determining the least squares line: removing such a point would give a markedly different line, or in the extreme case no clear linear relationship at all.

Procedure

1. Develop a model that has a theoretical basis; find an independent variable that you believe is linearly related to the dependent variable.

2. Gather data for the two variables, preferably from a controlled experiment; otherwise use observational data.

3. Draw a scatter diagram. Determine whether a linear model is appropriate. Identify outliers and influential observations.

4. Determine the regression equation.

5. Calculate the residuals and check the required conditions:

a. Is the error variable normal?

b. Is the variance constant?

c. Are the errors independent?

6. Assess the model's fit:

a. Compute the standard error of estimate.

b. Test $\beta_1$ or $\rho$ to determine whether there is a linear relationship.

c. Compute the coefficient of determination.

7. If the model fits the data, use the regression equation to:

a. Predict a particular value of the dependent variable

b. Estimate its mean

Вам также может понравиться

- Studio Ghibli - Ghibli MedleyДокумент6 страницStudio Ghibli - Ghibli MedleyNurul Ain Razali100% (14)

- Christmas - Classical - Bach and Rachmaninoff MedleyДокумент8 страницChristmas - Classical - Bach and Rachmaninoff Medleymaustro100% (1)

- Formulas and Tables for Inferential StatisticsДокумент29 страницFormulas and Tables for Inferential StatisticsRobert ChapmanОценок пока нет

- Career FAQs - Accounting PDFДокумент150 страницCareer FAQs - Accounting PDFmaustroОценок пока нет

- Career FAQs - Accounting PDFДокумент150 страницCareer FAQs - Accounting PDFmaustroОценок пока нет

- LSS Cheat SheetsДокумент29 страницLSS Cheat SheetsPaweł Szpynda100% (2)

- Career FAQs - Legal PDFДокумент197 страницCareer FAQs - Legal PDFmaustroОценок пока нет

- Stat331-Multiple Linear RegressionДокумент13 страницStat331-Multiple Linear RegressionSamantha YuОценок пока нет

- Detailed Lesson Plan: (Abm - Bm11Pad-Iig-2)Документ15 страницDetailed Lesson Plan: (Abm - Bm11Pad-Iig-2)Princess Duqueza100% (1)

- Career FAQs - Work From Home PDFДокумент202 страницыCareer FAQs - Work From Home PDFmaustroОценок пока нет

- The Sims - Build 5Документ12 страницThe Sims - Build 5maustroОценок пока нет

- The Sims - Build 5Документ12 страницThe Sims - Build 5maustroОценок пока нет

- 9.1 Multiple Choice: Chapter 9 Assessing Studies Based On Multiple RegressionДокумент38 страниц9.1 Multiple Choice: Chapter 9 Assessing Studies Based On Multiple Regressiondaddy's cock100% (1)

- Multiple Regression Analysis: I 0 1 I1 K Ik IДокумент30 страницMultiple Regression Analysis: I 0 1 I1 K Ik Iajayikayode100% (1)

- Learn Statistics Fast: A Simplified Detailed Version for StudentsОт EverandLearn Statistics Fast: A Simplified Detailed Version for StudentsОценок пока нет

- Emet2007 NotesДокумент6 страницEmet2007 NoteskowletОценок пока нет

- Linear Regression Model with 1 PredictorДокумент35 страницLinear Regression Model with 1 PredictorgeeorgiОценок пока нет

- Lesson 2: Multiple Linear Regression Model (I) : E L F V A L U A T I O N X E R C I S E SДокумент14 страницLesson 2: Multiple Linear Regression Model (I) : E L F V A L U A T I O N X E R C I S E SMauricio Ortiz OsorioОценок пока нет

- Heteroskedasticity Tests and GLS EstimatorsДокумент21 страницаHeteroskedasticity Tests and GLS EstimatorsnovicasupicnsОценок пока нет

- Chapter 1Документ17 страницChapter 1Getasew AsmareОценок пока нет

- Lecture3 Module2 EconometricsДокумент11 страницLecture3 Module2 Econometricssujit_sekharОценок пока нет

- Standard ErrorДокумент3 страницыStandard ErrorUmar FarooqОценок пока нет

- Statistics NotesДокумент15 страницStatistics NotesMarcus Pang Yi ShengОценок пока нет

- Chapter 4Документ68 страницChapter 4Nhatty WeroОценок пока нет

- 2b Multiple Linear RegressionДокумент14 страниц2b Multiple Linear RegressionNamita DeyОценок пока нет

- Oversikt ECN402Документ40 страницOversikt ECN402Mathias VindalОценок пока нет

- Cunningham Spring 2017 Causal InferenceДокумент766 страницCunningham Spring 2017 Causal InferenceHe HОценок пока нет

- TMA1111 Regression & Correlation AnalysisДокумент13 страницTMA1111 Regression & Correlation AnalysisMATHAVAN A L KRISHNANОценок пока нет

- Statistical Methods for ANOVA and Multiple Regression AnalysisДокумент18 страницStatistical Methods for ANOVA and Multiple Regression Analysis李姿瑩Оценок пока нет

- Instruction Manual: PH412: General Physics Laboratory IДокумент27 страницInstruction Manual: PH412: General Physics Laboratory IDhananjay KapseОценок пока нет

- Measurement Error Models: Frisch Bounds and Attenuation BiasДокумент79 страницMeasurement Error Models: Frisch Bounds and Attenuation BiasTandis AsadiОценок пока нет

- Simple Linear Regression (Chapter 11) : Review of Some Inference and Notation: A Common Population Mean ModelДокумент24 страницыSimple Linear Regression (Chapter 11) : Review of Some Inference and Notation: A Common Population Mean ModelHaizhou ChenОценок пока нет

- 325unit 1 Simple Regression AnalysisДокумент10 страниц325unit 1 Simple Regression AnalysisutsavОценок пока нет

- Regression Analysis Techniques and AssumptionsДокумент43 страницыRegression Analysis Techniques and AssumptionsAmer RahmahОценок пока нет

- Regression Explains Variation in Dependent VariablesДокумент15 страницRegression Explains Variation in Dependent VariablesJuliefil Pheng AlcantaraОценок пока нет

- TSNotes 1Документ29 страницTSNotes 1YANGYUXINОценок пока нет

- HW 03 SolДокумент9 страницHW 03 SolfdfОценок пока нет

- Simple Linear Regression AnalysisДокумент7 страницSimple Linear Regression AnalysiscarixeОценок пока нет

- CH 2Документ31 страницаCH 2Hama arasОценок пока нет

- MicecxДокумент44 страницыMicecxchrisignmОценок пока нет

- MAE 300 TextbookДокумент95 страницMAE 300 Textbookmgerges15Оценок пока нет

- SST307 CompleteДокумент72 страницыSST307 Completebranmondi8676Оценок пока нет

- Solving Multicollinearity Problem: Int. J. Contemp. Math. Sciences, Vol. 6, 2011, No. 12, 585 - 600Документ16 страницSolving Multicollinearity Problem: Int. J. Contemp. Math. Sciences, Vol. 6, 2011, No. 12, 585 - 600Tiberiu TincaОценок пока нет

- Applied Linear Models in Matrix FormДокумент22 страницыApplied Linear Models in Matrix FormGeorgiana DumitricaОценок пока нет

- Question 4 (A) What Are The Stochastic Assumption of The Ordinary Least Squares? Assumption 1Документ9 страницQuestion 4 (A) What Are The Stochastic Assumption of The Ordinary Least Squares? Assumption 1yaqoob008Оценок пока нет

- 1B40 DA Lecture 2v2Документ9 страниц1B40 DA Lecture 2v2Roy VeseyОценок пока нет

- Linear ModelsДокумент92 страницыLinear ModelsCART11Оценок пока нет

- CH - 4 - Econometrics UGДокумент33 страницыCH - 4 - Econometrics UGMewded DelelegnОценок пока нет

- Unit 3 Simple Correlation and Regression Analysis1Документ16 страницUnit 3 Simple Correlation and Regression Analysis1Rhea MirchandaniОценок пока нет

- Wooldridge NotesДокумент15 страницWooldridge Notesrajat25690Оценок пока нет

- Applied Business Forecasting and Planning: Multiple Regression AnalysisДокумент100 страницApplied Business Forecasting and Planning: Multiple Regression AnalysisRobby PangestuОценок пока нет

- MULTIPLE LINEAR REGRESSION MODEL FIT AND COEFFICIENT INTERPRETATIONДокумент18 страницMULTIPLE LINEAR REGRESSION MODEL FIT AND COEFFICIENT INTERPRETATIONWERU JOAN NYOKABIОценок пока нет

- STAT3003 Chapter08Документ8 страницSTAT3003 Chapter08SapphireОценок пока нет

- unit 5Документ104 страницыunit 5downloadjain123Оценок пока нет

- Types of StatisticsДокумент7 страницTypes of StatisticsTahirОценок пока нет

- Lecture 6 Simple Linear RegressionДокумент36 страницLecture 6 Simple Linear RegressionAshley Sophia GarciaОценок пока нет

- Multiple Regression AnalysisДокумент8 страницMultiple Regression AnalysisLeia SeunghoОценок пока нет

- Lecture Notes for 36-707 Linear RegressionДокумент228 страницLecture Notes for 36-707 Linear Regressionkeyyongpark100% (2)

- Lecture 2Документ15 страницLecture 2satyabashaОценок пока нет

- Lec9 1Документ63 страницыLec9 1Kashef HodaОценок пока нет

- STA 2101 Assignment 1 ReviewДокумент8 страницSTA 2101 Assignment 1 ReviewdflamsheepsОценок пока нет

- Regression Model AssumptionsДокумент50 страницRegression Model AssumptionsEthiop LyricsОценок пока нет

- The Uncertainty of A Result From A Linear CalibrationДокумент13 страницThe Uncertainty of A Result From A Linear Calibrationfra80602Оценок пока нет

- Regression Equation GuideДокумент12 страницRegression Equation GuideKainaat YaseenОценок пока нет

- Scatter Plot/Diagram Simple Linear Regression ModelДокумент43 страницыScatter Plot/Diagram Simple Linear Regression ModelnooraОценок пока нет

- ch12 0Документ82 страницыch12 0pradeep kumarОценок пока нет

- BivariateДокумент28 страницBivariateVikas SainiОценок пока нет

- LSE EC221 Solutions to Problem Set 2Документ6 страницLSE EC221 Solutions to Problem Set 2slyk1993Оценок пока нет

- Regression & CorrelationДокумент18 страницRegression & CorrelationJunaid JunaidОценок пока нет

- Simple Linear Regression Analysis in 40 CharactersДокумент55 страницSimple Linear Regression Analysis in 40 Characters王宇晴Оценок пока нет

- Econ-T2 EngДокумент60 страницEcon-T2 EngEnric Masclans PlanasОценок пока нет

- Career FAQs - Save The World PDFДокумент193 страницыCareer FAQs - Save The World PDFmaustroОценок пока нет

- Career FAQs - Be Your Own Boss PDFДокумент233 страницыCareer FAQs - Be Your Own Boss PDFmaustroОценок пока нет

- Career FAQs InformationTechnologyДокумент178 страницCareer FAQs InformationTechnologyxx1yyy1Оценок пока нет

- Career FAQs Banking CareersДокумент181 страницаCareer FAQs Banking CareersTimothy NguyenОценок пока нет

- Career FAQs - Sample Cover Letter PDFДокумент2 страницыCareer FAQs - Sample Cover Letter PDFmaustroОценок пока нет

- Career FAQs - Property PDFДокумент169 страницCareer FAQs - Property PDFmaustroОценок пока нет

- Career FAQs - Law (NSW and ACT) PDFДокумент148 страницCareer FAQs - Law (NSW and ACT) PDFmaustroОценок пока нет

- Career FAQs - Sample CV PDFДокумент4 страницыCareer FAQs - Sample CV PDFmaustroОценок пока нет

- Career FAQs - Financial Planning PDFДокумент206 страницCareer FAQs - Financial Planning PDFmaustroОценок пока нет

- Career FAQs - Investment Banking PDFДокумент170 страницCareer FAQs - Investment Banking PDFmaustroОценок пока нет

- Career FAQs - Sample CV PDFДокумент4 страницыCareer FAQs - Sample CV PDFmaustroОценок пока нет

- Career FAQs - Save The World PDFДокумент193 страницыCareer FAQs - Save The World PDFmaustroОценок пока нет

- Career FAQs - Law (NSW and ACT) PDFДокумент148 страницCareer FAQs - Law (NSW and ACT) PDFmaustroОценок пока нет

- Career FAQs - Entertainment PDFДокумент190 страницCareer FAQs - Entertainment PDFmaustroОценок пока нет

- Career FAQs - Investment Banking PDFДокумент170 страницCareer FAQs - Investment Banking PDFmaustroОценок пока нет

- Career FAQs Banking CareersДокумент181 страницаCareer FAQs Banking CareersTimothy NguyenОценок пока нет

- Career FAQs - Sample Cover Letter PDFДокумент2 страницыCareer FAQs - Sample Cover Letter PDFmaustroОценок пока нет

- Commentary On Cases of BreachДокумент46 страницCommentary On Cases of BreachmaustroОценок пока нет

- The Sims - Build 3, 'Since We Met'Документ9 страницThe Sims - Build 3, 'Since We Met'maustroОценок пока нет

- Career FAQs - Financial Planning PDFДокумент206 страницCareer FAQs - Financial Planning PDFmaustroОценок пока нет

- Career FAQs - Entertainment PDFДокумент190 страницCareer FAQs - Entertainment PDFmaustroОценок пока нет

- Laputa - Main Theme (A Minor)Документ10 страницLaputa - Main Theme (A Minor)maustroОценок пока нет

- Ajpe 6570Документ1 страницаAjpe 6570Andre FaustoОценок пока нет

- AAI 2 Part 12 PSA 530-Audit SamplingДокумент171 страницаAAI 2 Part 12 PSA 530-Audit SamplingDanielle May CequiñaОценок пока нет

- PAIRED T TEST Note and Example1Документ25 страницPAIRED T TEST Note and Example1Zul ZhafranОценок пока нет

- Analysis of Variance, Design, and RegressionДокумент587 страницAnalysis of Variance, Design, and Regressionsdssd sdsОценок пока нет

- Prof. Januario Flores JRДокумент11 страницProf. Januario Flores JRJeraldine RepolloОценок пока нет

- Chap 6Документ64 страницыChap 6Phạm Thu HòaОценок пока нет

- How To Do Xtabond2: An Introduction To "Difference" and "System" GMM in Stata by David RoodmanДокумент45 страницHow To Do Xtabond2: An Introduction To "Difference" and "System" GMM in Stata by David RoodmanAnwar AanОценок пока нет

- rr311801 Probability and StatisticsДокумент9 страницrr311801 Probability and StatisticsSRINIVASA RAO GANTAОценок пока нет

- Soederlind P. Lecture Notes For Econometrics (LN, Stockholm, 2002) (L) (86s) - GL - PDFДокумент86 страницSoederlind P. Lecture Notes For Econometrics (LN, Stockholm, 2002) (L) (86s) - GL - PDFLorena LCОценок пока нет

- DocxДокумент3 страницыDocxMikey MadRat0% (1)

- Best Linear PredictorДокумент15 страницBest Linear PredictorHoracioCastellanosMuñoaОценок пока нет

- Ijimsep M 2 2018Документ13 страницIjimsep M 2 2018JosephNathanMarquezОценок пока нет

- STPM (Maths M) Paper2 2013Документ5 страницSTPM (Maths M) Paper2 2013Yvette MackОценок пока нет

- 5DATAA1Документ68 страниц5DATAA1angelicОценок пока нет

- FIDPДокумент9 страницFIDPMyla Rose AcobaОценок пока нет

- Crosstabs: NotesДокумент21 страницаCrosstabs: NotesUnik TianОценок пока нет

- ANOVA, Pearson EtcДокумент6 страницANOVA, Pearson EtcMatt Joseph CabantingОценок пока нет

- 4b) ppt-C4-prt 2Документ48 страниц4b) ppt-C4-prt 2Gherico MojicaОценок пока нет

- 6.3 (2) : and of Binomial Random Variables AP Statistics NameДокумент2 страницы6.3 (2) : and of Binomial Random Variables AP Statistics NameMcKenna ThomОценок пока нет

- By: Prof. Radhika Sahni Binomial DistributionДокумент23 страницыBy: Prof. Radhika Sahni Binomial DistributionYyyyyОценок пока нет

- MRCP Passmedicine Statistics 2021Документ25 страницMRCP Passmedicine Statistics 2021shenouda abdelshahidОценок пока нет

- Thong Ke 3Документ19 страницThong Ke 3Tịnh Vân PhạmОценок пока нет

- A1INSE6220 Winter17sol PDFДокумент5 страницA1INSE6220 Winter17sol PDFpicalaОценок пока нет

- 2.2 Hyphothesis Testing (Continuous)Документ35 страниц2.2 Hyphothesis Testing (Continuous)NS-Clean by SSCОценок пока нет

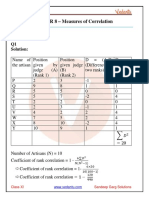

- Sandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationДокумент5 страницSandeep Garg Economics Class 11 Solutions For Chapter 8 - Measures of CorrelationRiddhiman JainОценок пока нет

- Regression Analysis - Chapter 4 - Model Adequacy Checking - Shalabh, IIT KanpurДокумент36 страницRegression Analysis - Chapter 4 - Model Adequacy Checking - Shalabh, IIT KanpurAbcОценок пока нет