Академический Документы

Профессиональный Документы

Культура Документы

3 Kirkpatrick Evaluation Model Paper

Загружено:

erine5995Исходное описание:

Оригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

3 Kirkpatrick Evaluation Model Paper

Загружено:

erine5995Авторское право:

Доступные форматы

kirkpatrick's learning and

training evaluation theory

Donald L Kirkpatrick's training

evaluation model - the four levels of

learning evaluation

Donald L Kirkpatrick, Professor Emeritus, University Of Wisconsin

(where he achieved his BBA, MBA and PhD), first published his ideas

in 1959, in a series of articles in the Journal of American Society of

Training Directors. The articles were subsequently included in

Kirkpatrick's book Evaluating Training Programs (originally published

in 1994; now in its 3rd edition - Berrett-Koehler Publishers).

Donald Kirkpatrick was president of the American Society for

Training and Development (ASTD) in 1975. Kirkpatrick has written

several other significant books about training and evaluation, more

recently with his similarly inclined son James, and has consulted

with some of the world's largest corporations.

Donald Kirkpatrick's 1994 book Evaluating Training Programs

defined his originally published ideas of 1959, thereby further

increasing awareness of them, so that his theory has now become

arguably the most widely used and popular model for the evaluation

of training and learning. Kirkpatrick's four-level model is now

considered an industry standard across the HR and training

communities.

More recently Don Kirkpatrick formed his own company, Kirkpatrick

Partners, whose website provides information about their services

and methods, etc.

kirkpatrick's four levels of evaluation

model

The four levels of Kirkpatrick's evaluation model essentially

measure:

reaction of student - what they thought and felt about

the training

learning - the resulting increase in knowledge or

capability

behaviour - extent of behaviour and capability

improvement and implementation/application

results - the effects on the business or environment

resulting from the trainee's performance

All these measures are recommended for full and meaningful

evaluation of learning in organizations, although their application

broadly increases in complexity, and usually cost, through the levels

from level 1-4.

Quick Training Evaluation and Feedback

Form, based on Kirkpatrick's

Learning Evaluation Model - (Excel

file)

kirkpatrick's four levels of training

evaluation

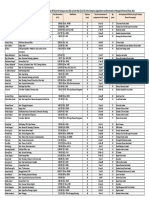

This grid illustrates the basic Kirkpatrick structure at a glance. The

second grid, beneath this one, is the same thing with more detail.

leve evaluatio evaluation

l

n type

description and

(what is

characteristics

measured

)

examples of

evaluation tools

and methods

relevance and

practicability

Reaction

evaluation is how

the delegates

felt about the

training or

learning

experience.

'Happy sheets',

feedback forms.

Quick and very easy

to obtain.

Verbal reaction,

post-training

surveys or

questionnaires.

Not expensive to

gather or to analyse.

Learning

evaluation is the

measurement of

the increase in

knowledge before and after.

Typically

assessments or

tests before and

after the training.

Relatively simple to

set up; clear-cut for

quantifiable skills.

Behaviour

Observation and

Reaction

Learning

Behaviou

Interview or

observation can

also be used.

Less easy for complex

learning.

Measurement of

evaluation is the

extent of applied

learning back on

the job implementation.

interview over

time are required

to assess change,

relevance of

change, and

sustainability of

change.

behaviour change

typically requires

cooperation and skill

of line-managers.

Results

Results

evaluation is the

effect on the

business or

environment by

the trainee.

Measures are

already in place

via normal

management

systems and

reporting - the

challenge is to

relate to the

trainee.

Individually not

difficult; unlike whole

organisation.

Process must

attribute clear

accountabilities.

kirkpatrick's four levels of training

evaluation in detail

This grid illustrates the Kirkpatrick's structure detail, and particularly

the modern-day interpretation of the Kirkpatrick learning evaluation

model, usage, implications, and examples of tools and methods.

This diagram is the same format as the one above but with more

detail and explanation:

evaluatio

n level

and type

evaluation

description and

characteristics

examples of

evaluation tools and

methods

relevance and

practicability

1.

Reaction

Reaction

evaluation is how

the delegates felt,

and their personal

reactions to the

training or learning

experience, for

example:

Typically 'happy

sheets'.

Can be done

immediately the

training ends.

Did the trainees like

and enjoy the

training?

Did they consider the

training relevant?

Was it a good use of

their time?

Feedback forms based

on subjective personal

reaction to the training

experience.

Verbal reaction which

can be noted and

analysed.

Very easy to obtain

reaction feedback

Feedback is not

expensive to gather

or to analyse for

groups.

Post-training surveys or Important to know

questionnaires.

that people were not

upset or

Online evaluation or

disappointed.

grading by delegates.

Important that

people give a

Did they like the

venue, the style,

timing, domestics,

etc?

Level of participation.

Subsequent verbal or

written reports given

by delegates to

managers back at their

jobs.

positive impression

when relating their

experience to others

who might be

deciding whether to

experience same.

Typically assessments

or tests before and

after the training.

Relatively simple to

set up, but more

investment and

thought required

than reaction

evaluation.

Ease and comfort of

experience.

Level of effort

required to make the

most of the learning.

Perceived

practicability and

potential for applying

the learning.

2.

Learning

Learning

evaluation is the

measurement of the

increase in

knowledge or

intellectual

capability from

before to after the

learning experience:

Did the trainees learn

what what intended

to be taught?

Did the trainee

experience what was

intended for them to

experience?

What is the extent of

advancement or

change in the

trainees after the

training, in the

direction or area that

was intended?

Interview or

observation can be

used before and after

although this is timeconsuming and can be

inconsistent.

Highly relevant and

clear-cut for certain

training such as

quantifiable or

Methods of assessment technical skills.

need to be closely

related to the aims of

Less easy for more

the learning.

complex learning

such as attitudinal

Measurement and

development, which

analysis is possible and is famously difficult

easy on a group scale. to assess.

Reliable, clear scoring

and measurements

need to be established,

so as to limit the risk of

inconsistent

assessment.

Cost escalates if

systems are poorly

designed, which

increases work

required to measure

and analyse.

Hard-copy, electronic,

online or interview

style assessments are

all possible.

3.

Behaviou

r

Behaviour

evaluation is the

extent to which the

trainees applied the

learning and

changed their

Observation and

interview over time are

required to assess

change, relevance of

change, and

sustainability of

Measurement of

behaviour change is

less easy to quantify

and interpret than

reaction and

behaviour, and this

can be immediately

and several months

after the training,

depending on the

situation:

Did the trainees put

their learning into

effect when back on

the job?

Were the relevant

skills and knowledge

used

Was there noticeable

and measurable

change in the activity

and performance of

the trainees when

back in their roles?

Was the change in

behaviour and new

level of knowledge

sustained?

Would the trainee be

able to transfer their

learning to another

person?

Is the trainee aware

of their change in

behaviour,

knowledge, skill

level?

change.

learning evaluation.

Arbitrary snapshot

assessments are not

reliable because people

change in different

ways at different times.

Simple quick

response systems

unlikely to be

adequate.

Assessments need to

be subtle and ongoing,

and then transferred to

a suitable analysis tool.

Assessments need to

be designed to reduce

subjective judgement

of the observer or

interviewer, which is a

variable factor that can

affect reliability and

consistency of

measurements.

The opinion of the

trainee, which is a

relevant indicator, is

also subjective and

unreliable, and so

needs to be measured

in a consistent defined

way.

Cooperation and skill

of observers,

typically linemanagers, are

important factors,

and difficult to

control.

Management and

analysis of ongoing

subtle assessments

are difficult, and

virtually impossible

without a welldesigned system

from the beginning.

Evaluation of

implementation and

application is an

extremely important

assessment - there

is little point in a

good reaction and

good increase in

360-degree feedback is capability if nothing

useful method and

changes back in the

need not be used

job, therefore

before training,

evaluation in this

because respondents

area is vital, albeit

can make a judgement challenging.

as to change after

training, and this can

Behaviour change

be analysed for groups evaluation is

of respondents and

possible given good

trainees.

support and

involvement from

Assessments can be

line managers or

designed around

trainees, so it is

relevant performance

helpful to involve

scenarios, and specific them from the start,

key performance

and to identify

indicators or criteria.

benefits for them,

which links to the

Online and electronic

level 4 evaluation

assessments are more

below.

difficult to incorporate assessments tend to be

more successful when

integrated within

existing management

and coaching protocols.

Self-assessment can be

useful, using carefully

designed criteria and

measurements.

4.

Results

Results evaluation

is the effect on the

business or

environment

resulting from the

improved

performance of the

trainee - it is the acid

test.

Measures would

typically be business

or organisational key

performance

indicators, such as:

It is possible that many

of these measures are

already in place via

normal management

systems and reporting.

The challenge is to

identify which and how

relate to to the

trainee's input and

influence.

Therefore it is

important to identify

and agree

accountability and

Volumes, values,

relevance with the

percentages,

trainee at the start of

timescales, return on the training, so they

investment, and other understand what is to

quantifiable aspects

be measured.

of organisational

This process overlays

performance, for

instance; numbers of normal good

management practice complaints, staff

it simply needs linking

turnover, attrition,

to the training input.

failures, wastage,

non-compliance,

Failure to link to

quality ratings,

training input type and

achievement of

timing will greatly

standards and

reduce the ease by

accreditations,

which results can be

growth, retention, etc.

attributed to the

training.

Individually, results

evaluation is not

particularly difficult;

across an entire

organisation it

becomes very much

more challenging,

not least because of

the reliance on linemanagement, and

the frequency and

scale of changing

structures,

responsibilities and

roles, which

complicates the

process of

attributing clear

accountability.

Also, external factors

greatly affect

organisational and

business

performance, which

cloud the true cause

of good or poor

results.

For senior people

particularly, annual

appraisals and ongoing

agreement of key

business objectives are

integral to measuring

business results

derived from training.

Since Kirkpatrick established his original model, other theorists (for

example Jack Phillips), and indeed Kirkpatrick himself, have referred

to a possible fifth level, namely ROI (Return On Investment). In my

view ROI can easily be included in Kirkpatrick's original fourth level

'Results'. The inclusion and relevance of a fifth level is therefore

arguably only relevant if the assessment of Return On Investment

might otherwise be ignored or forgotten when referring simply to

the 'Results' level.

Learning evaluation is a widely researched area. This is

understandable since the subject is fundamental to the existence

and performance of education around the world, not least

universities, which of course contain most of the researchers and

writers.

While Kirkpatrick's model is not the only one of its type, for most

industrial and commercial applications it suffices; indeed most

organisations would be absolutely thrilled if their training and

learning evaluation, and thereby their ongoing people-development,

were planned and managed according to Kirkpatrick's model.

For reference, should you be keen to look at more ideas, there are

many to choose from...

Jack Phillips' Five Level ROI Model

Daniel Stufflebeam's CIPP Model (Context, Input, Process,

Product)

Robert Stake's Responsive Evaluation Model

Robert Stake's Congruence-Contingency Model

Kaufman's Five Levels of Evaluation

CIRO (Context, Input, Reaction, Outcome)

PERT (Program Evaluation and Review Technique)

Alkins' UCLA Model

Michael Scriven's Goal-Free Evaluation Approach

Provus's Discrepancy Model

Eisner's Connoisseurship Evaluation Models

Illuminative Evaluation Model

Portraiture Model

and also the American Evaluation Association

Also look at Leslie Rae's excellent Training Evaluation and tools

available on this site, which, given Leslie's experience and

knowledge, will save you the job of researching and designing your

own tools.

Вам также может понравиться

- Basic Kirkpatrick Structure at A GlanceДокумент4 страницыBasic Kirkpatrick Structure at A GlanceGayatri GuhanОценок пока нет

- Adecco Escalation Matrix - HCLДокумент5 страницAdecco Escalation Matrix - HCLJaspal KumarОценок пока нет

- Joining Kit Sample 2Документ15 страницJoining Kit Sample 2Utkarsh AnandОценок пока нет

- MEIPL Attendance PolicyДокумент7 страницMEIPL Attendance PolicyShashank DoshiОценок пока нет

- 11 Employees Transfer Request FormДокумент1 страница11 Employees Transfer Request FormsekarОценок пока нет

- Details of Employee Remuneration1Документ2 страницыDetails of Employee Remuneration1Yogesh ChhaprooОценок пока нет

- A Study On Training and Development at Quality Hotel Sabari Classic, ChennaiДокумент61 страницаA Study On Training and Development at Quality Hotel Sabari Classic, ChennaiSubashree SubramanianОценок пока нет

- Acesoft Leave PolicyДокумент7 страницAcesoft Leave PolicySanthosh KumarОценок пока нет

- Exit InterviewДокумент3 страницыExit InterviewjohnhenryvОценок пока нет

- Daksh Leave Policy (India)Документ12 страницDaksh Leave Policy (India)ajithk75733Оценок пока нет

- Job Selection LetterДокумент3 страницыJob Selection Letterneao_rexОценок пока нет

- Tasc Nbad Joining Form 04062014Документ9 страницTasc Nbad Joining Form 04062014mohd_nasir4uОценок пока нет

- Benefits For Employees in IndiaДокумент3 страницыBenefits For Employees in IndiaYerragola PrakashОценок пока нет

- R.R. Ispat (A Unit of Gpil) : Recruitment PolicyДокумент3 страницыR.R. Ispat (A Unit of Gpil) : Recruitment PolicyVinod KumarОценок пока нет

- Self Evaluation QuestionsДокумент4 страницыSelf Evaluation QuestionsNeeraj KhatriОценок пока нет

- Employee Accommodation Policy: Ramana Gounder Medical TrustДокумент22 страницыEmployee Accommodation Policy: Ramana Gounder Medical TrustAaju KausikОценок пока нет

- Final Project by TamannaДокумент105 страницFinal Project by Tamannasarangk87Оценок пока нет

- Whichever Is Lower Is Exempt From Tax. For ExampleДокумент13 страницWhichever Is Lower Is Exempt From Tax. For ExampleNasir AhmedОценок пока нет

- Assistant Professor Gift University Gujranwala: Job DescriptionДокумент3 страницыAssistant Professor Gift University Gujranwala: Job DescriptionAli AslamОценок пока нет

- DeputationДокумент2 страницыDeputationsuryadtp suryadtpОценок пока нет

- Ehs Training Calendar - 2019Документ2 страницыEhs Training Calendar - 2019Ritesh kumarОценок пока нет

- HR 101 Employee Set Up Form PDFДокумент5 страницHR 101 Employee Set Up Form PDFLexОценок пока нет

- Perf App Model 2 - For ManagersДокумент8 страницPerf App Model 2 - For ManagersVamsi Krishna NukarajuОценок пока нет

- HR Employees and Other Department Managers To Learn Whether Certain Policies and Procedures Are Understood, Practiced and AcceptedДокумент6 страницHR Employees and Other Department Managers To Learn Whether Certain Policies and Procedures Are Understood, Practiced and Acceptedzeeshanshani1118Оценок пока нет

- Policy On Performance EvaluationДокумент5 страницPolicy On Performance EvaluationSathish Haran100% (1)

- Handling Disciplinary Matters Legally and EfficientlyДокумент44 страницыHandling Disciplinary Matters Legally and EfficientlySatyam mishra100% (2)

- HRA-004 Time and Attendance Monitoring Rev 1 v2Документ4 страницыHRA-004 Time and Attendance Monitoring Rev 1 v2Emmanuel C. DumayasОценок пока нет

- 9) Training CalendarДокумент1 страница9) Training CalendarSufiyan KhedekarОценок пока нет

- JD - HR AssistantДокумент6 страницJD - HR AssistantKashif Niaz MeoОценок пока нет

- HR PolicyДокумент25 страницHR PolicySaurabh DwivediОценок пока нет

- FY08 Car Lease Document V2Документ3 страницыFY08 Car Lease Document V2Swanidhi SinghОценок пока нет

- Technical Interview Feedback FormДокумент1 страницаTechnical Interview Feedback FormSunny DayalОценок пока нет

- Human Resoure ManagementДокумент5 страницHuman Resoure ManagementKulmeet SinghОценок пока нет

- Leave Application FormДокумент1 страницаLeave Application Formarshed777Оценок пока нет

- Training Requisition FormatДокумент1 страницаTraining Requisition FormatSGОценок пока нет

- Business Communication CUSAT Module 4Документ18 страницBusiness Communication CUSAT Module 4Anand S PОценок пока нет

- Skills MatrixДокумент3 страницыSkills Matrixjagansd3Оценок пока нет

- ECMS User Manual - : PAN IndiaДокумент70 страницECMS User Manual - : PAN IndiaKrishОценок пока нет

- Leave and Attendance Requirements - V2.0Документ43 страницыLeave and Attendance Requirements - V2.0simplynotesОценок пока нет

- 02.c.d.e.f.h.induction and Orientation PolicyДокумент7 страниц02.c.d.e.f.h.induction and Orientation PolicyArjun Bhanot100% (1)

- Exit Handbook For HR Managers and IndividualsДокумент7 страницExit Handbook For HR Managers and IndividualsExenta HrmsОценок пока нет

- Ergonomics PresentationДокумент16 страницErgonomics Presentationapi-352405713Оценок пока нет

- 221 HRM PPL Ta Ijp v4 0Документ12 страниц221 HRM PPL Ta Ijp v4 0Srishti GoyalОценок пока нет

- HR Induction Manual - Final - MIT Only (Compatibility Mode) PDFДокумент31 страницаHR Induction Manual - Final - MIT Only (Compatibility Mode) PDFAjay PrajapatiОценок пока нет

- Attendance Policy Ver 6.0Документ6 страницAttendance Policy Ver 6.0naveen kumarОценок пока нет

- Employee Referral Scheme - Cite HRДокумент3 страницыEmployee Referral Scheme - Cite HRAthiq MohamedОценок пока нет

- Relocation Policy FirstFixДокумент2 страницыRelocation Policy FirstFixmarklee torresОценок пока нет

- Induction Policy For New Employees 782 205Документ12 страницInduction Policy For New Employees 782 205mehtaОценок пока нет

- LTA PolicyДокумент2 страницыLTA PolicyAnuradha ParasaramОценок пока нет

- Help Desk Review Handbook For Employees and Managers: The Exenta HRMS Training ManualДокумент5 страницHelp Desk Review Handbook For Employees and Managers: The Exenta HRMS Training ManualExenta HrmsОценок пока нет

- Job AnalysisДокумент5 страницJob AnalysisNashwa El bahnasawyОценок пока нет

- Relocation PolicyДокумент3 страницыRelocation PolicyShiva Kant VermaОценок пока нет

- Corporate ChanakyaДокумент2 страницыCorporate Chanakyanikhil tolambe100% (1)

- HR Policy of DBL GroupДокумент2 страницыHR Policy of DBL GroupRihan Ahmed RubelОценок пока нет

- Expats Working in IndiaДокумент17 страницExpats Working in IndiaPratap ReddyОценок пока нет

- COVID 19 MarutiДокумент50 страницCOVID 19 MarutiPrabhat KhareОценок пока нет

- VP Human Resources CHRO in Southeast MI Resume Joan MoreheadДокумент2 страницыVP Human Resources CHRO in Southeast MI Resume Joan MoreheadJoanMoreheadОценок пока нет

- Recruitment Policy1 178Документ7 страницRecruitment Policy1 178vinay8464Оценок пока нет

- Evaluationofobesity 2015Документ11 страницEvaluationofobesity 2015erine5995Оценок пока нет

- Exploring Social Media Marketing Strategies in Smes: January 2015Документ19 страницExploring Social Media Marketing Strategies in Smes: January 2015PARAGОценок пока нет

- Radiotherapy in Cancer Care Facing The Global ChallengeДокумент578 страницRadiotherapy in Cancer Care Facing The Global Challengeerine5995Оценок пока нет

- NursingДокумент5 страницNursingerine5995Оценок пока нет

- Educationalinterventiontoimprove GoodДокумент8 страницEducationalinterventiontoimprove Gooderine5995Оценок пока нет

- Medu 13934Документ12 страницMedu 13934erine5995Оценок пока нет

- The Challengesof Nursing StudentsДокумент9 страницThe Challengesof Nursing StudentsVicky Torina ShilohОценок пока нет

- Conceptual Framework Comments-1Документ2 страницыConceptual Framework Comments-1erine5995Оценок пока нет

- Since The 1980s Accounting Reform Has Been A Major Focus in The Context of Philosophy of New Public ManagementДокумент2 страницыSince The 1980s Accounting Reform Has Been A Major Focus in The Context of Philosophy of New Public Managementerine5995Оценок пока нет

- Model Nurses ProcessДокумент1 страницаModel Nurses Processerine5995Оценок пока нет

- Soalan Sample MurajДокумент1 страницаSoalan Sample Murajerine5995Оценок пока нет

- Commuters' Perceptions On Rail Based Public Transport Services: A Case Study of KTM Komuter in Kuala Lumpur City, MalaysiaДокумент28 страницCommuters' Perceptions On Rail Based Public Transport Services: A Case Study of KTM Komuter in Kuala Lumpur City, Malaysiaerine5995Оценок пока нет

- Since The 1980s Accounting Reform Has Been A Major Focus in The Context of Philosophy of New Public ManagementДокумент2 страницыSince The 1980s Accounting Reform Has Been A Major Focus in The Context of Philosophy of New Public Managementerine5995Оценок пока нет

- KlinefelterДокумент2 страницыKlinefeltererine5995Оценок пока нет

- Annex2. InvitationEPCBA2 - Nomination FormДокумент3 страницыAnnex2. InvitationEPCBA2 - Nomination FormKhairi OthmanОценок пока нет

- Good Ict Governance 538 827 1 SPДокумент22 страницыGood Ict Governance 538 827 1 SPerine5995Оценок пока нет

- CLES Contstructive Learning EnvironmentДокумент42 страницыCLES Contstructive Learning Environmenterine5995Оценок пока нет

- 2 AntonsenДокумент16 страниц2 Antonsenerine5995Оценок пока нет

- Impact of Motivation On Employee Performances A Case Study of Karmasangsthan Bank Limited Bangladesh GoodДокумент8 страницImpact of Motivation On Employee Performances A Case Study of Karmasangsthan Bank Limited Bangladesh Gooderine5995Оценок пока нет

- Annex2. InvitationEPCBA2 - Nomination FormДокумент3 страницыAnnex2. InvitationEPCBA2 - Nomination FormKhairi OthmanОценок пока нет

- Best Sample Arabic Literature HistoryДокумент8 страницBest Sample Arabic Literature Historyerine5995Оценок пока нет

- Audit Questionnaire June 2013Документ21 страницаAudit Questionnaire June 2013erine5995Оценок пока нет

- Soalan Study Design of IT Gov Delphi ResearchДокумент29 страницSoalan Study Design of IT Gov Delphi Researcherine5995Оценок пока нет

- Good One Using ICT For TeachingДокумент11 страницGood One Using ICT For Teachingerine5995Оценок пока нет

- Born in SomersetДокумент5 страницBorn in Somerseterine5995Оценок пока нет

- Small & Medium Enterprises (SME) in Malaysia - Overview & AnalysisДокумент37 страницSmall & Medium Enterprises (SME) in Malaysia - Overview & Analysisdandusanjh100% (19)

- Low Birth Weight InfantsДокумент5 страницLow Birth Weight Infantserine5995Оценок пока нет

- Extra Reference Chapter 1Документ2 страницыExtra Reference Chapter 1erine5995Оценок пока нет

- Born in SomersetДокумент5 страницBorn in Somerseterine5995Оценок пока нет

- Sop ECUДокумент5 страницSop ECUSumaira CheemaОценок пока нет

- Packages: Khidmatul A'WaamДокумент4 страницыPackages: Khidmatul A'WaamAltaaf IzmaheroОценок пока нет

- BBBB - View ReservationДокумент2 страницыBBBB - View ReservationBashir Ahmad BashirОценок пока нет

- NF en 1317-5 In2Документ23 страницыNF en 1317-5 In2ArunОценок пока нет

- Pmwasabi EB3Документ4 страницыPmwasabi EB3AlleleBiotechОценок пока нет

- Solution Manual For A Friendly Introduction To Numerical Analysis Brian BradieДокумент14 страницSolution Manual For A Friendly Introduction To Numerical Analysis Brian BradieAlma Petrillo100% (43)

- Sciencedirect Sciencedirect SciencedirectДокумент7 страницSciencedirect Sciencedirect SciencedirectMiguel AngelОценок пока нет

- Decisions Made by The DecisionДокумент2 страницыDecisions Made by The Decisionneil arellano mutiaОценок пока нет

- Research and Practice in HRM - Sept 8Документ9 страницResearch and Practice in HRM - Sept 8drankitamayekarОценок пока нет

- Mangla Refurbishment Project Salient FeaturesДокумент8 страницMangla Refurbishment Project Salient FeaturesJAZPAKОценок пока нет

- Working of Online Trading in Indian Stock MarketДокумент81 страницаWorking of Online Trading in Indian Stock MarketVarad Mhatre100% (1)

- Reinforcement Project Examples: Monopole - Self Supporter - Guyed TowerДокумент76 страницReinforcement Project Examples: Monopole - Self Supporter - Guyed TowerBoris KovačevićОценок пока нет

- Electronic Service Tool 2020C v1.0 Product Status ReportДокумент6 страницElectronic Service Tool 2020C v1.0 Product Status ReportHakim GOURAIAОценок пока нет

- Cir vs. de La SalleДокумент20 страницCir vs. de La SalleammeОценок пока нет

- The Effect of Bicarbonate Additive On Corrosion ResistanceДокумент11 страницThe Effect of Bicarbonate Additive On Corrosion ResistancebexigaobrotherОценок пока нет

- 14.symmetrix Toolings LLPДокумент1 страница14.symmetrix Toolings LLPAditiОценок пока нет

- Flange ALignmentДокумент5 страницFlange ALignmentAnonymous O0lyGOShYG100% (1)

- Oxidation Ponds & LagoonsДокумент31 страницаOxidation Ponds & LagoonsDevendra Sharma100% (1)

- Account Statement From 27 Dec 2017 To 27 Jun 2018Документ4 страницыAccount Statement From 27 Dec 2017 To 27 Jun 2018mrcopy xeroxОценок пока нет

- Donor's Tax Post QuizДокумент12 страницDonor's Tax Post QuizMichael Aquino0% (1)

- Lab Manual: Department of Computer EngineeringДокумент65 страницLab Manual: Department of Computer EngineeringRohitОценок пока нет

- Performance Online - Classic Car Parts CatalogДокумент168 страницPerformance Online - Classic Car Parts CatalogPerformance OnlineОценок пока нет

- CN842 HBДокумент15 страницCN842 HBElif SarıoğluОценок пока нет

- UST G N 2011: Banking Laws # I. The New Central Bank Act (Ra 7653)Документ20 страницUST G N 2011: Banking Laws # I. The New Central Bank Act (Ra 7653)Clauds GadzzОценок пока нет

- DocumentДокумент2 страницыDocumentAddieОценок пока нет

- Starbucks Delivering Customer Service Case Solution PDFДокумент2 страницыStarbucks Delivering Customer Service Case Solution PDFRavia SharmaОценок пока нет

- Cover LetterДокумент16 страницCover LetterAjmal RafiqueОценок пока нет

- Scope and Sequence Plan Stage4 Year7 Visual ArtsДокумент5 страницScope and Sequence Plan Stage4 Year7 Visual Artsapi-254422131Оценок пока нет

- DSP QBДокумент8 страницDSP QBNithya VijayaОценок пока нет

- Queen Elizabeth Olympic Park, Stratford City and Adjacent AreasДокумент48 страницQueen Elizabeth Olympic Park, Stratford City and Adjacent AreasRavi WoodsОценок пока нет