Академический Документы

Профессиональный Документы

Культура Документы

Solution of Linear Algebraic Equations

Загружено:

Anonymous IwqK1NlОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Solution of Linear Algebraic Equations

Загружено:

Anonymous IwqK1NlАвторское право:

Доступные форматы

2.

0 Introduction

A set of linear algebraic equations looks like this:

a11 x1 + a12 x2 + a13 x3 + + a1N xN = b1

a21 x1 + a22 x2 + a23 x3 + + a2N xN = b2

a31 x1 + a32 x2 + a33 x3 + + a3N xN = b3

(2.0.1)

aM1 x1 + aM2 x2 + aM3 x3 + + aMN xN = bM

Here the N unknowns x j , j = 1, 2, . . . , N are related by M equations. The

coefficients aij with i = 1, 2, . . . , M and j = 1, 2, . . . , N are known numbers, as

are the right-hand side quantities b i , i = 1, 2, . . . , M .

Nonsingular versus Singular Sets of Equations

If N = M then there are as many equations as unknowns, and there is a good

chance of solving for a unique solution set of x j s. Analytically, there can fail to

be a unique solution if one or more of the M equations is a linear combination of

the others, a condition called row degeneracy, or if all equations contain certain

variables only in exactly the same linear combination, called column degeneracy.

(For square matrices, a row degeneracy implies a column degeneracy, and vice

versa.) A set of equations that is degenerate is called singular. We will consider

singular matrices in some detail in 2.6.

Numerically, at least two additional things can go wrong:

While not exact linear combinations of each other, some of the equations

may be so close to linearly dependent that roundoff errors in the machine

render them linearly dependent at some stage in the solution process. In

this case your numerical procedure will fail, and it can tell you that it

has failed.

22

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

Chapter 2. Solution of Linear

Algebraic Equations

2.0 Introduction

23

Much of the sophistication of complicated linear equation-solving packages

is devoted to the detection and/or correction of these two pathologies. As you

work with large linear sets of equations, you will develop a feeling for when such

sophistication is needed. It is difficult to give any firm guidelines, since there is no

such thing as a typical linear problem. But here is a rough idea: Linear sets with

N as large as 20 or 50 can be routinely solved in single precision (32 bit floating

representations) without resorting to sophisticated methods, if the equations are not

close to singular. With double precision (60 or 64 bits), this number can readily

be extended to N as large as several hundred, after which point the limiting factor

is generally machine time, not accuracy.

Even larger linear sets, N in the thousands or greater, can be solved when the

coefficients are sparse (that is, mostly zero), by methods that take advantage of the

sparseness. We discuss this further in 2.7.

At the other end of the spectrum, one seems just as often to encounter linear

problems which, by their underlying nature, are close to singular. In this case, you

might need to resort to sophisticated methods even for the case of N = 10 (though

rarely for N = 5). Singular value decomposition (2.6) is a technique that can

sometimes turn singular problems into nonsingular ones, in which case additional

sophistication becomes unnecessary.

Matrices

Equation (2.0.1) can be written in matrix form as

Ax=b

(2.0.2)

Here the raised dot denotes matrix multiplication, A is the matrix of coefficients, and

b is the right-hand side written as a column vector,

a11 a12 . . . a1N

b1

a22 . . . a2N

a

b

A = 21

b= 2

(2.0.3)

aM1 aM2 . . . aMN

bM

By convention, the first index on an element a ij denotes its row, the second

index its column. A computer will store the matrix A as a two-dimensional array.

However, computer memory is numbered sequentially by its address, and so is

intrinsically one-dimensional. Therefore the two-dimensional array A will, at the

hardware level, either be stored by columns in the order

a11 , a21 , . . . , aM1 , a12 , a22 , . . . , aM2 , . . . , a1N , a2N , . . . aMN

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

Accumulated roundoff errors in the solution process can swamp the true

solution. This problem particularly emerges if N is too large. The

numerical procedure does not fail algorithmically. However, it returns a

set of xs that are wrong, as can be discovered by direct substitution back

into the original equations. The closer a set of equations is to being singular,

the more likely this is to happen, since increasingly close cancellations

will occur during the solution. In fact, the preceding item can be viewed

as the special case where the loss of significance is unfortunately total.

24

Chapter 2.

Solution of Linear Algebraic Equations

np

n

5

6

2

m

3

7

mp

3

18

10

38

34

30

37

33

25

29

36

32

28

20

24

31

27

23

19

15

2

14

26

22

1

9

17

13

21

8

4

16

12

1

11

39

35

40

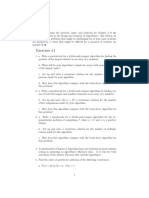

Figure 2.0.1. A matrix of logical dimension m by n is stored in an array of physical dimension mp by np.

Locations marked by x contain extraneous information which may be left over from some previous use of

the physical array. Circled numbers show the actual ordering of the array in computer memory, not usually

relevant to the programmer. Note, however, that the logical array does not occupy consecutive memory

locations. To locate an (i,j) element correctly, a subroutine must be told mp and np, not just i and j.

or else stored by rows in the order

a11 , a12 , . . . , a1N , a21 , a22 , . . . , a2N , . . . , aM1 , aM2 , . . . aMN

FORTRAN always stores by columns, and user programs are generally allowed

to exploit this fact to their advantage. By contrast, C, Pascal, and other languages

generally store by rows. Note one confusing point in the terminology, that a matrix

which is stored by columns (as in FORTRAN) has its row (i.e., first) index changing

most rapidly as one goes linearly through memory, the opposite of a cars odometer!

For most purposes you dont need to know what the order of storage is, since

you reference an element by its two-dimensional address: a 34 = a(3,4). It is,

however, essential that you understand the difference between an arrays physical

dimensions and its logical dimensions. When you pass an array to a subroutine,

you must, in general, tell the subroutine both of these dimensions. The distinction

between them is this: It may happen that you have a 4 4 matrix stored in an array

dimensioned as 10 10. This occurs most frequently in practice when you have

dimensioned to the largest expected value of N , but are at the moment considering

a value of N smaller than that largest possible one. In the example posed, the 16

elements of the matrix do not occupy 16 consecutive memory locations. Rather they

are spread out among the 100 dimensioned locations of the array as if the whole

10 10 matrix were filled. Figure 2.0.1 shows an additional example.

If you have a subroutine to invert a matrix, its call might typically look like this:

call matinv(a,ai,n,np)

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

2.0 Introduction

25

Tasks of Computational Linear Algebra

We will consider the following tasks as falling in the general purview of this

chapter:

Solution of the matrix equation Ax = b for an unknown vector x, where A

is a square matrix of coefficients, raised dot denotes matrix multiplication,

and b is a known right-hand side vector (2.12.10).

Solution of more than one matrix equation A x j = bj , for a set of vectors

xj , j = 1, 2, . . . , each corresponding to a different, known right-hand side

vector bj . In this task the key simplification is that the matrix A is held

constant, while the right-hand sides, the bs, are changed (2.12.10).

Calculation of the matrix A 1 which is the matrix inverse of a square matrix

A, i.e., A A1 = A1 A = 1, where 1 is the identity matrix (all zeros

except for ones on the diagonal). This task is equivalent, for an N N

matrix A, to the previous task with N different b j s (j = 1, 2, . . . , N ),

namely the unit vectors (b j = all zero elements except for 1 in the jth

component). The corresponding xs are then the columns of the matrix

inverse of A (2.1 and 2.3).

Calculation of the determinant of a square matrix A (2.3).

If M < N , or if M = N but the equations are degenerate, then there

are effectively fewer equations than unknowns. In this case there can be either no

solution, or else more than one solution vector x. In the latter event, the solution space

consists of a particular solution x p added to any linear combination of (typically)

N M vectors (which are said to be in the nullspace of the matrix A). The task

of finding the solution space of A involves

Singular value decomposition of a matrix A.

This subject is treated in 2.6.

In the opposite case there are more equations than unknowns, M > N . When

this occurs there is, in general, no solution vector x to equation (2.0.1), and the

set of equations is said to be overdetermined. It happens frequently, however, that

the best compromise solution is sought, the one that comes closest to satisfying

all equations simultaneously. If closeness is defined in the least-squares sense, i.e.,

that the sum of the squares of the differences between the left- and right-hand sides

of equation (2.0.1) be minimized, then the overdetermined linear problem reduces to a

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

Here the subroutine has to be told both the logical size of the matrix that

you want to invert (here n = 4), and the physical size of the array in which it is

stored (here np = 10).

This seems like a trivial point, and we are sorry to belabor it. But it turns out that

most reported failures of standard linear equation and matrix manipulation packages

are due to user errors in passing inappropriate logical or physical dimensions!

26

Chapter 2.

Solution of Linear Algebraic Equations

(usually) solvable linear problem, called the

Linear least-squares problem.

The reduced set of equations to be solved can be written as the N N set of equations

(2.0.4)

where AT denotes the transpose of the matrix A. Equations (2.0.4) are called the

normal equations of the linear least-squares problem. There is a close connection

between singular value decomposition and the linear least-squares problem, and the

latter is also discussed in 2.6. You should be warned that direct solution of the

normal equations (2.0.4) is not generally the best way to find least-squares solutions.

Some other topics in this chapter include

Iterative improvement of a solution (2.5)

Various special forms: symmetric positive-definite (2.9), tridiagonal

(2.4), band diagonal (2.4), Toeplitz (2.8), Vandermonde (2.8), sparse

(2.7)

Strassens fast matrix inversion (2.11).

Standard Subroutine Packages

We cannot hope, in this chapter or in this book, to tell you everything there is

to know about the tasks that have been defined above. In many cases you will have

no alternative but to use sophisticated black-box program packages. Several good

ones are available. LINPACK was developed at Argonne National Laboratories and

deserves particular mention because it is published, documented, and available for

free use. A successor to LINPACK, LAPACK, is now becoming available. Packages

available commercially include those in the IMSL and NAG libraries.

You should keep in mind that the sophisticated packages are designed with very

large linear systems in mind. They therefore go to great effort to minimize not only

the number of operations, but also the required storage. Routines for the various

tasks are usually provided in several versions, corresponding to several possible

simplifications in the form of the input coefficient matrix: symmetric, triangular,

banded, positive definite, etc. If you have a large matrix in one of these forms,

you should certainly take advantage of the increased efficiency provided by these

different routines, and not just use the form provided for general matrices.

There is also a great watershed dividing routines that are direct (i.e., execute

in a predictable number of operations) from routines that are iterative (i.e., attempt

to converge to the desired answer in however many steps are necessary). Iterative

methods become preferable when the battle against loss of significance is in danger

of being lost, either due to large N or because the problem is close to singular. We

will treat iterative methods only incompletely in this book, in 2.7 and in Chapters

18 and 19. These methods are important, but mostly beyond our scope. We will,

however, discuss in detail a technique which is on the borderline between direct

and iterative methods, namely the iterative improvement of a solution that has been

obtained by direct methods (2.5).

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

(AT A) x = (AT b)

2.1 Gauss-Jordan Elimination

27

CITED REFERENCES AND FURTHER READING:

Golub, G.H., and Van Loan, C.F. 1989, Matrix Computations, 2nd ed. (Baltimore: Johns Hopkins

University Press).

Dongarra, J.J., et al. 1979, LINPACK Users Guide (Philadelphia: S.I.A.M.).

Coleman, T.F., and Van Loan, C. 1988, Handbook for Matrix Computations (Philadelphia: S.I.A.M.).

Forsythe, G.E., and Moler, C.B. 1967, Computer Solution of Linear Algebraic Systems (Englewood Cliffs, NJ: Prentice-Hall).

Wilkinson, J.H., and Reinsch, C. 1971, Linear Algebra, vol. II of Handbook for Automatic Computation (New York: Springer-Verlag).

Westlake, J.R. 1968, A Handbook of Numerical Matrix Inversion and Solution of Linear Equations

(New York: Wiley).

Johnson, L.W., and Riess, R.D. 1982, Numerical Analysis, 2nd ed. (Reading, MA: AddisonWesley), Chapter 2.

Ralston, A., and Rabinowitz, P. 1978, A First Course in Numerical Analysis, 2nd ed. (New York:

McGraw-Hill), Chapter 9.

2.1 Gauss-Jordan Elimination

For inverting a matrix, Gauss-Jordan elimination is about as efficient as any

other method. For solving sets of linear equations, Gauss-Jordan elimination

produces both the solution of the equations for one or more right-hand side vectors

b, and also the matrix inverse A 1 . However, its principal weaknesses are (i) that it

requires all the right-hand sides to be stored and manipulated at the same time, and

(ii) that when the inverse matrix is not desired, Gauss-Jordan is three times slower

than the best alternative technique for solving a single linear set (2.3). The methods

principal strength is that it is as stable as any other direct method, perhaps even a

bit more stable when full pivoting is used (see below).

If you come along later with an additional right-hand side vector, you can

multiply it by the inverse matrix, of course. This does give an answer, but one that is

quite susceptible to roundoff error, not nearly as good as if the new vector had been

included with the set of right-hand side vectors in the first instance.

For these reasons, Gauss-Jordan elimination should usually not be your method

of first choice, either for solving linear equations or for matrix inversion. The

decomposition methods in 2.3 are better. Why do we give you Gauss-Jordan at all?

Because it is straightforward, understandable, solid as a rock, and an exceptionally

good psychological backup for those times that something is going wrong and you

think it might be your linear-equation solver.

Some people believe that the backup is more than psychological, that GaussJordan elimination is an independent numerical method. This turns out to be

mostly myth. Except for the relatively minor differences in pivoting, described

below, the actual sequence of operations performed in Gauss-Jordan elimination is

very closely related to that performed by the routines in the next two sections.

Sample page from NUMERICAL RECIPES IN FORTRAN 77: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43064-X)

Copyright (C) 1986-1992 by Cambridge University Press. Programs Copyright (C) 1986-1992 by Numerical Recipes Software.

Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machinereadable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website

http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

Gill, P.E., Murray, W., and Wright, M.H. 1991, Numerical Linear Algebra and Optimization, vol. 1

(Redwood City, CA: Addison-Wesley).

Stoer, J., and Bulirsch, R. 1980, Introduction to Numerical Analysis (New York: Springer-Verlag),

Chapter 4.

Вам также может понравиться

- TheHackersHardwareToolkit PDFДокумент130 страницTheHackersHardwareToolkit PDFMega Met X100% (1)

- 6th Central Pay Commission Salary CalculatorДокумент15 страниц6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- 6th Central Pay Commission Salary CalculatorДокумент15 страниц6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- Connection DesignДокумент33 страницыConnection Designjesus curielОценок пока нет

- Foundation Repair ProceeduresДокумент128 страницFoundation Repair ProceeduresAnonymous IwqK1NlОценок пока нет

- Bhagvad Geeta For Common Man PDFДокумент172 страницыBhagvad Geeta For Common Man PDFSayuj Nath100% (1)

- Connections: Best Tips of The 21st CenturyДокумент4 страницыConnections: Best Tips of The 21st Centurysymon ellimac100% (1)

- INDIAN Steel TableДокумент8 страницINDIAN Steel Tablezaveeq80% (5)

- Bolted Connections: Teaching Resources © IIT Madras, SERC Madras, Anna Univ., INSDAG CalcuttaДокумент26 страницBolted Connections: Teaching Resources © IIT Madras, SERC Madras, Anna Univ., INSDAG CalcuttaAnonymous IwqK1NlОценок пока нет

- Antilock Brakes PDFДокумент117 страницAntilock Brakes PDFZak Carrigan100% (1)

- NEC Article 250Документ42 страницыNEC Article 250unknown_3100% (1)

- Handbook For Silo Foundation Investigation and DesignДокумент30 страницHandbook For Silo Foundation Investigation and DesignAnonymous IwqK1Nl100% (1)

- Cambridge Mathematics AS and A Level Course: Second EditionОт EverandCambridge Mathematics AS and A Level Course: Second EditionОценок пока нет

- PCC3.3 Diagram: Download NowДокумент5 страницPCC3.3 Diagram: Download NowHillol DuttaguptaОценок пока нет

- SR-50S - W (Cont. Climare) Manual de Peças TK 51281-0.0Документ57 страницSR-50S - W (Cont. Climare) Manual de Peças TK 51281-0.0Электроника Eletroeletrônica100% (1)

- Leadthrough Programming and Motion InterpolationДокумент18 страницLeadthrough Programming and Motion Interpolationyashbhoyar60% (5)

- Common Misunderstandings About Gasket and Bolted Connection InteractionsДокумент9 страницCommon Misunderstandings About Gasket and Bolted Connection InteractionsJuan KirozОценок пока нет

- Fast matrix multiplication with Strassen's algorithmДокумент4 страницыFast matrix multiplication with Strassen's algorithmMahesh YadavОценок пока нет

- Norsok Z-002-DP (Norwegian Coding System)Документ38 страницNorsok Z-002-DP (Norwegian Coding System)vcilibertiОценок пока нет

- EMV v4.2 Book 4Документ155 страницEMV v4.2 Book 4systemcoding100% (1)

- Understand The Basics of Centrifugal Pump Operations (CEP)Документ5 страницUnderstand The Basics of Centrifugal Pump Operations (CEP)Ari Firmansyah100% (1)

- Solution of Linear Algebraic EquationsДокумент5 страницSolution of Linear Algebraic EquationssamОценок пока нет

- Solution of Linear Algebraic EquationsДокумент5 страницSolution of Linear Algebraic EquationsAmit PandeyОценок пока нет

- Matrix Computations, Marko HuhtanenДокумент63 страницыMatrix Computations, Marko HuhtanenHoussam MenhourОценок пока нет

- Iterative Matrix ComputationДокумент55 страницIterative Matrix Computationnicomh2Оценок пока нет

- Determinants and EigenvalueДокумент40 страницDeterminants and EigenvalueAОценок пока нет

- Instrumentation & Control Systems Lab MEEN 4250 Lab 01 ObjectiveДокумент11 страницInstrumentation & Control Systems Lab MEEN 4250 Lab 01 ObjectiveUsama TariqОценок пока нет

- 3.5 Coefficients of The Interpolating Polynomial: Interpolation and ExtrapolationДокумент4 страницы3.5 Coefficients of The Interpolating Polynomial: Interpolation and ExtrapolationVinay GuptaОценок пока нет

- CS323: Lecture #1: Types of ErrorsДокумент4 страницыCS323: Lecture #1: Types of ErrorsMuhammad chaudharyОценок пока нет

- Matrix Mult 09Документ12 страницMatrix Mult 09Pranalistia Tiara PutriОценок пока нет

- 316 Ac 2Документ2 страницы316 Ac 2watewafferОценок пока нет

- Random Matrix TheoryДокумент65 страницRandom Matrix TheoryHaider AlSabbaghОценок пока нет

- (Turner) - Applied Scientific Computing - Chap - 02Документ19 страниц(Turner) - Applied Scientific Computing - Chap - 02DanielaSofiaRubianoBlancoОценок пока нет

- Control Engineering Lab-1 Introduction To MATLAB Its Functions and ApplicationsДокумент7 страницControl Engineering Lab-1 Introduction To MATLAB Its Functions and ApplicationsAtif ImtiazОценок пока нет

- CME 323: Strassen's Matrix Multiplication AlgorithmДокумент8 страницCME 323: Strassen's Matrix Multiplication AlgorithmShubh YadavОценок пока нет

- 3.5 Coefficients of The Interpolating Polynomial: Interpolation and ExtrapolationДокумент4 страницы3.5 Coefficients of The Interpolating Polynomial: Interpolation and Extrapolationsalem nourОценок пока нет

- 7.6 Simple Monte Carlo Integration: Random NumbersДокумент6 страниц7.6 Simple Monte Carlo Integration: Random NumbersVinay GuptaОценок пока нет

- Matlab 2Документ40 страницMatlab 2Husam AL-QadasiОценок пока нет

- Trends in Numerical Linear AlgebraДокумент19 страницTrends in Numerical Linear Algebramanasdutta3495Оценок пока нет

- MIR2012 Lec1Документ37 страницMIR2012 Lec1yeesuenОценок пока нет

- Blocked Matrix MultiplyДокумент6 страницBlocked Matrix MultiplyDeivid CArpioОценок пока нет

- MATH1080Документ695 страницMATH1080AaronJay JimankgeОценок пока нет

- Computing The Rank and A Small Nullspace Basis of A Polynomial MatrixДокумент8 страницComputing The Rank and A Small Nullspace Basis of A Polynomial MatrixMeng HuiОценок пока нет

- S Ccs AnswersДокумент192 страницыS Ccs AnswersnoshinОценок пока нет

- Year 1 MT Vacation Work, Vectors and MatricesДокумент5 страницYear 1 MT Vacation Work, Vectors and MatricesRoy VeseyОценок пока нет

- Eliminacion Gaussiana-KincaidДокумент8 страницEliminacion Gaussiana-KincaidfrankОценок пока нет

- Solu 4Документ44 страницыSolu 4Eric FansiОценок пока нет

- UNIT 3 Dimentionality Problems and Nonparametic Pattern ClassificationДокумент18 страницUNIT 3 Dimentionality Problems and Nonparametic Pattern ClassificationKalyanОценок пока нет

- Quadratic SieveДокумент7 страницQuadratic SieveSUBODH VERMA 17BCE1241Оценок пока нет

- MIT Numerical Fluid Mechanics Problem Set 1Документ7 страницMIT Numerical Fluid Mechanics Problem Set 1Aman JalanОценок пока нет

- Dan Um Jay AДокумент561 страницаDan Um Jay AsupriyakajjidoniОценок пока нет

- LinearAlgebra MatlabДокумент12 страницLinearAlgebra MatlabAndres Felipe CisfОценок пока нет

- AMS 147 Computational Methods and Applications Exercise #9Документ2 страницыAMS 147 Computational Methods and Applications Exercise #9WОценок пока нет

- Chapter 5 - Linear ProgrammingДокумент8 страницChapter 5 - Linear ProgrammingKhabib moginiОценок пока нет

- MATLAB Lecture 3. Polynomials and Curve Fitting PDFДокумент3 страницыMATLAB Lecture 3. Polynomials and Curve Fitting PDFTan HuynhОценок пока нет

- Numerical Methods HW1: Nonlinear Equation SolutionsДокумент2 страницыNumerical Methods HW1: Nonlinear Equation SolutionsShafayet A SiddiqueОценок пока нет

- Tutorial 02Документ1 страницаTutorial 02Tussank GuptaОценок пока нет

- Math 243M, Numerical Linear Algebra Lecture NotesДокумент71 страницаMath 243M, Numerical Linear Algebra Lecture NotesRonaldОценок пока нет

- Experiment 2 ExpressionsДокумент4 страницыExperiment 2 Expressionskurddoski28Оценок пока нет

- Numerical Analysis 1Документ81 страницаNumerical Analysis 1tinotenda nziramasangaОценок пока нет

- Matrix Analysis Chapter on Linear Algebra and Eigenvalue DecompositionДокумент17 страницMatrix Analysis Chapter on Linear Algebra and Eigenvalue DecompositionIoana CîlniceanuОценок пока нет

- More On Converting Numbers To The Double-Base Number System: Research Report LIRMM-04031Документ20 страницMore On Converting Numbers To The Double-Base Number System: Research Report LIRMM-04031Clouds DarkОценок пока нет

- 2.5 Iterative Improvement of A Solution To Linear EquationsДокумент5 страниц2.5 Iterative Improvement of A Solution To Linear EquationsVinay GuptaОценок пока нет

- Problem Set 5Документ4 страницыProblem Set 5Yash VarunОценок пока нет

- El Moufatich PapemjhjlklkllllllllrДокумент9 страницEl Moufatich PapemjhjlklkllllllllrNeerav AroraОценок пока нет

- 2 Homework Set No. 2: Problem 1Документ3 страницы2 Homework Set No. 2: Problem 1kelbmutsОценок пока нет

- A Deterministic Strongly Polynomial Algorithm For Matrix Scaling and Approximate PermanentsДокумент23 страницыA Deterministic Strongly Polynomial Algorithm For Matrix Scaling and Approximate PermanentsShubhamParasharОценок пока нет

- Manual Experiment 1 Part - 2Документ3 страницыManual Experiment 1 Part - 2Love IslifeОценок пока нет

- LFD 2005 Nearest NeighbourДокумент6 страницLFD 2005 Nearest NeighbourAnahi SánchezОценок пока нет

- Problem Set 3: Notes: Please Provide A Hard-Copy (Handwritten/printed) of Your Solutions. Please Do Not Print Your CodesДокумент5 страницProblem Set 3: Notes: Please Provide A Hard-Copy (Handwritten/printed) of Your Solutions. Please Do Not Print Your CodesAman JalanОценок пока нет

- 16E Application of Matrices To Simultaneous Equations: Worked Example 13Документ11 страниц16E Application of Matrices To Simultaneous Equations: Worked Example 13MuhammadОценок пока нет

- Remezr2 ArxivДокумент30 страницRemezr2 ArxivFlorinОценок пока нет

- Analysis of Algorithms: C RecurrencesДокумент5 страницAnalysis of Algorithms: C RecurrencesFathimaОценок пока нет

- Clicker Question Bank For Numerical Analysis (Version 1.0 - May 14, 2020)Документ91 страницаClicker Question Bank For Numerical Analysis (Version 1.0 - May 14, 2020)Mudasar AbbasОценок пока нет

- Solutions Manual Scientific ComputingДокумент192 страницыSolutions Manual Scientific ComputingSanjeev Gupta0% (1)

- MIT Numerical Fluid Mechanics Problem SetДокумент5 страницMIT Numerical Fluid Mechanics Problem SetAman JalanОценок пока нет

- 17.5 Automated Allocation of Mesh PointsДокумент2 страницы17.5 Automated Allocation of Mesh PointsVinay GuptaОценок пока нет

- 1.3 Error, Accuracy, and Stability: PreliminariesДокумент4 страницы1.3 Error, Accuracy, and Stability: PreliminariessamОценок пока нет

- Elastic Half Space Planes TrainДокумент1 страницаElastic Half Space Planes TrainAnonymous IwqK1NlОценок пока нет

- Floor LoadДокумент7 страницFloor LoadAnonymous IwqK1NlОценок пока нет

- What Is A Tsunami Fact SheetДокумент7 страницWhat Is A Tsunami Fact SheetAnonymous IwqK1NlОценок пока нет

- Marbonite DC 60x60cm & 100x100cm Vijaywada Catalogue Aw - June 2017 LowressДокумент16 страницMarbonite DC 60x60cm & 100x100cm Vijaywada Catalogue Aw - June 2017 LowressAnonymous IwqK1NlОценок пока нет

- Geotechnical Testign Methods II - ASДокумент24 страницыGeotechnical Testign Methods II - ASelcivilengОценок пока нет

- Floor LoadДокумент2 страницыFloor LoadAnonymous IwqK1NlОценок пока нет

- Behind Stark Political Divisions, A Mor..Документ3 страницыBehind Stark Political Divisions, A Mor..Anonymous IwqK1NlОценок пока нет

- Wave DemoДокумент18 страницWave DemoAnonymous IwqK1NlОценок пока нет

- Buckling of ColumnДокумент29 страницBuckling of ColumnIsczuОценок пока нет

- National Building Code of India 2016Документ5 страницNational Building Code of India 2016Anonymous IwqK1NlОценок пока нет

- World's 10 Most Powerful ArmiesДокумент10 страницWorld's 10 Most Powerful ArmiesAnonymous IwqK1Nl100% (1)

- 24 Sample ChapterДокумент14 страниц24 Sample ChapterRomyMohanОценок пока нет

- Conbextra HRДокумент4 страницыConbextra HRAnonymous IwqK1NlОценок пока нет

- Lecture - 04 Bolted ConnectionsДокумент57 страницLecture - 04 Bolted ConnectionsAnonymous IwqK1NlОценок пока нет

- Eng GrammarДокумент10 страницEng GrammarAnonymous IwqK1NlОценок пока нет

- Industrial Chimney Design and Construction SeminarДокумент31 страницаIndustrial Chimney Design and Construction SeminarcteranscribdОценок пока нет

- Alucobond PlusДокумент4 страницыAlucobond PlusAnonymous IwqK1NlОценок пока нет

- Crane Operation Manual PDFДокумент23 страницыCrane Operation Manual PDFcippolippo123100% (1)

- The House: Materials May Be Reproduced For Educational PurposesДокумент1 страницаThe House: Materials May Be Reproduced For Educational PurposesdyanaplusОценок пока нет

- Troubleshooting Verity PDFДокумент3 страницыTroubleshooting Verity PDFrahul_rajgriharОценок пока нет

- Boyer - Moore - Performance ComparisonДокумент12 страницBoyer - Moore - Performance ComparisonUriVermundoОценок пока нет

- Computer GraphicsДокумент66 страницComputer GraphicsGuruKPO100% (3)

- Soliphant M With Electronic Insert FEM58: Functional Safety ManualДокумент12 страницSoliphant M With Electronic Insert FEM58: Functional Safety ManualTreeОценок пока нет

- Types of I/O Commands & Techniques in Computer ArchitectureДокумент9 страницTypes of I/O Commands & Techniques in Computer ArchitectureANUJ TIWARIОценок пока нет

- IMS Information Gateway - Issue 1Документ9 страницIMS Information Gateway - Issue 1Avianto MarindaОценок пока нет

- Technology in Action Complete 9th Edition Evans Solutions ManualДокумент14 страницTechnology in Action Complete 9th Edition Evans Solutions ManualMrsKellyHammondqrejw100% (13)

- Exercises Chapter1.1Документ7 страницExercises Chapter1.1ponns80% (5)

- CCNA Lab Equipment List PDFДокумент2 страницыCCNA Lab Equipment List PDFJalal MapkarОценок пока нет

- Digigram VX222v2 BrochureДокумент2 страницыDigigram VX222v2 BrochureDr. Emmett Lathrop "Doc" BrownОценок пока нет

- 03 Data Input OutputДокумент43 страницы03 Data Input OutputAlexandra Gabriela GrecuОценок пока нет

- Linux QsДокумент7 страницLinux QsSayantan DasОценок пока нет

- Documents - Tips Isc Class Xii Computer Science Project Java ProgramsДокумент83 страницыDocuments - Tips Isc Class Xii Computer Science Project Java ProgramsSheerinОценок пока нет

- Interfacing S1 - 568 S1 - 1068 To PowerDB-En - V01Документ6 страницInterfacing S1 - 568 S1 - 1068 To PowerDB-En - V01MIRCEA MIHAIОценок пока нет

- Volvo InstructionsДокумент4 страницыVolvo InstructionsRonaldo Adriano WojcikiОценок пока нет

- Unlock Huawei E220 Hsdpa ModemДокумент6 страницUnlock Huawei E220 Hsdpa Modemjennifer_montablanОценок пока нет

- Interrupt Processing: 1. What Is An Interrupt?Документ3 страницыInterrupt Processing: 1. What Is An Interrupt?Sazid AnsariОценок пока нет

- TAB-P1048IPS Spec SheetДокумент1 страницаTAB-P1048IPS Spec SheetDanko VranicОценок пока нет

- MCSE-011: Time: 3 Hours Maximum Marks: 100Документ3 страницыMCSE-011: Time: 3 Hours Maximum Marks: 100priyaОценок пока нет

- Nasir Ali Bhatti 5120 Bscs C EveningДокумент4 страницыNasir Ali Bhatti 5120 Bscs C EveningSadeed AhmadОценок пока нет

- Instructions AND Parts Manual: Universal Bug-O-MaticДокумент31 страницаInstructions AND Parts Manual: Universal Bug-O-MaticrobyliviuОценок пока нет

- 1-DBS Hardware IntroductionДокумент10 страниц1-DBS Hardware IntroductionShahzad AhmedОценок пока нет