Академический Документы

Профессиональный Документы

Культура Документы

Huffman Coding

Загружено:

aramacataАвторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Huffman Coding

Загружено:

aramacataАвторское право:

Доступные форматы

3/1/2016

Huffman Coding

Huffman Coding

The Huffman coding procedure finds the optimum (least rate) uniquely decodable, variable length

entropy code associated with a set of events given their probabilities of occurrence. The procedure is

simple enough that we can present it here.

The Huffman coding method is based on the construction of what is known as a binary tree. The path

from the top or root of this tree to a particular event will determine the code group we associate with that

event.

Suppose, for example, that we have six events with names and probabilities given in the table below.

Event Name Probability

A

0.30

B

0.30

C

0.13

D

0.12

E

0.10

F

0.05

Our first step is to order these from highest (on the left) to lowest (on the right) probability as shown in

the following figure, writing out next to each event its probability for since this value will drive the

process of constructing the code.

Figure 7.6: Preparing for Huffman code construction. List all events in descending order of probability.

Now we perform a construction process in which we will pair events to form a new single combined

event which will replace the pair members. This step will be repeated many times, until there are no more

pairs left.

First we find the two events with least combined probability. The first time we do this, the answer will

always be the two right hand events. We connect them together, as shown in Figure 7.2 calling this a

combined event (EF in this case) and noting its probability (which is the sum of those of E and F in this

case.) We also place a 0 next to the left hand branch and a 1 next to the right hand branch of the

connection of the pair as shown in the figure. The 0 and 1 make up part of the code we are constructing

for these elements.

Figure: Preparing for Huffman code construction. List all events in descending order of probability.

https://www.math.upenn.edu/~deturck/m170/wk7/lecture/huffman/huffman.html

1/3

3/1/2016

Huffman Coding

Now we repeat the last step, dealing only with the remaining events and the combined event. This time

combining C and D creates a combined event with less probability than combining any others.

Again we repeat the process. This time, combining the combined events CD and EF create the new

combined event with least probability.

The next time around the best combination is of A and B.

Finally there is only one pair left, which we simply combine.

https://www.math.upenn.edu/~deturck/m170/wk7/lecture/huffman/huffman.html

2/3

3/1/2016

Huffman Coding

Having finished our connection tree, we are ready to read off of the diagram the codes that we will

associated with each of the original events. To obtain the code, we start at the top level of the tree and

make our way to the event we wish to code. The series of 0's and 1's we encounter along the way on the

branches of the tree comprise our code. Doing so for each event in this case yields the following result.

Event Name Probability Code Length

A

0.3

00 2

B

0.3

01 2

C

0.13

100 3

D

0.12

101 3

E

0.1

110 3

F

0.05

111 3

If we sum the products of the event probabilities and the code lengths for this case we obtain an average

bit rate of 2.4 bits per event. If we compute the true minimum bit rate, that is the information rate, of

these events as we did with the previous example, we obtain 2.34 bits.

Suppose that we had been originally planning to code our events originally as all 3 bit codes in a fixed

length code scheme. Then, if we code a document which is long enough so that we obtain the average

promised by this new scheme instead, we will find that we will obtain a compression ratio over the

original scheme of 2.4/3 = 80% whereas the ultimate possible compression ratio is 2.34/3 = 78%.

It can be shown that the Huffman code provides the best compression for any communication problem for

a given grouping of the events. In this problem we chose not to group events, but to code them

individually. If we were to create the 36 events we would get by forming pairs of the above events, we

would get substantially closer to the optimum rate suggested by the information rate calculation.

https://www.math.upenn.edu/~deturck/m170/wk7/lecture/huffman/huffman.html

3/3

Вам также может понравиться

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Anaconda With Python 2 On 32-Bit Windows - Anaconda 2.0 DocumentationДокумент2 страницыAnaconda With Python 2 On 32-Bit Windows - Anaconda 2.0 DocumentationedsonОценок пока нет

- All Matlab Codes PDFДокумент44 страницыAll Matlab Codes PDFLeena Rohan Mehta100% (2)

- RimaДокумент42 страницыRimaEbti Salah ArabОценок пока нет

- DSP Lab 13Документ5 страницDSP Lab 13mpssassygirlОценок пока нет

- LDPCДокумент20 страницLDPCKshitija PendkeОценок пока нет

- CH3 SourcecodingДокумент2 страницыCH3 SourcecodingRoshankumar B. BhamareОценок пока нет

- Convolutional CodesДокумент26 страницConvolutional CodesPiyush MittalОценок пока нет

- Hashing For Message Authentication by Avi KakДокумент52 страницыHashing For Message Authentication by Avi KakKumara PrathipatiОценок пока нет

- Jpeg-Ls: Akshit Chheda (1891004) Jaladhi Sonagara (1991106)Документ6 страницJpeg-Ls: Akshit Chheda (1891004) Jaladhi Sonagara (1991106)jaldhi sonagaraОценок пока нет

- SR VW MQB-FL-Touch ICEV M0055 014966-19-BQ 20191226 enДокумент23 страницыSR VW MQB-FL-Touch ICEV M0055 014966-19-BQ 20191226 enalekОценок пока нет

- Optimization Coverage by Adjustment RS Pa PBДокумент6 страницOptimization Coverage by Adjustment RS Pa PBodhi iskandarОценок пока нет

- Broadcast ChannelsДокумент94 страницыBroadcast Channelsİsmail AkkaşОценок пока нет

- Information Theory and Logical Analysis in The Tractatus Logico-PhilosophicusДокумент32 страницыInformation Theory and Logical Analysis in The Tractatus Logico-PhilosophicusFelipe LopesОценок пока нет

- Weick's Model and Its Applications To Health Promotion and CommunicationДокумент10 страницWeick's Model and Its Applications To Health Promotion and Communicationrichmond_austria7635Оценок пока нет

- Channel Coding: TM355: Communication TechnologiesДокумент58 страницChannel Coding: TM355: Communication TechnologiesHusseinJdeedОценок пока нет

- Data Center TCP (DCTCP) : Stanford University Microsod ResearchДокумент25 страницData Center TCP (DCTCP) : Stanford University Microsod ResearchJulian MorenoОценок пока нет

- ECE4007 Information-Theory-And-Coding ETH 1 AC40Документ3 страницыECE4007 Information-Theory-And-Coding ETH 1 AC40harshitОценок пока нет

- Erlang B TableДокумент2 страницыErlang B TablecgakalyaОценок пока нет

- An Introduction To Information Theory: Adrish BanerjeeДокумент16 страницAn Introduction To Information Theory: Adrish BanerjeeSushant SharmaОценок пока нет

- Abuse Workshop WS5Документ72 страницыAbuse Workshop WS5krumkaОценок пока нет

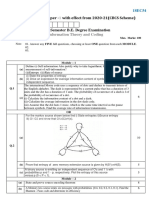

- Model Question Paper - With Effect From 2020-21 (CBCS Scheme)Документ5 страницModel Question Paper - With Effect From 2020-21 (CBCS Scheme)Hemanth KumarОценок пока нет

- ECT305: Analog and Digital Communication Module 2, Part 3: DR - Susan Dominic Assistant Professor Dept. of ECE RsetДокумент21 страницаECT305: Analog and Digital Communication Module 2, Part 3: DR - Susan Dominic Assistant Professor Dept. of ECE RsetanuОценок пока нет

- Flowing The Information From Shannon To Fisher TowДокумент7 страницFlowing The Information From Shannon To Fisher TowOsama AL TayarОценок пока нет

- Shanon Fano1586521731 PDFДокумент6 страницShanon Fano1586521731 PDFanil kumarОценок пока нет

- Disk Recovery ReportДокумент54 страницыDisk Recovery ReportjuanluzonОценок пока нет

- The Invention of Turbo Codes The Ten-Year-Old Turbo Codes Are Entering Into ServiceДокумент8 страницThe Invention of Turbo Codes The Ten-Year-Old Turbo Codes Are Entering Into ServicemalusОценок пока нет

- Aim: To Implement Huffman Coding Using MATLAB Experimental Requirements: PC Loaded With MATLAB Software TheoryДокумент5 страницAim: To Implement Huffman Coding Using MATLAB Experimental Requirements: PC Loaded With MATLAB Software TheorySugumar Sar DuraiОценок пока нет

- 3GPP TS 38.212Документ153 страницы3GPP TS 38.212vikram_908116002Оценок пока нет

- 15EC54Документ2 страницы15EC54Arnab HazraОценок пока нет

- Tunnels in Hash Functions: MD5 Collisions Within A Minute: V.klima@volny - CZДокумент17 страницTunnels in Hash Functions: MD5 Collisions Within A Minute: V.klima@volny - CZranshul95Оценок пока нет