This article was downloaded by: [Ohio State University Libraries]

On: 19 June 2012, At: 05:18

Publisher: Taylor & Francis

Informa Ltd Registered in England and Wales Registered Number: 1072954 Registered office: Mortimer House,

37-41 Mortimer Street, London W1T 3JH, UK

International Journal of Control

Publication details, including instructions for authors and subscription information:

http://www.tandfonline.com/loi/tcon20

Refined instrumental variable methods of recursive time-series analysis Part III. Extensions

PETER YOUNG & ANTHONY JAKEMAN

Centre for Resource and Environmental Studies, Australian National University, Canberra, Australia

Available online: 21 May 2007

To cite this article: PETER YOUNG & ANTHONY JAKEMAN (1980): Refined instrumental variable methods of recursive time-series analysis Part III. Extensions, International Journal of Control, 31:4, 741-764

To link to this article: http://dx.doi.org/10.1080/00207178008961080


Full terms and conditions of use: http://www.tandfonline.com/page/terms-and-conditions

This article may be used for research, teaching, and private study purposes. Any substantial or systematic

reproduction, redistribution, reselling, loan, sub-licensing, systematic supply, or distribution in any form to

anyone is expressly forbidden.

The publisher does not give any warranty express or implied or make any representation that the contents

will be complete or accurate or up to date. The accuracy of any instructions, formulae, and drug doses should

be independently verified with primary sources. The publisher shall not be liable for any loss, actions, claims,

proceedings, demand, or costs or damages whatsoever or howsoever caused arising directly or indirectly in

connection with or arising out of the use of this material.

Refined instrumental variable methods of recursive time-series analysis

Part III. Extensions


PETER YOUNG†‡ and ANTHONY JAKEMAN†

This is the final paper in a series of three concerned with the comprehensive evaluation of the refined instrumental variable (IV) method of recursive time-series analysis. The paper shows how the refined IV procedure can be extended in various important directions and how it can provide the basis for the synthesis of optimal generalized equation error (GEE) algorithms for a wide class of stochastic dynamic systems. The topics discussed include the estimation of parameters in continuous-time differential equation models from continuous or discrete data; the estimation of time-variable parameters in continuous or discrete-time models of dynamic systems; the design of stochastic state reconstruction (Wiener-Kalman) filters directly from data; the estimation of parameters in multi-input, single-output (MISO) transfer function models; the design of simple stochastic approximation (SA) implementations of the refined IV algorithms; and the use of the recursive algorithms in self-adaptive (self-tuning) control.

1. Introduction

In the first two parts of this paper (Young and Jakeman 1979 a, Jakeman and Young 1979 a) we have been concerned with the description and comprehensive evaluation of the refined instrumental variable (IV) approach to time-series analysis for single-input, single-output (SISO) and multivariable dynamic systems described by discrete-time series models. In this, the third and final part of the paper, we consider how the refined IV method can be extended in various directions to handle continuous time-series models and discrete or continuous time-series models with time-variable parameters. We also discuss briefly other extensions, including off-line and on-line adaptive methods of designing state reconstruction (Kalman) filters for stochastic systems; the development of IV estimation procedures for specific time-series models, such as the multiple-input, single-output transfer function model; and, finally, the estimation of parameters in multivariable system models in those situations where the dimension of the observation space is less than that of the model space. For convenience, in all cases except the latter, we shall consider refined IV estimation algorithms with non-symmetric matrix gains. Bearing in mind the results of the first two parts of the paper, however, it is clear that symmetric matrix gain alternatives could be implemented, and it is likely that, at least for reasonable sample sizes, they would perform in a similar manner.

Received 10 August, 1979

† Centre for Resource and Environmental Studies, Australian National University, Canberra, Australia.

‡ Currently Visiting Professor, Control and Management Systems Division, Engineering Department, University of Cambridge.

0020-7179/80/3104 0741 $02.00

© 1980 Taylor & Francis Ltd


2. Continuous-time dynamic systems described by ordinary differential equation models

The refined IV procedure can be applied to both SISO and multivariable continuous time-series models but, for simplicity of exposition, we will describe here only the SISO implementation. The extension of the SISO procedures to the multivariable situation is, however, quite obvious by analogy with the discrete-time case discussed in Part II of the paper (Jakeman and Young 1979 a). Using nomenclature similar to that used previously, the continuous-time SISO model is illustrated in block M (within dotted lines) of Fig. 1 and can be written as

$$
\begin{aligned}
&\text{(i)} && A(s)\,x(t) = B(s)\,u(t)\\
&\text{(ii)} && \xi(t) = \frac{D(s)}{C(s)}\,e(t)\\
&\text{(iii)} && y(t) = x(t) + \xi(t)
\end{aligned}
\tag{1}
$$

where s is the differential operator, i.e. sx(t) = dx(t)/dt (loosely interpreted here as the Laplace operator); A, B, C and D are polynomials in s of the following form,

and e(t) is a continuous-time 'white noise' process. It is well known (e.g. Åström 1970, Jazwinski 1970) that theoretical and analytical difficulties arise from the use of continuous white noise in mathematical models of dynamic systems, particularly in transfer function formulations such as eqn. (1)(ii). In the present context, this difficulty manifests itself as practical problems in the recursive estimation of the parameters of the C and D polynomials that characterize the noise model. For the moment, however, it is convenient to assume a continuous-time model of the form (1)(ii) although, as we shall see, in practice it is necessary to evaluate the noise components of the model in discrete time in order to circumvent these estimation problems.

2.1. Discrete and continuous time recursive algorithms

It is clear that the model (1) is algebraically equivalent to the discrete-time SISO model discussed in the previous parts of this paper. Let us consider, therefore, the situation where we wish to implement the estimation algorithm in discrete time using sampled data from the continuous-time system; we will refer to this as CD (continuous-discrete) analysis (Young 1979 a). Using an approach similar to that used in the previous parts of the paper, it is then possible to obtain estimates of the parameters in the continuous-time model polynomials A and B by minimizing a least squares cost function J of the form

Here y = [y_01, y_02, ..., y_0T]^T and u = [u_01, u_02, ..., u_0T]^T, where the first (zero) subscript on u and y indicates that the variables are, respectively, the basic input and output variables (i.e. the 'zeroth' derivatives of u and y), while the second subscript i = 1, 2, ..., T denotes the sampled values of the variables at time t_i, i.e. y(t_i) and u(t_i).

Figure 1. Refined IV algorithm for continuous-time systems. [Block diagram: system model M with noise input, auxiliary model, adaptive prefilters C(s)/D(s)A(s) (state variable filters), state reconstruction, and the IV algorithm with recursive or iterative update.]

Now, by direct analogy with the analysis of the DD (discrete-discrete) case in Part I, the recursive estimate â of the unknown parameter vector a = [a_1, a_2, ..., a_n, b_0, b_1, ..., b_{n-1}]^T can be obtained from the following discrete-time algorithm,

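$$
\begin{aligned}
&\text{(i)} && \hat{a}_k = \hat{a}_{k-1} - P_{k-1}\hat{x}_k^*\left[\hat{\sigma}^2 + z_k^{*T} P_{k-1}\hat{x}_k^*\right]^{-1}\left\{z_k^{*T}\hat{a}_{k-1} - y_{0k}^*\right\}\\
&\text{or}\\
&\text{(ii)} && \hat{a}_k = \hat{a}_{k-1} - \hat{\sigma}^{-2} P_k \hat{x}_k^*\left\{z_k^{*T}\hat{a}_{k-1} - y_{0k}^*\right\}\\
&\text{and}\\
&\text{(iii)} && P_k = P_{k-1} - P_{k-1}\hat{x}_k^*\left[\hat{\sigma}^2 + z_k^{*T} P_{k-1}\hat{x}_k^*\right]^{-1} z_k^{*T} P_{k-1}
\end{aligned}
\tag{4}
$$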

where

Here σ̂² is an estimate of the variance of e(t); x̂_k is the output of an adaptive 'auxiliary model' as shown in Fig. 1, and the star superscript indicates that the variables are filtered by adaptive 'prefilters' Ĉ/D̂Â, again as shown in Fig. 1. The i = 1, 2, ..., n subscript on the variables within the square brackets in (5) denotes the ith time derivative of the variable, while the k subscript outside the brackets indicates that the enclosed variables are all sampled at the kth sampling instant.
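The recursion (4) is compact enough to sketch directly. The NumPy fragment below is a minimal illustration under stated assumptions, not the authors' implementation: the prefiltered vectors z_k* and x̂_k* are taken as given inputs (their definitions in (5) and the adaptive prefilters are outside the sketch), and the function name `refined_iv_step` is hypothetical. It checks numerically that form (ii) agrees with form (i) once the 1/σ̂² factor is included, and then runs a noise-free toy regression with made-up instruments.

```python
import numpy as np

def refined_iv_step(a_prev, P_prev, z, x_hat, y0, sigma2):
    """One update of eqn (4)(i) and (iii) with a non-symmetric matrix gain.

    a_prev : previous estimate a_{k-1}, shape (m,)
    P_prev : previous matrix P_{k-1}, shape (m, m)
    z      : prefiltered data vector z_k*, shape (m,)
    x_hat  : prefiltered instrumental vector x_k* (auxiliary-model based), shape (m,)
    y0     : prefiltered output sample y_0k*
    sigma2 : current estimate of the variance of e(t)
    """
    Px = P_prev @ x_hat                  # P_{k-1} x_k*
    denom = sigma2 + z @ Px              # scalar sigma^2 + z_k*^T P_{k-1} x_k*
    a_new = a_prev - Px * (z @ a_prev - y0) / denom
    P_new = P_prev - np.outer(Px, z @ P_prev) / denom
    return a_new, P_new

# Form (ii): a_k = a_{k-1} - P_k x_k* {z_k*^T a_{k-1} - y_0k*} / sigma^2 gives the same update.
a_prev = np.array([0.2, -0.1, 0.5])
P_prev = 10.0 * np.eye(3)
z = np.array([0.5, -0.2, 1.0])
x_hat = np.array([0.4, -0.1, 0.9])
a_new, P_new = refined_iv_step(a_prev, P_prev, z, x_hat, y0=0.7, sigma2=0.5)
a_alt = a_prev - P_new @ x_hat * (z @ a_prev - 0.7) / 0.5

# Noise-free sanity check with hypothetical instruments x = z + perturbation:
# the recursion recovers a_true (the P_0 prior has negligible influence).
rng = np.random.default_rng(1)
a_true = np.array([0.5, -0.3, 1.2])
a_hat, P = np.zeros(3), 1e3 * np.eye(3)
for _ in range(200):
    zk = rng.normal(size=3)
    xk = zk + 0.5 * rng.normal(size=3)
    a_hat, P = refined_iv_step(a_hat, P, zk, xk, zk @ a_true, sigma2=1.0)
```

Keeping the gain non-symmetric, as the paper does throughout, means P_k is not a symmetric matrix in general; the `np.outer(Px, z @ P_prev)` term reflects that directly.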


This algorithm closely resembles the IV algorithm suggested some years ago by Young (1969); the only difference lies in the nature of the prefilters. In the earlier algorithm, these were termed 'state variable filters' and were introduced mainly to avoid direct differentiation of noisy signals. In this sense, the function of the present filters is identical: their presence means that it is not the direct derivatives of the variables y(t), x̂(t) and u(t) that are required for estimation but the derivatives of the filtered variables y*(t), x̂*(t) and u*(t). And these filtered derivatives, unlike the direct derivatives, are physically realizable as a by-product of the filtering operation (Young 1964, 1969). Of course, the prefilters here do more than just avoid differentiation of noisy signals; they also provide the mechanism for inducing asymptotic statistical efficiency.

In the present case, the 'optimal' prefilters are defined in terms of estimates of the a priori unknown polynomials A, C and D. It is necessary, therefore, to define some adaptive procedure for synthesizing the prefilters as the estimation proceeds. In the situation where C = D = 1.0, i.e. ξ(t) is white noise, the adaptive synthesis of the prefilters 1/Â is fairly straightforward: both the prefilter and auxiliary model parameters can be updated either recursively or iteratively as shown in Fig. 1, exactly as in the discrete-time model case described in Part I of this paper. When the noise ξ(t) is coloured (i.e. C ≠ 1.0 and/or D ≠ 1.0), however, the situation is not so straightforward: in contrast to the discrete-time model situation, it is not easy to construct a similarly motivated recursive estimator for the continuous-time noise model parameters, since the derivatives of the white noise e(t) do not exist in theory.

While it may be possible to solve this noise estimation problem by considering either band-limited noise or purely autoregressive noise (where derivatives of e(t) do not occur), we feel that it may be better to consider a hybrid approach. Here, the noise is estimated in purely discrete-time (DD) terms by the use of the AML or refined AML algorithms described previously. This does not create any implementation problems because the noise model is only required for the adaptive prefiltering operations, which can easily be carried out in discrete time when using CD analysis. The general implementation in this case is shown in Fig. 2(a), and the detailed structure of the derivative-generating filters 1/Â(s) is illustrated in Fig. 2(b). It should be noted here that the filter in Fig. 2(b) is similar to the 'state variable filter' suggested by Kohr (see, e.g. Kohr and Hoberock 1966): the only difference is that the coefficients â_i, i = 1, 2, ..., n, are not constant, as in the Kohr case, but are adaptively adjusted, either iteratively or recursively, as the estimation proceeds.
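To make the derivative-generating role of 1/Â(s) concrete, the following sketch simulates a Kohr-type state variable filter as a chain of integrators, using forward-Euler integration on a fine grid as a stand-in for a continuous-time filter. It assumes the convention A(s) = 1 + a_1 s + ... + a_n s^n (the form that appears consistent with Table 1(c)); the function name and coefficient values are illustrative, and the coefficients are held fixed here rather than adapted as the estimation proceeds.

```python
import numpy as np

def state_variable_filter(u, dt, a):
    """Kohr-type state variable filter: returns s^i u*(t), i = 0..n, with u* = u / A(s).

    Assumed (hypothetical) convention: A(s) = 1 + a[0] s + ... + a[n-1] s^n, a[n-1] != 0.
    u holds input samples on a fine grid of step dt, so forward-Euler integration
    stands in for continuous-time filtering.
    Returns an array of shape (len(u), n + 1); column i holds s^i u*.
    """
    n = len(a)
    c = np.concatenate(([1.0], np.asarray(a, dtype=float)))  # [1, a_1, ..., a_n]
    x = np.zeros(n)                          # integrator chain: x[i] = s^i u*
    out = np.zeros((len(u), n + 1))
    for k, uk in enumerate(u):
        top = (uk - c[:n] @ x) / c[n]        # s^n u* obtained from A(s) u* = u
        out[k, :n] = x
        out[k, n] = top
        x = x + dt * np.append(x[1:], top)   # each integrator feeds the one below it
    return out

# Step input through 1/(1 + 3s + 2s^2): the filtered derivatives are produced without
# differentiating u itself, and settle at u* = 1, s u* = s^2 u* = 0.
dt = 1e-3
u = np.ones(40_000)                          # 40 s of a unit step
derivs = state_variable_filter(u, dt, [3.0, 2.0])
```

Note that the highest derivative s^n u* jumps at the step (to 1/a_n), while the lower ones remain continuous; this is the sense in which the filter makes the derivatives physically realizable.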

Up to this point we have assumed that, while the algorithm (4) is implemented in discrete time, the signals y(t), x̂(t) and u(t) are available in continuous-time form, so that they can be passed through the continuous-time prefilters prior to sampling. In practice, however, it could well be that both the input and output signals are naturally in sampled-data form. This difficulty can be circumvented, albeit in an approximate manner, by assuming that the signals remain constant over the sampling interval and passing them directly into the continuous-time filters. In other words, the sampled data are converted to a continuous-time 'staircase' form prior to filtering. In this manner, the prefilters perform an additional, useful 'interpolation' role and provide 'estimates' of the continuous-time filtered variables.

Figure 2. Refined IV algorithm: CD implementation. (a) Overall implementation (X closed: continuous-time data available; X operative: discrete data only available); (b) state variable filter 1/A(s) applied to u(t). [Diagram labels include the SVF blocks (see block above in (a)), hold elements, and the filtered derivatives u_i*(t).]

Of course, the estimates of the filtered variables emerging from the prefilters are in no sense optimal, and the efficacy of this approach clearly depends upon the sampling period T_s: the approximation will be good for small T_s and will become progressively worse as T_s is lengthened. Fortunately, the estimation results do not appear particularly sensitive to the choice of T_s, and acceptable performance can be obtained with quite coarse sampling frequencies.
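The effect of the 'staircase' approximation and of the sampling period can be seen in a small experiment. The sketch below uses hypothetical names; a first-order filter 1/(1 + s) stands in for the prefilters, and forward-Euler integration on a 1 ms grid stands in for continuous time. It filters sin(t) once from the continuous signal and once from samples held constant over T_s, and measures the discrepancy at the sampling instants for T_s = 0.1 and T_s = 1.0.

```python
import numpy as np

def first_order_filter(u, dt, tau):
    """Forward-Euler simulation of u*(t) = u(t) / (1 + tau s) on a fine time grid."""
    y = np.zeros(len(u))
    for k in range(1, len(u)):
        y[k] = y[k - 1] + dt * (u[k - 1] - y[k - 1]) / tau
    return y

dt, tau = 1e-3, 1.0
t = np.arange(0.0, 20.0, dt)
u = np.sin(t)
reference = first_order_filter(u, dt, tau)      # filter driven by the continuous signal

def staircase_error(Ts):
    """Max error at the sampling instants when only samples every Ts are available."""
    step = int(round(Ts / dt))
    held = u[(np.arange(len(t)) // step) * step]  # zero-order hold ('staircase') input
    estimate = first_order_filter(held, dt, tau)
    return np.max(np.abs(estimate[::step] - reference[::step]))

err_fine = staircase_error(0.1)
err_coarse = staircase_error(1.0)
```

With these settings the coarse-interval error is much larger than the fine-interval one, in line with the remark that the approximation worsens as T_s is lengthened.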


Finally, it is worth noting that, if continuous-time measurements of y(t) and u(t) are available, then it is possible to consider a continuous-time implementation of the estimation algorithm itself (i.e. using CC analysis). This is a logical development of early continuous-time gradient procedures for estimating dynamic system parameters (see, e.g. Young 1965 a, Levadi 1964, Kaya and Yamamura 1962, Young 1976). The most obvious implementation would be an estimation algorithm of the form

(i)


(ii)

which is a continuous-time equivalent of the discrete-time recursive algorithm. Algorithms of this form are also discussed by Solo (1978).

Note that it would be difficult to implement the estimation algorithm (6) for cases other than C = D = 1.0, because of the difficulty of estimating the C and D polynomials (unless we once again consider some hybrid mechanization, which would be rather impractical). Thus, when ξ(t) is not white noise, the estimates produced by the algorithm will not have any optimal properties. They will, however, be consistent and asymptotically unbiased and, on the basis of previous experience, they should be reasonably efficient (see Jakeman 1979). Note also that we can reduce the computational complexity further by replacing P(t) in (6) by a simpler stochastic approximation (SA) gain (e.g. Young 1976). This would be a continuous-time equivalent of the SA algorithms discussed in § 7.

2.2. Experimental results

The CD approach to continuous-time model estimation discussed in the previous section has been evaluated by Monte Carlo simulation analysis applied to two systems described by second-order differential equations. In the first case, the system was of the form

with u(t) chosen as a random binary signal with levels plus and minus 1.0.

In the second, the system was modified to

with K = 0.781, ω_n = 1.6, ζ = 0.5 and u(t) chosen as the following combination of three sinusoidal signals,

$$u(t) = \sin(0.5\,\omega_d t) + \sin(\omega_d t) + \sin(1.5\,\omega_d t)$$

where ω_d is the damped natural frequency of the system, i.e. ω_d = ω_n√(1 - ζ²). In both of these examples, the noise ξ(t) was simulated white noise adjusted to give several different signal/noise ratios S (defined as in Young and Jakeman 1979 a).


Tables 1(a) and 1(b) are typical of the results obtained during the analysis. For each sample size, column 1 gives the average parameter value over 10 experiments, while columns 2 and 3 give the standard deviation about the true and the average parameter value, respectively. In Table 1(a), the sampling interval T_s is chosen to be quite rapid at 0.1 s, which represents 1/31.4 of the Shannon maximum sampling period P_s = π/ω_d. In Table 1(b), two different sampling intervals are compared: one fairly coarse (P_s/8), the other quite small (P_s/40); there seems to be some bias on one of the a_i estimates in the coarse sampling situation.
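The sampling-interval figures quoted above follow from two short formulas. The sketch below evaluates ω_d = ω_n√(1 - ζ²) and P_s = π/ω_d for the second test system (ω_n = 1.6, ζ = 0.5), the two intervals compared in Table 1(b), and the P_s/10 rule of thumb given later in this section. The helper names are illustrative, and the value ω_d = 1 rad/s for the Table 1(a) case is inferred from the quoted ratio 1/31.4 rather than stated in the text.

```python
import math

def damped_natural_frequency(omega_n, zeta):
    """omega_d = omega_n * sqrt(1 - zeta**2) for an underdamped second-order system."""
    return omega_n * math.sqrt(1.0 - zeta ** 2)

def shannon_max_period(omega_d):
    """P_s = pi / omega_d, the Shannon maximum sampling period quoted in the text."""
    return math.pi / omega_d

def three_sine_input(t, omega_d):
    """The three-sinusoid input used for the second system."""
    return (math.sin(0.5 * omega_d * t) + math.sin(omega_d * t)
            + math.sin(1.5 * omega_d * t))

# Second system: omega_n = 1.6 rad/s, zeta = 0.5
wd = damped_natural_frequency(1.6, 0.5)      # about 1.386 rad/s
Ps = shannon_max_period(wd)                  # about 2.267 s
coarse, fine = Ps / 8, Ps / 40               # the two intervals of Table 1(b)
rule_of_thumb = Ps / 10                      # suggested upper limit on T_s

# Table 1(a): T_s = 0.1 s is quoted as P_s / 31.4, which implies P_s = pi s,
# i.e. omega_d = 1 rad/s (an inference from the quoted ratio).
ratio_a = shannon_max_period(1.0) / 0.1
```

The P_s/8 interval of Table 1(b) thus lies just outside the P_s/10 rule of thumb, which is consistent with the bias reported for that case.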

Table 1(a). S = 10, T_s = 0.1. [Columns: parameter; true value; estimates for 100 and 500 samples.]

Table 1(b). S = 10, 500 samples. [Columns: parameter; true value; estimates for sampling rates P_s/40 and P_s/8.]

Other simulations have tended to confirm that quite coarse sampling intervals can be tolerated, but suggest that the degree of bias is, not surprisingly, a function of the system dynamic characteristics and of the value of S. As a result, the algorithm should always be used with great care if the sampling rate is low and, as a rule of thumb, sampling intervals should always be chosen to be less than P_s/10. But there is clearly a need for more research on this topic before the algorithm can be used with confidence on coarsely sampled data.

The algorithm (4) has also been applied with some success both to multivariable systems (Jakeman 1979) and to real data. Typical of the latter are the results shown in Table 1(c) and Fig. 3. Table 1(c) compares the estimates obtained when carrying out CD and DD time-series analysis on data obtained during fluorescence decay experiments on 1-naphthol (Jakeman et al. 1978). It is clear that the continuous-time and discrete-time models have virtually identical dynamic characteristics in this case, where the sampling period was short in relation to P_s (approximately P_s/113).

Figure 3. Results from model of dye tracer concentration in Murrumbidgee River, Australia. [Plot of dye tracer data and model output C(t) against time (hours).]

Model | Parameter estimates | Time constant (nsec) | Steady-state gain
Discrete time, T_s = 0.212 nsec | a_1 = -0.9724, b_0 = 0.0276 | 7.575 | 1.0
Continuous time, time unit = 0.212 nsec | a_1 = 35.9622, b_0 = 1.00 | 7.624 | 1.0

Table 1(c). (The last two columns give the dynamic characteristics of each model.)
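The dynamic characteristics in Table 1(c) can be checked by hand. The sketch below assumes the first-order forms (1 + a_1 z^-1) x_k = b_0 u_k and (1 + a_1 s) x(t) = b_0 u(t), which are our reading of the tabulated parameters rather than forms stated in this section; under those assumptions it reproduces the quoted time constants of 7.575 and 7.624 nsec and unit steady-state gains.

```python
import math

Ts = 0.212  # sampling interval in nsec (Table 1(c))

# Discrete-time model, assumed first-order form (1 + a1 z^-1) x_k = b0 u_k
a1_d, b0_d = -0.9724, 0.0276
tau_d = -Ts / math.log(-a1_d)      # pole at z = -a1 = 0.9724, converted to nsec
gain_d = b0_d / (1.0 + a1_d)       # steady-state gain B(1) / A(1)

# Continuous-time model, assumed form (1 + a1 s) x(t) = b0 u(t), time unit 0.212 nsec
a1_c, b0_c = 35.9622, 1.00
tau_c = a1_c * Ts                  # time constant of a1 time units, converted to nsec
gain_c = b0_c                      # A(0) = 1

print(f"discrete:   tau = {tau_d:.3f} nsec, gain = {gain_d:.2f}")
print(f"continuous: tau = {tau_c:.3f} nsec, gain = {gain_c:.2f}")
```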

Figure 3 shows the observed and estimated dye concentration in a river, where the estimated concentration is generated by a second-order differential equation model estimated using algorithm (4). The data used in this exercise were collected during dye tracer experiments carried out on the Murrumbidgee River system in Australia (Whitehead et al. 1978). Here, as can be seen from Fig. 3, it was not possible to maintain a completely regular sampling interval, but T_s is approximately half an hour (P_s/30). This demonstrates how data with irregular sampling intervals can be used, provided the longest sampling interval does not lead to serious interpolation errors and estimation bias.

3. Systems described by time variable parameter models

The idea of modifying recursive algorithms to allow for the estimation of time-variable model parameters has been exploited many times since the publication of R. E. Kalman's seminal papers on state variable filtering and estimation theory in the early nineteen sixties (Kalman 1960, Kalman and Bucy 1961). In the case of IV algorithms, this particular extension has so far been heuristic (see Young 1969). But now, with the advent of the refined IV algorithm, it is possible to put such modifications on a sounder theoretical basis and to construct algorithms which have greater practical potential.

There are a number of ways in which the time-variable parameter modifications can be introduced. The most straightforward is simply to take note of the relationship between the refined IV algorithm and the Kalman estimation algorithms, and to introduce additional a priori information in the form of a stochastic model for the parameter variations. The general form of this model is the following discrete-time Gauss-Markov model,

$$a_k = \Phi\, a_{k-1} + \Gamma\, \eta_{k-1} \tag{7}$$

Here Φ and Γ are assumed to be known, possibly time-variable matrices, while η_k is a discrete white noise vector with zero mean and covariance matrix Q, which is independent of the 'observational' white noise source e_k, i.e.

$$E\{\eta_k\} = 0, \qquad E\{\eta_k \eta_j^T\} = Q\,\delta_{kj}, \qquad E\{\eta_k e_j\} = 0$$

where δ_kj is the Kronecker delta function.

This device is now well known in the recursive parameter estimation literature, and it is straightforward to show (see, e.g. Young 1974) how the simple recursive linear least squares regression equations can be modified in the light of the additional a priori information inherent in (7) to include additional 'prediction equations', which allow for the update between samples of both the parameter estimates and the covariance matrix P_k of the parametric estimation errors.

Unlike the basic IV algorithm, the refined IV algorithm would appear to have certain optimal properties. In particular, the theoretical results of Pierce (1972), together with the stochastic simulation results reported in Parts I and II of this paper, have shown that the P_k matrix generated by the refined algorithm provides a good empirical estimate of the covariance matrix P of the estimation errors, where

$$P = E\{\tilde{a}\,\tilde{a}^T\}, \qquad \tilde{a} = a - \hat{a}$$

It is possible, therefore, to employ the same approach used to modify the recursive linear regression equations in order to modify the refined IV algorithms in a similar way. The resulting algorithm† takes the following prediction-correction form (see, e.g. Young 1974, p. 214)

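$$
\begin{aligned}
&\text{prediction (between samples):}\\
&\text{(i)} && \hat{a}_{k|k-1} = \Phi\,\hat{a}_{k-1}\\
&\text{(ii)} && P_{k|k-1} = \Phi\,\hat{P}_{k-1}\Phi^T + \Gamma Q \Gamma^T\\
&\text{correction (on receipt of the } k\text{th sample):}\\
&\text{(iii)} && \hat{a}_k = \hat{a}_{k|k-1} - P_{k|k-1}\hat{x}_k^*\left[\hat{\sigma}^2 + z_k^{*T} P_{k|k-1}\hat{x}_k^*\right]^{-1}\left\{z_k^{*T}\hat{a}_{k|k-1} - y_k^*\right\}\\
&\text{(iv)} && \hat{P}_k = P_{k|k-1} - P_{k|k-1}\hat{x}_k^*\left[\hat{\sigma}^2 + z_k^{*T} P_{k|k-1}\hat{x}_k^*\right]^{-1} z_k^{*T} P_{k|k-1}
\end{aligned}
\tag{8}
$$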
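A minimal sketch of one prediction-correction cycle of (8) follows, again with the prefiltered vectors z_k* and x̂_k* taken as given and with a hypothetical function name. The toy usage is a noise-free scalar regression with x̂_k* = z_k* (so the correction step reduces to recursive least squares) and a parameter that jumps from 1 to 2 halfway through; it contrasts Q = 0, where (8) collapses to the constant-parameter recursion (4), with a random walk model, Φ = Γ = 1 and Q = 0.01.

```python
import numpy as np

def tvp_refined_iv_step(a_prev, P_prev, z, x_hat, y, sigma2, Phi, Gamma, Q):
    """One prediction-correction cycle of eqn (8) (non-symmetric gain)."""
    # (8)(i)-(ii): prediction between samples from the Gauss-Markov model (7)
    a_pred = Phi @ a_prev
    P_pred = Phi @ P_prev @ Phi.T + Gamma @ Q @ Gamma.T
    # (8)(iii)-(iv): correction on receipt of the kth sample
    Px = P_pred @ x_hat
    denom = sigma2 + z @ Px
    a_new = a_pred - Px * (z @ a_pred - y) / denom
    P_new = P_pred - np.outer(Px, z @ P_pred) / denom
    return a_new, P_new

def track(q, z_seq, y_seq):
    """Run (8) with a scalar random walk (Phi = Gamma = 1) of noise variance q."""
    I = np.eye(1)
    a_hat, P = np.zeros(1), np.array([[1e3]])
    for zk, yk in zip(z_seq, y_seq):
        zk = np.array([zk])
        a_hat, P = tvp_refined_iv_step(a_hat, P, zk, zk, yk, 1.0, I, I, q * I)
    return a_hat[0]

# Hypothetical noise-free test: y_k = z_k * a_k with a_k jumping from 1 to 2 at k = 100.
rng = np.random.default_rng(2)
z_seq = rng.normal(size=200)
a_seq = np.where(np.arange(200) < 100, 1.0, 2.0)
y_seq = z_seq * a_seq
est_fixed = track(0.0, z_seq, y_seq)   # Q = 0: constant-parameter recursion
est_rw = track(0.01, z_seq, y_seq)     # Q > 0: the estimate follows the jump
```

With Q = 0 the estimate settles near a data-weighted average of the two parameter values, whereas the random walk version follows the jump.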

Equations (8)(i) to (iv) constitute the refined IV algorithm for estimating stochastically variable parameters in a discrete time-series model of a SISO system. At first sight, this algorithm appears somewhat restrictive, since it requires a priori knowledge of the matrices Φ and Γ in the stochastic model of the parameter variations. But past experience with similar algorithms (e.g. Young 1969, Norton 1975) has indicated that the assumption is not as limiting as it might appear. First, it is possible to consider a class of simple 'random walk' models which represent special cases of (7) with very simple Φ and Γ matrices and which seem to offer considerable practical potential. Second, a priori information on the nature of the parameter variations can sometimes be utilized to arrive at simple Gauss-Markov models.

† It will be noted that the derivation of this algorithm is made a little more obvious if the symmetric gain matrix form of the refined IV algorithm is utilized. The P̂_k matrix from this algorithm is a somewhat closer approximation to P than is P_k in the non-symmetric gain case (see Young and Jakeman 1979 a).

In the first case, the three random walk models that have proven most useful in practice are as follows:

The pure random walk (RW)

The smoothed random walk (SRW)

where α is a constant scalar with 0 < α < 1.0.

The integrated random walk (IRW)

The RW model (9) was first used in the early nineteen sixties (see, e.g. Kopp and Orford 1963, Lee 1964). The IRW model (11) was suggested in the parameter estimation context by Norton (1975), who has used it successfully in a number of practical applications. The SRW model (10) is of more recent origin (Young and Kaldor 1978) and seems to provide a good compromise between models (9) and (11), although it requires the specification of one additional parameter, the smoothing constant α = 1/τ, where τ is the approximate exponential smoothing time constant in sampling intervals†.
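The footnoted relation between α and the smoothing time constant is easy to tabulate. The helper names below are illustrative; the sketch compares the strict value τ_s = -T_s/ln(1 - α) with the approximation α ≈ 1/τ, which is close for small α and degrades as α grows.

```python
import math

def smoothing_time_constant(alpha, Ts=1.0):
    """Strict time constant tau_s = -Ts / ln(1 - alpha), in the units of Ts."""
    return -Ts / math.log(1.0 - alpha)

def smoothing_time_constant_approx(alpha, Ts=1.0):
    """First-order approximation tau = Ts / alpha, i.e. alpha = 1/tau."""
    return Ts / alpha

for alpha in (0.01, 0.05, 0.2, 0.5):
    exact = smoothing_time_constant(alpha)
    approx = smoothing_time_constant_approx(alpha)
    print(f"alpha = {alpha:4.2f}: exact {exact:7.3f}, approx {approx:7.3f} sampling intervals")
```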

All of the models (9) to (11) are non-stationary in a statistical sense, and so they allow for wide variation in the parameters. Their different characteristics are described fully by Norton (1975) and Young and Kaldor (1978). Put simply, the progression from model (9) through (10) to (11) allows for greater overall variation in the estimated parameters for any specified covariance matrix Q, accompanied by greater 'smoothing' of the short-term variations.
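Because (9) and (11) are not reproduced legibly in this copy, the sketch below assumes the state-space forms in which these models are usually written (RW: a_k = a_{k-1} + η_{k-1}; IRW: a_k = a_{k-1} + d_{k-1}, d_k = d_{k-1} + η_{k-1}, with d_k a hypothetical slope state). Driving both with the same white noise illustrates the point made above: the IRW path wanders further but has smoother short-term behaviour, measured here by the spread of its second differences. The SRW model is omitted because its exact form is not recoverable here.

```python
import numpy as np

# Assumed forms (eqns (9) and (11) are not legible in this copy):
#   RW : a_k = a_{k-1} + eta_{k-1}
#   IRW: a_k = a_{k-1} + d_{k-1},  d_k = d_{k-1} + eta_{k-1}
PHI_RW, GAMMA_RW = np.array([[1.0]]), np.array([[1.0]])
PHI_IRW = np.array([[1.0, 1.0],
                    [0.0, 1.0]])         # state vector [a_k, d_k]
GAMMA_IRW = np.array([[0.0],
                      [1.0]])

def simulate(Phi, Gamma, x0, eta):
    """Propagate x_k = Phi x_{k-1} + Gamma eta_{k-1}; return the parameter path x_k[0]."""
    x = np.array(x0, dtype=float)
    path = np.empty(len(eta))
    for k, e in enumerate(eta):
        x = Phi @ x + Gamma @ np.atleast_1d(e)
        path[k] = x[0]
    return path

rng = np.random.default_rng(0)
eta = rng.normal(scale=0.01, size=500)
rw = simulate(PHI_RW, GAMMA_RW, [0.0], eta)
irw = simulate(PHI_IRW, GAMMA_IRW, [0.0, 0.0], eta)
```

In the tracking algorithm (8), Φ and Γ for several parameters would be block-diagonal stacks of these per-parameter matrices.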

In the case where more general Φ and Γ matrices are considered, it may often be possible to assume that, for physical reasons, the variations in the parameters are correlated with the variations in other measured variables affecting the system. For example, the parameters of an aerospace vehicle are known to be functions of variables such as dynamic pressure, Mach number, altitude, etc. (Young 1979 b). Or again, the numerator coefficients in a transfer function model between rainfall and runoff flow in hydrological systems are known to be functions of soil moisture and evapotranspiration (Young 1975, Whitehead and Young 1975).

† Strictly, the time constant τ_s = -T_s/log_e(1 - α) time units, where T_s is the sampling interval in time units.

In such examples, it is often possible to define a_k in the following form

$$a_k = T_k\, a_k^* \tag{12}$$

where T_k is a matrix (often diagonal) of the relevant measured variables; a_k* is a vector of residual parameter variations which, if the T_k transformation is effective, will be only slowly variable and can be described, for example, by one of the random walk models. In the case of the RW (9), i.e.

$$a_k^* = a_{k-1}^* + \eta_{k-1} \tag{13}$$

we see that, upon substitution from (13) into (12), the variations in a_k are given by a Gauss-Markov model such as (7) with Φ = Φ_k = T_k T_{k-1}^{-1} and Γ = Γ_k = T_k. It is clearly possible, therefore, to utilize the refined IV algorithm (8) with Φ_k and Γ_k in the prediction eqns. (8)(i) and (ii) defined accordingly. Such an approach has been used previously with other recursive algorithms by Young (1969, 1979 b).
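The substitution of (13) into (12) can be verified numerically. The sketch assumes (12) has the form a_k = T_k a_k*, which is what the resulting expressions Φ_k = T_k T_{k-1}^{-1} and Γ_k = T_k imply; the helper name, the diagonal T matrices and the parameter values are made up purely for illustration.

```python
import numpy as np

def phi_gamma_from_T(T_k, T_prev):
    """Phi_k = T_k T_{k-1}^{-1} and Gamma_k = T_k, from a_k = T_k a_k* and the RW (13)."""
    return T_k @ np.linalg.inv(T_prev), T_k

# Hypothetical diagonal T matrices built from two measured variables at k-1 and k
T_prev = np.diag([0.8, 1.5])
T_k = np.diag([0.9, 1.2])
a_star_prev = np.array([0.3, -0.4])      # residual parameters a*_{k-1}
eta = np.array([0.01, -0.02])            # random walk increment eta_{k-1}

Phi_k, Gamma_k = phi_gamma_from_T(T_k, T_prev)
a_prev = T_prev @ a_star_prev            # a_{k-1} from (12)
direct = T_k @ (a_star_prev + eta)       # a_k = T_k a_k*, with a_k* from (13)
via_model = Phi_k @ a_prev + Gamma_k @ eta   # the same a_k written in the form (7)
```

For diagonal T_k, as suggested in the text, the inverse is just element-wise division, so Φ_k is itself diagonal with entries equal to the ratios of successive measured values.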

The implementation of the algorithm defined by eqns. (8)(i) to (iv) poses several problems. In particular, the equations imply the parallel implementation of the refined AML algorithm and its interactive use with the refined IV algorithm, as described by Young and Jakeman (1979 a). This introduces considerable complexity and, for the present paper, we have once again implemented only the special case where ξ_k is white noise, i.e. C(z^{-1}) = D(z^{-1}) = 1.0. Here, the full refined AML is not required and the prefilters (nominally Ĉ/ÂD̂) are defined as 1/Â. This simpler algorithm works very well and seems to give good results even if ξ_k is coloured noise. Moreover, the algorithm in this form has also been modified further to allow for an off-line 'smoothing' solution in which the recursive estimate at any sampling instant k is a conditional estimate â_{k|N} based on the whole data set of N samples. The smoothing algorithm is an extension of Norton's work (Norton 1975) within an IV context, and it requires both forward and backward recursive processing of the data (Young and Kaldor 1978, Kaldor 1978, Gelb 1974).

Finally, it should be remarked that the above approach to time-variable parameter estimation can be extended straightforwardly both to the multivariable and to the continuous-time situations. Such extensions are fairly obvious and so they are not considered in detail in the present paper.

3.1. Experimental results

The IV algorithm (8) has been applied to the following second-order discrete-time system

where ξ_k is white noise chosen to make S = 20; while a_2 = 0.5, ∀k; and both b_k and a_1k are time-variable, with

$$b_k = \begin{cases} 0.3, & k = 1, \ldots, 30\\ 0.5, & k = 31, \ldots, 60\\ 0.4, & k = 61, \ldots, 100 \end{cases}$$

and

$$a_{1k} = \begin{cases} -0.35, & k = 1, \ldots, 60\\ -0.6, & k = 61, \ldots, 100 \end{cases}$$
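For readers wishing to reproduce the set-up, the parameter trajectories just defined can be generated as below. The pole-radius check assumes the denominator takes the form 1 + a_1 z^-1 + a_2 z^-2, since the system equation itself is not legible in this copy; under that assumption both parameter regimes are stable.

```python
import numpy as np

k = np.arange(1, 101)                                         # k = 1, ..., 100
b_k = np.select([k <= 30, k <= 60], [0.3, 0.5], default=0.4)
a1_k = np.where(k <= 60, -0.35, -0.6)
a2_k = np.full(k.shape, 0.5)                                  # constant for all k

# Pole radii of z^2 + a1 z + a2, assuming A(z^-1) = 1 + a1 z^-1 + a2 z^-2
radii = [np.abs(np.roots([1.0, a1, a2])).max() for a1, a2 in zip(a1_k, a2_k)]
```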

Figures 4(a), (b) and (c) show the estimation results obtained when an IRW model (11) is assumed, with Q selected as a diagonal matrix with elements 0.001, 0 and 0.001, respectively. Both the recursive filtering and smoothing estimates are shown in all cases (for the constant parameter a_2, the smoothed estimate is, of course, itself a constant). It is interesting to note that very similar results were obtained using the SRW model with α = 0.9 and the diagonal elements of Q set to 0.05, 0 and 0.05, respectively.

It is clear that in this example, where step changes in the parameters occur, the smoothing algorithm is not particularly appropriate, since it attempts to provide a smooth transition where abrupt changes are actually being encountered. In practice, however, it is quite likely that smoother changes in parameters will often occur, and it is here that the smoothing algorithm will have maximum potential. But it should be emphasized that the smoothing algorithm used here is an off-line procedure and is computationally expensive in comparison with the filtering algorithm (8). On-line 'fixed lag' smoothing algorithms (Gelb 1974) could be developed, however, if circumstances so demanded.

Figure 4. Time variable parameter estimation for second order, stochastic system. [Plot of recursive estimates against number of samples k, from 0 to 100.]

Algorithms such as (8), with the parameter variation model (12) and (13), have been used successfully for the estimation of the rapidly changing parameters of a simulated missile system (e.g. Young 1979 b). In this example, additional flexibility was required to estimate the particularly rapid changes in parameters that occurred over the rocket boost period, and this was introduced by making the covariance matrix Q also a function of k.

The algorithm (8) has also been applied to many other sets of simulated and real data. These include time series obtained from a large econometric model of the Australian economy (Young and Jakeman 1979 b); real data from the United States economy (Young 1978); and various sets of environmental data (Young 1975, Whitehead and Young 1975, Young 1978). In the latter examples, the time-variable estimation was used specifically to identify non-linearities in the model structure, a procedure for which it seems singularly well suited.

4. State reconstruction filter design

Recently Young (1979 c) has shown how the state estimates of a stochastic, discrete-time, SISO system can be obtained as a linear function of the outputs of the adaptive prefilters used in the refined IV algorithm, when it is applied to the following ARMAX model

In particular, it can be shown that the state estimate $\hat{x}_k$ is generated theoretically from a relationship of the following form

$$\hat{x}_k = Z_k\, p \qquad (16)$$

where

$$Z_k = [\,N_1\zeta_{1k} \;\vdots\; \cdots \;\vdots\; N_n\zeta_{1k} \;\vdots\; N_1\zeta_{2k} \;\vdots\; \cdots \;\vdots\; N_n\zeta_{2k}\,] \qquad (17)$$

and

$$p = [\,d^T - a^T \;\vdots\; \beta^T\,]^T \qquad (18)$$

Here $\zeta_{1k}$ and $\zeta_{2k}$ are the following vectors of prefiltered variables

while $N_i$, $i = 1, 2, \ldots, n$, are $n \times n$ matrices composed of the numerator coefficients of $[I - Fz^{-1}]^{-1}\delta_i$, where $\delta_i$ is the $i$th unit vector and $F$ is the following matrix

Finally, the vectors $a$, $\beta$ and $d$ in (18) are defined as

$$a = [a_1, \ldots, a_n]^T; \quad \beta = [b_1, \ldots, b_n]^T; \quad d = [d_1, \ldots, d_n]^T$$


The state reconstruction filter (16) can be implemented in practice by replacing $p$ by its estimate $\hat{p}$, obtained from a recursive IVAML algorithm, and by using the outputs of the prefilters in the same algorithm to define $Z_k$.

If the fully recursive IV solution is used in this way, the overall procedure constitutes an optimal adaptive Kalman filter. It is optimal in the sense that the asymptotically optimal state estimates are obtained as a by-product of an asymptotically efficient parameter estimation scheme. In the off-line recursive-iterative implementation, using the algorithm in this way is a Kalman filter design procedure: the 'asymptotic gain' Kalman (or Wiener) filter represented by eqn. (16) is synthesized directly from the system data†. This should be particularly useful in practice, because it removes the need to specify noise statistics and to solve the covariance matrix Riccati equation, as required in normal Kalman filter design. Note also that this approach to state estimation can be applied in the purely stochastic situation where no input variables $u_k$ are present. In that case the refined AML algorithm would provide the parameter estimates and prefiltered variables.
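The link between the 'asymptotic gain' Kalman filter and the ARMAX parameters can be checked numerically. The sketch below is not the prefilter-based construction (16) to (18). It makes the following assumptions:

- The parameters are known.
- The model $A y_k = B u_k + D e_k$ has monic $A$ and $D$ and $b_0 = 0$.
- One particular (observer canonical) innovations state-space realization is used.

Under these assumptions, a filter whose gain vector is $d - a$ recovers the innovations exactly, and its first state is the one-step-ahead output prediction. All parameter values are hypothetical. $D$ must be stable for the filter to forget errors in its initial state.

```python
import random
from typing import List


def simulate_armax(a, b, d, u, e):
    """y_k + sum a_i y_{k-i} = sum b_i u_{k-i} + e_k + sum d_i e_{k-i}, zero initial conditions."""
    n = len(a)
    y: List[float] = []
    for k in range(len(u)):
        acc = e[k]
        for i in range(1, n + 1):
            if k - i >= 0:
                acc += -a[i - 1] * y[k - i] + b[i - 1] * u[k - i] + d[i - 1] * e[k - i]
        y.append(acc)
    return y


def innovations_filter(a, b, d, y, u):
    """Steady-state Kalman filter in observer canonical innovations form:
    xhat_{k+1} = F xhat_k + beta u_k + (d - a) eps_k,  eps_k = y_k - xhat_{1,k},
    where F has -a in its first column and ones on the superdiagonal."""
    n = len(a)
    xh = [0.0] * n
    states: List[List[float]] = []
    innovations: List[float] = []
    for k in range(len(y)):
        states.append(xh[:])
        eps = y[k] - xh[0]  # xh[0] is the one-step-ahead prediction of y_k
        innovations.append(eps)
        xh = [
            -a[i] * xh[0] + (xh[i + 1] if i + 1 < n else 0.0)
            + b[i] * u[k] + (d[i] - a[i]) * eps
            for i in range(n)
        ]
    return states, innovations


# Hypothetical second order example with stable A and D.
a, b, d = [-1.5, 0.7], [1.0, 0.5], [0.5, 0.2]
rng = random.Random(0)
u = [rng.choice([-1.0, 1.0]) for _ in range(500)]
e = [rng.gauss(0.0, 0.5) for _ in range(500)]
y = simulate_armax(a, b, d, u, e)
states, eps = innovations_filter(a, b, d, y, u)
```

In practice $a$, $b$ and $d$ would be replaced by recursive estimates. With estimated parameters, the recovered innovations are only approximately white.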

The extension of this approach to continuous-time systems with deterministic inputs is fairly obvious, but it involves some technical problems. The estimate of the continuous-time state vector $\hat{x}(t)$ has the same basic form as (16) (see Fig. 1), i.e.

$$\hat{x}(t) = Z(t)\, p \qquad (22)$$

where, theoretically, $Z(t)$ is the continuous-time equivalent of $Z_k$. However, the noise model in the continuous-time equivalent of eqn. (15) has numerator and denominator polynomials of equal order, and this introduces estimation problems (see, e.g. Phillips 1959, Phadke and Wu 1974).

We will not discuss these problems in the present paper. We merely note that, in this situation, a straightforward but clearly suboptimal approach is to use a more arbitrary state variable filter $1/D$. For example, $D$ could be chosen on the heuristic basis that its passband should encompass the passband of the system under study (e.g. $D = \hat{A}$). The filter will then pass the frequency components of interest in the estimation while attenuating high frequency noise. The resulting IV algorithm is identical to that suggested by Young (1969). The state estimate obtained from (22), with $p$ replaced by $\hat{p}$, is not optimal in any minimum variance sense, but it is asymptotically unbiased and consistent, i.e. $\hat{x}(t) \to x(t)$ for $t \to \infty$, where $x$ is the true state vector.

The IV algorithm in this latter case can be considered a stochastic equivalent of the 'adaptive observer' suggested by Kreisselmeier (1977). That observer is based on the deterministic equivalent of eqn. (22) and uses a continuous-time deterministic estimation algorithm with constant gains. Unlike the Kreisselmeier observer, however, this stochastic state reconstruction filter will function satisfactorily, albeit non-optimally, even in the presence of high levels of noise.

† This would seem to satisfy Kalman's (1960) requirement that 'the two problems (parameter and state estimation) should be optimized jointly if possible'.


Finally, with suitable multivariable canonical forms, the arguments in this section can be extended to multivariable systems (Jakeman and Young 1979 c). Such extensions will, however, suffer from the complexity that affects all multivariable 'black box' methods (see Jakeman and Young 1979 a). They will need to be considered in detail before their practical potential can be evaluated.

4.1. Experimental results


Figures 5 (a) and (b) show the results obtained when the off-line Kalman filter design procedure is applied to the following model

which is simply the 'innovations' or Kalman filter description of model 5, considered in Part I of the paper. These results were obtained from a Monte Carlo analysis with ten random simulations. The figures compare the ensemble averages of the two state variable estimates with the true state variables generated by the model. The variance of the ensemble averages was quite small, as shown by the standard error bounds marked on the plots.

Figure 5. Joint parameter state estimation: output of adaptive state reconstruction (Kalman) filter for second order, stochastic system. Panels (a) and (b) plot the true value and the estimate against number of samples, k.

It is interesting to observe that the estimate of the first element of $\hat{x}_k$ is the optimally filtered output of the system, $\hat{y}_k$, which corresponds to the optimal one step ahead prediction of the output.


5. The general refined IV approach to estimation and its application to some special model forms

So far in this paper we have talked mainly in terms of specific mathematical model forms. One attraction of the refined IV method, however, is that it suggests a general approach to stochastic model estimation. This approach has some similarities with the alternative prediction error (PE) minimization approach, but it leads to algorithms with subtle but important practical differences of mechanization. In this section, we outline the approach and show that it can be considered to arise from a conceptual basis which we will term 'generalized equation-error' (GEE) minimization†. We also demonstrate its efficacy by applying it to two specific model forms: the multi-input, single output (MISO) transfer function model, and the 'tanks in series' representation used in chemical engineering and water resources modelling.

5.1. Generalized equation-error (GEE) minimization

The general refined IV approach to time-series analysis consists of the following three steps.

(1) Formulate the stochastic, dynamic model so that its stochastic characteristics are defined in relation to a 'source' term. This source is a white noise 'innovations' process $e_k$ (or the vector $\mathbf{e}_k$ in the multivariable case). Then obtain an expression for $e_k$ in terms of all the other model variables.

(2) Define appropriate prefilters and use them to manipulate the expression for $e_k$ until it is a linear relationship (or set of relationships) in the unknown parameters of the basic, deterministic part of the model. In all the examples considered so far, these are the A and B polynomial coefficients. Because of their similarity to the equation-error relationships used in ordinary IV analysis, these linear expressions can be considered as 'generalized equation-error' (GEE) functions.

(3) Apply the recursive or recursive-iterative IV algorithms to estimate the parameters in the GEE model(s) obtained in step (2). The IVs are generated in the usual manner, as functions of the output of an adaptive auxiliary model in the form of the deterministic part of the system model. If prefilters are required to define the GEE, they will also need to be made adaptive. If necessary, this is done by reference to a noise model parameter estimation algorithm (e.g. the refined AML algorithm) that runs in parallel and is co-ordinated with the IV algorithm.
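Step (3) can be illustrated in its simplest setting. The sketch below is an ordinary (not refined) recursive IV estimator for a hypothetical first order SISO model $y_k = -a_1 y_{k-1} + b_1 u_{k-1} + \xi_k$ with coloured noise $\xi_k$. It makes these simplifications:

- No prefilters are used.
- The update has the usual recursive IV form $\hat{a}_k = \hat{a}_{k-1} + P_{k-1}\hat{x}_k(y_k - z_k^T\hat{a}_{k-1})/(1 + z_k^T P_{k-1}\hat{x}_k)$.
- The first pass is plain recursive least squares.
- Each later pass regenerates the instruments from an auxiliary model built with the previous pass's estimates. This is only a crude version of the recursive-iterative mechanization.

Function and variable names are illustrative.

```python
import random
from typing import List, Optional, Sequence, Tuple


def recursive_iv(
    y: Sequence[float],
    u: Sequence[float],
    aux: Optional[Tuple[float, float]] = None,
    p0: float = 1e6,
) -> List[float]:
    """One recursive pass for theta = [a1, b1] with regressor z_k = [-y_{k-1}, u_{k-1}].

    If aux is None the instrument equals the regressor (recursive least squares);
    otherwise the instrument is [-xhat_{k-1}, u_{k-1}], where xhat is the output of
    the auxiliary model xhat_k = -aux[0] * xhat_{k-1} + aux[1] * u_{k-1}.
    """
    theta = [0.0, 0.0]
    P = [[p0, 0.0], [0.0, p0]]
    xhat_prev = 0.0
    for k in range(1, len(y)):
        z = [-y[k - 1], u[k - 1]]
        w = z if aux is None else [-xhat_prev, u[k - 1]]  # instrument vector
        Pw = [P[0][0] * w[0] + P[0][1] * w[1], P[1][0] * w[0] + P[1][1] * w[1]]
        zP = [z[0] * P[0][0] + z[1] * P[1][0], z[0] * P[0][1] + z[1] * P[1][1]]
        denom = 1.0 + z[0] * Pw[0] + z[1] * Pw[1]
        err = y[k] - (z[0] * theta[0] + z[1] * theta[1])
        theta = [theta[i] + Pw[i] * err / denom for i in range(2)]
        P = [[P[i][j] - Pw[i] * zP[j] / denom for j in range(2)] for i in range(2)]
        if aux is not None:
            xhat_prev = -aux[0] * xhat_prev + aux[1] * u[k - 1]
    return theta


# Hypothetical system: a1 = -0.8, b1 = 0.5, AR(1) coloured output noise.
rng = random.Random(2)
N = 10000
u = [rng.choice([-1.0, 1.0]) for _ in range(N)]
x, xi, y = 0.0, 0.0, []
for k in range(N):
    x = 0.8 * x + 0.5 * (u[k - 1] if k > 0 else 0.0)
    xi = 0.7 * xi + rng.gauss(0.0, 0.3)
    y.append(x + xi)

theta_ls = recursive_iv(y, u)              # biased by the coloured noise
theta_iv = theta_ls
for _ in range(3):                         # recursive-iterative passes
    theta_iv = recursive_iv(y, u, aux=(theta_iv[0], theta_iv[1]))
```

The instruments are built only from the input and the auxiliary model. They are therefore uncorrelated with $\xi_k$, and this is what removes the noise-induced bias of the least squares pass.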

This decomposition of the estimation problem into parallel but co-ordinated system and noise model estimation, as outlined in step (3), is central to the concept of GEE minimization. It also makes the resulting algorithms more robust than equivalent prediction error (PE) minimization algorithms (Ljung 1976, Young and Jakeman 1979 c).

† GEE has been used previously to denote any EE function defined in terms of prefiltered variables. Here we use it more specifically to mean an 'optimal' GEE function, with prefilters chosen to induce asymptotic statistical efficiency.


The robustness is enhanced further by the IV mechanization, which makes the algorithm insensitive to violations of the theoretical assumptions about the noise process. In particular, the supposition that the noise arises from a white noise source, usually via some dynamic model (e.g. a rational transfer function, as in the ARMA model), is not restrictive. Provided the system input signals ($u_k$ or $\mathbf{u}_k$) are independent of the noise, the refined IV algorithms will yield asymptotically unbiased and consistent estimates even if the noise assumptions are incorrect. This remains true even if the noise is highly structured, e.g. a periodic signal or d.c. bias. On the other hand, if the assumptions are valid, the resulting estimates will, as we have seen in this series of papers, also have the desirable property of asymptotic statistical efficiency.

5.2. The MISO transfer function model

To exemplify the GEE minimization approach, consider the MISO transfer function model. Most time-series research in the MISO case has been directed towards the so-called ARMAX representation, in which the transfer functions relating each input to the output share a common denominator polynomial. An alternative, and potentially more useful, model form is the following MISO 'transfer function' model, in which the m individual input-output transfer functions are defined independently (see, e.g. Box and Jenkins 1970),

$$y_k = \sum_{i=1}^{m} \frac{B_i(z^{-1})}{A_i(z^{-1})}\, u_{ik} + \frac{D(z^{-1})}{C(z^{-1})}\, e_k \qquad (24)$$

This model can be considered the 'dynamic' equivalent of regression analysis, with the regression coefficients replaced by transfer functions. In this sense, it has wide potential for application.

Considering the two input case for convenience, we note from (24) that the white noise source $e_k$ is defined as

$$e_k = \frac{C}{D}\left[\, y_k - \frac{B_1}{A_1}u_{1k} - \frac{B_2}{A_2}u_{2k} \right] \qquad (25)$$

It is now straightforward to show that (25) can be written in two GEE forms. First, if a single star superscript denotes prefiltering by $C/DA_1$, then

$$e_k = A_1\xi_{1k}^{*} - B_1 u_{1k}^{*} \qquad (26)$$

Here $\xi_{1k}$ is defined as

$$\xi_{1k} = y_k - \hat{x}_{2k} \qquad (27)$$

where $\hat{x}_{2k}$ is the output of the auxiliary model between the second input $u_{2k}$ and the output. In other words, it is the part of the output 'explained' by the second input alone. Similarly, $e_k$ can be defined in terms of $\xi_{2k}$, where

$$\xi_{2k} = y_k - \hat{x}_{1k} \qquad (28)$$

In this case,

$$e_k = A_2\xi_{2k}^{**} - B_2 u_{2k}^{**} \qquad (29)$$

where the double star superscript denotes prefiltering by $C/DA_2$.
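The two GEE forms (26) and (29) can be checked numerically. The sketch below makes these assumptions:

- The polynomials are hypothetical and first order.
- The auxiliary model outputs are exact (the true noise-free responses).
- Initial conditions are zero.

It generates $y_k$ from a two-input transfer function model with noise $(D/C)\,e_k$. It then confirms that $A_1\xi_{1k}^* - B_1u_{1k}^*$ and $A_2\xi_{2k}^{**} - B_2u_{2k}^{**}$ both reproduce the white noise $e_k$. Polynomials in $z^{-1}$ are stored as coefficient lists. `lfilter` here is a small hand-written difference equation routine; it is named after the SciPy function but does not call it.

```python
import random
from typing import List, Sequence


def lfilter(num: Sequence[float], den: Sequence[float], x: Sequence[float]) -> List[float]:
    """y = (num/den) x for polynomials in z^-1, zero initial conditions."""
    y: List[float] = []
    for k in range(len(x)):
        acc = sum(num[i] * x[k - i] for i in range(len(num)) if k - i >= 0)
        acc -= sum(den[j] * y[k - j] for j in range(1, len(den)) if k - j >= 0)
        y.append(acc / den[0])
    return y


def polymul(p: Sequence[float], q: Sequence[float]) -> List[float]:
    """Product of two polynomials in z^-1."""
    r = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            r[i + j] += pi * qj
    return r


# Hypothetical model: y = B1/A1 u1 + B2/A2 u2 + D/C e
A1, B1 = [1.0, -0.7], [0.0, 0.5]
A2, B2 = [1.0, -0.4], [0.0, 1.0]
C, D = [1.0, -0.9], [1.0, 0.3]

rng = random.Random(4)
N = 400
u1 = [rng.gauss(0.0, 1.0) for _ in range(N)]
u2 = [rng.gauss(0.0, 1.0) for _ in range(N)]
e = [rng.gauss(0.0, 0.2) for _ in range(N)]
x1, x2, noise = lfilter(B1, A1, u1), lfilter(B2, A2, u2), lfilter(D, C, e)
y = [p + q + r for p, q, r in zip(x1, x2, noise)]


def gee(A, B, xi, u):
    """A xi* - B u*, with * denoting prefiltering by C/(D A)."""
    den = polymul(D, A)
    xi_f, u_f = lfilter(C, den, xi), lfilter(C, den, u)
    return [p - q for p, q in zip(lfilter(A, [1.0], xi_f), lfilter(B, [1.0], u_f))]


xi1 = [yk - xk for yk, xk in zip(y, x2)]  # as in (27), using the true x2
xi2 = [yk - xk for yk, xk in zip(y, x1)]  # as in (28), using the true x1
gee1, gee2 = gee(A1, B1, xi1, u1), gee(A2, B2, xi2, u2)
```

In practice the polynomials are estimated and the auxiliary model outputs replace the true responses, so the relationship holds only approximately.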


By decomposing the problem into the two expressions (26) and (29), we have defined two separate GEEs, each linear in the unknown model parameters of one transfer function. Now let us define

$$a_i = [a_{i1}, \ldots, a_{in}, b_{i0}, b_{i1}, \ldots, b_{in}]^T, \quad i = 1, 2$$


where $a_{ij}$, $j = 1, 2, \ldots, n$, and $b_{ij}$, $j = 0, 1, \ldots, n$, are the $j$th coefficients of $A_i$ and $B_i$ respectively. It is now possible to obtain estimates $\hat{a}_1$ and $\hat{a}_2$ of $a_1$ and $a_2$ from the following refined IV algorithm

where $\hat{x}_{ik}^*$ and $z_{ik}^*$ are defined as follows

$$\hat{x}_{ik}^* = [-\hat{x}_{i,k-1}^*, \ldots, -\hat{x}_{i,k-n}^*,\; u_{ik}^*, \ldots, u_{i,k-n}^*]^T$$

$$z_{ik}^* = [-\xi_{i,k-1}^*, \ldots, -\xi_{i,k-n}^*,\; u_{ik}^*, \ldots, u_{i,k-n}^*]^T$$

Algorithm (30) is used twice, for i = 1 and i = 2; for i = 2 the single star superscripts are replaced by double star superscripts. The adaptive prefiltering is then carried out in the same way as in the SISO case, with the refined AML algorithm providing estimates of the C and D polynomial coefficients. The extension to the general case of m inputs is obvious. There is also a symmetric gain version of (30), in which $z_{ik}^{*T}$ is replaced by $\hat{x}_{ik}^{*T}$ everywhere except within the braces.

5.3. The tanks-in-series model

In chemical engineering and water resources research it is often useful to describe a dynamic system as a serial connection of identical 'tanks'. Each tank is described by a first order differential equation with transfer function $b/(s+a)$, so the input $u(t)$ and the output $y(t)$ are related by

$$y(t) = \left[\frac{b}{s+a}\right]^m u(t) + \xi(t)$$

where m is the number of tanks in series and $\xi(t)$ is a noise term. Using a GEE approach, it is possible to obtain refined IV estimates of a and b for different values of m, and so identify and estimate the tanks-in-series model. We will not discuss this in detail here since it is treated elsewhere (Jakeman and Young 1979 b), but an example of its use is described in the next section.
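The model structure can be illustrated with a short simulation. The sketch below cascades m identical first order tanks, each discretized exactly under a zero-order hold with a hypothetical sampling interval `T`. Cascading separately discretized sections only approximates discretizing $[b/(s+a)]^m$ as a whole, because the signal between tanks is not piecewise constant. It does preserve the steady-state gain $(b/a)^m$, and the unit step example below checks this. Parameter values are illustrative.

```python
import math
from typing import List, Sequence


def tanks_in_series(u: Sequence[float], a: float, b: float, m: int, T: float) -> List[float]:
    """Cascade of m tanks b/(s + a), each ZOH-discretized with sampling interval T."""
    alpha = math.exp(-a * T)              # discrete pole of one tank
    beta = (b / a) * (1.0 - alpha)        # ZOH input coefficient of one tank
    signal = list(u)
    for _ in range(m):                    # pass the signal through each tank in turn
        out: List[float] = []
        prev_out, prev_in = 0.0, 0.0
        for v in signal:
            prev_out = alpha * prev_out + beta * prev_in  # y_k = alpha y_{k-1} + beta u_{k-1}
            out.append(prev_out)
            prev_in = v
        signal = out
    return signal


# Unit step into m = 2 tanks with a = 0.5, b = 1.0: final value (b/a)**2 = 4.
step_response = tanks_in_series([1.0] * 2000, a=0.5, b=1.0, m=2, T=0.2)
```

Each tank adds one sample of pure delay in this discretization, so the step response of two tanks starts at zero and rises smoothly towards $(b/a)^2$.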

5.4. Experimental results

Jakeman et al. (1979) have evaluated the MISO transfer function model estimation procedure using both simulated and real data. Figure 6 compares the deterministic output of a MISO air pollution model, obtained in this manner, with the measured data. The data are atmospheric ozone measurements at a 'downstream' location in the San Joaquin Valley of California. They are modelled in terms of two 'input' ozone measurements at 'upstream' locations (upstream relative to the prevailing wind). This analysis proved particularly useful for interpreting across 'gaps' in the downstream data.

Table 2 shows the results obtained when the tanks-in-series estimation procedure was applied to 100 samples of simulated data from a second order system (m = 2). As before, the results are averages and standard errors over ten experiments.


Figure 6. Results from model of ozone concentration in San Joaquin Valley, California (model output plotted against number of samples, k).

Table 2. Tanks-in-series estimation results (m = 2; T = 0.2 sec). Columns: parameter, true value, and estimates at signal to noise ratios S = 10 and S = 30.

6. Multivariable systems with limited dimension observation space

Another example of the many possibilities opened up by the refined IV approach to time-series analysis is the case where a multivariable system has fewer observed variables than the model has output variables. In theory, the symmetric matrix gain refined IV algorithm for multivariable systems described by Jakeman and Young (1979 a) can be modified to allow for this. Of course, this is only possible if the complete model is identifiable from the limited observations. The conditions for identifiability in such situations are not the subject of the present paper. If we assume that the model is identifiable (i.e. unique estimates of all the model parameters can be obtained from the available observations), then the modifications to the symmetric gain refined IV algorithm are fairly straightforward.


Consider the following multivariable model

,

(i)

(ii)

(iii)


The nomenclature is as in Jakeman and Young (1979 a). A, B, C and D denote matrix polynomials in $z^{-1}$, and $y_k$ is the q-dimensional 'observation' vector of system output variables, where q is normally less than the dimension p of the model vector $x_k$. H is a $q \times p$ observation matrix. The refined IV algorithm in this case can be written in the following form (cf. Jakeman and Young 1979 a)

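$$\text{(i)}\quad \hat{a}_k = \hat{a}_{k-1} - \hat{P}_{k-1}\hat{X}_k^*\,[\hat{Q} + \hat{X}_k^{*T}\hat{P}_{k-1}\hat{X}_k^*]^{-1}\{Z_k^{*T}\hat{a}_{k-1} - y_k^*\}$$

$$\text{(ii)}\quad \hat{P}_k = \hat{P}_{k-1} - \hat{P}_{k-1}\hat{X}_k^*\,[\hat{Q} + \hat{X}_k^{*T}\hat{P}_{k-1}\hat{X}_k^*]^{-1}\hat{X}_k^{*T}\hat{P}_{k-1} \qquad (34)$$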

where $\hat{X}_k^*$, $Z_k^*$ and $y_k^*$ are defined in a similar manner to their counterparts in equation (21) of Part II of the paper. The differences are that the prefilters are now $D^{-1}CHA^{-1}$, and that $y_k$ is made dimension p, where necessary, by appending zeros after the qth element. The refined AML algorithm and the recursive algorithm for $\hat{Q}$ remain the same, but note that $\hat{\xi}_k = y_k - H\hat{x}_k$.

The algorithm (34) is perfectly general for multivariable systems in which the output observations are a linear combination of the system variables. It therefore includes, as a special case, the discrete state-space model with deterministic input vector $u_k$ and output measurement noise (not necessarily white), but no system noise. In this case $x_k$ is the state of the system, while

$$A(z^{-1}) = I + A_1 z^{-1} \quad \text{and} \quad B(z^{-1}) = B_1 z^{-1}$$

Furthermore, a similar algorithm consisting simply of the refined AML equations could be developed for the purely stochastic case. This is the case where (33)(i) is removed, so that the model becomes

$$\text{(i)}\quad C(z^{-1})\,\xi_k = D(z^{-1})\,e_k$$

$$\text{(ii)}\quad y_k = H\xi_k + v_k \qquad (35)$$

Here $v_k$ is a q vector of white measurement noise. This model includes, as a special case, the stochastic state-space model with system and measurement noise, which is obtained when

$$C(z^{-1}) = I + C_1 z^{-1} \quad \text{and} \quad D(z^{-1}) = D_1 z^{-1}$$

The algorithm (34) has not yet been evaluated satisfactorily. Like any multivariable estimation algorithm, it is not only computationally quite complex but also likely to be very sensitive, particularly when the data base is small. In the ordinary MIMO situation (Jakeman and Young 1979 a), for example, we have seen that the symmetric gain algorithm often fails to converge with such small samples, and it is almost certain that (34) will prove even more sensitive.


7. Stochastic approximation algorithms


It is well known that the recursive least squares and related algorithms,

such as those discussed in this series of papers, can be interpreted as special

examples of matrix-gain, multi-dimensional, stochastic approximation (SA)

procedures (Tsypkin 1971, Young 1976). It is clearly possible, therefore, to

modify any of the refined IV algorithms to form simpler, scalar gain alternatives. While such SA procedures are computationally efficient, they will

not usually possess the rapid convergence characteristics and low sample

statistical efficiency of their matrix gain equivalents. They may prove

advantageous, however, where data are plentiful but computational load must

be kept to a minimum.

In the basic SA algorithms, the matrix gain $\hat{P}_k$ is replaced by a scalar gain that obeys the conditions of Dvoretzky (see, e.g. Tsypkin 1971); typically $\sum_k \gamma_k = \infty$ with $\sum_k \gamma_k^2 < \infty$. In the discrete-time case, the best known gain sequence of this type is $\gamma_k = \gamma/k$, where $\gamma$ is a constant scalar. In other words, the gain is made a monotonically decreasing function of the recursive step number, k. In the continuous-time case, the best known example is simply the continuous-time equivalent of $\gamma_k$.

Such SA algorithms can also be modified, normally heuristically, to allow for variation in the estimated parameters. This is done by restricting the monotonic decrease in gain in some way, usually by making $\gamma_k$ or $\gamma(t)$ approach a constant $\gamma_0$ exponentially as k or t approaches infinity. The modification is based on a partial analogy with the behaviour of the $\hat{P}_k$ matrix in algorithm (8) when a RW model (9) for parameter variations is used to define $\Phi$ and $\Gamma$ (Young 1979 d).
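The two kinds of gain sequence can be compared on a toy problem. The sketch below is a scalar gain SA recursion for a hypothetical static regression $y_k = x_k^T a + e_k$, using ordinary regressors rather than the refined IV variables, with $\gamma_k = \gamma/k$. `tracking_gain` is one hypothetical way of making $\gamma_k$ approach $\gamma_0$ exponentially; it was chosen for illustration and is not taken from the paper. A constant limiting gain makes $\sum_k \gamma_k^2$ diverge, so the tracking version gives up asymptotic convergence in exchange for the ability to follow parameter changes.

```python
import random
from typing import Callable, List, Sequence


def decreasing_gain(k: int, gamma: float = 1.0) -> float:
    """gamma_k = gamma / k: the sum diverges, the sum of squares converges."""
    return gamma / k


def tracking_gain(k: int, gamma1: float = 1.0, gamma0: float = 0.02, lam: float = 0.95) -> float:
    """Hypothetical tracking gain: starts at gamma1 and decays exponentially to gamma0."""
    return gamma0 + (gamma1 - gamma0) * lam ** (k - 1)


def sa_regression(
    xs: Sequence[Sequence[float]], ys: Sequence[float], gain: Callable[[int], float]
) -> List[float]:
    """a_k = a_{k-1} + gamma_k * x_k * (y_k - x_k^T a_{k-1}); cost per step is O(n)."""
    a = [0.0] * len(xs[0])
    for k, (x, y) in enumerate(zip(xs, ys), start=1):
        err = y - sum(xi * ai for xi, ai in zip(x, a))
        g = gain(k)
        a = [ai + g * xi * err for ai, xi in zip(a, x)]
    return a


rng = random.Random(3)
a_true = [1.5, -0.5]
xs = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(50000)]
ys = [sum(xi * ai for xi, ai in zip(x, a_true)) + rng.gauss(0.0, 0.1) for x in xs]
a_hat = sa_regression(xs, ys, decreasing_gain)
```

The per-step cost is proportional to the number of parameters, which is the computational attraction noted above. Many more samples are needed than with a matrix gain recursion.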

The simple SA versions of the refined IV algorithms cannot be recommended for general application, since their rates of convergence can be intolerably low (see, e.g. Ho and Blaydon 1966). It is possible, however, to consider somewhat more complicated algorithms that compromise between the simplicity of basic SA and the complexity of the fully recursive matrix-gain algorithms. A simple example is the following modification of the refined IV algorithm given by eqn. (4)(i) in Part I of the paper (Young and Jakeman 1979 a, p. 4)

$$\hat{a}_k = \hat{a}_{k-1} - \gamma_k \hat{P}_k^{D}\,\hat{x}_k^*\,\{z_k^{*T}\hat{a}_{k-1} - y_k^*\} \qquad (36)$$

Here $\hat{P}_k^{D}$ is a $(2n+1) \times (2n+1)$ diagonal matrix with elements $(\hat{x}_{k-i}^*)^{-2}$, $i = 1, 2, \ldots, n$, and $(u_{k-j}^*)^{-2}$, $j = 0, 1, \ldots, n$, while $\gamma_k$ is an SA sequence, say $\gamma/k$. In other words, the gain matrix $\hat{P}_k$ is replaced by a purely diagonal 'approximation' $\gamma_k\hat{P}_k^{D}$. The computational burden is then proportional to n, rather than to $n^2$ as in the full refined IV algorithm.

Algorithms such as (36) seem to work reasonably well (see, e.g. Kumar and Moore 1979). As might be expected, their performance seems to fall somewhere between that of the full algorithm and that of its scalar gain equivalent. In general, however, the simpler algorithms should only be used when computational limitations require it, as for example in on-line applications using special low storage capacity microprocessors.

8. Self adaptive control

Perhaps the prime motivation for developing recursive estimation algorithms in the early nineteen sixties was their potential use in self-adaptive control. Of late, this early stimulus has been revived by the development of the 'self-tuning regulator' (STR) based on recursive least squares (RLS) estimation (e.g. Astrom and Wittenmark 1973, Clarke and Gawthrop 1975). In the STR, the noise-induced asymptotic bias in the RLS parameter estimates is cleverly neutralized by embedding the algorithm within a closed adaptive loop. This loop automatically adjusts the estimates and the control law to yield either minimum variance regulation or some other objective, such as closed loop pole assignment (Wellstead et al. 1979).

The concept of the STR can be contrasted with the earlier concept of self adaptive control by 'identification and synthesis' (SAIS). There the objective is to explicitly obtain unbiased parameter estimates and then to separately synthesize the control law using these estimates (e.g. Kalman 1958, Young 1965 b, Young 1979 b).

While the STR seems to possess good practical potential, there are certain situations where the SAIS alternative will have some advantages. For example, the stability of the adaptive loop in the STR is not easy to ensure a priori. This is because of the close integration between the recursive algorithm and the control law, and the highly non-linear nature of the resulting closed loop system. In the SAIS system, by contrast, estimation and synthesis are separated, so convergence and stability are largely a matter of ensuring that the system is identifiable under closed loop control. This will always be possible if an external command input is present that is both 'sufficiently exciting' and statistically independent of the noise in the closed loop. The requirement for such an input can, however, be problematical in the pure regulatory situation, where the STR clearly comes into its own.

In cases where the SAIS procedure seems advantageous, the refined IV algorithm provides the best currently available recursive estimation strategy. It is robust, it can be applied in continuous or discrete time, and its results can be used for either deterministic or stochastic control system design. The efficiency of such an SAIS strategy is demonstrated by the self adaptive autostabilization system described by Young (1979 b). There, the recursive estimates are used to synthesize a deterministically designed control system based on closed loop pole assignment with state variable feedback. This system achieved tight control of the simulated missile over the whole mission, including a difficult boost phase in which parameters changed rapidly, by factors of up to 30 in 5 sec.

For adaptive, stochastic control by SAIS, the present paper has shown that the refined IV approach provides an added bonus. The single IVAML algorithm yields not only estimates of the model parameters but also estimates of the state variables, which can then be used in state variable feedback control. In the discrete-time, linear case, such an SAIS system could be considered optimally adaptive, since the state variable estimates would then, as we have seen, be optimal in a Kalman sense.

9. Conclusions

This is the third of three papers which have described and comprehensively evaluated the refined IV approach to time-series analysis. In the present paper, we have seen how this approach can be extended in various important directions. It can also provide a conceptual basis for synthesizing refined IV algorithms for a wide class of stochastic dynamic systems.

This conceptual basis, which we have termed generalized equation-error (GEE) minimization, has some similarities with the alternative prediction error (PE) minimization concept. However, it tends to yield algorithms that are more robust, both computationally and to mis-specification of the noise characteristics. We feel that this robustness, which arises primarily from the errors-in-variables formulation (Johnston 1963) and the consequent IV mechanization, is an important feature of the refined IV algorithms. A more detailed discussion of this topic will appear in a forthcoming paper (Young and Jakeman 1979 c).


This paper was completed while the authors were visitors in the Control

and Management Systems Division of the Engineering Department, University

of Cambridge.

REFERENCES

ASTROM, K. J., 1970, Introduction to Stochastic Control Theory (New York: Academic Press).

ASTROM, K. J., and WITTENMARK, B., 1973, Automatica, 9, 185.

BOX, G. E. P., and JENKINS, G. M., 1970, Time Series Analysis (San Francisco: Holden Day).

CLARKE, D. W., and GAWTHROP, P. J., 1975, Proc. Instn elect. Engrs, 122, 929.

GELB, A. (ed.), 1974, Applied Optimal Estimation (Boston: MIT Press).

HO, Y. C., and BLAYDON, C., 1966, Proceedings of the N.E.C. Conference, U.S.A.

JAKEMAN, A. J., 1979, Proc. IFAC Symp. on Identification and System Parameter Estimation, Darmstadt, Federal Republic of Germany.

JAKEMAN, A. J., STEELE, L. P., and YOUNG, P. C., 1978, Rep. No. AS/R26, Centre for Resource and Environmental Studies, Australian National University; 1979, Rep. No. AS/R35.

JAKEMAN, A. J., and YOUNG, P. C., 1979 a, Int. J. Control, 29, 621; 1979 b, Rep. No. AS/R36, Centre for Resource and Environmental Studies, Australian National University; 1979 c, Rep. No. AS/R37 (submitted to Electron. Lett.).

JAZWINSKI, A. H., 1970, Stochastic Processes and Filtering Theory (New York: Academic Press).

JOHNSTON, J., 1963, Econometric Methods (New York: McGraw-Hill).

KALDOR, J., 1978, M.A. Thesis, Centre for Resource and Environmental Studies, Australian National University.

KALMAN, R. E., 1958, Trans. Am. Soc. mech. Engrs, 80-D, 468; 1960, Trans. Am. Soc. mech. Engrs, 82-D, 35.

KALMAN, R. E., and BUCY, R. S., 1961, Trans. Am. Soc. mech. Engrs, 83-D, 95.

KAYA, Y., and YAMAMURA, S., 1962, A.I.E.E. Trans. App. Ind., 80 II, 378.

KOHR, R. H., and HOBEROCK, L. L., 1966, Proc. J.A.C.C., p. 616.

KOPP, R. E., and ORFORD, R. J., 1963, AIAA J., 1, 2300.

KREISSELMEIER, G., 1977, IEEE Trans. autom. Control, 22, 2.

KUMAR, R., and MOORE, J. B., 1979, Automatica (to appear).

LEE, R. C. K., 1964, Optimal Estimation, Identification and Control (Boston: MIT Press).

LEVADI, V. S., 1964, International Conference on Microwaves, Circuit Theory and Information Theory, Tokyo.

LJUNG, L., 1976, System Identification: Advances and Case Studies, edited by R. K. Mehra and D. G. Lainiotis (New York: Academic Press).

NORTON, J., 1975, Proc. Instn elect. Engrs, 122, 663.

PHADKE, M. S., and WU, S. M., 1974, J. Am. statist. Ass., 69, 325.

PHILLIPS, A. W., 1959, Biometrika, 46, 67.

PIERCE, D. A., 1972, Biometrika, 59, 73.

SOLO, V., 1978, Rep. No. AS/R20, Centre for Resource and Environmental Studies, Australian National University.

TSYPKIN, YA. Z., 1971, Adaptation and Learning in Automatic Systems (New York: Academic Press).

WELLSTEAD, P. E., EDMUNDS, J. M., PRAGER, D., and ZANKER, P., 1979, Int. J. Control, 30, 1.

WHITEHEAD, P. G., and YOUNG, P. C., 1975, Computer Simulation of Water Resource Systems, edited by G. C. Vansteenkiste (Amsterdam: North Holland).

WHITEHEAD, P. G., YOUNG, P. C., and MITCHELL, P., 1978, Proc. I.E. Hydrology Symp., Canberra, p. 1.

YOUNG, P. C., 1964, I.E.E.E. Trans. Aerosp., 2, 1106; 1965 a, Rad. and Elect. Eng. (J. IERE), 29, 345; 1965 b, Theory of Self Adaptive Control Systems, edited by P. H. Hammond (New York: Plenum Press); 1969, Proceedings of the World Congress, Warsaw (see also Automatica, 6, 271); 1974, Bull. Inst. Math. Appl., 10, 209; 1975, Jl R. statist. Soc. B, 37, 149; 1976, Optimisation in Action, edited by L. C. W. Dixon (New York: Academic Press), 517; 1978, The Modeling of Environmental Systems, edited by G. C. Vansteenkiste (Amsterdam: North Holland); 1979 a, Proceedings of the IFAC Symp. on Identification and System Parameter Estimation, Darmstadt, Federal Republic of Germany; 1979 b, Ibid.; 1979 c, Electron. Lett., 15, 358; 1979 d, Recursive Estimation (New York: Springer).

YOUNG, P. C., and JAKEMAN, A. J., 1979 a, Int. J. Control, 29, 1; 1979 b, Proceedings of the IFAC Symposium on Computer Aided Design of Control Systems, Zurich; 1979 c, Rep. No. AS/R27, Centre for Resource and Environmental Studies, Australian National University.

YOUNG, P. C., and KALDOR, J., 1978, Rep. No. AS/R14, Centre for Resource and Environmental Studies, Australian National University.


- Experiment On Heat Transfer Through Fins Having Different NotchesДокумент4 страницыExperiment On Heat Transfer Through Fins Having Different NotcheskrantiОценок пока нет

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- T1500Z / T2500Z: Coated Cermet Grades With Brilliant Coat For Steel TurningДокумент16 страницT1500Z / T2500Z: Coated Cermet Grades With Brilliant Coat For Steel TurninghosseinОценок пока нет

- Unit-5 Shell ProgrammingДокумент11 страницUnit-5 Shell ProgrammingLinda BrownОценок пока нет

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- Computer First Term Q1 Fill in The Blanks by Choosing The Correct Options (10x1 10)Документ5 страницComputer First Term Q1 Fill in The Blanks by Choosing The Correct Options (10x1 10)Tanya HemnaniОценок пока нет

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- TEVTA Fin Pay 1 107Документ3 страницыTEVTA Fin Pay 1 107Abdul BasitОценок пока нет

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Sky ChemicalsДокумент1 страницаSky ChemicalsfishОценок пока нет

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- Catalog Celule Siemens 8DJHДокумент80 страницCatalog Celule Siemens 8DJHAlexandru HalauОценок пока нет

- Asphalt Plant Technical SpecificationsДокумент5 страницAsphalt Plant Technical SpecificationsEljoy AgsamosamОценок пока нет

- Wiley Chapter 11 Depreciation Impairments and DepletionДокумент43 страницыWiley Chapter 11 Depreciation Impairments and Depletion靳雪娇Оценок пока нет

- Job Description For QAQC EngineerДокумент2 страницыJob Description For QAQC EngineerSafriza ZaidiОценок пока нет

- Proceedings of SpieДокумент7 страницProceedings of SpieNintoku82Оценок пока нет

- Gardner Denver PZ-11revF3Документ66 страницGardner Denver PZ-11revF3Luciano GarridoОценок пока нет

- Polytropic Process1Документ4 страницыPolytropic Process1Manash SinghaОценок пока нет

- Strobostomp HD™ Owner'S Instruction Manual V1.1 En: 9V DC Regulated 85maДокумент2 страницыStrobostomp HD™ Owner'S Instruction Manual V1.1 En: 9V DC Regulated 85maShane FairchildОценок пока нет

- Embedded Systems DesignДокумент576 страницEmbedded Systems Designnad_chadi8816100% (4)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Recommended Practices For Developing An Industrial Control Systems Cybersecurity Incident Response CapabilityДокумент49 страницRecommended Practices For Developing An Industrial Control Systems Cybersecurity Incident Response CapabilityJohn DavisonОценок пока нет

- Presentation Report On Customer Relationship Management On SubwayДокумент16 страницPresentation Report On Customer Relationship Management On SubwayVikrant KumarОценок пока нет

- SBL - The Event - QuestionДокумент9 страницSBL - The Event - QuestionLucio Indiana WalazaОценок пока нет

- What Caused The Slave Trade Ruth LingardДокумент17 страницWhat Caused The Slave Trade Ruth LingardmahaОценок пока нет

- P 1 0000 06 (2000) - EngДокумент34 страницыP 1 0000 06 (2000) - EngTomas CruzОценок пока нет

- Notifier AMPS 24 AMPS 24E Addressable Power SupplyДокумент44 страницыNotifier AMPS 24 AMPS 24E Addressable Power SupplyMiguel Angel Guzman ReyesОценок пока нет

- Employees' Pension Scheme, 1995: Form No. 10 C (E.P.S)Документ4 страницыEmployees' Pension Scheme, 1995: Form No. 10 C (E.P.S)nasir ahmedОценок пока нет

- Agfa CR 85-X: Specification Fuji FCR Xg5000 Kodak CR 975Документ3 страницыAgfa CR 85-X: Specification Fuji FCR Xg5000 Kodak CR 975Youness Ben TibariОценок пока нет

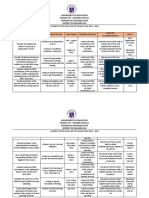

- Action Plan Lis 2021-2022Документ3 страницыAction Plan Lis 2021-2022Vervie BingalogОценок пока нет

- Guide For Overseas Applicants IRELAND PDFДокумент29 страницGuide For Overseas Applicants IRELAND PDFJasonLeeОценок пока нет

- CIR Vs PAL - ConstructionДокумент8 страницCIR Vs PAL - ConstructionEvan NervezaОценок пока нет

- XgxyДокумент22 страницыXgxyLïkïth RäjОценок пока нет

- Getting StartedДокумент45 страницGetting StartedMuhammad Owais Bilal AwanОценок пока нет