Comparative Analysis of Clustering & Enhancing Classification Using Bio-Inspired Approaches

Navpreet Rupal (1), Poonam Kataria (2)

(1, 2) Department of CSE, SUSCET, Tangori, Distt. Mohali, Punjab, India

Abstract: Data mining is a technique for discovering hidden patterns and relationships in data, using various tools and techniques, in order to make valid predictions. Clustering is the process of partitioning a set of objects or data into meaningful sub-classes called clusters; it helps users understand the structure of a data set. Classification groups the data under different classes. Bio-inspired approaches are evolutionary algorithms inspired by nature that solve hard and complex computing problems. In this work, we first form clusters from a bank data set using h-means clustering. We then carry out a comparative study of GA-, PSO- and BFO-based data clustering methods. To compare the results, we use performance parameters for classification: precision, cohesion, recall and variance. The results show that BFO yields better outputs than GA and PSO, which makes it the better optimization technique of the three for this task.

Keywords: Data Mining; Clustering; Bio-inspired approaches; GA; BFO; PSO

I. INTRODUCTION

Recent developments in science and technology have greatly increased the volume of data held in data warehouses, so finding the required information has become a cumbersome task. To address this problem, various data mining techniques have been proposed. At its simplest, data mining means extracting data from a database; to improve query results, these techniques must be efficient enough to return an optimized result. Data mining is thus a process through which data is explored for patterns and interrelationships, which has made it a powerful tool. The process of data mining is shown in Figure 1.

Fig. 1 Process of data mining

The data mining process involves various techniques and methods such as clustering, classification, association, regression and pattern matching. Classification maps each data element to one of a set of pre-determined classes based on the differences among data elements belonging to different classes. Clustering groups data elements into different groups based on the similarity between elements within a single group. Association is a rule of the form "if X then Y", denoted X -> Y. Regression uses a numerical data set to develop a best-fit mathematical formula. Pattern matching allows behaviour patterns and trends to be predicted based on the sequential rule A -> B, which implies that event A will always be followed by event B. This paper presents an overview of pattern clustering methods from a statistical pattern recognition perspective, with the goal of providing useful advice and references to fundamental concepts accessible to the broad community of clustering practitioners. Different approaches to clustering data can be described, and other representations of clustering methodology are possible. At the top level, there is a distinction between hierarchical and partitional approaches (hierarchical methods produce a nested series of partitions, while partitional methods produce only one) [8].

II. PROPOSED METHODOLOGY

As discussed earlier, data mining is a technique for discovering hidden patterns in data that support decision making. Classification is a supervised learning technique that maps each data element to one of a set of pre-determined classes based on the differences among data elements belonging to different classes. Clustering groups the data elements into different groups based on the similarity between elements within a single group.

A number of artificial intelligence techniques help data mining obtain an optimized query result. Various bio-inspired approaches (Genetic Algorithm, Particle Swarm Optimization, Bacterial Foraging Optimization) are compared on the basis of classification performance parameters (precision, recall, cohesion, variance), and the classification is enhanced accordingly. The objectives of this work are:

1. To form clusters from the data set using h-means clustering.

2. To study and implement the bio-inspired techniques GA, PSO and BFO on a bank data set.

3. To enhance the classification scheme, as evaluated through various performance parameters.

4. To compare the results of GA, PSO and BFO on the bank data set.

The proposed model focuses on the above objectives, which help improve the classification parameters, and is implemented in the MATLAB 7.11.0 environment. In this work, we use the Bacterial Foraging Optimization algorithm to enhance the classification process; it provides better results than the previous techniques. The data set chosen for the experimental simulation is a bank data set obtained from the UCI Repository of Machine Learning Databases. The work is carried out in the following stages, which proceed in parallel, as shown in Figure 2:

Figure 2: Basic Design of the System

2.1 Database used for mining

Samples

The data set chosen for the experimental simulation was obtained from the UCI Repository of Machine Learning Databases. A bank data set is used for the experimental work.

Attributes

The data set is multivariate, with 17 attributes and 45222 instances. The tested attributes are listed in Table 1. The experimental work is carried out on two attributes: age and balance.

TABLE 1: TESTED ATTRIBUTES

Att. No.   Testing Attribute
1          Age
2          Type of job
3          Marital Status
4          Educational details
5          Balance
6          Housing required
7          Loan Availed
8          Type of contact
9          Duration time
10         Pay days

III. H-MEANS CLUSTERING

The k-means algorithm performs clustering by partitioning the total population into k clusters. The convergence speed depends on the number of iterations chosen for achieving the stated clusters. The k-means+ algorithm may produce empty clusters. Lloyd's h-means+ algorithm improves on the concept of the k-means+ algorithm: it is more appropriate for finding a locally optimal solution and is faster than k-means. The h-means+ algorithm improves the cluster classification by iterating to reduce the error function. It includes a repeat-until loop that is executed until an optimal clustering with non-empty clusters is obtained. The two algorithms can be used together, in which case they are referred to as the two-phase hk-means algorithm. Whether the optimal partitioning works well depends on the randomly chosen initial partition used to form the clusters. The partitioning is subsequently optimized by minimizing the distance among the objects in a cluster, measured as the minimum sum of squared errors. The iterations can remove empty clusters found during execution. Additional clusters are added to the current partition and the computation is repeated until an optimal partition is obtained. The algorithm also searches for outliers; any outliers found are removed from their clusters. This operation splits one cluster into two parts, increasing the value of k. The algorithm also looks for overlapping clusters and, if any are found, merges them into one cluster. The execution steps of the h-means+ algorithm can be summarized as follows.

Step 1: Assign each sample randomly to one of the k clusters

Step 2: Repeat until all samples are assigned to clusters

Step 3: Compute t, the number of empty clusters

Step 4: If some of the clusters are empty, find the t samples farthest from their centers

Step 5: For each of these t samples, assign the sample to an empty cluster; end for; end if

Step 6: Compute the center of each cluster; while the clusters are not stable

Step 7: For each sample, compute the distances between the sample and all the centers

Step 8: Find the center to which the sample is closest

Step 9: Assign the sample to that cluster; end for; end while

Step 10: Re-compute all the centers until all the clusters are non-empty

The main functions of the h-means+ algorithm involve selecting clusters and determining precision, recall, cohesion and variance for 2 attributes. Subsequently, the classes are tested for positive and negative conditions.

IV. OPTIMIZATION BASED CLUSTERING

This work implements clustering using different optimization algorithms, namely the Genetic Algorithm, Bacterial Foraging Optimization and Particle Swarm Optimization. We then compare the clustering results to find the optimization algorithm with the highest percentage of accuracy.

4.1 Genetic Algorithm

Genetic algorithms are search techniques based on the mechanics of natural selection and genetics. They have been used in realms as diverse as search, optimization and machine learning, since they are not restricted by problem-specific assumptions such as continuity or unimodality. In rough terms, a genetic algorithm creates a collection of possible solutions to a specific problem. Initially the solutions are typically randomly generated, so their initial performance is normally poor. No matter how poor, some small segments of the collection will lie near the desired solution, that is, they are partially correct answers. Genetic algorithms exploit this characteristic through recombination, progressively creating better solutions, so that by the end of the run one has a solution that is at least nearly optimal [16].

The steps of a genetic algorithm for finding a solution to a given problem may be summarised as follows.

Step 1: Initialize the population of possible solutions

Step 2: Generate the chromosomes of the population randomly from 0s and 1s

Step 3: If the solution is satisfactory, terminate; otherwise go to the next step

Step 4: Compute the population fitness value

Step 5: Initialize the number of generations

Step 6: While number of generation * 2 termination condition; do

Step 7: Select all the genetic solutions which can propagate to the next generation

Step 8: Increment the number of generations

Step 9: Identify each bit in the genetic solution

Step 10: Perform the crossover operation until 50% of the bits are crossed

Step 11: end while

Step 12: If the solution is efficient, terminate; otherwise perform the mutation operation up to 0.05%

A genetic algorithm searches for the best solution within a large collection of candidate solutions to the problem being solved. Figure 3 shows the clusters formed using the Genetic Algorithm.

Figure 3: GA Clustering
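The genetic algorithm steps of Section 4.1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the MATLAB implementation used in this work: the toy fitness `one_max` (count of 1 bits), truncation selection, uniform crossover, and all parameter names and defaults are hypothetical choices. Only the 50% crossover share and the 0.05% mutation rate come from the step list.

```python
import random


def one_max(chromosome):
    """Toy fitness (an assumption for illustration): number of 1 bits."""
    return sum(chromosome)


def run_ga(n_bits=20, pop_size=30, generations=60,
           crossover_fraction=0.5, mutation_rate=0.0005, seed=0):
    rng = random.Random(seed)
    # Steps 1-2: random population of 0/1 chromosomes.
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=one_max)
    # Steps 5, 6, 8: the loop counter plays the role of the generation count.
    for _ in range(generations):
        # Step 3: stop early once a satisfactory solution exists.
        if one_max(best) == n_bits:
            break
        # Step 7: keep the fitter half as parents (truncation selection).
        population.sort(key=one_max, reverse=True)
        parents = population[:pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Step 10: uniform crossover, about half of the bits come from b.
            child = [bit_b if rng.random() < crossover_fraction else bit_a
                     for bit_a, bit_b in zip(a, b)]
            # Step 12: flip each bit with probability 0.05%.
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = parents + children
        # Track the best chromosome seen so far; it can never get worse.
        best = max(population + [best], key=one_max)
    return best, one_max(best)


if __name__ == "__main__":
    solution, fitness = run_ga()
    print(solution, fitness)
```

For clustering, the bit string would instead encode cluster assignments or centers and the fitness would score cluster quality; that encoding is not specified in the text, so it is left out here.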

4.2 Bacterial Foraging Optimization

Bacterial Foraging Optimization is a type of evolutionary algorithm that evaluates a cost function after each iterative step of the program and progressively arrives at a better fitness. The parameters to be optimized represent the coordinates of the bacteria. The bacteria are positioned at different coordinates. At each step, the bacteria move to a new point, and the cost function is calculated at each position. Based on this value, the further movement of the bacteria is decided in the direction of decreasing cost, which finally leads to the position with the highest fitness. The foraging strategy of E. coli is governed by four processes: chemotaxis, swarming, reproduction, and elimination and dispersal. When a bacterium meets a favourable environment, it continues swimming in the same direction. An increase in the cost function represents an unfavourable environment, in which case the bacterium tumbles. In swarming, the bacteria move out from their respective places in a ring of cells, bringing the mean square to a minimum value [24]. Figure 4 shows BFO clustering.

Figure 4: BFO Clustering
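The chemotaxis, reproduction, and elimination and dispersal processes described in Section 4.2 can be illustrated with a simplified Python sketch. It rests on several assumptions: the cost function `sphere`, every parameter name and default, and the rule that a bacterium only moves when the cost decreases are hypothetical simplifications, and the swarming (cell-to-cell attraction) term is omitted altogether. It should be read as a sketch of the idea rather than the implementation evaluated in this paper.

```python
import math
import random


def sphere(x):
    """Toy cost function (assumed for illustration): sum of squares."""
    return sum(v * v for v in x)


def random_direction(rng, dim):
    """Random unit vector, used as the tumble direction."""
    d = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(v * v for v in d)) or 1.0
    return [v / norm for v in d]


def run_bfo(cost=sphere, dim=2, n_bacteria=10, chem_steps=20, swim_len=4,
            repro_steps=4, elim_steps=2, p_elim=0.25, step=0.1,
            bound=5.0, seed=0):
    rng = random.Random(seed)

    def new_position():
        return [rng.uniform(-bound, bound) for _ in range(dim)]

    bacteria = [new_position() for _ in range(n_bacteria)]
    best = list(min(bacteria, key=cost))
    for _ in range(elim_steps):
        for _ in range(repro_steps):
            health = [0.0] * n_bacteria  # accumulated cost; lower is healthier
            for _ in range(chem_steps):
                for i in range(n_bacteria):
                    # Tumble: pick a random direction.
                    direction = random_direction(rng, dim)
                    current = cost(bacteria[i])
                    # Swim: keep moving while the cost keeps decreasing.
                    for _ in range(swim_len):
                        trial = [p + step * d
                                 for p, d in zip(bacteria[i], direction)]
                        trial_cost = cost(trial)
                        if trial_cost >= current:
                            break
                        bacteria[i], current = trial, trial_cost
                    health[i] += current
                    if current < cost(best):
                        best = list(bacteria[i])
            # Reproduction: the healthier half splits, the rest die.
            order = sorted(range(n_bacteria), key=lambda i: health[i])
            survivors = [bacteria[i] for i in order[:n_bacteria // 2]]
            bacteria = [list(b) for b in survivors + survivors]
        # Elimination and dispersal: relocate some bacteria at random.
        bacteria = [new_position() if rng.random() < p_elim else b
                    for b in bacteria]
    return best, cost(best)


if __name__ == "__main__":
    position, value = run_bfo()
    print(position, value)
```

The sketch assumes an even `n_bacteria` so that the reproduction step keeps the population size constant.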

4.3 Particle Swarm Optimization

PSO is a computational method that optimizes a problem by iteratively trying to improve a candidate solution with respect to a given measure of quality. In PSO, each single solution is a "bird" in the search space, called a particle. All particles have fitness values, which are evaluated by the fitness function to be optimized. Each particle has a velocity and a position. At each iteration, every particle is updated using two best values: pbest (the best fitness the particle has achieved so far) and gbest (the best value obtained by any particle). PSO combines local search and global search to balance exploration and exploitation. Figure 5 shows the clusters formed using the Particle Swarm Optimization algorithm.

Figure 5: PSO Clustering
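The pbest/gbest update described in Section 4.3 can be sketched as below. The inertia weight `w`, the acceleration coefficients `c1` and `c2`, the toy cost function `sphere` and the remaining parameter names are assumptions taken from the commonly used form of PSO; the paper does not state the settings used in its experiments.

```python
import random


def sphere(x):
    """Toy cost function (assumed for illustration): sum of squares."""
    return sum(v * v for v in x)


def run_pso(cost=sphere, dim=2, n_particles=15, iterations=100,
            w=0.7, c1=1.5, c2=1.5, bound=5.0, seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    # pbest: best position each particle has visited so far.
    pbest = [list(p) for p in pos]
    pbest_cost = [cost(p) for p in pos]
    # gbest: best position found by any particle.
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = list(pbest[g]), pbest_cost[g]
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity mixes inertia, pull toward pbest, pull toward gbest.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = list(pos[i]), c
                if c < gbest_cost:
                    gbest, gbest_cost = list(pos[i]), c
    return gbest, gbest_cost


if __name__ == "__main__":
    position, value = run_pso()
    print(position, value)
```

The pull toward pbest is the local (exploitation) component and the pull toward gbest is the global component, which is how this formulation balances the two kinds of search mentioned above.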

V. RESULTS ANALYSIS BASED ON PARAMETERS

A. Implementation of BFO

Figure 6 shows the implementation of BFO on the bank data set.

B. Performance Parameters for Classification

A performance metric measures how well a data mining algorithm performs on a given data set. For example, if we apply a classification algorithm to a data set, we first check how many of the data points were classified correctly. The parameters used for performance evaluation are as follows:

1. Precision: Also known as positive predictive value, it is the fraction of retrieved instances that are relevant.

2. Recall: The fraction of relevant instances that are retrieved (e.g. of all the documents on the internet that are relevant to a certain topic, the share that is actually returned).

3. Cohesion: It measures how closely the objects in the same cluster are related.

4. Variance: It measures how distinct or well separated the clusters are from each other.
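Precision and recall, as defined above, can be computed from the set of retrieved (predicted positive) items and the set of relevant (actually positive) items. The following Python sketch is illustrative only; the function name `precision_recall` and the example item IDs are hypothetical, and cohesion and variance are not sketched because the text gives no formula for them.

```python
def precision_recall(predicted_positive, actual_positive):
    """Return (precision, recall) for two collections of item IDs."""
    predicted = set(predicted_positive)
    actual = set(actual_positive)
    true_positive = len(predicted & actual)
    # Precision: share of retrieved items that are relevant.
    precision = true_positive / len(predicted) if predicted else 0.0
    # Recall: share of relevant items that were retrieved.
    recall = true_positive / len(actual) if actual else 0.0
    return precision, recall


if __name__ == "__main__":
    # Items 2 and 3 are both retrieved and relevant:
    # precision = 2/4 = 0.5, recall = 2/3.
    p, r = precision_recall({1, 2, 3, 4}, {2, 3, 5})
    print(p, r)
```

Returning 0.0 when a set is empty is a convention chosen here to avoid division by zero.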

C. Results

Figure 6: BFO clustering

The clusters formed from the data set are shown in Figure 7. In this graph the clusters appear as circles, and the data points belonging to the same cluster are shown in the same colour.

Figure 7: Cluster formation

The precision versus recall graph for PSO, GA and BFO is shown in Figure 8. In the figure, the x-axis represents recall and the y-axis represents precision. BFO has the highest precision at low recall values, while PSO has the highest precision at high recall values. The Genetic Algorithm performs worse than both BFO and PSO.

Figure 8: Graph for precision vs recall

In Figure 9, the x-axis represents precision and the y-axis represents cut-off similarity. The graph shows that precision increases with similarity. BFO has the highest precision, followed by PSO and GA.

Figure 9: Precision with Cut-off Similarity Using GA, PSO & BFO

In Figure 10, precision using PSO and BFO is examined in closer detail. The graph indicates that BFO is better; recall increases as precision increases.

Figure 10: Precision Using PSO & BFO

In Figure 11, the graph shows recall with cut-off similarity using GA and BFO. The graph indicates that BFO is better; the cut-off increases as recall decreases.

Figure 11: Recall with Cut-off Similarity

Comparison of Evaluation Parameters

For the purpose of analysis, the values of cohesion, variance, precision and recall calculated on the bank data set using GA, PSO and BFO are tabulated in Table 2.

TABLE 2: COMPARISON OF GA, PSO, BFO ON THE BASIS OF EVALUATION PARAMETERS

Parameters   GA      PSO     BFO
Cohesion     .3079   .6850   .7120
Variance     .3910   .2910   .1634
Precision    .20     .32     .41
Recall       .29     .31     .40

Cohesion     .4008   .6221   .7890
Variance     .2450   .2301   .2010
Precision    .32     .36     .39
Recall       .26     .34     .46

Cohesion     .5087   .8012   .8490
Variance     .4960   .3810   .2816
Precision    .49     .52     .64
Recall       .31     .35     .40

VI. CONCLUSION & FUTURE WORK

The paper presents the enhancement of a classification scheme using various bio-inspired approaches. In this work, three algorithms (GA, PSO and BFO) are implemented on a bank data set taken from the UCI Machine Learning Repository. For each algorithm, the performance parameters cohesion, variance, precision and recall are calculated. It can be concluded that cohesion, recall and precision on the data set are higher for Bacterial Foraging Optimization than for the Genetic Algorithm and Particle Swarm Optimization, while variance is lower for BFO. As seen in this work, BFO has been implemented successfully and compares favourably with GA and PSO.

In the present work we have implemented BFO-based classification successfully using data from the UCI Repository of Machine Learning Databases. If more than two optimization algorithms were used together on large data sets for classification, the results could be better than with a single optimization algorithm. Future work could therefore move in the direction of hybrid systems that use more than one algorithm together.

REFERENCES

[1] Lyman, P., and Hal R. Varian, "How much storage is enough?" Storage, 1:4 (2003).

[2] Way, Jay, and E. A. Smith, "Evolution of Synthetic Aperture Radar Systems and Their Progression to the EOS SAR," IEEE Trans. Geoscience and Remote Sensing, 29:6 (1991), pp. 962-985.

[3] Usama, M. Fayyad, "Data-Mining and Knowledge Discovery: Making Sense Out of Data," Microsoft Research, IEEE Expert, 11:5 (1996), pp. 20-25.

[4] Berson, A., K. Thearling, and J. Stephen, Building Data Mining Applications for CRM, USA, McGraw-Hill (1999).

[5] Berry, Michael J. A. et al., Data-Mining Techniques for Marketing, Sales and Customer Support. U.S.A: John Wiley and Sons (1997).

[6] Weiss, Sholom M. et al., Predictive Data-Mining: A Practical Guide. San Francisco, Morgan Kaufmann (1998).

[7] Giudici, P., Applied Data-Mining: Statistical Methods for Business and Industry. West Sussex, England: John Wiley and Sons (2003).

[8] A. K. Jain, M. N. Murty and P. J. Flynn, "Data Clustering," ACM Computing Surveys, Vol. 31, No. 3, September 1999.

[9] Enrico Minack, Gianluca Demartini, and Wolfgang Nejdl, "Current Approaches to Search Result Diversification," L3S Research Center, Leibniz Universität Hannover, 30167 Hannover, Germany.

[10] Basheer M. Al-Maqaleh and Hamid Shahbazkia, "A Genetic Algorithm for Discovering Classification Rules in Data Mining," International Journal of Computer Applications (0975-8887), Volume 41, No. 18, March 2012.

[11] Vivekanandan, P., and Dr. R. Nedunchezhian, "A New Incremental Genetic Algorithm Based Classification Model to Mine Data with Concept Drift," Journal of Theoretical and Applied Information Technology.

[12] Andy Tsai, William M. Wells, Simon K. Warfield, and Alan S. Willsky, "An EM algorithm for shape classification based on level sets," Medical Image Analysis, Elsevier, pp. 491-502.

[13] Kamal Nigam, Andrew Kachites McCallum, Sebastian Thrun, and Tom Mitchell, "Text Classification from Labeled and Unlabeled Documents using EM," Machine Learning, pp. 1-34.

[14] Bhanumathi, S., and Sakthivel., "A New Model for Privacy Preserving Multiparty Collaborative Data Mining," 2013 International Conference on Circuits, Power and Computing Technologies [ICCPCT-2013].

[15] Ciubancan, Mihai, et al., "Data Mining preprocessing using GRID technologies," 2013 11th RoEduNet International Conference (RoEduNet), pp. 1-3.

[16] Velu, C.M., and Kashwan, K.R., "Visual Data Mining Techniques for Classification of Diabetic Patients," 3rd International Advance Computing Conference (IACC), pp. 1070-1075, 2013 IEEE.

[17] Ming-Hsien Hiesh; Lam, Y.-Y.A.; Chia-Ping Shen; and Wei Chen, "Classification of schizophrenia using Genetic Algorithm-Support Vector Machine (GA-SVM)," 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 6047-6050.

[18] Feng-Seng Lin; Chia-Ping Shen; Hsiao-Ya Sung; and Yan-Yu Lam, "A High performance cloud computing platform for mRNA analysis," 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 1510-1513.

[19] Saraswathi, S.; Mahanand, B.S.; Kloczkowski, A.; and Suresh, S., "Detection of onset of Alzheimer's disease from MRI images using a GA-ELM-PSO classifier," 2013 IEEE Fourth International Workshop on Computational Intelligence in Medical Imaging (CIMI), pp. 42-48.

[20] Relan, D.; MacGillivray, T.; Ballerini, L.; and Trucco, E., "Retinal vessel classification: Sorting arteries and veins," 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 7396-7399.

[21] Samdin, S.B.; Chee-Ming Ting; Salleh, S.-H.; and Ariff, A.K., "Linear dynamic models for classification of single-trial EEG," 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 4827-4830.

[22] Vargas Cardona, H.D.; Orozco, A.A.; and Alvarez, M.A., "Unsupervised learning applied in MER and ECG signals through Gaussians mixtures with the Expectation-Maximization algorithm and Variational Bayesian Inference," 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 4326-4329.

[23] Keshavamurthy B. N, Asad Mohammed Khan & Durga Toshniwal, "Improved Genetic Algorithm Based Classification," International Journal of Computer Science and Informatics (IJCSI), Volume-1, Issue-3.

[24] Sharma Vipul, S S Pattnaik and Tanuj Garg (2012), "A Review of Bacterial Foraging Optimization and Its Applications," IJCA Proceedings on National Conference on Future Aspects of Artificial intelligence in Industrial Automation 2012, NCFAAIIA(1), pp. 9-12, Foundation of Computer Science, New York, USA.

Вам также может понравиться

- Develop Strategies To Manage Conflict in An: OrganizationДокумент10 страницDevelop Strategies To Manage Conflict in An: OrganizationPoonam KatariaОценок пока нет

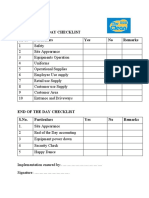

- Quality Assurance Checklist at The Clean: Exterior AppearanceДокумент2 страницыQuality Assurance Checklist at The Clean: Exterior AppearancePoonam KatariaОценок пока нет

- Start of The Day Checklist S.No. Particulars Yes No RemarksДокумент1 страницаStart of The Day Checklist S.No. Particulars Yes No RemarksPoonam KatariaОценок пока нет

- DHSM-303 Managing Service Delivery in Health SectorДокумент13 страницDHSM-303 Managing Service Delivery in Health SectorPoonam KatariaОценок пока нет

- Staff Training Plan: Not Trained Trained Re-TrainedДокумент1 страницаStaff Training Plan: Not Trained Trained Re-TrainedPoonam KatariaОценок пока нет

- Leading and Managing Project: Name - Ramandeep Singh STUDENT - 102886Документ11 страницLeading and Managing Project: Name - Ramandeep Singh STUDENT - 102886Poonam KatariaОценок пока нет

- Abd 702 Creating and Managing Competitive Advantage: Submitted by Student ID Submitted ToДокумент21 страницаAbd 702 Creating and Managing Competitive Advantage: Submitted by Student ID Submitted ToPoonam KatariaОценок пока нет

- Submitted in Partial Fulfillment of The Requirement For The Award of The Degree of Master of Technology IN (Computer Science & Engineering)Документ35 страницSubmitted in Partial Fulfillment of The Requirement For The Award of The Degree of Master of Technology IN (Computer Science & Engineering)Poonam KatariaОценок пока нет

- The CleanДокумент41 страницаThe CleanPoonam KatariaОценок пока нет

- BurnsДокумент3 страницыBurnsPoonam KatariaОценок пока нет

- Mining Spatial Data & Enhancing Classification Using Bio - Inspired ApproachesДокумент7 страницMining Spatial Data & Enhancing Classification Using Bio - Inspired ApproachesPoonam KatariaОценок пока нет

- Assignment 1Документ6 страницAssignment 1Poonam KatariaОценок пока нет

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5795)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- Particle Swarm Optimization With Gaussian Mutation: Higashi@miv.t.u-Tokyo - Ac.jpДокумент8 страницParticle Swarm Optimization With Gaussian Mutation: Higashi@miv.t.u-Tokyo - Ac.jpYacer Hadadi RadwanОценок пока нет

- (Use R!) Paulo Cortez (Auth.) - Modern Optimization With R-Springer International Publishing (2014) PDFДокумент182 страницы(Use R!) Paulo Cortez (Auth.) - Modern Optimization With R-Springer International Publishing (2014) PDFJohn ArfОценок пока нет

- Journal-1 (Literature Survey)Документ19 страницJournal-1 (Literature Survey)AjinkyaA.Wankhede100% (1)

- Bat Algorithm Literature Review and Appl PDFДокумент10 страницBat Algorithm Literature Review and Appl PDFMushaer AhmedОценок пока нет

- Hybrid Prediction-Optimization Approaches For Maximizing Parts Density in SLM of Ti6Al4V Titanium AlloyДокумент23 страницыHybrid Prediction-Optimization Approaches For Maximizing Parts Density in SLM of Ti6Al4V Titanium AlloyAbdul KhaladОценок пока нет

- Performance Evaluation of Task Scheduling Using HybridMeta-heuristicin HeterogeneousCloudEnvironmentДокумент10 страницPerformance Evaluation of Task Scheduling Using HybridMeta-heuristicin HeterogeneousCloudEnvironmentArunvinodh ChellathuraiОценок пока нет

- Antenna Design Explorer 1.0 User's GuideДокумент39 страницAntenna Design Explorer 1.0 User's GuideNabil Dakhli0% (1)

- Load Balancing by Dynamic BBU-RRH Mapping in A Self-Optimised Cloud Radio Access NetworkДокумент5 страницLoad Balancing by Dynamic BBU-RRH Mapping in A Self-Optimised Cloud Radio Access NetworksayondeepОценок пока нет

- Real Estate Price Prediction ModelДокумент3 страницыReal Estate Price Prediction ModelSenthiltk162778Оценок пока нет

- Capicitor PlacementДокумент5 страницCapicitor PlacementSharad PatelОценок пока нет

- Hybrid Particle Swarm Optimization Approach For Solving The Discrete OPF Problem Considering The Valve Loading EffectsДокумент9 страницHybrid Particle Swarm Optimization Approach For Solving The Discrete OPF Problem Considering The Valve Loading EffectsjjithendranathОценок пока нет

- Modified Biogeography Based Optimization Algorithm For Power Dispatch Using Max/Max Price Penalty FactorДокумент6 страницModified Biogeography Based Optimization Algorithm For Power Dispatch Using Max/Max Price Penalty FactorKS SanОценок пока нет

- PSO - Kota Baru Parahyangan 2017Документ52 страницыPSO - Kota Baru Parahyangan 2017IwanОценок пока нет

- Pricing of Reactive Power Service in Deregulated Electricity Markets Based On Particle Swarm OptimizationДокумент4 страницыPricing of Reactive Power Service in Deregulated Electricity Markets Based On Particle Swarm OptimizationSushil ChauhanОценок пока нет

- 38 - Factor Analysis and Forecasting of CO2 Emissions in Hebei, UsingДокумент23 страницы38 - Factor Analysis and Forecasting of CO2 Emissions in Hebei, UsingTheRealCodiciaОценок пока нет

- Jha 2017Документ21 страницаJha 2017bepol88Оценок пока нет

- A Hunger Game Search Algorithm For Economic Load DispatchДокумент9 страницA Hunger Game Search Algorithm For Economic Load DispatchIAES IJAIОценок пока нет

- Performance Comparison of Evolutionary AlgorithmsДокумент6 страницPerformance Comparison of Evolutionary AlgorithmsAdin AhmetovicОценок пока нет

- Solving Sudoku Using Particle Swarm Optimization On CUDAДокумент6 страницSolving Sudoku Using Particle Swarm Optimization On CUDAyahyagrich5Оценок пока нет

- Deep Learning Algorithm For Arrhythmia Detection: Hilmy Assodiky, Iwan Syarif, Tessy BadriyahДокумент7 страницDeep Learning Algorithm For Arrhythmia Detection: Hilmy Assodiky, Iwan Syarif, Tessy BadriyahKani MozhiОценок пока нет

- Literature Survey: 2.1 Review On Machine Learning Techniques For Stock Price PredictionДокумент15 страницLiterature Survey: 2.1 Review On Machine Learning Techniques For Stock Price Predictionritesh sinhaОценок пока нет

- Airfoil Optimisation by Swarm Algorithm With Mutation and Artificial Neural NetworksДокумент20 страницAirfoil Optimisation by Swarm Algorithm With Mutation and Artificial Neural NetworksJaume TriayОценок пока нет

- Gantry Crane SystemДокумент17 страницGantry Crane SystemTarmizi KembaliОценок пока нет

- Smoothing Wind Power Fluctuations by Particle Swarm Optimization Based Pitch Angle ControllerДокумент17 страницSmoothing Wind Power Fluctuations by Particle Swarm Optimization Based Pitch Angle ControllerMouna Ben SmidaОценок пока нет

- CS8461 - Oslabmanual - ProcessДокумент65 страницCS8461 - Oslabmanual - Processsksharini67Оценок пока нет

- A Multiobjective Diet Planning Model For Diabetic Patients in The Moroccan Health Context Using Particle Swarm Intelligence RedДокумент11 страницA Multiobjective Diet Planning Model For Diabetic Patients in The Moroccan Health Context Using Particle Swarm Intelligence Redabdellah.ahouragОценок пока нет

- A Generic Particle Swarm Optimization Matlab FunctionДокумент6 страницA Generic Particle Swarm Optimization Matlab FunctionMühtür HerhaldeОценок пока нет

- 1378Документ28 страниц1378Kean PagnaОценок пока нет

- Optimal Power Flow 2014-TLBOДокумент11 страницOptimal Power Flow 2014-TLBOAnkur MaheshwariОценок пока нет

- Ajith Abraham Swagatam Das Computational Intelligence in Power Engineering Studies in Computational Intelligence 302 2010Документ389 страницAjith Abraham Swagatam Das Computational Intelligence in Power Engineering Studies in Computational Intelligence 302 2010Jose Fco. Aleman ArriagaОценок пока нет