Journal of Information Technology and Application in Education Vol. 3 Iss. 2, June 2014
www.jitae.org
doi: 10.14355/jitae.2014.0302.02

Quality Development of Learning Objects: Comparison, Adaptation and Analysis of Learning Object Evaluation Frameworks for Online Courses: E-Learning Center of an Iranian University Case Study

Peyman Akhavan*1, Majid Feyz Arefi2

Department of Management and Soft Technologies, Malek Ashtar University of Technology, Iran, Tehran

*1akhavan@iust.ac.ir; 2majidfeyzarefi@gmail.com

Received 10 July 2013; Revised 23 December 2013; Accepted 4 March 2014; Published 27 May 2014

© 2014 Science and Engineering Publishing Company

Abstract

The purpose of the present study is to investigate and compare evaluation frameworks for learning objects and to derive the most important indices. The frameworks are then compared, mapped onto one another and analyzed. Once the most important indices had been identified, the e-content of two lessons from M.Sc. courses at the e-learning center of an Iranian university was evaluated. The research follows an analytic literature-review approach, moving from theoretical bases to practical application of the theory; a two-round Delphi technique was then used so that experts could identify the important criteria and their significance. The study aims to show the important scales for evaluating learning objects, which play the most important role in the e-content of e-lessons, and to use a case study to detect weak points and improvable aspects. The findings show that most previous studies have focused on the quality of the objects and on the correspondence between e-learning standards and the formulation of objects, and that there has not been sufficient study of the strategic and planning aspects of learning, which play a crucial role in learning quality and in the effectiveness of the e-content of e-lessons. In addition, in these studies the classification of the scales is not based on specified aspects of learning object evaluation. The present study answers the following questions: Which factors affect the design and formulation of high-quality learning objects? Which aspects must one pay attention to in order to design the e-content of e-lessons effectively? Which scales of learning object evaluation are most frequently referred to in previous studies? Which aspects are improvable and challenging but have not received enough attention?

Keywords

E-learning; E-content; Learning Object; Learning Object Evaluation; E-learning Standards; Instructional Design

Introduction

E-learning was introduced into the education field along with information technology as a highly functional term, and it has been treated as a long-term plan requiring huge investment in educational centers, especially in the universities of many countries (Triantafillou, et al. 2002; Kuo, 2012). Many universities and educational institutes around the world have been designed from the beginning to provide e-learning in order to respond to increasing educational demands. Betts reports that in many developed countries the growth in applications to e-learning courses is many times higher than total higher education growth (Betts, 2009).

The essential capability of e-learning is more than access to information; its transactional and communicative abilities are its base. The purpose of quality e-learning is to combine variety and solidarity in an active learning environment that is mentally challenging. The level of these transactions goes beyond a one-sided transmission of content and extends our range of thought with respect to the relations between the participants in the learning process (Grison and Anderson, 2003; Bonner and Lee, 2012).

E-Learning Elements

The first group of e-learning elements are those that can be identified physically: they exist physically, or at least electronically. These elements are learning files, management software and information banks. The second group are conceptual elements, such as courses and lessons, which must be understood completely for any discussion of e-learning (Fallon and Brown, 2003).

Learning Objects and E-content

A learning object (LO) is the smallest part of the content that can itself be a unit of learning or meaning. The size of an LO can vary, but an LO performs best when it has one specific learning aim. An LO must be meaningful and independent of other content; in other words, it must not depend on other parts of the learning content to be complete. This means that each LO can be used in several lessons or courses (Fallon and Brown, 2003).

The various approaches to LOs attempt to meet two common objectives:

To reduce the overall costs of LOs.

To obtain better LOs (Wiley, 2003).

LOs can be regarded as the elements of e-learning. As long as they conform to the same standards, any combination of them can be used, provided that they match each other. By combining LOs, one can form bigger parts of the learning content, such as topics, lessons or whole courses (Fallon and Brown, 2003).

Knowledge element, learning source, online materials and learning components are all terms used in the same sense as LO (Krauss and Ally, 2005).

E-learning Standards

Aviation was one of the first industries to adopt computer-based learning on a large scale, in the early 1980s. The AICC was founded in 1988 (Fallon and Brown, 2003). In 1997, the US Department of Defense founded the Advanced Distributed Learning (ADL) Initiative. Its primary mission is to develop and promote learning methods for the US military, but the results have many applications in other public and private sectors. ADL published the first edition of SCORM in 1999 (Fallon and Brown, 2003).

The aforesaid standards offer proposals for both conceptual design and performance. Some of them, such as AICC and IMS, focus more on defining technical specifications, while others, such as SCORM and AICC, are reference models of performance (Triantafillou, et al. 2002). Dublin Core and ARIADNE can be mentioned as further standards institutions. The first was established to offer metadata standards. It presented a model of fifteen elements supporting the online storage and retrieval of public resources. It differed from previous resources in these respects: it is simple, and it offers balance, universal acceptance and capacity for development. ARIADNE has been active since 1996. Its focus is on providing tools and protocols that support the production, storage, delivery and reuse of learning courses (Fallon and Brown, 2003).

Literature Analysis

Technical LO Evaluation

LO evaluation is a rather new concern [5, 6]. The growth in the number of LOs and authors, in design variety, and in the accessibility of LOs to trained and untrained educators has led to interest in how to evaluate LOs and which scales are appropriate for judging their quality and profitability (Haughey and Muirhead, 2005).

To evaluate learning objects, criteria for judging them need to be developed (Kurilovas and Dagiene, 2009).

Vargo et al. (2002) developed the Learning Object Review Instrument (LORI) for evaluating learning objects. Version 1.3 of LORI uses 10 criteria to evaluate learning objects: 1) Presentation: aesthetics; 2) Presentation: design for learning; 3) Accuracy of content; 4) Support for learning goals; 5) Motivation; 6) Interaction: usability; 7) Interaction: feedback and adaptation; 8) Reusability; 9) Metadata and interoperability compliance; 10) Accessibility (Vargo, et al. 2003). The 10 criteria were derived from a review of the literature on instructional design, computer science, multimedia development and educational psychology (Kurilovas and Dagiene, 2009). Each measure was weighted equally and was rated on a four-point scale from "weak" to "moderate" to "strong" to "perfect" (Vargo, et al. 2003).

In the same year, Belfer et al. presented version 1.4 of LORI, with 8 criteria: content quality, learning goal alignment, feedback and adaptation, motivation, presentation design, interaction usability, reusability, and value of accompanying instructor guide (Belfer, et al. 2002).

Nesbit et al. in 2004 proposed version 1.5 of LORI, which has nine criteria: content quality, learning goal alignment, feedback and adaptation, motivation, presentation design, interaction usability, accessibility, reusability and standards compliance (Leacock and Nesbit, 2007).

Krauss and Ally published a paper in 2005 that was based on LORI. The aim of the study was to identify the challenges and problems that instructional designers face in designing a learning object and evaluating its effectiveness. The paper presents a framework of eight criteria for evaluating learning objects: content quality, learning goal alignment, feedback and adaptation, motivation, presentation design, interaction usability, reusability, and student/instructor guides (Krauss and Ally, 2005).

Susan Smith Nash's article "Learning objects, learning object repositories, and learning theory: Preliminary best practices for online courses", published in 2005, examines current practices with learning objects and studies best practices. By combining theories of learning, it proposes a new approach to improve them. The paper presents factors for determining the usability of learning objects: relevance, usability, cultural appropriateness, infrastructure support, redundancy of access, size of object, and relation to the infrastructure/delivery (Nash, 2005).

Nicole Buzzetto-More and Kaye Pinhey in 2006 presented an article entitled "Guidelines and standards for the development of fully online learning objects", which introduced 18 qualitative criteria for evaluating the learning objects of online courses at the University of Maryland Eastern Shore. These criteria are: Prerequisites, Technology requirements, Objectives and outcomes, Activities support learning, Assessment, A variety of tools enhance interaction, Course materials, Student support, Frequent and timely feedback, Appropriate pacing, Expectations for student discussion/chat participation, Grading, Course content, Navigation, Display, Multimedia (if appropriate), Time devoted, and Reusability (Buzzetto-More and Pinhey, 2006).

In June 2008, the Ministry of Education and Science of Lithuania set out criteria for the technical evaluation of learning objects used in computer-aided teaching, in an assessment tool called the Lithuanian learning objects evaluation tool. These criteria are: 1) Methodical aspects. 2) User interface. 4) LOs arrangement possibilities. 5) Communication and collaboration possibilities and tools. 6) Technical features. 7) Documentation. 8) Implementation and maintenance expenditure (Kubilinskiene and Kurilovas, 2008).

Paulsson and Naeve in 2006 suggested six action areas for establishing technical quality criteria for learning objects: 1) a narrow definition; 2) a mapping taxonomy; 3) more extensive standards; 4) best practice for use of existing standards; 5) architecture models; 6) the separation of pedagogy from the supporting technology of LOs. Most evaluations of learning objects have not taken this view. The model focuses on technical quality criteria for learning objects; other quality criteria, such as pedagogical quality, usability or functional quality, fall outside its scope. Some aspects of quality are addressed by Van Assche and Vuorikari (2006), who suggested a quality framework for the whole life cycle of learning objects (Paulsson and Naeve, 2006).

The MELT content audit in 2007 included an in-depth examination of the project partners' existing content quality guidelines. It proposed a checklist to help them decide which content from their repositories should be made available for enrichment in the project. The checklist is divided into five categories: pedagogical, usability, reusability, accessibility and production. The list is by no means exhaustive, and not all of the criteria can always be applied to all learning objects. The MELT project partners seek to provide access to learning content that meets nationally recognized quality criteria (MELT, 2007).

The Southern Regional Education Board (SREB), with the aim of improving the quality of its training programs, provided a checklist for learning object evaluation in 2007. It has 10 criteria: 1) Content quality; 2) Learning goal alignment; 3) Feedback; 4) Motivation; 5) Presentation design; 6) Interface usability; 7) Accessibility; 8) Reusability; 9) Standards compliance; 10) Intellectual property and copyright (SREB-SCORE, 2007).

Tele-University of Quebec in 2007, in order to improve the effectiveness, efficiency and flexibility of learning objects, implemented a quality assurance strategy called Quality for Reuse (Q4R), drawing on the scientific projects started at the university as well as on proper storage and retrieval strategies. The strategies are organized into four main groups: organizational strategies, and then three groups of strategies inspired by the life cycle of an LO, that is, from its conception to its use/reuse (adaptations) (Q4R, 2007).
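The LORI 1.3 scoring rule described in the literature review (ten equally weighted criteria, each rated on a four-point scale from weak to perfect) can be sketched in a few lines of Python. This is only an illustrative sketch: the numeric mapping of the four labels to 1 through 4, the function name `lori_score` and the sample ratings are assumptions made for the example and are not taken from Vargo et al.

```python
# Illustrative sketch of equal-weight scoring on a four-point scale.
# The 1-4 mapping and the sample ratings are assumptions for this example.
SCALE = {"weak": 1, "moderate": 2, "strong": 3, "perfect": 4}

LORI_1_3_CRITERIA = [
    "Presentation: aesthetics",
    "Presentation: design for learning",
    "Accuracy of content",
    "Support for learning goals",
    "Motivation",
    "Interaction: usability",
    "Interaction: feedback and adaptation",
    "Reusability",
    "Metadata and interoperability compliance",
    "Accessibility",
]


def lori_score(ratings):
    """Return the equally weighted mean rating over all ten criteria."""
    missing = [c for c in LORI_1_3_CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"unrated criteria: {missing}")
    values = [SCALE[ratings[c]] for c in LORI_1_3_CRITERIA]
    return sum(values) / len(values)


# Hypothetical ratings for one learning object.
sample = {c: "strong" for c in LORI_1_3_CRITERIA}
sample["Accessibility"] = "weak"
sample["Motivation"] = "perfect"
print(lori_score(sample))
```

Because every criterion carries the same weight, a single weak rating lowers the overall score by the same amount regardless of which criterion it falls on; a weighted variant would need weights that the source does not provide.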

www.jitae.orgJournalofInformationTechnologyandApplicationinEducationVol.3Iss.2,June2014

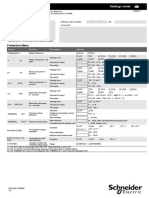

TABLE1LOSEVALUATIONFRAMEWORKSANDECONTENT

The study of Kurilovas and Dagiene in 2009 with the

combinationframework(Vargoetal,2003),(Paulsson

and Naeve, 2006), (MELT, 2007), (Q4R, 2007) and

studies in 2007 (Kurilovas, 2007), proposed the

original set of LO evaluation criteria, and it is called

Recommended learning objects technical evaluation

tool. This tool includes LO technical evaluation

criteria suitable for different LO lifecycle stages.

Thesecriteriaare:

The first (before LO inclusion in the LOR): Narrow

definition

compliance,

Reusability

level:

interoperabilitydecontextualisationlevelcultural/

learning diversity principles Accessibility LO

Architecture, working stability, design and usability.

The second (during LO inclusion in the LOR):

Membership or contribution control strategies,

technicalinteroperability.Thethird(afterLOinclusion

intheLOR)retrievalquality,informationquality.[5]

Year

LOsevaluationFrameworksandE

content

F1

2002

Vargoetal(LORI1.3)

F2

2002

Belferetal(LORI1.4)

F3

2004

Nesbitetal(LORI1.5)

F4

2005

KraussandAlly

F5

2005

SusanSmithNash

F6

2006

PaulssonandNaeve

F7

2006

NicoleBuzzettomoreandKayePinhey

F8

2007

MELT

F9

2007

SREB(SREBSCORE)

F10

2007

Q4R

F11

2008

KubilinskieneandKurilovas

F12

2009

KurilovasandDagiene

F13

2012

Guenagaetal

F14

2013

KurilovasandZilinskiene

TABLE2CRITERIAOFLOSEVALUATIONFRAMEWORKSANDECONTENT

Symbolsused

intable3

C1

C2

C3

C4

C5

C6

C7

C8

C9

C10

C11

C12

C13

C14

C15

Guenagaetal.in2012,introducedatoolforevaluating

learning objects, that this tool is composed of two

aspectsofthetechnologyandpedagogy.Inthissurvey,

aquestionnairewasdesignedwhereeachofthesetwo

aspectswasincludedinseveralcriteria(Guenaga,etal.

2012).

Kurilovas and Zilinskiene in 2013, introduced a new

AHP method for evaluating quality of learning

scenarios. In this research, qualitative measures of

learning scenarios are divided into three sections:

learning objects, learning activities and learning

environment, that each of three sections has several

indicesin terms of internalqualityand quality in use

(KurilovasandZilinskiene,2013).

C16

C17

C18

C19

C20

Research Analysis

AnalysisandComparisonofLOsEvaluation

FrameworksandEcontent

C21

C22

C23

C24

C25

C26

C27

C28

C29

C30

C31

C32

C33

Inthissection,frameworksandmodelsaretechnically

focusedonLOsandEcontent(Table1).

Intable2,wehavequalitativescalesofthesefourteen

frameworksalong witharespective figure. At last, in

table3,thescalescorrespondedtoeachother.Infact,

table 3 shows the scales frequency in models and

frameworks, then their importance, it also shows the

differences between models and frameworks and the

scales existing in some of them. We can recognize

researchers focus, improvable challenges and aspects

by such comparison that the future studies will

handletheweakpointsandstrongpoints.

60

Symbolsused

intable3

C34

C35

CriteriaofLOsevaluationFrameworksand

Econtent

Presentationaesthetics

Presentationdesignforlearning

Accuracyofcontent

Supportforlearninggoals

Motivatioon

Interactioonusability

Interactionfeedbackandadaptation

Reusability

Standardscompliance

Accessibility

Learninggoalalignment

Student/Instructorguides

moreextensiveStandards

Bestpracticeforuseofexistingstandards

Architecturemodels

TheSeparationofPedagogyfromthesupporting

technologyofLOs

Organizationalstrategies

LifeCycleStrategies

Intellectualpropertyandcopyright

Losarrangementpossibilities

Communicationandcollaborationpossibilities

andtools

Technicalfeatures

Interoperability

Cultural/learningdiversityprinciples

Technicalinteroperability

Retrievalquality

Informationquality

Relevance

Redundancyofaccess

Infrastructuresupport

Sizeofobject

Relationtotheinfrastructure/delivery

Assessment

Expectationsforstudentdiscussion/chat

participation

Navigation
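The frequency count that Table 3 is meant to convey can be sketched as follows. The sketch tallies how many frameworks list each criterion, using only the criteria lists quoted in the literature review for four of the fourteen frameworks (F2, F3, F4 and F9). The criterion labels are normalized by hand, which is an assumption of this example rather than the mapping used in Table 3: SREB's "Feedback" is treated as "feedback and adaptation", its "Interface usability" as "interaction usability", and the two instructor-guide criteria are merged under one label.

```python
from collections import Counter

# Criteria lists as quoted in the literature review. Labels are normalized
# by hand for this example (an assumption, not the paper's Table 3 mapping).
COMMON = [
    "content quality",
    "learning goal alignment",
    "feedback and adaptation",
    "motivation",
    "presentation design",
    "interaction usability",
    "reusability",
]

FRAMEWORKS = {
    "F2 LORI 1.4": COMMON + ["instructor guide"],
    "F3 LORI 1.5": COMMON + ["accessibility", "standards compliance"],
    "F4 Krauss and Ally": COMMON + ["instructor guide"],
    "F9 SREB": COMMON + [
        "accessibility",
        "standards compliance",
        "intellectual property and copyright",
    ],
}


def criterion_frequency(frameworks):
    """Count, for each criterion, the number of frameworks that list it."""
    counts = Counter()
    for criteria in frameworks.values():
        counts.update(set(criteria))  # a framework counts once per criterion
    return counts


freq = criterion_frequency(FRAMEWORKS)
# Sort by descending frequency, then alphabetically, for a stable listing.
for criterion, n in sorted(freq.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{n}/{len(FRAMEWORKS)}  {criterion}")
```

Extending `FRAMEWORKS` to all fourteen entries of Table 1 would require the per-framework marks of Table 3, and the choice of which labels to merge directly changes the resulting frequencies.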

TABLE 3 COMPARISON OF CRITERIA OF LOS EVALUATION FRAMEWORKS AND E-CONTENT

[Table 3: criteria C1 to C35 (rows) against frameworks F1 to F14 (columns)]

Limits of Qualitative Frameworks of LO Evaluation

Undoubtedly, each of the frameworks and models of e-content evaluation has its limits, owing to the particular activity of the respective institution or model, or to the strategy and purpose of the researchers. According to Table 3, LORI, Krauss & Ally and SREB share many scales; their focus is on e-content evaluation and on raising content quality with respect to some components of the standards. They do not focus on metadata, object details, the organization's technical features or its strategies. Q4R, by contrast, has four main strategies for guaranteeing LO quality, and Kurilovas & Dagiene built a more complex model on them. The frameworks nevertheless have limits. LORI 1.3, LORI 1.5, MELT (2007), SREB (2007) and Guenaga et al. (2012) did not investigate the different stages of the LO life cycle. Q4R did not sufficiently investigate the technical evaluation scales of LOs prior to their inclusion in the LO repository. LORI 1.3, LORI 1.5, MELT, SREB, Q4R and Guenaga did not sufficiently investigate the scales of reusability. The models of Kurilovas & Dagiene (2009) and Kurilovas & Zilinskiene (2013) focus on the technical evaluation of LOs and set aside strategies and learning purposes and, as a result, the fit between content and strategy.

Method of Research

Two-round Delphi Method

We used the Delphi method to determine the validity of the paper's criteria. Delphi was designed by RAND in the 1950s as a structured communication technique for collecting data through collective opinion polling (Gallenseon, et al. 2002).

Experts' opinions were used to assess the validity of the paper's criteria. In this two-round Delphi method, the panel of experts comprised 16 professors and experts in the fields of e-learning, information technology, computer engineering, instructional technology and systems engineering. The questionnaire was designed on the following Likert scale: 1. strongly unimportant; 2. unimportant; 3. neutral; 4. important; 5. strongly important. The research structure is illustrated in Figure 1.

[Figure: Delphi round 1 (validation of indicators; adding and subtracting indicators) and Delphi round 2 (review of indicators; determining the importance of indicators), with outputs Table 2 and d1, ..., d12, leading to learning object evaluation]

FIG. 1 RESEARCH STRUCTURE

After the literature study, an exploratory Delphi method was used in this study. In several specialized sessions and working groups, appropriate criteria for evaluating the learning objects of e-content were studied. Apart from the questionnaire survey, interviews and meetings were conducted as well.

After reviewing the literature on learning objects and e-content evaluation and examining previous credible quality criteria, criteria suited to the scope and nature of these applications were identified in expert panels and specialized working groups. In the first round of the Delphi method, the experts identified and validated the indicators, adding or removing indicators as needed. In the second round, after the selected indicators had been reviewed and approved, a questionnaire with 35 criteria was distributed, based on a Likert scale, so that the experts could indicate their views on the importance of each indicator. After two rounds of the Delphi method, the indicators were fully identified and validated by the experts.

Pair comparisons and importance determination of indices

As mentioned in the research methodology, in the two-round Delphi method the important indicators were identified and their significance was determined by experts. These indicators are: Presentation design for learning, Accuracy of content, Motivation, Interaction usability, Interaction feedback and adaptation, Reusability, Standards compliance, Accessibility, Learning goal alignment, Architecture models, Organizational strategies and Cultural/learning diversity principles, which are represented in Table 4 as d1, d2, d3, ..., d12, respectively.

In this method, before the comparison, one must determine the importance of each index, and then express its overall importance by scoring it. In the white cells, we carry out the pair comparison, write the label of the more important index and then score it: zero for indices of the same importance, up to three for the greatest difference in importance between indices, according to the experts' Likert ratings. Finally, the results were ranked by aggregating the scores.

As shown in Table 5, d1, d4, d6 and d8 have the greatest importance; then come d3, d5, d9 and d12; in third place come d2, d10 and d11; and in last place comes d7.

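TABLE 4 PAIRED COMPARISON INDICES

| Index | d1 | d2 | d3 | d4 | d5 | d6 | d7 | d8 | d9 | d10 | d11 | d12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| d1 | | | | | | | | | | | | |
| d2 | d1:2 | | | | | | | | | | | |
| d3 | d1:1 | d3:1 | | | | | | | | | | |
| d4 | 0 | d4:2 | d4:1 | | | | | | | | | |
| d5 | d1:1 | d5:1 | 0 | d4:1 | | | | | | | | |
| d6 | 0 | d6:2 | d6:1 | 0 | d6:1 | | | | | | | |
| d7 | d1:3 | d2:1 | d3:2 | d4:3 | d5:2 | d6:3 | | | | | | |
| d8 | 0 | d8:2 | d8:1 | 0 | d8:1 | 0 | d8:3 | | | | | |
| d9 | d1:1 | d9:1 | 0 | d4:1 | 0 | d6:1 | d9:2 | d8:1 | | | | |
| d10 | d1:2 | 0 | d3:1 | d4:2 | d5:1 | d6:2 | d10:1 | d8:2 | d9:1 | | | |
| d11 | d1:2 | 0 | d3:1 | d4:2 | d5:1 | d6:2 | d11:1 | d8:2 | d9:1 | 0 | | |
| d12 | d1:1 | d12:1 | 0 | d4:1 | 0 | d6:1 | d12:2 | d8:1 | 0 | d12:1 | d12:1 | |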

TABLE 5 THE FINAL SCORE FOR EACH CRITERION USING PAIRED COMPARISONS WITH OTHER INDICATORS

| Index | Total score | Rating |
|---|---|---|
| d1 | 13 | 17.33 % |
| d2 | 1 | 1.33 % |
| d3 | 5 | 6.66 % |
| d4 | 13 | 17.33 % |
| d5 | 5 | 6.66 % |
| d6 | 13 | 17.33 % |
| d7 | 0 | 0 |
| d8 | 13 | 17.33 % |
| d9 | 5 | 6.66 % |
| d10 | 1 | 1.33 % |
| d11 | 1 | 1.33 % |
| d12 | 5 | 6.66 % |
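The aggregation from Table 4 to Table 5 can be reproduced with a short script. The sketch below is one reading of the procedure described in the text, not the authors' own implementation: each cell of Table 4 credits its score to the index named in it, the credits are summed per index, and each total is divided by the grand total. The dictionary `ROWS` is transcribed by hand from the cells of Table 4, and the function name `total_scores` is hypothetical.

```python
from collections import Counter

INDICES = [f"d{i}" for i in range(1, 13)]

# Lower-triangular cells of Table 4, row by row. "dK:S" means index dK is
# the more important of the pair, by S points; "0" means equal importance.
ROWS = {
    "d2": "d1:2",
    "d3": "d1:1 d3:1",
    "d4": "0 d4:2 d4:1",
    "d5": "d1:1 d5:1 0 d4:1",
    "d6": "0 d6:2 d6:1 0 d6:1",
    "d7": "d1:3 d2:1 d3:2 d4:3 d5:2 d6:3",
    "d8": "0 d8:2 d8:1 0 d8:1 0 d8:3",
    "d9": "d1:1 d9:1 0 d4:1 0 d6:1 d9:2 d8:1",
    "d10": "d1:2 0 d3:1 d4:2 d5:1 d6:2 d10:1 d8:2 d9:1",
    "d11": "d1:2 0 d3:1 d4:2 d5:1 d6:2 d11:1 d8:2 d9:1 0",
    "d12": "d1:1 d12:1 0 d4:1 0 d6:1 d12:2 d8:1 0 d12:1 d12:1",
}


def total_scores(rows):
    """Sum, for each index, the points it wins across all pair comparisons."""
    totals = Counter({d: 0 for d in INDICES})
    for cells in rows.values():
        for cell in cells.split():
            if cell == "0":  # equal importance: no points awarded
                continue
            winner, score = cell.split(":")
            totals[winner] += int(score)
    return totals


totals = total_scores(ROWS)
grand_total = sum(totals.values())
for d in INDICES:
    share = 100 * totals[d] / grand_total
    print(f"{d}: {totals[d]:2d}  {share:.2f} %")
```

The totals come to 13 for d1, d4, d6 and d8, 5 for d3, d5, d9 and d12, 1 for d2, d10 and d11, and 0 for d7, giving a grand total of 75. The shares therefore match the ratings in Table 5, except that the script rounds 5/75 to 6.67 % where the table prints the truncated value 6.66 %.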

Case Study

According to the indices identified by the experts, two lessons offered as e-lessons in one of the e-learning centers of Iranian universities were evaluated.

This university e-learning center carried out preliminary studies in 2003. It then began its activity as a pivotal project aimed at acquiring the knowledge needed to design and run e-learning systems and at establishing them on the university's internal network. In 2008-2009 the project focused on one lesson in some M.Sc. fields. In mid-2010, it successfully took on the Master's courses of those fields. Accordingly, the e-content of the two lessons was selected for evaluation using a framework made up of the aforesaid indices.

The content of the first lesson is about human resources and is presented as a WORD file and a POWERPOINT file. Eight 3-hour sessions cover the 134-page WORD file (font: 11). The content includes tables, figures, etc. for better understanding of the lesson. At the end of each session there are some related questions. The POWERPOINT file consists of 206 slides presenting the headlines. In this center, there are recording rooms where instructors can record audio files of the lessons, and they can also chat with students and answer their questions.

The contents of the lessons were evaluated by three expert instructors using a 9-question questionnaire and a Likert scale.

E-content Evaluation of First Course

The results of the e-content evaluation of the first course are shown in Table 6.

E-content Evaluation of Second Course

The content of the second lesson is about knowledge management. The course consists of a WORD file (150 pages, font: 11) and a POWERPOINT file, presented like the first one. Again the content includes tables, figures, etc. for better understanding of the lesson. In the design of these lessons, however, the instructors focused more on the course discussion than on learning, evaluability, effectiveness and other aspects. For example, the content of the lessons says nothing about needs assessment, the definition of instructor and student tasks, or student evaluation strategies and methods. In addition, there is nothing about strategies, purposes and sessions. As the students are not physically present, instructors should present useful content by using equipment and creativity, and should take account of environmental, cultural, social, telecommunication and other limitations. Instructors can increase effectiveness by using animated files and films; these two lessons are weak in this respect.

The results of the e-content evaluation of the second course are shown in Table 7.

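TABLE 6. RESULT OF E-CONTENT EVALUATION OF FIRST COURSE

| Professors | d1 | d2 | d3 | d4 | d5 | d6 | d7 | d8 | d9 | d10 | d11 | d12 | Average of all indices |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| First professor | | | | | | | | | | | | | 3.08 |
| Second professor | | | | | | | | | | | | | 3.42 |
| Third professor | | | | | | | | | | | | | 3.08 |
| Average | 2.66 | 4.33 | 3.33 | 3.66 | 3.66 | 2.66 | 4.33 | | 2.33 | 2.33 | 2.66 | 2.33 | 3.19 |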

TABLE 7. RESULT OF E-CONTENT EVALUATION OF SECOND COURSE

| Professors | Average of all indices |
|---|---|
| First professor | |
| Second professor | 2.75 |
| Third professor | 2.83 |
| Average | 2.86 |

Per-index averages as printed: 3.33, 3.33, 2.33, 3.66, 2.66, 3.66, 4.33, 2.33, 2.33, 2.33

Discussion

Asshownintable6andtable7,thecontentofthefirst

lessonhasalowperformanceinscalesnumber1,6,9,

10,11and12whichmeansitwasweakatPresentation

design for learning, Reusability, Learning goal

alignment, Architecture models, Organizational

strategies and Cultural/learning diversity principles;

because master designing of them are weak at

designing regarding visual, hearing, image display

and graphical elements relative to learning purposes.

Also, they have unacceptable level of LO designing

with respect to reuse and effectiveness and their

respective content dont appropriately assign the

purposes. This content has medial performance

regardingscalesnumber3,4and5whichmeansthat

ithasmediallevelinMotivationalcontent,Interaction

usability, Interaction feedback and Adaptation. One

can say that the use of tables, Figures and etc as well

as coherence of the content, raising questions and

incitement challenges are useful, but lack of visual

slides, films and animations makes it medial. The

content is also medial regarding to offering LOs

havingsimplemutualrelationandoperationalliaison

with qualitative features, so corresponding to

studentsneedsandgivingfeedbacktothemasters.At

last, it has acceptable performance regarding scales

number 2, 7 and 8; that is, Accuracy of content,

StandardscomplianceandAccessibility,becauseithas

content credit, accuracy and enough details and

balanced ideas and the foresaid standards and has

accessibility for everyone. The content of the second

lesson is weak at Presentation design for learning,

Reusability, Learning goal alignment, Architecture

and

models,

Organizational

strategies

Cultural/learning diversity principles; it means, it is

weakatdesigningLOswithnecessarycapabilitiesand

designing inappropriate Econtent regarding to meet

the purposes. It has the medial level with respect to

the scales number 2, 3, 5 and 7. At last it is only

acceptable in scale number 8_ accessibility. Paying

attention to the weaknesses and challenges in the

center, we should promote masters ability and

familiarity regarding to qualitative indices of LO

evaluation to improve the Econtents. Designers also

should interact with design models and learning

technology and the approaches to meet the learning

needsofcontent.Wecanalsopredictandevaluatethe

offeredcontentsandgivefeedbacktomasters.

literature, and were examined by two rounds of

Delphiwithexpertsinvariousfields.Afteridentifying

the appropriate indicators, and their significance was

determined by experts. Then the paired comparisons

were performed to determine the percentage of

indicators relative to each other, and then as a case

study, econtent of the two courses of virtual e

learning courses generated from the one elearning

center of Iran were evaluated by experts and

professorsinthisfield.

Inthisstudytopicssuchascomponentsofelearning,

learning objects and econtent, elearning standards,

technical evaluation of learning objects, analysis and

comparison of LOs evaluation frameworks and e

contentwerediscussed.

According to the results, in order to design and

develop learning objects and econtent, various

aspects anddimensionsshould beaddressed,suchas

instructional design, strategy and importance

qualitativeaspects.Thisstudyidentified35important

criteria for evaluating learning objects, and after

surveys, significant weaknesses and areas for

improvement were obtained. Given that these areas

werealsoprovidedwithvaluablesuggestions.

Summary and Suggestions

The Evaluation of Econtent helps organizations to

promotetheircontentqualityandoffermoreeffective

learning plans. Although there are many standard

institutions,weneedsomethingelsetoproduceanE

content with an acceptable quality and effectiveness

consistingofusefulLOs.Infact,standardreferstothe

leastqualitativespecificationsofLOs.

Modelsandframeworkspresentedhereareevaluated

byresearchers.TheyareusefulforofferingbetterLOs

andasaresultbetterEcontent.Theseframeworksare

settopromotethequalityofEcontentbydeveloping

standardsandmorefeaturesandcomponents.Butthe

challenge is the use of such a framework with

appropriatequalitativescalesmeetingstudentsneeds

andtendencies.

Given these limits, to evaluate e-content one first determines one's aim and focus and then chooses one of the models and frameworks. As said before, to evaluate LOs technically, the Kurilovas & Dagiene model covers more aspects and can be developed further with regard to strategy. But one of the main issues is that previous studies ignored the coordination of e-content and learning design. We should review the design models and learning technologies in order to evaluate e-content with regard to effectiveness and learning aims. We must do that because the final aim of e-lessons is to present effective content and reach high-quality learning. So we should promote the designers' abilities.

In all the aforesaid models and frameworks, researchers chose the scales for the evaluation, some of which cover the same aspects of content and others different aspects. In the present study, important criteria were identified and confirmed by experts, and then we performed a case study in the e-learning center of a university in Iran. In this research, the e-content of two Master's lessons was evaluated by experts. The results show that this study center faces problems in producing high-quality e-content. Some recommendations were also made to promote the ability of the designers.

It is recommended that future studies determine and classify the main aspects related to LO evaluation. Organizational strategies and learning purposes should also be investigated because of their importance in content production. It is also recommended that researchers investigate the scales related to LO design in known models and learning technologies in order to increase the effectiveness of e-content for the students of e-courses.

Conclusion

As shown in this study, the most important criteria for evaluating learning objects are analyzed by studying

REFERENCES

Belfer, K., Nesbit, J. and Leacock, T. Learning Object Review Instrument (LORI). Version 1.4, 2002.

Betts, K. Online Human Touch (OHT) Training & Support: Conceptual Framework to Increase Faculty Engagement, Connectivity, and Retention in Online Education, Part 2. Journal of Online Learning and Teaching, Vol. 5 No. 1, 2009.

Bonner, J. M. and Lee, D. Blended Learning in Organizational Setting. Journal of Information Technology and Application in Education, Vol. 1 No. 4, 164-172, 2012.

Buzzetto-More, N. and Pinhey, K. Guidelines and Standards for the development of fully online learning objects. Interdisciplinary Journal of Knowledge and Learning Objects 2 (2006): 95-104.

Fallon, C. and Brown, Sh. E-Learning standards: a guide to purchasing, developing and deploying standards-conformant e-learning, 2003.

Gallenseon, A., Heins, J. and Heins, T. Macromedia MX: Creating learning objects, 2002. [Macromedia White Paper]. Macromedia Inc. Retrieved 6/02/06 from http://download.macromedia.com/pub/elearning/objects/mx_creating_lo.pdf

Grison, D. R. and Anderson, T. E-learning in the 21st century: a framework for research and practice, 2003.

Guenaga, M., Mechaca, I., Romero, S. and Eguiluz, A. A tool to evaluate the level of inclusion of digital learning objects. Procedia Computer Science 14 (2012): 148-154.

Haughey, M. and Muirhead, B. Evaluating learning objects for schools, 2005. [online], http://www.usq.edu.au/electpub/ejist/docs/vol8_no1/fullpapers/Haughey_Muirhead.pdf

Krauss, F. and Ally, M. A study of the design and evaluation of a learning object and implications for content development. Interdisciplinary Journal of Knowledge and Learning Objects 1 (2005): 1-22.

Kubilinskien, S. and Kurilovas, E. Lithuanian Learning Objects Technical Evaluation Tool and its Application in Learning Object Metadata Repository. In: Informatics Education Contributing Across the Curriculum: Proceedings of the 3rd International Conference Informatics in Secondary Schools Evolution and Perspective (ISSEP 2008). Torun, Poland, July 1-4, 2008. Selected papers, 147-158.

Kuo, C. Evaluation of E-learning Effectiveness in Culture and Arts Promotion: The Case of Cultural Division in Taiwan. Journal of Information Technology and Application in Education, Vol. 1 No. 1, 9-18, 2012.

Kurilovas, E. Digital Library of Educational Resources and Services: Evaluation of Components. Informacijos mokslai (Information Sciences). Vilnius, 42-43 (2007): 69-77.

Kurilovas, E. and Dagiene, V. Learning Objects and Virtual Learning Environments Technical Evaluation Criteria. Electronic Journal of e-Learning, Vol. 7 No. 2, 127-136, 2009.

Kurilovas, E. and Zilinskiene, I. New MCEQLS AHP Method for Evaluating Quality of Learning Scenarios. Technological and Economic Development of Economy, Vol. 19 No. 1, 78-92, 2013.

Leacock, T. L. and Nesbit, J. C. A Framework for evaluating the quality of multimedia learning resources. Educational Technology & Society, Vol. 10 No. 2, 44-59, 2007.

MELT. Metadata Ecology for Learning and Teaching, 2007. Project web site. [online], http://meltproject.eun.org.

Nash, S. S. Learning Objects, Learning Object Repositories, and Learning Theory: Preliminary Best Practices for Online Courses. Interdisciplinary Journal of Knowledge and Learning Objects 1 (2005): 217-228.

Paulsson, F. and Naeve, A. Establishing Technical Quality Criteria for Learning Objects, 2006. [online], http://www.frepa.org/wp/wpcontent/files/paulssonEstablTechQual_finalv1.pdf

Q4R. Quality for Reuse, 2007. Project web site. [online], http://www.q4r.org.

SREB-SCORE. Checklist for evaluating SREB-SCORE Learning Objects, Educational Technology Cooperative, 2007.

Triantafillou, E., Pomportis, A. and Georgiadou, E. AESCS: Adaptive Educational System Based on Cognitive Styles. Proc. of AH 2002 workshop Systems for Web-based Education, 2002.

Vargo, J., Nesbit, J. C. and Archambault, A. Learning Object Evaluation: Computer Mediated Collaboration and Inter-Rater Reliability. International Journal of Computers and Applications, Vol. 25 No. 3, 198-205, 2003.

Wiley, D. A. Learning objects: Difficulties and opportunities, 2003. [online], http://wiley.ed.usu.edu/docs/lo_do.pdf

Peyman Akhavan received his M.Sc. and Ph.D. degrees in industrial engineering from Iran University of Science and Technology, Tehran, Iran. His research interests are in knowledge management, information technology, innovation and strategic planning. He has published 6 books and has more than 100 research papers in different conferences and journals.

Majid Feyz Arefi received his B.Sc. degree in Pure Mathematics from Ferdowsi University of Mashhad, Iran in 2008. He received his M.Sc. degree in Industrial Engineering with specialty in System Management and Productivity from Malek Ashtar University of Technology, Tehran, Iran in 2012. During his studies he has been working on topics such as e-learning, learning objects, information technology, customer satisfaction and after-sales services. His fields of interest include e-learning, e-content and co-creation. He is currently continuing his research with Dr. Akhavan.
