CRT & NRT PDF

Uploaded by: Jalil Alvino


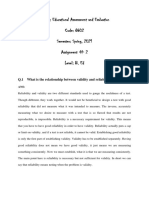

Educational Testing and Measurement, pp. 61-62

COMPARING NORM-REFERENCED AND CRITERION-REFERENCED TESTS

As you may have guessed, criterion-referenced tests must be very specific if they are to yield information about individual skills. This is both an advantage and a disadvantage. Using a very specific test enables you to be relatively certain that your students have mastered or failed to master the skill in question. The major disadvantage of criterion-referenced tests is that many such tests would be necessary to make decisions about the multitude of skills typically taught in the average classroom.

The norm-referenced test, in contrast, tends to be general. It measures a variety of specific and general skills at once, but it fails to measure them thoroughly. Thus you are not as sure as you would be with a criterion-referenced test that your students have mastered the individual skills in question. However, you get an estimate of ability in a variety of skills in a much shorter time than you could through a battery of criterion-referenced tests. Since there is a trade-off in the uses of criterion- and norm-referenced measures, there are situations in which each is appropriate. Determining the appropriateness of a given type of test depends on the purpose of testing.
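To make the skill-by-skill decision a criterion-referenced test supports more concrete, here is a minimal Python sketch that scores a hypothetical CRT item by item and reports, for each objective, the proportion correct and a mastered/not-mastered decision. The item IDs, objective names, and the 0.8 mastery cutoff are illustrative assumptions, not values given in the text.

```python
from collections import defaultdict


def mastery_by_objective(item_objective, responses, cutoff=0.8):
    """Per-objective mastery decisions for one student on a CRT.

    item_objective: maps each (hypothetical) item ID to the objective it measures.
    responses: maps each item ID to True (correct) or False (incorrect);
               unanswered items count as incorrect.
    cutoff: assumed mastery threshold (proportion correct), for illustration only.
    Returns {objective: (proportion_correct, mastered)}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for item, objective in item_objective.items():
        total[objective] += 1
        if responses.get(item, False):
            correct[objective] += 1
    return {
        objective: (correct[objective] / total[objective],
                    correct[objective] / total[objective] >= cutoff)
        for objective in total
    }


# Hypothetical six-item test: three items for each of two narrow objectives.
item_objective = {
    "q1": "add fractions", "q2": "add fractions", "q3": "add fractions",
    "q4": "reduce fractions", "q5": "reduce fractions", "q6": "reduce fractions",
}
responses = {"q1": True, "q2": True, "q3": True, "q4": True, "q5": False, "q6": False}

for objective, (p, mastered) in mastery_by_objective(item_objective, responses).items():
    print(f"{objective}: {p:.2f} correct, mastered={mastered}")
```

Every objective needs its own cluster of items, so covering every skill taught in a classroom this way quickly multiplies the number of items and tests required, which is the disadvantage the text describes.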

Finally, the difficulty of items in NRTs and CRTs also differs. In the NRT, items vary in level of difficulty from those that almost no one answers correctly to those that almost everyone answers correctly. In the CRT, the items tend to be equivalent to each other in difficulty. Following a period of instruction, students tend to find CRT items easy and answer most correctly. In a CRT, about 80% of the students completing a unit of instruction are expected to answer each item correctly, whereas in an NRT about 50% are expected to do so. Table 4.1 illustrates differences between NRTs and CRTs.
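The 80% versus 50% contrast concerns item difficulty, which is commonly indexed as the proportion of students who answer an item correctly. The Python sketch below computes that proportion for each item from a 0/1 score matrix; the matrix is invented for illustration and does not come from the text.

```python
def item_difficulty(score_matrix):
    """Proportion of students answering each item correctly.

    score_matrix: one row per student, one 0/1 entry per item (1 = correct).
    """
    n_students = len(score_matrix)
    n_items = len(score_matrix[0])
    return [sum(row[j] for row in score_matrix) / n_students for j in range(n_items)]


# Hypothetical results for five students on four items.
scores = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 1, 1, 0],
]

print(item_difficulty(scores))  # [0.8, 0.8, 0.8, 0.2]
```

Items 1 to 3 sit at the 80% level the text associates with CRT items after instruction, while item 4, answered correctly by only one student in five, is the kind of hard item that an NRT's wider range of difficulty would include.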


NORM- AND CRITERION-REFERENCED TESTS AND CONTENT VALIDITY EVIDENCE

TABLE 4.1 Comparing NRTs and CRTs

| Dimension | NRT | CRT |
|---|---|---|
| Average number of students who get an item right | 50% | 80% |
| Compares a student's performance to | The performance of other students. | Standards indicative of mastery. |
| Breadth of content sampled | Broad, covers many objectives. | Narrow, covers a few objectives. |
| Comprehensiveness of content sampled | Shallow, usually one or two items per objective. | Comprehensive, usually three or more items per objective. |
| Variability | Since the meaningfulness of a norm-referenced score basically depends on the relative position of the score in comparison with other scores, the more variability or spread of scores, the better. | The meaning of the score does not depend on comparison with other scores: It flows directly from the connection between the items and the criterion. Variability may be minimal. |
| Item construction | Items are chosen to promote variance or spread. Items that are "too easy" or "too hard" are avoided. One aim is to produce good "distractor options." | Items are chosen to reflect the criterion behavior. Emphasis is placed upon identifying the domain of relevant responses. |
| Reporting and interpreting considerations | Percentile rank and standard scores used (relative rankings).* | Number succeeding or failing or range of acceptable performance used (e.g., 90% proficiency achieved, or 80% of class reached 90% proficiency). |

*For a further discussion of percentile rank and standard scores, see Chapters 13 and 14.
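The last row of Table 4.1 contrasts how scores are reported. The Python sketch below applies both styles to one invented set of percent-correct scores: a percentile rank and a z-score (one kind of standard score) for NRT-style relative interpretation, and the share of the class reaching a 90% proficiency cutoff for CRT-style interpretation. The percentile-rank formula used here (percentage of scores below, plus half of any ties) is one common convention, assumed for illustration; other conventions exist.

```python
from statistics import mean, pstdev


def percentile_rank(score, scores):
    """NRT-style: percent of scores below `score`, counting half of tied scores.

    This tie-handling convention is an assumption made for the example.
    """
    below = sum(1 for s in scores if s < score)
    ties = sum(1 for s in scores if s == score)
    return 100 * (below + 0.5 * ties) / len(scores)


def z_score(score, scores):
    """NRT-style standard score: distance from the group mean in SD units."""
    return (score - mean(scores)) / pstdev(scores)


def share_reaching(scores, cutoff):
    """CRT-style: proportion of the class at or above a proficiency cutoff."""
    return sum(1 for s in scores if s >= cutoff) / len(scores)


# Hypothetical percent-correct scores for a class of ten.
class_scores = [95, 92, 88, 90, 70, 100, 85, 91, 93, 60]

print(percentile_rank(90, class_scores))        # 45.0
print(round(z_score(100, class_scores), 2))
print(share_reaching(class_scores, 90))         # 0.6
```

The same score of 90 is "45th percentile" in relative terms but simply "proficient" against the 90% criterion; the CRT summary (60% of the class reached 90% proficiency) never refers to how any student ranked against the others.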
