Академический Документы

Профессиональный Документы

Культура Документы

Implementation of Reducing Features To Improve Code Change Based Bug Prediction by Using Cos-Triage Algorithm

Загружено:

esatjournalsОригинальное название

Авторское право

Доступные форматы

Поделиться этим документом

Поделиться или встроить документ

Этот документ был вам полезен?

Это неприемлемый материал?

Пожаловаться на этот документАвторское право:

Доступные форматы

Implementation of Reducing Features To Improve Code Change Based Bug Prediction by Using Cos-Triage Algorithm

Загружено:

esatjournalsАвторское право:

Доступные форматы

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

IMPLEMENTATION OF REDUCING FEATURES TO IMPROVE CODE

CHANGE BASED BUG PREDICTION BY USING COS-TRIAGE

ALGORITHM

Veena Jadhav1, Vandana Gaikwad2, Netra Patil3

1

Student, Computer Engineering Department, BVDUCOE Pune-43, Maharashtra, India

Assistant Professor, Computer Engineering Department, BVDUCOE Pune-43, Maharashtra, India

3

Assistant Professor, Computer Engineering Department, BVDUCOE Pune-43, Maharashtra, India

2

Abstract

Today, we are getting plenty of bugs in the software because of variations in the software and hardware technologies. Bugs are

nothing but Software faults, existing a severe challenge for system reliability and dependability. To identify the bugs from the

software bug prediction is convenient approach. To visualize the presence of a bug in a source code file, recently, Machine

learning classifiers approach is developed. Because of a huge number of machine learned features current classifier-based bug

prediction have two major problems i) inadequate precision for practical usage ii) measured prediction time. In this paper we

used two techniques first, cos-triage algorithm which have a go to enhance the accuracy and also lower the price of bug

prediction and second, feature selection methods which eliminate less significant features. Reducing features get better the quality

of knowledge extracted and also boost the speed of computation.

Keywords: Efficiency, Bug Prediction, Classification, Feature Selection, Accuracy.

--------------------------------------------------------------------***---------------------------------------------------------------------1. INTRODUCTION

Classifiers such as SVM and navies byes are accomplished

on previous software project information, also it can be

expended to estimate the presence of a bug in an specific

record in software, as complete in previous work[2].

To fix bug cos-triage algorithm is used. Afterward that data

is added in historical data or in log record. Afterward,

classifier is accomplished on data originate in previous log

record. Then, it can be expended to categorize a renewed

variation is either buggy or clean.

Now days, The Eclipse IDE contains a prototype displaying

server which is used to compute bug predictions [4]. It can

aslo offer accurate predictions. Bug prediction system must

offer a lesser number of incorrect variations that are

predicted to be pram but which are very new, if software

developers are to belief [3]. Suppose, if enormous numbers

of improved variations are incorrectly estimated to be pram,

engineers wont have belief in the bug prediction.

Previous bug prediction methodology and identical work

offered by scientist which employ the removal of features

from the prior of variations created to a software system[2].

These features comprise completely distributed by blank

space in the code and that all were included or rejected in a

variation. Henceforth, to prepare the SVM classifier entirely

variables, comment words, numerical operatives, name of

methods, and development language keywords are used as

features which is existing in this paper.

Cost of large feature set is tremendously high. Because of

multiple interactions and noise classifiers cannot handle

such an enormous feature set. As well as number of features

increases time also increases and expanding to a number of

seconds per categorization for tens of thousands of features,

and minutes for enormous project data accounts.

Henceforth, this will influences the availability of a bug

prediction scheme.

There are several classification approaches could be

working. But in this paper we used cos-triage algorithm and

SVM.

1) This paper contributes three aspects:

2) Study of various feature selection methods to

categorize bugs in software program variations.

3) Use of cos-triage algorithm to increase accuracy and

reduce cost of bug prediction.

The rest of this paper is prepared as follows: In Section 2

primary steps involved in performing change classification

is presented. Also this section discuss about feature selection

techniques in more detail. Section 3 discuss prior work.

Section 4 contains system overview for proposed system.

Finally a conclusion is made in section 5.

2. CHANGE CLASSIFICATION PROCESS

Following steps are concerned in executing Various

categorization on distinct project. Creating a Corpus:

1) Change deltas are mining from the log records of a

project, as kept in its SCM repository.

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

429

IJRET: International Journal of Research in Engineering and Technology

2) For each file bug fix variations are known by observing

keywords in SCM variation log messages.

3) At the record level without bug and buggy variations are

known by finding reverse in the review log record from bug

resolve.

4) Features contain both buggy and clean and that mined

from all variations. In Complete source code all expressions

are considered as features. Features are nothing but the lines

fitted in each variation, meta-data such as author, time etc.

we can calculate complexity metrics at this stage.

5) In this paper to compute a reduced set of features

combination of wrapper and filter approaches are used. In

this paper four filter based rankers are used. i) Relief-F. ii)

Chi-Squared iii) Gain Ratio iv) Significance. The wrapper

methods are depends on the SVM classifiers.

6) Classification model is accomplished by using reduced

set.

7) Skilled classifier is set to use. Whether a original

variation is more analogous to a buggy variation or a clean

variation is proven by classifier.

2.1 Detection of Buggy and Clean Changes

For bug prediction training data is fixed and used. Mining

variation log records is used to determine bug introducing

variations and to identify bug fixes. First is Examining for

keywords in old revision such as Set Bug or other

keywords possible to develop in a bug repair.second,

Examining for another situations to bug.

eISSN: 2319-1163 | pISSN: 2321-7308

A classification model is accomplished by using the reduced

feature set. Whether a new variation is more associated to a

buggy variation or a clean variation is decided by classifier.

3. RELATED WORK

A model proposed by scientist Khoshgoftaar and Allen to

group modules corresponding to software quality factors

such as upcoming defect intensity using different phases of

multi-regression[5][6][7]. Ostrand et al. discovered two

model i) The top 20% of problematic records in a project

[11] using upcoming defect predictors ii) A linear regression

model

Totally Ordered Program Units could be transformed into a

half ordered suite list, e.g. by giving the topmost N% of

units as offered by Ostrand et al. Hassan and A caching

algorithm to compute the set of fault-prone modules, called

the topmost-10 list offered by halt [9]. The bug cache

algorithm to envisage upcoming faults built on preceding

fault areas offered by Kim et al.

Fault sessions of the Mozilla project through numerous

releases offered by Gyimothy et al. [11]. With the help of

decision trees and neural networks that use object-oriented

metrics as features. Six different feature selection techniques

when using the Naive Bayes and the C4.5 classifier

considered by Hall and Holmes [12] .There are about 100

features in each dataset. Hall and Holmes launched many of

the feature selection methods.

2.2 Feature Extraction

With the help of support vector machine algorithm, using

buggy and clean variations a classification model must be

accomplished which is used to consolidate software

variations.

The entire word in the source code programme distributed

with the help of blank space or a semicolon which is used as

a article .Example of that is method name, keyword,

variable name, comment word, function name and operator.

2.3 Feature Selection Techniques

Huge feature groups need extended working out and

estimation periods, also need lot of memory these are the

requirement to execute classification.

Feature selection is common solution to this problem In

which only the subcategory of structures that are generally

suitable for creating grouping outcomes are truly used.

A general defect prediction framework which encompass a

data pre-processor, feature selection methods, and machine

learning algorithms elaborated by Song et al. [14]. They also

consider that slight variations to data illustration can have a

vast influence on the outcomes of article selection and bug

prediction. Numerous feature selection algorithms to

envisage buggy software modules for a hugeheritage

communications software offered by Gao et al.

Numerous feature selection algorithms to envisage buggy

software units for a huge legacy communications software

offered by Gao et al. There are two methods used. First,

filter based techniques and second, subset selection search

algorithm.

2.4 Feature Selection Process

A repetitive process of choosing increasingly fewer

important group of features is finished by using Filter and

wrapper approaches.

This process starts by splitting the primary feature set in

partial which reduces processing requirements and memory

for the remaining process.

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

430

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

4. SYSTEM OVERVIEW

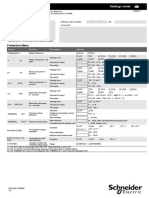

Fig -1: System Overview of bug prediction system

Above diagram fig 1. Shows general overview of the

system. Originally, user login/register into the system. It

also encompasses training data/raw data which retains the

logs or history. Then it checks for bug. If bug present in the

system verify and report bug. Then, cos-triage algorithm is

applied on that bug. Cos-triage algorithm supports us for

fixing bug or defects. Then at the back end that output with

bug is used as input to support vector machine (SVM)

classifier .SVM classifier uses different feature selection

methods which is given above. After that by reducing the

features we gets final output without bug. SVM classifier

operate on skilled data/raw data which is kept in log record.

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

431

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

5. SCREENSHOTS

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

432

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

433

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

6. GRAPHICAL REPRESENTATION

Fig 2.Graphical representation of bug prediction on projects

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

434

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

Fig.3: Cos-triage algorithm graph

7. CONCLUSION

This paper has explored algorithm called Cos-triage

algorithm which helps to reduce cost and increase accuracy

of bug fixing or bug prediction. In this paper we used

feature selection method which reduces the quantity of

structures used by a support vector device classifier and

naive byes for bug prediction.

Henceforward, the usage of classifiers with feature selection

will approve fast, correct, more accurate bug predictions, if

software designers have highly developed bug prediction

methods rooted into their software development

environment. Similarly, many algorithm will build in future

which will increase accuracy of bug prediction.

[6]

[7]

[8]

[9]

[10]

REFERENCES

[1]

[2]

[3]

[4]

[5]

T.L. Graves, A.F. Karr, J.S. Marron, and H. Siy,

Predicting Fault Incidence Using Software Change

History, IEEE Trans. Software Eng., vol. 26, no. 7,

pp. 653-661, July 2000.

S. Kim, E. W. Jr., and Y. Zhang, Classifying

Software Changes: Clean or Buggy? IEEE Trans.

Software Eng., vol. 34, no. 2, pp. 181196,2008.

A.Bessey, K. Block, B. Chelf, A. Chou, B. Fulton, S.

Hallem, C.Henri-Gros, A. Kamsky, S. McPeak, and

D.R. Engler, A Few Billion Lines of Code Later:

Using Static Analysis to Find Bugs in the Real

World, Comm. ACM, vol. 53, no. 2, pp. 66-75,

2010.

J. Madhavan and E. Whitehead Jr., Predicting

Buggy Changes Inside an Integrated Development

Environment, Proc. OOPSLA Workshop Eclipse

Technology eXchange, 2007.

T. Khoshgoftaar and E. Allen, Predicting the Order

of Fault-Prone Modules in Legacy Software, Proc.

1998 Intl Symp. on SoftwareReliability Eng., pp.

344353, 1998.

[11]

[12]

[13]

[14]

[15]

R. Kumar, S. Rai, and J. Trahan, Neural-Network

Techniques for Software-Quality Evaluation,

Reliability and Maintainability Symposium,1998.

T. Ostrand, E. Weyuker, and R. Bell, Predicting the

Location and Number of Faults in Large Software

Systems, IEEE Trans. SoftwareEng., vol. 31, no. 4,

pp. 340355, 2005.

A.Hassan and R. Holt, The Top Ten List: Dynamic

Fault Prediction,Proc. ICSM05, Jan 2005.

R. Moser, W. Pedrycz, and G. Succi, A Comparative

Analysis ofthe Efficiency of Change Metrics and

Static Code Attributes for Defect Prediction, Proc.

30th Intl Conf. Software Eng., pp. 181-190,2008..

L.C. Briand, W.L. Melo, and J. Wu st, Assessing

the Applicability of Fault-Proneness Models Across

Object-Oriented Software Projects, IEEE Trans.

Software Eng., vol. 28, no. 7, pp. 706-720,July 2002.

M. Hall and G. Holmes, Benchmarking Attribute

Selection Techniques for Discrete Class Data

Mining, IEEE Trans. Knowledge and Data Eng., vol.

15, no. 6, pp. 1437-1447, Nov./Dec. 2003.

J. Quinlan, C4.5: Programs for Machine Learning.

Morgan Kaufmann,1993.

Q. Song, Z. Jia, M. Shepperd, S. Ying, and J. Liu, A

General Software Defect-Proneness Prediction

Framework, IEEE Trans. Software Eng., vol. 37, no.

3, pp. 356-370, May/June 2011.

K. Gao, T. Khoshgoftaar, H. Wang, and N. Seliya,

Choosing Software Metrics for Defect Prediction:

An Investigation on Feature Selection Techniques,

Software: Practice and Experience,vol. 41, no. 5, pp.

579-606, 2011.

A. Bessey, K. Block, B. Chelf, A. Chou, B. Fulton, S.

Hallem, C.Henri-Gros, A. Kamsky, S. McPeak, and

D.R. Engler, A FewBillion Lines of Code Later:

Using Static Analysis to Find Bugs in the Real

World, Comm. ACM, vol. 53, no. 2, pp. 66-75, 2010

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

435

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

BIOGRAPHIES

Miss Veena jadhav M.tech (Computer)

BVDUCOE,

Pune

Prof.Vandana Gaikwad, Assistant Professor, Computer

department, BVDUCOE, Pune

Prof.Netra Patil, Assistant Professor, Computer department

BVDUCOE, Pune

_______________________________________________________________________________________

Volume: 03 Issue: 11 | Nov-2014, Available @ http://www.ijret.org

436

Вам также может понравиться

- PTE 3 Week Study ScheduleДокумент3 страницыPTE 3 Week Study ScheduleesatjournalsОценок пока нет

- Analysis and Optimization of Electrodes For Improving The Performance of Ring Laser Gyro PDFДокумент4 страницыAnalysis and Optimization of Electrodes For Improving The Performance of Ring Laser Gyro PDFesatjournalsОценок пока нет

- An Improved Geo-Encryption Algorithm in Location Based Services PDFДокумент4 страницыAn Improved Geo-Encryption Algorithm in Location Based Services PDFesatjournalsОценок пока нет

- An Efficient Information Retrieval Ontology System Based Indexing For Context PDFДокумент7 страницAn Efficient Information Retrieval Ontology System Based Indexing For Context PDFesatjournalsОценок пока нет

- Analysis and Characterization of Dendrite Structures From Microstructure Images of Material PDFДокумент5 страницAnalysis and Characterization of Dendrite Structures From Microstructure Images of Material PDFesatjournalsОценок пока нет

- An Octa-Core Processor With Shared Memory and Message-Passing PDFДокумент10 страницAn Octa-Core Processor With Shared Memory and Message-Passing PDFesatjournalsОценок пока нет

- Analytical Assessment On Progressive Collapse Potential of New Reinforced Concrete Framed Structure PDFДокумент5 страницAnalytical Assessment On Progressive Collapse Potential of New Reinforced Concrete Framed Structure PDFesatjournalsОценок пока нет

- Analysis of Outrigger System For Tall Vertical Irregularites Structures Subjected To Lateral Loads PDFДокумент5 страницAnalysis of Outrigger System For Tall Vertical Irregularites Structures Subjected To Lateral Loads PDFesatjournalsОценок пока нет

- A Review Paper On Smart Health Care System Using Internet of ThingsДокумент5 страницA Review Paper On Smart Health Care System Using Internet of ThingsesatjournalsОценок пока нет

- Analysis of Cylindrical Shell Structure With Varying Parameters PDFДокумент6 страницAnalysis of Cylindrical Shell Structure With Varying Parameters PDFesatjournalsОценок пока нет

- Analysis and Optimization of Sand Casting Defects With The Help of Artificial Neural Network PDFДокумент6 страницAnalysis and Optimization of Sand Casting Defects With The Help of Artificial Neural Network PDFesatjournalsОценок пока нет

- Analysis and Design of A Multi Compartment Central Cone Cement Storing Silo PDFДокумент7 страницAnalysis and Design of A Multi Compartment Central Cone Cement Storing Silo PDFesatjournalsОценок пока нет

- A New Type of Single-Mode Lma Photonic Crystal Fiber Based On Index-Matching Coupling PDFДокумент8 страницA New Type of Single-Mode Lma Photonic Crystal Fiber Based On Index-Matching Coupling PDFesatjournalsОценок пока нет

- A Study and Survey On Various Progressive Duplicate Detection MechanismsДокумент3 страницыA Study and Survey On Various Progressive Duplicate Detection MechanismsesatjournalsОценок пока нет

- A Comparative Investigation On Physical and Mechanical Properties of MMC Reinforced With Waste Materials PDFДокумент7 страницA Comparative Investigation On Physical and Mechanical Properties of MMC Reinforced With Waste Materials PDFesatjournalsОценок пока нет

- A Survey On Identification of Ranking Fraud For Mobile ApplicationsДокумент6 страницA Survey On Identification of Ranking Fraud For Mobile ApplicationsesatjournalsОценок пока нет

- A Servey On Wireless Mesh Networking ModuleДокумент5 страницA Servey On Wireless Mesh Networking ModuleesatjournalsОценок пока нет

- A Review On Fake Biometric Detection System For Various ApplicationsДокумент4 страницыA Review On Fake Biometric Detection System For Various ApplicationsesatjournalsОценок пока нет

- A Research On Significance of Kalman Filter-Approach As Applied in Electrical Power SystemДокумент8 страницA Research On Significance of Kalman Filter-Approach As Applied in Electrical Power SystemesatjournalsОценок пока нет

- I JR Et 20160503007dfceДокумент5 страницI JR Et 20160503007dfcegtarun22guptaОценок пока нет

- A Research On Significance of Kalman Filter-Approach As Applied in Electrical Power SystemДокумент8 страницA Research On Significance of Kalman Filter-Approach As Applied in Electrical Power SystemesatjournalsОценок пока нет

- A Critical Review On Experimental Studies of Strength and Durability Properties of Fibre Reinforced Concrete Composite PDFДокумент7 страницA Critical Review On Experimental Studies of Strength and Durability Properties of Fibre Reinforced Concrete Composite PDFesatjournalsОценок пока нет

- I JR Et 20160503007dfceДокумент5 страницI JR Et 20160503007dfcegtarun22guptaОценок пока нет

- A Review On Fake Biometric Detection System For Various ApplicationsДокумент4 страницыA Review On Fake Biometric Detection System For Various ApplicationsesatjournalsОценок пока нет

- Ad Hoc Based Vehcular Networking and ComputationДокумент3 страницыAd Hoc Based Vehcular Networking and ComputationesatjournalsОценок пока нет

- A Review Paper On Smart Health Care System Using Internet of ThingsДокумент5 страницA Review Paper On Smart Health Care System Using Internet of ThingsesatjournalsОценок пока нет

- A Women Secure Mobile App For Emergency Usage (Go Safe App)Документ3 страницыA Women Secure Mobile App For Emergency Usage (Go Safe App)esatjournalsОценок пока нет

- A Servey On Wireless Mesh Networking ModuleДокумент5 страницA Servey On Wireless Mesh Networking ModuleesatjournalsОценок пока нет

- A Three-Level Disposal Site Selection Criteria System For Toxic and Hazardous Wastes in The PhilippinesДокумент9 страницA Three-Level Disposal Site Selection Criteria System For Toxic and Hazardous Wastes in The PhilippinesesatjournalsОценок пока нет

- A Survey On Identification of Ranking Fraud For Mobile ApplicationsДокумент6 страницA Survey On Identification of Ranking Fraud For Mobile ApplicationsesatjournalsОценок пока нет

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeОт EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeРейтинг: 4 из 5 звезд4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingОт EverandThe Little Book of Hygge: Danish Secrets to Happy LivingРейтинг: 3.5 из 5 звезд3.5/5 (400)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceОт EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceРейтинг: 4 из 5 звезд4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)От EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Рейтинг: 4 из 5 звезд4/5 (98)

- The Emperor of All Maladies: A Biography of CancerОт EverandThe Emperor of All Maladies: A Biography of CancerРейтинг: 4.5 из 5 звезд4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryОт EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryРейтинг: 3.5 из 5 звезд3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItОт EverandNever Split the Difference: Negotiating As If Your Life Depended On ItРейтинг: 4.5 из 5 звезд4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureОт EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureРейтинг: 4.5 из 5 звезд4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaОт EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaРейтинг: 4.5 из 5 звезд4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaОт EverandThe Unwinding: An Inner History of the New AmericaРейтинг: 4 из 5 звезд4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnОт EverandTeam of Rivals: The Political Genius of Abraham LincolnРейтинг: 4.5 из 5 звезд4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyОт EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyРейтинг: 3.5 из 5 звезд3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreОт EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreРейтинг: 4 из 5 звезд4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersОт EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersРейтинг: 4.5 из 5 звезд4.5/5 (344)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)От EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Рейтинг: 4.5 из 5 звезд4.5/5 (121)

- English Cloze PassageДокумент2 страницыEnglish Cloze PassageKaylee CheungОценок пока нет

- 2020 - A Revisit of Navier-Stokes EquationДокумент12 страниц2020 - A Revisit of Navier-Stokes EquationAshutosh SharmaОценок пока нет

- Name Any Three Client Applications To Connect With FTP ServerДокумент5 страницName Any Three Client Applications To Connect With FTP ServerI2414 Hafiz Muhammad Zeeshan E1Оценок пока нет

- School of Science and Engineering: Experiment No-05Документ4 страницыSchool of Science and Engineering: Experiment No-05Mohammad PabelОценок пока нет

- .Game Development With Blender and Godot - SampleДокумент6 страниц.Game Development With Blender and Godot - Sampleсупер лимонОценок пока нет

- Booster Unit Auto Backwash FilterДокумент5 страницBooster Unit Auto Backwash FilterFardin NawazОценок пока нет

- Generic Classes and Methods: A Deeper Look: Java How To Program, 11/eДокумент35 страницGeneric Classes and Methods: A Deeper Look: Java How To Program, 11/eWilburОценок пока нет

- 5G Smart Port Whitepaper enДокумент27 страниц5G Smart Port Whitepaper enWan Zulkifli Wan Idris100% (1)

- EWM Transaction CodesДокумент22 страницыEWM Transaction CodesChuks Osagwu100% (1)

- PMO PresentationДокумент30 страницPMO PresentationHitesh TutejaОценок пока нет

- Access The GUI On Unity Array With No Network access-KB 501073Документ3 страницыAccess The GUI On Unity Array With No Network access-KB 501073Алексей ПетраковОценок пока нет

- Functionality Type of License AxxonДокумент3 страницыFunctionality Type of License AxxonMikael leandroОценок пока нет

- Setting Up An MVC4 Multi-Tenant Site v1.1Документ61 страницаSetting Up An MVC4 Multi-Tenant Site v1.1Anon Coder100% (1)

- Syllabus 2017Документ3 страницыSyllabus 2017sandman21Оценок пока нет

- Aseptic Tube-ToTube Transfer Lab - Module 1 CaseДокумент5 страницAseptic Tube-ToTube Transfer Lab - Module 1 Casemo100% (1)

- CSProxyCache DLLДокумент36 страницCSProxyCache DLLRadient MushfikОценок пока нет

- VIP400 Settings Sheet NRJED311208ENДокумент2 страницыVIP400 Settings Sheet NRJED311208ENVăn NguyễnОценок пока нет

- Strapack I-10 Instruction-Parts Manual MBДокумент26 страницStrapack I-10 Instruction-Parts Manual MBJesus PempengcoОценок пока нет

- Employability Assignment (Shaon Saha - 5802)Документ6 страницEmployability Assignment (Shaon Saha - 5802)Shaon Chandra Saha 181-11-5802Оценок пока нет

- PPM Pulse-Position ModulationДокумент2 страницыPPM Pulse-Position ModulationfiraszekiОценок пока нет

- The Insecurity of 802.11Документ10 страницThe Insecurity of 802.11afsfddasdОценок пока нет

- A Study of The Impact of Social Media On Consumer Buying Behaviour Submitted ToДокумент25 страницA Study of The Impact of Social Media On Consumer Buying Behaviour Submitted ToAnanya GhoshОценок пока нет

- Chapter 2 - The Origins of SoftwareДокумент26 страницChapter 2 - The Origins of Softwareلوي وليدОценок пока нет

- Question Text: Complete Mark 1.00 Out of 1.00Документ39 страницQuestion Text: Complete Mark 1.00 Out of 1.00Muhammad AliОценок пока нет

- 06 External MemoryДокумент39 страниц06 External Memoryobaid awanОценок пока нет

- Java J2EE Made EasyДокумент726 страницJava J2EE Made Easyeswar_jkОценок пока нет

- Itutor Prospectus 2013-2015 - FEB 16 - Itutor Fee StructureДокумент3 страницыItutor Prospectus 2013-2015 - FEB 16 - Itutor Fee StructureAnshuman DashОценок пока нет

- Sencore SA 1454 Manual V1.0Документ76 страницSencore SA 1454 Manual V1.0scribbderОценок пока нет

- MT6582 Android ScatterДокумент5 страницMT6582 Android ScatterSharad PandeyОценок пока нет

- Metor 6M Users Manual Ver 92102916 - 5Документ80 страницMetor 6M Users Manual Ver 92102916 - 5Stoyan GeorgievОценок пока нет